The Effect of Data Augmentation Methods on Pedestrian Object Detection

Abstract

1. Introduction

- a. Few studies address heterogeneous multi-sample augmentation, and multi-sample stacking methods need to be further enriched and improved.

- b. Only a few researchers link the computation performed by image processing algorithms with high-level vision tasks such as object detection, and the feedback from high-level tasks is rarely considered.

- c. The specific impact of different data augmentation methods on object detection remains to be investigated.

2. Methodology

2.1. Supervised Image Fusion

2.2. YOLOv5 Detection

2.3. Unsupervised Data Augmentation

3. Improved Methods

3.1. Improved Semantic Segmentation Network for Bilateral Attention

3.2. Residual Convolutional GAN

- Removing the deconvolution of both the discriminator and the generator;

- Building residual blocks between the convolutional layers;

- Using AvgPooling2D and UpSampling2D for downsampling and upsampling, which adjusts the height and width of the image;

- Increasing the number of convolution layers of ResBlock to increase the nonlinear capability and depth of the network.
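
The sampling and residual steps in the list above can be illustrated with a minimal NumPy sketch. It is not the authors' network: `avg_pool2d`, `upsample2d`, and `residual_block` are hypothetical helper names, average pooling over non-overlapping windows and nearest-neighbour repetition are assumed as stand-ins for the AvgPooling2D and UpSampling2D layers, and the `transform` argument stands in for the convolution stack inside a ResBlock.

```python
import numpy as np

def avg_pool2d(x, k=2):
    """Downsample an (H, W, C) array by averaging non-overlapping k x k windows."""
    h, w, c = x.shape
    h2, w2 = h // k, w // k
    x = x[:h2 * k, :w2 * k]  # drop rows/columns that do not fill a whole window
    return x.reshape(h2, k, w2, k, c).mean(axis=(1, 3))

def upsample2d(x, k=2):
    """Upsample an (H, W, C) array by repeating each pixel k times per axis."""
    return x.repeat(k, axis=0).repeat(k, axis=1)

def residual_block(x, transform):
    """Add the block input back onto the transformed features (skip connection)."""
    y = transform(x)
    if y.shape != x.shape:
        raise ValueError("transform must preserve the input shape")
    return x + y

x = np.arange(16, dtype=np.float64).reshape(4, 4, 1)
down = avg_pool2d(x)                        # halves height and width
up = upsample2d(down)                       # doubles height and width
out = residual_block(x, lambda t: 0.1 * t)  # placeholder for the convolution layers
```

Pooling and upsampling only change the spatial size, so the residual addition stays valid as long as the layers between the skip endpoints preserve the feature-map shape.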

3.3. Retinex Deillumination Algorithm

- Transform the original image to the logarithmic domain S(x,y);

- Calculate the number of image pyramid layers and initialize the constant image matrix R0(x,y), which is used as the initial value for the iterative operation;

- Perform eight-neighborhood comparison operations from the top layer to the last layer, calculating the pixels on the path according to Formula (2);

- After the operation on the nth layer is completed, interpolate the result R of the nth layer to twice its size, which matches the size of layer n + 1. When the bottom layer has been processed, the resulting R is the final enhanced image. Sc is the center point. Equation (3) is the logarithmic form of Equation (1). After the transformation, the intrinsic property R is calculated by Equation (4).
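
The four steps above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: Formula (2) is not reproduced here, so the neighbour comparison uses the ratio-product-reset-average update commonly associated with McCann-style multi-scale Retinex. The function names, the `min_size`, `n_iter`, and `eps` parameters, the choice of the log-domain maximum as the constant initial value, and the edge-replicating border handling are all assumptions.

```python
import numpy as np

# Offsets of the eight neighbours, visited clockwise.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def num_pyramid_layers(h, w, min_size=4):
    """Count layers obtained by exact halving while the short side stays >= min_size."""
    n = 1
    while h % 2 == 0 and w % 2 == 0 and min(h, w) // 2 >= min_size:
        h, w = h // 2, w // 2
        n += 1
    return n

def downsample(s):
    """Halve height and width by averaging 2 x 2 windows."""
    h, w = s.shape
    return s.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def shift(a, dy, dx):
    """Return the neighbour at offset (dy, dx) for every pixel, replicating edges."""
    h, w = a.shape
    p = np.pad(a, 1, mode="edge")
    return p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]

def compare_neighbors(r, s, s_max, n_iter=4):
    """Assumed update: product (add log ratio), reset (clip to s_max), average."""
    for _ in range(n_iter):
        for dy, dx in OFFSETS:
            candidate = shift(r, dy, dx) + s - shift(s, dy, dx)
            candidate = np.minimum(candidate, s_max)
            r = 0.5 * (r + candidate)
    return r

def retinex_log(image, n_iter=4, eps=1e-6):
    """Return the log-domain estimate R for a 2-D image with positive values."""
    s = np.log(image.astype(np.float64) + eps)   # step 1: logarithmic domain
    n = num_pyramid_layers(*s.shape)             # step 2: number of layers
    pyramid = [s]
    for _ in range(n - 1):
        pyramid.append(downsample(pyramid[-1]))
    s_max = s.max()
    r = np.full(pyramid[-1].shape, s_max)        # step 2: constant initial R0
    for level in range(n - 1, -1, -1):           # step 3: top layer to bottom layer
        r = compare_neighbors(r, pyramid[level], s_max, n_iter)
        if level > 0:                            # step 4: interpolate to twice the size
            r = r.repeat(2, axis=0).repeat(2, axis=1)
    return r
```

Working from the coarsest layer lets long-range comparisons be made cheaply on small images, while the finer layers only refine local detail.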

4. Results and Discussions

4.1. Experimental Data

4.2. Training Configuration

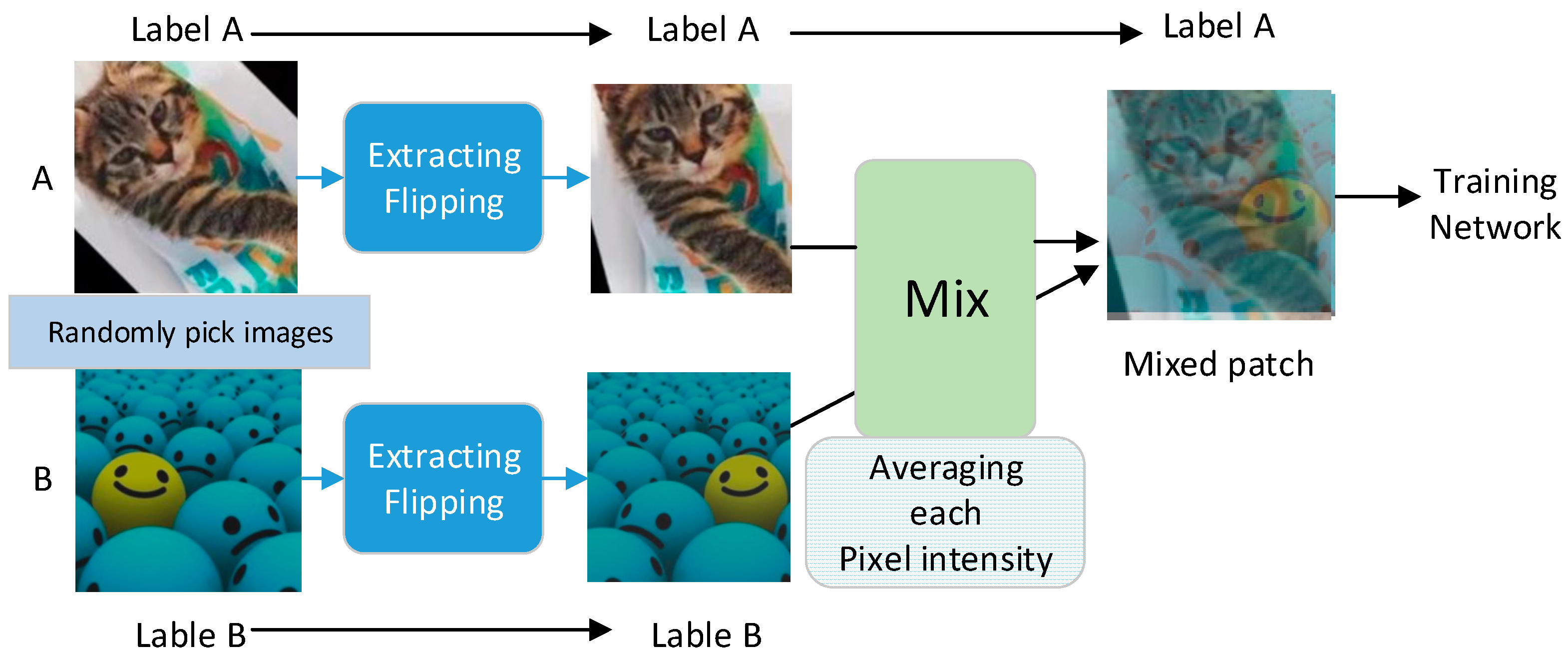

4.2.1. Heterogeneous Multi-Sample Augmentation Training

4.2.2. Residual Convolutional GAN Augmentation Training

4.3. Performance Comparison

4.4. Application

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GAN | Generative Adversarial Network |
| mAP | Mean Average Precision |
| DCGAN | Deep Convolution Generative Adversarial Network |
| GAP | Global Average Pooling |
| GCT | Gated Channel Attention Mechanism |
| CSP | Cross Stage Partial |
| FPN | Feature Pyramid Networks |
| RDG | Residual Dense Gradient Block |


| Joint Low-Level and High-Level Training Strategy |
|---|
| Input: infrared images and visible images |
| Output: fused images |
| for m ≤ M do |
| for p steps do |
| select b infrared images |
| select b visible images |
| update the weight of the semantic loss β |
| update the parameters of the fusion network Nf by the optimizer |
| end for |
| generate fused images |
| for q steps do |
| select b fused images |
| update the parameters of the segmentation network Ns by the optimizer |
| end for |
| end for |
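
The control flow of the joint training strategy above can be sketched in plain Python. This is only a skeleton under assumptions: `joint_training` and all of its callable parameters (`beta_schedule`, `fusion_step`, `fuse`, `segmentation_step`) are hypothetical placeholders for the real weight schedule, optimizer steps, and fusion network, and the infrared and visible images are assumed to be aligned pairs that are sampled with the same indices.

```python
import random

def joint_training(ir_images, vis_images, M, p, q, b,
                   beta_schedule, fusion_step, fuse, segmentation_step, seed=0):
    """Alternate p fusion-network updates with q segmentation-network updates, M times."""
    rng = random.Random(seed)
    n = len(ir_images)
    for m in range(1, M + 1):
        for _ in range(p):
            idx = rng.sample(range(n), b)        # select b aligned image pairs
            ir_batch = [ir_images[i] for i in idx]
            vis_batch = [vis_images[i] for i in idx]
            beta = beta_schedule(m)              # update the semantic-loss weight
            fusion_step(ir_batch, vis_batch, beta)
        # Generate fused images with the current fusion network.
        fused = [fuse(ir, vis) for ir, vis in zip(ir_images, vis_images)]
        for _ in range(q):
            segmentation_step(rng.sample(fused, b))
```

Passing the update steps as callables keeps the schedule independent of any particular deep learning framework.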

| Generative Adversarial Networks Training Strategy |
|---|
| Input: fused images (the number of adversarial training iterations is T, the number of discriminator training iterations is K, and the mini-batch size is M) |
| Output: generator G(z, θ) |
| randomly initialize the parameters |
| for t = 1 to T do |
| for k = 1 to K do |
| select M samples from the training set D |
| select M samples from the distribution N(0,1) |
| update ϕ by stochastic gradient ascent |
| end for |
| select M samples from the distribution N(0,1) |
| update θ by stochastic gradient ascent |
| end for |
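
The alternation between discriminator and generator updates in the strategy above can be sketched as a plain Python loop. The gradient expressions are not reproduced in the table, so the two update steps are left as hypothetical callables (`update_discriminator`, `update_generator`). The function name `train_gan`, sampling without replacement, and scalar noise samples are also assumptions made for illustration.

```python
import random

def train_gan(dataset, T, K, M, update_discriminator, update_generator, seed=0):
    """Run T rounds, each with K discriminator updates followed by one generator update."""
    rng = random.Random(seed)
    for t in range(1, T + 1):
        for k in range(1, K + 1):
            real = rng.sample(dataset, M)                    # M samples from the training set
            noise = [rng.gauss(0.0, 1.0) for _ in range(M)]  # M samples from N(0, 1)
            update_discriminator(real, noise)                # ascent step on the discriminator
        noise = [rng.gauss(0.0, 1.0) for _ in range(M)]      # fresh noise for the generator
        update_generator(noise)                              # ascent step on the generator
```

With K greater than 1, the discriminator is updated several times for every generator update.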

| Methods | P | R | mAP@0.5 |
|---|---|---|---|
| Visible original image | 0.66 | 0.174 | 0.209 |
| Densefuse | 0.831 | 0.679 | 0.706 |
| Lpfuse | 0.64 | 0.756 | 0.642 |
| Ours | 0.786 | 0.795 | 0.76 |

| Image | Method | P | R | mAP@0.5 | GFLOPs |
|---|---|---|---|---|---|
| Original visible images | No addition | 0.66 | 0.174 | 0.209 | 15.8 |
| Augmented images | +fusion | 0.786 | 0.795 | 0.76 | 15.9 |
| Augmented images | +fusion +GAN | 0.869 | 0.833 | 0.854 | 17.2 |
| Augmented images | +fusion +GAN +deillumination | 0.955 | 0.896 | 0.97 | 14.1 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, B.; Su, S.; Wei, J. The Effect of Data Augmentation Methods on Pedestrian Object Detection. Electronics 2022, 11, 3185. https://doi.org/10.3390/electronics11193185
