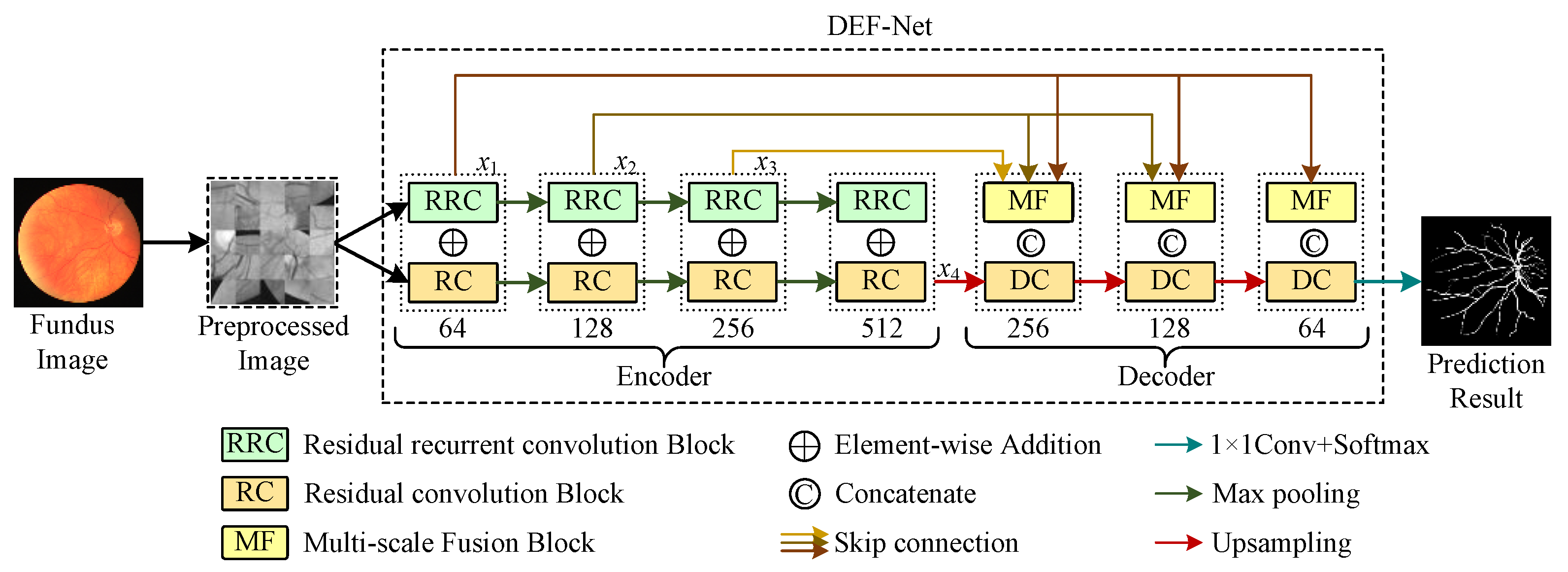

DEF-Net: A Dual-Encoder Fusion Network for Fundus Retinal Vessel Segmentation

Abstract

1. Introduction

- A residual convolution (RC) block built on a convolutional structure is designed to capture detailed information, and a recurrent residual convolution (RRC) block built on a recurrent structure is designed to obtain rich contextual features. On this basis, a novel dual-encoder structure composed of RC blocks and RRC blocks is proposed to strengthen feature extraction.

- A multiscale fusion (MF) block is adopted to integrate features from different scales into a global vector that takes the information at all scales into account. This vector then guides the features at each original scale, facilitating the flow of features across scales and improving fusion efficiency.

- Experiments on fundus image datasets demonstrate the overall performance of the proposed method, which achieves superior performance compared with other advanced methods.
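The contributions above describe the RC block, the RRC block, and the dual encoder only at a high level. The NumPy sketch below shows one plausible reading, not the authors' implementation. It assumes that the RC block is two 3×3 convolutions with a 1×1 projection shortcut, that the RRC block follows the R2U-Net-style recurrent convolution of [19] unrolled for `steps` iterations, and that the two encoder branches are merged by channel concatenation. All function names (`conv3x3`, `conv1x1`, `rc_block`, `rrc_block`, `dual_encoder_stage`) and weight shapes are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def conv3x3(x, w):
    """'Same'-padded 3x3 convolution (cross-correlation, as in CNN libraries).
    x: (C_in, H, W); w: (C_out, C_in, 3, 3) -> (C_out, H, W)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero padding keeps H and W
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # add the contribution of kernel tap (i, j), summed over input channels
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out


def conv1x1(x, w):
    """1x1 convolution used as a projection shortcut; w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def rc_block(x, w1, w2, w_skip):
    """Hypothetical RC block: conv-ReLU-conv plus a projected residual shortcut."""
    y = conv3x3(relu(conv3x3(x, w1)), w2)
    return relu(y + conv1x1(x, w_skip))


def rrc_block(x, w_f, w_r, w_skip, steps=2):
    """Hypothetical RRC block: recurrent conv h_t = ReLU(w_f*x + w_r*h_{t-1}),
    unrolled `steps` times (R2U-Net style), plus a projected residual shortcut."""
    feed = conv3x3(x, w_f)  # feed-forward term, shared by every step
    h = relu(feed)          # h_0
    for _ in range(steps):
        h = relu(feed + conv3x3(h, w_r))
    return h + conv1x1(x, w_skip)


def dual_encoder_stage(x, rc_params, rrc_params):
    """Run both branches on the same input and concatenate along channels (assumed fusion)."""
    return np.concatenate([rc_block(x, *rc_params), rrc_block(x, *rrc_params)], axis=0)


# Usage: one single-channel 16x16 patch, 8 channels per branch, random weights.
rng = np.random.default_rng(0)
x = rng.standard_normal((1, 16, 16))
c = 8
rc_params = (0.1 * rng.standard_normal((c, 1, 3, 3)),
             0.1 * rng.standard_normal((c, c, 3, 3)),
             0.1 * rng.standard_normal((c, 1)))
rrc_params = (0.1 * rng.standard_normal((c, 1, 3, 3)),
              0.1 * rng.standard_normal((c, c, 3, 3)),
              0.1 * rng.standard_normal((c, 1)))
features = dual_encoder_stage(x, rc_params, rrc_params)
print(features.shape)  # (16, 16, 16): 8 RC channels + 8 RRC channels
```

Concatenation is simply the most direct way to combine the two branches in this sketch; summation, or a 1×1 convolution over the concatenated maps, would be equally plausible readings of the text.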

2. Materials and Methods

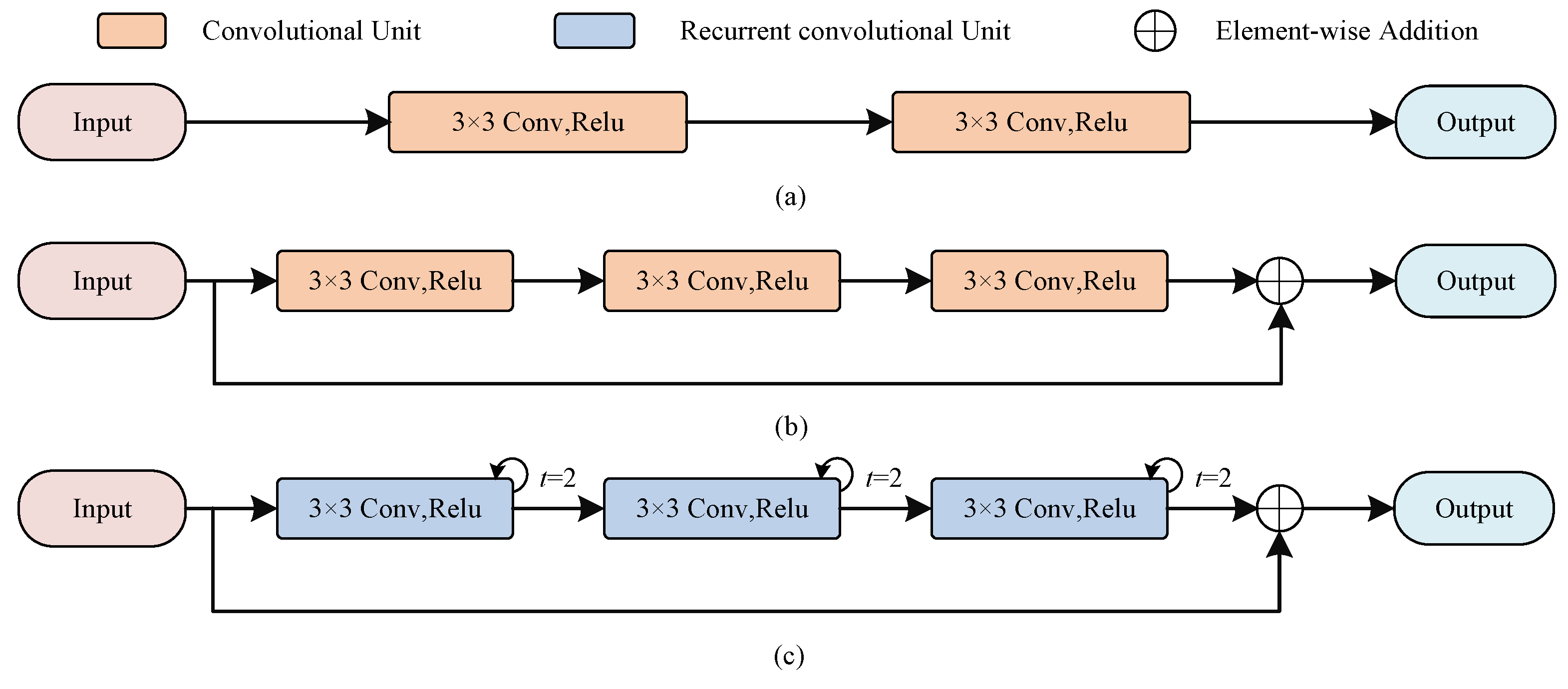

2.1. Dual-Encoder Structure

2.1.1. RC Block

2.1.2. RRC Block

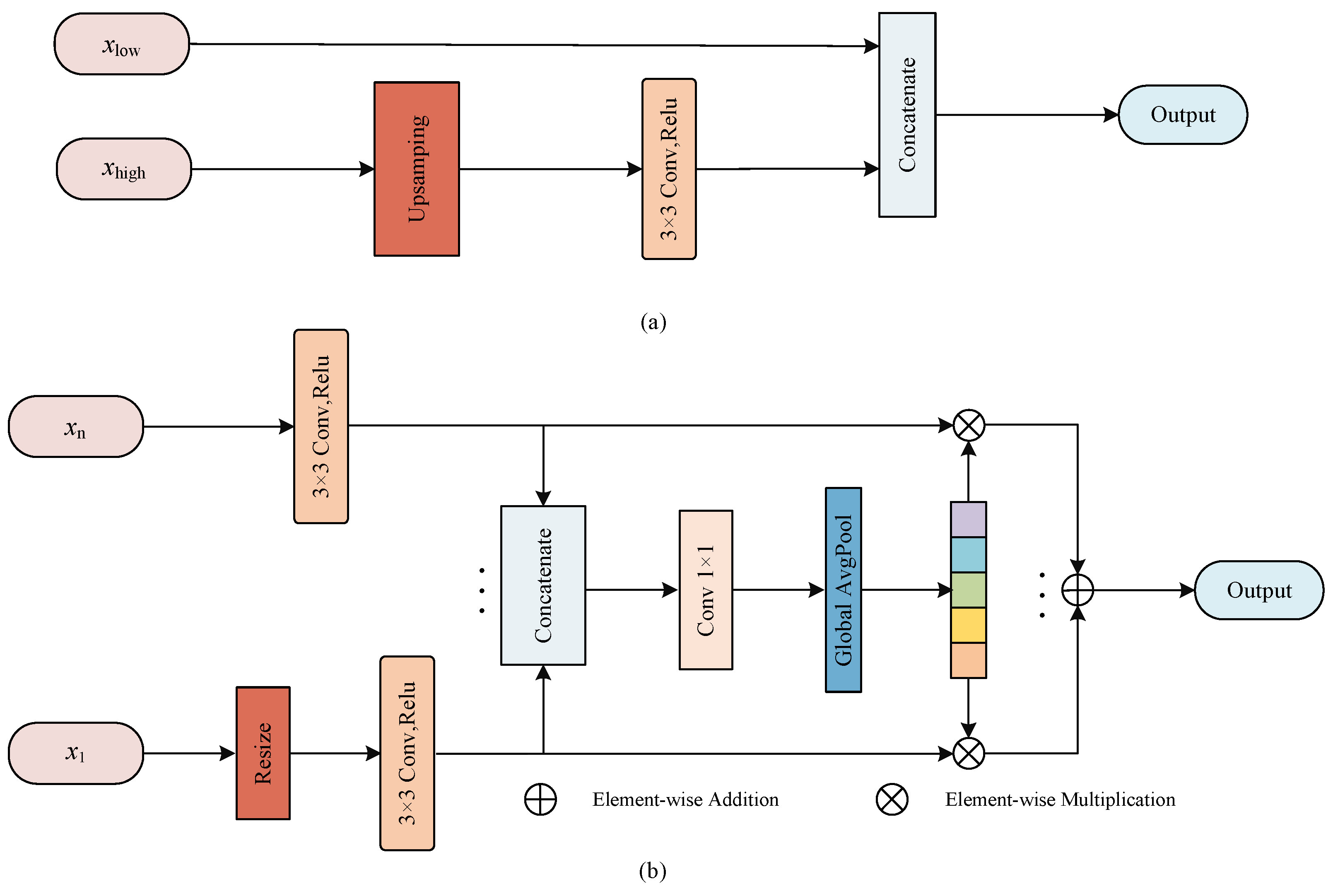

2.2. Decoder

2.2.1. Feature Reconstruction

2.2.2. Feature Fusion
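The Introduction describes the MF block as integrating features from different scales into a global vector that then guides the original scales. A minimal NumPy sketch of one possible reading follows. It assumes squeeze-and-excitation-style gating: each scale is global-average-pooled, the pooled descriptors are concatenated into the global vector, and a per-scale linear map followed by a sigmoid reweights that scale's channels. The name `mf_block`, the gating form, and the weight shapes are assumptions for illustration, not the published design.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def mf_block(features, weights):
    """Hypothetical multiscale fusion (MF) block.

    features: list of arrays shaped (C_s, H_s, W_s), one per scale.
    weights:  list of arrays shaped (C_s, sum of all C_s), one per scale.
    Returns the features reweighted channel-wise, with shapes unchanged.
    """
    # 1. Squeeze every scale to a channel descriptor and join them into one global vector.
    g = np.concatenate([f.mean(axis=(1, 2)) for f in features])
    # 2. Let the global vector guide each original scale through a sigmoid gate.
    out = []
    for f, w in zip(features, weights):
        gate = sigmoid(w @ g)                # (C_s,) gates in (0, 1)
        out.append(f * gate[:, None, None])  # rescale the channels of this scale
    return out


# Usage: three feature scales with 8, 16 and 32 channels.
rng = np.random.default_rng(1)
feats = [rng.standard_normal((8, 32, 32)),
         rng.standard_normal((16, 16, 16)),
         rng.standard_normal((32, 8, 8))]
total = sum(f.shape[0] for f in feats)  # 56 entries in the global vector
weights = [0.1 * rng.standard_normal((f.shape[0], total)) for f in feats]
fused = mf_block(feats, weights)
print([f.shape for f in fused])  # [(8, 32, 32), (16, 16, 16), (32, 8, 8)]
```

Because every gate is computed from the same global vector, the output at each scale depends on the statistics of all scales, which is one way to realize the cross-scale flow of features described in the Introduction.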

3. Experimental Preparation

3.1. Experimental Materials and Evaluation Metrics

3.2. Experimental Preprocessing

3.3. Experimental Details

4. Results and Analysis

4.1. Ablation Experiment

4.1.1. Effect of the Dual-Encoder

4.1.2. Effect of the MF Block

4.2. Comparisons with Advanced Methods

4.2.1. Quantitative Result

| Method | Year | AUC | F1 | Acc | Se | Sp |
|---|---|---|---|---|---|---|
| R2UNet [19] | 2018 | 0.9784 | 0.8171 | 0.9556 | 0.7792 | 0.9813 |
| Joint Loss [28] | 2018 | 0.9752 | - | 0.9542 | 0.7653 | 0.9818 |
| LadderNet [33] | 2019 | 0.9793 | 0.8202 | 0.9561 | 0.7856 | 0.9810 |
| R-sGAN [34] | 2019 | - | 0.7882 | - | 0.7901 | 0.9795 |
| AAUNet [26] | 2020 | 0.9847 | - | 0.9558 | 0.7941 | 0.9798 |
| IterNet [27] | 2020 | 0.9813 | 0.8218 | 0.9574 | 0.7791 | 0.9831 |
| SATNet [35] | 2021 | 0.9822 | 0.8174 | 0.9684 | 0.8117 | 0.9870 |
| Lightweight [36] | 2021 | 0.9806 | - | 0.9568 | 0.7921 | 0.9810 |
| Bridge-Net [37] | 2022 | 0.9834 | 0.8203 | 0.9565 | 0.7853 | 0.9818 |
| DEF-Net | 2022 | 0.9789 | 0.8236 | 0.9556 | 0.8138 | 0.9763 |

| Method | Year | AUC | F1 | Acc | Se | Sp |
|---|---|---|---|---|---|---|
| R2UNet [19] | 2018 | 0.9815 | 0.7928 | 0.9634 | 0.7756 | 0.9820 |
| Joint Loss [28] | 2018 | 0.9781 | - | 0.9610 | 0.7633 | 0.9809 |
| LadderNet [33] | 2019 | 0.9839 | 0.8031 | 0.9656 | 0.7978 | 0.9818 |
| Cascade [38] | 2019 | - | - | 0.9603 | 0.7730 | 0.9792 |
| Three stage [39] | 2019 | 0.9776 | - | 0.9607 | 0.7641 | 0.9806 |
| IterNet [27] | 2020 | 0.9851 | 0.8073 | 0.9655 | 0.7970 | 0.9823 |
| NFN+ [29] | 2020 | 0.9832 | - | 0.9688 | 0.7933 | 0.9855 |
| Sine-Net [32] | 2021 | 0.9828 | - | 0.9676 | 0.7856 | 0.9845 |
| Lightweight [36] | 2021 | 0.9810 | - | 0.9635 | 0.7818 | 0.9819 |
| DEF-Net | 2022 | 0.9857 | 0.8076 | 0.9626 | 0.8053 | 0.9835 |

| Method | Year | AUC | F1 | Acc | Se | Sp |
|---|---|---|---|---|---|---|
| Joint Loss [28] | 2018 | 0.9801 | - | 0.9612 | 0.7581 | 0.9846 |
| Hierarchical [40] | 2018 | 0.8810 | - | 0.9570 | 0.7910 | 0.9700 |
| SD-UNet [30] | 2019 | 0.9850 | - | 0.9725 | 0.7548 | 0.9899 |
| DUNet [41] | 2019 | 0.9832 | 0.8143 | 0.9641 | 0.7595 | 0.9878 |
| IterNet [27] | 2020 | 0.9881 | 0.8146 | 0.9701 | 0.7715 | 0.9886 |
| AAUNet [26] | 2020 | 0.9824 | - | 0.9640 | 0.7598 | 0.9878 |
| Hybrid [31] | 2021 | - | 0.8155 | 0.9626 | 0.7946 | 0.9821 |
| Sine-Net [32] | 2021 | 0.9807 | - | 0.9711 | 0.6776 | 0.9946 |
| WA-Net [42] | 2022 | 0.9665 | 0.8176 | 0.9865 | 0.7767 | 0.9877 |
| DEF-Net | 2022 | 0.9838 | 0.8186 | 0.9607 | 0.7958 | 0.9815 |
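The comparison tables report five pixel-level scores per method. Assuming the standard definitions used in retinal vessel segmentation (Se = TP/(TP+FN), Sp = TN/(TN+FP), Acc = (TP+TN)/N, F1 = 2TP/(2TP+FP+FN), and the area under the ROC curve), a minimal NumPy sketch for computing them from a vessel-probability map and a binary ground-truth mask follows. The function name `vessel_metrics` and the 0.5 threshold are illustrative assumptions, and restricting pixels to a field-of-view (FOV) mask, which many evaluations do, is left out.

```python
import numpy as np


def vessel_metrics(prob, gt, threshold=0.5):
    """Return AUC, F1, Acc, Se and Sp for a probability map `prob` and a binary mask `gt`."""
    prob = np.asarray(prob, dtype=float).ravel()
    gt = np.asarray(gt).ravel().astype(bool)
    pred = prob >= threshold  # hard vessel / background decision

    tp = np.sum(pred & gt)
    tn = np.sum(~pred & ~gt)
    fp = np.sum(pred & ~gt)
    fn = np.sum(~pred & gt)

    se = tp / (tp + fn)            # sensitivity (recall on vessel pixels)
    sp = tn / (tn + fp)            # specificity (recall on background pixels)
    acc = (tp + tn) / gt.size
    f1 = 2 * tp / (2 * tp + fp + fn)

    # ROC AUC via the Mann-Whitney U statistic, using average ranks for tied scores.
    _, inv, counts = np.unique(prob, return_inverse=True, return_counts=True)
    ranks = (np.cumsum(counts) - (counts - 1) / 2)[inv]
    n_pos, n_neg = gt.sum(), (~gt).sum()
    auc = (ranks[gt].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)

    return {"AUC": auc, "F1": f1, "Acc": acc, "Se": se, "Sp": sp}


m = vessel_metrics([0.9, 0.8, 0.4, 0.3, 0.2, 0.6], [1, 1, 1, 0, 0, 0])
print({k: round(float(v), 4) for k, v in m.items()})
# {'AUC': 0.8889, 'F1': 0.6667, 'Acc': 0.6667, 'Se': 0.6667, 'Sp': 0.6667}
```

Unlike the other four scores, AUC is threshold-free: it measures how often a vessel pixel receives a higher probability than a background pixel, so it can rank methods differently from Acc or F1.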

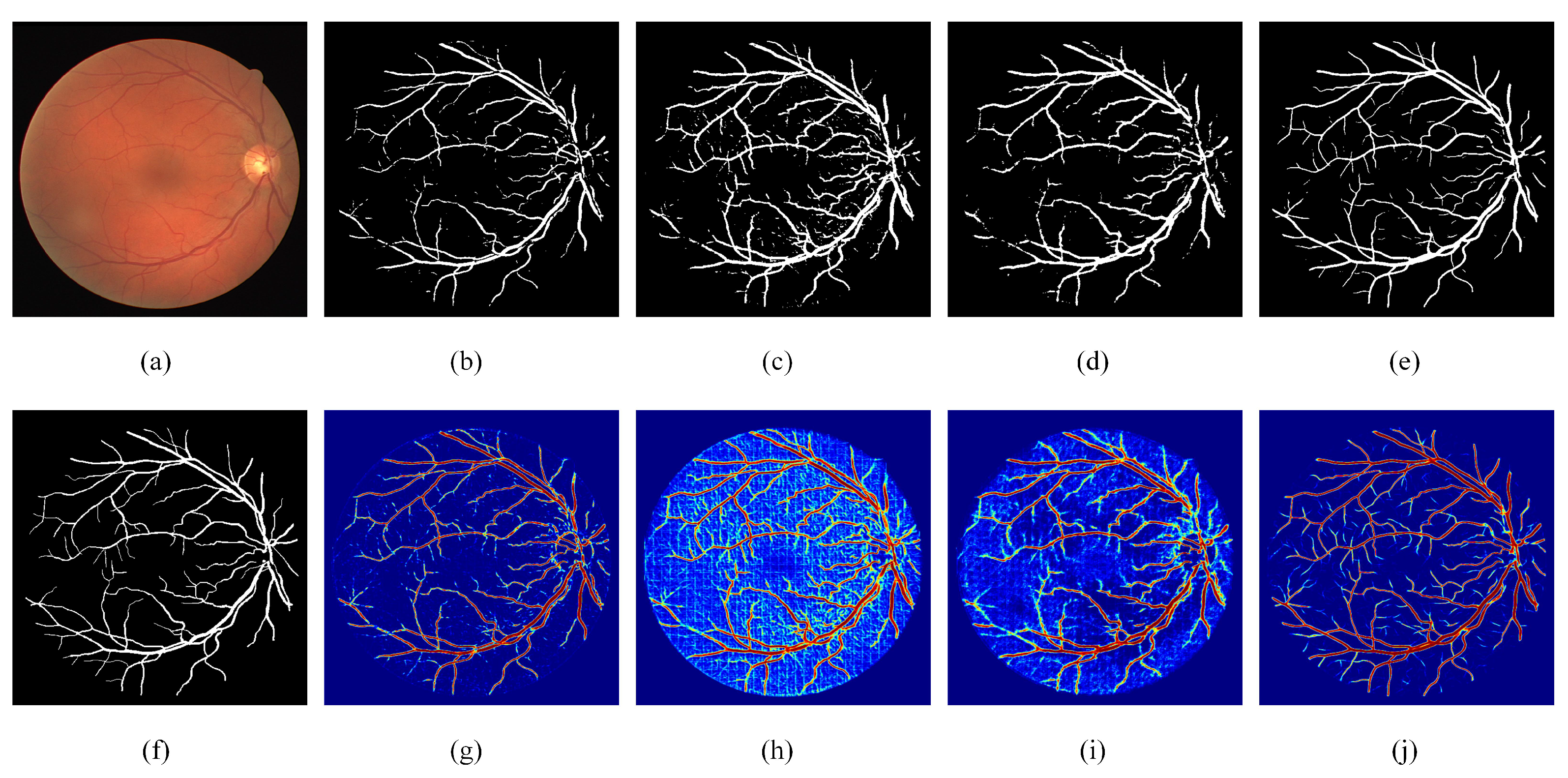

4.2.2. Qualitative Result

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Bourne, R.R.; Stevens, G.A.; White, R.A.; Smith, J.L.; Flaxman, S.R.; Price, H.; Jonas, J.B.; Keeffe, J.; Leasher, J.; Naidoo, K.; et al. Causes of vision loss worldwide, 1990–2010: A systematic analysis. Lancet Glob. Health 2013, 1, e339–e349.

2. Goutam, B.; Hashmi, M.F.; Geem, Z.W.; Bokde, N.D. A Comprehensive review of deep learning strategies in retinal disease diagnosis using fundus images. IEEE Access 2022, 10, 57796–57823.

3. Li, T.; Bo, W.; Hu, C.; Kang, H.; Liu, H.; Wang, K.; Fu, H. Applications of deep learning in fundus images: A review. Med. Image Anal. 2021, 69, 101971.

4. Chen, C.; Chuah, J.H.; Ali, R.; Wang, Y. Retinal vessel segmentation using deep learning: A review. IEEE Access 2021, 9, 111985–112004.

5. Liu, Y.; Shen, J.; Yang, L.; Bian, G.; Yu, H. ResDO-UNet: A deep residual network for accurate retinal vessel segmentation from fundus images. Biomed. Signal Process. Control 2022, 79, 104087.

6. Sun, L.; Zhao, C.; Yan, Z.; Liu, P.; Duckett, T.; Stolkin, R. A novel weakly-supervised approach for RGB-D-based nuclear waste object detection. IEEE Sens. J. 2018, 19, 3487–3500.

7. Dong, Y.; Liu, Y.; Kang, H.; Li, C.; Liu, P.; Liu, Z. Lightweight and efficient neural network with SPSA attention for wheat ear detection. PeerJ Comput. Sci. 2022, 8, e931.

8. Yang, L.; Fan, J.; Liu, Y.; Li, E.; Peng, J.; Liang, Z. Automatic detection and location of weld beads with deep convolutional neural networks. IEEE Trans. Instrum. Meas. 2020, 70, 1–12.

9. Yang, L.; Gu, Y.; Huo, B.; Liu, Y.; Bian, G. A shape-guided deep residual network for automated CT lung segmentation. Knowl.-Based Syst. 2022, 108981.

10. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

11. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; pp. 234–241.

12. Yang, L.; Gu, Y.; Bian, G.; Liu, Y. An attention-guided network for surgical instrument segmentation from endoscopic images. Comput. Biol. Med. 2022, 106216.

13. Yang, L.; Gu, Y.; Bian, G.; Liu, Y. DRR-Net: A dense-connected residual recurrent convolutional network for surgical instrument segmentation from endoscopic images. IEEE Trans. Med. Robot. Bionics 2022, 4, 696–707.

14. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

15. Xiao, X.; Lian, S.; Luo, Z.; Li, S. Weighted Res-UNet for high-quality retina vessel segmentation. In Proceedings of the 2018 9th International Conference on Information Technology in Medicine and Education (ITME), Hangzhou, China, 19–21 October 2018; pp. 327–331.

16. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

17. Wang, C.; Zhao, Z.; Ren, Q.; Xu, Y.; Yu, Y. Dense U-net based on patch-based learning for retinal vessel segmentation. Entropy 2019, 21, 168.

18. Schlemper, J.; Oktay, O.; Schaap, M.; Heinrich, M.; Kainz, B.; Glocker, B.; Rueckert, D. Attention gated networks: Learning to leverage salient regions in medical images. Med. Image Anal. 2019, 53, 197–207.

19. Alom, M.Z.; Hasan, M.; Yakopcic, C.; Taha, T.M.; Asari, V.K. Recurrent residual convolutional neural network based on U-Net (R2U-Net) for medical image segmentation. arXiv 2018, arXiv:1802.06955.

20. Mou, L.; Zhao, Y.; Fu, H.; Liu, Y.; Cheng, J.; Zheng, Y.; Su, P.; Yang, J.; Chen, L.; Frangi, A.F.; et al. CS2-Net: Deep learning segmentation of curvilinear structures in medical imaging. Med. Image Anal. 2021, 67, 101874.

21. Keetha, N.V.; Annavarapu, C.S.R. U-Det: A modified U-Net architecture with bidirectional feature network for lung nodule segmentation. arXiv 2020, arXiv:2003.09293.

22. Bhavani, M.; Murugan, R.; Goel, T. An efficient dehazing method of single image using multi-scale fusion technique. J. Ambient. Intell. Humaniz. Comput. 2022, 1–13.

23. Staal, J.; Abramoff, M.; Niemeijer, M.; Viergever, M.A.; Ginneken, B.V. Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 2004, 23, 501–509.

24. Fraz, M.M.; Remagnino, P.; Hoppe, A.; Uyyanonvara, B.; Rudnicka, A.R.; Owen, C.G.; Barman, S.A. An ensemble classification-based approach applied to retinal blood vessel segmentation. IEEE Trans. Biomed. Eng. 2012, 59, 2538–2548.

25. Ricci, E.; Perfetti, R. Retinal blood vessel segmentation using line operators and support vector classification. IEEE Trans. Med. Imaging 2007, 26, 1357–1365.

26. Patil, P.; Holambe, R.; Waghmare, L. AAUNet: An attention augmented convolution based UNet for change detection in high resolution satellite images. In Proceedings of the International Conference on Computer Vision and Image Processing (ICCVIP), Bangkok, Thailand, 29–30 November 2022; pp. 407–424.

27. Li, L.; Verma, M.; Nakashima, Y.; Nagahara, H.; Kawasaki, R. IterNet: Retinal image segmentation utilizing structural redundancy in vessel networks. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020; pp. 3656–3665.

28. Yan, Z.; Yang, X.; Cheng, K.T. Joint segment-level and pixel-wise losses for deep learning based retinal vessel segmentation. IEEE Trans. Biomed. Eng. 2018, 65, 1912–1923.

29. Wu, Y.; Xia, Y.; Song, Y.; Zhang, Y.; Cai, W. NFN+: A novel network followed network for retinal vessel segmentation. Neural Netw. 2020, 126, 153–162.

30. Guo, C.; Szemenyei, M.; Pei, Y.; Yi, Y.; Zhou, W. SD-UNet: A structured dropout U-Net for retinal vessel segmentation. In Proceedings of the 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering (BIBE), Islamabad, Pakistan, 16–18 December 2019; pp. 439–444.

31. Yang, L.; Wang, H.; Zeng, Q.; Liu, Y.; Bian, G. A hybrid deep segmentation network for fundus vessels via deep-learning framework. Neurocomputing 2021, 448, 168–178.

32. Atli, I.; Gedik, O.S. Sine-Net: A fully convolutional deep learning architecture for retinal blood vessel segmentation. Eng. Sci. Technol. Int. J. 2021, 24, 271–283.

33. Zhuang, J. LadderNet: Multi-path networks based on U-Net for medical image segmentation. arXiv 2018, arXiv:1810.07810.

34. Siddique, F.; Iqbal, T.; Awan, S.M.; Mahmood, Z.; Khan, G.Z. A robust segmentation of blood vessels in retinal images. In Proceedings of the 2019 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 16–18 December 2019; pp. 83–88.

35. Tong, H.; Fang, Z.; Wei, Z.; Cai, Q.; Gao, Y. SAT-Net: A side attention network for retinal image segmentation. Appl. Intell. 2021, 51, 5146–5156.

36. Li, X.; Jiang, Y.; Li, M.; Yin, S. Lightweight attention convolutional neural network for retinal vessel image segmentation. IEEE Trans. Ind. Inform. 2020, 17, 1958–1967.

37. Zhang, Y.; He, M.; Chen, Z.; Hu, K.; Li, X.; Gao, X. Bridge-Net: Context-involved U-net with patch-based loss weight mapping for retinal blood vessel segmentation. Expert Syst. Appl. 2022, 195, 116526.

38. Wang, X.; Jiang, X.; Ren, J. Blood vessel segmentation from fundus image by a cascade classification framework. Pattern Recognit. 2019, 88, 331–341.

39. Yan, Z.; Yang, X.; Cheng, K.T. A three-stage deep learning model for accurate retinal vessel segmentation. IEEE J. Biomed. Health Inform. 2018, 23, 1427–1436.

40. Fan, Z.; Lu, J.; Wei, C.; Huang, H.; Cai, X.; Chen, X. A hierarchical image matting model for blood vessel segmentation in fundus images. IEEE Trans. Image Process. 2018, 28, 2367–2377.

41. Jin, Q.; Meng, Z.; Pham, T.D.; Chen, Q.; Wei, L.; Su, R. DUNet: A deformable network for retinal vessel segmentation. Knowl.-Based Syst. 2019, 178, 149–162.

42. Alvarado-Carrillo, D.E.; Dalmau-Cedeño, O.S. Width attention based convolutional neural network for retinal vessel segmentation. Expert Syst. Appl. 2022, 209, 118313.

| Case | RC | RRC | MF | AUC | F1 | Acc | Se | Sp |
|---|---|---|---|---|---|---|---|---|
| U-Net | | | | 0.9574 | 0.7750 | 0.9518 | 0.7138 | 0.9787 |
| RC-Net | 🗸 | | | 0.9630 | 0.7801 | 0.9483 | 0.7205 | 0.9815 |
| RRC-Net | | 🗸 | | 0.9498 | 0.7123 | 0.9118 | 0.8581 | 0.9196 |
| DE-Net | 🗸 | 🗸 | | 0.9669 | 0.7577 | 0.9361 | 0.7835 | 0.9583 |
| DEF-Net | 🗸 | 🗸 | 🗸 | 0.9789 | 0.8236 | 0.9556 | 0.8138 | 0.9763 |

| Case | RC | RRC | MF | AUC | F1 | Acc | Se | Sp |
|---|---|---|---|---|---|---|---|---|
| U-Net | | | | 0.9772 | 0.7972 | 0.9591 | 0.7675 | 0.9814 |
| RC-Net | 🗸 | | | 0.9795 | 0.8001 | 0.9629 | 0.7688 | 0.9837 |
| RRC-Net | | 🗸 | | 0.9714 | 0.7332 | 0.9166 | 0.7926 | 0.9620 |
| DE-Net | 🗸 | 🗸 | | 0.9747 | 0.7882 | 0.9592 | 0.7848 | 0.9779 |
| DEF-Net | 🗸 | 🗸 | 🗸 | 0.9857 | 0.8076 | 0.9626 | 0.8053 | 0.9835 |

| Case | RC | RRC | MF | AUC | F1 | Acc | Se | Sp |
|---|---|---|---|---|---|---|---|---|
| U-Net | | | | 0.9671 | 0.7346 | 0.9491 | 0.6865 | 0.9791 |
| RC-Net | 🗸 | | | 0.9694 | 0.7421 | 0.9483 | 0.6910 | 0.9855 |
| RRC-Net | | 🗸 | | 0.9638 | 0.7478 | 0.9481 | 0.7216 | 0.9792 |
| DE-Net | 🗸 | 🗸 | | 0.9833 | 0.8100 | 0.9609 | 0.7559 | 0.9863 |
| DEF-Net | 🗸 | 🗸 | 🗸 | 0.9838 | 0.8186 | 0.9607 | 0.7958 | 0.9815 |
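A quick way to read the three ablation tables is to subtract the U-Net baseline row from the DEF-Net row. The short script below does exactly that with the numbers reported above; the metric names (AUC, F1, Acc, Se, Sp, in column order) are an assumption where the header cells are blank, and `ablation` and `gains` are illustrative names.

```python
# U-Net and DEF-Net rows copied from the three ablation tables above (five metric columns each).
METRICS = ["AUC", "F1", "Acc", "Se", "Sp"]  # assumed column names, in table order
ablation = {
    "ablation table 1": {"U-Net": [0.9574, 0.7750, 0.9518, 0.7138, 0.9787],
                         "DEF-Net": [0.9789, 0.8236, 0.9556, 0.8138, 0.9763]},
    "ablation table 2": {"U-Net": [0.9772, 0.7972, 0.9591, 0.7675, 0.9814],
                         "DEF-Net": [0.9857, 0.8076, 0.9626, 0.8053, 0.9835]},
    "ablation table 3": {"U-Net": [0.9671, 0.7346, 0.9491, 0.6865, 0.9791],
                         "DEF-Net": [0.9838, 0.8186, 0.9607, 0.7958, 0.9815]},
}


def gains(rows):
    """Per-metric difference DEF-Net minus U-Net, rounded to the tables' four decimals."""
    return {m: round(d - u, 4) for m, u, d in zip(METRICS, rows["U-Net"], rows["DEF-Net"])}


for name, rows in ablation.items():
    print(name, gains(rows))
```

In all three tables the fourth column (Se under the assumed naming) shows the largest gain (0.1000, 0.0378 and 0.1093), while the fifth column moves by at most 0.0024 in either direction.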


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Gao, G.; Yang, L.; Liu, Y.; Yu, H. DEF-Net: A Dual-Encoder Fusion Network for Fundus Retinal Vessel Segmentation. Electronics 2022, 11, 3810. https://doi.org/10.3390/electronics11223810


Chicago/Turabian Style: Li, Jianyong, Ge Gao, Lei Yang, Yanhong Liu, and Hongnian Yu. 2022. "DEF-Net: A Dual-Encoder Fusion Network for Fundus Retinal Vessel Segmentation" Electronics 11, no. 22: 3810. https://doi.org/10.3390/electronics11223810
