1. Introduction

Due to the widespread proliferation of COVID-19, many individuals are required to work from home, and many students are required to attend courses remotely. The significance of remote cooperation has therefore risen dramatically. In most schools and workplaces, the whiteboard is an important piece of office equipment that promotes local collaboration, as seen in Figure 1a. However, the traditional whiteboard does not effectively support remote cooperation and coordination. Existing mouse-based electronic whiteboards facilitate distant collaboration, according to [1]. However, as illustrated in Figure 1b, we found that writing with a mouse on a mouse-based electronic whiteboard is inefficient and unpleasant. A touch screen with a stylus is another commonly used kind of electronic whiteboard; however, writing and reading handwritten text on a size-restricted touch screen is not pleasant. Virtual reality (VR) is now accessible to end users and provides an entirely new method of digital engagement, allowing users to observe and interact with their surroundings as they would in the physical world. We present a VR whiteboard that enables users to collaborate and use Design Thinking techniques as they would in a physical workspace.

The advent of VR technology and VR devices in recent years has made VR whiteboards viable. As seen in Figure 1c, the head-mounted display (HMD) and controller allow users to write in a virtual space. VR technology eliminates the restrictions of space and real objects [2], allowing users to write and create more freely. However, such VR whiteboards do not offer remote collaboration, which is a vital whiteboard feature.

In this paper, we extend our previously proposed VR whiteboard system [3] to support remote collaboration with the natural handwriting input method. This Remote Collaborative Whiteboard System (RCWS) enables multiple individuals to collaborate simultaneously by writing on the VR whiteboard.

Using VR devices and a network service, we realized the natural handwriting and remote collaboration functions. In addition, we conducted a number of controlled experiments and user experience questionnaire surveys to assess the collaboration efficiency and user experience of the system. Finally, we measured the system’s end-to-end latency to determine whether the VR whiteboard system meets the requirements of real-time Internet services.

The main contributions of this work are summarized as follows:

Developed a VR whiteboard system in which multiple users can collaborate remotely using natural handwriting input.

Conducted a replicable, controlled experiment with volunteers and quantitatively evaluated the collaboration efficiency of the whiteboard.

Assessed the usability of the whiteboard via a usability scale questionnaire based on the volunteers’ first-hand experience.

The rest of this paper is organized as follows.

Section 2 reviews related research and work.

Section 3 describes the system design and implementation.

Section 4 details the controlled evaluation experiments and analyzes their results.

Section 5 discusses the system and the experiments based on the evaluation results. Finally, Section 6 concludes this paper.

2. Related Works

The collaborative handwriting whiteboard is both a human–computer interaction (HCI) device and a computer-supported cooperative work (CSCW) device. There is a wide range of related work in HCI and CSCW. In this section, we focus on the two topics most closely related to this VR whiteboard research: “Collaborative Virtual Environment (CVE) and Metaverse” and “Natural Interface and Collaborative Whiteboards”.

2.1. Collaborative Virtual Environment (CVE) and Metaverse

This research aims to implement a natural handwriting whiteboard system based on a collaborative virtual environment, and Unity makes it convenient to develop such a VR application [4,5,6,7,8,9]. However, VR systems do not natively support remote collaboration; additional network services are needed to implement remote synchronization [10,11,12,13]. We identified the following two CVE tools that support Unity. UNet (Unity Networking) is a widely used Unity multiplayer and networking API [14,15] that serves many applications, toolkits, services, and games [16,17,18,19,20,21]. However, it is now deprecated, and Unity does not currently provide an alternative API. PUN (Photon Unity Networking) facilitates the development of multiplayer games [22]. The free PUN plan, which supports 20 concurrent users, meets our needs, and Photon provides international services, which makes it more convenient to implement the remote collaborative whiteboard.

The concept of the metaverse first appeared in 1992. The metaverse has built a new information dissemination system and communication scenario and, at the same time, changed human behavior patterns. Web 3.0 allows users to produce and edit content freely: as the subjects of content production, users are not only consumers of a platform but also its service providers and producers. The metaverse also blurs the boundary between virtual and real space, reflecting a kind of spatial “borderlessness”. It is intended to break down the boundary between the virtual and the real and integrate them, while overcoming the distance and physical limitations of the physical world. Ref. [23] illustrates a method for developing online 3D meeting applications based on the metaverse concept, using VR technology and Web 3.0. The application is intended as a multi-person web platform for students and teachers in education, providing a conference whiteboard and projector for discussion and collaboration, and it is also suitable for broader scenarios such as medical training, corporate affairs, and sporting events. Refs. [24,25] report that the avatars of scientists and medical practitioners in the metaverse can collaborate remotely through a virtual whiteboard. Ref. [26] conducts a network performance measurement study of Workrooms, a social VR platform, analyzing the network protocols, bandwidth, and data stream links of VR workrooms with virtual whiteboards through black-box testing and user scenario simulation. Ref. [27] explores the feasibility, opportunities, and challenges of using the Meta Horizon Workrooms platform in a VR classroom scenario. VR technology allows people to use their “virtual selves” to interact with others remotely online [28]. Participants experimented with collaborative activities and multiplayer games using the whiteboard feature in the virtual room.

2.2. Natural Interface and Collaborative Whiteboards

To implement a whiteboard with a natural input method, the first interaction type that comes to mind is gesture, which is simple and natural [29,30,31,32]. Beyond gestures, we consider the process of drawing and writing by hand [33], which carries more information and enables richer interactions. With the development of computer science, additional devices and technologies have emerged to serve HCI [34,35], improving efficiency and user experience [36,37,38,39]. For example, high-performance graphics cards render complex 3D graphics in real time, and VR technology provides users with an immersive experience [40,41]. A three-dimensional virtual scene can be built with an HMD [2,42,43], and a whiteboard can then be created in it [44,45]. Moreover, a VR controller can serve as an input device that provides a natural writing experience [46,47].

The traditional whiteboard facilitates local collaboration through natural handwriting. However, it does not facilitate remote collaboration, and material written on it cannot be stored and shared digitally. Several studies [48,49,50] are devoted to digitizing whiteboard material. Refs. [51,52,53] discuss the learning effectiveness of a whiteboard with natural handwriting.

Significant research has been conducted on the electronic whiteboard [54,55,56,57]. The mouse-based electronic whiteboard facilitates remote collaboration; however, writing and drawing with a mouse is inefficient and uncomfortable, and a mouse cannot easily simulate a natural writing experience. Another commonly used whiteboard device that supports remote collaboration is a touch screen with a stylus [58,59]; however, its limited size presents a problem.

Existing VR whiteboard systems are predominantly standalone, non-collaborative systems due to the limitations of VR hardware. We compare our work with related research and products in terms of “Required equipment”, “Natural handwriting experience”, “Support remote collaboration”, and “System usability evaluation”, as shown in Table 1. Among the related works, Rekimoto [54] proposes a public whiteboard with a 2D touch screen for information sharing. Mynatt et al. [60] design a 2D electronic whiteboard for workplace use. Using an HMD, Kukimoto et al. [61] create a touch screen to facilitate 3D model design collaboration and idea sharing via annotation. Sakuraba et al. [62] use an HMD for 3D model design collaboration, but their system does not support remote collaboration. “Dry Erase: Infinite VR Whiteboard” is a SteamVR whiteboard game that supports free drawing and writing in VR space; however, it does not support multiple users and lacks network functionality. The “Zoom whiteboard” is a public whiteboard in the Zoom online conferencing program. Finally, the VR whiteboard proposed in this research is a remote collaborative whiteboard that supports natural handwriting.

3. VR Whiteboard System

The VR whiteboard promotes collaboration among multiple individuals using natural handwriting. It is implemented in three phases. First, we create a three-dimensional virtual room and a virtual whiteboard using Unity and an HMD. Second, to provide a natural handwriting experience, a controller is used as the input device, which makes writing and drawing feel closer to using a real whiteboard. Finally, to support remote collaboration, a network service synchronizes the written trajectories in real time.

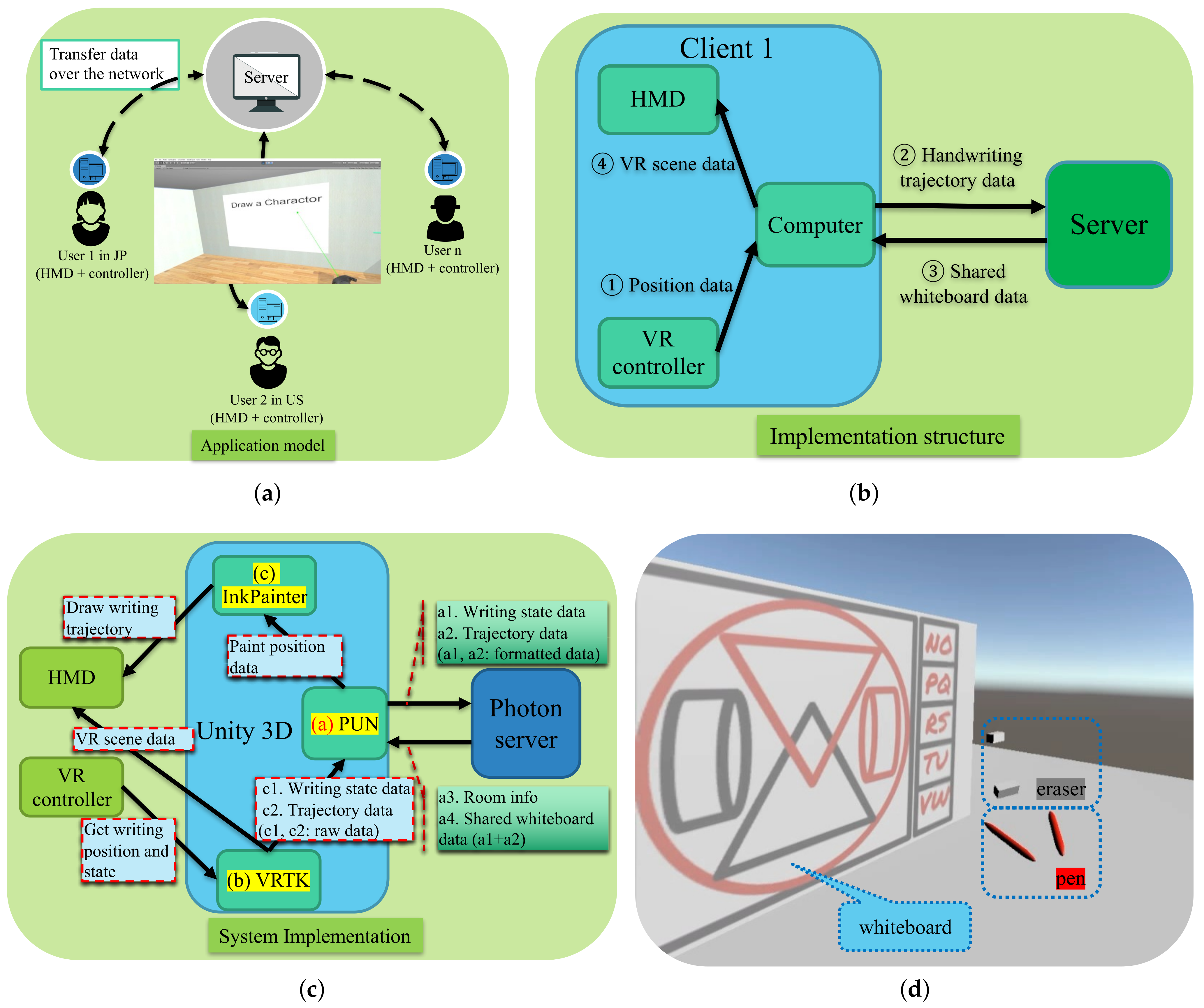

The application model of the VR whiteboard system is shown in Figure 2a. User 1 is located in Japan, whereas User 2 is located in the United States. To resolve work-related issues, the two must cooperate using a whiteboard, as they would in an office conference room; with the VR whiteboard, they can now do so remotely. The system consists of a server hosted in the cloud and multiple clients. To join the shared VR whiteboard, each user needs a computer and a VR device kit.

3.1. System Implementation

The implementation structure of the VR whiteboard system is shown in Figure 2b. Users enter the VR scene through the HMD, and the system then performs the following four steps: (1) the VR controllers capture the positions of the handwriting trajectory; (2) each client sends its handwriting trajectory data to the server; (3) each client obtains the shared whiteboard data from the server; (4) the HMD obtains the VR whiteboard scene data, generates the VR scene, and displays it to the user.
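The four steps can be illustrated with a toy, single-process model. This is not the authors’ Unity/PUN implementation: the `RelayServer` and `Client` classes, the `StrokePoint` record, and the normalized (x, y) whiteboard coordinates are hypothetical, and in the real system the data travel over the network through Photon’s cloud server.

```python
from dataclasses import dataclass, field


@dataclass
class StrokePoint:
    """One sampled pen-tip position on the whiteboard (hypothetical format)."""
    user_id: str
    x: float
    y: float
    pen_down: bool


@dataclass
class RelayServer:
    """In-memory stand-in for the cloud server holding the shared whiteboard."""
    points: list = field(default_factory=list)

    def publish(self, point: StrokePoint) -> None:
        # Step (2): a client uploads its handwriting trajectory data.
        self.points.append(point)

    def snapshot(self) -> list:
        # Step (3): clients obtain the shared whiteboard data.
        return list(self.points)


@dataclass
class Client:
    user_id: str
    server: RelayServer

    def capture(self, x: float, y: float, pen_down: bool) -> StrokePoint:
        # Step (1): the controller reports the pen-tip position and state.
        return StrokePoint(self.user_id, x, y, pen_down)

    def render(self) -> list:
        # Step (4): build the scene from the shared data; here we only
        # return the pen-down points that would be drawn as ink.
        return [(p.user_id, p.x, p.y) for p in self.server.snapshot() if p.pen_down]


server = RelayServer()
alice, bob = Client("user1", server), Client("user2", server)
server.publish(alice.capture(0.10, 0.20, True))
server.publish(bob.capture(0.50, 0.60, True))
server.publish(bob.capture(0.55, 0.65, False))
print(bob.render())  # [('user1', 0.1, 0.2), ('user2', 0.5, 0.6)]
```

Because every client renders from the same server-side list, both users see each other’s ink; a real deployment would push updates to clients rather than have them poll a snapshot.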

The VR whiteboard system consists of three main modules, as shown in Figure 2c: (a) the Photon Unity Networking (PUN) module implements multiplayer synchronization; (b) the Virtual Reality Toolkit (VRTK) controls the VR devices; (c) InkPainter draws the writing trajectory.

As shown in Figure 2c, the PUN module provides the multiplayer functionality of the VR whiteboard. It also acts as the system’s data processing center, synchronizing data between clients through the server deployed in the cloud. PUN is the Unity multiplayer package of Photon, an independent networking engine and multiplayer platform. Algorithm 1 shows the processing flow of the PUN module. First, the application connects to the Photon server and checks whether a VR whiteboard room exists; if so, it enters the room; otherwise, it creates a room and then enters it. The system then creates a pen and an eraser for each participant. Subsequently, the application enters the data synchronization stage: it transmits the current player’s data to the server, receives other players’ data through the server, and processes data from the other two modules.

Algorithm 1. The processing flow of the PUN module.

Input: …

Output: …

1: if no VR whiteboard room exists then

2: Create_room() ▹ If there is no room available in the system, create a room (including a whiteboard) and return the room address.

3: end if

4: Enter_existing_room() ▹ Enter the shared virtual room via the room address.

5: Instantiate_objects(pen, eraser) ▹ Instantiate the pen and eraser for the user.

6: while the Exit button has not been pressed do ▹ The user can exit the current room with the Exit button.

7: Data_sending/receiving/processing(…, …) ▹ Send, receive, and process the drawing data for users’ remote collaboration in the room.

8: end while
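The control flow of Algorithm 1 can be sketched in Python with a toy lobby in place of the Photon server. `ToyLobby`, `join_whiteboard`, `session_loop`, and the room name are hypothetical. For reference, PUN 2 provides `PhotonNetwork.JoinOrCreateRoom`, which covers lines 1 to 4 in a single call; the text does not say whether the authors used it.

```python
class ToyLobby:
    """Hypothetical stand-in for the Photon server's list of rooms."""

    def __init__(self):
        self.rooms = {}  # room name -> list of participants

    def room_exists(self, name):
        return name in self.rooms

    def create_room(self, name):
        # Line 2: create a room (with its whiteboard) and return its address.
        self.rooms[name] = []
        return name

    def enter_room(self, name, user):
        # Line 4: enter the shared virtual room via its address.
        self.rooms[name].append(user)


def join_whiteboard(lobby, user, room="vr-whiteboard"):
    """Lines 1-5: join the room, creating it first if it does not exist."""
    if not lobby.room_exists(room):  # lines 1-3
        lobby.create_room(room)
    lobby.enter_room(room, user)  # line 4
    # Line 5: each participant gets their own pen and eraser.
    return {"pen": f"{user}-pen", "eraser": f"{user}-eraser"}


def session_loop(events, handle):
    """Lines 6-8: process synchronization events until the user presses Exit."""
    processed = 0
    for event in events:
        if event == "exit":
            break
        handle(event)  # line 7: send/receive/process drawing data
        processed += 1
    return processed


lobby = ToyLobby()
tools1 = join_whiteboard(lobby, "user1")  # first user creates the room
tools2 = join_whiteboard(lobby, "user2")  # second user enters the existing room
print(lobby.rooms)  # {'vr-whiteboard': ['user1', 'user2']}
```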

VRTK is another important module. It connects Unity with the VR devices, obtains the handwriting position and state from the controller, presents the 3D scene to the user through the HMD, and sends the handwriting position and state data to the PUN module. The InkPainter module displays the writing/drawing trajectory in the shared 3D whiteboard scene: it obtains paint position data from the PUN module and draws the trajectory on the VR whiteboard.
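The sketch below illustrates the general idea behind texture painting on a whiteboard surface; it is not InkPainter’s code or API. It assumes that the pen’s contact point has already been converted to the board’s UV coordinates in [0, 1] and stamps a circular brush into a small pixel grid. The function names and brush model are hypothetical.

```python
def uv_to_pixel(u, v, width, height):
    """Map whiteboard UV coordinates in [0, 1] to integer texel indices."""
    px = min(int(u * width), width - 1)    # clamp u == 1.0 to the last column
    py = min(int(v * height), height - 1)
    return px, py


def stamp(canvas, u, v, radius, color):
    """Paint a filled circle of `radius` texels centred on (u, v), in place."""
    height, width = len(canvas), len(canvas[0])
    cx, cy = uv_to_pixel(u, v, width, height)
    for y in range(max(0, cy - radius), min(height, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(width, cx + radius + 1)):
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                canvas[y][x] = color


canvas = [[0] * 8 for _ in range(8)]        # 8x8 blank texture, 0 = background
stamp(canvas, 0.5, 0.5, radius=1, color=1)  # one ink dab at the centre
print(sum(map(sum, canvas)))  # 5
```

In practice, successive dabs along a stroke would usually be interpolated so that fast pen movements still leave a continuous line.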

3.2. Usage of the Proposed VR Whiteboard

Figure 2d provides a view of the VR whiteboard system. The virtual space contains virtual pens and erasers. After putting on the HMD, the user can view this virtual 3D space and move freely within the room-scale area. The usage procedure and other important instructions are presented below.

3.2.1. Get Ready

Launch the system program and power on all connected devices (computer, network, controller, etc.). The user then simply puts on the HMD and picks up the controller.

3.2.2. Moving and Controlling

As shown in Figure 2d, the system produces a pen and an eraser for each user entering the virtual space of the VR whiteboard. Using the VR controller, users can move around and write on the VR whiteboard just as they would with a marker pen. To grab the pen, the user presses the “Grip” button on the controller; pressing “Grip” again releases the pen. The virtual eraser is operated in the same way.

3.2.3. Stroke Segmentation

Users write on the virtual whiteboard while holding the pen with the controller. When the virtual pen touches the virtual whiteboard, it produces black ink at the contact point; when the pen is lifted off the whiteboard, writing stops. This is how automatic stroke segmentation is implemented. In addition, when the pen enters writing mode, its color changes from red to black, allowing users to see the writing status in real time. Over high-speed Ethernet, each user’s writing and erasing actions are synchronized with the other users, enabling remote collaboration.
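The Grip toggle described in Section 3.2.2 and the contact-based stroke segmentation above can be modelled as a small state machine. This is a hypothetical sketch, not the system’s Unity/VRTK code: `VirtualPen`, the per-frame `update` call, and the boolean contact flag (which in Unity would typically come from a collision between the pen tip and the board) are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualPen:
    held: bool = False
    touching: bool = False
    strokes: list = field(default_factory=list)

    @property
    def color(self) -> str:
        # The pen turns from red to black while it is in writing mode.
        return "black" if self.touching else "red"

    def press_grip(self) -> None:
        # Each "Grip" press toggles between grabbing and releasing the pen.
        self.held = not self.held
        if not self.held:
            self.touching = False

    def update(self, in_contact: bool, point: tuple) -> None:
        """Feed one tracking sample: is the tip on the board, and where."""
        if not self.held:
            return
        if in_contact and not self.touching:
            self.strokes.append([])         # contact begins: start a new stroke
        if in_contact:
            self.strokes[-1].append(point)  # still touching: extend the stroke
        self.touching = in_contact          # lifting the pen ends the stroke


pen = VirtualPen()
pen.press_grip()  # grab the pen
samples = [(True, (0, 0)), (True, (1, 0)), (False, (2, 0)), (True, (3, 0))]
for contact, p in samples:
    pen.update(contact, p)
print(len(pen.strokes), pen.color)  # 2 black
```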

4. Evaluation

This section evaluates the VR whiteboard, the traditional whiteboard (pen), and the electronic whiteboard (mouse) in terms of collaboration efficiency and user experience, and then measures the end-to-end delay of the VR whiteboard system.

4.1. Collaboration Efficiency Experiment

4.1.1. Efficiency Experiment Design

We list the main factors included in the three efficiency experiment scenes, then analyze the constraints and combination relationships between these factors, and finally obtain three combinations of factors that need to be considered in the experiment.

Figure 3 depicts the three scenes of the evaluation experiment, as identified by the factor analysis. Table 2 compares the characteristics of the experimental scenes in further detail.

Figure 3a depicts the traditional whiteboard (pen) scene: the whiteboard is written on with pens and erased with erasers. The electronic whiteboard (mouse) scene is shown in Figure 3b: users operate the mouse to select and control the writing tool to write/draw on the whiteboard, and to select and hold the eraser to remove content. The VR whiteboard scene is shown in Figure 3c: the user puts on an HMD, enters the virtual whiteboard environment, selects and operates the pen and eraser with the controller, and writes/draws and erases as with a regular whiteboard.

The average age of the 12 participants is 25. Participants are randomly paired into groups of two; because sixteen pairs require 32 participant slots, individual participants take part in more than one group. In total, there are sixteen groups in the evaluation experiment, as shown in Table 3 and Table 4.

To avoid practice effects, we pre-train each participant before the experiment, ensuring that each participant can complete the experimental task without noticeable pauses. A group passes the pre-test only when the deviation (maximum minus minimum) of three consecutive pre-test results is smaller than 10 s. Two examples follow.

Group 1 failed. Its three consecutive pre-test results are 65 s, 58 s, and 68 s. The maximum is 68 s and the minimum is 58 s, so the deviation (max − min) is 10 s, which does not meet the requirement (the deviation must be smaller than 10 s). Group 1 therefore needs to continue pre-training.

Group 3 passed. Its three consecutive pre-test results are 65 s, 59 s, and 63 s. The deviation is 6 s, which meets the requirement, so Group 3 can end the pre-training stage and start the formal experiment.
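The pass criterion can be written as a short check that reproduces the two examples above. Evaluating the most recent three trials is our reading of “three consecutive” results; the function name and parameters are illustrative.

```python
def passes_pretest(times_s, threshold_s=10.0, runs=3):
    """Return True if the last `runs` consecutive pre-test times are stable.

    Stability follows the criterion in the text: the deviation
    (max - min) must be strictly smaller than the threshold.
    """
    if len(times_s) < runs:
        return False  # not enough consecutive trials yet
    recent = times_s[-runs:]
    return max(recent) - min(recent) < threshold_s


print(passes_pretest([65, 58, 68]))  # False: deviation is 10 s
print(passes_pretest([65, 59, 63]))  # True: deviation is 6 s
```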

The experimental tasks (Figure 3d) comprise 10 distinct English letters and basic graphic elements such as straight lines, curves, squares, circles, and triangles. Each participant picks a color, and the two participants then work together to complete the graphics and text in each of the three experimental scenes. The drawn lines must not exceed the template’s boundary. The constant width of the template fixes the required writing precision, thereby controlling this experimental variable.

4.1.2. Experimental Process

Prepare each participant adequately through comprehensive pre-training and pre-testing.

To eliminate the sequence effect, have each group complete the task in the three experimental scenes in the order stated in Table 3.

Measure the duration of each task with a stopwatch and record the results.

Have each participant complete the questionnaire.

Collect the questionnaires and process the data.
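Table 3 fixes the actual scene order for each group and is not reproduced here. As an illustration of one common way to counter sequence effects (an assumption, not necessarily how Table 3 was constructed), the sketch below cycles the 16 groups through all six possible orders of the three scenes, so each order is used two or three times.

```python
from itertools import permutations

SCENES = ("Pen", "Mouse", "VR")


def counterbalanced_orders(n_groups, scenes=SCENES):
    """Give each group a scene order, cycling through every permutation."""
    orders = list(permutations(scenes))  # 3! = 6 possible orders
    return [orders[i % len(orders)] for i in range(n_groups)]


plan = counterbalanced_orders(16)
print(plan[:2])  # [('Pen', 'Mouse', 'VR'), ('Pen', 'VR', 'Mouse')]
```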

We record the time required to complete the experimental tasks, compute the mean and standard deviation (SD), and conduct a statistical significance test (t-test). In each whiteboard scene, the sixteen experimental groups (of two participants each) performed the collaboration efficiency task at least twice. To assess the collaboration efficiency of the three whiteboard scenes consistently, we add the last two trials of each group to Table 3.
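The summary statistics and significance test can be sketched with the Python standard library. The text does not state which t-test variant was used; the sketch assumes Welch’s unequal-variance test, and the input times are made up for illustration. To obtain a p-value, `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic; a paired design would use `scipy.stats.ttest_rel` instead.

```python
import math
from statistics import mean, stdev


def welch_t(a, b):
    """Welch's two-sample t statistic and its approximate degrees of freedom."""
    va, vb = stdev(a) ** 2 / len(a), stdev(b) ** 2 / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Made-up completion times in seconds, NOT the paper's data.
scene_a = [10.0, 12.0, 14.0]
scene_b = [20.0, 22.0, 24.0]
t, df = welch_t(scene_a, scene_b)
print(round(t, 3), round(df, 1))  # -6.124 4.0
```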

Arrangement of the whiteboard efficiency evaluation experiment, i.e., the order of the experiments for the three scenes: the traditional whiteboard (pen), the electronic whiteboard (mouse), and the proposed VR whiteboard.

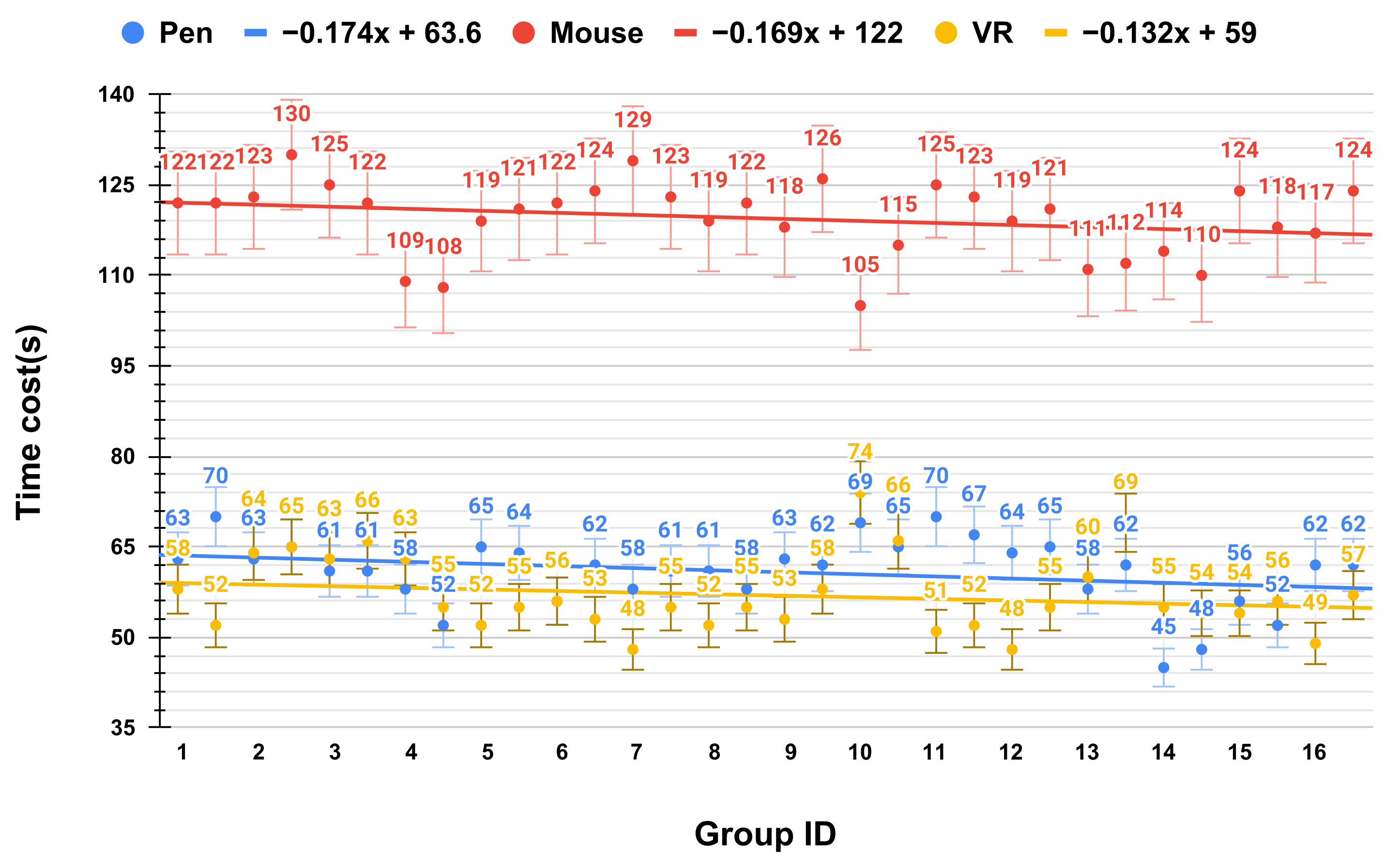

4.1.3. Efficiency Experiment Result

Figure 4 shows the time cost results of the collaboration efficiency experiment. The three scenes (Pen/Mouse/VR) correspond to the blue, red, and yellow dots and lines, respectively. We drew regression lines for the data and added the regression equations to the upper legend, from which the approximate average values of the three scenes can be read directly (Pen: 63.6 s, Mouse: 122 s, VR: 59 s). Detailed results of the collaboration efficiency experiment are shown in Table 4. We computed the mean and standard deviation of the results for the three scenes (Pen/Mouse/VR). The average time required to complete the experimental task collaboratively is 60.9 s with a traditional whiteboard, 119.4 s with an electronic whiteboard, and 57.0 s with a VR whiteboard. The VR whiteboard scene exhibits both the lowest mean and the lowest standard deviation. The larger the t-test result (p-value), the less significant the difference between the two compared samples.

Figure 4 depicts the experimental results for the remote and local collaboration efficiency of the VR whiteboard. Compared with the electronic whiteboard, the VR whiteboard reduces the average time spent on remote collaborative tasks (an extremely statistically significant difference), and its time cost is more stable, as indicated by a smaller standard deviation.

As illustrated in Figure 4, the VR whiteboard yields a slightly higher local collaboration efficiency than the traditional whiteboard; however, there is no statistically significant difference between the two in either the mean or the consistency of the local collaboration task time.

4.1.4. Collaborative Efficiency Experiment Conclusion

The VR whiteboard supports natural handwriting, whereas the electronic whiteboard relies on a mouse as its input device. The time cost of the traditional whiteboard serves as the baseline. The collaboration efficiency of our VR whiteboard is 52% higher than that of the electronic whiteboard.
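The text does not spell out the formula behind the 52% figure. One reading that reproduces it (an assumption) is the relative reduction in mean task time, computed here from the means reported in Section 4.1.3. The VR-versus-pen line is shown only for comparison; the text reports that difference as not statistically significant.

```python
# Mean collaborative task times (s) reported in Section 4.1.3.
T_PEN, T_MOUSE, T_VR = 60.9, 119.4, 57.0


def time_reduction(t_new, t_ref):
    """Fraction of the reference task time saved by the new condition."""
    return (t_ref - t_new) / t_ref


print(f"VR vs. mouse: {time_reduction(T_VR, T_MOUSE):.0%}")  # 52%
print(f"VR vs. pen:   {time_reduction(T_VR, T_PEN):.0%}")    # 6%
```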

The VR whiteboard’s local collaboration efficiency is equivalent to that of the traditional whiteboard.

4.2. User Experience Experiment

A questionnaire survey is conducted after the collaboration efficiency experiment, with one questionnaire for each of the three experiment scenes (Pen/Mouse/VR).

4.2.1. Questionnaire Design

The user experience scale is based on the semantic differential scale method. Each question has seven options, as shown in Figure 5. The seven options (Extremely Yes/Quite Yes/Slightly Yes/Pass. I have not experienced three kinds of whiteboards yet./Slightly No/Quite No/Extremely No) correspond to seven scores, from +3 down to −3. As shown in Table 5, the user experience scale consists of 21 questions covering five aspects (Input/Output, Novelty, Usability, Efficiency, and Comprehensive). Two rules are applied when generating the questionnaire:

The questions are presented in a different order from that in Table 5, which could improve the validity of the results.

There are two inverted questions (Q4-2 and Q5-1 in Table 5), which help us assess the validity of the questionnaire results.
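A minimal scoring sketch follows. The option-to-score mapping (+3 for “Extremely Yes” down to −3 for “Extremely No”, with “Pass” as 0) is our assumption, consistent with the −3 to 3 score range reported in Section 4.2.2. Reverse-scoring the inverted items and flagging respondents who give the same non-neutral option to every item (“straight-lining”) are common practice, not details confirmed by the text; the question ID `Q1-1` is illustrative.

```python
OPTION_SCORES = {
    "Extremely Yes": 3, "Quite Yes": 2, "Slightly Yes": 1, "Pass": 0,
    "Slightly No": -1, "Quite No": -2, "Extremely No": -3,
}
INVERTED = {"Q4-2", "Q5-1"}  # negatively worded items listed in Table 5


def score(question_id, answer):
    """Convert one answer to a score in [-3, 3], flipping inverted items."""
    s = OPTION_SCORES[answer]
    return -s if question_id in INVERTED else s


def straight_lined(answers):
    """True if the respondent chose the same non-neutral option for every
    item, inverted ones included: a common sign of inattentive answering."""
    options = set(answers.values())
    return len(options) == 1 and options != {"Pass"}


resp = {"Q1-1": "Quite Yes", "Q4-2": "Quite No"}
print([score(q, a) for q, a in resp.items()])  # [2, 2]
print(straight_lined(resp))                     # False
```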

4.2.2. User Experience Experiment Results

We collect the questionnaire results, calculate the mean and standard deviation, and perform a statistical significance test (t-test). The questionnaire results of the three scenes (Pen/Mouse/VR) are shown in Table 6. The first five rows give the mean and standard deviation for each of the five aspects of the user experience scale (Comprehensive, Input/Output, Efficiency, Novelty, Usability), and the sixth row gives the overall mean and standard deviation. Table 6 is shaded with a color gradient, which clearly shows that the VR scene obtains the highest user experience scores (the largest values/the darkest color) and the most stable scores (the smallest standard deviations/the darkest color). The last two rows give the results of the statistical difference tests (t-test); the smaller the value, the more significant the difference between the two compared samples.

Figure 6 shows the scores of the three scenes for the five aspects of the user experience scale and the overall average. The three scenes (Pen/Mouse/VR) correspond to the blue, red, and yellow dots and lines, respectively. Scores range from −3 to 3 with a resolution of 0.1. Figure 6 shows the following user experience results for the VR whiteboard compared with the electronic and traditional whiteboards:

The VR whiteboard provides a better user experience (statistically significant difference) both overall and in each of the five aspects (Comprehensive, Input/Output, Efficiency, Novelty, Usability).

The results of the VR whiteboard are more stable (smaller standard deviation).

4.2.3. Conclusion of User Experience Experiment

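The user experience score of the VR whiteboard is significantly higher than that of the electronic whiteboard, by 60%, and higher than that of the traditional whiteboard, by 23%. Since the scale ranges from −3 to 3, the results are shifted by +3 to the range 0 to 6 so that the comparison uses positive values. The resulting average user experience values for the three scenes (Pen/Mouse/VR) are 3.9, 3.0, and 4.8. The relative improvement is then obtained through Equation (2):

$$\Delta_X = \frac{\bar{S}_{\mathrm{VR}} - \bar{S}_X}{\bar{S}_X} \times 100\%, \qquad X \in \{\mathrm{Pen}, \mathrm{Mouse}\}, \tag{2}$$

where $\bar{S}_X$ is the shifted average score of scene $X$. This gives $(4.8 - 3.0)/3.0 = 60\%$ over the electronic whiteboard (Mouse) and $(4.8 - 3.9)/3.9 \approx 23\%$ over the traditional whiteboard (Pen).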

4.2.4. The Preferences of Users Regarding the Remote Collaborative Whiteboards

In the questionnaires for the “Pen” and “VR” scenes, participants answer a specific voting question: “Which type of whiteboard do you most want to use for remote collaboration?” The 12 participants voted a total of 24 times; a participant who had not yet experienced all three types of whiteboards voted “pass”. The “VR whiteboard” received 18 votes (75%) and the “electronic whiteboard” received 4 votes (16.7%), indicating that users prefer the VR whiteboard for remote collaboration.

4.2.5. User Feedback

The last, optional part of the questionnaire invites feedback from the participants. After completing the time-consuming experiment and questionnaire, two participants kindly left the following comments.

It was very easy to use for collaborative works because we do not need to think about physical distances each other (VR whiteboard). (From user ID-142)

Muscle get tired in this experiment (VR whiteboard). (From user ID-146)

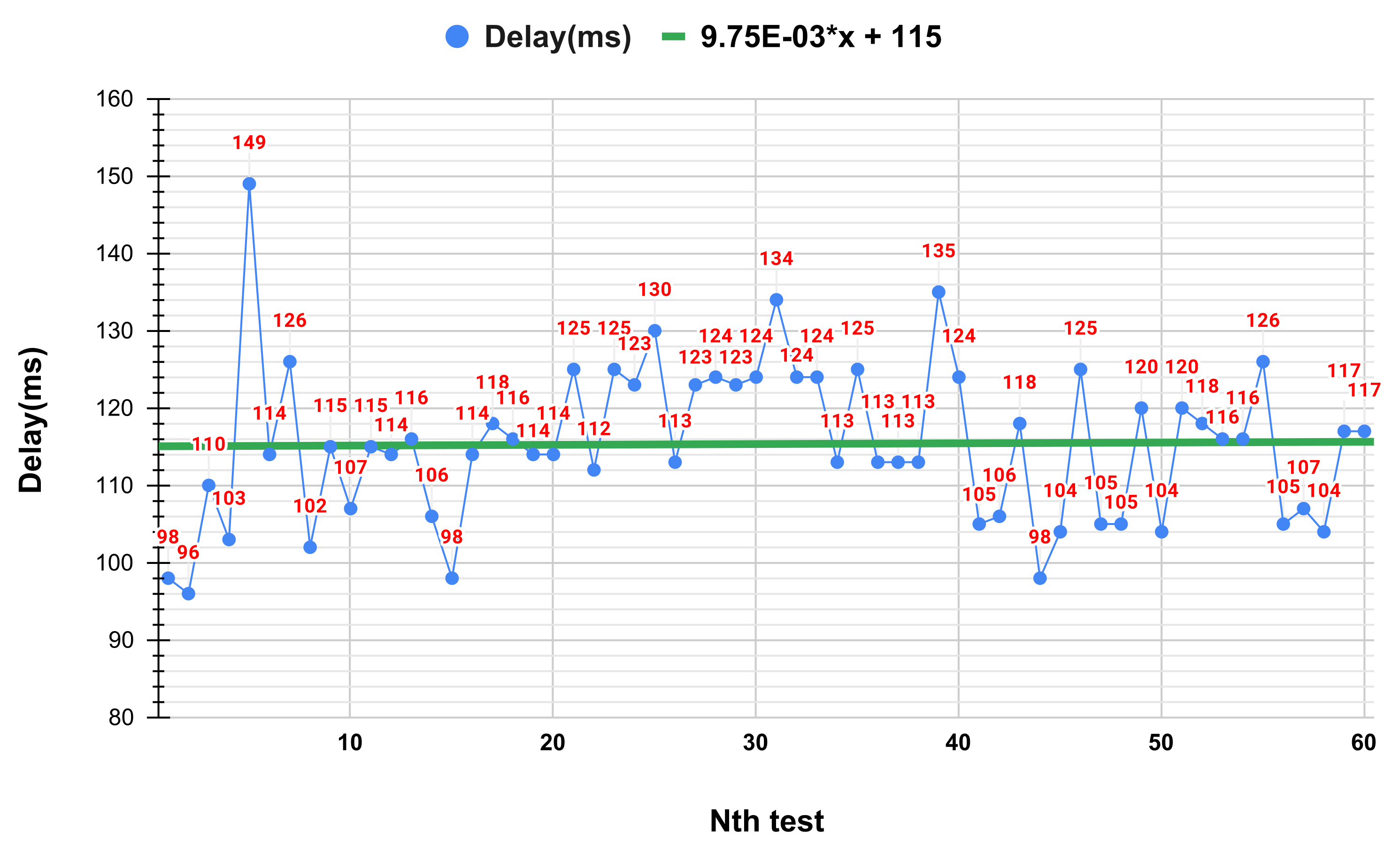

4.3. System End-to-End Delay Evaluation

The end-to-end delay of the VR whiteboard system is measured. The average synchronization delay of a writing/drawing action is 115 ms (SD = 10 ms).

Figure 7 shows the results of the delay test. We drew a regression line of the data and added the regression equation after the upper legend; it reflects the average over 60 test runs. According to the Internet-Draft “Delay Limits for Real-Time Services” [63], submitted to the IETF in 2016, the VR whiteboard system meets the delay requirements for “Voice communication” (<150 ms) and “Third person avatar games” (<120 ms). In addition, the participants did not report that the system was slow or laggy.
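The comparison against the cited delay bounds can be expressed as a small check. The sample values below are hypothetical and are not the 60 measured runs; the function name is illustrative, and how the end-to-end delay itself was timestamped is not described in the text.

```python
from statistics import mean, stdev

# Delay bounds (ms) quoted in the text from the cited IETF Internet-Draft [63].
LIMITS_MS = {"Voice communication": 150, "Third person avatar games": 120}


def check_delay(samples_ms):
    """Return the mean and sample SD of the delays and, for each service
    class, whether the mean delay is strictly below its bound."""
    m, sd = mean(samples_ms), stdev(samples_ms)
    return m, sd, {service: m < bound for service, bound in LIMITS_MS.items()}


# Hypothetical delays in ms, for illustration only.
m, sd, verdict = check_delay([100, 120, 110])
print(m, sd, verdict)
```

Comparing only the mean ignores tail latency; a stricter check could require a high percentile of the samples to stay under each bound.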

5. Discussion

Having completed the evaluation experiments, we now discuss the VR whiteboard system based on its implementation and the evaluation results.

5.1. Advantages of the VR Whiteboard

The efficiency experiment demonstrates the high efficiency of the VR whiteboard in remote collaboration. In addition, the detailed results of the user experience scale reveal the following further advantages of the VR whiteboard.

5.1.1. Protecting the Privacy of Users

The result of Q3-1 (Table 5), “It protects my privacy.” (Pen: 0.08, Mouse: 0.67, VR: 1.83), shows that the VR whiteboard scores highest for protecting user privacy. For the traditional and electronic whiteboards, a common privacy problem is that passers-by can see the content written on the whiteboard or screen. With the VR whiteboard, however, only the current collaborating partners can see the written content, which protects privacy.

5.1.2. No Hindrance During Collaboration

The VR whiteboard scores highest on Q4-3 (Table 5), “There is no conflict when the two write at the same time.” (Pen: −1.25, Mouse: 0.08, VR: 1.92), and Q4-4 (Table 5), “The two can always write freely.” (Pen: −0.75, Mouse: 0.17, VR: 2.17). Writing on a traditional whiteboard with a marker pen is comfortable and smooth. However, when multiple people collaborate, they often need to write at the same time and thus get in each other’s way. This is a disadvantage of the traditional whiteboard but an advantage of the VR whiteboard. Both VR whiteboards and electronic whiteboards support remote multi-person collaboration, and VR whiteboards additionally support natural, smooth writing. The first user comment, “It was very easy to use for collaborative works because we do not need to think about physical distances each other (VR whiteboard). (From user ID-142)”, also reflects this advantage.

5.2. Disadvantages and Limitations of the VR Whiteboard

The proposed remote collaborative whiteboard is based on VR technology and a computer network, which are also the source of its disadvantages and limitations. Because VR headsets and controllers are not yet fully mature in terms of size and usability, their adoption is still relatively low. New users of the VR whiteboard system, especially those without experience using VR devices, may need time and additional practice to become proficient with it. In addition, the collaborative function of the VR whiteboard requires stable, low-latency network support, which makes the current system unsuitable for outdoor and mobile scenarios. However, as the performance of VR devices improves and 5G networks become more widespread, VR whiteboard systems are expected to achieve lower latency and greater network robustness.

5.3. Potential Improvements to the VR Whiteboard System

Based on the system performance and user feedback, we summarize some possible improvements to the VR whiteboard system below.

5.3.1. Ease of Use

The VR whiteboard does not score highest on Q3-4 (Table 5), “It is easy to get familiar with it.” (Pen: 2.08, Mouse: 0.17, VR: 1.83), and shows no clear advantage on Q3-3 (Table 5), “It is easy to learn how to use it.” (Pen: 2.17, Mouse: 1.67, VR: 2.33). The VR whiteboard is not easy to use at first because many users have no experience with VR devices: they must first learn to use the VR devices and then learn to use the VR whiteboard. As VR devices become more popular, learning to use VR systems is expected to become easier. Moreover, although the VR whiteboard implements the same natural handwriting input mechanism as the conventional whiteboard, it provides no tactile feedback when writing; currently, the writing state is indicated only by a change in the pen’s color. We plan to simulate tactile feedback through controller vibration, which should make the VR whiteboard easier to use.

5.3.2. User Comfort

The VR whiteboard does not score highest on Q3-2 (Table 5), “It is stable and reliable.” (Pen: 1.83, Mouse: 0.00, VR: 1.17), and one participant commented, “Muscle get tired in this experiment (VR whiteboard)” (From user ID-146). VR devices are becoming lighter and more comfortable, and wireless HMDs that require no cables reduce user fatigue and improve comfort. In addition, the scene of the VR whiteboard system is currently misplaced intermittently; as positioning technology advances, the system’s stability is expected to improve further.

5.4. Potential Improvement of the Evaluation Experiment

Based on the experiment and questionnaire survey, we propose a possible improvement to the evaluation of the VR whiteboard system concerning the immersive experience. The VR whiteboard scores highest on Q5-3 (Table 5), “I feel that time passed quickly during the previous experiment”. In psychology, “flow”, also referred to as being “in the zone”, is a mental state in which people are completely immersed in an activity, which changes their perception of time. For example, when using a system, a pleasant experience makes the user feel that time passes quickly, whereas a negative experience can make one minute feel like an hour. This item was intended to verify whether our VR whiteboard system gives users a sense of immersion [40]. However, the experiment did not ultimately achieve this goal, because a single session with each system lasts less than two minutes, which is not long enough for users to become immersed.

6. Conclusions

This work developed a VR whiteboard system based on a VR device and the PUN service. It allows multiple users to collaborate remotely using natural handwriting. The end-to-end latency is about 115 ms (σ = 10 ms), which meets the requirements of real-time Internet services. In addition, we conducted controlled comparison studies among a traditional whiteboard, an electronic whiteboard, and the proposed VR whiteboard to evaluate their operating efficiency. The average time required to complete the experimental task collaboratively was 60.9 s with the traditional whiteboard, 119.4 s with the electronic whiteboard, and 57.0 s with the VR whiteboard. Thus, the VR whiteboard improves cooperation efficiency, reducing the task time by 52% compared with the electronic whiteboard, and performs comparably to the traditional whiteboard. Finally, we conducted questionnaire surveys, whose results indicate that the VR whiteboard provides a better user experience than the traditional whiteboard (by 60%) and the electronic whiteboard (by 23%).
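The 52% figure can be reproduced from the mean task times if it is read as the relative reduction in task time with respect to the electronic whiteboard. A minimal sketch of that arithmetic (the function name is illustrative):

```python
def relative_reduction(baseline_s: float, new_s: float) -> float:
    """Fraction by which new_s is shorter than baseline_s."""
    return (baseline_s - new_s) / baseline_s


# Mean collaborative task times reported in Table 4 (seconds).
MEAN_TIME_S = {"pen": 60.9, "mouse": 119.4, "vr": 57.0}

vr_vs_mouse = relative_reduction(MEAN_TIME_S["mouse"], MEAN_TIME_S["vr"])
vr_vs_pen = relative_reduction(MEAN_TIME_S["pen"], MEAN_TIME_S["vr"])
print(f"VR vs mouse: {vr_vs_mouse:.0%} shorter")  # 52% shorter
print(f"VR vs pen:   {vr_vs_pen:.0%} shorter")    # 6% shorter
```

Read instead as a throughput ratio (tasks completed per unit time), the same means give 119.4 / 57.0 ≈ 2.1, so the definition of "efficiency" matters when comparing such figures.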

We propose two possible directions for extending the current work, which may help readers conduct follow-up research. Currently, most consumer-grade hand controllers are relatively large and heavy and cannot capture finger movements. Therefore, we are considering using the motion-capture gloves designed by our laboratory [64] as an input device for the VR whiteboard. In addition, we plan further research on mixed reality, in which the whiteboard, pen, eraser, and written content can be either physical or virtual and can be adapted to various application scenarios, such as grabbing a real pen to write on the virtual whiteboard and then using a real eraser to erase content in the virtual scene. We believe these improvements will lead to a more natural writing experience.

Author Contributions

J.W., Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Project administration, Resources, Software, Validation, Writing—original draft, Writing—review and editing; L.J., Conceptualization, Funding acquisition, Project administration, Resources, Writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by JSPS KAKENHI Grant Number 22K12114, JKA Foundation, and NEDO Younger Research Support Project Grant Number JPNP20004.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank all anonymous reviewers for their careful reading of our manuscript and their many insightful comments and suggestions, which helped us improve the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript.

| Abbreviation | Meaning |
|---|---|
| AR | Augmented Reality |
| CVE | Collaborative Virtual Environment |
| CCU | Concurrent User |
| GUI | Graphical User Interface |
| HMD | Head-Mounted Display |
| HCI | Human–Computer Interaction |
| PC | Personal Computer |
| PUN | Photon Unity Networking |
| RCWS | Remote Collaborative Whiteboard System |
| SD | Standard Deviation |
| 3D | Three-Dimensional |
| UNet | Unity Networking |
| UoA | University of Aizu |
| VR | Virtual Reality |
| WS | Work Station |

References

- McCanne, S. A Distributed Whiteboard for Network Conferencing; Citeseer: Princeton, NJ, USA, 1992.

- Hastings, C.; Brunotte, J. Total immersion: VR headsets in language learning. 2016 Pansig J. 2017, 101.

- Wang, J.; Jing, L. Operation Efficiency Study on a New Cooperative VR Whiteboard System. In Proceedings of the International Conference on Human–Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2021; pp. 629–636.

- Webel, S.; Bockholt, U.; Engelke, T.; Gavish, N.; Olbrich, M.; Preusche, C. An augmented reality training platform for assembly and maintenance skills. Robot. Auton. Syst. 2013, 61, 398–403.

- Economou, D.; Doumanis, I.; Argyriou, L.; Georgalas, N. User experience evaluation of human representation in collaborative virtual environments. Pers. Ubiquitous Comput. 2017, 21, 989–1001.

- Porwol, L.; Ojo, A. VR-Participation: The feasibility of the Virtual Reality-driven multi-modal communication technology facilitating e-Participation. In Proceedings of the 11th International Conference on Theory and Practice of Electronic Governance, Galway, Ireland, 4–6 April 2018; pp. 269–278.

- Kondo, R.; Sugimoto, M.; Minamizawa, K.; Hoshi, T.; Inami, M.; Kitazaki, M. Illusory body ownership of an invisible body interpolated between virtual hands and feet via visual-motor synchronicity. Sci. Rep. 2018, 8, 7541.

- Mai, C.; Thiem, N.; Hussmann, H. DrawingPresence: A Method for Assessing Temporal Fluctuations of Presence Status in a VR Experience. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 3369827.

- Du, R.; Li, D.; Varshney, A. Geollery: A mixed reality social media platform. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–13.

- Guo, A.; Canberk, I.; Murphy, H.; Monroy-Hernández, A.; Vaish, R. Blocks: Collaborative and Persistent Augmented Reality Experiences. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies; ACM: New York, NY, USA, 2019; Volume 3, pp. 1–24.

- Dijkstra-Soudarissanane, S.; Assal, K.E.; Gunkel, S.; Haar, F.T.; Hindriks, R.; Kleinrouweler, J.W.; Niamut, O. Multi-sensor capture and network processing for virtual reality conferencing. In Proceedings of the 10th ACM Multimedia Systems Conference, Amherst, MA, USA, 18–21 June 2019; pp. 316–319.

- Bai, H.; Sasikumar, P.; Yang, J.; Billinghurst, M. A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13.

- Villanueva, A.; Zhu, Z.; Liu, Z.; Peppler, K.; Redick, T.; Ramani, K. Meta-AR-App: An Authoring Platform for Collaborative Augmented Reality in STEM Classrooms. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–14.

- Nan, J.; Wei, G.; Yuling, Y.; Lin, W. Leveraging TPDV to Direction Estimation With a Single Pair of Commodity Devices. IEEE Access 2020, 8, 62248–62260.

- Lindblom, A. A Study of Networking Performance in a Multi-user VR Environment: Using Unity and the Mirror Library, diva2:1440801, 17 June 2020. Available online: https://www.cse.unr.edu/~fredh/papers/journal/75-auffmpvra/paper.pdf (accessed on 17 November 2022).

- Carlson, C.P. Socket Golf-Building a Google Cardboard Game in Unity. Ph.D. Thesis, University of Wyoming Libraries, Laramie, WY, USA, 2017.

- Zou, Y.; Wang, P.; Tang, Q.; Sun, Y. Implement Multi-Character Display in Virtual Reality Environment Based on Unet and Tracker. In Proceedings of the 2019 2nd IEEE International Conference on Safety Produce Informatization (IICSPI), Chongqing, China, 28–30 November 2019; pp. 530–532.

- Salimian, M.; Brooks, S.; Reilly, D. Imrce: A unity toolkit for virtual co-presence. In Proceedings of the Symposium on Spatial User Interaction, Berlin, Germany, 13–14 October 2018; pp. 48–59.

- Ahde, J. Real-Time Unity Multiplayer Server Implementation; Kaakkois-Suomen ammattikorkeakoulu: Kouvola, Finland, 2017.

- Monaco, J.V. Bug or Feature? Covert Impairments to Human Computer Interaction. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, New York, NY, USA, 25–30 April 2020; pp. 1–15.

- Hasada, H.; Zhang, J.; Yamamoto, K.; Ryskeldiev, B.; Ochiai, Y. AR Cooking: Comparing Display Methods for the Instructions of Cookwares on AR Goggles. In Proceedings of the International Conference on Human–Computer Interaction, Island of Rhodes, Greece, 21–23 June 2019; pp. 127–140.

- Im, S.B.; Cho, M.G. Implementation of Traveling Contents for Elderly Patients using Virtual Reality and Photon Network. J. Converg. Inf. Technol. 2018, 8, 123–128.

- Gupta, Y.P.; Chawla, A.; Pal, T.; Reddy, M.P.; Yadav, D.S. 3d networking and collaborative environment for online education. In Proceedings of the 2022 10th IEEE International Conference on Emerging Trends in Engineering and Technology-Signal and Information Processing (ICETET-SIP-22), Nagpur, India, 29–30 April 2022; pp. 1–5.

- Bhugaonkar, K.; Bhugaonkar, R.; Masne, N. The Trend of Metaverse and Augmented & Virtual Reality Extending to the Healthcare System. Cureus 2022, 14, e29071.

- Thomason, J. Metaverse, token economies, and non-communicable diseases. Glob. Health J. 2022, 6, 164–167.

- Cheng, R.; Wu, N.; Chen, S.; Han, B. Reality Check of Metaverse: A First Look at Commercial Social Virtual Reality Platforms. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Christchurch, New Zealand, 12–16 March 2022; pp. 141–148.

- Hedrick, E.; Harper, M.; Oliver, E.; Hatch, D. Teaching & learning in virtual reality: Metaverse classroom exploration. In Proceedings of the 2022 IEEE Intermountain Engineering, Technology and Computing (IETC), Orem, UT, USA, 13–14 May 2022; pp. 1–5.

- Foss, J. Lessons from learning in virtual environments. Br. J. Educ. Technol. 2009, 40, 556–560.

- Dudley, J.J.; Schuff, H.; Kristensson, P.O. Bare-handed 3D drawing in augmented reality. In Proceedings of the 2018 Designing Interactive Systems Conference, Hong Kong, China, 9–13 June 2018; pp. 241–252.

- Marin, G.; Dominio, F.; Zanuttigh, P. Hand gesture recognition with leap motion and kinect devices. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 1565–1569.

- Yamada, S.; Kakue, T.; Shimobaba, T.; Ito, T. Interactive holographic display based on finger gestures. Sci. Rep. 2018, 8, 2010.

- Lazar, J.; Feng, J.H.; Hochheiser, H. Research Methods in Human–Computer Interaction; Morgan Kaufmann: Burlington, MA, USA, 2017.

- Johnson, S.; Jackson, B.; Tourek, B.; Molina, M.; Erdman, A.G.; Keefe, D.F. Immersive analytics for medicine: Hybrid 2D/3D sketch-based interfaces for annotating medical data and designing medical devices. In Proceedings of the 2016 ACM Companion on Interactive Surfaces and Spaces, Niagara Falls, ON, Canada, 6–9 November 2016; pp. 107–113.

- Swain, V.D.; Saha, K.; Abowd, G.D.; De Choudhury, M. Social and Ubiquitous Technologies for Remote Worker Wellbeing and Productivity in a Post-Pandemic World. In Proceedings of the 2020 IEEE Second International Conference on Cognitive Machine Intelligence (CogMI), Atlanta, GA, USA, 28–31 October 2020.

- Van Dam, A.; Laidlaw, D.H.; Simpson, R.M. Experiments in immersive virtual reality for scientific visualization. Comput. Graph. 2002, 26, 535–555.

- Merchant, Z.; Goetz, E.T.; Cifuentes, L.; Keeney-Kennicutt, W.; Davis, T.J. Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: A meta-analysis. Comput. Educ. 2014, 70, 29–40.

- Jang, S.; Vitale, J.M.; Jyung, R.W.; Black, J.B. Direct manipulation is better than passive viewing for learning anatomy in a three-dimensional virtual reality environment. Comput. Educ. 2017, 106, 150–165.

- Putze, S.; Alexandrovsky, D.; Putze, F.; Hoffner, S.; Smeddinck, J.D.; Malaka, R. Breaking the Experience: Effects of Questionnaires in VR User Studies. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–15.

- Nicholson, D.T.; Chalk, C.; Funnell, W.R.J.; Daniel, S.J. Can virtual reality improve anatomy education? A randomised controlled study of a computer-generated three-dimensional anatomical ear model. Med. Educ. 2006, 40, 1081–1087.

- Robertson, G.; Czerwinski, M.; Van Dantzich, M. Immersion in desktop virtual reality. In Proceedings of the 10th Annual ACM Symposium on User Interface Software and Technology, Banff, AB, Canada, 14–17 October 1997; pp. 11–19.

- Ishida, T.; Lu, Y.; Miyakawa, A.; Sugita, K.; Shibata, Y. Implementation of a high-presence immersive traditional crafting system with remote collaborative work support. Int. J. Grid Util. Comput. 2020, 11, 93–102.

- Shu, Y.; Huang, Y.Z.; Chang, S.H.; Chen, M.Y. Do virtual reality head-mounted displays make a difference? A comparison of presence and self-efficacy between head-mounted displays and desktop computer-facilitated virtual environments. Virtual Real. 2019, 23, 437–446.

- He, Z.; Rosenberg, K.T.; Perlin, K. Exploring configuration of mixed reality spaces for communication. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–6.

- Jetter, H.C.; Rädle, R.; Feuchtner, T.; Anthes, C.; Friedl, J.; Klokmose, C.N. In VR, everything is possible!: Sketching and Simulating Spatially-Aware Interactive Spaces in Virtual Reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–16.

- Wang, J.; Endo, T.; Lu, C.; Jing, L. A Novel AR Whiteboard System and Usability Study. In Proceedings of the 2019 IEEE 8th Global Conference on Consumer Electronics (GCCE), Osaka, Japan, 15–18 October 2019; pp. 28–30.

- Fang, C.; Zhang, Y.; Dworman, M.; Harrison, C. Wireality: Enabling Complex Tangible Geometries in Virtual Reality with Worn Multi-String Haptics. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–10.

- Cherubini, M.; Venolia, G.; DeLine, R.; Ko, A.J. Let us go to the whiteboard: How and why software developers use drawings. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 557–566.

- Varona-Marin, D.; Oberholzer, J.A.; Tse, E.; Scott, S.D. Post-meeting Curation of Whiteboard Content Captured with Mobile Devices. In Proceedings of the 2018 ACM International Conference on Interactive Surfaces and Spaces, Tokyo, Japan, 25–28 November 2018; pp. 43–54.

- Branham, S.; Golovchinsky, G.; Carter, S.; Biehl, J.T. Let us go from the whiteboard: Supporting transitions in work through whiteboard capture and reuse. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010; pp. 75–84.

- Moran, T.P.; van Melle, W. Tivoli: Integrating structured domain objects into a freeform whiteboard environment. In Proceedings of the CHI’00 Extended Abstracts on Human Factors in Computing Systems, The Hague, The Netherlands, 1–6 April 2000; pp. 20–21.

- Karsenti, T. The interactive whiteboard: Uses, benefits, and challenges. A survey of 11,683 students and 1131 teachers. Can. J. Learn. Technol. Rev. 2016, 42, 1240714.

- Mariz, C.; Stephenson, J.; Carter, M. Interactive whiteboards in education: A literature scoping survey. Aust. Educ. Comput. 2017, 32, 1–18.

- Warwick, P.; Mercer, N.; Kershner, R. ‘Wait, let us just think about this’: Using the interactive whiteboard and talk rules to scaffold learning for co-regulation in collaborative science activities. Learn. Cult. Soc. Interact. 2013, 2, 42–51.

- Rekimoto, J. A multiple device approach for supporting whiteboard-based interactions. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Los Angeles, CA, USA, 18–23 April 1998; pp. 344–351.

- Dillenbourg, P.; Traum, D. The role of a whiteboard in a distributed cognitive system. In Proceedings of the Swiss Workshop on Distributed and Collaborative Systems, Lausanne, Switzerland, 2 May 1997.

- Ju, W.G.; Lee, B.A.; Klemmer, S.R. Range: Exploring proxemics in collaborative whiteboard interaction. In Proceedings of the CHI’07 Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 2483–2488.

- Pedersen, E.R.; McCall, K.; Moran, T.P.; Halasz, F.G. Tivoli: An electronic whiteboard for informal workgroup meetings. In Readings in Human–Computer Interaction; Elsevier: Amsterdam, The Netherlands, 1995; pp. 509–516.

- Fan, Z.; Chi, M.; Oliveira, M.M. A sketch-based collaborative design system. In Proceedings of the 16th IEEE Brazilian Symposium on Computer Graphics and Image Processing (SIBGRAPI 2003), São Carlos, Brazil, 12–15 October 2003; pp. 125–131.

- Tung, T.L. MediaBoard: A shared whiteboard application for the MBone. Master’s Thesis, Computer Science Division (EECS), University of California, Berkeley, CA, USA, 1998.

- Mynatt, E.D.; Igarashi, T.; Edwards, W.K.; LaMarca, A. Flatland: New dimensions in office whiteboards. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Pittsburgh, PA, USA, 15–20 May 1999; pp. 346–353.

- Kukimoto, N.; Ebara, Y.; Furukawa, M.; Koyamada, K. Experimental study on thinking support using handwritten annotation at collaborative works in the tele-immersive shared environments. J. Inf. Process. Soc. Jpn. 2007, 48, 2153–2163.

- Sakuraba, Y.; Fujinaga, Y.; Yamazaki, T.; Watanabe, T.; Kaneko, T. A 3D Sketch-Based Modeling Interface for Cooperative Work. In Proceedings of the IPSJ Interaction, Tokyo, Japan, 15–17 March 2012.

- Suznjevic, M.; Saldana, J. Delay Limits for Real-Time Services. IETF Draft. 2016. Available online: https://datatracker.ietf.org/doc/html/draft-suznjevic-dispatch-delay-limits-00 (accessed on 26 November 2022).

- Lu, C.; Wang, J.; Jing, L. Hand motion capture system based on multiple inertial sensors: Demo abstract. In Proceedings of the 18th Conference on Embedded Networked Sensor Systems, Virtual Event, Japan, 16–19 November 2020; pp. 619–620.

Figure 1.

Collaborative whiteboards. (a) Collaborate locally using the traditional whiteboard; (b) Collaborate remotely using the electronic whiteboard; (c) Use of the HMD and controller (VR device).


Figure 2.

Remote collaborative whiteboard system design and implementation. (a) System application model; (b) System implementation structure; (c) System implementation and function modules; (d) VR whiteboard system snapshot.


Figure 3.

Collaboration efficiency experiment design regarding the three experiment scenes, including traditional whiteboard (pen), electronic whiteboard (mouse), and the proposed VR whiteboard. (a) Traditional whiteboard (pen) scene; (b) Electronic whiteboard (mouse) scene; (c) VR whiteboard scene; (d) Task for evaluation experiment.


Figure 4.

The time cost of experiment tasks when using a traditional whiteboard (pen), electronic whiteboard (mouse), and the proposed VR whiteboard.


Figure 5.

A partial snapshot of the user experience survey scale (questionnaire), containing only two questions as examples.

Table 5 lists all questions.


Figure 6.

Statistical summary results of user experience survey scale (questionnaire) when using a traditional whiteboard (pen), electronic whiteboard (mouse), and the proposed VR whiteboard.


Figure 7.

End-to-end delay of the proposed VR whiteboard system. The blue dots indicate the measured latency for each test, and the green line is the fitted regression line. The y-axis is limited to 80–160 ms to show more detail. The regression line is almost flat, indicating that the latency of the proposed VR whiteboard is relatively stable.
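The stability argument in the Figure 7 caption (a mean latency plus an almost flat regression line over successive tests) can be checked with a short script. This sketch assumes the latencies are listed in test order and fits an ordinary least-squares line against the test index; the demo values are placeholders, not the measurements behind Figure 7.

```python
import statistics
from typing import NamedTuple, Sequence


class LatencySummary(NamedTuple):
    mean_ms: float
    sd_ms: float     # sample standard deviation (n - 1 denominator)
    slope_ms: float  # least-squares trend, in ms per successive test


def summarize_latency(samples_ms: Sequence[float]) -> LatencySummary:
    """Summarize end-to-end latency samples listed in test order."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    xs = range(len(samples_ms))
    x_mean = statistics.fmean(xs)
    y_mean = statistics.fmean(samples_ms)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, samples_ms))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    return LatencySummary(y_mean, statistics.stdev(samples_ms), sxy / sxx)


# Placeholder values only; replace with the measured latencies in test order.
demo_ms = [112.0, 118.0, 109.0, 121.0, 115.0, 114.0, 117.0, 113.0]
s = summarize_latency(demo_ms)
print(f"mean={s.mean_ms:.1f} ms, SD={s.sd_ms:.1f} ms, trend={s.slope_ms:+.2f} ms/test")
```

A slope close to zero relative to the mean supports the "relatively stable" reading; a large SD combined with a flat slope would instead indicate jitter without drift.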


Table 1.

Related research on whiteboards in terms of “Required equipment”, “Natural handwriting experience”, “Support remote collaboration” and “System usability evaluation”.


| Works | 2D/3D | Required Equipment | Natural Handwriting Experience | Support Remote Collaboration | System Usability Evaluation |
|---|---|---|---|---|---|
| Share information by public whiteboard [54] | 2D | touch screen device | yes | yes | no |
| Electronic whiteboard designed for office work [60] | 2D | electronic whiteboard | no | yes | no |
| Thought share assistance by annotating [61] | 3D | HMD, touch screen device | no | yes | Subjective evaluations |
| 3D model collaboration in VR scenes [62] | 3D | HMD, tablet | no | yes | Feasibility evaluation |
| Dry Erase Infinite VR Whiteboard | 3D | HMD, controller | yes | no | no |
| Zoom whiteboard | 2D | mouse, screen | no | yes | no |
| VR whiteboard (Our research) | 3D | HMD, controller | yes | yes | Quantitative efficiency evaluation and user experience survey scale |

Table 2.

Feature comparison of the three experiment scenes.


| Features | (Pen) Traditional Whiteboard | (Mouse) Electronic Whiteboard | (VR) VR Whiteboard |
|---|---|---|---|
| Natural handwriting input method | yes | no | yes |
| Remote cooperative work | no | yes | yes |
| No conflict during collaboration | no | yes | yes |

Table 3.

The arrangement of the whiteboard efficiency evaluation experiment, regarding the order of the experiments for the three scenes: traditional whiteboard (pen), electronic whiteboard (mouse), and the proposed VR whiteboard.


| Group ID | 1st Scene | 2nd Scene | 3rd Scene |
|---|---|---|---|
| 01 | Pen | Mouse | VR |
| 02 | Pen | VR | Mouse |
| 03 | Mouse | Pen | VR |
| 04 | Mouse | VR | Pen |
| 05 | VR | Pen | Mouse |
| 06 | VR | Mouse | Pen |
| 07 | Pen | Mouse | VR |
| 08 | Pen | VR | Mouse |
| 09 | Mouse | Pen | VR |
| 10 | Mouse | VR | Pen |
| 11 | VR | Pen | Mouse |
| 12 | VR | Mouse | Pen |
| 13 | Pen | Mouse | VR |
| 14 | Pen | VR | Mouse |
| 15 | Mouse | Pen | VR |
| 16 | Mouse | VR | Pen |
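The order assignment in Table 3 is consistent with cycling through all six orderings of the three scenes. The sketch below reproduces the table this way; it illustrates the counterbalancing pattern and is not necessarily how the schedule was actually produced (the function name is illustrative).

```python
from itertools import permutations

SCENES = ("Pen", "Mouse", "VR")
# All 3! = 6 presentation orders, in the order itertools yields them.
ORDERS = list(permutations(SCENES))


def scene_order(group_id: int) -> tuple[str, ...]:
    """Scene order for a 1-based group ID, cycling through ORDERS."""
    return ORDERS[(group_id - 1) % len(ORDERS)]


for gid in range(1, 17):
    print(f"{gid:02d}", *scene_order(gid))
```

With 16 groups, the first four orders are used three times and the last two only twice, so the design is nearly but not perfectly balanced; a number of groups divisible by six would balance every order.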

Table 4.

Time cost results in seconds for the whiteboard efficiency evaluation experiment regarding the traditional whiteboard (pen), electronic whiteboard (mouse), and the proposed VR whiteboard.


| Group ID | Pen | Mouse | VR | Group ID | Pen | Mouse | VR |
|---|---|---|---|---|---|---|---|
| 1 | 63 | 122 | 58 | 9 | 62 | 126 | 58 |
| 1 | 70 | 122 | 52 | 10 | 69 | 105 | 74 |
| 2 | 63 | 123 | 64 | 10 | 65 | 115 | 66 |
| 2 | 65 | 130 | 65 | 11 | 70 | 125 | 51 |
| 3 | 61 | 125 | 63 | 11 | 67 | 123 | 52 |
| 3 | 61 | 122 | 66 | 12 | 64 | 119 | 48 |
| 4 | 58 | 109 | 63 | 12 | 65 | 121 | 55 |
| 4 | 52 | 108 | 55 | 13 | 58 | 111 | 60 |
| 5 | 65 | 119 | 52 | 13 | 62 | 112 | 69 |
| 5 | 64 | 121 | 55 | 14 | 45 | 114 | 55 |
| 6 | 56 | 122 | 56 | 14 | 48 | 110 | 54 |
| 6 | 62 | 124 | 53 | 15 | 56 | 124 | 54 |
| 7 | 58 | 129 | 48 | 15 | 52 | 118 | 56 |
| 7 | 61 | 123 | 55 | 16 | 62 | 117 | 49 |
| 8 | 61 | 119 | 52 | 16 | 62 | 124 | 57 |
| 8 | 58 | 122 | 55 | Mean | 60.9 | 119.4 | 57.0 |
| 9 | 63 | 118 | 53 | SD (σ) | 5.8 | 6.1 | 6.3 |

| T-test | Result | Interpretation |
|---|---|---|
| VR and Pen | 0.01 | p > 0.05: These two sets of results are not significantly different. |
| VR and Mouse | | p << 0.01: These two sets of results are extremely significantly different. |
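The Mean and SD rows of Table 4 can be recomputed from the individual task times. This sketch assumes each column holds 32 measurements (two rows per group ID, read down the left half and then the right half) and uses the sample standard deviation (n − 1 denominator), which reproduces the reported SD values.

```python
import statistics

# Task times in seconds from Table 4, in table order
# (left half: groups 1-9, then right half: groups 9-16).
PEN = [63, 70, 63, 65, 61, 61, 58, 52, 65, 64, 56, 62, 58, 61, 61, 58, 63,
       62, 69, 65, 70, 67, 64, 65, 58, 62, 45, 48, 56, 52, 62, 62]
MOUSE = [122, 122, 123, 130, 125, 122, 109, 108, 119, 121, 122, 124, 129, 123,
         119, 122, 118, 126, 105, 115, 125, 123, 119, 121, 111, 112, 114, 110,
         124, 118, 117, 124]
VR = [58, 52, 64, 65, 63, 66, 63, 55, 52, 55, 56, 53, 48, 55, 52, 55, 53,
      58, 74, 66, 51, 52, 48, 55, 60, 69, 55, 54, 54, 56, 49, 57]

for name, times in (("Pen", PEN), ("Mouse", MOUSE), ("VR", VR)):
    mean = statistics.fmean(times)
    sd = statistics.stdev(times)  # sample SD, n - 1 denominator
    print(f"{name:<5} n={len(times)} mean={mean:.1f} s SD={sd:.1f} s")
# Pen   n=32 mean=60.9 s SD=5.8 s
# Mouse n=32 mean=119.4 s SD=6.1 s
# VR    n=32 mean=57.0 s SD=6.3 s
```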

Table 5.

List of categories and specific questions of the user experience survey scale (questionnaire) regarding the traditional whiteboard (pen), electronic whiteboard (mouse), and the proposed VR whiteboard.


| Category | Question |
|---|---|
| Input/Output | Q1-1 I can write easily. |
| | Q1-2 I can erase/revise easily. |
| | Q1-3 I can see the whiteboard clearly. |
| | Q1-4 It is easy to read the text on the whiteboard. |
| Novelty | Q2-1 It is fun to use it. |
| | Q2-2 It catches my interest. |
| | Q2-3 The design of it is creative. |
| | Q2-4 It is exciting and motivating to use it. |
| Usability | Q3-1 It protects my privacy. |
| | Q3-2 It is stable and reliable. |
| | Q3-3 It is easy to learn how to use it. |
| | Q3-4 It is easy to get familiar with it. |
| | Q3-5 It is comfortable to wear/use it. |
| Efficiency | Q4-1 It reacts fast. |
| | Q4-2 It wastes my time. |
| | Q4-3 There is no conflict when the two write at the same time. |
| | Q4-4 The two can always write freely. |
| Comprehensive | Q5-1 The overall/comprehensive impression is bad. |
| | Q5-2 I would like to recommend it to my friends. |
| | Q5-3 I feel that time passed quickly during the previous experiment. |
| | Q5-4 I hope to use it in collaboration. |

Table 6.

Results of user experience survey scale (questionnaire) when using a traditional whiteboard (pen), electronic whiteboard (mouse), and the proposed VR whiteboard.


| Category | Pen (Mean) | Mouse (Mean) | VR (Mean) | Pen (σ) | Mouse (σ) | VR (σ) |
|---|---|---|---|---|---|---|
| Comprehensive | 1.1 | −0.5 | 1.7 | 0.9 | 1.3 | 1.2 |
| Novelty | 0.4 | −0.5 | 1.9 | 1.6 | 1.5 | 1.3 |
| Usability | 1.4 | 0.4 | 1.6 | 1.0 | 1.3 | 0.8 |
| Efficiency | −0.1 | 0.1 | 1.9 | 1.0 | 1.2 | 0.7 |
| Input/Output | 1.7 | 0.6 | 2.0 | 0.6 | 1.3 | 0.6 |
| Average | 0.9 | 0.0 | 1.8 | 0.7 | 1.6 | 1.4 |

| T-test | Result | Interpretation |
|---|---|---|
| VR and Pen | 0.02 | p < 0.05: These two sets of results are significantly different. |
| VR and Mouse | | p << 0.01: These two sets of results are extremely significantly different. |
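The category means in Table 6 aggregate the item responses listed in Table 5, and the Average row matches the unweighted mean of the five category means (for VR, (1.7 + 1.9 + 1.6 + 1.9 + 2.0) / 5 = 1.82). The scoring sketch below rests on two assumptions not stated in the text: responses lie on a symmetric −3 to +3 scale, and the negatively worded items Q4-2 and Q5-1 are reverse-scored by a sign flip. The helper names are hypothetical.

```python
import statistics
from typing import Dict, List, Mapping

# Item-to-category mapping as listed in Table 5.
CATEGORY_ITEMS: Dict[str, List[str]] = {
    "Input/Output": ["Q1-1", "Q1-2", "Q1-3", "Q1-4"],
    "Novelty": ["Q2-1", "Q2-2", "Q2-3", "Q2-4"],
    "Usability": ["Q3-1", "Q3-2", "Q3-3", "Q3-4", "Q3-5"],
    "Efficiency": ["Q4-1", "Q4-2", "Q4-3", "Q4-4"],
    "Comprehensive": ["Q5-1", "Q5-2", "Q5-3", "Q5-4"],
}
# Assumption: negatively worded items are reverse-scored by flipping the sign
# of each response on a symmetric -3..+3 scale.
REVERSED_ITEMS = {"Q4-2", "Q5-1"}


def category_means(answers: Mapping[str, List[float]]) -> Dict[str, float]:
    """answers maps an item ID to the list of participants' responses."""
    means = {}
    for category, items in CATEGORY_ITEMS.items():
        scores = []
        for item in items:
            sign = -1 if item in REVERSED_ITEMS else 1
            scores.extend(sign * r for r in answers[item])
        means[category] = statistics.fmean(scores)
    return means


def overall_average(means: Mapping[str, float]) -> float:
    """Unweighted mean of the category means."""
    return statistics.fmean(means.values())


# Category means for the VR whiteboard as reported in Table 6.
vr_means = {"Comprehensive": 1.7, "Novelty": 1.9, "Usability": 1.6,
            "Efficiency": 1.9, "Input/Output": 2.0}
print(round(overall_average(vr_means), 1))  # 1.8
```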

| Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).