An Accurate Urine Red Blood Cell Detection Method Based on Multi-Focus Video Fusion and Deep Learning with Application to Diabetic Nephropathy Diagnosis

Abstract

1. Introduction

2. Related Work

3. Methodology for the Proposed D-MVF

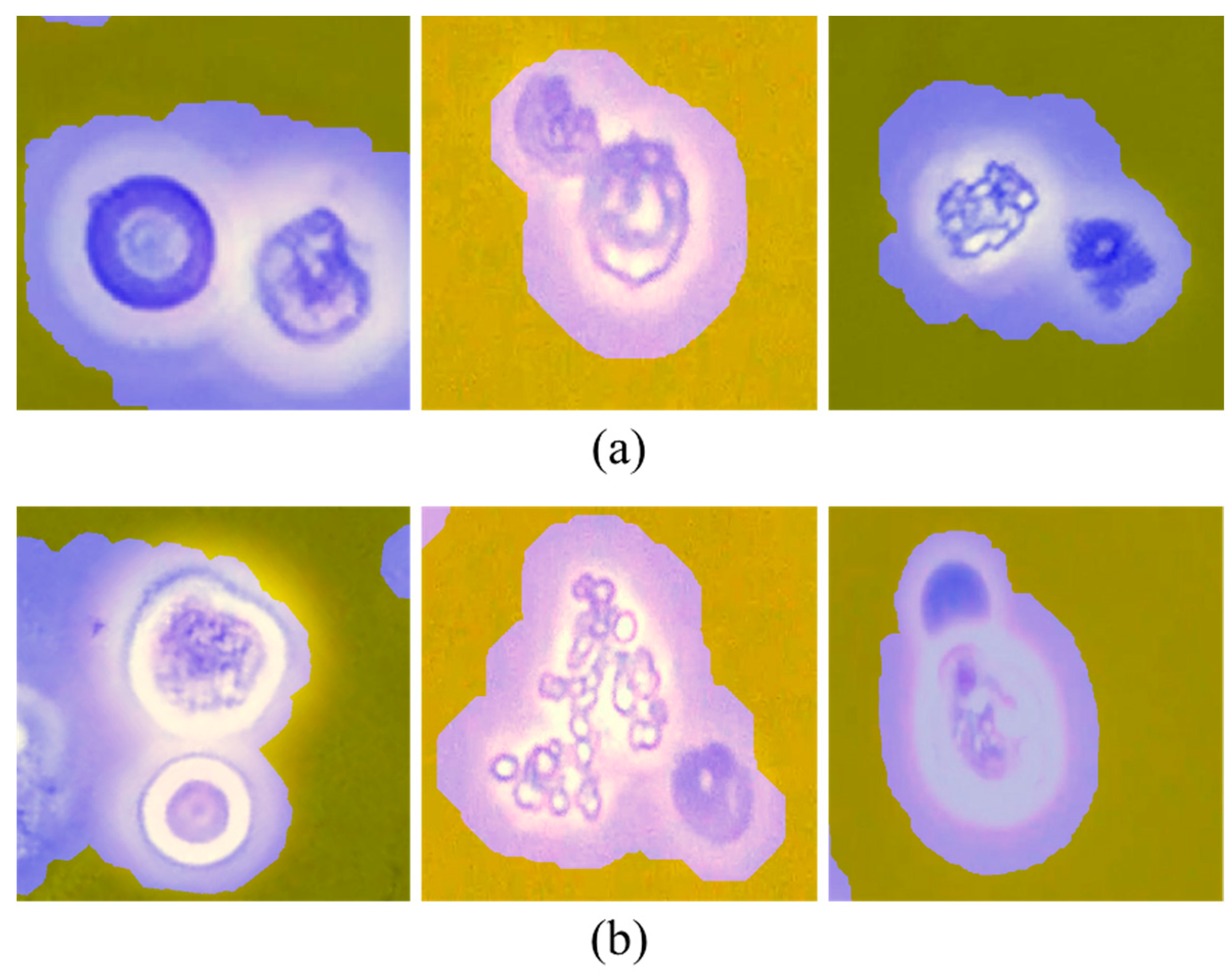

3.1. Multi-Focus Video Fusion

3.1.1. Necessity of Utilizing U-RBC Multi-Focus Videos

3.1.2. Principles of Multi-Focus Video Fusion

Algorithm 1. Procedure for multi-focus video fusion.

| Step | Operation |
|---|---|
| Input | U-RBC multi-focus video v |
| Output | Fused image f |
| 1 | Calculate the heat map H of the frame-difference data in v; |
| 2 | Obtain the mask M by binarizing H; |
| 3 | Based on M, segment the foreground objects and the background of every frame F_i of v, then splice the foreground objects and backgrounds that occupy the same positions in different F_i into a series of sub-videos s; |
| 4 | for each s do |
| 5 | for each frame S_i of s do |
| 6 | Calculate the evaluation index of typical shape, K, of S_i; |
| 7 | end for |
| 8 | Take the S_i with the largest K as the key frame K_S of s; |
| 9 | end for |
| 10 | According to the positions of the foreground objects and background marked by M, stitch the K_S of all s together to obtain the fused image f. |
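The steps of Algorithm 1 can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: frames are grayscale arrays stacked as (T, H, W); the heat map H is taken to be the normalized sum of absolute differences between consecutive frames; the binarization threshold of 0.5 is a hypothetical choice; each 4-connected foreground region of M is treated as one sub-video s, and the whole background as one more. Because the evaluation index of typical shape K is not defined in this section, the sketch substitutes a hypothetical focus score (variance of the Laplacian inside the region), which is a different measure from the paper's K.

```python
import numpy as np
from collections import deque


def frame_difference_heatmap(video: np.ndarray) -> np.ndarray:
    """Step 1: sum |F_{i+1} - F_i| over the video and scale it to [0, 1]."""
    diffs = np.abs(np.diff(video.astype(np.float64), axis=0))
    heat = diffs.sum(axis=0)
    peak = heat.max()
    return heat / peak if peak > 0 else heat


def label_regions(mask: np.ndarray) -> np.ndarray:
    """Step 3 helper: 4-connected labelling; 0 is background, 1..n are objects."""
    labels = np.zeros(mask.shape, dtype=np.int32)
    h, w = mask.shape
    current = 0
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or labels[y, x]:
                continue
            current += 1
            labels[y, x] = current
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = current
                        queue.append((ny, nx))
    return labels


def laplacian(frame: np.ndarray) -> np.ndarray:
    """Discrete 4-neighbour Laplacian with edge padding (same shape as frame)."""
    p = np.pad(frame.astype(np.float64), 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4 * p[1:-1, 1:-1]


def fuse_multifocus_video(video: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Sketch of Algorithm 1 for a (T, H, W) grayscale video; returns an (H, W) image."""
    heat = frame_difference_heatmap(video)            # step 1: heat map H
    mask = heat > threshold                           # step 2: binary mask M
    labels = label_regions(mask)                      # step 3: one label per sub-video s
    detail = np.stack([laplacian(f) for f in video])  # per-frame detail maps
    fused = np.zeros(video.shape[1:], dtype=video.dtype)
    for region_id in range(int(labels.max()) + 1):    # steps 4-9; label 0 = background
        region = labels == region_id
        if not region.any():
            continue
        # HYPOTHETICAL stand-in for K: variance of the Laplacian inside the region.
        scores = [float(d[region].var()) for d in detail]
        key = int(np.argmax(scores))                  # key frame K_S of this sub-video
        fused[region] = video[key][region]            # step 10: stitch by position
    return fused
```

Swapping in the paper's own definition of K would only require replacing the `scores` line; the mask, region labelling and stitching steps do not depend on how the key frame is scored.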

3.2. Architecture of the U-RBC Detection

3.3. Diabetic Nephropathy Diagnosis Based on the U-RBC Proportion

4. Experimental Design and Result

4.1. U-RBC Multi-Focus Video Dataset

4.2. Performance Metrics

4.3. Comparison between Different Key Frame Extraction Methods

4.4. Comparison between Different U-RBC Detection Methods

4.5. Comparison between KNN and Artificial Threshold in Diagnosing DN

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Fairley, K.F.; Birch, D.F. Hematuria: A simple method for identifying glomerular bleeding. Kidney Int. 1982, 21, 105–108.

2. Rizzoni, G.; Braggion, F.; Zacchello, G. Evaluation of glomerular and non-glomerular hematuria by phase-contrast microscopy. J. Pediatr. 1983, 103, 370–374.

3. Rath, B.; Turner, C.; Hartley, B.; Chantler, C. What makes red cells dysmorphic in glomerular haematuria? Pediatr. Nephrol. 1992, 6, 424–427.

4. Lettgen, B.; Wohlmuth, A. Validity of G1-cells in the differentiation between glomerular and non-glomerular haematuria in children. Pediatr. Nephrol. 1995, 9, 435–437.

5. Ozkan, I.A.; Koklu, M.; Sert, I.U. Diagnosis of urinary tract infection based on artificial intelligence methods. Comput. Methods Programs Biomed. 2018, 166, 51–59.

6. Fernandez, E.; Barlis, J.; Dematera, K.A.; LLas, G.; Paeste, R.M.; Taveso, D.; Velasco, J.S. Four class urine microscopic recognition system through image processing using artificial neural network. J. Telecommun. Electron. Comput. Eng. (JTEC) 2018, 214–218.

7. Pan, J.; Jiang, C.; Zhu, T. Classification of urine sediment based on convolution neural network. AIP Conf. Proc. 2018, 1955, 040176.

8. Ji, Q.; Li, X.; Qu, Z.; Dai, C. Research on urine sediment images recognition based on deep learning. IEEE Access 2019, 7, 166711–166720.

9. Li, T.; Jin, D.; Du, C.; Cao, X.; Chen, H.; Yan, J.; Chen, N.; Chen, Z.; Feng, Z.; Liu, S. The image-based analysis and classification of urine sediments using a LeNet-5 neural network. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2020, 8, 109–114.

10. Suhail, K.; Brindha, D. A review on various methods for recognition of urine particles using digital microscopic images of urine sediments. Biomed. Signal Process. Control. 2021, 68, 102806.

11. Zhao, X.; Xiang, J.; Ji, Q. Urine red blood cell classification based on Siamese Network. In Proceedings of the 2nd International Workshop on Electronic Communication and Artificial Intelligence (IWECAI), Nanjing, China, 12–14 March 2021; Volume 1873, p. 012089.

12. Li, K.; Li, M.; Wu, Y.; Li, X.; Zhou, X. An accurate urine erythrocytes detection model coupled Faster RCNN with VGGNet. In Proceedings of the 2020 Conference on Artificial Intelligence and Healthcare (CAIH2020), Taiyuan, China, 23–25 October 2020; pp. 224–228.

13. Li, X.; Li, M.; Wu, Y.; Zhou, X.; Hao, F.; Liu, X. An accurate classification method based on multi-focus videos and deep learning for urinary red blood cell. In Proceedings of the 2020 Conference on Artificial Intelligence and Healthcare (CAIH2020), Taiyuan, China, 23–25 October 2020.

14. Nian, Z.; Jung, C. CNN-based multi-focus image fusion with light field data. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1044–1048.

15. Kou, L.; Zhang, L.; Zhang, K.; Sun, J.; Han, Q.; Jin, Z. A multi-focus image fusion method via region mosaicking on Laplacian pyramids. PLoS ONE 2018, 13, e0191085.

16. Chen, Y.; Deng, N.; Xin, B.-J.; Xing, W.-Y.; Zhang, Z.-Y. Nonwovens structure measurement based on NSST multi-focus image fusion. Micron 2019, 123, 102684.

17. Sun, J.; Han, Q.; Kou, L.; Zhang, L.; Zhang, K.; Jin, Z. Multi-focus image fusion algorithm based on Laplacian pyramids. J. Opt. Soc. Am. A-Opt. Image Sci. Vis. 2018, 35, 480–490.

18. Albahli, S.; Nida, N.; Irtaza, A.; Yousaf, M.H.; Mahmood, M.T. Melanoma lesion detection and segmentation using YOLOv4-DarkNet and active contour. IEEE Access 2020, 8, 198403–198414.

19. Montalbo, F. A computer-aided diagnosis of brain tumors using a finetuned YOLO-based model with transfer learning. KSII Trans. Internet Inf. Syst. 2021, 14, 4816–4834.

20. Lyra, S.; Mayer, L.; Ou, L.; Chen, D.; Timms, P.; Tay, A.; Chan, P.; Ganse, B.; Leonhardt, S.; Antink, C.H. A deep learning based camera approach for vital sign monitoring using thermography images for ICU patients. Sensors 2021, 21, 1495.

21. Feichtenhofer, C.; Pinz, A.; Zisserman, A. Detect to Track and Track to Detect. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

22. Han, M.; Wang, Y.; Chang, X.; Qiao, Y. Mining Inter-Video Proposal Relations for Video Object Detection; Springer: Cham, Switzerland, 2020.

23. Lee, E.Y.; Chung, H.C.; Choi, S.O. Non-diabetic renal disease in patients with non-insulin dependent diabetes mellitus. Yonsei Med. J. 1999, 40, 321–326.

24. Dong, Z.; Wang, Y.; Qiu, Q.; Hou, K.; Zhang, L.; Wu, J.; Zhu, H.; Cai, G.; Sun, X.; Zhang, X.; et al. Dysmorphic erythrocytes are superior to hematuria for indicating non-diabetic renal disease in type 2 diabetics. J. Diabetes Investig. 2016, 7, 115–120.

25. Liu, H.; Pan, L.; Meng, W. Key frame extraction from online video based on improved frame difference optimization. In Proceedings of the IEEE 14th International Conference on Communication Technology, Chengdu, China, 9–11 November 2012; pp. 940–944.

26. Chen, Y.; Hu, W.; Zeng, X.; Li, W. Indexing and matching of video shots based on motion and color analysis. In Proceedings of the 9th International Conference on Control, Automation, Robotics and Vision, Singapore, 5–8 December 2006.

27. Zhu, W.; Hu, J.; Sun, G.; Cao, X.; Qiao, Y. A key volume mining deep framework for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1991–1999.

28. Lin, B.-S.; Chen, J.-L.; Tu, Y.-H.; Shih, Y.-X.; Lin, Y.-C.; Chi, W.-L.; Wu, Y.-C. Using deep learning in ultrasound imaging of bicipital peritendinous effusion to grade inflammation severity. IEEE J. Biomed. Health Inform. 2020, 24, 1037–1045.

29. Sun, C.-Y.; Hong, X.-J.; Shi, S.; Shen, Z.-Y.; Zhang, H.-D.; Zhou, L.-X. Cascade Faster R-CNN detection for vulnerable plaques in OCT images. IEEE Access 2021, 9, 24697–24704.

30. Du, X.; Wang, X.; Ni, G.; Zhang, J.; Hao, R.; Zhao, J.; Wang, X.; Liu, J.; Liu, L. SDOF-Net: Super depth of field network for cell detection in leucorrhea micrograph. IEEE J. Biomed. Health Inform. 2021, 26, 1229–1238.

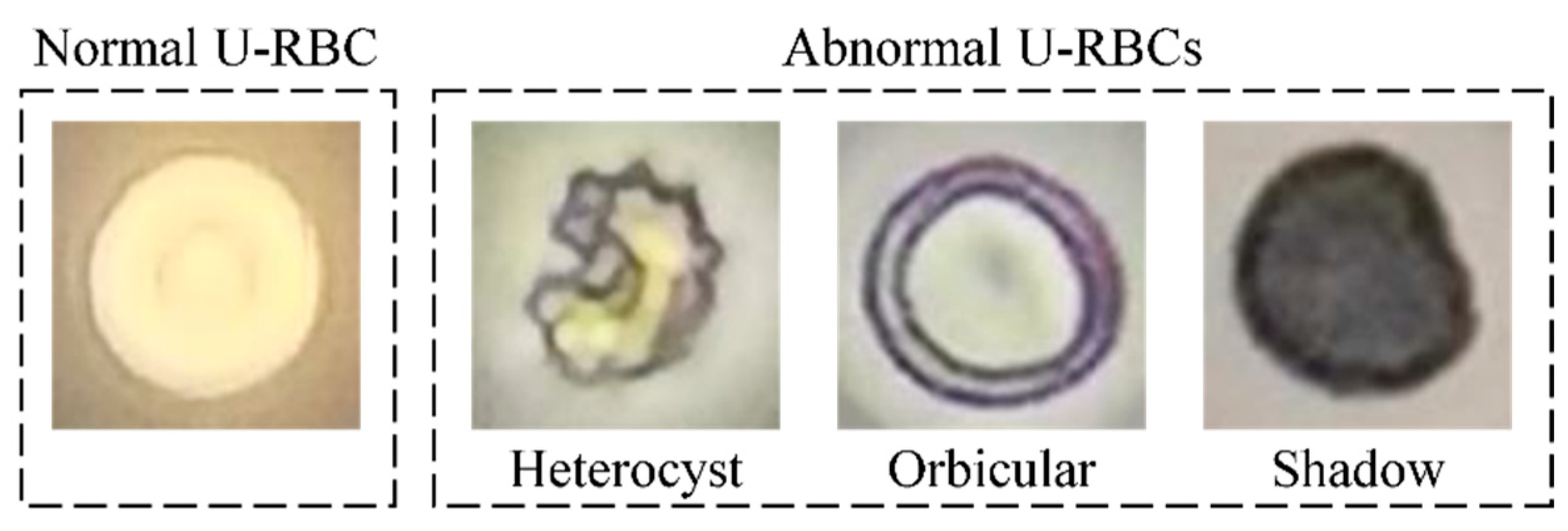

| Method | Index | Normal | Heterocyst | Orbicular | Shadow | Mean |
|---|---|---|---|---|---|---|
| K-FD [25] | PSNR | 36.011 | 31.486 | 31.170 | 35.993 | 33.665 |
| | SSIM | 0.957 | 0.937 | 0.910 | 0.966 | 0.950 |
| | Time | 8.662 | 6.800 | 6.275 | 5.254 | 6.748 |
| K-OF [26] | PSNR | 36.211 | 31.892 | 31.464 | 37.498 | 34.266 |
| | SSIM | 0.972 | 0.940 | 0.923 | 0.970 | 0.951 |
| | Time | 9.835 | 7.289 | 7.118 | 6.035 | 7.569 |
| MIL [27] | PSNR | 36.754 | 32.106 | 32.327 | 36.172 | 34.340 |
| | SSIM | 0.974 | 0.938 | 0.929 | 0.966 | 0.952 |
| | Time | 10.732 | 7.680 | 7.591 | 7.142 | 8.286 |
| EI-TC | PSNR | 36.405 | 33.074 | 33.705 | 37.810 | 35.249 |
| | SSIM | 0.972 | 0.938 | 0.939 | 0.970 | 0.955 |
| | Time | 0.605 | 0.521 | 0.562 | 0.463 | 0.538 |
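The key-frame comparison above reports PSNR and SSIM for each U-RBC class. As a hedged sketch, the standard PSNR definition for 8-bit images (peak value 255) is shown below; which reference image each extracted key frame is compared against is not stated in this excerpt, so the `reference` argument is an assumption. The Mean column appears to be the arithmetic mean over the four classes, which the illustrative helper `class_mean` (a hypothetical name) reproduces for the EI-TC PSNR row.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images have no noise
    return float(10.0 * np.log10(max_value ** 2 / mse))


def class_mean(values) -> float:
    """Arithmetic mean over the four U-RBC classes (assumed meaning of 'Mean')."""
    return float(np.mean(values))


# EI-TC PSNR per class (Normal, Heterocyst, Orbicular, Shadow) from the table above.
ei_tc_psnr = [36.405, 33.074, 33.705, 37.810]
print(f"EI-TC mean PSNR: {class_mean(ei_tc_psnr):.3f} dB")
```

Higher PSNR means the two images differ less; every pixel being off by one grey level out of 255 already caps PSNR at roughly 48 dB, so the 31 to 38 dB range in the table corresponds to visibly larger differences.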

| Data Type | Model | Normal | Heterocyst | Orbicular | Shadow |
|---|---|---|---|---|---|
| Single focus images | Faster R-CNN | 0.784 | 0.443 | 0.871 | 0.680 |
| | Cascade R-CNN | 0.801 | 0.396 | 0.829 | 0.682 |
| | RetinaNet | 0.814 | 0.336 | 0.863 | 0.632 |
| | YOLOv4 | 0.764 | 0.525 | 0.831 | 0.674 |
| Multi-focus videos | D-MVF w Faster R-CNN | 0.943 | 0.671 | 0.894 | 0.909 |
| | D-MVF w Cascade R-CNN | 0.947 | 0.686 | 0.919 | 0.918 |
| | D-MVF w RetinaNet | 0.897 | 0.604 | 0.917 | 0.891 |
| | D-MVF w YOLOv4 | 0.974 | 0.760 | 0.961 | 0.963 |
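The metric in the per-class table above is not labelled in this excerpt; because each row's mean closely matches the corresponding mAP in the summary table, the values appear to be per-class average precision (AP). The sketch below computes AP for one class with PASCAL VOC-style all-point interpolation. The rule that decides `is_true_positive` (for example, matching a detection to an unmatched ground-truth box at IoU of at least 0.5) and the interpolation scheme are assumptions, since the paper's exact evaluation protocol is not given here.

```python
import numpy as np


def average_precision(scores, is_true_positive, num_ground_truth: int) -> float:
    """All-point interpolated AP for one class (PASCAL VOC 2010+ style, assumed).

    scores: confidence of each detection.
    is_true_positive: whether each detection was matched to a ground-truth box.
    num_ground_truth: number of ground-truth objects of this class.
    """
    order = np.argsort(-np.asarray(scores, dtype=np.float64), kind="stable")
    tp = np.asarray(is_true_positive, dtype=np.float64)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / num_ground_truth
    precision = cum_tp / (cum_tp + cum_fp)
    # Add sentinels at recall 0 and 1, then make precision non-increasing.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area under the step curve: sum over points where recall increases.
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))


# Three detections of one class, two ground-truth cells; the second is a false positive.
print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_ground_truth=2))
```

Taking the maximum precision to the right of each recall level is what makes this the interpolated variant; COCO-style evaluation instead samples 101 recall points and averages over several IoU thresholds, which would give different numbers.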

| Data Type | Model | mAP | mAR | HM |
|---|---|---|---|---|
| Single focus images | Faster R-CNN | 0.694 | 0.873 | 0.733 |
| | Cascade R-CNN | 0.677 | 0.840 | 0.750 |
| | RetinaNet | 0.661 | 0.929 | 0.722 |
| | YOLOv4 | 0.700 | 0.760 | 0.729 |
| Multi-focus videos | D-MVF w Faster R-CNN | 0.854 | 0.966 | 0.907 |
| | D-MVF w Cascade R-CNN | 0.867 | 0.963 | 0.912 |
| | D-MVF w RetinaNet | 0.827 | 0.978 | 0.896 |
| | D-MVF w YOLOv4 | 0.915 | 0.930 | 0.922 |
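The summary table can be cross-checked against the per-class table: the mAP column matches the mean of the four per-class values, and the HM column appears to be the harmonic mean of mAP and mAR, which reproduces the D-MVF rows. The sketch below recomputes the D-MVF w YOLOv4 row under that assumption; `mean_average_precision` and `harmonic_mean` are illustrative helpers, not functions from the paper.

```python
from statistics import fmean


def mean_average_precision(per_class_ap) -> float:
    """mAP as the arithmetic mean of per-class AP values."""
    return fmean(per_class_ap)


def harmonic_mean(a: float, b: float) -> float:
    """Harmonic mean of two scores (an F1-style combination of mAP and mAR)."""
    if a + b == 0:
        return 0.0
    return 2 * a * b / (a + b)


# D-MVF w YOLOv4: per-class values (Normal, Heterocyst, Orbicular, Shadow) and mAR.
per_class = [0.974, 0.760, 0.961, 0.963]
m_ap = mean_average_precision(per_class)
m_ar = 0.930
hm = harmonic_mean(m_ap, m_ar)
print(f"mAP = {m_ap:.4f}, HM = {hm:.3f}")
```

With these inputs the computed mAP is about 0.9145 (reported as 0.915) and HM about 0.922. A harmonic mean is pulled toward the smaller of its two inputs, so a detector cannot reach a high HM by trading precision for recall, which is why D-MVF w RetinaNet, despite the highest mAR, ranks below D-MVF w YOLOv4.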

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hao, F.; Li, X.; Li, M.; Wu, Y.; Zheng, W. An Accurate Urine Red Blood Cell Detection Method Based on Multi-Focus Video Fusion and Deep Learning with Application to Diabetic Nephropathy Diagnosis. Electronics 2022, 11, 4176. https://doi.org/10.3390/electronics11244176
