Learnable Wavelet Scattering Networks: Applications to Fault Diagnosis of Analog Circuits and Rotating Machinery

Abstract

1. Introduction

2. Theoretical Background

2.1. Wavelet Transform

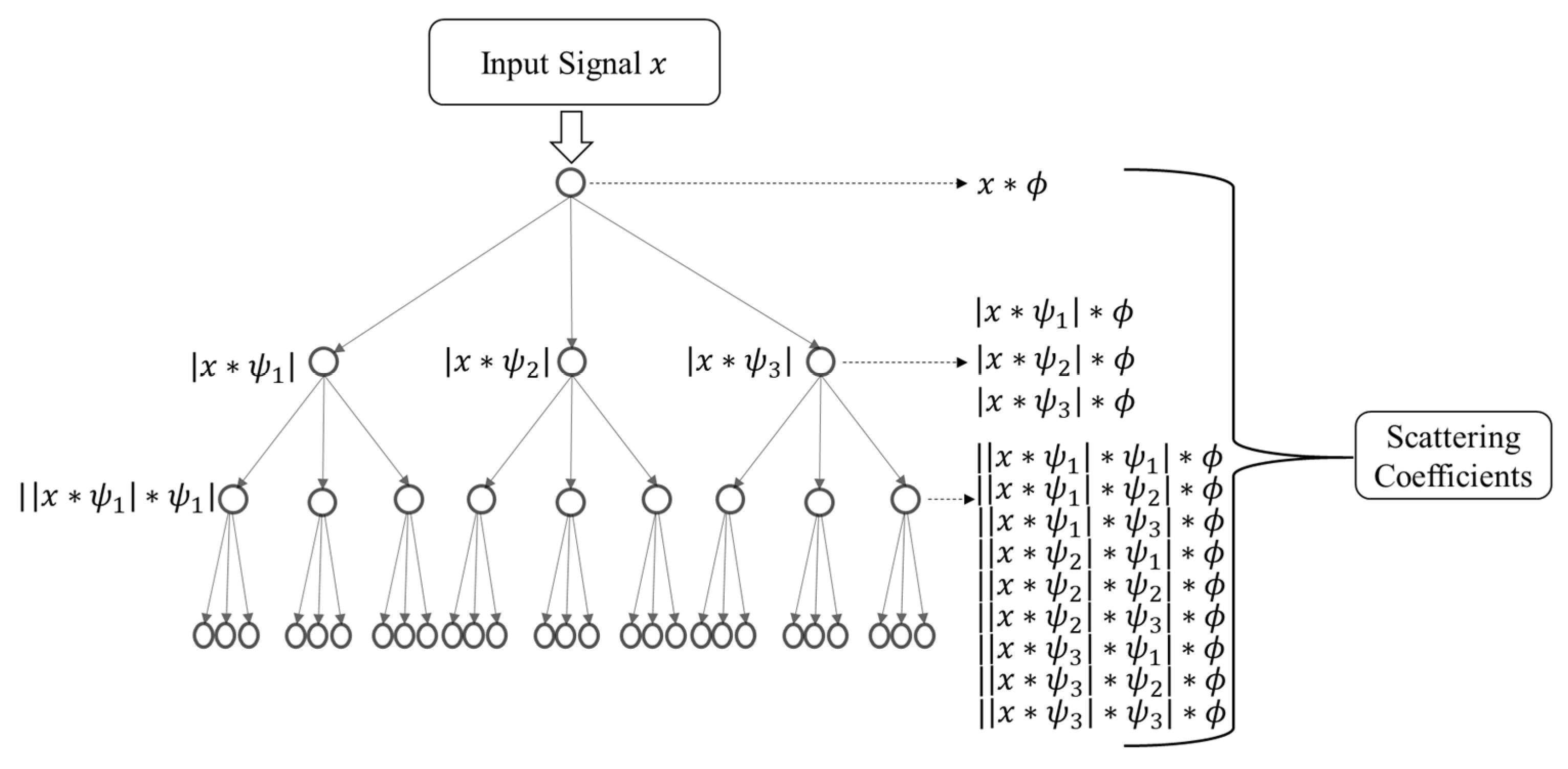

2.2. Wavelet Scattering Networks (WSNs)

2.3. Learnable Wavelet Scattering Networks (LWSNs)

- Split: Let $x[n]$ be an original signal. In this step, $x[n]$ is divided into two subsets: the even subset $x_e[n] = x[2n]$ and the odd subset $x_o[n] = x[2n+1]$. The subsets are correlated according to the correlation structure of the original signal.

- Predict: The odd coefficients $x_o[n]$ are predicted from the neighboring even coefficients $x_e[n]$, and the prediction differences are defined as the detail signal, $d[n] = x_o[n] - P(x_e[n])$, where $P$ is the prediction operator.

- Update: A coarse approximation to the original signal is created by combining the even coefficients and a linear combination of the prediction differences, $c[n] = x_e[n] + U(d[n])$, where $U$ is the update operator.

By iterating the three steps on the approximation signal, the approximation and detail signals are obtained at different levels. In the LWSN, the Update ($U$) and Predict ($P$) operators of the lifting scheme are optimized using the genetic algorithm (GA). The optimized operators are then converted to the wavelet ($\psi$) and averaging ($\phi$) operators using Claypoole’s algorithm [35], such that the structure in Figure 1 can be used to learn time–frequency representations from the data.
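The split, predict, and update steps can be illustrated with a minimal sketch. The function names and the Haar-like predict and update operators below are placeholders chosen for illustration only; they are not the GA-optimized operators of the LWSN, and the sketch assumes an even-length signal at every level.

```python
import numpy as np

def lifting_forward(x, predict, update):
    """One lifting level: split, predict, update. Assumes an even-length signal."""
    x = np.asarray(x, dtype=float)
    even, odd = x[0::2], x[1::2]        # Split into even and odd samples
    detail = odd - predict(even)        # Predict: detail = odd - P(even)
    approx = even + update(detail)      # Update: approx = even + U(detail)
    return approx, detail

def lifting_inverse(approx, detail, predict, update):
    """Undo one lifting level by reversing the update and predict steps."""
    even = approx - update(detail)
    odd = detail + predict(even)
    x = np.empty(even.size + odd.size)
    x[0::2], x[1::2] = even, odd        # Merge (inverse of split)
    return x

def lifting_decompose(x, levels, predict, update):
    """Iterate the three steps on the approximation signal."""
    details, approx = [], np.asarray(x, dtype=float)
    for _ in range(levels):
        approx, detail = lifting_forward(approx, predict, update)
        details.append(detail)
    return approx, details

# Placeholder Haar-like operators (hypothetical; not the GA-optimized ones)
def haar_predict(even):
    return even

def haar_update(detail):
    return 0.5 * detail

x = np.array([2.0, 4.0, 6.0, 8.0, 5.0, 3.0, 1.0, 1.0])
approx, detail = lifting_forward(x, haar_predict, haar_update)
x_rec = lifting_inverse(approx, detail, haar_predict, haar_update)
coarse, details = lifting_decompose(x, 3, haar_predict, haar_update)
```

With these placeholder operators the approximation is the pairwise mean and the detail is the pairwise difference. Because each step is undone by a subtraction or addition of the same quantity, the inverse recovers the signal exactly for any choice of predict and update, which is what leaves an optimizer free to search over them.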

2.4. Genetic Algorithm (GA)

2.5. Support Vector Machine (SVM)

3. Fault Diagnosis Methodology

4. Experiments and Results

4.1. Sallen–Key Bandpass Filter

4.2. Two-Switch Forward Converter

4.3. Bearing Fault Diagnosis

4.4. Gear Fault Diagnosis

4.5. Transfer Learning

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Pecht, M.; Jaai, R. A prognostics and health management roadmap for information and electronics-rich systems. Microelectron. Reliab. 2010, 50, 317–323.

2. Binu, D.; Kariyappa, B.S. A survey on fault diagnosis of analog circuits: Taxonomy and state of the art. AEU-Int. J. Electron. Commun. 2017, 73, 68–83.

3. Vasan, A.S.S.; Long, B.; Pecht, M. Diagnostics and prognostics method for analog electronic circuits. IEEE Trans. Ind. Electron. 2013, 60, 5277–5291.

4. Yang, H.; Meng, C.; Wang, C. Data-driven feature extraction for analog circuit fault diagnosis using 1-D convolutional neural network. IEEE Access 2020, 8, 18305–18315.

5. Li, F.; Woo, P.Y. Fault detection for linear analog IC—The method of short-circuit admittance parameters. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 2002, 49, 105–108.

6. Tadeusiewicz, M.; Halgas, S.; Korzybski, M. An algorithm for soft-fault diagnosis of linear and nonlinear circuits. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 2002, 49, 1648–1653.

7. Luo, H.; Wang, Y.; Lin, H.; Jiang, Y. Module level fault diagnosis for analog circuits based on system identification and genetic algorithm. Meas. J. Int. Meas. Confed. 2012, 45, 769–777.

8. Cannas, B.; Fanni, A.; Montisci, A. Algebraic approach to ambiguity-group determination in nonlinear analog circuits. IEEE Trans. Circuits Syst. I Regul. Pap. 2010, 57, 438–447.

9. Dai, X.; Gao, Z. From model, signal to knowledge: A data-driven perspective of fault detection and diagnosis. IEEE Trans. Ind. Inform. 2013, 9, 2226–2238.

10. Bandyopadhyay, I.; Purkait, P.; Koley, C. Performance of a classifier based on time-domain features for incipient fault detection in inverter drives. IEEE Trans. Ind. Inform. 2019, 15, 3–14.

11. Queiroz, L.P.; Rodrigues, F.C.M.; Gomes, J.P.P.; Brito, F.T.; Chaves, I.C.; Paula, M.R.P.; Salvador, M.R.; Machado, J.C. A fault detection method for hard disk drives based on mixture of gaussians and nonparametric statistics. IEEE Trans. Ind. Inform. 2017, 13, 542–550.

12. Nasser, A.R.; Azar, A.T.; Humaidi, A.J.; Al-Mhdawi, A.K.; Ibraheem, I.K. Intelligent fault detection and identification approach for analog electronic circuits based on fuzzy logic classifier. Electronics 2021, 10, 2888.

13. Shi, J.; Deng, Y.; Wang, Z. Analog circuit fault diagnosis based on density peaks clustering and dynamic weight probabilistic neural network. Neurocomputing 2020, 407, 354–365.

14. Aizenberg, I.; Belardi, R.; Bindi, M.; Grasso, F.; Manetti, S.; Luchetta, A.; Piccirilli, M.C. A neural network classifier with multi-valued neurons for analog circuit fault diagnosis. Electronics 2021, 10, 349.

15. Yuan, L.; He, Y.; Huang, J.; Sun, Y. A new neural-network-based fault diagnosis approach for analog circuits by using kurtosis and entropy as a preprocessor. IEEE Trans. Instrum. Meas. 2010, 59, 586–595.

16. Xiao, Y.; He, Y. A novel approach for analog fault diagnosis based on neural networks and improved kernel PCA. Neurocomputing 2011, 74, 1102–1115.

17. Xiao, Y.; Feng, L. A novel linear ridgelet network approach for analog fault diagnosis using wavelet-based fractal analysis and kernel PCA as preprocessors. Meas. J. Int. Meas. Confed. 2012, 45, 297–310.

18. Zhang, A.; Chen, C.; Jiang, B. Analog circuit fault diagnosis based UCISVM. Neurocomputing 2016, 173, 1752–1760.

19. Song, P.; He, Y.; Cui, W. Statistical property feature extraction based on FRFT for fault diagnosis of analog circuits. Analog Integr. Circuits Signal Process. 2016, 87, 427–436.

20. He, W.; He, Y.; Li, B.; Zhang, C. Analog circuit fault diagnosis via joint cross-wavelet singular entropy and parametric t-SNE. Entropy 2018, 20, 604.

21. Cui, J.; Wang, Y. A novel approach of analog circuit fault diagnosis using support vector machines classifier. Meas. J. Int. Meas. Confed. 2011, 44, 281–289.

22. Liu, Z.; Jia, Z.; Vong, C.M.; Bu, S.; Han, J.; Tang, X. Capturing high-discriminative fault features for electronics-rich analog system via deep learning. IEEE Trans. Ind. Inform. 2017, 13, 1213–1226.

23. Zhao, G.; Liu, X.; Zhang, B.; Liu, Y.; Niu, G.; Hu, C. A novel approach for analog circuit fault diagnosis based on Deep Belief Network. Meas. J. Int. Meas. Confed. 2018, 121, 170–178.

24. Chen, P.; Yuan, L.; He, Y.; Luo, S. An improved SVM classifier based on double chains quantum genetic algorithm and its application in analogue circuit diagnosis. Neurocomputing 2016, 211, 202–211.

25. Wenxin, Y. Analog circuit fault diagnosis via FOA-LSSVM. Telkomnika 2020, 18, 251.

26. Liang, H.; Zhu, Y.; Zhang, D.; Chang, L.; Lu, Y.; Zhao, X.; Guo, Y. Analog circuit fault diagnosis based on support vector machine classifier and fuzzy feature selection. Electronics 2021, 10, 1496.

27. Gao, T.Y.; Yang, J.L.; Jiang, S.D.; Yang, C. A novel fault diagnostic method for analog circuits using frequency response features. Rev. Sci. Instrum. 2019, 90, 104708.

28. He, W.; He, Y.; Li, B.; Zhang, C. A naive-Bayes-based fault diagnosis approach for analog circuit by using image-oriented feature extraction and selection technique. IEEE Access 2020, 8, 5065–5079.

29. He, W.; He, Y.; Luo, Q.; Zhang, C. Fault diagnosis for analog circuits utilizing time-frequency features and improved VVRKFA. Meas. Sci. Technol. 2018, 29, 045004.

30. Ji, L.; Fu, C.; Sun, W. Soft fault diagnosis of analog circuits based on a ResNet with circuit spectrum map. IEEE Trans. Circuits Syst. I Regul. Pap. 2021, 68, 2841–2849.

31. Khemani, V.; Azarian, M.H.; Pecht, M.G. Electronic circuit diagnosis with no data. In Proceedings of the 2019 IEEE International Conference on Prognostics and Health Management (ICPHM), San Francisco, CA, USA, 17–20 June 2019; pp. 1–7.

32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

33. Elsken, T.; Metzen, J.H.; Hutter, F. Simple and efficient architecture search for convolutional neural networks. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018—Workshop Track Proceedings, Vancouver, BC, USA, 30 April–3 May 2018.

34. Bruna, J.; Mallat, S. Invariant scattering convolution networks. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1872–1886.

35. Sweldens, W. The lifting scheme: A construction of second generation wavelets. SIAM J. Math. Anal. 1998, 29, 511–546.

36. Wiatowski, T.; Tschannen, M.; Stanic, A.; Grohs, P.; Bolcskei, H. Discrete deep feature extraction: A theory and new architectures. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Volume 5, pp. 3168–3183.

37. Andén, J.; Lostanlen, V.; Mallat, S. Joint time-frequency scattering. IEEE Trans. Signal Process. 2019, 67, 3704–3718.

38. LeCun, Y.; Cortes, C.; Burges, C. The MNIST Database of Handwritten Digits. Courant Inst. Math. Sci. 1998. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 25 January 2022).

39. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

40. Garofolo, J.S.; Lamel, L.F.; Fisher, W.M.; Fiscus, J.G.; Pallett, D.S.; Dahlgren, N.L.; Zue, V. TIMIT Acoustic-Phonetic Continuous Speech Corpus; Linguistic Data Consortium: Philadelphia, PA, USA, 1993.

41. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012.

42. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

43. Holland, J.H. Genetic Algorithms. Sci. Am. 1992, 267, 66–73.

44. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

45. Davies, D.L.; Bouldin, D.W. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 1979, PAMI-1, 224–227.

46. Mao, W.; Wang, L.; Feng, N. A new fault diagnosis method of bearings based on structural feature selection. Electronics 2019, 8, 1406.

47. Bearing Data Center, Case School of Engineering, Case Western Reserve University. Available online: https://engineering.case.edu/bearingdatacenter (accessed on 25 January 2022).

48. Zhao, Z.; Li, T.; Wu, J.; Sun, C.; Wang, S.; Yan, R.; Chen, X. Deep learning algorithms for rotating machinery intelligent diagnosis: An open source benchmark study. ISA Trans. 2020, 107, 224–255.

49. Gear Fault Data. Available online: https://figshare.com/articles/dataset/Gear_Fault_Data/6127874/1 (accessed on 25 January 2022).

50. Qiao, Z.; Elhattab, A.; Shu, X.; He, C. A second-order stochastic resonance method enhanced by fractional-order derivative for mechanical fault detection. Nonlinear Dyn. 2021, 106, 707–723.

51. Udmale, S.S.; Singh, S.K.; Singh, R.; Sangaiah, A.K. Multi-fault bearing classification using sensors and ConvNet-based transfer learning approach. IEEE Sens. J. 2020, 20, 1433–1444.

| | Deep Learning Networks | Wavelet Scattering Networks | Learnable Wavelet Scattering Networks |
|---|---|---|---|
| Features | Learnt from data | Fixed wavelet type and coefficients (not learnt from data) | Wavelet type and coefficients learnt from data |
| Features Output at | Last layer | Every layer | Every layer |
| Number of Layers | Variable number of hidden (convolutional) layers | Two layers (typically) of fixed wavelets | Two layers of learned wavelets |
| Nonlinearity | Modulus/Rectified Linear Unit/Hyperbolic Tangent, etc. | Modulus | Modulus |
| Pooling | Max/Averaging, etc. | Averaging | Averaging |
| Learning Algorithm | Gradient Descent and Backpropagation | NA | Lifting method and genetic algorithm |
| Classifier | SoftMax | Any (e.g., SVM) | Any (e.g., SVM) |
| Architecture | Various architectures, e.g., ResNet [32], AlexNet [41], Recurrent Neural Network [42], etc. | See Figure 1 | See Figure 1 |
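The scattering columns of the comparison (two wavelet layers, modulus nonlinearity, averaging pooling, features output at every layer) can be sketched roughly as follows. This is a simplified illustration, not the architecture of Figure 1: the function name and the toy Haar-like filters are assumptions, every second-layer path is kept, and no subsampling is applied. In an LWSN the filters would instead be derived from the optimized lifting operators.

```python
import numpy as np

def scattering_features(x, wavelets, averaging):
    """Two-layer scattering sketch: filter, modulus, average; keep every layer's output."""
    x = np.asarray(x, dtype=float)

    def smooth(u):
        # Averaging (pooling) step applied to each layer's output
        return np.convolve(u, averaging, mode="same")

    features = [smooth(x)]                                    # layer 0: averaged input
    for psi1 in wavelets:
        u1 = np.abs(np.convolve(x, psi1, mode="same"))        # layer 1: wavelet + modulus
        features.append(smooth(u1))
        for psi2 in wavelets:
            u2 = np.abs(np.convolve(u1, psi2, mode="same"))   # layer 2: wavelet + modulus
            features.append(smooth(u2))
    return np.stack(features)

# Toy filters (hypothetical placeholders, not learned from data)
wavelets = [np.array([1.0, -1.0]) / 2, np.array([1.0, 1.0, -1.0, -1.0]) / 4]
averaging = np.ones(8) / 8

x = np.sin(2 * np.pi * np.arange(64) / 16)
feats = scattering_features(x, wavelets, averaging)
```

With two wavelets, the sketch returns 1 + 2 + 4 = 7 feature rows, one per path through the network. The stacked rows are what a downstream classifier such as an SVM would consume.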

| Fault Class | Fault Code | Nominal Value | Faulty Range |
|---|---|---|---|
| Healthy | F0 | NA | NA |
| | F1 | 1 kΩ | [0.25 kΩ, 0.9 kΩ] and [1.1 kΩ, 1.75 kΩ] |
| | F2 | 1 kΩ | [0.25 kΩ, 0.9 kΩ] and [1.1 kΩ, 1.75 kΩ] |
| | F3 | 2 kΩ | [0.5 kΩ, 1.8 kΩ] and [2.2 kΩ, 3.5 kΩ] |
| | F4 | 2 kΩ | [0.5 kΩ, 1.8 kΩ] and [2.2 kΩ, 3.5 kΩ] |
| | F5 | 2 kΩ | [0.5 kΩ, 1.8 kΩ] and [2.2 kΩ, 3.5 kΩ] |
| | F6 | 5 nF | [1.25 nF, 4.50 nF] and [5.50 nF, 8.75 nF] |
| | F7 | 5 nF | [1.25 nF, 4.50 nF] and [5.50 nF, 8.75 nF] |
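The faulty ranges in the table follow a simple pattern: each lower interval spans 25% to 90% of the nominal value and each upper interval spans 110% to 175%. The short sketch below reproduces that arithmetic. The function name and the default fractions are inferred from the tabulated values rather than stated in the text, and reading the excluded gap between 90% and 110% as a component tolerance band is an assumption.

```python
def faulty_ranges(nominal, low=(0.25, 0.90), high=(1.10, 1.75)):
    """Return the lower and upper faulty intervals as multiples of the nominal value.

    The fractions are inferred from the table, not taken from a stated rule.
    Results are rounded to suppress floating-point noise.
    """
    lower = (round(nominal * low[0], 6), round(nominal * low[1], 6))
    upper = (round(nominal * high[0], 6), round(nominal * high[1], 6))
    return lower, upper

# Nominal values from the table: 1 kΩ and 2 kΩ resistors, 5 nF capacitors
r1k = faulty_ranges(1.0)   # in kΩ
r2k = faulty_ranges(2.0)   # in kΩ
c5n = faulty_ranges(5.0)   # in nF
```

Sampling component values uniformly inside these two intervals would be one way to generate faulty instances for simulation, though the sampling scheme itself is not specified here.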

| Circuit | Literature (GB-DBN) [22] | Wavelet Scattering Networks | Proposed Method (LWSN) |
|---|---|---|---|
| CUT1 | 99.12% | 90.01% | 99.72% |
| CUT2 | 84.34% | 82.45% | 92.93% |
| CUT2 (Experimental Validation) | NA | 81.12% | 90.71% |

| True Class | F0 | 99.4 | 0.6 | ||||||

| F1 | 99.8 | 0.2 | |||||||

| F2 | 99.8 | 0.2 | |||||||

| F3 | 100 | ||||||||

| F4 | 100 | ||||||||

| F5 | 100 | ||||||||

| F6 | 1.8 | 98.2 | |||||||

| F7 | 100 | ||||||||

| F0 | F1 | F2 | F3 | F4 | F5 | F6 | F7 | ||

| Predicted Class | |||||||||

| Fault Class | Fault Code | Nominal Value | Faulty Range | Experimental Values |
|---|---|---|---|---|
| Healthy | F0 | NA | NA | NA |
| | F1 | 33 Ω | [8.25 Ω, 29.7 Ω] and [36.3 Ω, 57.75 Ω] | 10 Ω, 20 Ω, 40 Ω, 50 Ω |
| | F2 | 0.1 μF | [0.025 μF, 0.09 μF] and [0.11 μF, 0.175 μF] | 0.025 μF, 0.05 μF, 0.12 μF, 0.15 μF |
| | F3 | 100 Ω | [25 Ω, 90 Ω] and [110 Ω, 175 Ω] | 30 Ω, 80 Ω, 120 Ω, 170 Ω |
| | F4 | 100 μH | [25 μH, 90 μH] and [110 μH, 175 μH] | 30 μH, 75 μH, 156 μH, 170 μH |
| | F5 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F6 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F7 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F8 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F9 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F10 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F11 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F12 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F13 | 0 Ω | [0.1 Ω, 10 Ω] | 2 Ω, 4 Ω, 6 Ω, 8 Ω |
| | F14 | 100 Ω ∗ 10 μF | ([0.025 μF, 0.09 μF] and [0.11 μF, 0.175 μF]) | (30 Ω, 0.025 μF), (30 Ω, 0.175 μF), (170 Ω, 0.025 μF), (170 Ω, 0.175 μF) |
| | F15 | 33 Ω ∗ 33 Ω | ([8.25 Ω, 29.7 Ω] and [36.3 Ω, 57.75 Ω]) ∗ ([8.25 Ω, 29.7 Ω] and [36.3 Ω, 57.75 Ω]) | (10 Ω, 20 Ω), (10 Ω, 40 Ω), (30 Ω, 10 Ω), (50 Ω, 50 Ω) |
| | F16 | 33 Ω | [8.25 Ω, 29.7 Ω] and [36.3 Ω, 57.75 Ω] | 10 Ω, 20 Ω, 40 Ω, 50 Ω |

| True Class | F0 | 91.7 | 0.7 | 3.5 | 0.5 | 3.5 | ||||||||||||

| F1 | 94.4 | 0.2 | 5.3 | |||||||||||||||

| F2 | 0.5 | 89.9 | 9.6 | |||||||||||||||

| F3 | 0.8 | 78.4 | 3.8 | 0.8 | 1.3 | 14.0 | 0.8 | 0.3 | ||||||||||

| F4 | 0.5 | 0.3 | 1.6 | 89.7 | 0.3 | 5.7 | 0.5 | 0.8 | 0.8 | |||||||||

| F5 | 0.3 | 0.3 | 98.2 | 0.5 | 0.5 | 0.3 | ||||||||||||

| F6 | 0.5 | 1.2 | 3.9 | 1.2 | 92.0 | 0.2 | 0.2 | 0.5 | 0.2 | |||||||||

| F7 | 3.1 | 94.8 | 0.3 | 1.3 | 0.5 | |||||||||||||

| F8 | 0.2 | 14.4 | 2.0 | 0.5 | 0.7 | 81.9 | 0.2 | |||||||||||

| F9 | 0.3 | 0.3 | 99.2 | 0.3 | ||||||||||||||

| F10 | 9.9 | 88.3 | 1.8 | |||||||||||||||

| F11 | 1.0 | 0.3 | 0.3 | 0.8 | 97.1 | 0.3 | 0.3 | |||||||||||

| F12 | 4.2 | 0.2 | 1.0 | 1.7 | 0.2 | 1.0 | 90.3 | 1.2 | ||||||||||

| F13 | 0.5 | 0.5 | 0.2 | 0.2 | 0.2 | 98.3 | ||||||||||||

| F14 | 100 | |||||||||||||||||

| F15 | 4.1 | 0.7 | 94.9 | 0.3 | ||||||||||||||

| F16 | 4.7 | 2.3 | 2.3 | 2.3 | 4.7 | 83.7 | ||||||||||||

| F0 | F1 | F2 | F3 | F4 | F5 | F6 | F7 | F8 | F9 | F10 | F11 | F12 | F13 | F14 | F15 | F16 | ||

| Predicted Class | ||||||||||||||||||

| Fault Mode | Description |
|---|---|
| Health State | Normal bearing at 1797 rpm and 0 HP |
| Inner ring 1 | 0.007-inch inner ring fault at 1797 rpm and 0 HP |
| Inner ring 2 | 0.014-inch inner ring fault at 1797 rpm and 0 HP |
| Inner ring 3 | 0.021-inch inner ring fault at 1797 rpm and 0 HP |
| Rolling Element 1 | 0.007-inch rolling element fault at 1797 rpm and 0 HP |
| Rolling Element 2 | 0.014-inch rolling element fault at 1797 rpm and 0 HP |
| Rolling Element 3 | 0.021-inch rolling element fault at 1797 rpm and 0 HP |
| Outer ring 1 | 0.007-inch outer ring fault at 1797 rpm and 0 HP |
| Outer ring 2 | 0.014-inch outer ring fault at 1797 rpm and 0 HP |
| Outer ring 3 | 0.021-inch outer ring fault at 1797 rpm and 0 HP |

| True Class | Healthy | 100.0 | |||||||||

| Inner Ring 1 | 100.0 | ||||||||||

| Inner Ring 2 | 96.7 | 3.3 | |||||||||

| Inner Ring 3 | 100.0 | ||||||||||

| Rolling Element 1 | 100.0 | ||||||||||

| Rolling Element 2 | 100.0 | ||||||||||

| Rolling Element 3 | 100.0 | ||||||||||

| Outer Ring 1 | 100.0 | ||||||||||

| Outer Ring 2 | 4.0 | 96.0 | |||||||||

| Outer Ring 3 | 100.0 | ||||||||||

| Healthy | Inner Ring 1 | Inner Ring 2 | Inner Ring 3 | Rolling Element 1 | Rolling Element 2 | Rolling Element 3 | Outer Ring 1 | Outer Ring 2 | Outer Ring 3 | ||

| Predicted Class | |||||||||||

| True Class | Healthy | 99.0 | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 0.3 | ||

| Missing Tooth | 0.3 | 98.6 | 0.3 | 0.3 | 0.3 | 0.1 | 0.1 | |||

| Root Crack | 0.7 | 1.3 | 91.6 | 1.1 | 1.4 | 0.9 | 0.9 | 1.3 | 0.9 | |

| Spalling | 0.3 | 98.2 | 0.1 | 0.8 | 0.3 | 0.3 | ||||

| Chipping Tip 1a | 1.0 | 0.3 | 0.4 | 0.4 | 95.5 | 0.6 | 0.7 | 0.4 | 0.7 | |

| Chipping Tip 2a | 0.4 | 0.3 | 0.1 | 0.6 | 98.2 | 0.1 | 0.3 | |||

| Chipping Tip 3a | 0.1 | 0.1 | 0.1 | 0.1 | 0.1 | 99.0 | 0.1 | 0.1 | ||

| Chipping Tip 4a | 0.1 | 0.3 | 0.6 | 0.1 | 0.1 | 0.3 | 98.2 | 0.3 | ||

| Chipping Tip 5a | 0.1 | 0.1 | 0.1 | 99.5 | ||||||

| Healthy | Missing Tooth | Root Crack | Spalling | Chipping Tip 1a | Chipping Tip 2a | Chipping Tip 3a | Chipping Tip 4a | Chipping Tip 5a | ||

| Predicted Class | ||||||||||

| Dataset | Source Dataset | Target Dataset | Training Accuracy [51] (%) | Testing Accuracy [51] (%) | Training Accuracy (LWSN) (%) | Testing Accuracy (LWSN) (%) |
|---|---|---|---|---|---|---|
| D1 | 1730 RPM and 3 HP; 1750 RPM and 2 HP | 1772 RPM and 1 HP; 1797 RPM and 0 HP | 97.22 | 97.02 | 100 | 99.96 |
| D2 | 1772 RPM and 1 HP; 1797 RPM and 0 HP | 1730 RPM and 3 HP; 1750 RPM and 2 HP | 94.17 | 92.88 | 100 | 99.87 |
| D3 | 1730 RPM and 3 HP; 1797 RPM and 0 HP | 1750 RPM and 2 HP; 1772 RPM and 1 HP | 96.92 | 95.77 | 100 | 99.39 |
| D4 | 1750 RPM and 2 HP; 1772 RPM and 1 HP | 1730 RPM and 3 HP; 1797 RPM and 0 HP | 95.77 | 94.48 | 100 | 99.93 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khemani, V.; Azarian, M.H.; Pecht, M.G. Learnable Wavelet Scattering Networks: Applications to Fault Diagnosis of Analog Circuits and Rotating Machinery. Electronics 2022, 11, 451. https://doi.org/10.3390/electronics11030451
