DepTSol: An Improved Deep-Learning- and Time-of-Flight-Based Real-Time Social Distance Monitoring Approach under Various Low-Light Conditions

Abstract

1. Introduction

- We develop an efficient deep-learning-based physical distance monitoring approach that is combined with time-of-flight (ToF) technology to monitor physical distancing under various low-light conditions.

- Compared with the social distance monitoring solution of Rahim et al. [8], the DepTSol model addresses the limitation of monitoring people at a fixed camera distance in a given environment: it monitors people at varying camera distances.

- We evaluate the newly released Scaled-YOLOv4 algorithm in various low-light environments and compare seven one-stage object detectors in low-light scenarios without applying any image cleansing or visibility enhancement techniques. To our knowledge, no other study in the literature analyses the performance of deep learning algorithms in low-light scenarios. Based on this comparison, we select the algorithm with the best speed and accuracy for the implementation of our real-time social distance monitoring framework.

- The proposed technique is not limited to monitoring social distancing at night; it can also be deployed in generic low-light environments for the detection and tracking of people, since violations of safety measures are likely to occur at night.

2. Literature Review

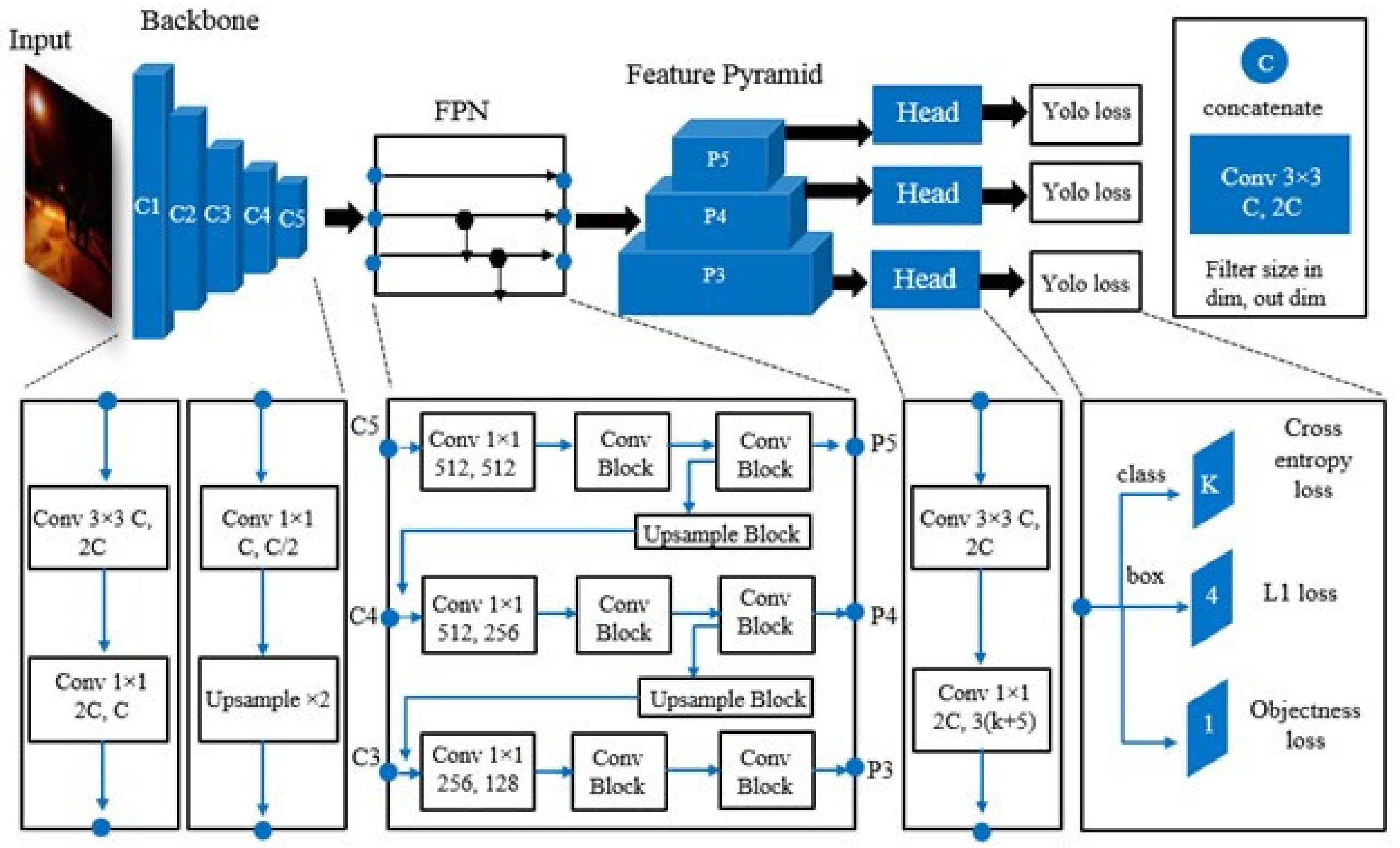

3. Overview of Scaled-YOLOv4 Algorithm

3.1. CSP-ized YOLOv4

3.2. YOLOv4-Tiny

3.3. YOLOv4-Large

4. Materials and Methods

4.1. Data Curation

4.1.1. Training Dataset

4.1.2. Testing Dataset

4.2. Problem Articulation

4.3. Real-Time People Detection

4.4. Camera-to-People Distance Estimation

4.5. Threshold Specification and People Inter-Distance Estimation

4.5.1. Monitoring People at CFD − near

| Algorithm 1: Monitoring people at CFD − near |
|---|
| Input: CFDR |
| Output: UDi+n 1 |
| 1. Start variables: c (Global var1); Epx (Global var2); An (Global var3); BBc (Global var4); THud (Global var5). End variables |
| 2. Initialization: CFD − near ← a1, c ← 1, THud ← 180 cm, Epx ← 0 |
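The initialization in step 2 of Algorithm 1 can be sketched in Python as follows. This is an illustrative sketch rather than the implementation used in this work: the class name `MonitorState`, the function `count_violations`, and the strict `<` comparison against THud are assumptions, and An and BBc are left unset because their initial values are not given in the listing above. The example distances are the UD values reported for frames (d) and (a) in the results table.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class MonitorState:
    """Global variables of Algorithm 1 with the step-2 initial values."""
    c: int = 1                        # c <- 1
    e_px: float = 0.0                 # Epx <- 0
    th_ud_cm: float = 180.0           # THud <- 180 cm
    cfd_near: Optional[float] = None  # CFD - near <- a1 (a1 is not specified here)
    a_n: Optional[object] = None      # An: declared, no initial value given
    bb_c: Optional[object] = None     # BBc: declared, no initial value given


def count_violations(distances_cm: Sequence[float], state: MonitorState) -> int:
    """Count distances strictly below THud (assumed violation rule)."""
    return sum(1 for d in distances_cm if d < state.th_ud_cm)


state = MonitorState()
print(count_violations([122.0, 98.36, 101.11], state))  # UD values of frame (d)
print(count_violations([180.0, 180.0, 180.54], state))  # UD values of frame (a)
```

With the strict comparison, the two calls give 3 and 0, which agrees with the V column reported for frames (d) and (a).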

| Algorithm 2: Monitoring people at CFD − far |
|---|

4.5.2. Monitoring People at CFD − far

4.5.3. Monitoring People up to CFDR

| Algorithm 3: Monitoring people up to CFDR |
|---|

5. Experiments & Results

5.1. Experimental Setup

5.2. Evaluation Measures

5.3. Results

6. Limitations and Discussion

7. Conclusions and Future Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- WHO. Timeline: WHO’s COVID-19 Response. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/interactive-timeline?gclid=CjwKCAjwgZuDBhBTEiwAXNofRFQ1IcUc8OwIpn7BvGoKmB7P5BoUaxN2DlxxMpc2zXF2pcEXDW6ynBoCaOcQAvD_BwE#event-115 (accessed on 14 April 2021).

- WHO. WHO Coronavirus (COVID-19) Dashboard. Available online: https://covid19.who.int/ (accessed on 20 April 2021).

- WHO. COVID-19 Vaccines. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/covid-19-vaccines (accessed on 4 May 2021).

- WHO. Coronavirus Disease (COVID-19) Advice for the Public. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/advice-for-public (accessed on 10 May 2021).

- Ainslie, K.E.; Walters, C.E.; Fu, H.; Bhatia, S.; Wang, H.; Xi, X.; Baguelin, M.; Bhatt, S.; Boonyasiri, A.; Boyd, O.; et al. Evidence of initial success for China exiting COVID-19 social distancing policy after achieving containment. Wellcome Open Res. 2020, 5, 81.

- Rahim, A.; Maqbool, A.; Rana, T. Monitoring social distancing under various low light conditions with deep learning and a single motionless time of flight camera. PLoS ONE 2021, 16, e0247440.

- Prem, K.; Liu, Y.; Russell, T.W.; Kucharski, A.J.; Eggo, R.M.; Davies, N.; Jit, M.; Klepac, P.; Flasche, S.; Clifford, S.; et al. The effect of control strategies to reduce social mixing on outcomes of the COVID-19 epidemic in Wuhan, China: A modelling study. Lancet Public Health 2020, 5, e261–e270.

- Adolph, C.; Amano, K.; Bang-Jensen, B.; Fullman, N.; Wilkerson, J. Pandemic politics: Timing state-level social distancing responses to COVID-19. J. Health Politics Policy Law 2021, 46, 211–233.

- UN. Compendium of Digital Government Initiatives in Response to the COVID-19 Pandemic 2020. Available online: https://publicadministration.un.org/egovkb/Portals/egovkb/Documents/un/2020-Survey/UNDESA%20Compendium%20of%20Digital%20Government%20Initiatives%20in%20Response%20to%20the%20COVID-19%20Pandemic.pdf (accessed on 14 April 2021).

- Punn, N.S.; Sonbhadra, S.K.; Agarwal, S. Monitoring COVID-19 social distancing with person detection and tracking via fine-tuned YOLO v3 and Deepsort techniques. arXiv 2020, arXiv:2005.01385.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Sahraoui, Y.; Kerrache, C.A.; Korichi, A.; Nour, B.; Adnane, A.; Hussain, R. DeepDist: A Deep-Learning-Based IoV Framework for Real-Time Objects and Distance Violation Detection. IEEE Internet Things Mag. 2020, 3, 30–34.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Bouhlel, F.; Mliki, H.; Hammami, M. Crowd Behavior Analysis based on Convolutional Neural Network: Social Distancing Control COVID-19. In Proceedings of the VISIGRAPP – 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Online, 8–10 February 2021; Volume 5, pp. 273–280.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Bolton, R.J.; Hand, D.J. Statistical fraud detection: A review. Stat. Sci. 2002, 17, 235–255.

- Rashidian, A.; Joudaki, H.; Vian, T. No evidence of the effect of the interventions to combat health care fraud and abuse: A systematic review of literature. PLoS ONE 2012, 7, e41988.

- Robertson, D.J.; Kramer, R.S.; Burton, A.M. Fraudulent ID using face morphs: Experiments on human and automatic recognition. PLoS ONE 2017, 12, e0173319.

- Bruce, V.; Young, A. Understanding face recognition. Br. J. Psychol. 1986, 77, 305–327.

- Wang, X.; Jhi, Y.C.; Zhu, S.; Liu, P. Behavior Based Software Theft Detection. In Proceedings of the 16th ACM Conference on Computer and Communications Security, Chicago, IL, USA, 9–13 November 2009; pp. 280–290.

- Monaro, M.; Gamberini, L.; Sartori, G. The detection of faked identity using unexpected questions and mouse dynamics. PLoS ONE 2017, 12, e0177851.

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761.

- Alberti, C.F.; Horowitz, T.; Bronstad, P.M.; Bowers, A.R. Visual attention measures predict pedestrian detection in central field loss: A pilot study. PLoS ONE 2014, 9, e89381.

- Yao, S.; Wang, T.; Shen, W.; Pan, S.; Chong, Y.; Ding, F. Feature selection and pedestrian detection based on sparse representation. PLoS ONE 2015, 10, e0134242.

- Lim, K.; Hong, Y.; Choi, Y.; Byun, H. Real-time traffic sign recognition based on a general purpose GPU and deep-learning. PLoS ONE 2017, 12, e0173317.

- Jiang, D.; Huo, L.; Li, Y. Fine-granularity inference and estimations to network traffic for SDN. PLoS ONE 2018, 13, e0194302.

- Debashi, M.; Vickers, P. Sonification of network traffic flow for monitoring and situational awareness. PLoS ONE 2018, 13, e0195948.

- Kohavi, R.; Rothleder, N.J.; Simoudis, E. Emerging trends in business analytics. Commun. ACM 2002, 45, 45–48.

- Wu, C.; Ye, X.; Ren, F.; Wan, Y.; Ning, P.; Du, Q. Spatial and social media data analytics of housing prices in Shenzhen, China. PLoS ONE 2016, 11, e0164553.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. Scaled-YOLOv4: Scaling Cross Stage Partial Network. arXiv 2020, arXiv:2011.08036.

- Targ, S.; Almeida, D.; Lyman, K. Resnet in resnet: Generalising residual architectures. arXiv 2016, arXiv:1603.08029.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Li, L. Time-of-Flight Camera – An Introduction; Technical White Paper (SLOA190B); Texas Instruments: Dallas, TX, USA, 2014.

- Weyrich, M.; Klein, P.; Laurowski, M.; Wang, Y. Vision Based Defect Detection on 3D Objects and Path Planning for Processing. In Proceedings of the 9th WSEAS International Conference on ROCOM, Venice, Italy, 8–10 March 2011.

- Weingarten, J.W.; Gruener, G.; Siegwart, R. A State-of-the-Art 3D Sensor for Robot Navigation. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 3, pp. 2155–2160.

- Yuan, F.; Swadzba, A.; Philippsen, R.; Engin, O.; Hanheide, M.; Wachsmuth, S. Laser-Based Navigation Enhanced with 3d Time-of-Flight Data. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 2844–2850.

- Bostelman, R.; Russo, P.; Albus, J.; Hong, T.; Madhavan, R. Applications of a 3D Range Camera towards Healthcare Mobility Aids. In Proceedings of the 2006 IEEE International Conference on Networking, Sensing and Control, Ft. Lauderdale, FL, USA, 23–25 April 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 416–421.

- Penne, J.; Schaller, C.; Hornegger, J.; Kuwert, T. Robust real-time 3D respiratory motion detection using time-of-flight cameras. Int. J. Comput. Assist. Radiol. Surg. 2008, 3, 427–431.

- Holz, D.; Schnabel, R.; Droeschel, D.; Stückler, J.; Behnke, S. Towards Semantic Scene Analysis with Time-of-Flight Cameras. In Robot Soccer World Cup; Springer: Berlin/Heidelberg, Germany, 2010; pp. 121–132.

- Castaneda, V.; Mateus, D.; Navab, N. SLAM combining ToF and high-resolution cameras. In Proceedings of the 2011 IEEE Workshop on Applications of Computer Vision (WACV), Kona, HI, USA, 5–7 January 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 672–678.

- Du, H.; Oggier, T.; Lustenberger, F.; Charbon, E. A Virtual Keyboard Based on True-3D Optical Ranging. In Proceedings of the British Machine Vision Conference, Oxford, UK, 5–8 September 2005; Volume 1, pp. 220–229.

- Soutschek, S.; Penne, J.; Hornegger, J.; Kornhuber, J. 3-D Gesture-Based Scene Navigation in Medical Imaging Applications Using Time-of-Flight Cameras. In Proceedings of the 2008 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Anchorage, AK, USA, 23–28 June 2008; pp. 1–6.

- Pycinski, B.; Czajkowska, J.; Badura, P.; Juszczyk, J.; Pietka, E. Time-of-flight camera, optical tracker and computed tomography in pairwise data registration. PLoS ONE 2016, 11, e0159493.

- Loh, Y.P.; Chan, C.S. Getting to Know Low-Light Images with the Exclusively Dark Dataset. Comput. Vis. Image Underst. 2019, 178, 30–42.

- Rahim, A. Low-Light-Testing-Dataset-Pakistan. Available online: https://github.com/AdinaRahim/Low-Light-Testing-Dataset-Pakistan-.git (accessed on 12 May 2021).

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft Coco: Common Objects in Context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Goutte, C.; Gaussier, E. A Probabilistic Interpretation of Precision, Recall and F-score, with Implication for Evaluation. In Proceedings of the European Conference on Information Retrieval, Santiago de Compostela, Spain, 21–23 March 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 345–359.

- Chen, X.; Fang, H.; Lin, T.Y.; Vedantam, R.; Gupta, S.; Dollár, P.; Zitnick, C.L. Microsoft Coco Captions: Data Collection and Evaluation Server. arXiv 2015, arXiv:1504.00325.

- Vicente, S.; Carreira, J.; Agapito, L.; Batista, J. Reconstructing Pascal Voc. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 41–48.

- Willmott, C.J.; Matsuura, K. Advantages of the Mean Absolute Error (MAE) over the Root Mean Square Error (RMSE) in Assessing Average Model Performance. Clim. Res. 2005, 30, 79–82.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Nie, J.; Anwer, R.M.; Cholakkal, H.; Khan, F.S.; Pang, Y.; Shao, L. Enriched Feature Guided Refinement Network for Object Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 9537–9546.

- Huang, Z.; Wang, J.; Fu, X.; Yu, T.; Guo, Y.; Wang, R. DC-SPP-YOLO: Dense Connection and Spatial Pyramid Pooling Based YOLO for Object Detection. Inf. Sci. 2020, 522, 241–258.

- Liu, Y.-C.; Kuo, R.-L.; Shih, S.-R. COVID-19: The first documented coronavirus pandemic in history. Biomed. J. 2020, 43, 328–333.

- Morens, D.M.; Breman, J.G.; Calisher, C.H.; Doherty, P.C.; Hahn, B.H.; Keusch, G.T.; Kramer, L.D.; LeDuc, J.W.; Monath, T.P.; Taubenberger, J.K. The Origin of COVID-19 and Why it Matters. Am. J. Trop. Med. Hyg. 2020, 103, 955.

| Model | Backbone | Size | FPS | mAP[0.50] | mAP[0.75] | mAPsmall | mAPmedium | mAPlarge |
|---|---|---|---|---|---|---|---|---|
| SSD | VGG-16 | 512 | 44.2 | 73.1% | 57.2% | 13.6% | 31.3% | 47.1% |
| RetinaNet | ResNet-50 | 512 | 22.3 | 70.0% | 62.1% | 23.28% | 28.0% | 56.1% |
| EFGRNet | VGG-16 | 512 | 37.9 | 87.0% | 69.1% | 17.2% | 47.1% | 62.8% |
| YOLOv3 | Darknet53 | 512 | 33.7 | 84.5% | 55.6% | 19.4% | 39.8% | 61.1% |
| YOLOv3-SPP | Darknet53 | 512 | 33.1 | 91.1% | 64.4% | 31.0% | 43.6% | 74.6% |
| YOLOv4 | CSPDarknet53 | 512 | 41.1 | 98.2% | 78.3% | 35.3% | 54.2% | 86.0% |
| CSP-ized YOLOv4 | CSPDarknet53 | 512 | 51.2 | 99.7% | 94.0% | 55.5% | 83.0% | 94.3% |

| Model | mARmax=1 | mARmax=10 | mARmax=100 | mARsmall | mARmedium | mARlarge |
|---|---|---|---|---|---|---|
| SSD | 39.8% | 69.4% | 65.8% | 48.5% | 69.8% | 77.9% |
| RetinaNet | 74.9% | 68.0% | 54.2% | 41.0% | 63.6% | 54.7% |
| EFGRNet | 83.9% | 71.1% | 68.6% | 52.1% | 80.4% | 74.8% |
| YOLOv3 | 86.3% | 79.6% | 75.1% | 50.4% | 94.2% | 89.1% |
| YOLOv3-SPP | 89.0% | 88.4% | 86.1% | 59.0% | 94.0% | 93.6% |
| YOLOv4 | 94.0% | 97.2% | 95.3% | 69.2% | 97.7% | 97.8% |
| CSP-ized YOLOv4 | 96.1% | 99.4% | 98.0% | 73.6% | 98.8% | 99.5% |
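One way to read the two tables above together is to ask which detectors are not beaten on both speed and accuracy by any other detector. The following Python sketch applies a simple Pareto-dominance check to three columns copied from the tables (FPS, mAP[0.50], and mARmax=100). The choice of these three columns and the helper names `Detector`, `dominates`, and `pareto_front` are assumptions made for illustration; this is not necessarily the selection procedure used in this work.

```python
from typing import List, NamedTuple


class Detector(NamedTuple):
    name: str
    fps: float     # frames per second
    map50: float   # mAP[0.50], percent
    mar100: float  # mARmax=100, percent


# Values copied from the two tables above.
DETECTORS = [
    Detector("SSD", 44.2, 73.1, 65.8),
    Detector("RetinaNet", 22.3, 70.0, 54.2),
    Detector("EFGRNet", 37.9, 87.0, 68.6),
    Detector("YOLOv3", 33.7, 84.5, 75.1),
    Detector("YOLOv3-SPP", 33.1, 91.1, 86.1),
    Detector("YOLOv4", 41.1, 98.2, 95.3),
    Detector("CSP-ized YOLOv4", 51.2, 99.7, 98.0),
]


def dominates(a: Detector, b: Detector) -> bool:
    """True if a is at least as good as b on every metric and better on one."""
    pairs = list(zip(a[1:], b[1:]))
    return all(x >= y for x, y in pairs) and any(x > y for x, y in pairs)


def pareto_front(detectors: List[Detector]) -> List[Detector]:
    """Keep the detectors that no other detector dominates."""
    return [d for d in detectors
            if not any(dominates(other, d) for other in detectors)]


front = pareto_front(DETECTORS)
print([d.name for d in front])
```

On these three columns, CSP-ized YOLOv4 is the only detector left on the front, because it has both the highest frame rate and the highest accuracy figures in the tables.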

| Frame | CFD | Dpx | Dpx + (Epx × c) | THud | THpd | k | UD (cm) | AUD (cm) | Error (cm) | FP | TN | V |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| (a) | CFD − near | 308.2 | - | 180 | 308.2 | 0.5842 | 180 | 180 | 0 | | | |
| (a) | CFD − far | 255.2 | 308.2 | - | - | - | 180 | 180 | 0 | | | |
| (a) | CFDR | 203.1 | 309.1 | - | - | - | 180.54 | 180 | 0.54 | | | |
| (a) | MAE = 0.18 cm | | | | | | | | | 0 | 0 | 0 |
| (b) | CFD − near | 310.0 | - | - | - | 0.5842 | 181.1 | 180 | 1.1 | | | |
| (b) | CFD − far | 151.2 | 204.2 | - | - | - | 119.27 | 120 | −0.73 | | | |
| (b) | MAE = 0.92 cm | | | | | | | | | 0 | 0 | 1 |
| (c) | CFD − near | 312.3 | - | - | - | 0.5842 | 182.41 | 180 | 2.41 | | | |
| (c) | CFD − far | 122.1 | 175.1 | - | - | - | 96.43 | 100 | −3.57 | | | |
| (c) | MAE = 2.99 cm | | | | | | | | | 0 | 0 | 1 |
| (d) | CFD − near | 209.0 | - | - | - | 0.5842 | 122.0 | 120 | 2.0 | | | |
| (d) | CFD − far | 115.4 | 168.4 | - | - | - | 98.36 | 100 | −1.64 | | | |
| (d) | CFDR | 67.1 | 173.1 | - | - | - | 101.11 | 100 | 1.11 | | | |
| (d) | MAE = 1.58 cm | | | | | | | | | 0 | 0 | 3 |
| (e) | CFD − near | 177.3 | - | - | - | 0.5842 | 103.56 | 100 | 3.56 | | | |
| (e) | CFD − far | 296.7 | 349.7 | - | - | - | 204.20 | 200 | 4.2 | | | |
| (e) | MAE = 3.88 cm | | | | | | | | | 0 | 0 | 1 |
| (f) | CFD − near | 437.0 | - | - | - | 0.5842 | 255.25 | 250 | 5.25 | | | |
| (f) | CFD − far | 156.0 | 209.0 | - | - | - | 122.07 | 120 | 2.07 | | | |
| (f) | MAE = 3.66 cm | | | | | | | | | 0 | 0 | 1 |
| (g) | CFD − near | 436.1 | - | - | - | 0.5842 | 254.70 | 250 | 4.7 | | | |
| (g) | CFD − far | 159.0 | 212.0 | - | - | - | 123.8 | 120 | 3.8 | | | |
| (g) | CFDR | 319.0 | 425.0 | - | - | - | 248.24 | 250 | −1.76 | | | |
| (g) | MAE = 3.42 cm | | | | | | | | | 0 | 0 | 1 |
| (h) | CFD − near | 518.1 | - | - | - | 0.5842 | 302.62 | 300 | 2.62 | | | |
| (h) | CFD − far | 222.0 | 275.0 | - | - | - | 160.63 | 160 | 0.63 | | | |
| (h) | CFDR | 125.11 | 231.11 | - | - | - | 129.1 | 130 | −0.9 | | | |
| (h) | MAE = 1.38 cm | | | | | | | | | 0 | 0 | 2 |
| (i) | CFD − near | 246.3 | - | - | - | 0.5842 | 143.86 | 140 | 3.86 | | | |
| (i) | MAE = 3.86 cm | | | | | | | | | 0 | 0 | 1 |
| (j) | CFD − near | 314.3 | - | - | - | 0.5842 | 183.58 | 180 | 3.58 | | | |
| (j) | CFD − far | 168.3 | 221.3 | - | - | - | 129.26 | 130 | −0.8 | | | |
| (j) | MAE = 2.19 cm | | | | | | | | | 0 | 0 | 1 |
| (k) | CFD − near | 312.1 | - | - | - | 0.5842 | 182.29 | 180 | 2.29 | | | |
| (k) | CFD − far | 259.1 | 312.1 | - | - | - | 182.29 | 180 | 2.29 | | | |
| (k) | CFDR | 244.5 | 350.5 | - | - | - | 204.72 | 200 | 4.72 | | | |
| (k) | MAE = 3.1 cm | | | | | | | | | 0 | 0 | 0 |
| (l) | CFD − near | 410.4 | - | - | - | 0.5842 | 239.71 | 240 | −0.29 | | | |
| (l) | CFD − far | 197.78 | 250.78 | - | - | - | 146.48 | 150 | −3.52 | | | |
| (l) | MAE = 1.90 cm | | | | | | | | | 0 | 0 | 0 |
| (m) | CFD − near | 322.4 | - | - | - | 0.5842 | 188.31 | 190 | −1.69 | | | |
| (m) | MAE = 1.69 cm | | | | | | | | | 0 | 0 | 0 |
| (n) | CFD − near | 202.2 | - | - | - | 0.5842 | 118.10 | 120 | −1.9 | | | |
| (n) | CFDR | 240.0 | 346.0 | - | - | 0.5842 | 202.13 | 200 | 2.13 | | | |
| (n) | MAE = 2.01 cm | | | | | | | | | 0 | 0 | 1 |
| (o) | CFD − near | 322.4 | - | - | - | 0.5842 | 188.31 | 190 | −1.69 | | | |
| (o) | MAE = 1.69 cm | | | | | | | | | 0 | 0 | 0 |
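The column headings of the table above suggest how each unit distance UD is obtained: a pixel distance Dpx, optionally offset by Epx × c, is scaled by a factor k. The Python sketch below reproduces this arithmetic for frame (d) under stated assumptions. It assumes k = THud / THpd (180 / 308.2, which agrees with the listed k = 0.5842 to three decimal places), Epx = 0 at CFD − near, and Epx = 53 px with c = 1 at CFD − far and c = 2 at CFDR. The value 53 is read off the difference between the Dpx and Dpx + (Epx × c) columns and is not stated elsewhere in this text. All function and variable names are hypothetical.

```python
from statistics import mean

TH_UD_CM = 180.0           # THud from frame (a), in cm
TH_PD_PX = 308.2           # THpd from frame (a), in pixels
K = TH_UD_CM / TH_PD_PX    # assumed definition of k (cm per pixel)
E_PX_FAR = 53.0            # assumed: inferred from the table columns


def unit_distance_cm(d_px: float, e_px: float, c: int) -> float:
    """Convert a pixel distance to centimetres: UD = k * (Dpx + Epx * c)."""
    return K * (d_px + e_px * c)


# Frame (d): (camera distance label, Dpx, Epx, c, actual distance AUD in cm)
FRAME_D = [
    ("CFD - near", 209.0, 0.0, 1, 120.0),
    ("CFD - far", 115.4, E_PX_FAR, 1, 100.0),
    ("CFDR", 67.1, E_PX_FAR, 2, 100.0),
]

errors = []
for label, d_px, e_px, c, aud in FRAME_D:
    ud = unit_distance_cm(d_px, e_px, c)
    errors.append(ud - aud)
    print(f"{label}: UD = {ud:.2f} cm, error = {ud - aud:+.2f} cm")

# Mean absolute error over the measurements of the frame.
mae = mean(abs(e) for e in errors)
print(f"MAE = {mae:.2f} cm")
```

Under these assumptions the computed values land within about 0.1 cm of the UD and MAE entries listed for frame (d); the small differences come from rounding of k.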

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rahim, A.; Maqbool, A.; Mirza, A.; Afzal, F.; Asghar, I. DepTSol: An Improved Deep-Learning- and Time-of-Flight-Based Real-Time Social Distance Monitoring Approach under Various Low-Light Conditions. Electronics 2022, 11, 458. https://doi.org/10.3390/electronics11030458
