CCoW: Optimizing Copy-on-Write Considering the Spatial Locality in Workloads

Abstract

:1. Introduction

2. Background and Related Work

2.1. Paging and Virtual Memory

2.2. Fork and Copy-on-Write

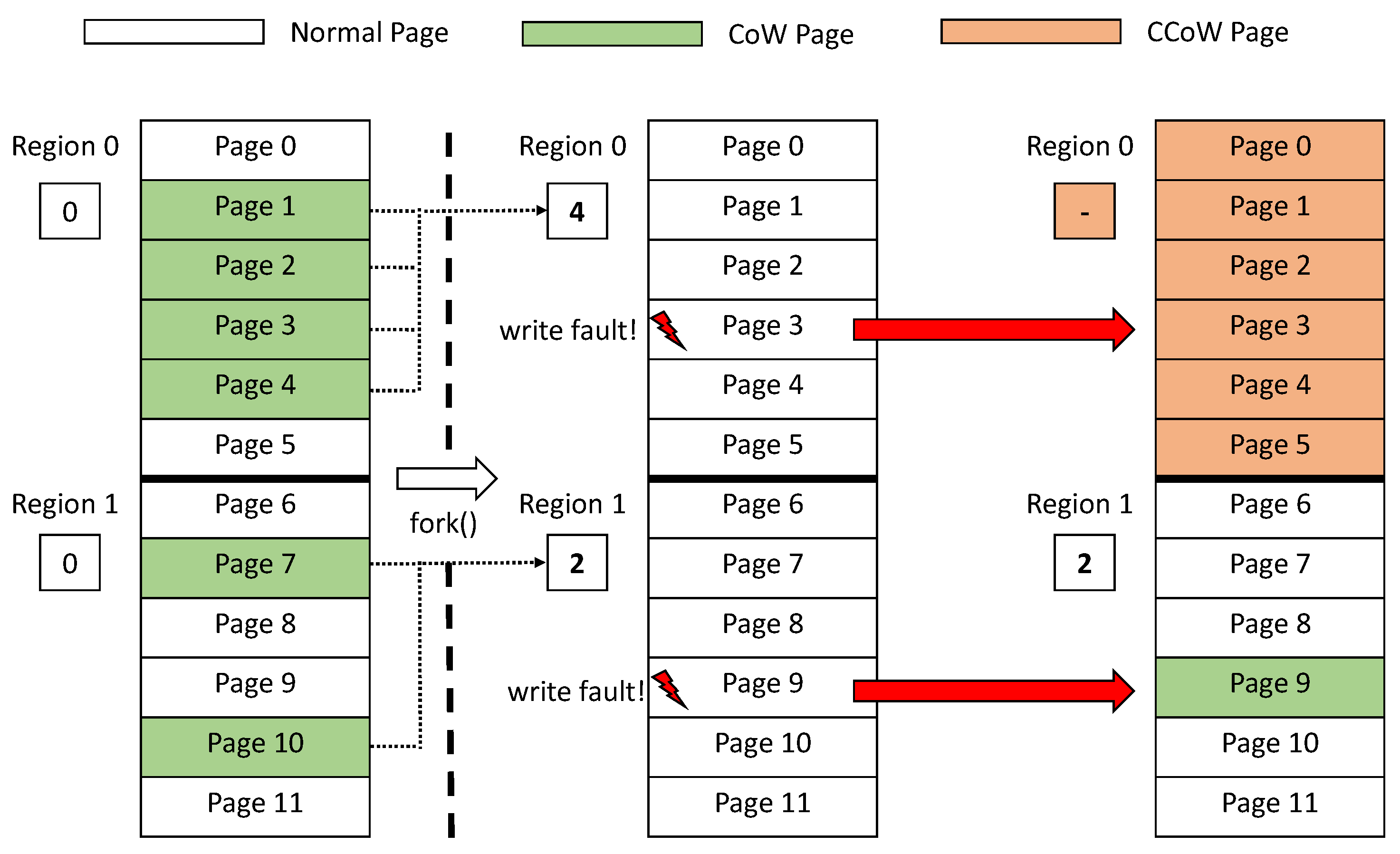

3. CCoW Design

3.1. Motivation

3.2. Identifying the Spatial Locality

3.3. Tracking Access to Precopied Pages

3.4. Capturing the Locality

4. Evaluation

4.1. Characterizing CCoW Performance

4.2. CCoW Performance on Realistic Workload

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Gorman, M. Understanding the Linux Virtual Memory Manager; Prentice Hall: Upper Saddle River, NJ, USA, 2007. [Google Scholar]

- Bovet, D.P.; Cesati, M. Understanding the Linux Kernel; O’Reilly: Newton, MA, USA, 2001. [Google Scholar]

- Love, R. Linux Kernel Development, 3rd ed.; Addison Wesley: Boston, MA, USA, 2010. [Google Scholar]

- Labs, R. Redis. Available online: https://github.com/redis/redis (accessed on 7 June 2021).

- Silberschatz, A.; Galvin, P.B.; Gagne, G. Operating System Concepts; Addison-Wesley Longman Publishing Co., Inc.: Boston, MA, USA, 2018. [Google Scholar]

- Harris, S.L.; Harris, D. Digital Design and Computer Architecture; Morgan Kaufmann: Burlington, MA, USA, 2022. [Google Scholar]

- Abi-Chahla, F. Intel Core i7 (Nehalem): Architecture By AMD? Available online: https://www.tomshardware.com/reviews/Intel-i7-nehalem-cpu,2041.html (accessed on 18 October 2021).

- Pham, B.; Bhattacharjee, A.; Eckert, Y.; Loh, G.H. Increasing TLB reach by exploiting clustering in page translations. In Proceedings of the 2014 IEEE 20th International Symposium on High Performance Computer Architecture (HPCA’14), Orlando, FL, USA, 15–19 February 2014; pp. 558–567. [Google Scholar]

- Baruah, T.; Sun, Y.; Mojumder, S.A.; Abellán, J.L.; Ukidave, Y.; Joshi, A.; Rubin, N.; Kim, J.; Kaeli, D. Valkyrie: Leveraging Inter-TLB Locality to Enhance GPU Performance. In Proceedings of the ACM International Conference on Parallel Architectures and Compilation Techniques (PACT’20), Virtual, 5–7 October 2020. [Google Scholar]

- Vavouliotis, G.; Alvarez, L.; Karakostas, V.; Nikas, K.; Koziris, N.; Jiménez, D.A.; Casas, M. Exploiting Page Table Locality for Agile TLB Prefetching. In Proceedings of the 2021 ACM/IEEE 48th Annual International Symposium on Computer Architecture (ISCA’21), Valencia, Spain, 14–18 June 2021. [Google Scholar]

- Schildermans, S.; Aerts, K.; Shan, J.; Ding, X. Ptlbmalloc2: Reducing TLB Shootdowns with High Memory Efficiency. In Proceedings of the 2020 IEEE International Conference on Parallel Distributed Processing with Applications, Big Data Cloud Computing, Sustainable Computing Communications, Social Computing Networking (ISPA/BDCloud/SocialCom/SustainCom), Exeter, UK, 17–19 December 2020. [Google Scholar]

- Kwon, Y.; Yu, H.; Peter, S.; Rossbach, C.J.; Witchel, E. Coordinated and Efficient Huge Page Management with Ingens. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI’16), Savannah, GA, USA, 2–4 November 2016; pp. 705–721. [Google Scholar]

- Kwon, Y.; Yu, H.; Peter, S.; Rossbach, C.J.; Witchel, E. Ingens: Huge Page Support for the OS and Hypervisor. SIGOPS Oper. Syst. Rev. 2017, 51, 83–93. [Google Scholar] [CrossRef]

- Panwar, A.; Bansal, S.; Gopinath, K. HawkEye: Efficient Fine-Grained OS Support for Huge Pages. In Proceedings of the 24th International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS’19), Providence, RI, USA, 13–17 April 2019; pp. 347–360. [Google Scholar]

- Zhu, W.; Cox, A.L.; Rixner, S. A Comprehensive Analysis of Superpage Management Mechanisms and Policies. In Proceedings of the 2020 USENIX Annual Technical Conference (ATC’20), Boston, MA, USA, 15–17 July 2020; pp. 829–842. [Google Scholar]

- Park, C.H.; Cha, S.; Kim, B.; Kwon, Y.; Black-Schaffer, D.; Huh, J. Perforated page: Supporting fragmented memory allocation for large pages. In Proceedings of the ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA’20), Valencia, Spain, 30 May–3 June 2020; pp. 913–925. [Google Scholar]

- Yan, Z.; Lustig, D.; Nellans, D.; Bhattacharjee, A. Nimble Page Management for Tiered Memory Systems. In Proceedings of the 24th International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS’19), Providence, RI, USA, 13–17 April 2019; pp. 331–345. [Google Scholar]

- MongoDB. Available online: https://github.com/mongodb/mongo (accessed on 9 September 2021).

- Zhao, K.; Gong, S.; Fonseca, P. On-Demand-Fork: A Microsecond Fork for Memory-Intensive and Latency-Sensitive Applications. In Proceedings of the 16th European Conference on Computer Systems (EuroSys’21), Online, 26–28 April 2021; pp. 540–555. [Google Scholar]

- Baumann, A.; Appavoo, J.; Krieger, O.; Roscoe, T. A Fork() in the Road. In Proceedings of the Workshop on Hot Topics in Operating Systems (HotOS’19), Bertinoro, Italy, 13–15 May 2019; pp. 14–22. [Google Scholar]

- Ge, Y.; Wang, C.; Shen, X.; Young, H. A Database Scale-out Solution for Emerging Write-Intensive Commercial Workloads. SIGOPS Oper. Syst. Rev. 2008, 42, 102–103. [Google Scholar] [CrossRef]

- Li, C.; Feng, D.; Hua, Y.; Qin, L. Efficient live virtual machine migration for memory write-intensive workloads. Future Gener. Comput. Syst. 2019, 95, 126–139. [Google Scholar] [CrossRef]

- Reiss, C.; Tumanov, A.; Ganger, G.R.; Katz, R.H.; Kozuch, M.A. Heterogeneity and Dynamicity of Clouds at Scale: Google Trace Analysis. In Proceedings of the 3rd ACM Symposium on Cloud Computing (SOCC’12), San Jose, CA, USA, 14–17 October 2012. [Google Scholar]

- Zhang, Q.; Zhani, M.F.; Zhang, S.; Zhu, Q.; Boutaba, R.; Hellerstein, J.L. Dynamic Energy-Aware Capacity Provisioning for Cloud Computing Environments. In Proceedings of the 9th International Conference on Autonomic Computing (ICAC’12), San Jose, CA, USA, 16–20 September 2012; pp. 145–154. [Google Scholar]

- Sharma, B.; Chudnovsky, V.; Hellerstein, J.L.; Rifaat, R.; Das, C.R. Modeling and Synthesizing Task Placement Constraints in Google Compute Clusters. In Proceedings of the 2nd ACM Symposium on Cloud Computing (SOCC’11), Cascais, Portugal, 26–28 October 2011. [Google Scholar]

- Zhang, Q.; Hellerstein, J.; Boutaba, R. Characterizing Task Usage Shapes in Google Compute Clusters. In Proceedings of the 5th International Workshop on Large Scale Distributed Systems and Middleware (LADIS’10), Seattle, WA, USA, 2–3 September 2011. [Google Scholar]

- Sebastian, A.; Le Gallo, M.; Khaddam-Aljameh, R.; Eleftheriou, E. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 2020, 15, 529–544. [Google Scholar] [CrossRef] [PubMed]

- Lipp, M.; Schwarz, M.; Gruss, D.; Prescher, T.; Haas, W.; Fogh, A.; Horn, J.; Mangard, S.; Kocher, P.; Genkin, D.; et al. Meltdown: Reading Kernel Memory from User Space. In Proceedings of the 27th USENIX Security Symposium (USENIX Security’18), Baltimore, MD, USA, 15–17 August 2018. [Google Scholar]

- Kocher, P.; Horn, J.; Fogh, A.; Genkin, D.; Gruss, D.; Haas, W.; Hamburg, M.; Lipp, M.; Mangard, S.; Prescher, T.; et al. Spectre Attacks: Exploiting Speculative Execution. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP’19), San Francisco, CA, USA, 18–19 May 2019. [Google Scholar]

- Cooper, B.F. YCSB: Yahoo! Cloud Serving Benchmark. Available online: https://github.com/brianfrankcooper/YCSB (accessed on 21 June 2021).

- Cooper, B.F.; Silberstein, A.; Tam, E.; Ramakrishnan, R.; Sears, R. Benchmarking Cloud Serving Systems with YCSB. In Proceedings of the Symposium on Cloud Computing (SoCC’10), Indianapolis, IN, USA, 10–11 June 2010; pp. 143–154. [Google Scholar]

| Case | Precopy Ratio | Increased | Unnecessary |

|---|---|---|---|

| Memory Footprint | Precopy Ratio | ||

| CoW | 0% | 0% | - |

| CCoW-95 | 11.1% | 2.6% | 23.4% |

| CCoW-90 | 12.3% | 2.9% | 23.5% |

| CCoW-85 | 22.4% | 5.2% | 23.2% |

| CCoW-80 | 26.9% | 6.7% | 24.9% |

| CCoW-75 | 77.2% | 23.3% | 30.2% |

| CCoW-70 | 94.8% | 33.8% | 35.6% |

| CCoW-all | 100% | 36% | 36% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ha, M.; Kim, S.-H. CCoW: Optimizing Copy-on-Write Considering the Spatial Locality in Workloads. Electronics 2022, 11, 461. https://doi.org/10.3390/electronics11030461

Ha M, Kim S-H. CCoW: Optimizing Copy-on-Write Considering the Spatial Locality in Workloads. Electronics. 2022; 11(3):461. https://doi.org/10.3390/electronics11030461

Chicago/Turabian StyleHa, Minjong, and Sang-Hoon Kim. 2022. "CCoW: Optimizing Copy-on-Write Considering the Spatial Locality in Workloads" Electronics 11, no. 3: 461. https://doi.org/10.3390/electronics11030461