A Novel Android Botnet Detection System Using Image-Based and Manifest File Features

Abstract

1. Introduction

2. Related Work

2.1. Image-Based Analysis of Malicious Applications

2.2. Botnet Detection on Android

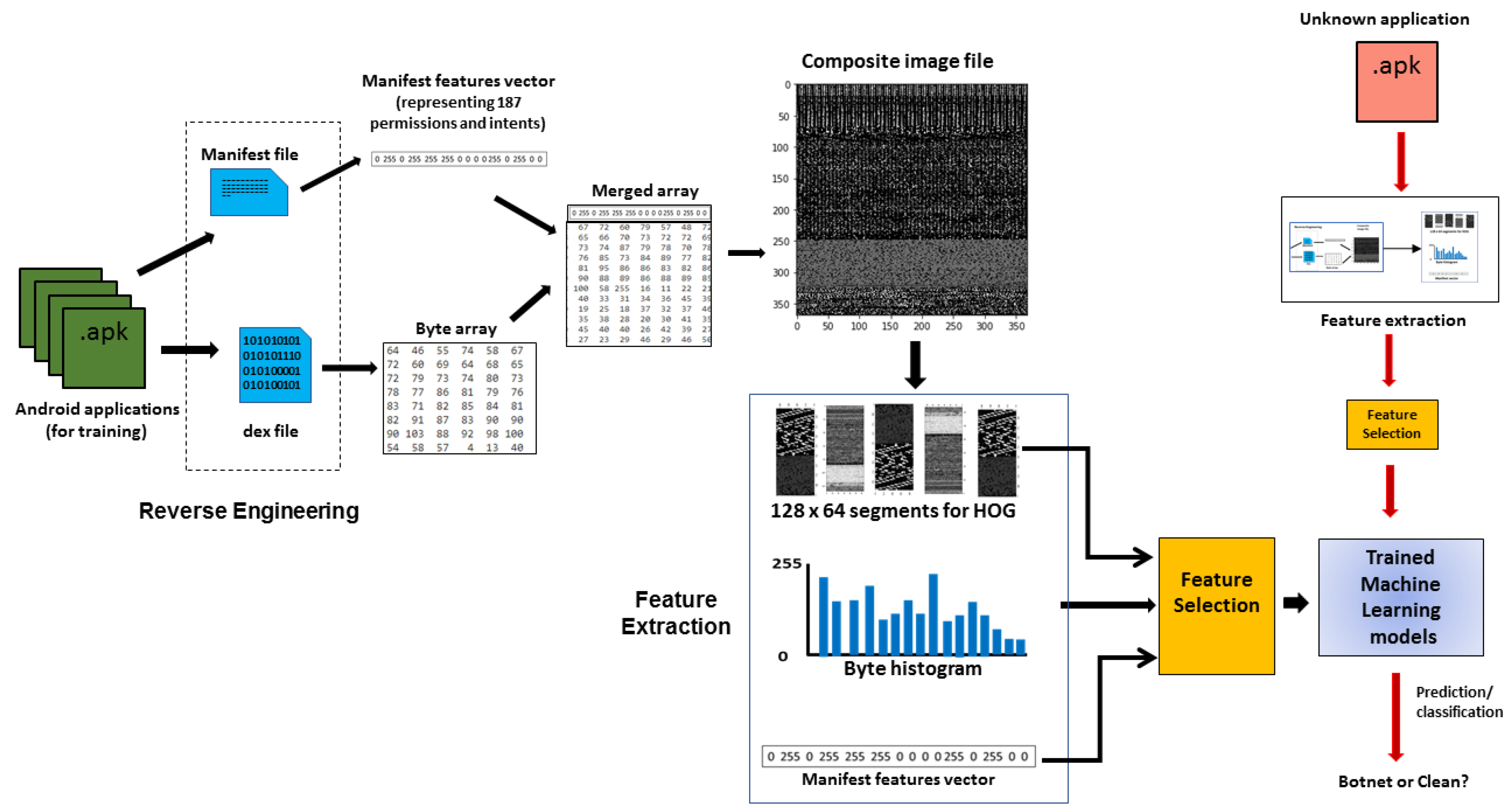

3. Proposed HOG-Based Android Botnet Detection System

3.1. Histogram of Oriented Gradients

3.2. Characterizing Apps with Image and Manifest Features

| Algorithm 1: Extracting image-based and manifest-based features. |
|---|
| Input: D = set of images with their class labels |
| Output: V = class labelled set of output vectors |
| 01: Initialize and , the arrays of size K = 8192 with zeros |
| 02: Initialize BH the byte histogram array of size 256 with zeros |
| 03: Initialize HOG parameters: , dim = 128 × 64; ppc = 8 × 8; cpb = 2 × 2 |
| 04: for each image I do |
| 05: Slice the image to separate the first 187 pixels |
| 06: Copy first slice having 187 pixels into a manifest vector |
| 07: // Obtain the HOG vector for the image |
| 08: Convert second slice into an array P of pixel decimal values |
| 09: Copy the first bytes into arrays |
| 10: Reshape to 128 × 64 size arrays |
| 11: Convert into images |
| 12: for each sub-image j do |
| 13: |
| 14: |
| 15: end |
| 16: |
| 17: // Obtain the byte histogram vector for the image |
| 18: |
| 19: for index = 0, 1, 2, …, 255 do |
| 20: count = 0 |
| 21: for do |
| 22: count = count + 1 |
| 23: if count > max |
| 24: count = max |
| 25: end if |
| 26: end |
| 27: |
| 28: end |
| 29: // Obtain the overall output vector for the image |
| 30: |
| 31: end |
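The overall flow of Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: several symbols in the algorithm listing are missing, so the number of sub-images (`n_sub_images`), the histogram cap (`max_count`), the choice to compute the histogram over the second slice, the 128-row by 64-column layout, and the concatenation order (HOG, byte histogram, manifest) are all hypothetical. The HOG descriptor itself is not implemented here; it is passed in as a callable (`hog_fn`) so that any HOG routine configured with ppc = 8 × 8 and cpb = 2 × 2 could be plugged in.

```python
from typing import Callable, List, Sequence

MANIFEST_LEN = 187      # steps 05-06: the first 187 pixels hold the manifest features
ROWS, COLS = 128, 64    # step 10: assumed layout of each 128 x 64 sub-image
K = ROWS * COLS         # step 01: K = 8192 pixels per sub-image


def byte_histogram(pixels: Sequence[int], max_count: int) -> List[int]:
    """Steps 17-28: count each byte value 0..255, clipping every bin at max_count."""
    hist = [0] * 256
    for p in pixels:
        if hist[p] < max_count:
            hist[p] += 1
    return hist


def split_sub_images(pixels: Sequence[int], n_sub_images: int) -> List[List[int]]:
    """Steps 09-10: copy the first n_sub_images * K bytes into K-sized arrays.

    Arrays start as zeros (step 01), so short inputs end up zero-padded.
    """
    subs = []
    for j in range(n_sub_images):
        chunk = list(pixels[j * K:(j + 1) * K])
        chunk += [0] * (K - len(chunk))
        subs.append(chunk)
    return subs


def extract_features(
    image: Sequence[int],
    hog_fn: Callable[[List[List[int]]], List[float]],
    n_sub_images: int = 2,   # hypothetical: the count is missing from the listing
    max_count: int = 255,    # hypothetical: the cap value is missing from the listing
) -> List[float]:
    """Build one output vector for a flattened grayscale image (values 0..255)."""
    manifest = list(image[:MANIFEST_LEN])   # first slice: manifest vector
    body = image[MANIFEST_LEN:]             # second slice: array P

    hog_vec: List[float] = []
    for sub in split_sub_images(body, n_sub_images):
        rows = [sub[r * COLS:(r + 1) * COLS] for r in range(ROWS)]
        hog_vec.extend(hog_fn(rows))        # steps 12-16: HOG per sub-image

    bh = byte_histogram(body, max_count)
    return hog_vec + bh + manifest          # step 30: assumed concatenation order


# Toy usage with a stand-in "HOG" that only reports the sub-image shape.
toy_image = [i % 256 for i in range(MANIFEST_LEN + 10000)]
toy_vec = extract_features(
    toy_image, hog_fn=lambda rows: [len(rows), len(rows[0])], max_count=30
)
```

Keeping the HOG step behind a callable makes the slicing and histogram logic easy to check in isolation before a real descriptor is attached.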

3.3. Feature Selection Using CHI Square Algorithm

4. Experiments and Evaluation of the System

4.1. Dataset Description

4.2. Evaluation Metrics

4.3. Machine-Learning Classifiers

1. K-Nearest Neighbor (KNN): KNN is a supervised classifier that assigns an input to one of a set of classes based on its distance to its nearest neighbors in the training data [49]. Several distance metrics can be used with the KNN algorithm, such as the Euclidean distance, the Manhattan (city block) distance and the Hamming distance. Due to its simplicity, the Euclidean distance is the most common choice. The KNN algorithm uses labelled vectors in a multidimensional feature space as training examples. During the training phase, the algorithm simply stores the feature vectors and their class labels. During the classification phase, an unlabeled vector is assigned the label that is most frequent among the k training samples nearest to it. Here k is a user-defined constant whose choice depends on the type of data to be classified.

2. Support Vector Machines (SVM): SVM separates the input data into classes by finding a hyperplane in a higher-dimensional feature space [50]. Data that are not linearly separable in the original space can be handled by applying a kernel function; common kernels include the linear, polynomial and Gaussian (radial basis function) kernels. SVM follows the principle of minimizing the classification risk rather than directly optimizing the classification accuracy. As a result, SVMs tend to generalize well and can be used when the number of training samples is small and the data have a large number of features. SVMs have been widely used in text and image classification, as well as in voice recognition and anomaly detection (e.g., security, fraud detection and healthcare).

3. Decision Trees (DT): A Decision Tree models labelled data with a tree-like structure [51]. The leaves represent the classifications, and the branches represent the combinations of feature values that lead to those classifications. During classification, an unlabeled input is classified by testing its feature values against the nodes of the tree, from the root down to a leaf. Two popular Decision Tree algorithms are ID3 and C4.5, which use information entropy measurements to learn the tree from the training data. The tree is built by repeatedly choosing the attribute that most effectively splits the current set of inputs into smaller subsets. ID3 uses information gain as the splitting criterion, whereas C4.5 uses normalized information gain (gain ratio); in both cases, the attribute with the highest value is chosen for the split.

4. Random Forest (RF): Random Forest belongs to the class of Ensemble Learning classifiers [52]. As the name suggests, RF is a collection of decision trees, each built with an element of randomness, that are combined into a “forest of trees”. Each constituent tree is trained on a random sample of the training set. Because RF combines multiple DTs, it mitigates the over-fitting problem encountered with a single DT. This is because RF performs a “bagging” (bootstrap aggregation) step, in which the trees are trained on bootstrap samples and their predictions are aggregated. During the classification phase, each DT within the RF takes the test features as input and predicts the target variable. The final output is the prediction that receives the most votes among the constituent DTs.

5. Extra Trees (ET): Extra Trees is also an ensemble Machine-Learning algorithm that combines the predictions of many decision trees [53]. The concept is similar to Random Forest, but there are key differences. One difference lies in how the input data are used to learn the models: RF trains each tree on a bootstrap replica (a sample of the data drawn with replacement), whereas Extra Trees uses the whole input data as is. Another difference lies in how the cut points are selected when splitting the nodes of a tree: RF searches for the optimal split, whereas Extra Trees draws the cut points at random. This is why Extra Trees is also known as Extremely Randomised Trees. Extra Trees thus adds randomisation to the training process while still choosing the best split among the randomly drawn candidates. Using the whole data set tends to reduce bias and the randomised splits tend to reduce variance, which can make Extra Trees a good alternative to Random Forest for classification tasks.

6. XGBoost (XGB): Like RF and ET, XGBoost belongs to the category of Ensemble Learning classifiers [54]. However, it is based on Boosting rather than Bagging (which is used in RF). Boosting increases the predictive capability of an ensemble of weak classifiers. It is an iterative process in which each new weak classifier is trained to correct the errors made by the classifiers before it; in XGBoost, this is done by gradient boosting of decision trees. When the training data are subsampled, boosting typically samples without replacement (as opposed to the sampling with replacement used by bagging in RF). In this way, errors in the predictions of earlier models are reduced by later models. This differs from the bagging process used in Random Forest, which relies on an ensemble of “independently” trained classifiers.
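Three of the mechanisms described in the list above can be illustrated with a short, standard-library-only Python sketch: KNN classification with the Euclidean distance, majority voting across the members of an ensemble (the final step of RF), and bootstrap sampling with replacement (used by RF but not by Extra Trees). The function names, the toy two-dimensional data, the threshold rules standing in for trained trees, and the labels `"botnet"` and `"clean"` are all hypothetical and chosen only for illustration; the experiments in this paper use full classifier implementations, not this code.

```python
import math
import random
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def majority_vote(labels):
    """Return the most frequent label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]


def knn_predict(train_X, train_y, query, k=3):
    """Label the query with the majority class of its k nearest training vectors."""
    order = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], query))
    return majority_vote([train_y[i] for i in order[:k]])


def ensemble_predict(classifiers, x):
    """Combine the predictions of several classifiers by majority vote."""
    return majority_vote([clf(x) for clf in classifiers])


def bootstrap_sample(data, rng):
    """Draw len(data) items with replacement, as in the bagging step of RF."""
    return [rng.choice(data) for _ in data]


# Toy training set: two well-separated clusters.
train_X = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (1.0, 1.0), (0.9, 1.1), (1.1, 0.9)]
train_y = ["clean", "clean", "clean", "botnet", "botnet", "botnet"]

# Three simple rules standing in for trained decision trees.
stumps = [
    lambda x: "botnet" if x[0] > 0.5 else "clean",
    lambda x: "botnet" if x[1] > 0.5 else "clean",
    lambda x: "clean",
]

print(knn_predict(train_X, train_y, (0.8, 0.9), k=3))   # botnet
print(ensemble_predict(stumps, (0.8, 0.9)))             # botnet
print(bootstrap_sample(train_X, random.Random(0)))      # some points may repeat
```

Boosting is deliberately left out of the sketch: unlike the voting shown here, its members are trained sequentially, so it does not reduce to combining independently built classifiers.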

5. Results and Discussions

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. McAfee. McAfee Labs Threat Report 06.21. Available online: https://www.mcafee.com/enterprise/en-us/assets/reports/rp-threats-jun-2021.pdf (accessed on 28 December 2021).
2. Rashid, F.Y. Chamois: The Big Botnet You Didn’t Hear About. Available online: https://duo.com/decipher/chamois-the-big-botnet-you-didnt-hear-about (accessed on 28 December 2021).
3. Brook, C. Google Eliminates Android Adfraud Botnet Chamois. Available online: https://threatpost.com/google-eliminates-android-adfraud-botnet-chamois/124311/ (accessed on 28 December 2021).
4. Grill, B.; Ruthven, M.; Zhao, X. Detecting and Eliminating Chamois, a Fraud Botnet on Android. Available online: https://android-developers.googleblog.com/2017/03/detecting-and-eliminating-chamois-fraud.html (accessed on 28 December 2021).
5. Imperva. Mobile Bots: The Next Evolution of Bad Bots. In Imperva; Report, 2019. Available online: https://www.imperva.com/resources/resource-library/reports/mobile-bots-the-next-evolution-of-bad-bots/ (accessed on 28 December 2021).
6. Feng, P.; Ma, J.; Sun, C.; Xu, X.; Ma, Y. A Novel Dynamic Android Malware Detection System With Ensemble Learning. IEEE Access 2018, 6, 30996–31011.
7. Wang, W.; Li, Y.; Wang, X.; Liu, J.; Zhang, X. Detecting Android malicious apps and categorizing benign apps with ensemble of classifiers. Future Gener. Comput. Syst. 2018, 78, 987–994.
8. Yerima, S.Y.; Alzaylaee, M.K.; Shajan, A.; Vinod, P. Deep Learning Techniques for Android Botnet Detection. Electronics 2021, 10, 519.
9. Senanayake, J.; Kalutarage, H.; Al-Kadri, M.O. Android Mobile Malware Detection Using Machine Learning: A Systematic Review. Electronics 2021, 10, 1606.
10. Liu, K.; Xu, S.; Xu, G.; Zhang, M.; Sun, D.; Liu, H. A Review of Android Malware Detection Approaches Based on Machine Learning. IEEE Access 2020, 8, 124579–124607.
11. Vasan, D.; Alazab, M.; Wassan, S.; Safaei, B.; Zheng, Q. Image-Based malware classification using ensemble of CNN architectures (IMCEC). Comput. Secur. 2020, 92, 101748.
12. Bozkir, A.S.; Tahillioglu, E.; Aydos, M.; Kara, I. Catch them alive: A malware detection approach through memory forensics, manifold learning and computer vision. Comput. Secur. 2021, 103, 102166.
13. Bozkir, A.S.; Cankaya, A.O.; Aydos, M. Utilization and Comparison of Convolutional Neural Networks in Malware Recognition. In Proceedings of the 27th Signal Processing and Communications Applications Conference (SIU), Sivas, Turkey, 24–26 April 2019.
14. Nataraj, L.; Karthikeyan, S.; Jacob, G.; Manjunath, B.S. Malware Images: Visualization and Automatic Classification. In Proceedings of the 8th International Symposium on Visualization for Cyber Security, Pittsburgh, PA, USA, 20 July 2011.
15. Nataraj, L.; Yegneswaran, V.; Porras, P.; Zhang, J. A Comparative Assessment of Malware Classification Using Binary Texture Analysis and Dynamic Analysis. In Proceedings of the 4th ACM Workshop on Security and Artificial Intelligence, Chicago, IL, USA, 21 October 2011.
16. Kumar, S.; Meena, S.; Khosla, S.; Parihar, A.S. AE-DCNN: Autoencoder Enhanced Deep Convolutional Neural Network For Malware Classification. In Proceedings of the 2021 International Conference on Intelligent Technologies (CONIT), Hubli, India, 25–27 June 2021; pp. 1–5.
17. El-Shafai, W.; Almomani, I.; AlKhayer, A. Visualized Malware Multi-Classification Framework Using Fine-Tuned CNN-Based Transfer Learning Models. Appl. Sci. 2021, 11, 6446.
18. Vasan, D.; Alazab, M.; Wassan, S.; Naeem, H.; Safaei, B.; Zheng, Q. IMCFN: Image-based malware classification using fine-tuned convolutional neural network architecture. Comput. Netw. 2020, 171, 107138.
19. Xiao, G.; Li, J.; Chen, Y.; Li, K. MalFCS: An effective malware classification framework with automated feature extraction based on deep convolutional neural networks. J. Parallel. Distrib. Comput. 2020, 141, 49–58.
20. Awan, M.J.; Masood, O.A.; Mohammed, M.A.; Yasin, A.; Zain, A.M.; Damaševičius, R.; Abdulkareem, K.H. Image-Based Malware Classification Using VGG19 Network and Spatial Convolutional Attention. Electronics 2021, 10, 2444.
21. Hemalatha, J.; Roseline, S.A.; Geetha, S.; Kadry, S.; Damaševičius, R. An Efficient DenseNet-Based Deep Learning Model for Malware Detection. Entropy 2021, 23, 344.
22. Yan, H.; Zhou, H.; Zhang, H. Automatic Malware Classification via PRICoLBP. Chinese J. Chem. 2018, 27, 852–859.
23. Luo, J.S.; Lo, D.C.T. Binary malware image classification using machine learning with local binary pattern. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 4664–4667.
24. Kancherla, K.; Mukkamala, S. Image visualization based malware detection. In Proceedings of the 2013 IEEE Symposium on Computational Intelligence in Cyber Security (CICS), Singapore, 16–19 April 2013; pp. 40–44.
25. Han, K.S.; Lim, J.H.; Kang, B.; Im, E.G. Malware analysis using visualized images and entropy graphs. Int. J. Inf. Secur. 2015, 14, 1–14.
26. Wang, T.; Xu, N. Malware variants detection based on opcode image recognition in small training set. In Proceedings of the IEEE 2nd International Conference on Cloud Computing and Big Data Analysis (ICCCBDA), Chengdu, China, 28–30 April 2017; pp. 328–332.
27. Dai, Y.; Li, H.; Qian, Y.; Lu, X. A malware classification method based on memory dump grayscale image. Digit. Investig. 2018, 27, 30–37.
28. Singh, J.; Thakur, D.; Ali, F.; Gera, T.; Kwak, K.S. Deep Feature Extraction and Classification of Android Malware Images. Sensors 2020, 20, 7013.
29. Alzahrani, A.J.; Ghorbani, A.A. Real-time signature-based detection approach for SMS botnet. In Proceedings of the 13th Annual Conference on Privacy, Security and Trust (PST), Izmir, Turkey, 21–23 July 2015; pp. 157–164.
30. Jadhav, S.; Dutia, S.; Calangutkar, K.; Oh, T.; Kim, Y.H.; Kim, J.N. Cloud-based Android botnet malware detection system. In Proceedings of the 17th International Conference on Advanced Communication Technology (ICACT), PyeongChang, Korea, 1–3 July 2015; pp. 347–352.
31. Moodi, M.; Ghazvini, M.; Moodi, H.; Ghawami, B. A smart adaptive particle swarm optimization–support vector machine: Android botnet detection application. J. Supercomput. 2020, 76, 9854–9881.
32. Bernardeschi, C.; Mercaldo, F.; Nardone, V.; Santone, A. Exploiting Model Checking for Mobile Botnet Detection. Procedia Comput. Sci. 2019, 159, 963–972.
33. Anwar, S.; Zain, J.M.; Inayat, Z.; Haq, R.U.; Karim, A.; Jabir, A.N. A static approach towards mobile botnet detection. In Proceedings of the 3rd International Conference on Electronic Design (ICED), Phuket, Thailand, 11–12 August 2016; pp. 563–567.
34. Tansettanakorn, C.; Thongprasit, S.; Thamkongka, S.; Visoottiviseth, V. ABIS: A prototype of Android Botnet Identification System. In Proceedings of the Fifth ICT International Student Project Conference (ICT-ISPC), Nakhonpathom, Thailand, 27–28 May 2016; pp. 1–5.
35. Yusof, M.; Saudi, M.M.; Ridzuan, F. A new mobile botnet classification based on permission and API calls. In Proceedings of the Seventh International Conference on Emerging Security Technologies (EST), Canterbury, UK, 6–8 September 2017; pp. 122–127.
36. Yusof, M.; Saudi, M.M.; Ridzuan, F. Mobile Botnet Classification by using Hybrid Analysis. Int. J. Eng. Technol. 2018, 7, 103–108.
37. Hijawi, W.; Alqatawna, J.; Faris, H. Toward a Detection Framework for Android Botnet. In Proceedings of the International Conference on New Trends in Computing Sciences (ICTCS), Amman, Jordan, 11–13 October 2017; pp. 197–202.
38. Abdullah, Z.; Saudi, M.M.; Anuar, N.B. ABC: Android Botnet Classification Using Feature Selection and Classification Algorithms. Adv. Sci. Lett. 2017, 23, 4717–4720.
39. Karim, A.; Salleh, R.; Shah, S.A.A. DeDroid: A Mobile Botnet Detection Approach Based on Static Analysis. In Proceedings of the 7th International Symposium on UbiCom Frontiers—Innovative Research, Systems and Technologies, Beijing, China, 10–14 August 2015; pp. 1327–1332.
40. Yerima, S.Y.; Alzaylaee, M.K. Mobile Botnet Detection: A Deep Learning Approach Using Convolutional Neural Networks. In Proceedings of the 2020 International Conference on Cyber Situational Awareness (Cyber SA 2020), Dublin, Ireland, 15–19 June 2020.
41. Yerima, S.Y.; Bashar, A. Bot-IMG: A framework for image-based detection of Android botnets using machine learning. In Proceedings of the 18th ACS/IEEE International Conference on Computer systems and Applications (AICCSA 2021), Tangier, Morocco, 3–30 November 2021; pp. 1–7.
42. Hojjatinia, S.; Hamzenejadi, S.; Mohseni, H. Android Botnet Detection using Convolutional Neural Networks. In Proceedings of the 28th Iranian Conference on Electrical Engineering (ICEE), Tabriz, Iran, 4–6 August 2020; pp. 1–6.
43. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; pp. 886–893.
44. Ebrahimzadeh, R.; Jampour, M. Article: Efficient Handwritten Digit Recognition based on Histogram of Oriented Gradients and SVM. Int. J. Comput. Appl. 2014, 104, 10–13.
45. Anu, K.A.; Akbar, N.A. Recognition of Facial Expressions Based on Detection of Facial Components and HOG Characteristics. In Intelligent Manufacturing and Energy Sustainability; Springer: Berlin/Heidelberg, Germany, 2021.
46. Arief, S.S.; Samratul, F.; Arumjeni, M.; Sari, Y.W. HOG Based Pedestrian Detection System for Autonomous Vehicle Operated in Limited Area. In Proceedings of the International Conference on Radar, Antenna, Microwave, Electronics, and Telecommunications (ICRAMET), Bandung, Indonesia, 23–24 November 2021.
47. Bahassine, S.; Madani, A.; Al-Sarem, M.; Kissi, M. Feature selection using an improved Chi-square for Arabic text classification. J. King Saud Univ.-Comput. 2020, 32, 225–231.
48. ISCX. ISCX Android Botnet Dataset. Available online: https://www.unb.ca/cic/datasets/android-botnet.html (accessed on 28 December 2021).
49. Weiss, S. Small sample error rate estimation for k-NN classifiers. IEEE T. Pattern. Anal. 1991, 13, 285–289.
50. Pontil, M.; Verri, A. Support vector machines for 3D object recognition. IEEE Trans. Pattern. Anal. 1998, 20, 637–646.
51. Kruegel, C.; Toth, T. Using Decision Trees to Improve Signature-Based Intrusion Detection. In Recent Advances in Intrusion Detection; Springer: Berlin/Heidelberg, Germany, 2003; pp. 173–191.
52. Zhang, J.; Zulkernine, M.; Haque, A. Random-Forests-Based Network Intrusion Detection Systems. IEEE Trans. Syst. Man. Cybern. Part C 2008, 38, 649–659.
53. Alsariera, Y.A.; Adeyemo, V.E.; Balogun, A.O.; Alazzawi, A.K. AI Meta-Learners and Extra-Trees Algorithm for the Detection of Phishing Websites. IEEE Access 2020, 8, 142532–142542.
54. Podlodowski, L.; Kozłowski, M. Application of XGBoost to the cyber-security problem of detecting suspicious network traffic events. In Proceedings of the IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 5902–5907.
55. Yerima, S.Y.; Khan, S. Longitudinal Performance Analysis of Machine Learning based Android Malware Detectors. In Proceedings of the 2019 International Conference on Cyber Security and Protection of Digital Services (Cyber Security), Oxford, UK, 3–4 June 2019.

| Classifier | Precision (M) | Recall (M) | Accuracy | Precision (C) | Recall (C) | F1-Score |
|---|---|---|---|---|---|---|
| Extra Trees | 0.965 | 0.942 | 0.960 | 0.955 | 0.974 | 0.960 |
| SVM | 0.911 | 0.921 | 0.926 | 0.938 | 0.930 | 0.926 |
| KNN | 0.889 | 0.942 | 0.923 | 0.953 | 0.907 | 0.923 |
| XGBoost | 0.950 | 0.935 | 0.952 | 0.950 | 0.962 | 0.952 |
| RF | 0.962 | 0.944 | 0.958 | 0.955 | 0.970 | 0.958 |
| DT | 0.913 | 0.940 | 0.936 | 0.954 | 0.933 | 0.936 |

| Classifier | Precision (M) | Recall (M) | Accuracy | Precision (C) | Recall (C) | F1-Score |
|---|---|---|---|---|---|---|
| Extra Trees | 0.970 | 0.970 | 0.975 | 0.980 | 0.980 | 0.980 |
| SVM | 0.890 | 0.920 | 0.937 | 0.940 | 0.920 | 0.940 |
| KNN | 0.860 | 0.940 | 0.944 | 0.960 | 0.900 | 0.940 |
| XGBoost | 0.970 | 0.940 | 0.966 | 0.960 | 0.980 | 0.970 |
| RF | 0.970 | 0.960 | 0.973 | 0.970 | 0.980 | 0.970 |
| DT | 0.920 | 0.950 | 0.953 | 0.960 | 0.950 | 0.950 |

| Classifier | HOG (Original) | HOG (Enhanced) | HOG + BH + MF |
|---|---|---|---|
| XGBoost | 0.892 | 0.927 | 0.952 |
| Extra Trees | 0.863 | 0.925 | 0.960 |
| RF | 0.871 | 0.919 | 0.958 |
| KNN | 0.877 | 0.877 | 0.920 |
| SVM | 0.811 | 0.866 | 0.926 |
| DT | 0.773 | 0.835 | 0.935 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yerima, S.Y.; Bashar, A. A Novel Android Botnet Detection System Using Image-Based and Manifest File Features. Electronics 2022, 11, 486. https://doi.org/10.3390/electronics11030486
