Applications of Federated Learning; Taxonomy, Challenges, and Research Trends

Abstract

1. Introduction

1.1. Related Work

1.2. Contribution and Significance

- The main contribution of this study is an analysis and investigation of state-of-the-art research on how federated learning (FL) is used to preserve client privacy.

- Furthermore, a taxonomy of FL algorithms is proposed to give data scientists an overview of this technique.

- Moreover, a complete analysis of the industrial applications that can benefit from FL implementation is presented.

- In addition, research gaps and challenges are identified and explained for future research.

- Lastly, an overview of available distributed datasets that can be used for this approach is provided.

1.3. Organization of the Article

2. Background

2.1. Iterative Learning

2.2. How FL Works

3. Systematic Review Protocol

3.1. Research Objectives (RO)

- RO1: To explore the areas that can potentially benefit from using FL techniques.

- RO2: To evaluate the practicality and feasibility of federated learning compared with centralized learning in terms of privacy, performance, availability, reliability, and accuracy.

- RO3: To describe the datasets used in different studies of federated learning and to highlight their experimental potential.

- RO4: To explore research trends in applying federated learning.

- RO5: To highlight the challenges that can arise when federated learning is employed on edge devices.

3.2. Research Questions

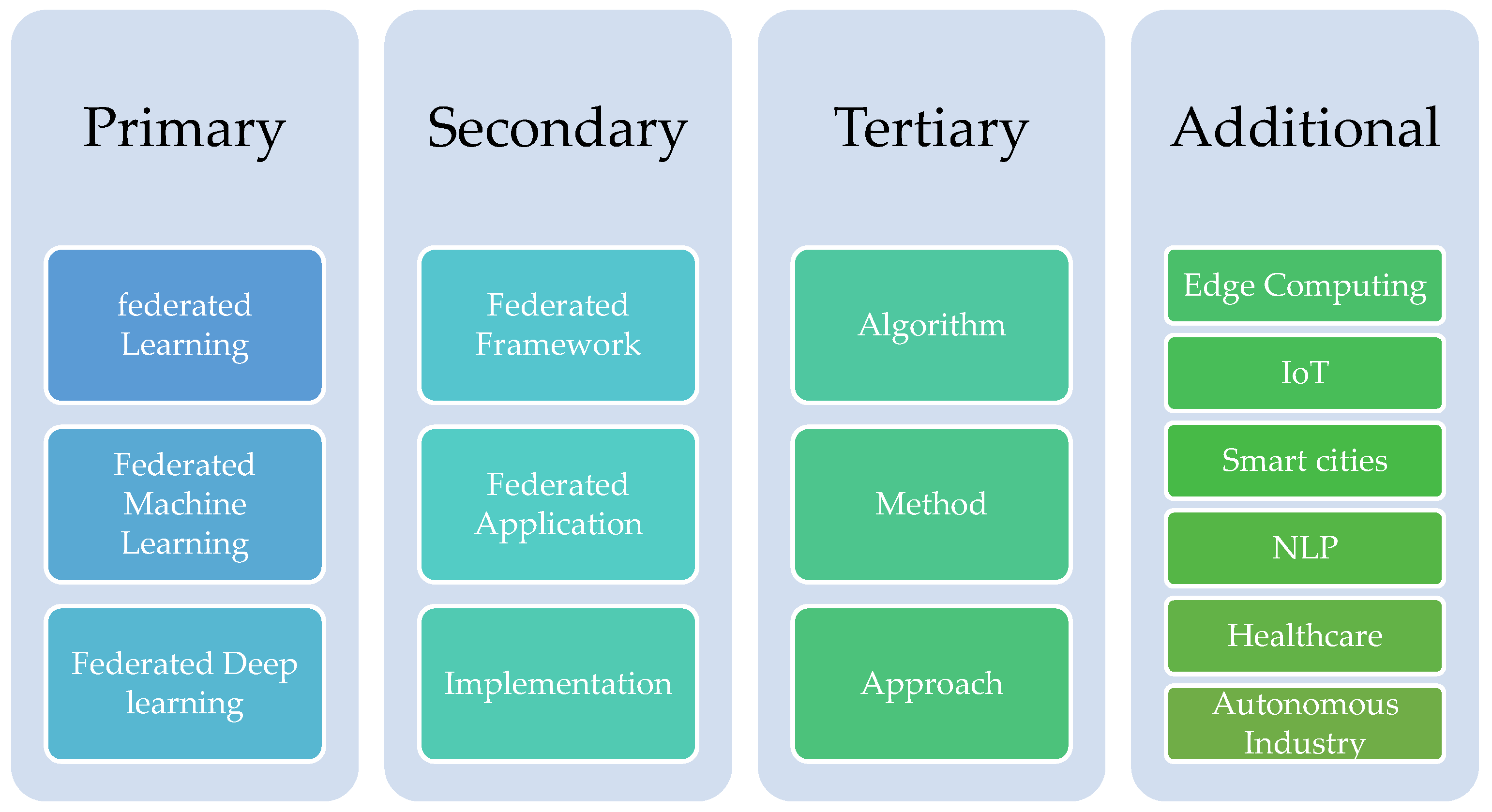

3.3. Search Strategy

3.3.1. Database

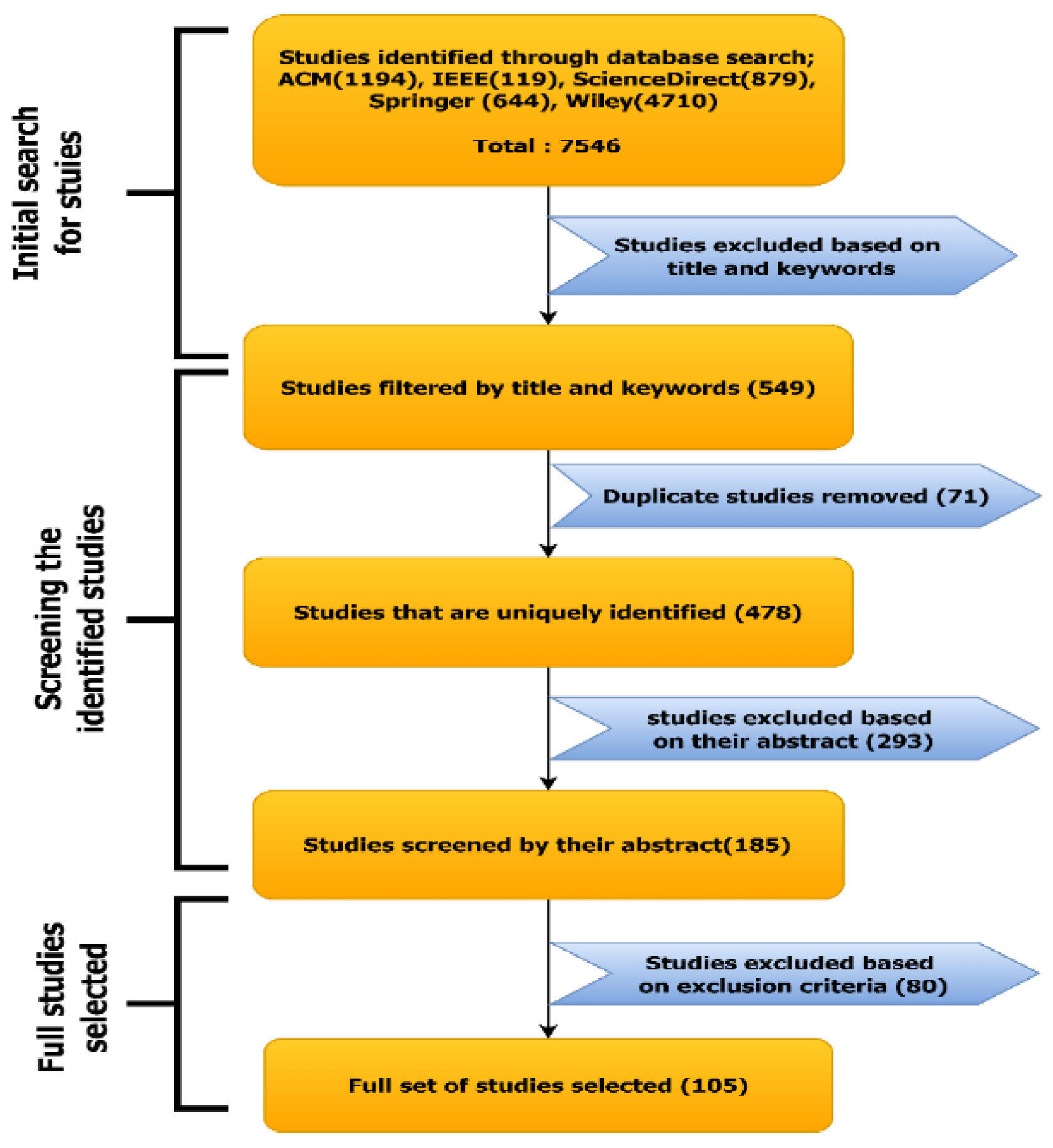

3.3.2. Study Selection

3.3.3. Data Extraction

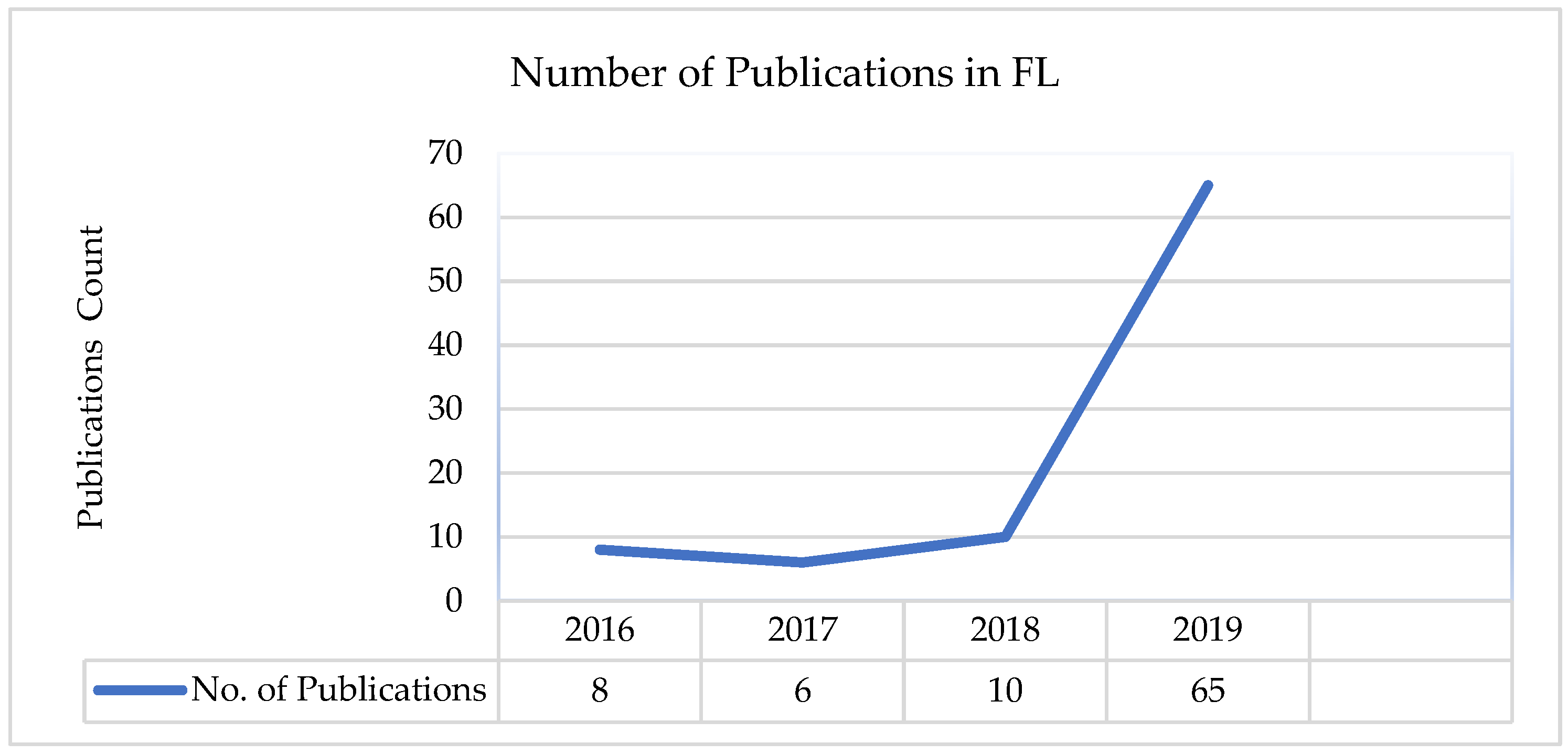

4. Systematic Review Execution

Studies Related to the Research Questions

| Sr. No | RQs | Category | Related Studies |
|---|---|---|---|
| 01 | RQ1 | Applications | [3,7,9,11,13,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44] |
| 02 | RQ2 | Algorithms and models | [3,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60] |
| 03 | RQ3 | Data sets | [61,62,63,64,65,66] |
| 04 | RQ4 | Challenges | [3,5,12,13,19,25,26,30,45,46,49,50,67,68,69,70,71,72,73] |

5. Discussions

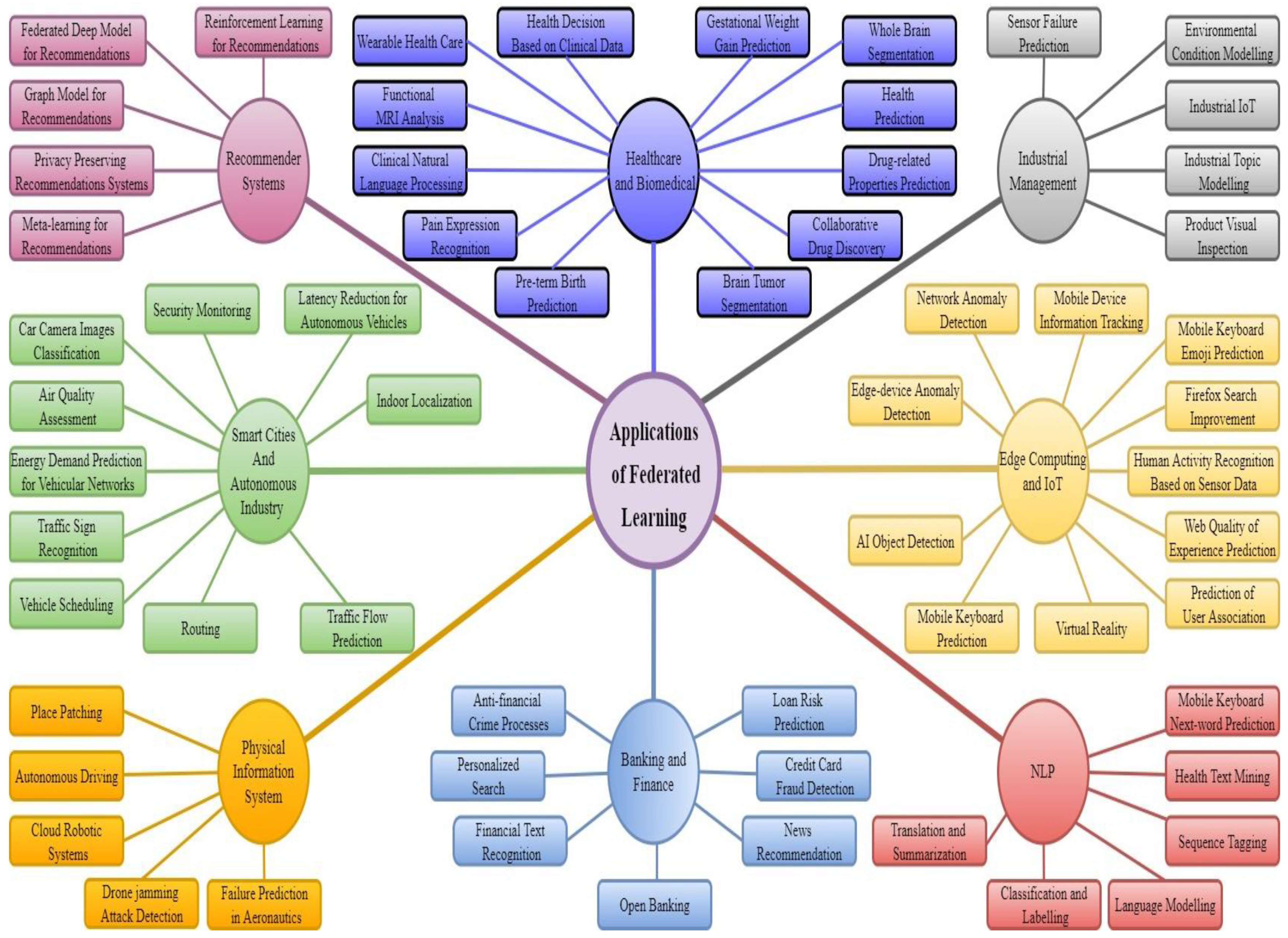

5.1. Applications of Federated Learning

5.1.1. Google Gboard

5.1.2. Healthcare

| Domain | Applications | Related Studies |
|---|---|---|
| Edge computing | FL is implemented in edge systems using MEC (mobile edge computing) and DRL (deep reinforcement learning) frameworks for anomaly and intrusion detection. | [8,22,81,82,83,84,85] |
| Recommender systems | Federated collaborative filtering methods are built on a stochastic gradient approach to learn the factor matrices, and secure matrix factorization is trained with federated SGD. | [86,87,88,89,90,91,92] |
| NLP | FL is applied to next-word prediction in mobile keyboards, using the FedAvg algorithm to train a CIFG (coupled input and forget gate) LSTM [93]. | [28,94,95,96,97] |
| IoT | FL could be one way to address data privacy concerns while still providing a reliable learning model. | [12,98,99,100] |
| Mobile service | Prediction services are trained on data coming from users' edge devices, such as mobile phones. | [28,94] |
| Biomedical | The volume of biomedical data is continually increasing; however, privacy and regulatory considerations limit the capacity to analyze these data. In the neuroimaging domain, the FL paradigm works effectively for collaboratively building a global model that predicts brain age. | [101,102,103,104,105,106,107,108] |
| Healthcare | Owkin [31] and Intel [32] are researching how FL can be leveraged to protect patients' data privacy while still using the data for better diagnosis. | [7,79,109,110,111,112,113] |
| Autonomous industry | Another important reason to use FL is that it can potentially reduce latency. Federated learning may enable autonomous vehicles to react more quickly and correctly, reducing accidents and increasing safety. It can also be used to predict traffic flow. | [9,34,114,115,116,117] |
| Banking and finance | FL is applied in open banking and finance for anti-financial-crime processes, loan risk prediction, and financial crime detection. | [77,118,119,120,121,122] |
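The recommender-systems row describes federated collaborative filtering trained with federated SGD. The pure-Python sketch below illustrates that idea under stated assumptions: a plain squared-error matrix factorization in which each client keeps its ratings and its user factor on the device and uploads only gradients for the shared item factors, which the server sums and applies. The helpers `client_step` and `server_round`, the learning rate, the L2 weight, and the toy ratings are all hypothetical choices, not the method of any cited study.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def client_step(ratings, user_vec, item_factors, lr, reg=0.01):
    """Local work on one device (hypothetical helper).

    ratings: {item_index: rating}; the ratings and user_vec never leave the device.
    Returns the updated private user factor and per-item gradients to upload.
    """
    dim = len(user_vec)
    # Gradient of 0.5*reg*||u||^2 plus, below, 0.5*err^2 for every rated item.
    user_grad = [reg * u for u in user_vec]
    item_grads = {}
    for item, rating in ratings.items():
        v = item_factors[item]
        err = rating - dot(user_vec, v)
        for j in range(dim):
            user_grad[j] -= err * v[j]
        # Gradient of 0.5*err^2 + 0.5*reg*||v||^2 with respect to this item factor.
        item_grads[item] = [-err * user_vec[j] + reg * v[j] for j in range(dim)]
    new_user = [u - lr * g for u, g in zip(user_vec, user_grad)]
    return new_user, item_grads


def server_round(clients, item_factors, lr=0.02):
    """Collect item gradients from every client and take one step on the shared item factors."""
    totals = {}
    for c in clients:
        c["user_vec"], grads = client_step(c["ratings"], c["user_vec"], item_factors, lr)
        for item, g in grads.items():
            acc = totals.setdefault(item, [0.0] * len(g))
            for j, gj in enumerate(g):
                acc[j] += gj
    return [
        [v - lr * g for v, g in zip(vec, totals.get(i, [0.0] * len(vec)))]
        for i, vec in enumerate(item_factors)
    ]


# Toy usage: 2 clients, 4 items, 2-dimensional latent factors.
rng = random.Random(0)
items = [[rng.gauss(0, 0.1) for _ in range(2)] for _ in range(4)]
clients = [
    {"ratings": {0: 5.0, 1: 3.0}, "user_vec": [rng.gauss(0, 0.1) for _ in range(2)]},
    {"ratings": {1: 4.0, 2: 1.0, 3: 2.0}, "user_vec": [rng.gauss(0, 0.1) for _ in range(2)]},
]
for _ in range(1000):
    items = server_round(clients, items)
```

This sketch adds no cryptographic protection; uploading per-item gradients still reveals which items a client rated.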

5.1.3. Autonomous Vehicles

5.2. Algorithms and Models

| Ref | Algorithms | Model Implemented | Area | Privacy Mechanism | Remarks | Applied Areas | Related Studies |
|---|---|---|---|---|---|---|---|
| [23] | Secure aggregation FL | | Practicality enhancement | CM | Privacy guarantees | State-of-the-art algorithm for aggregation of data; applicable across the field. | [128,129,130] |
| [66] | LEAF | \ | Benchmark | \ | Benchmark | Language modeling, sentiment analysis, and image classification. | [131] |
| [132] | FedML | \ | Benchmark | \ | Benchmark | Language modeling, sentiment analysis, and image classification. | [128,133,134,135,136,137,138] |
| [3] | FedAvg | Neural network | Effective algorithm | \ | SGD based | Model averaging and convergence of algorithms. | [27,71,135,139,140] |
| [141] | FedSVRG | Linear model | | | | Efficient communication and convergence. | [142] |
| [143] | FedBCD | NN | | | | Reduction of communication cost. | [144,145] |
| [69] | FedProx | \ | | | | Algorithm accuracy, convergence rate, and autonomous vehicles. | [146] |
| [147] | Agnostic FL | NN, LM | | | | Optimization problems and reduction of communication cost. | [148,149] |
| [150] | FedMA | NN | | | NN specialized | Communication efficiency, convergence rate, NLP, and image classification. | [151] |
| [152] | PFNM | NN | | | | Language modeling and image classification. | [150] |
| [153] | Tree-based FL | DT | | DP | DT-specialized | Privacy preservation. | [154] |
| [155] | SimFL | | | Hashing | | | |
| [10] | FedXGB | | | | | Algorithm accuracy and convergence rate. | [155] |
| [156] | FedForest | | | | | Privacy preservation and accuracy of algorithms. | [157] |
| [56] | SecureBoost | DT | | CM | DT-specialized | Privacy preservation, scalability, and credit risk analysis. | [158] |
| [159] | Ridge Regression FL | LM | | CM | LM-specialized | Reduction in model complexity. | [160,161] |
| [162] | PPRR | | | | | | [163] |
| [164] | Linear regression FL | | | | | Global regression and goodness-of-fit diagnosis. | [165,166] |
| [167] | Logistic regression FL | | | | | Biomedical and image classification. | [168] |
| [169] | Federated MTL | \ | | | Multi-task learning | Simulation on human activity recognition and vehicle sensors. | [170] |
| [171] | Federated meta-learning | NN | | | Meta-learning | Efficient communication, convergence, and recommender systems. | [89,171,172,173,174,175] |
| [64] | Personalized FedAvg | | | | | Efficient communication, convergence, anomaly detection, and IoT. | [84,143,171,176] |
| [177] | LFRL | | | | Reinforcement learning | Cloud robotic systems and autonomous vehicle navigation. | [178] |
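The FedAvg row ([3]) describes an SGD-based algorithm built on model averaging. The minimal pure-Python sketch below illustrates that idea under simplifying assumptions: every client participates in every round (the original algorithm samples a fraction of clients), the model is a two-parameter linear regressor, and the server weights each client's locally trained parameters by its share n_k / n of the training samples. `local_sgd`, `fedavg_round`, and the toy data are hypothetical.

```python
def local_sgd(weights, data, lr, epochs):
    """Client-side training: plain SGD on squared error for y ~ w0 * x + w1."""
    w0, w1 = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w0 * x + w1) - y
            w0 -= lr * err * x
            w1 -= lr * err
    return [w0, w1]


def fedavg_round(global_weights, client_datasets, lr=0.1, epochs=1):
    """One FedAvg round: each client trains locally starting from the global weights,
    then the server averages the results weighted by n_k / n."""
    n_total = sum(len(d) for d in client_datasets)
    new_weights = [0.0] * len(global_weights)
    for data in client_datasets:
        local = local_sgd(global_weights, data, lr, epochs)
        for j, value in enumerate(local):
            new_weights[j] += len(data) / n_total * value
    return new_weights


# Two clients holding disjoint, noise-free samples of y = 2x + 1.
clients = [
    [(x / 10, 2 * (x / 10) + 1) for x in range(0, 5)],
    [(x / 10, 2 * (x / 10) + 1) for x in range(5, 11)],
]
weights = [0.0, 0.0]
for _ in range(500):
    weights = fedavg_round(weights, clients)
```

Because both toy clients sample the same line, the averaged weights move toward [2.0, 1.0]. With heterogeneous clients whose local optima differ, several local steps can pull the average away from the optimum of the pooled loss, which is the heterogeneity problem that methods such as FedProx in the table target.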

5.3. Datasets for Federated Learning

| Dataset | No. of Data Items | No. of Clients | Details |
|---|---|---|---|
| Street-5 [61] | 956 | 26 | https://dataset.fedai.org (accessed on 11 August 2020) |
| Street-20 [61] | 956 | 20 | https://github.com/FederatedAI/FATE/tree/master/research/federated_object_detection_benchmark (accessed on 11 August 2020) |
| Shakespeare [3] | 16,068 | 715 | Dataset constructed from “The Complete Works of William Shakespeare”. |
| CIFAR-100 | 60,000 | 500 | Federated version of CIFAR-100 produced by Google [182] by randomly distributing the data among 500 nodes, each holding 100 records [59]. |
| StackOverflow [62] | 135,818,730 | 342,477 | Federated dataset built from StackOverflow data and maintained by the Google TFF [65] team. |
| Federated EMNIST | 671,585 (train) and 77,483 (test) | 3400 | Federated partition of EMNIST [66] that captures the natural heterogeneity stemming from writing style. |
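The CIFAR-100 row describes a federated split obtained by randomly distributing records over 500 nodes with 100 records each, which accounts for 50,000 records; this sketch assumes those are the 50,000 CIFAR-100 training images. The pure-Python example below shows a uniform random split of that kind. `random_partition` is a hypothetical helper, and the published federated CIFAR-100 partition may have been produced with a different, non-uniform procedure.

```python
import random


def random_partition(num_items, num_clients, items_per_client, seed=0):
    """Shuffle item indices and cut them into equal, disjoint client shards."""
    if num_clients * items_per_client > num_items:
        raise ValueError("not enough items for the requested shards")
    indices = list(range(num_items))
    random.Random(seed).shuffle(indices)
    return [
        indices[k * items_per_client:(k + 1) * items_per_client]
        for k in range(num_clients)
    ]


# 500 clients x 100 records = 50,000 indices (assumed: the training split).
shards = random_partition(num_items=50_000, num_clients=500, items_per_client=100)
```

Because each shard is a uniform sample, the resulting clients are close to IID; reproducing the label skew of naturally federated data would require a non-uniform scheme.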

5.4. Challenges and Research Scope

| Ref | Year | Research Type | Problem Area | Contribution | Related Researches |
|---|---|---|---|---|---|
| [25] | 2018 | Experimental | Statistical heterogeneity | “They demonstrated a mechanism to improve learning on non-IID data by creating a small subset of data that is globally shared over the edge nodes.” | [3,26,30,67,184,185] |
| [3] | 2017 | Experimental | Statistical and communication cost | “They experimented with a method for the FL of deep networks, relying on iterative model averaging, and conducted a detailed empirical evaluation.” | [3,25,26,67,186,187] |
| [67] | 2020 | Experimental | Convergence analysis and resource allocation | “They presented a novel algorithm for FL in wireless networks to resolve resource allocation optimization that captures the trade-off between the FL convergence.” | [3,25,26,27] |
| [49] | 2019 | Experimental | Communication cost | “They proposed a technique named CMFL, which provides client nodes with feedback regarding the tendency of global model updates.” | [45,46] |
| [45] | 2018 | Framework | Communication cost | “A framework is presented for atomic sparsification of SGD that can shorten distributed training time.” | [3,46,49,50] |
| [26] | 2019 | Experimental | Statistical heterogeneity | “They demonstrated that the accuracy degradation in FL is usually caused by imbalanced distributed training data and proposed a new approach for balancing data using [188].” | [3,25,30,67,68,69,70] |
| [50] | 2017 | Experimental | Communication efficiency | “They proposed structured updates, parametrized by fewer variables, which can reduce the communication cost by two orders of magnitude.” | [45,46,49] |
| [46] | 2018 | Numerical Experiment | Communication cost | “They analyzed SGD with k-sparsification or compression and showed that this approach converges at the same rate as vanilla SGD (when equipped with error compensation).” | [3,46,49,50] |
| [189] | 2020 | Experimental | Data bias | “They demonstrated that generative models can be used to resolve several data-related issues while still ensuring data privacy. They also explored these models by applying them to images, using a novel algorithm for differentially private federated GANs, and to text, using differentially private federated RNNs.” | [47,48] |
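Row [46] summarizes SGD with k-sparsification and error compensation. The pure-Python sketch below illustrates that idea: each worker keeps a memory of the update mass that top-k compression did not transmit and adds it back before the next compression. `MemorySGDWorker`, the quadratic test objective, k = 2 out of 10 coordinates, and the step size 0.05 are illustrative assumptions, not settings taken from [46].

```python
def top_k(vec, k):
    """Keep the k largest-magnitude entries of vec and zero the rest."""
    keep = sorted(range(len(vec)), key=lambda i: abs(vec[i]), reverse=True)[:k]
    out = [0.0] * len(vec)
    for i in keep:
        out[i] = vec[i]
    return out


class MemorySGDWorker:
    """Top-k sparsified SGD with error compensation (memory of unsent updates)."""

    def __init__(self, dim, k, lr):
        self.memory = [0.0] * dim
        self.k = k
        self.lr = lr

    def compress(self, grad):
        # Add the new scaled gradient to whatever was not transmitted earlier.
        corrected = [m + self.lr * g for m, g in zip(self.memory, grad)]
        sent = top_k(corrected, self.k)
        # Keep the untransmitted residual so later steps can send it.
        self.memory = [c - s for c, s in zip(corrected, sent)]
        return sent


# Minimize f(x) = 0.5 * ||x - target||^2 while sending only 2 of 10 coordinates per step.
target = [float(i) for i in range(1, 11)]
x = [0.0] * 10
worker = MemorySGDWorker(dim=10, k=2, lr=0.05)
for _ in range(3000):
    grad = [xi - ti for xi, ti in zip(x, target)]
    update = worker.compress(grad)
    x = [xi - ui for xi, ui in zip(x, update)]
```

Without the memory, coordinates that rarely make the top k would lose their update mass; with it, every coordinate is eventually applied, which is what lets the sparsified iterate still reach the target.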

5.4.1. Imbalanced Data

- Size imbalance: the number of samples held by each edge node differs.

- Local imbalance: also known as non-identical distribution (non-IID data), because not all nodes follow the same data distribution.

- Global imbalance: the data pooled across all nodes is class-imbalanced.
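The three kinds of imbalance above can be quantified from per-client label lists. The pure-Python sketch below is one possible way to do so, not a definition from the cited studies: it assumes size imbalance is summarized by the ratio of the largest to the smallest client sample count, local imbalance by the KL divergence of each client's label distribution from the pooled one, and global imbalance by the KL divergence of the pooled label distribution from uniform. `imbalance_report` and its output keys are hypothetical names.

```python
import math
from collections import Counter


def label_distribution(counts, classes):
    """Turn a Counter of labels into a probability vector over `classes`."""
    total = sum(counts[c] for c in classes)
    return [counts[c] / total for c in classes]


def kl_divergence(p, q):
    """KL(p || q); terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def imbalance_report(client_labels):
    """client_labels: one list of class labels per client (each list non-empty)."""
    client_counts = [Counter(labels) for labels in client_labels]
    global_counts = Counter()
    for counts in client_counts:
        global_counts.update(counts)
    classes = sorted(global_counts)
    sizes = [len(labels) for labels in client_labels]
    p_global = label_distribution(global_counts, classes)
    uniform = [1 / len(classes)] * len(classes)
    return {
        # Size imbalance: how uneven the number of samples per node is.
        "size_ratio": max(sizes) / min(sizes),
        # Local imbalance: how far each node's label mix is from the pooled mix.
        "local_kl": [kl_divergence(label_distribution(c, classes), p_global) for c in client_counts],
        # Global imbalance: how far the pooled label mix is from uniform.
        "global_kl": kl_divergence(p_global, uniform),
    }
```

For example, `imbalance_report([["a"] * 9, ["a", "b"]])` reports a size ratio of 4.5, positive local divergences for both clients, and a positive global divergence, since class "b" is rare overall.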

5.4.2. Expensive Communication

5.4.3. Systems Heterogeneity

5.4.4. Statistical Heterogeneity

5.4.5. Privacy Concerns

6. Conclusions

7. Future Directions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AST-GNN | Attention-based Spatial-Temporal Graph Neural Networks |
| ATV | Aggregated Trust Value |
| CMFL | Communication-Mitigated Federated Learning |
| CNN | Convolutional Neural Networks |
| DFNAS | Direct Federated NAS |
| DL | Deep Learning |
| DNN | Deep Neural Networks |
| DP | Differential Privacy |
| DRL | Deep Reinforcement Learning |
| DT | Decision Trees |
| FASTGNN | Federated Attention-based Spatial-Temporal Graph Neural Networks |
| FedAvg | Federated Averaging |
| FedBCD | Federated Stochastic Block Coordinate Descent |
| FederatedMTL | Federated Multi-Task Learning framework |
| FedMA | Federated Learning with Matched Averaging |
| FL | Federated Learning |
| GAN | Generative Adversarial Networks |
| HAR | Human Activity Recognition |
| IID | Independent and Identically Distributed |
| IoT | Internet of Things |
| IoV | Internet of Vehicles |
| KLD | Kullback-Leibler Divergence |
| LFRL | Lifelong Federated Reinforcement Learning |
| LM | Linear Model |
| LSTM | Long Short-Term Memory |
| MAPE | Mean Absolute Percentage Error |
| MEC | Mobile Edge Computing |
| ML | Machine Learning |
| MLP | Multilayer Perceptron |
| MSL | Multi-weight Subjective Logic |
| NAS | Neural Architecture Search |
| NLP | Natural Language Processing |
| oVML | On-Vehicle Machine Learning |
| RNN | Recurrent Neural Network |
| SGD | Stochastic Gradient Descent |
| SMC | Secure Multiparty Computation |
| SMOTE | Synthetic Minority Over-sampling Technique |
| SVM | Support Vector Machine |
| TFF | TensorFlow Federated |
| TSL | Traditional Subjective Logic |
| TTS | Text-to-Speech |
| URLLC | Ultra-Reliable Low Latency Communication |

Appendix A

| Ref. | Year | Domain | Sub-Domain | Dataset | Approach | Tools/Techniques | Contribution | Shortcoming and Future Scope |
|---|---|---|---|---|---|---|---|---|
| [191] | 2019 | Erlang programming language | FL | NA | Functional implementation of an ANN in Erlang. | Nifty, C language, Erlang language | Created ffl-erl, a framework for federated learning in the Erlang language. | Erlang incurs a performance penalty, and it needs to be explored in different FL scenarios. |
| [192] | 2019 | Image-based geolocation | FL | MNIST, CIFAR-10 | An asynchronous aggregation scheme is proposed to overcome the performance degradation caused by imposing hard data constraints on edge devices. | PyTorch, Apache Drill-based tool | The convergence rate is better than that of the synchronous approach. | The system's performance under the data constraints needs to be explored. |
| [193] | 2019 | Algorithms | FL and gossip learning | Spambase dataset, HAR dataset, Pendigits dataset | To reduce communication cost, subsampling was applied to the FL and GL approaches. | Simulations, Stunner, secure aggregation protocol | Gossip learning outperformed FL in all the given scenarios when the training data were distributed uniformly over the nodes. | Gossip learning relies on message passing and uses no cloud resources. |
| [194] | 2020 | Poison defense in FL | Generative Adversarial Network | MNIST and Fashion-MNIST | To defend against poisoning attacks, a GAN deployed on the server side regenerates users' edge training data and verifies the accuracy of each model trained on these data. A client node whose accuracy is below the threshold is identified as an attacker. | PyTorch | PDGAN uses partial-class data to reconstruct samples of the local data of each node's model. This method increased the efficiency by 3.4%, to 89.4%. | The performance of PDGAN under class-level heterogeneity needs to be investigated. |
| [195] | 2019 | Data distribution in FL | IoT | FEMNIST, MNIST | In the proposed mechanism, the multi-criteria role of each end device is processed in a prioritized fashion by leveraging a priority-aware aggregation operator. | CNN, LEAF | The mechanism achieves online adjustment of the parameters by employing a local search strategy with backtracking. | Extensive experiments on diverse datasets need to be performed to examine the benefits of this mechanism. |
| [26] | 2019 | Data distribution in FL | Edge systems | Imbalanced EMNIST, FEMNIST, and imbalanced CINIC-10 | A self-balancing FL framework called Astraea is proposed to overcome global data imbalance through data augmentation and client rescheduling. | CNN, TensorFlow Federated | Astraea alleviates local data imbalance and creates mediators that reschedule the training of participant nodes based on the KLD of their data distributions. It improved accuracy by +5.59% and +5.89% for both datasets. | Global data augmentation may be a shortcoming for FL, as this model shares data globally. |
| [81] | 2019 | Mobile edge computing | FL | NA | DRL techniques are integrated with the FL framework to optimize MEC communication and caching. | Simulator, AI chipset hardware, TensorFlow | “In-Edge AI” is claimed to reach optimal performance with low training overhead. | The applicability of this framework needs to be explored in real-world scenarios to assess its practical efficacy. |
| [196] | 2019 | IoT | Edge systems and FL | MNIST/CIFAR-10 datasets | Communication-efficient FedAvg (CE-FedAvg) is introduced; it integrates FedAvg with Adam optimization to reduce the number of rounds needed for the algorithm to converge. | Raspberry Pi, TensorFlow, ReLU, CNN | In non-IID settings, CE-FedAvg reaches a prespecified accuracy in up to 6× fewer communication rounds than FedAvg. CE-FedAvg is cost-effective, robust to aggressive compression of transferred data, and converged in up to 3× fewer iteration rounds. | The model is effective and cost-efficient; however, there is scope to add AI task management to further reduce computing cost. |
| [197] | 2018 | Human activity recognition | FL | The Heterogeneity Human Activity Recognition Dataset (2015) | A softmax regression model and a DNN model are developed separately for HAR to show that FL can achieve accuracy similar to that of centralized models. | TensorFlow, Apache Spark, and Dataproc | For the DNN, FL achieved 89% accuracy, compared with 93% for centralized training. | The models can be enhanced by integrating optimization techniques. |
| [41] | 2021 | Internet traffic classification | FL | ISCXVPN2016 dataset | An FL Internet traffic classification protocol (FLIC) is introduced to achieve accuracy comparable to a centralized DNN for Internet application identification. | TensorFlow | FLIC can classify new applications on the fly with an accuracy of 88% under non-IID traffic across clients. | The protocol needs to be explored in real-world scenarios with heterogeneous systems. |
| [125] | 2018 | Algorithms evaluation | FL | MNIST | The algorithms FedAvg, CO-OP, and Federated Stochastic Variance Reduced Gradient are executed on MNIST using both IID and non-IID partitions of the data. | Ubuntu 16.04 LTS via VirtualBox 5.2, Python, JSON | With an MLP model, FedAvg achieves the highest accuracy among these algorithms, 98%, regardless of how the data are partitioned. | The evaluation can be extended by comparing the algorithms with newly introduced benchmarks. |
| [6] | 2020 | Mobile networks | FL | MNIST | A worker selection mechanism for FL tasks is built on a reputation threshold: a worker whose reputation is below the threshold is treated as unreliable. | TensorFlow | Under lower reputation thresholds, the ATV scheme provides higher accuracy than MSL; the MSL scheme performs the same as the ATV scheme when the reputation is higher than 0.35. | Validation schemes for non-IID datasets can be developed to detect performance under poisoning attacks. |
| [96] | 2021 | NLP | FL | LJSpeech dataset | A dynamic transformer (FedDT-TTS) is proposed in which the encoder and decoder add layers dynamically, giving faster convergence with lower communication cost in FL for the TTS task; FedT-TTS and FedDT-TTS are then compared on an unbalanced dataset. | Simulations, Python | The model greatly improved the performance of transformer models in federated learning, reducing total training time by 40%. | The generalization ability of this model can be examined on diverse datasets. |
| [37] | 2021 | Traffic speed forecasting | FL | PeMS and METR-LA datasets | The FASTGNN framework for traffic speed forecasting with FL is proposed; it integrates AST-GNN for local training to protect topological information. | Simulations | FASTGNN provides performance similar to the three baseline algorithms; its MAPE is only 0.31% higher than that of STGCN. | The generalization ability of this model can be examined on diverse datasets. |
| [146] | 2021 | Autonomous vehicles | FL | MNIST, FEMNIST | FedProx is applied to computer vision and analyzed for its ability to learn an underrepresented class while balancing system accuracy. | Python, TensorFlow, CNN, rectified linear unit (ReLU) | The FedProx local optimizer achieves better accuracy with DNN implementations. | There is a trade-off between the intensity and resource allocation efforts of FedProx. |
| [7] | 2020 | Healthcare | FL | NA | Surveyed recent FL approaches for healthcare. | NA | Summarized FL challenges, namely statistical heterogeneity, system heterogeneity, and privacy, together with recent solutions. | The solutions can be described more technically for health informatics. |
| [9] | 2018 | Vehicular networks | FL | | To minimize the network-wide power consumption of vehicular users while ensuring reliability in terms of probabilistic waiting delays, a joint transmit power and resource allocation method for enabling URLLC in vehicular networks is proposed. | Manhattan mobility model | Compared with an average queue-based baseline, the method yields approximately 60% fewer VUEs with large queue lengths, without additional power consumption. | The solutions can be described more technically. |
| [61] | 2019 | Image detection | FL | Street-5, Street-20 | A non-IID image dataset containing 900+ images taken by 26 street cameras, with seven types of objects, is presented. | Object detection algorithms (YOLO and Faster R-CNN) | These datasets capture the realistic non-IID distribution problem in federated learning. | Data heterogeneity and imbalance in these public datasets should be addressed for object identification using novel FL techniques. |
| [198] | 2021 | Smart cities and IoT | FL | NA | Reviews the latest research on the applicability of FL in smart-city fields. | Survey, literature review | Summarizes current advances of FL in IoT, transportation, communications, finance, medicine, and other fields. | Detailed use-case scenarios for smart cities can be described more technically. |
| [114] | 2020 | Autonomous vehicles | FL | Data collected by the oVML. | A blockchain-based FL (BFL) scheme is designed for privacy-aware IoV, in which local oVML model updates are transferred and verified in a distributed fashion. | Simulations | BFL is communication-efficient for autonomous vehicles. | BFL needs to be explored in real-world scenarios, where its performance for IoV can be described more technically. |
| [135] | 2020 | Neural networks | FL | CIFAR-10, CINIC-10 | A computationally lightweight direct federated NAS approach, with a single-step method to search for ready-to-deploy neural network models. | FedML | The authors argue that the current practice of applying predefined neural network architectures to FL is inefficient and address it with DFNAS, which consumes less computation and communication bandwidth while reaching 92% test accuracy. | The applicability of DFNAS needs to be explored in real-world scenarios, such as text recommendation. |
| [101] | 2021 | Medical imaging | FL | NA | Reviews the latest research on FL to assess its applicability in medical imaging. | Survey | Explains how patient privacy is maintained across sites using FL. | The technical presentation of medical imaging applications can be illustrated with a specific case study. |
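The Astraea row ([26]) mentions mediators that reschedule the training of participant nodes based on the KLD of their data distributions. The pure-Python sketch below is a simplified greedy illustration of that idea, not the authors' implementation: each mediator repeatedly adds the remaining client whose label counts bring the mediator's pooled distribution closest to uniform, measured by KL divergence. `kl_to_uniform`, `assign_mediators`, and the fixed mediator size are hypothetical, and every client is assumed to hold at least one sample.

```python
import math


def kl_to_uniform(counts):
    """KL divergence between the label distribution given by `counts` and uniform."""
    total = sum(counts)
    n = len(counts)
    return sum((c / total) * math.log(c / total * n) for c in counts if c > 0)


def combined(a, b):
    """Element-wise sum of two label-count vectors."""
    return [x + y for x, y in zip(a, b)]


def assign_mediators(client_counts, clients_per_mediator):
    """Greedy grouping: each mediator keeps adding the client whose label counts
    bring the mediator's pooled distribution closest to uniform."""
    remaining = list(range(len(client_counts)))
    mediators = []
    while remaining:
        members = []
        pooled = [0] * len(client_counts[0])
        while remaining and len(members) < clients_per_mediator:
            best = min(
                remaining,
                key=lambda i: kl_to_uniform(combined(pooled, client_counts[i])),
            )
            members.append(best)
            remaining.remove(best)
            pooled = combined(pooled, client_counts[best])
        mediators.append(members)
    return mediators


# Four single-class clients over two classes; mediators hold two clients each.
groups = assign_mediators([[10, 0], [10, 0], [0, 10], [0, 10]], clients_per_mediator=2)
```

In this toy case the greedy rule pairs each class-0 client with a class-1 client, so both mediators see a balanced pooled distribution even though every individual client is completely skewed.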

References

- Reinsel, D.; Gantz, J.; Rydning, J. Data Age 2025: The Digitization of the World From Edge to Core. Int. Data Corp. 2018, 16, 28.

- Khan, L.U.; Saad, W.; Han, Z.; Hong, C.S. Dispersed Federated Learning: Vision, Taxonomy, and Future Directions. IEEE Wireless Commun. 2021, 28, 192–198.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Ji, S.; Saravirta, T.; Pan, S.; Long, G.; Walid, A. Emerging Trends in Federated Learning: From Model Fusion to Federated X Learning. arXiv 2021, arXiv:2102.12920.

- Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and Open Problems in Federated Learning. arXiv 2021, arXiv:1912.04977.

- Kang, J.; Xiong, Z.; Niyato, D.; Zou, Y.; Zhang, Y.; Guizani, M. Reliable Federated Learning for Mobile Networks. IEEE Wirel. Commun. 2020, 27, 72–80.

- Xu, J.; Wang, F.; Glicksberg, B.S.; Su, C.; Walker, P.; Bian, J. Federated Learning for Healthcare Informatics. J. Healthc. Inform. Res. 2020, 5, 1–19.

- Zhao, Y.; Zhao, J.; Jiang, L.; Tan, R.; Niyato, D. Mobile Edge Computing, Blockchain and Reputation-based Crowdsourcing IoT Federated Learning: A Secure, Decentralized and Privacy-preserving System. arXiv 2020, arXiv:1906.10893.

- Samarakoon, S.; Bennis, M.; Saad, W.; Debbah, M. Federated Learning for Ultra-Reliable Low-Latency V2V Communications. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–7.

- Liu, Y.; Ma, Z.; Liu, X.; Ma, S.; Nepal, S.; Deng, R. Boosting Privately: Privacy-Preserving Federated Extreme Boosting for Mobile Crowdsensing. arXiv 2019, arXiv:1907.10218.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated Machine Learning: Concept and Applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

- Lim, W.Y.B.; Luong, N.C.; Hoang, D.T.; Jiao, Y.; Liang, Y.-C.; Yang, Q.; Niyato, D.; Miao, C. Federated Learning in Mobile Edge Networks: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020, 22, 2031–2063.

- Niknam, S.; Dhillon, H.S.; Reed, J.H. Federated Learning for Wireless Communications: Motivation, Opportunities and Challenges. IEEE Commun. Mag. 2019, 58, 46–51.

- Zhang, C.; Xie, Y.; Bai, H.; Yu, B.; Li, W.; Gao, Y. A survey on federated learning. Knowl.-Based Syst. 2021, 216, 106775.

- Mothukuri, V.; Parizi, R.M.; Pouriyeh, S.; Huang, Y.; Dehghantanha, A.; Srivastava, G. A survey on security and privacy of federated learning. Futur. Gener. Comput. Syst. 2020, 115, 619–640.

- Hou, D.; Zhang, J.; Man, K.L.; Ma, J.; Peng, Z. A Systematic Literature Review of Blockchain-based Federated Learning: Architectures, Applications and Issues. In Proceedings of the 2021 2nd Information Communication Technologies Conference (ICTC), Nanjing, China, 7–9 May 2021; pp. 302–307.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, Methods, and Future Directions. IEEE Signal Proces. Mag. 2020, 37, 50–60.

- Bouzinis, P.S.; Diamantoulakis, P.D.; Karagiannidis, G.K. Wireless Federated Learning (WFL) for 6G Networks—Part I: Research Challenges and Future Trends. IEEE Commun. Lett. 2021, 26, 3–7.

- Soto, J.C.; Kyt, W.; Jahn, M.; Pullmann, J.; Bonino, D.; Pastrone, C.; Spirito, M. Towards a Federation of Smart City Services. In Proceedings of the International Conference on Recent Advances in Computer Systems (RACS 2015), Hail, Saudi Arabia, 30 November–1 December 2015; pp. 163–168.

- Liu, Y.; Zhang, L.; Ge, N.; Li, G. A Systematic Literature Review on Federated Learning: From A Model Quality Perspective. arXiv 2020, arXiv:2012.01973.

- Bonawitz, K.; Eichner, H.; Grieskamp, W.; Huba, D.; Ingerman, A.; Ivanov, V.; Roselander, J.; Kiddon, C.; Mazzocchi, S.; McMahan, B.; et al. Towards Federated Learning at Scale: System Design. Proc. Mach. Learn. Syst. 2019, 1, 374–388.

- Nishio, T.; Yonetani, R. Client Selection for Federated Learning with Heterogeneous Resources in Mobile Edge. In Proceedings of the ICC 2019—IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–7.

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the ACM Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191.

- Quiñones, D.; Rusu, C. How to develop usability heuristics: A systematic literature review. Comput. Stand. Interfaces 2017, 53, 89–122.

- Zhao, Y.; Li, M.; Lai, L.; Suda, N.; Civin, D.; Chandra, V. Federated Learning with Non-IID Data. arXiv 2018, arXiv:1806.00582.

- Duan, M.; Liu, D.; Chen, X.; Tan, Y.; Ren, J.; Qiao, L.; Liang, L. Astraea: Self-Balancing Federated Learning for Improving Classification Accuracy of Mobile Deep Learning Applications. In Proceedings of the 2019 IEEE 37th International Conference on Computer Design (ICCD), Abu Dhabi, United Arab Emirates, 17–20 November 2019; pp. 246–254.

- Li, X.; Huang, K.; Yang, W.; Wang, S.; Zhang, Z. On the Convergence of FedAvg on Non-IID Data. arXiv 2019, arXiv:1907.02189. [Google Scholar]

- Ramaswamy, S.; Mathews, R.; Rao, K.; Beaufays, F. Federated Learning for Emoji Prediction in a Mobile Keyboard. arXiv 2019, arXiv:1906.04329. [Google Scholar]

- Brisimi, T.S.; Chen, R.; Mela, T.; Olshevsky, A.; Paschalidis, I.C.; Shi, W. Federated learning of predictive models from federated Electronic Health Records. Int. J. Med. Inform. 2018, 112, 59–67. [Google Scholar] [CrossRef] [PubMed]

- Huang, L.; Yin, Y.; Fu, Z.; Zhang, S.; Deng, H.; Liu, D. LoAdaBoost: Loss-Based AdaBoost Federated Machine Learning on medical Data. PLoS ONE 2018, 15, e0230706. [Google Scholar]

- Federated Learning—OWKIN. Available online: https://owkin.com/federated-learning/ (accessed on 13 June 2020).

- Intel Works with University of Pennsylvania in Using Privacy-Preserving AI to Identify Brain Tumors|Intel Newsroom. Available online: https://newsroom.intel.com/news/intel-works-university-pennsylvania-using-privacy-preserving-ai-identify-brain-tumors/#gs.7wuma4 (accessed on 13 June 2020).

- Xiao, Z.; Xu, X.; Xing, H.; Song, F.; Wang, X.; Zhao, B. A federated learning system with enhanced feature extraction for human activity recognition. Knowl.-Based Syst. 2021, 229, 107338. [Google Scholar] [CrossRef]

- Qi, Y.; Hossain, M.S.; Nie, J.; Li, X. Privacy-preserving blockchain-based federated learning for traffic flow prediction. Futur. Gener. Comput. Syst. 2021, 117, 328–337. [Google Scholar] [CrossRef]

- Demertzis, K. Blockchained Federated Learning for Threat Defense. arXiv 2021, arXiv:2102.12746. [Google Scholar]

- Zhang, W.; Li, X.; Ma, H.; Luo, Z.; Li, X. Federated learning for machinery fault diagnosis with dynamic validation and self-supervision. Knowl. Based Syst. 2021, 213, 106679. [Google Scholar] [CrossRef]

- Zhang, C.; Zhang, S.; James, J.Q.; Yu, S. FASTGNN: A Topological Information Protected Federated Learning Approach for Traffic Speed Forecasting. IEEE Trans. Ind. Inform. 2021, 17, 8464–8474. [Google Scholar] [CrossRef]

- Zellinger, W.; Wieser, V.; Kumar, M.; Brunner, D.; Shepeleva, N.; Gálvez, R.; Langer, J.; Fischer, L.; Moser, B. Beyond federated learning: On confidentiality-critical machine learning applications in industry. Procedia Comput. Sci. 2019, 180, 734–743. [Google Scholar] [CrossRef]

- Huang, Z.; Liu, F.; Zou, Y. Federated Learning for Spoken Language Understanding. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 3467–3478.

- Yang, Z.; Chen, M.; Wong, K.K.; Poor, H.V.; Cui, S. Federated Learning for 6G: Applications, Challenges, and Opportunities. Engineering, 2021; in press.

- Mun, H.; Lee, Y. Internet Traffic Classification with Federated Learning. Electronics 2020, 10, 27.

- Mahmood, Z.; Jusas, V. Implementation Framework for a Blockchain-Based Federated Learning Model for Classification Problems. Symmetry 2021, 13, 1116.

- Moubayed, A.; Sharif, M.; Luccini, M.; Primak, S.; Shami, A. Water Leak Detection Survey: Challenges & Research Opportunities Using Data Fusion & Federated Learning. IEEE Access 2021, 9, 40595–40611.

- Tzinis, E.; Casebeer, J.; Wang, Z.; Smaragdis, P. Separate But Together: Unsupervised Federated Learning for Speech Enhancement from Non-IID Data. In Proceedings of the 2021 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), New Paltz, NY, USA, 17–20 October 2021; pp. 46–50.

- Wang, H.; Sievert, S.; Liu, S.; Charles, Z.; Papailiopoulos, D.; Wright, S. ATOMO: Communication-efficient learning via atomic sparsification. Adv. Neural Inf. Process. Syst. 2018, 31, 9850–9861.

- Stich, S.U.; Cordonnier, J.B.; Jaggi, M. Sparsified SGD with Memory. Adv. Neural Inf. Process. Syst. 2018, 31, 1–12.

- Nikolenko, S.I. Synthetic Data for Deep Learning; Springer: Cham, Switzerland, 2021.

- Dwork, C.; McSherry, F.; Nissim, K.; Smith, A. Calibrating Noise to Sensitivity in Private Data Analysis. J. Priv. Confid. 2017, 7, 17–51.

- Wang, L.; Wang, W.; Li, B. CMFL: Mitigating Communication Overhead for Federated Learning. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–10 July 2019; pp. 954–964.

- Konečný, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016.

- Huang, A.; Liu, Y.; Chen, T.; Zhou, Y.; Sun, Q.; Chai, H.; Yang, Q. StarFL: Hybrid Federated Learning Architecture for Smart Urban Computing. ACM Trans. Intell. Syst. Technol. 2021, 12, 1–23.

- Deng, Y.; Lyu, F.; Ren, J.; Chen, Y.-C.; Yang, P.; Zhou, Y.; Zhang, Y. FAIR: Quality-Aware Federated Learning with Precise User Incentive and Model Aggregation. In Proceedings of the IEEE INFOCOM 2021—IEEE Conference on Computer Communications, Vancouver, BC, Canada, 10–13 May 2021; pp. 1–10.

- Li, X.; Jiang, M.; Zhang, X.; Kamp, M.; Dou, Q. FedBN: Federated learning on non-IID features via local batch normalization. arXiv 2021, arXiv:2102.07623.

- Wang, S.; Lee, M.; Hosseinalipour, S.; Morabito, R.; Chiang, M.; Brinton, C.G. Device Sampling for Heterogeneous Federated Learning: Theory, Algorithms, and Implementation. In Proceedings of the IEEE INFOCOM 2021—IEEE Conference on Computer Communications, Vancouver, BC, Canada, 10–13 May 2021.

- Cheng, K.; Fan, T.; Jin, Y.; Liu, Y.; Chen, T.; Papadopoulos, D.; Yang, Q. SecureBoost: A Lossless Federated Learning Framework. IEEE Intell. Syst. 2021, 36, 87–98.

- Zeng, D.; Liang, S.; Hu, X.; Xu, Z. FedLab: A Flexible Federated Learning Framework. arXiv 2021, arXiv:2107.11621.

- Ye, C.; Cui, Y. Sample-based Federated Learning via Mini-batch SSCA. In Proceedings of the ICC 2021—IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

- Budrionis, A.; Miara, M.; Miara, P.; Wilk, S.; Bellika, J.G. Benchmarking PySyft Federated Learning Framework on MIMIC-III Dataset. IEEE Access 2021, 9, 116869–116878.

- Tan, A.Z.; Yu, H.; Cui, L.; Yang, Q. Towards Personalized Federated Learning. arXiv 2021, arXiv:2103.00710.

- Luo, J.; Wu, X.; Luo, Y.; Huang, A.; Huang, Y.; Liu, Y.; Yang, Q. Real-World Image Datasets for Federated Learning. arXiv 2019, arXiv:1910.11089.

- TensorFlow Federated. Available online: https://www.tensorflow.org/federated/api_docs/python/tff/simulation/datasets/stackoverflow/load_data (accessed on 17 August 2020).

- Reddi, S.; Charles, Z.; Zaheer, M.; Garrett, Z.; Rush, K.; Konečný, J.; Kumar, S.; McMahan, H.B. Adaptive Federated Optimization. arXiv 2020, arXiv:2003.00295.

- Jiang, Y.; Konečný, J.; Rush, K.; Kannan, S. Improving Federated Learning Personalization via Model Agnostic Meta Learning. arXiv 2019, arXiv:1909.12488.

- TensorFlow Federated. Available online: https://www.tensorflow.org/federated (accessed on 17 August 2020).

- Caldas, S.; Duddu, S.M.K.; Wu, P.; Li, T.; Konečný, J.; McMahan, H.B.; Smith, V.; Talwalkar, A. LEAF: A Benchmark for Federated Settings. arXiv 2018, arXiv:1812.01097.

- Dinh, C.T.; Tran, N.H.; Nguyen, M.N.H.; Hong, C.S.; Bao, W.; Zomaya, A.Y.; Gramoli, V. Federated Learning Over Wireless Networks: Convergence Analysis and Resource Allocation. IEEE/ACM Trans. Netw. 2020, 29, 398–409.

- Verma, D.C.; White, G.; Julier, S.; Pasteris, S.; Chakraborty, S.; Cirincione, G. Approaches to address the data skew problem in federated learning. In Proceedings of the Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications, Baltimore, MD, USA, 14–18 April 2019; p. 11006.

- Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated Optimization in Heterogeneous Networks. Proc. Mach. Learn. Syst. 2020, 2, 429–450.

- He, H.; Garcia, E.A. Learning from Imbalanced Data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

- Yang, H.; Fang, M.; Liu, J. Achieving Linear Speedup with Partial Worker Participation in Non-IID Federated Learning. arXiv 2021, arXiv:2101.11203.

- Xia, Q.; Ye, W.; Tao, Z.; Wu, J.; Li, Q. A survey of federated learning for edge computing: Research problems and solutions. High-Confidence Comput. 2021, 1, 100008.

- Qu, L.; Zhou, Y.; Liang, P.P.; Xia, Y.; Wang, F.; Fei-Fei, L.; Adeli, E.; Rubin, D. Rethinking Architecture Design for Tackling Data Heterogeneity in Federated Learning. arXiv 2021, arXiv:2106.06047.

- Pham, Q.V.; Dev, K.; Maddikunta, P.K.R.; Gadekallu, T.R.; Huynh-The, T. Fusion of Federated Learning and Industrial Internet of Things: A Survey. arXiv 2021, arXiv:2101.00798.

- Zhang, X.; Hou, H.; Fang, Z.; Wang, Z. Industrial Internet Federated Learning Driven by IoT Equipment ID and Blockchain. Wirel. Commun. Mob. Comput. 2021, 2021, 1–9.

- Federated Learning for Privacy Preservation of Healthcare Data in Internet of Medical Things–EMBS. Available online: https://www.embs.org/federated-learning-for-privacy-preservation-of-healthcare-data-in-internet-of-medical-things/ (accessed on 28 November 2021).

- Vatsalan, D.; Sehili, Z.; Christen, P.; Rahm, E. Privacy-Preserving Record Linkage for Big Data: Current Approaches and Research Challenges. In Handbook of Big Data Technologies; Springer International Publishing: Cham, Switzerland, 2017; pp. 851–895.

- Chehimi, M.; Saad, W. Quantum Federated Learning with Quantum Data. arXiv 2021, arXiv:2106.00005.

- Friedman, C.P.; Wong, A.K.; Blumenthal, D. Policy: Achieving a nationwide learning health system. Sci. Transl. Med. 2010, 2, 57cm29.

- Coronavirus (COVID-19) Events as They Happen. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/events-as-they-happen (accessed on 14 June 2020).

- Wang, X.; Han, Y.; Wang, C.; Zhao, Q.; Chen, X.; Chen, M. In-Edge AI: Intelligentizing Mobile Edge Computing, Caching and Communication by Federated Learning. IEEE Netw. 2019, 33, 156–165.

- Amiri, M.M.; Gündüz, D.; Kulkarni, S.R.; Poor, H.V. Convergence of Update Aware Device Scheduling for Federated Learning at the Wireless Edge. IEEE Trans. Wirel. Commun. 2021, 20, 3643–3658.

- Du, Z.; Wu, C.; Yoshinaga, T.; Yau, K.-L.A.; Ji, Y.; Li, J. Federated Learning for Vehicular Internet of Things: Recent Advances and Open Issues. IEEE Open J. Comput. Soc. 2020, 1, 45–61.

- Wu, Q.; He, K.; Chen, X. Personalized Federated Learning for Intelligent IoT Applications: A Cloud-Edge Based Framework. IEEE Open J. Comput. Soc. 2020, 1, 35–44.

- Lin, B.Y.; He, C.; Zeng, Z.; Wang, H.; Huang, Y.; Soltanolkotabi, M.; Xiang, R.; Avestimehr, S. FedNLP: A Research Platform for Federated Learning in Natural Language Processing. arXiv 2021, arXiv:2104.08815.

- Ammad-Ud-Din, M.; Ivannikova, E.; Khan, S.A.; Oyomno, W.; Fu, Q.; Tan, K.E.; Flanagan, A. Federated collaborative filtering for privacy-preserving personalized recommendation system. arXiv 2019, arXiv:1901.09888.

- Zhao, S.; Bharati, R.; Borcea, C.; Chen, Y. Privacy-Aware Federated Learning for Page Recommendation. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 1071–1080.

- Chai, D.; Wang, L.; Chen, K.; Yang, Q. Secure Federated Matrix Factorization. IEEE Intell. Syst. 2020, 36, 11–20.

- Li, Z.; Zhou, F.; Chen, F.; Li, H. Meta-SGD: Learning to Learn Quickly for Few-Shot Learning. arXiv 2017, arXiv:1707.09835.

- Wang, Q.; Yin, H.; Chen, T.; Yu, J.; Zhou, A.; Zhang, X. Fast-adapting and privacy-preserving federated recommender system. Very Large Data Bases J. 2021.

- Yang, L.; Tan, B.; Zheng, V.W.; Chen, K.; Yang, Q. Federated Recommendation Systems. In Federated Learning; Springer: Cham, Switzerland, 2020; Volume 12500, pp. 225–239.

- Jalalirad, A.; Scavuzzo, M.; Capota, C.; Sprague, M. A Simple and Efficient Federated Recommender System. In Proceedings of the 6th IEEE/ACM International Conference on Big Data Computing, Applications and Technologies, Auckland, New Zealand, 2–5 December 2019; pp. 53–58.

- Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A Search Space Odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2222–2232.

- Yang, T.; Andrew, G.; Eichner, H.; Sun, H.; Li, W.; Kong, N.; Beaufays, F. Applied federated learning: Improving Google keyboard query suggestions. arXiv 2018, arXiv:1812.02903.

- Basu, P.; Roy, T.S.; Naidu, R.; Muftuoglu, Z.; Singh, S.; Mireshghallah, F. Benchmarking Differential Privacy and Federated Learning for BERT Models. arXiv 2021, arXiv:2106.13973.

- Hong, Z.; Wang, J.; Qu, X.; Liu, J.; Zhao, C.; Xiao, J. Federated Learning with Dynamic Transformer for Text to Speech. arXiv 2021, arXiv:2107.08795.

- Liu, M.; Ho, S.; Wang, M.; Gao, L.; Jin, Y.; Zhang, H. Federated Learning Meets Natural Language Processing: A Survey. arXiv 2021, arXiv:2107.12603.

- Nguyen, T.D.; Marchal, S.; Miettinen, M.; Fereidooni, H.; Asokan, N.; Sadeghi, A.R. DIOT: A Federated Self-learning Anomaly Detection System for IoT. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–10 July 2019; pp. 756–767.

- Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Poor, H.V. Federated Learning for Internet of Things: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2021, 23, 1622–1658.

- Federated Learning for Internet of Things and Big Data | Hindawi. Available online: https://www.hindawi.com/journals/wcmc/si/891247/ (accessed on 28 November 2021).

- Ng, D.; Lan, X.; Yao, M.M.-S.; Chan, W.P.; Feng, M. Federated learning: A collaborative effort to achieve better medical imaging models for individual sites that have small labelled datasets. Quant. Imaging Med. Surg. 2021, 11, 852–857.

- Stripelis, D.; Ambite, J.L.; Lam, P.; Thompson, P. Scaling Neuroscience Research Using Federated Learning. In Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), Nice, France, 13–16 April 2021; pp. 1191–1195.

- Yates, T.S.; Ellis, C.T.; Turk-Browne, N.B. The promise of awake behaving infant fMRI as a deep measure of cognition. Curr. Opin. Behav. Sci. 2021, 40, 5–11.

- Połap, D.; Srivastava, G.; Yu, K. Agent architecture of an intelligent medical system based on federated learning and blockchain technology. J. Inf. Secur. Appl. 2021, 58, 102748.

- Marek, S.; Greene, D.J. Precision functional mapping of the subcortex and cerebellum. Curr. Opin. Behav. Sci. 2021, 40, 12–18.

- Smith, D.M.; Perez, D.C.; Porter, A.; Dworetsky, A.; Gratton, C. Light through the fog: Using precision fMRI data to disentangle the neural substrates of cognitive control. Curr. Opin. Behav. Sci. 2021, 40, 19–26.

- Li, X.; Gu, Y.; Dvornek, N.; Staib, L.H.; Ventola, P.; Duncan, J.S. Multi-site fMRI analysis using privacy-preserving federated learning and domain adaptation: ABIDE results. Med. Image Anal. 2020, 65, 101765.

- Can, Y.S.; Ersoy, C. Privacy-preserving Federated Deep Learning for Wearable IoT-based Biomedical Monitoring. ACM Trans. Internet Technol. 2021, 21, 1–17.

- Pharma Companies Join Forces to Train AI for Drug Discovery Collectively | BioPharmaTrend. Available online: https://www.biopharmatrend.com/post/97-pharma-companies-join-forces-to-train-ai-for-drug-discovery-collectively/ (accessed on 10 July 2020).

- Yoo, J.H.; Son, H.M.; Jeong, H.; Jang, E.-H.; Kim, A.Y.; Yu, H.Y.; Jeon, H.J.; Chung, T.-M. Personalized Federated Learning with Clustering: Non-IID Heart Rate Variability Data Application. In Proceedings of the 2021 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Korea, 20–22 October 2021; pp. 1046–1051.

- Aich, S.; Sinai, N.K.; Kumar, S.; Ali, M.; Choi, Y.R.; Joo, M.-I.; Kim, H.-C. Protecting Personal Healthcare Record Using Blockchain & Federated Learning Technologies. In Proceedings of the 2021 23rd International Conference on Advanced Communication Technology (ICACT), PyeongChang, Korea, 7–10 February 2021; pp. 109–112.

- Sarma, K.V.; Harmon, S.; Sanford, T.; Roth, H.R.; Xu, Z.; Tetreault, J.; Xu, D.; Flores, M.G.; Raman, A.G.; Kulkarni, R.; et al. Federated learning improves site performance in multicenter deep learning without data sharing. J. Am. Med. Inform. Assoc. 2021, 28, 1259–1264.

- Pfitzner, B.; Steckhan, N.; Arnrich, B. Federated Learning in a Medical Context: A Systematic Literature Review. ACM Trans. Internet Technol. 2021, 21, 1–31.

- Pokhrel, S.R.; Choi, J. Federated Learning With Blockchain for Autonomous Vehicles: Analysis and Design Challenges. IEEE Trans. Commun. 2020, 68, 4734–4746.

- Saputra, Y.M.; Hoang, D.T.; Nguyen, D.N.; Dutkiewicz, E.; Mueck, M.D.; Srikanteswara, S. Energy Demand Prediction with Federated Learning for Electric Vehicle Networks. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019; pp. 1–6.

- Savazzi, S.; Nicoli, M.; Bennis, M.; Kianoush, S.; Barbieri, L. Opportunities of Federated Learning in Connected, Cooperative, and Automated Industrial Systems. IEEE Commun. Mag. 2021, 59, 16–21.

- Xianjia, Y.; Queralta, J.P.; Heikkonen, J.; Westerlund, T. An Overview of Federated Learning at the Edge and Distributed Ledger Technologies for Robotic and Autonomous Systems. Procedia Comput. Sci. 2021, 191, 135–142.

- Federated Machine Learning in Anti-Financial Crime Processes Frequently Asked Questions. Available online: https://finreglab.org/wp-content/uploads/2020/12/FAQ-Federated-Machine-Learning-in-Anti-Financial-Crime-Processes.pdf (accessed on 24 October 2021).

- Federated Learning: The New Thing in AI/ML for Detecting Financial Crimes and Managing Risk—Morning Consult. Available online: https://morningconsult.com/opinions/federated-learning-the-new-thing-in-ai-ml-for-detecting-financial-crimes-and-managing-risk/ (accessed on 28 November 2021).

- Long, G.; Tan, Y.; Jiang, J.; Zhang, C. Federated Learning for Open Banking. In Federated Learning; Springer: Cham, Switzerland, 2020; pp. 240–254.

- Federated Machine Learning for Finance or Fintech | Techwasti. Available online: https://medium.com/techwasti/federated-machine-learning-for-fintech-b875b918c5fe (accessed on 28 November 2021).

- Federated Machine Learning for Loan Risk Prediction. Available online: https://www.infoq.com/articles/federated-machine-learning/ (accessed on 28 November 2021).

- Rieke, N.; Hancox, J.; Li, W.; Milletarì, F.; Roth, H.R.; Albarqouni, S.; Bakas, S.; Galtier, M.N.; Landman, B.A.; Maier-Hein, K.; et al. The future of digital health with federated learning. NPJ Digit. Med. 2020, 3, 1–7.

- Elbir, A.M.; Soner, B.; Coleri, S. Federated Learning for Vehicular Networks. arXiv 2020, arXiv:2006.01412.

- Nilsson, A.; Smith, S.; Ulm, G.; Gustavsson, E.; Jirstrand, M. A Performance Evaluation of Federated Learning Algorithms. In Proceedings of the Second Workshop on Distributed Infrastructures for Deep Learning, Rennes, France, 10–11 December 2018; pp. 1–8.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Han, H.; Wang, W.Y.; Mao, B.H. Borderline-SMOTE: A New Over-Sampling Method in Imbalanced Data Sets Learning. In Advances in Intelligent Computing; Springer: Cham, Switzerland, 2005; Volume 3644, pp. 878–887.

- Fereidooni, H.; Marchal, S.; Miettinen, M.; Mirhoseini, A.; Mollering, H.; Nguyen, T.D.; Rieger, P.; Sadeghi, A.-R.; Schneider, T.; Yalame, H.; et al. SAFELearn: Secure Aggregation for private FEderated Learning. In Proceedings of the 2021 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 27 May 2021; pp. 56–62.

- Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical Secure Aggregation for Federated Learning on User-Held Data. arXiv 2016, arXiv:1611.04482.

- Yang, C.S.; So, J.; He, C.; Li, S.; Yu, Q.; Avestimehr, S. LightSecAgg: Rethinking Secure Aggregation in Federated Learning. arXiv 2021, arXiv:2109.14236.

- LEAF. Available online: https://leaf.cmu.edu/ (accessed on 25 January 2022).

- He, C.; Li, S.; So, J.; Zeng, X.; Zhang, M.; Wang, H.; Shen, L.; Yang, Y.; Yang, Q.; Avestimehr, S.; et al. FedML: A Research Library and Benchmark for Federated Machine Learning. arXiv 2020, arXiv:2007.13518.

- Razmi, N.; Matthiesen, B.; Dekorsy, A.; Popovski, P. Ground-Assisted Federated Learning in LEO Satellite Constellations. IEEE Wirel. Commun. Lett. 2022.

- Huang, B.; Li, X.; Song, Z.; Yang, X. FL-NTK: A Neural Tangent Kernel-based Framework for Federated Learning Convergence Analysis. arXiv 2021, arXiv:2105.05001.

- Garg, A.; Saha, A.K.; Dutta, D. Direct Federated Neural Architecture Search. arXiv 2020, arXiv:2010.06223.

- Zhu, W.; White, A.; Luo, J. Federated Learning of Molecular Properties with Graph Neural Networks in a Heterogeneous Setting. Cell Press, 2022; under review.

- Lee, J.W.; Oh, J.; Lim, S.; Yun, S.Y.; Lee, J.G. TornadoAggregate: Accurate and Scalable Federated Learning via the Ring-Based Architecture. arXiv 2020, arXiv:2012.03214.

- Cheng, G.; Chadha, K.; Duchi, J. Fine-tuning in Federated Learning: A simple but tough-to-beat baseline. arXiv 2021, arXiv:2108.07313.

- Sahu, A.K.; Li, T.; Sanjabi, M.; Zaheer, M.; Talwalkar, A.; Smith, V. On the convergence of federated optimization in heterogeneous networks. arXiv 2018, arXiv:1812.06127.

- Federated Learning: A Simple Implementation of FedAvg (Federated Averaging) with PyTorch | by Ece Işık Polat | Towards Data Science. Available online: https://towardsdatascience.com/federated-learning-a-simple-implementation-of-fedavg-federated-averaging-with-pytorch-90187c9c9577 (accessed on 25 January 2022).

- Konečný, J.; McMahan, H.B.; Ramage, D.; Richtárik, P. Federated Optimization: Distributed Machine Learning for On-Device Intelligence. arXiv 2016, arXiv:1610.02527.

- FedSVRG Based Communication Efficient Scheme for Federated Learning in MEC Networks | Request PDF. Available online: https://www.researchgate.net/publication/352418092_FedSVRG_Based_Communication_Efficient_Scheme_for_Federated_Learning_in_MEC_Networks (accessed on 19 January 2022).

- Liu, Y.; Kang, Y.; Zhang, X.; Li, L.; Cheng, Y.; Chen, T.; Hong, M.; Yang, Q. A Communication Efficient Collaborative Learning Framework for Distributed Features. arXiv 2019, arXiv:1912.11187.

- Wu, R.; Scaglione, A.; Wai, H.T.; Karakoc, N.; Hreinsson, K.; Ma, W.K. Federated Block Coordinate Descent Scheme for Learning Global and Personalized Models. arXiv 2020, arXiv:2012.13900.

- GitHub—REIYANG/FedBCD: Federated Block Coordinate Descent (FedBCD) code for ‘Federated Block Coordinate Descent Scheme for Learning Global and Personalized Models’, Accepted by AAAI Conference on Artificial Intelligence 2021. Available online: https://github.com/REIYANG/FedBCD (accessed on 25 January 2022).

- Donevski, I.; Nielsen, J.J.; Popovski, P. On Addressing Heterogeneity in Federated Learning for Autonomous Vehicles Connected to a Drone Orchestrator. Front. Commun. Netw. 2021, 2, 28.

- Mohri, M.; Sivek, G.; Suresh, A.T. Agnostic Federated Learning. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019.

- Ro, J.; Chen, M.; Mathews, R.; Mohri, M.; Suresh, A.T. Communication-Efficient Agnostic Federated Averaging. arXiv 2021, arXiv:2104.02748.

- Afonin, A.; Karimireddy, S.P. Towards Model Agnostic Federated Learning Using Knowledge Distillation. arXiv 2021, arXiv:2110.15210.

- Wang, H.; Yurochkin, M.; Sun, Y.; Papailiopoulos, D.; Khazaeni, Y. Federated Learning with Matched Averaging. arXiv 2020, arXiv:2002.06440.

- Layer-Wise Federated Learning with FedMA—MIT-IBM Watson AI Lab. Available online: https://mitibmwatsonailab.mit.edu/research/blog/fedma-layer-wise-federated-learning-with-the-potential-to-fight-ai-bias/ (accessed on 25 January 2022).

- Golam Kibria, B.M.; Banik, S. Some Ridge Regression Estimators and Their Performances. J. Mod. Appl. Stat. Methods 2016, 15, 12.

- Zhao, L.; Ni, L.; Hu, S.; Chen, Y.; Zhou, P.; Xiao, F.; Wu, L. InPrivate Digging: Enabling Tree-based Distributed Data Mining with Differential Privacy. In Proceedings of the IEEE INFOCOM 2018—IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 2087–2095.

- Wu, Y.; Cai, S.; Xiao, X.; Chen, G.; Ooi, B.C. Privacy preserving vertical federated learning for tree-based models. Proc. VLDB Endow. 2020, 13, 2090–2103.

- Li, Q.; Wen, Z.; He, B. Practical Federated Gradient Boosting Decision Trees. Proc. Conf. AAAI Artif. Intell. 2020, 34, 4642–4649.

- Liu, Y.; Liu, Y.; Liu, Z.; Liang, Y.; Meng, C.; Zhang, J.; Zheng, Y. Federated Forest. IEEE Trans. Big Data 2020.

- Dong, T.; Li, S.; Qiu, H.; Lu, J. An Interpretable Federated Learning-based Network Intrusion Detection Framework. arXiv 2022, arXiv:2201.03134.

- Chen, Y.; Qin, X.; Wang, J.; Yu, C.; Gao, W. FedHealth: A Federated Transfer Learning Framework for Wearable Healthcare. IEEE Intell. Syst. 2020, 35, 83–93.

- Nikolaenko, V.; Weinsberg, U.; Ioannidis, S.; Joye, M.; Boneh, D.; Taft, N. Privacy-Preserving Ridge Regression on Hundreds of Millions of Records. In Proceedings of the 2013 IEEE Symposium on Security and Privacy, Berkeley, CA, USA, 19–22 May 2013; pp. 334–348.

- Yurochkin, M.; Agarwal, M.; Ghosh, S.; Greenewald, K.; Hoang, N.; Khazaeni, Y. Bayesian Nonparametric Federated Learning of Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 12583–12597.

- Kibria, B.M.G.; Lukman, A.F. A New Ridge-Type Estimator for the Linear Regression Model: Simulations and Applications. Scientifica 2020, 2020, 1–16.

- Chen, Y.-R.; Rezapour, A.; Tzeng, W.-G. Privacy-preserving ridge regression on distributed data. Inf. Sci. 2018, 451–452, 34–49.

- Awan, S.; Li, F.; Luo, B.; Liu, M. Poster: A reliable and accountable privacy-preserving federated learning framework using the blockchain. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 2561–2563.

- Sanil, A.P.; Karr, A.F.; Lin, X.; Reiter, J.P. Privacy preserving regression modelling via distributed computation. In Proceedings of the KDD-2004—Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22–25 August 2004; pp. 677–682.

- An Example of Implementing FL for Linear Regression | Download Scientific Diagram. Available online: https://www.researchgate.net/figure/An-example-of-implementing-FL-for-linear-regression_fig3_346038614 (accessed on 25 January 2022).

- Anand, A.; Dhakal, S.; Akdeniz, M.; Edwards, B.; Himayat, N. Differentially Private Coded Federated Linear Regression. In Proceedings of the 2021 IEEE Data Science and Learning Workshop (DSLW), Toronto, ON, Canada, 5–6 June 2021; pp. 1–6.

- Hardy, S.; Henecka, W.; Ivey-Law, H.; Nock, R.; Patrini, G.; Smith, G.; Thorne, B. Private federated learning on vertically partitioned data via entity resolution and additively homomorphic encryption. arXiv 2017, arXiv:1711.10677.

- Mandal, K.; Gong, G. PrivFL: Practical privacy-preserving federated regressions on high-dimensional data over mobile networks. In Proceedings of the 2019 ACM SIGSAC Conference on Cloud Computing Security Workshop, London, UK, 11 November 2019; pp. 57–68.

- Sattler, F.; Müller, K.R.; Samek, W. Clustered Federated Learning: Model-Agnostic Distributed Multi-Task Optimization under Privacy Constraints. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3710–3722.

- Marfoq, O.; Neglia, G.; Bellet, A.; Kameni, L.; Vidal, R. Federated Multi-Task Learning under a Mixture of Distributions. Adv. Neural Inf. Process. Syst. 2021, 34.

- Chen, F.; Luo, M.; Dong, Z.; Li, Z.; He, X. Federated Meta-Learning with Fast Convergence and Efficient Communication. arXiv 2018, arXiv:1802.07876.

- Zhou, F.; Wu, B.; Li, Z. Deep Meta-Learning: Learning to Learn in the Concept Space. arXiv 2018, arXiv:1802.03596.

- Lin, S.; Yang, G.; Zhang, J. A Collaborative Learning Framework via Federated Meta-Learning. In Proceedings of the 2020 IEEE 40th International Conference on Distributed Computing Systems (ICDCS), Singapore, 29 November–1 December 2020; pp. 289–299.

- Federated Meta-Learning for Recommendation—arXiv Vanity. Available online: https://www.arxiv-vanity.com/papers/1802.07876/ (accessed on 25 January 2022).

- Yue, S.; Ren, J.; Xin, J.; Zhang, D.; Zhang, Y.; Zhuang, W. Efficient Federated Meta-Learning over Multi-Access Wireless Networks. IEEE J. Sel. Areas Commun. 2022.

- Pye, S.K.; Yu, H. Personalized Federated Learning: A Combinational Approach. arXiv 2021, arXiv:2108.09618.

- Liu, B.; Wang, L.; Liu, M. Lifelong Federated Reinforcement Learning: A Learning Architecture for Navigation in Cloud Robotic Systems. IEEE Robot. Autom. Lett. 2019, 4, 4555–4562.

- Liang, X.; Liu, Y.; Chen, T.; Liu, M.; Yang, Q. Federated Transfer Reinforcement Learning for Autonomous Driving. arXiv 2019, arXiv:1910.06001.

- Wang, H.; Wu, Z.; Xing, E.P. Removing Confounding Factors Associated Weights in Deep Neural Networks Improves the Prediction Accuracy for Healthcare Applications. Biocomputing 2018, 24, 54–65.

- Basnayake, V. Federated Learning for Enhanced Sensor Reliability of Automated Wireless Networks. Master’s Thesis, University of Oulu, Oulu, Finland, 2019.

- Wan, L.; Zeiler, M.; Zhang, S.; Le Cun, Y.; Fergus, R. Regularization of Neural Networks using DropConnect. Int. Conf. Mach. Learn. 2013, 28, 1058–1066.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; University of Toronto: Toronto, ON, Canada, 2009.

- Ren, J.; Ni, W.; Nie, G.; Tian, H. Research on Resource Allocation for Efficient Federated Learning. arXiv 2021, arXiv:2104.09177.

- Luo, M.; Chen, F.; Hu, D.; Zhang, Y.; Liang, J.; Feng, J. No Fear of Heterogeneity: Classifier Calibration for Federated Learning with Non-IID Data. Adv. Neural Inf. Process. Syst. 2021, 34.

- Chen, M.; Mao, B.; Ma, T. FedSA: A staleness-aware asynchronous Federated Learning algorithm with non-IID data. Futur. Gener. Comput. Syst. 2021, 120, 1–12.

- Asad, M.; Moustafa, A.; Ito, T.; Aslam, M. Evaluating the Communication Efficiency in Federated Learning Algorithms. In Proceedings of the 2021 IEEE 24th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Dalian, China, 5–7 May 2021; pp. 552–557.

- Shahid, O.; Pouriyeh, S.; Parizi, R.M.; Sheng, Q.Z.; Srivastava, G.; Zhao, L. Communication Efficiency in Federated Learning: Achievements and Challenges. arXiv 2021, arXiv:2107.10996.

- Kullback-Leibler Divergence—An Overview. ScienceDirect Topics. Available online: https://www.sciencedirect.com/topics/engineering/kullback-leibler-divergence (accessed on 24 March 2020).

- Augenstein, S.; McMahan, H.B.; Ramage, D.; Ramaswamy, S.; Kairouz, P.; Chen, M.; Mathews, R. Generative Models for Effective ML on Private, Decentralized Datasets. arXiv 2019, arXiv:1911.06679.

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep Learning with Differential Privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

- Ulm, G.; Gustavsson, E.; Jirstrand, M. Functional Federated Learning in Erlang (ffl-erl). In International Workshop on Functional and Constraint Logic Programming; Springer: Cham, Switzerland, 2019; pp. 162–178.

- Sprague, M.R.; Jalalirad, A.; Scavuzzo, M.; Capota, C.; Neun, M.; Do, L.; Kopp, M. Asynchronous Federated Learning for Geospatial Applications. In Communications in Computer and Information Science; Springer: Cham, Switzerland, 2019; Volume 967, pp. 21–28.

- Hegedűs, I.; Danner, G.; Jelasity, M. Gossip Learning as a Decentralized Alternative to Federated Learning. In IFIP International Conference on Distributed Applications and Interoperable Systems; Springer: Cham, Switzerland, 2019; pp. 74–90.

- Zhao, Y.; Chen, J.; Zhang, J.; Wu, D.; Teng, J.; Yu, S. PDGAN: A Novel Poisoning Defense Method in Federated Learning Using Generative Adversarial Network. In Algorithms and Architectures for Parallel Processing; Springer: Cham, Switzerland, 2020; pp. 595–609.

- Anelli, V.W.; Deldjoo, Y.; Di Noia, T.; Ferrara, A. Towards Effective Device-Aware Federated Learning. In International Conference of the Italian Association for Artificial Intelligence; Springer: Cham, Switzerland, 2019; pp. 477–491.

- Mills, J.; Hu, J.; Min, G. Communication-Efficient Federated Learning for Wireless Edge Intelligence in IoT. IEEE Internet Things J. 2019, 7, 5986–5994.

- Sozinov, K.; Vlassov, V.; Girdzijauskas, S. Human Activity Recognition Using Federated Learning. In Proceedings of the 2018 IEEE International Conference on Parallel & Distributed Processing with Applications, Ubiquitous Computing & Communications, Big Data & Cloud Computing, Social Computing & Networking, Sustainable Computing & Communications (ISPA/IUCC/BDCloud/SocialCom/SustainCom), Melbourne, VIC, Australia, 11–13 December 2018; pp. 1103–1111.

- Zheng, Z.; Zhou, Y.; Sun, Y.; Wang, Z.; Liu, B.; Li, K. Federated Learning in Smart Cities: A Comprehensive Survey. arXiv 2021, arXiv:2102.01375.

| Ref | Year | Journal/Conference | Problem Area | Contribution | Related Research |
|---|---|---|---|---|---|
| [12] | 2020 | arXiv preprint arXiv:1909.11875v2 | Applications and challenges of implementing FL in edge networks | “They highlighted the challenges of FL, reviewed prevailing solutions, and discussed the applications of FL for the optimization of MEC.” | [11,13,14,15] |
| [11] | 2019 | ACM Transactions on Intelligent Systems and Technology | Survey on FL | “They presented an introductory tutorial on the classification of different FL settings, e.g., horizontal FL, vertical FL, and Federated Transfer Learning.” | [7,15,16,17] |
| [13] | 2019 | arXiv preprint arXiv:1908.06847 | Survey on implementation of FL in wireless networks | “They surveyed the use of FL to optimize resource allocation while preserving data privacy in wireless networks.” | [5,12,18,19] |
| [17] | 2019 | arXiv preprint arXiv:1908.07873 | Survey on FL approaches and their challenges | “This paper provides a detailed tutorial on Federated Learning and discusses the execution issues of FL.” | [5,12,14,17,20] |

|  | [12] | [11] | [13] | [17] | [20] | This Paper |
|---|---|---|---|---|---|---|
| Type of survey | Literature Survey | Systematic Survey | Literature Survey | Literature Survey | Systematic Literature Review | Systematic Literature Review |
| Discussion of novel algorithms | × | × | × | √ | √ | √ |
| Discussion of the applications of FL in the field of data science | √ | × | √ | √ | √ | √ |
| Discussion of FL datasets | × | × | × | × | × | √ |
| FL implementation in edge networks | √ | √ | × | × | √ | √ |
| Taxonomy of FL approaches | √ | × | × | × | √ | √ |
| Challenges and gaps in implementing FL | √ | × | × | × | √ | √ |
| Year | 2019 | 2019 | 2018 | 2019 | 2021 | 2021 |

| Sr. No | RQs | Motivation |
|---|---|---|
| RQ1 | Which types of mobile edge applications and sub-fields can benefit from FL? | Industries can obtain many benefits by deploying FL, so these areas of interest need to be identified. |
| RQ2 | Which algorithms, tools, and techniques have been implemented in edge-based applications using federated learning? | To identify how FL has been implemented at the mobile edge and what advantages its deployment brings there. |
| RQ3 | Which datasets have been used for the implementation of federated learning? | To identify the datasets available for experimentation in the field of FL. |
| RQ4 | What are the possible challenges and gaps in implementing federated learning in mobile edge networks? | The implementation of FL in different fields may face issues and challenges, which need to be discussed. |

| Sr. No | Digital Library | Link |
|---|---|---|
| 01 | ACM Digital Library | http://dl.acm.org (accessed on 27 April 2020) |
| 02 | IEEE Xplore | http://ieeexplore.ieee.org (accessed on 20 April 2020) |
| 03 | ScienceDirect | http://www.sciencedirect.com (accessed on 24 April 2020) |
| 04 | Springer Link | http://link.springer.com (accessed on 30 April 2020) |
| 05 | Wiley Online Library | http://onlinelibrary.wiley.com (accessed on 28 April 2020) |

| Criteria | ID | Description |
|---|---|---|
| Inclusion Criteria | IC1 | Papers that unambiguously examine federated learning and are accessible. |
|  | IC2 | Papers that mention and investigate the implementation approaches and applications of federated learning. |
|  | IC3 | Papers that focus on presenting research trend opportunities and the challenges of adopting federated learning. |
|  | IC4 | Papers published as technical reports or book chapters. |
|  | IC5 | Papers that focus on presenting research trend opportunities and challenges of adopting federated learning. |
| Exclusion Criteria | EC1 | Papers that have duplicate or identical titles. |
|  | EC2 | Papers that do not address federated learning as their primary subject. |
|  | EC3 | Papers that are not accessible. |
|  | EC4 | Papers in which the methodology is unclear. |
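The exclusion criteria above amount to a sequential filter over search hits. The following is a minimal sketch of that filter, assuming each hit has already been annotated by a reviewer; the `Candidate` record, its field names, the `screen` function, and the sample titles are all hypothetical. The inclusion criteria IC1 to IC5 depend on reading each paper and are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical record for one search hit; each flag is a reviewer judgment.
    title: str
    accessible: bool         # EC3: full text can be obtained
    fl_is_primary: bool      # EC2: federated learning is the main subject
    methodology_clear: bool  # EC4: the method is described clearly


def normalize(title: str) -> str:
    # Case- and whitespace-insensitive key used to detect duplicate titles (EC1).
    return " ".join(title.lower().split())


def screen(candidates: list[Candidate]) -> list[Candidate]:
    """Apply exclusion criteria EC1 to EC4 in order and return the survivors."""
    seen: set[str] = set()
    kept: list[Candidate] = []
    for paper in candidates:
        key = normalize(paper.title)
        if key in seen:                  # EC1: duplicate or identical title
            continue
        seen.add(key)
        if not paper.fl_is_primary:      # EC2: FL is not the primary subject
            continue
        if not paper.accessible:         # EC3: paper is not accessible
            continue
        if not paper.methodology_clear:  # EC4: methodology is unclear
            continue
        kept.append(paper)
    return kept


# Illustrative (made-up) hits, one excluded by each of EC1, EC2, and EC3.
papers = [
    Candidate("Federated Learning at the Edge", True, True, True),
    Candidate("federated learning  at the edge", True, True, True),
    Candidate("A Survey of Image Classification", True, False, True),
    Candidate("FL for Wireless Networks", False, True, True),
]
print([p.title for p in screen(papers)])  # ['Federated Learning at the Edge']
```

Checking the title key before any other criterion means a later copy of a paper is dropped even if the first copy was itself excluded, which matches EC1 being applied as a pure de-duplication step.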

| Library | Initial Results without Filtering | Title and Keyword Selected | Abstract Selected | Full Text Selected |
|---|---|---|---|---|
| ACM | 1194 | 158 | 43 | 22 |
| IEEE | 119 | 70 | 32 | 17 |
| ScienceDirect | 879 | 115 | 46 | 29 |
| Springer | 644 | 55 | 28 | 16 |
| Wiley | 4710 | 80 | 36 | 21 |
| Total | 7546 | 478 | 185 | 105 |
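As an arithmetic check on the search-results table, the sketch below sums each screening stage across the five libraries and computes the fraction of papers that survive each step. The counts are copied from the table; the names `STAGES`, `COUNTS`, `stage_totals`, and `retention` are illustrative only.

```python
# Per-library counts from the search-results table:
# (initial hits, title/keyword selected, abstract selected, full text selected).
STAGES = ["Initial", "Title and keyword", "Abstract", "Full text"]
COUNTS = {
    "ACM": (1194, 158, 43, 22),
    "IEEE": (119, 70, 32, 17),
    "ScienceDirect": (879, 115, 46, 29),
    "Springer": (644, 55, 28, 16),
    "Wiley": (4710, 80, 36, 21),
}


def stage_totals(counts: dict[str, tuple[int, ...]]) -> list[int]:
    """Sum each screening stage across all libraries."""
    return [sum(row[i] for row in counts.values()) for i in range(len(STAGES))]


def retention(totals: list[int]) -> list[float]:
    """Fraction of papers kept at each stage relative to the previous stage."""
    return [later / earlier for earlier, later in zip(totals, totals[1:])]


totals = stage_totals(COUNTS)
print(totals)  # [7546, 478, 185, 105]
for stage, rate in zip(STAGES[1:], retention(totals)):
    print(f"{stage}: {rate:.1%} of the previous stage")
print(f"Overall: {totals[-1] / totals[0]:.1%} of the initial hits")
```

The column sums reproduce the totals row, and the rates make the shape of the funnel explicit: about 6.3% of the initial hits pass title and keyword screening, about 38.7% of those pass abstract screening, about 56.8% of those survive full-text review, and roughly 1.4% of the initial hits are retained overall.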

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shaheen, M.; Farooq, M.S.; Umer, T.; Kim, B.-S. Applications of Federated Learning; Taxonomy, Challenges, and Research Trends. Electronics 2022, 11, 670. https://doi.org/10.3390/electronics11040670
