Enhanced Robots as Tools for Assisting Agricultural Engineering Students’ Development

Abstract

1. Introduction

2. Methodology

2.1. Educational Settings

- Professors of agricultural engineering;

- Students during their final thesis;

- Students during their internship period;

- Students during their curricular activities.

2.2. Technical Background and Content

3. Key Implementation Elements and Directions

3.1. Realistic Operations Assignment

3.2. Use of Alternative Vendors and Recycling

3.3. Simple but Robust Electromechanical Layout

3.4. Low-Level Controlling Mechanism

3.5. Provision for “Smart”, High-Level Functions

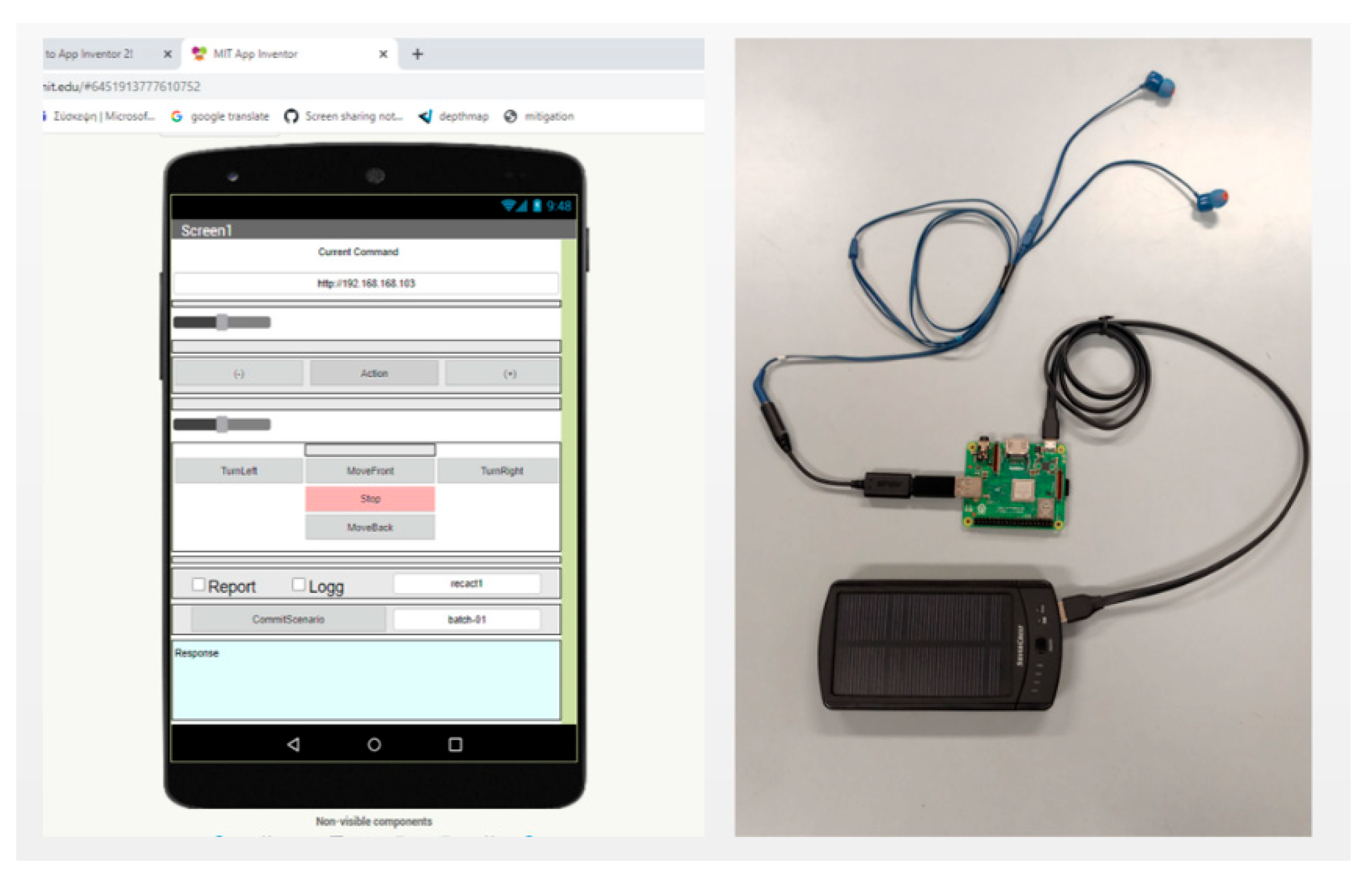

3.6. Control via Smartphones/Tablets

3.7. Voice Command Options

3.8. Machine Vision Options

3.9. Efficient Monitoring Functions

3.10. Efficient GPS Functionality

3.11. Larger Driving Circuits, Batteries, and Assistance by Solar Panels

3.12. Modularity and Reusability

3.13. COVID-19 Restrictions Considerations

3.14. Priority for Safety

3.15. Fluent Documentation and Versioning

3.16. Components’ Interoperation Overview

4. Experimentation, Results, and Evaluation

4.1. Technical Experimentation Aspect

4.2. Educational Evaluation Aspect

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Statements | Mean |
|---|---|
| The proposed application assists students to better understand hardware interconnection issues | 4.321 |
| The proposed application assists students to better understand software cooperation issues | 4.056 |
| The scaled-up version of the proposed system is suitable for farm usage | 4.135 |
| The scaled-up version of the proposed system is more attractive as an educational activity | 4.097 |
| The occasional failures during the implementation stages affect the students’ faith and cause them to lose their confidence to finish the work | 2.449 |
| The occasional failures during the implementation stages make students consider that their instructors are inadequate | 2.317 |
| The teamworking experience enhances the students’ self-esteem | 4.114 |
| The teamworking experience results in team bonding | 4.396 |
| Students’ involvement in the design and implementation stages increased their ability to compile unknown and innovative technologies | 4.340 |
| Students’ involvement in the implementation stages increased their ability to document and communicate their work | 4.471 |
| The proposed activity helps students to understand the significance of the fusion of Informatics, Networking, Robotics and Artificial Intelligence in modern Agriculture | 4.437 |
| This activity adds on the skills needed for students’ future professional career | 4.339 |
| The presented activity assists to better understand the objectives of your school or university’s curriculum | 4.373 |
| Similar activities should be added to the school or university’s curriculum | 4.547 |


| Component | Fruit Transporting | Spraying Vehicle | Total Cost (€) |
|---|---|---|---|
| Frame | √ | √ | 20 |
| Wheels | √ | √ | 20 |
| Gears/chains | √ | √ | 20 |
| Motors | √ | √ | 70 |
| Motor drivers | √ | √ | 30 |
| Fluid pumps | - | √ | 30 |
| Spraying parts | - | √ | 15 |
| Pallet bin | √ | - | 10 |
| Arduino | √ | √ | 20 |
| Raspberry | √ | √ | 45 |
| IMU | √ | √ | 40 |
| GPS | √ | √ | 50 |
| GPS (RTK) | √ | √ | 350 |
| Simple camera | √ | √ | 30 |
| Pixy2 | √ | √ | 50 |
| OAK-D | √ | √ | 300 |
| Thermal Camera | - | √ | 450 |
| ASUS stick | √ | √ | 60 |
| Access point | √ | √ | 30 |
| Wires | √ | √ | 15 |
| Batteries | √ | √ | 30 |
| Energy meter | √ | √ | 15 |
| Solar equipment | √ | √ | 50 |
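
As a rough illustration of how the component table can be read, the following minimal sketch sums the listed costs per vehicle variant. It assumes that every ticked component is fitted at the same time, which the table itself does not state; several entries (for example GPS versus GPS (RTK), or the simple camera versus Pixy2 versus OAK-D) may well be alternatives, so the figures are best treated as upper bounds. The names `COMPONENTS` and `total_cost` are hypothetical and chosen only for this example.

```python
# Component costs in euros, copied from the table above.
# Each entry: (name, cost, used by fruit transporter, used by spraying vehicle).
COMPONENTS = [
    ("Frame", 20, True, True),
    ("Wheels", 20, True, True),
    ("Gears/chains", 20, True, True),
    ("Motors", 70, True, True),
    ("Motor drivers", 30, True, True),
    ("Fluid pumps", 30, False, True),
    ("Spraying parts", 15, False, True),
    ("Pallet bin", 10, True, False),
    ("Arduino", 20, True, True),
    ("Raspberry", 45, True, True),
    ("IMU", 40, True, True),
    ("GPS", 50, True, True),
    ("GPS (RTK)", 350, True, True),
    ("Simple camera", 30, True, True),
    ("Pixy2", 50, True, True),
    ("OAK-D", 300, True, True),
    ("Thermal Camera", 450, False, True),
    ("ASUS stick", 60, True, True),
    ("Access point", 30, True, True),
    ("Wires", 15, True, True),
    ("Batteries", 30, True, True),
    ("Energy meter", 15, True, True),
    ("Solar equipment", 50, True, True),
]


def total_cost(components, variant):
    """Sum the cost of every component ticked for the given variant.

    Assumption: all ticked components are installed together.
    """
    column = {"fruit": 2, "spraying": 3}[variant]
    return sum(row[1] for row in components if row[column])


totals = {variant: total_cost(COMPONENTS, variant) for variant in ("fruit", "spraying")}
print(totals)  # {'fruit': 1255, 'spraying': 1740}
```

Under that all-options-fitted assumption, most of the difference between the two variants comes from the thermal camera, and the RTK GPS, OAK-D, and thermal camera together account for the bulk of either total.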

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Loukatos, D.; Kondoyanni, M.; Kyrtopoulos, I.-V.; Arvanitis, K.G. Enhanced Robots as Tools for Assisting Agricultural Engineering Students’ Development. Electronics 2022, 11, 755. https://doi.org/10.3390/electronics11050755
