Computational Simulation of an Agricultural Robotic Rover for Weed Control and Fallen Fruit Collection—Algorithms for Image Detection and Recognition and Systems Control, Regulation, and Command

Abstract

1. Introduction

- Very low development cost: until materials or hardware are purchased, the only investment is intellectual;

- Possibility to test different scenarios and hypotheses when developing and validating software or algorithms, without any physical hardware;

- Greater freedom and creativity, since there is no risk of damaging hardware;

- Ability to run many iterations quickly, whereas in a real scenario the system and its environment would have to be prepared for each run;

- Flexibility: sensors can be adapted or swapped to improve results;

- Possibility to test several hypotheses simultaneously, with several simulations running in parallel.

- A new control algorithm, based on an image recognition technique, was developed for a robotic rover performing two agricultural maintenance tasks (localized spraying and fallen fruit collection);

- Implementation of the control algorithm and simulation of the tasks in robotic simulation software, keeping costs low;

- Validation of the control algorithm in two case studies (localized spraying and fallen fruit collection) using several operating scenarios created in an orchard environment;

- Algorithm performance was evaluated extensively in different tests; the results showed a high success rate and good precision, which supports generalizing its applications.
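The contribution list describes the controller only at a high level. As an illustration, here is a minimal sketch of a detect, stop, and act cycle written as a small state machine. It assumes a hypothetical per-frame `Detection` result, hypothetical detection and action zones, and hypothetical cruise and approach speeds (kept below the 1.4 m/s maximum in the specification table); it is a generic pattern, not the authors' algorithm.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum, auto

# Hypothetical speeds (m/s); the only source value is the 1.4 m/s maximum.
CRUISE_SPEED = 1.0
APPROACH_SPEED = 0.3


class State(Enum):
    CRUISE = auto()    # no target in view: drive along the row
    APPROACH = auto()  # target detected ahead: slow down
    ACT = auto()       # target in the action zone: stopped, tool working


@dataclass
class Detection:
    """Hypothetical per-frame output of the image-recognition step."""
    found: bool
    in_action_zone: bool = False


def step(state: State, det: Detection, tool_busy: bool) -> tuple[State, float, bool]:
    """One control tick. Returns (next_state, speed_m_s, start_tool)."""
    if state is State.ACT:
        if tool_busy:
            return State.ACT, 0.0, False  # stay stopped until picking/spraying ends
        # Task finished. Assumes the detector no longer reports the handled
        # target (e.g. the fruit was picked); otherwise it would be re-triggered.
        return State.CRUISE, CRUISE_SPEED, False
    if det.found and det.in_action_zone:
        return State.ACT, 0.0, True  # stop and start the gripper or sprayer once
    if det.found:
        return State.APPROACH, APPROACH_SPEED, False
    return State.CRUISE, CRUISE_SPEED, False


if __name__ == "__main__":
    frames = [Detection(False), Detection(True), Detection(True, True), Detection(False)]
    state = State.CRUISE
    for det in frames:
        state, speed, start_tool = step(state, det, tool_busy=False)
        print(state.name, speed, start_tool)
```

Keeping `step` a pure function of the current state and detection makes each transition easy to test in isolation, independently of the simulator.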

2. Materials and Methods

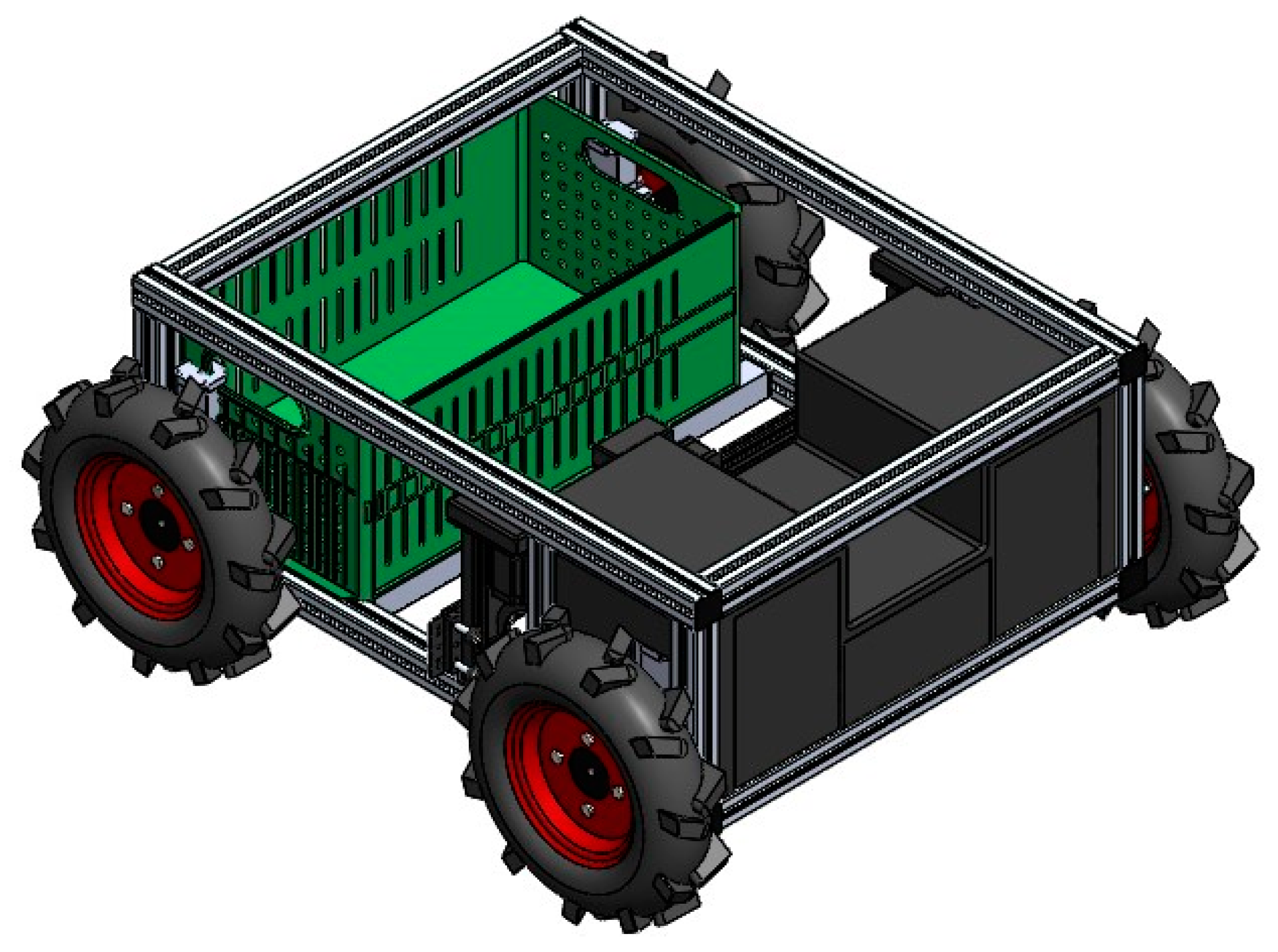

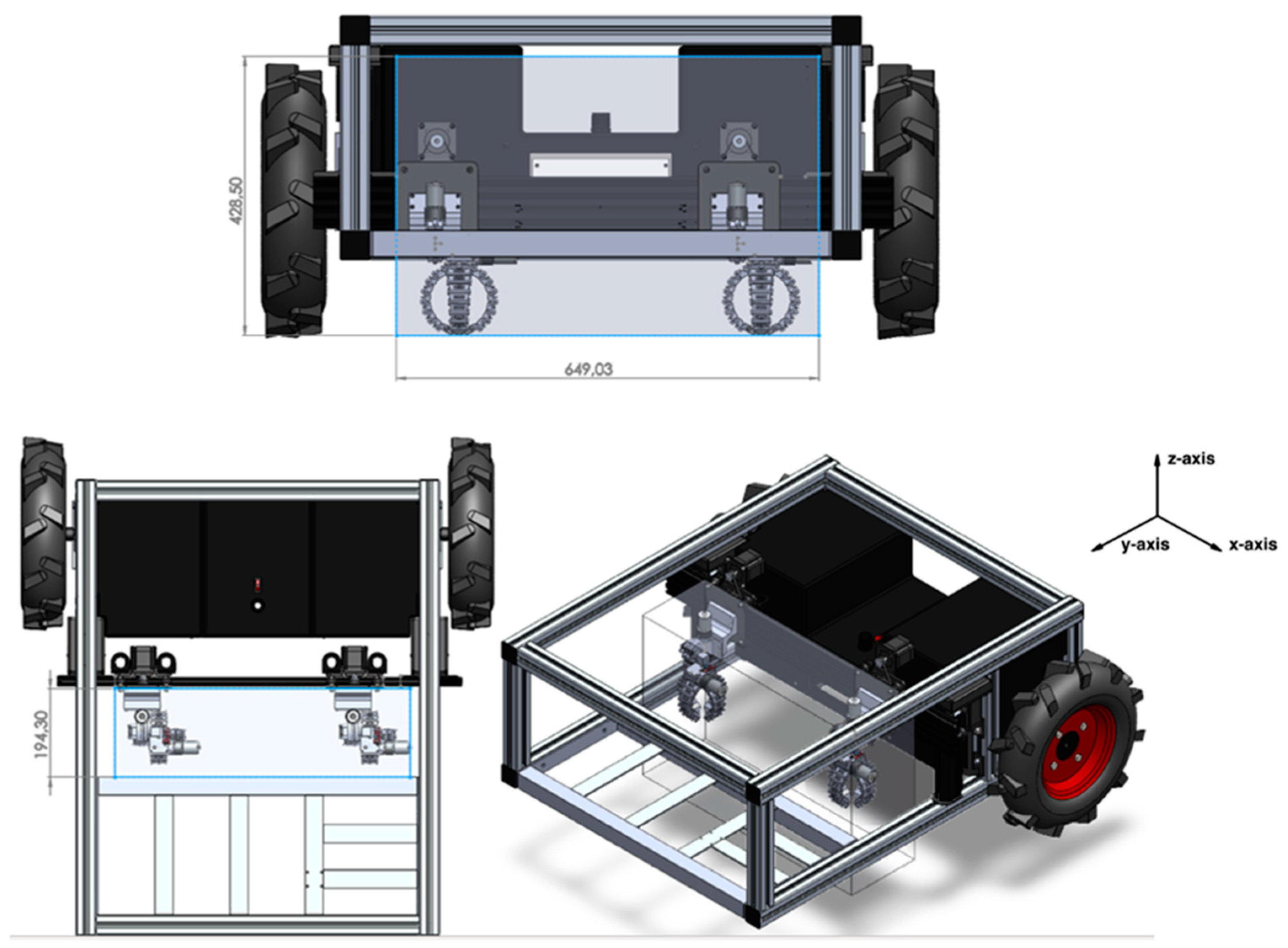

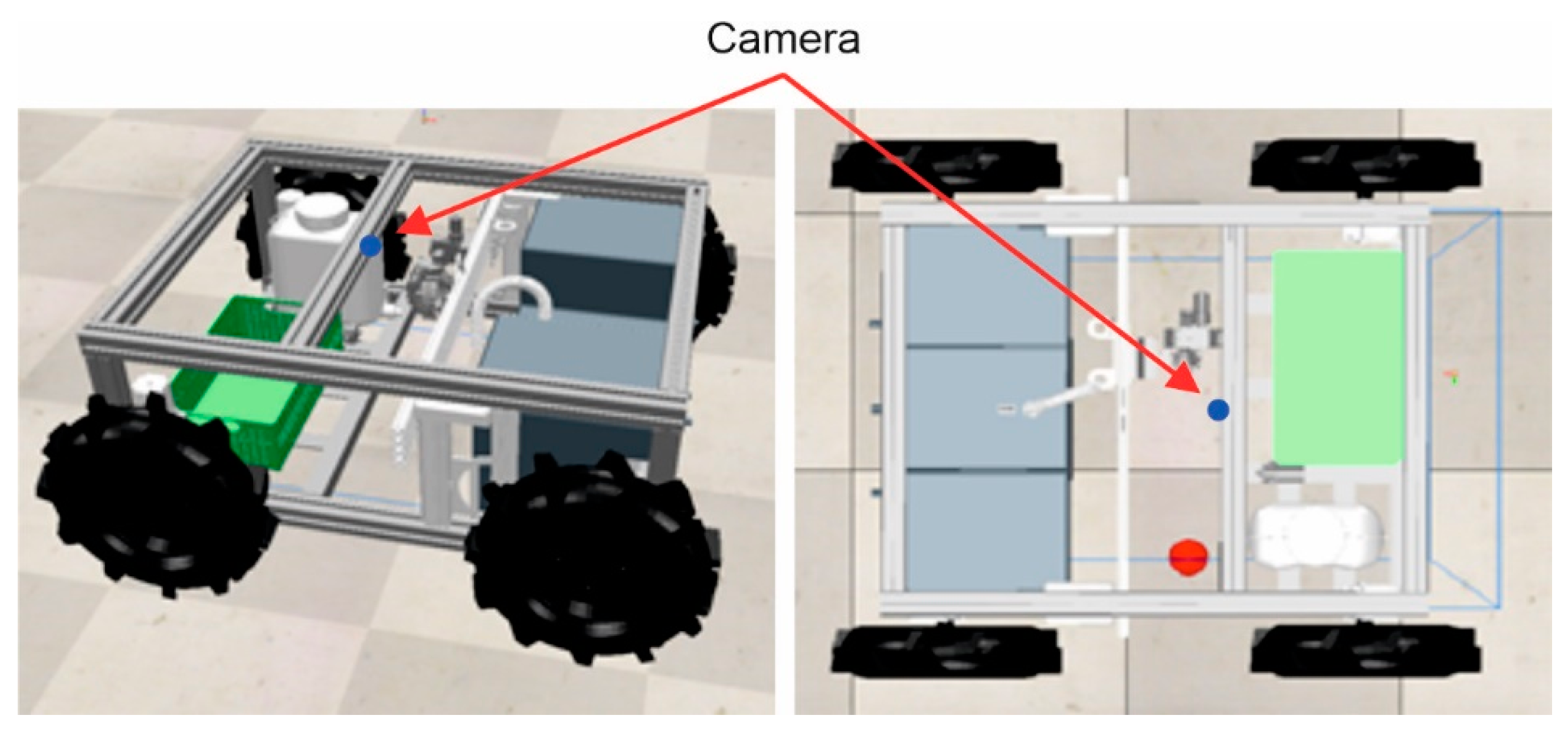

2.1. Robotic Rover

2.2. CoppeliaSim Simulator

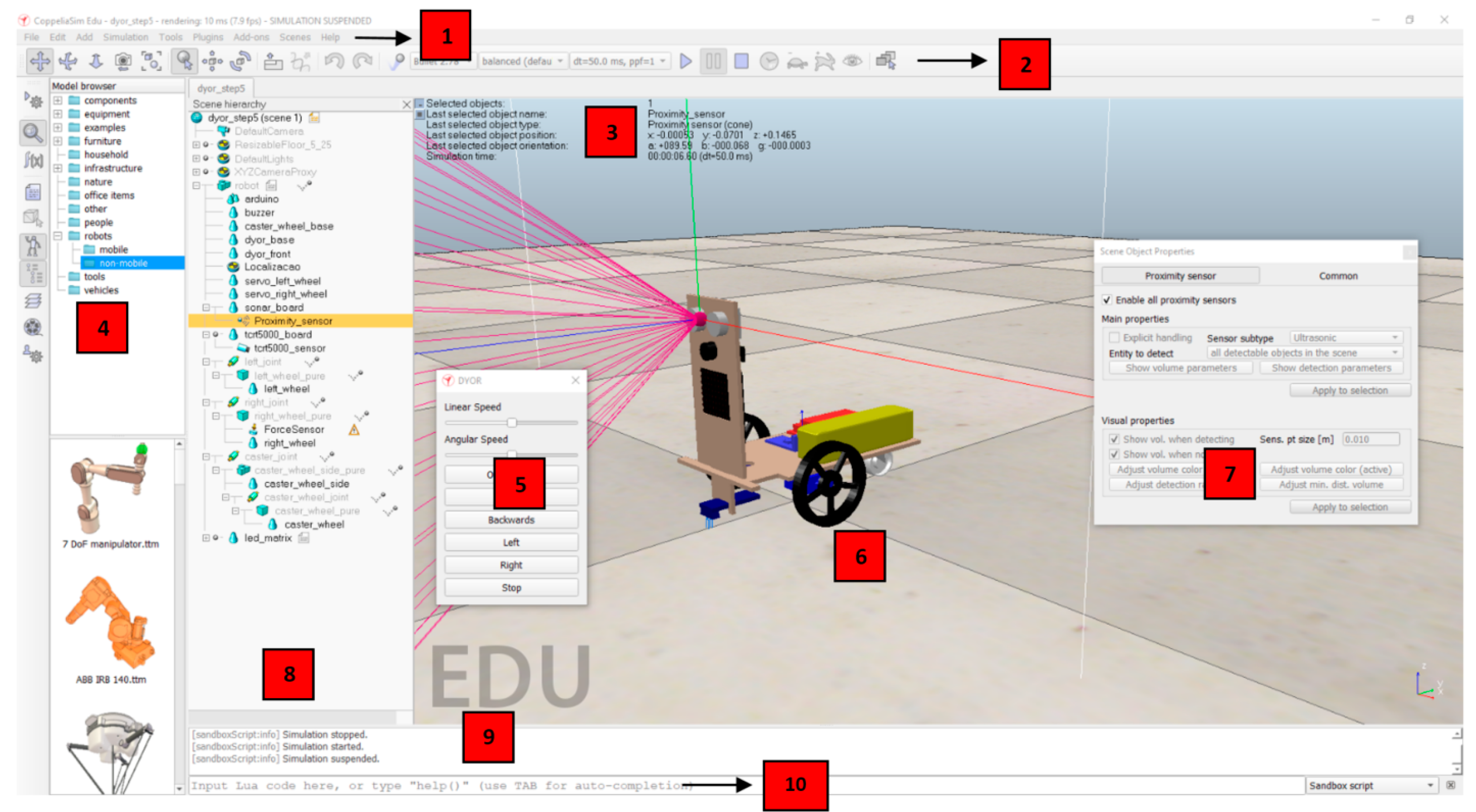

2.2.1. CoppeliaSim’s Graphical Interface

- Menu bar (1): Gives access to the simulator features that, unlike the most commonly used ones, cannot be reached through models, pop-up menus, or toolbars;

- Toolbars (2): Provide convenient access to the most used and essential functions for interacting with the simulator;

- Informative Text (3): Shows information about the currently selected object and about simulation parameters and states;

- Model Browser (4): The top part shows the CoppeliaSim model folders; the bottom part shows thumbnails of the models, which can be added to the scene by drag and drop;

- Dialog Boxes (5): Appear during interaction with the main window and allow various parameters of the models or the scene to be edited;

- Scene (6): Displays the graphical part of the simulation, that is, the final result of what was created and programmed;

- Customized User Interface (7): A window, available on request for each object, through which the components inserted in the “scene” can be configured quickly;

- Scene Hierarchy (8): Lists the entire content of a scene, that is, all the objects that compose it. Because objects are organized hierarchically, the scene is shown as a tree; double-clicking an object's name opens the “Customized User Interface”, where the object can be changed. Parent-child relationships between objects are also created here by drag and drop (child objects are dragged onto the parent object);

- Status Bar (9): Displays information about operations, commands, and error messages; the user can also print strings to it from a script;

- Command Line (10): Used to enter and execute Lua code.

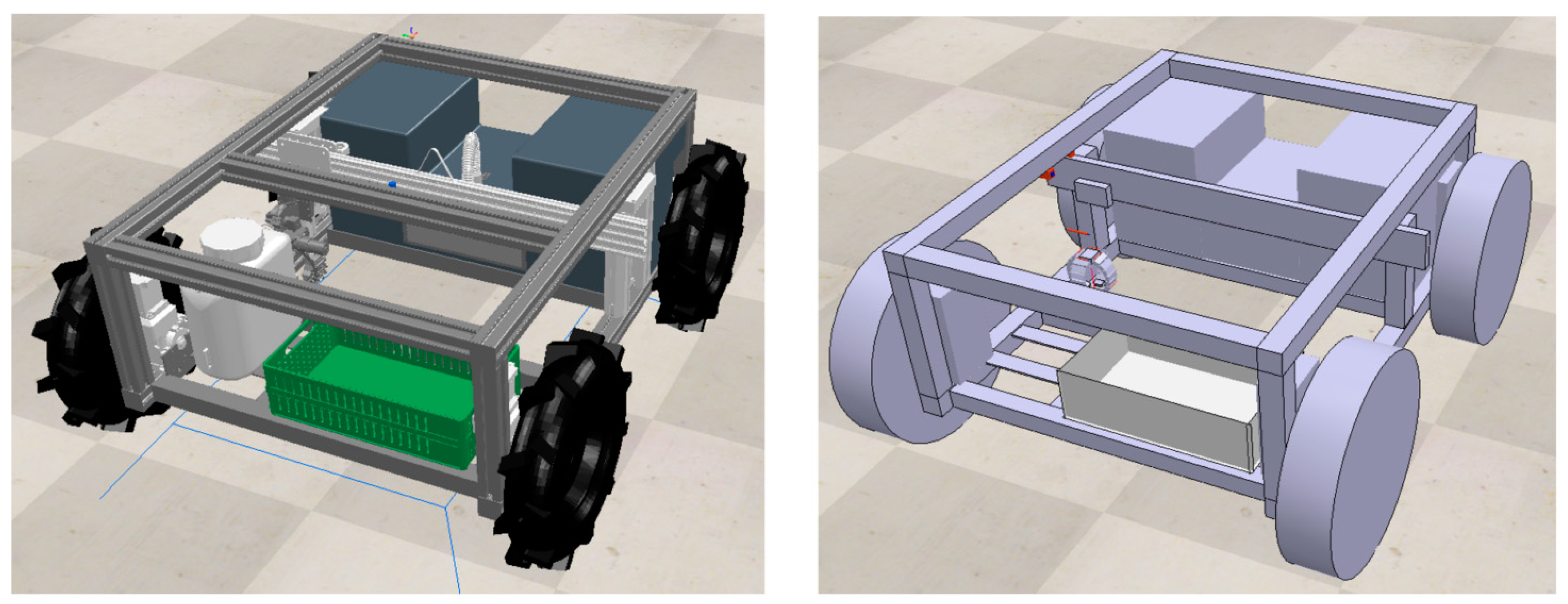

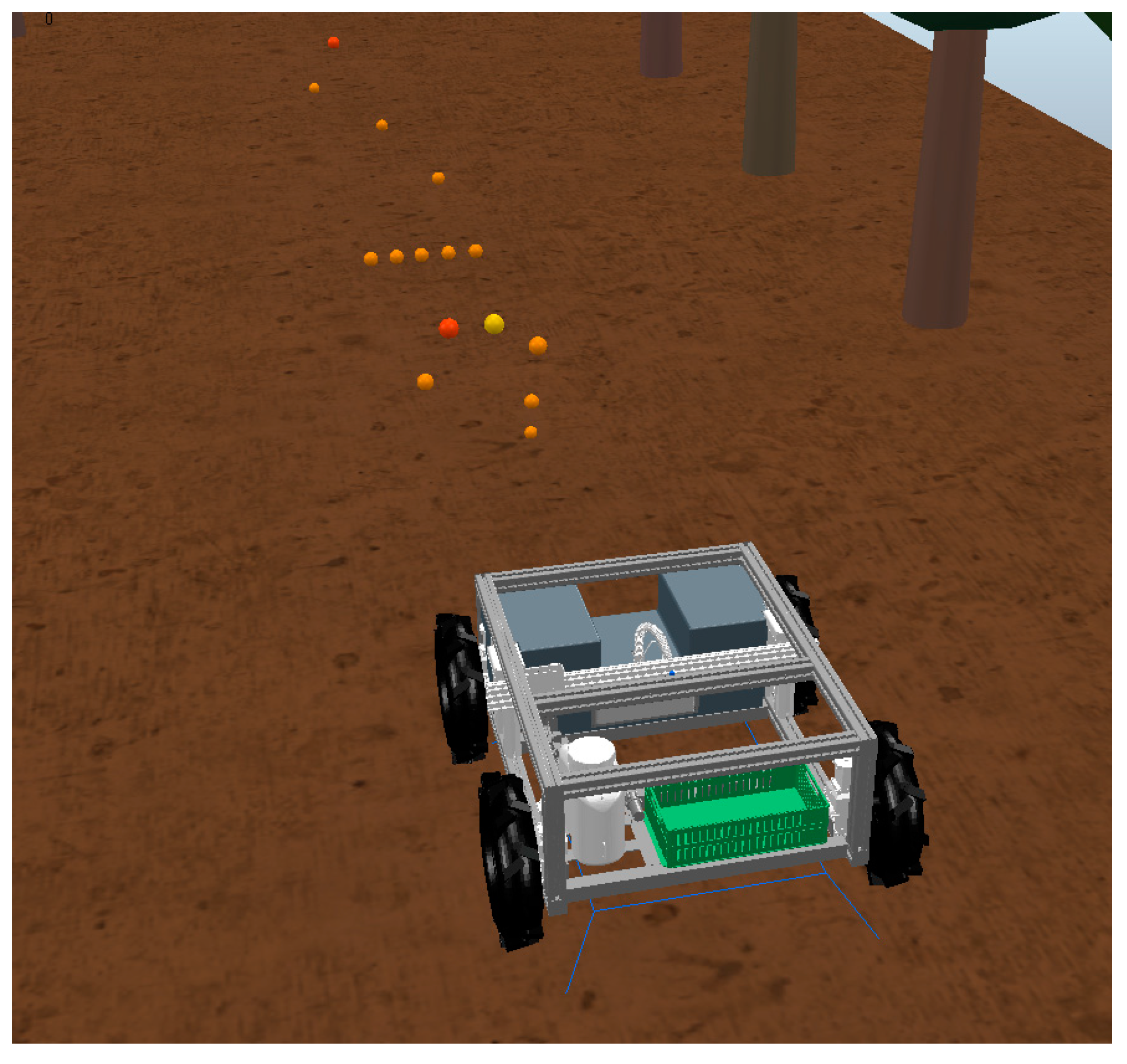

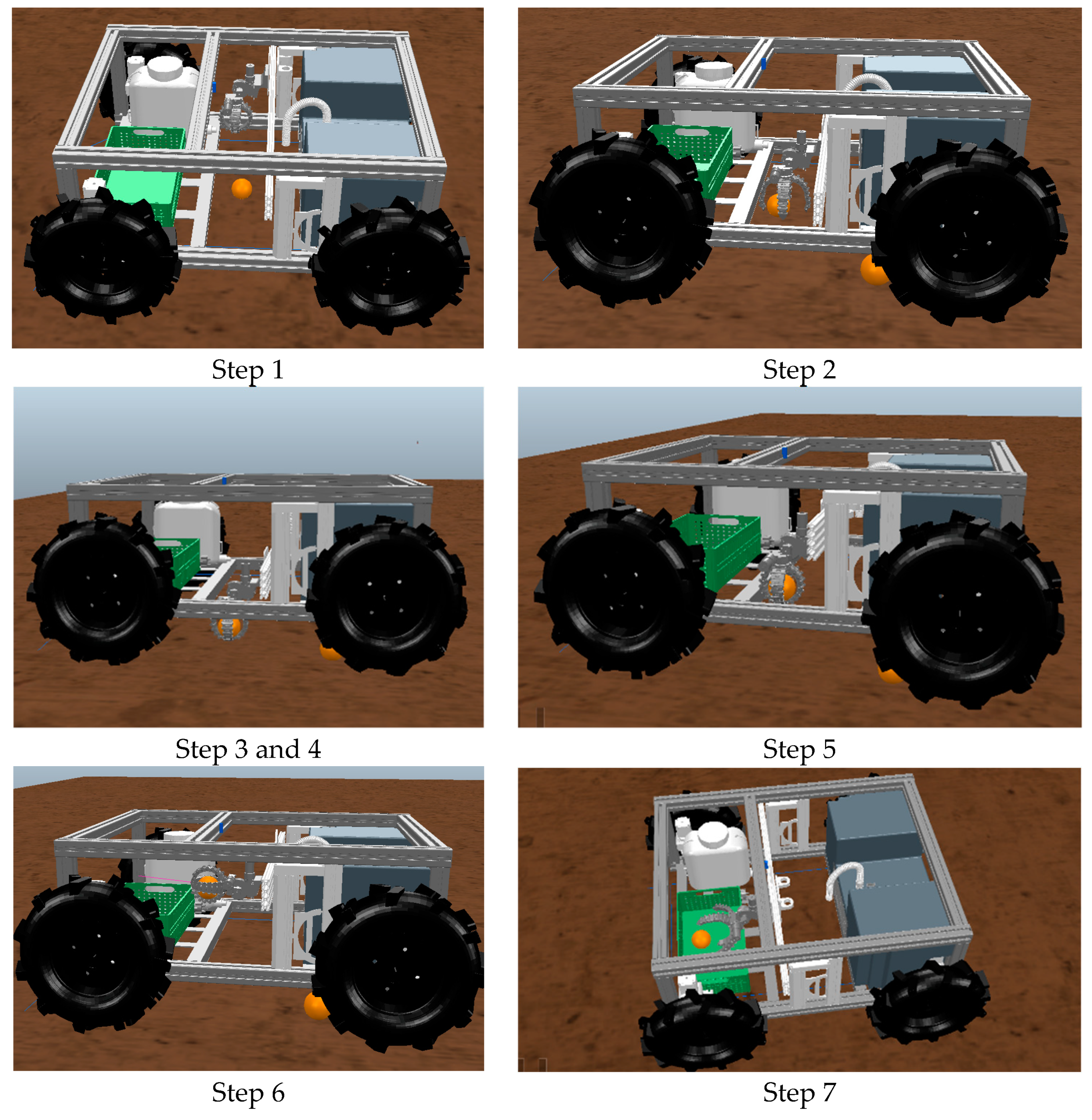

2.2.2. Robotic Platform Creation

2.2.3. Inserting a Video Camera

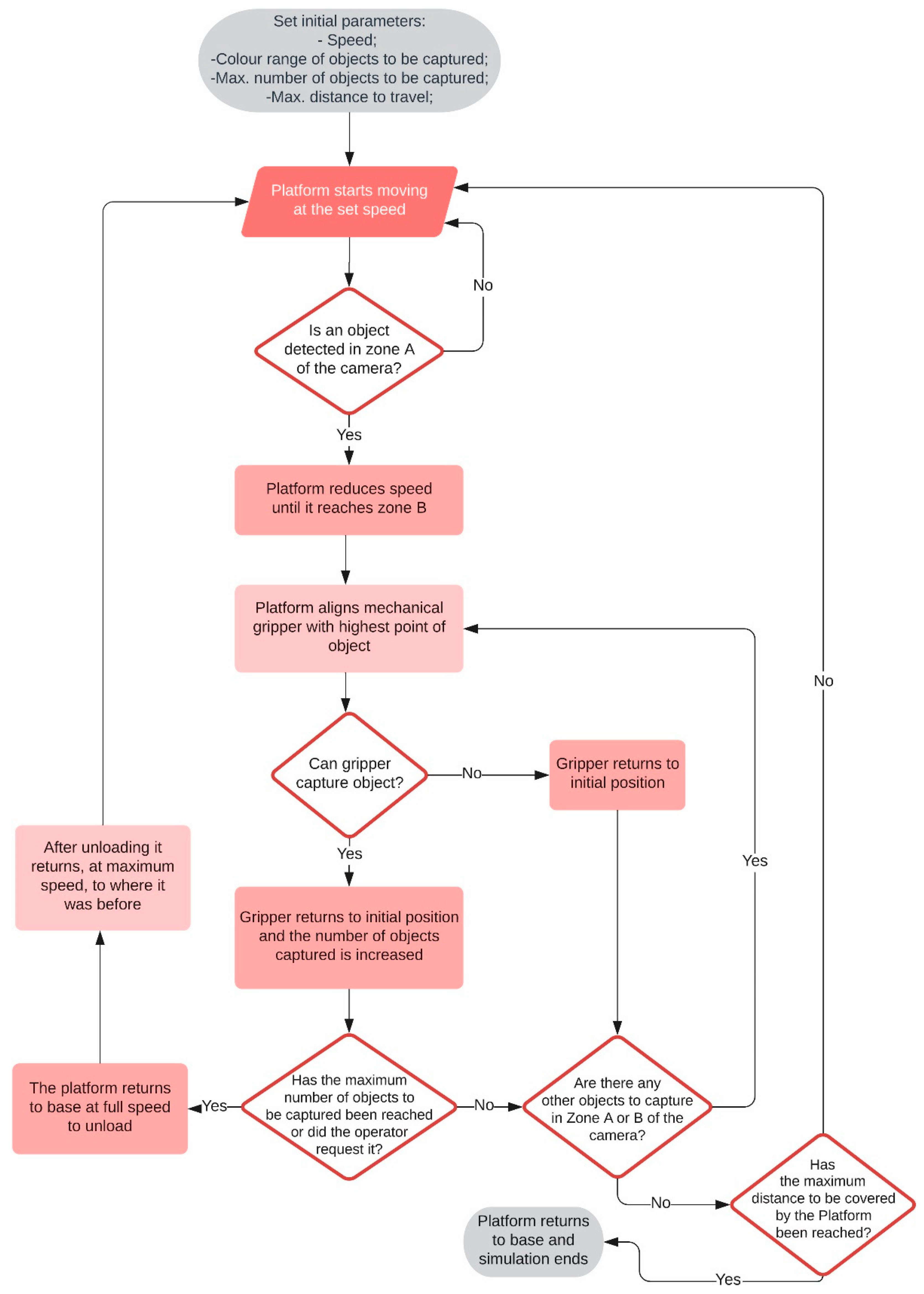

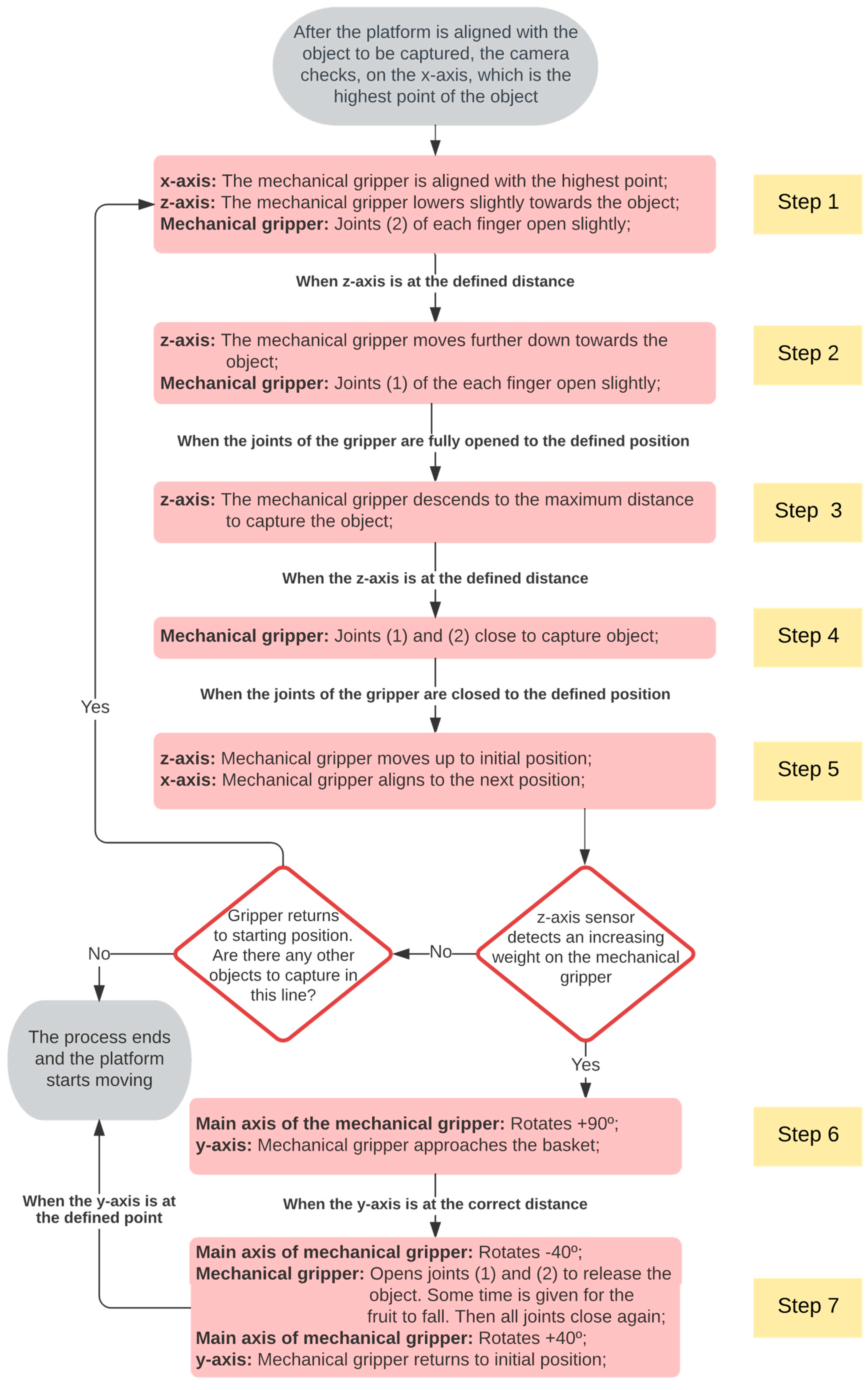

2.3. Proposed Algorithm

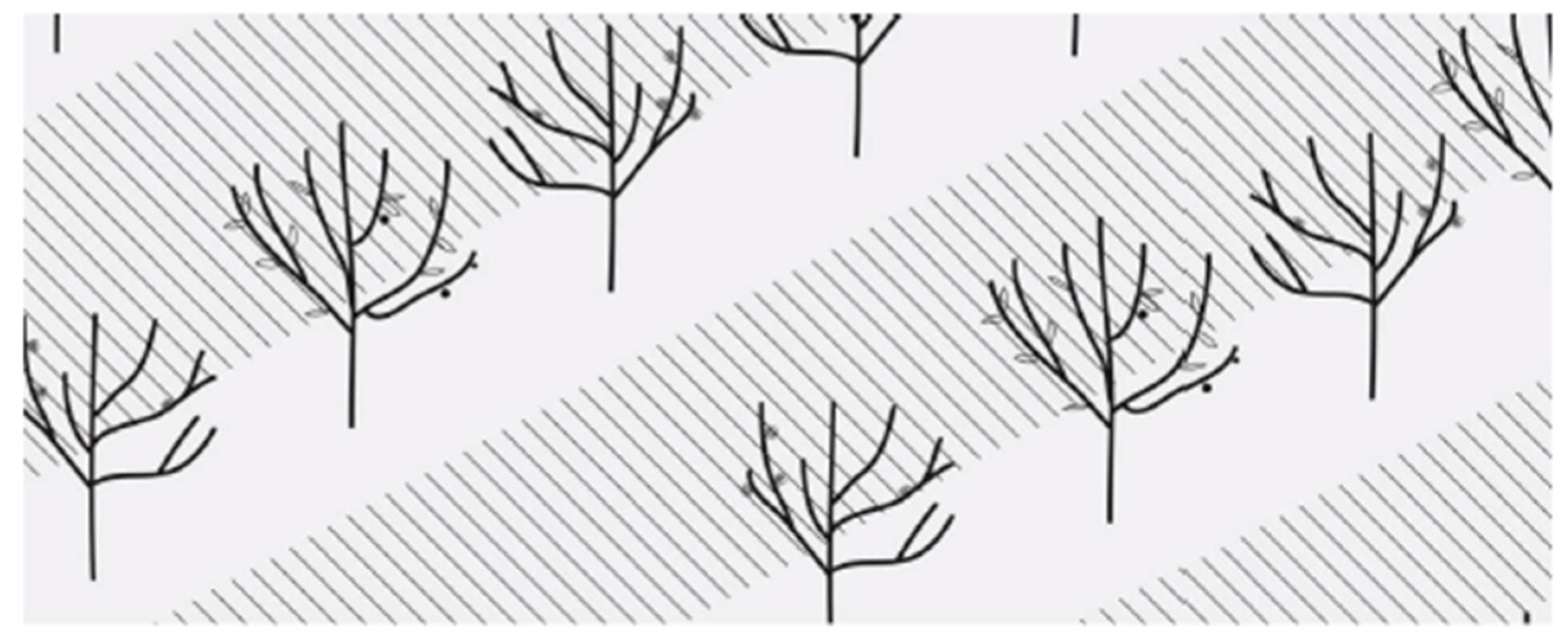

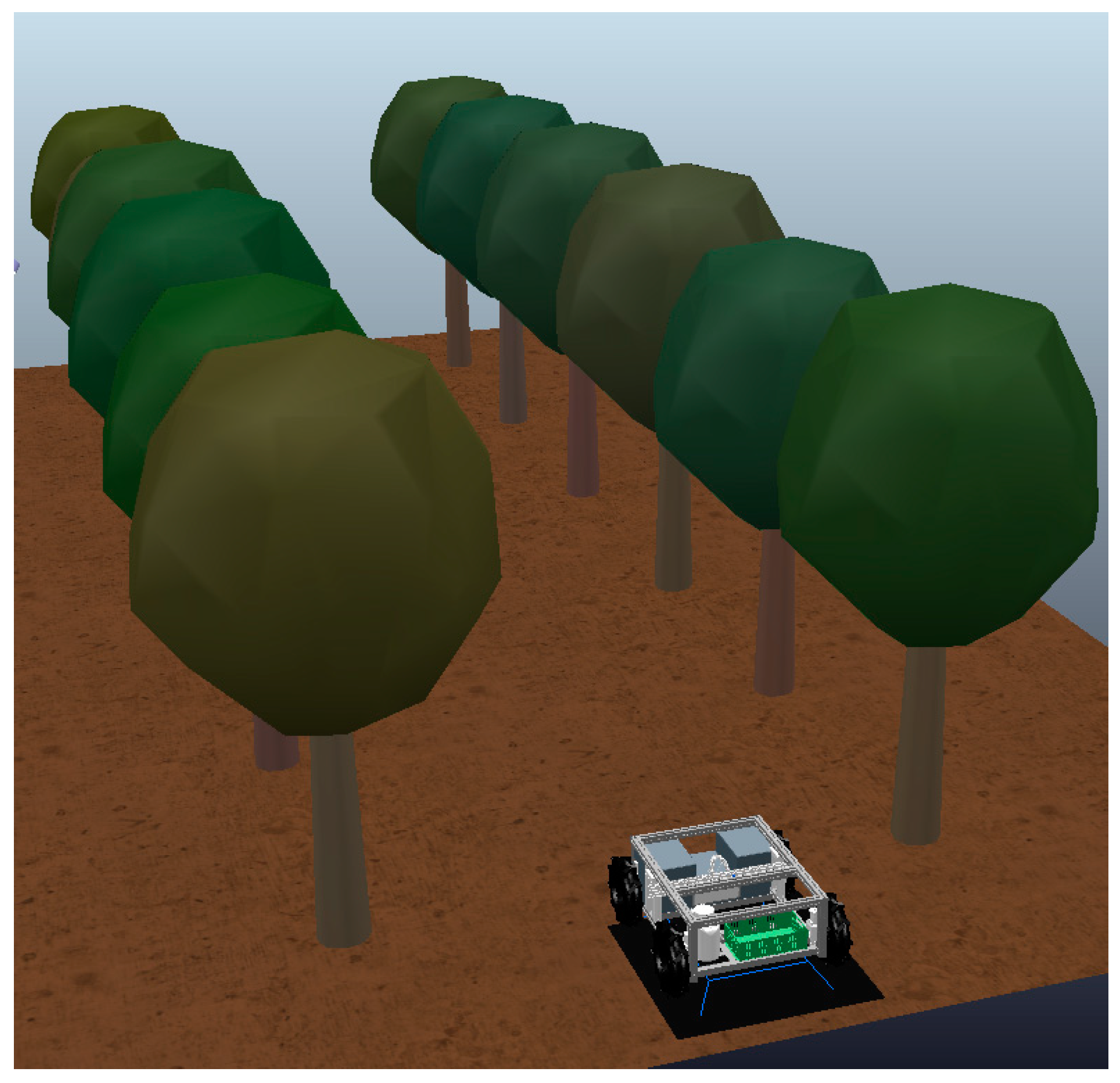

2.4. Recreating a 3D Orchard Environment

3. Results Analysis and Discussion

3.1. Rover Behavior

3.1.1. Operating Speed

3.1.2. Image Processing

3.1.3. Operation Time

3.1.4. Physical Simulation Engine

3.1.5. Differentiation of Colors in Image Pixels

3.1.6. Size of the Objects to Be Captured

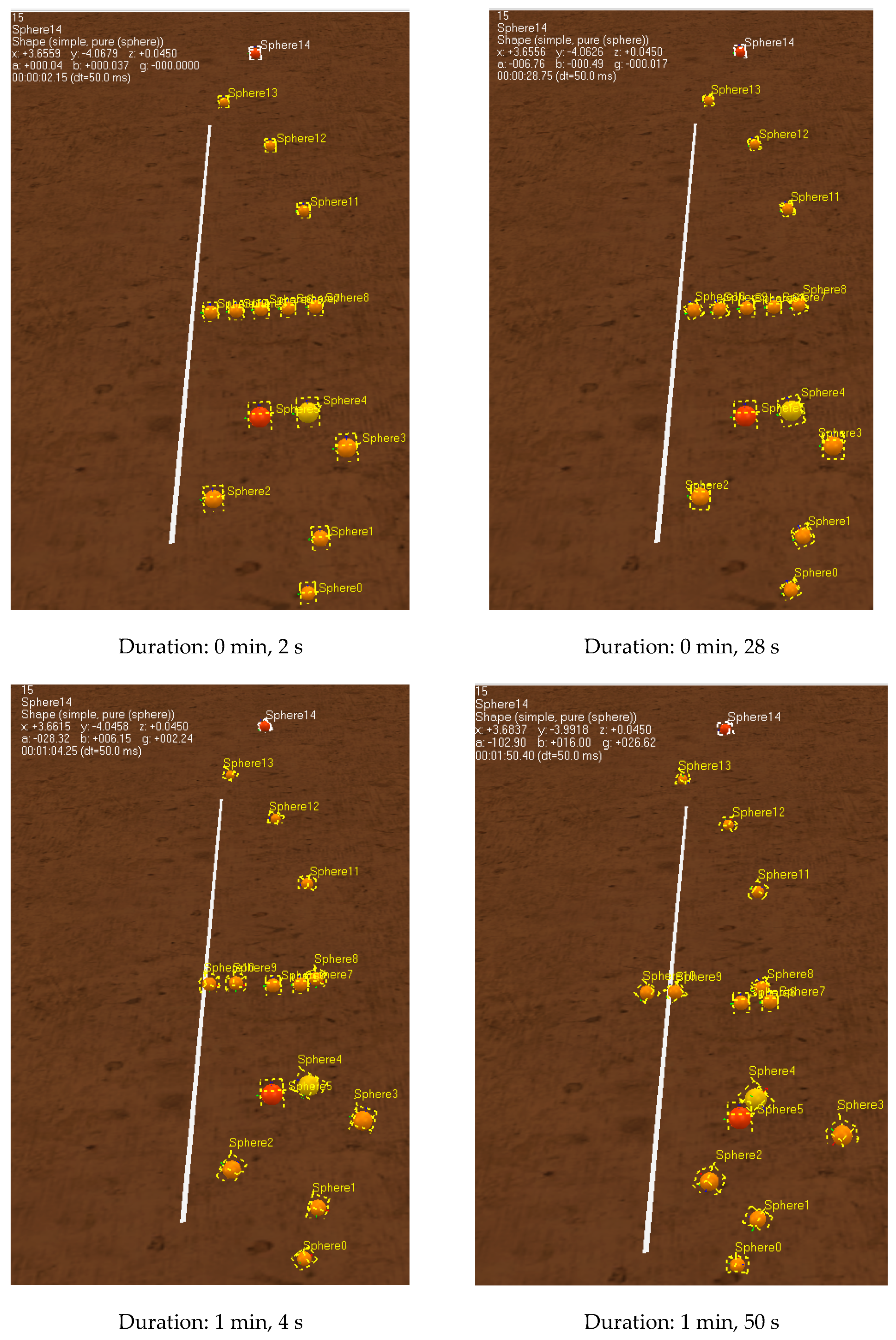

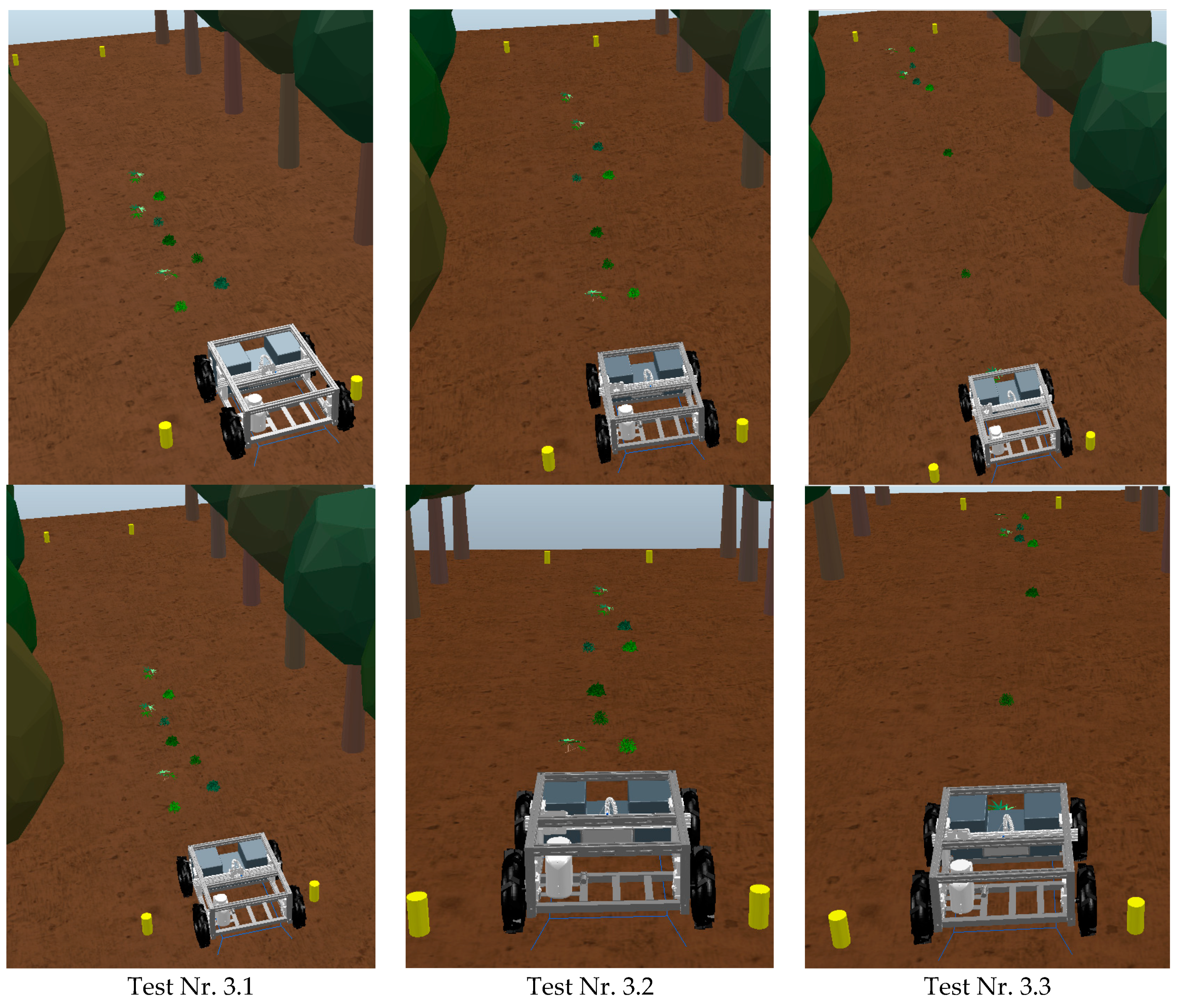

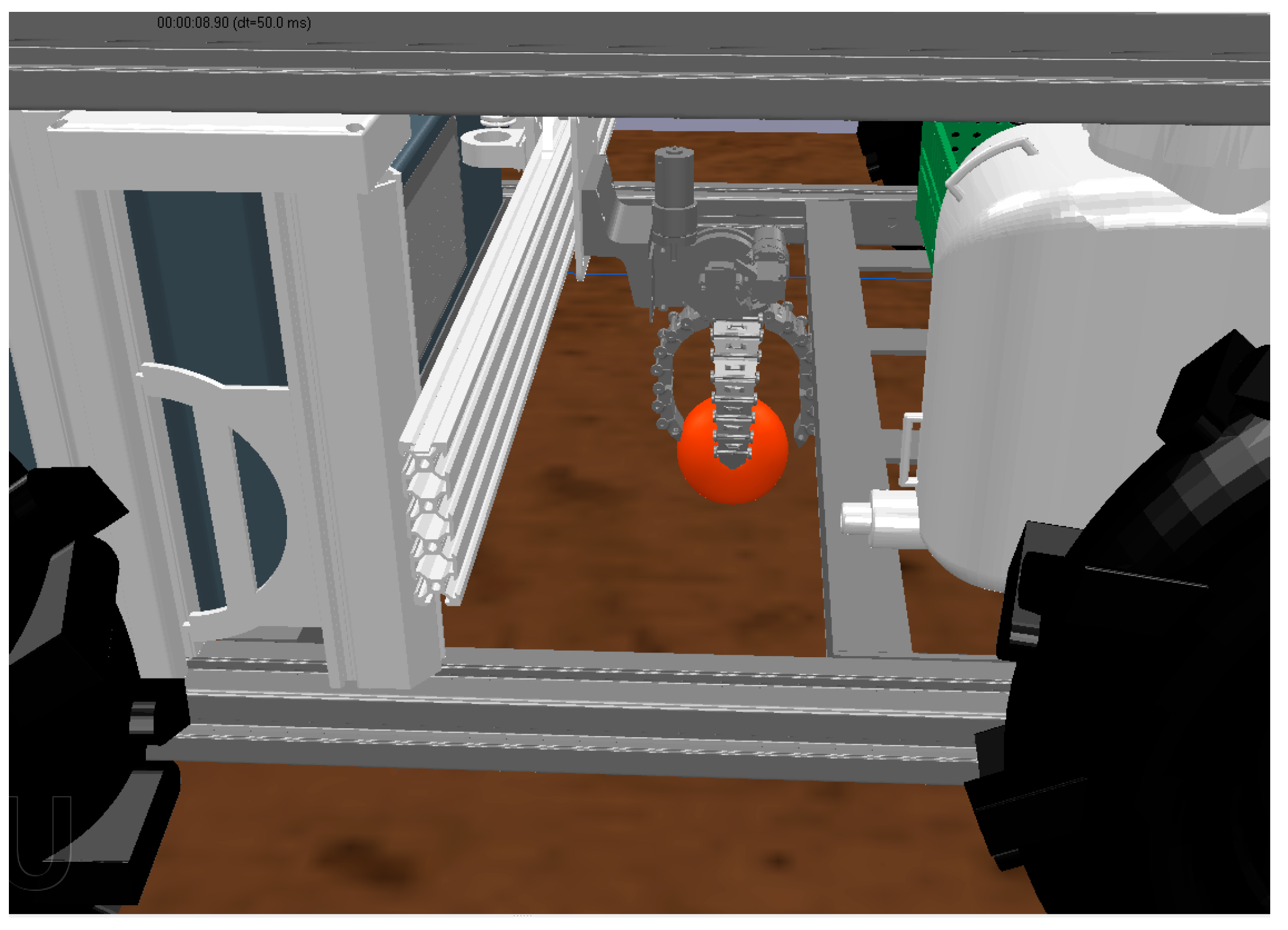

3.2. Case Study Nr. 1—Object Capture

- Scenario Nr. 1: Objects with the same diameter but different colors;

- Scenario Nr. 2: Objects with different diameters and the same color range;

- Scenario Nr. 3: Different sizes and color ranges.

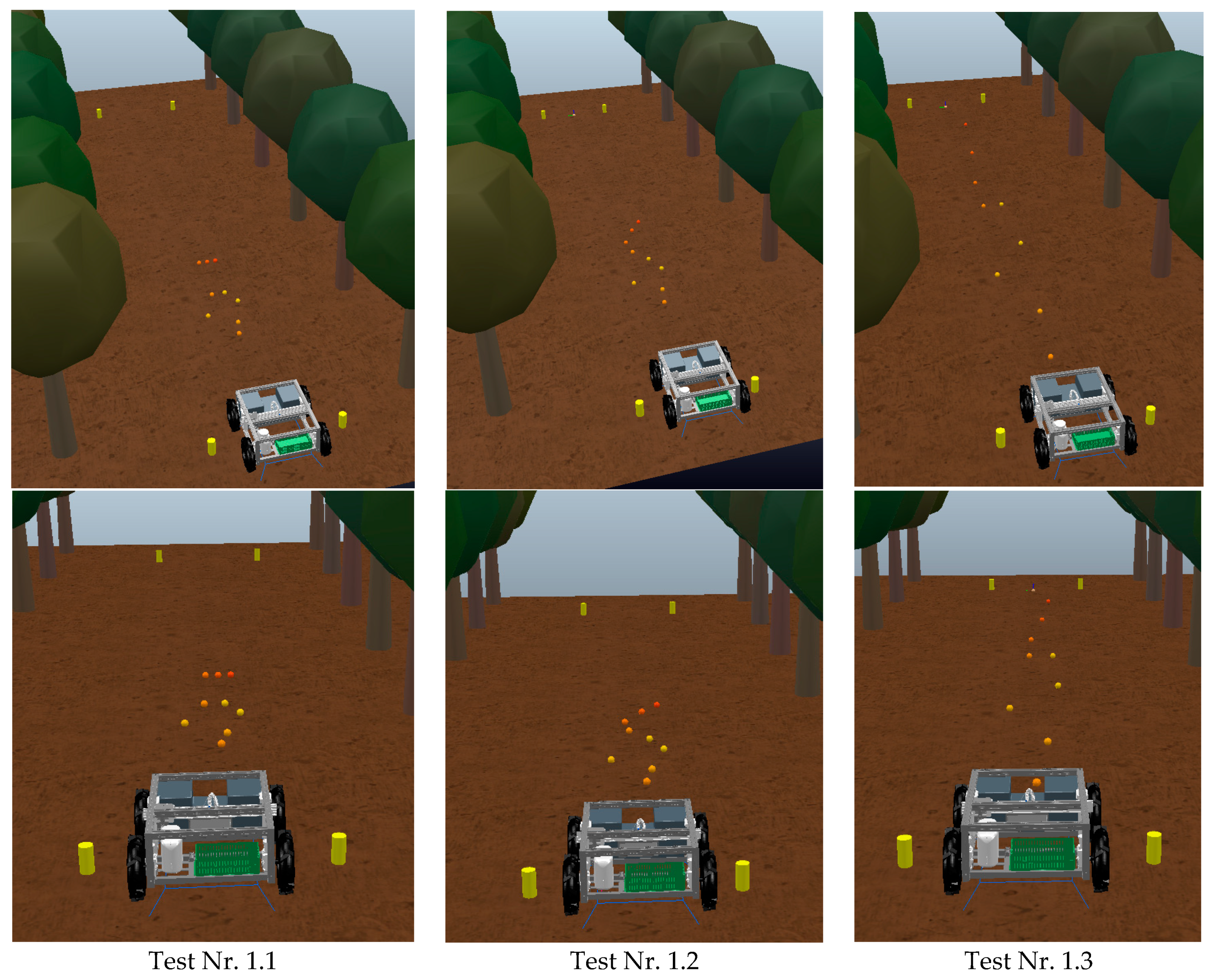

3.2.1. Scenario Nr. 1

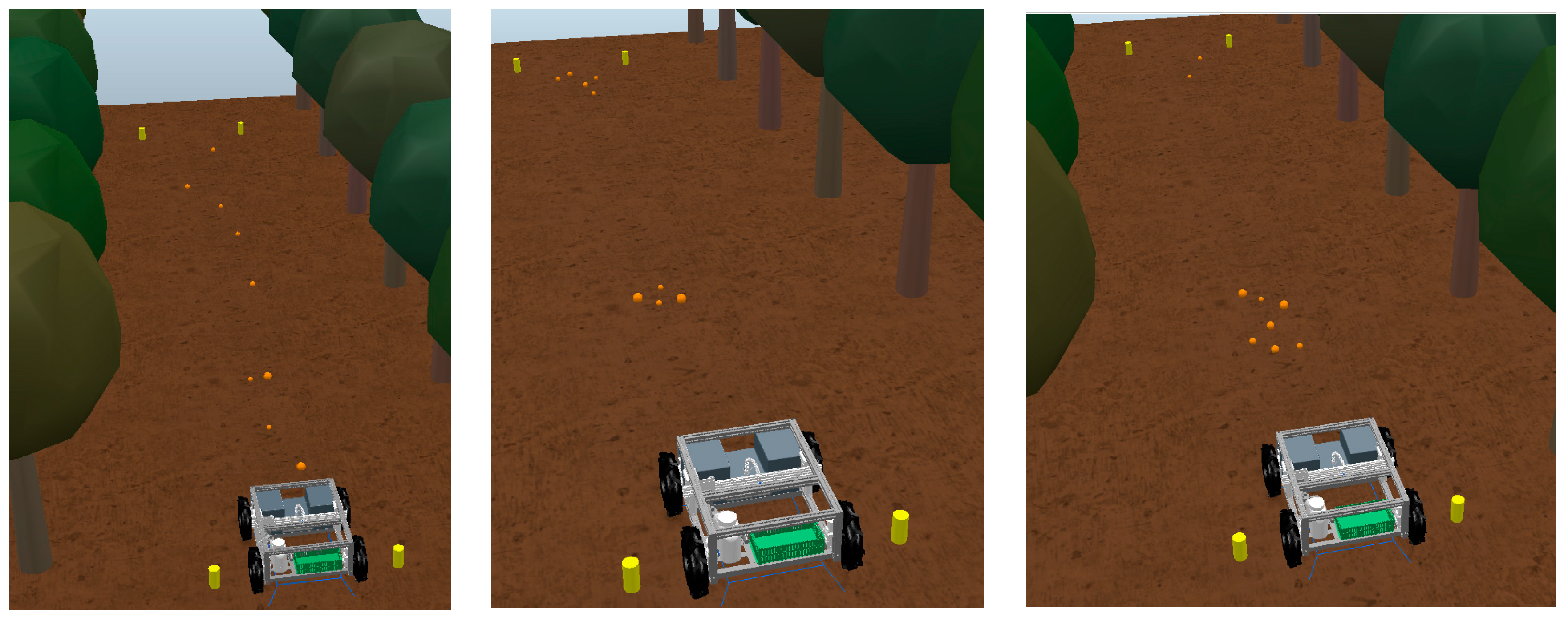

3.2.2. Scenario Nr. 2

3.2.3. Scenario Nr. 3

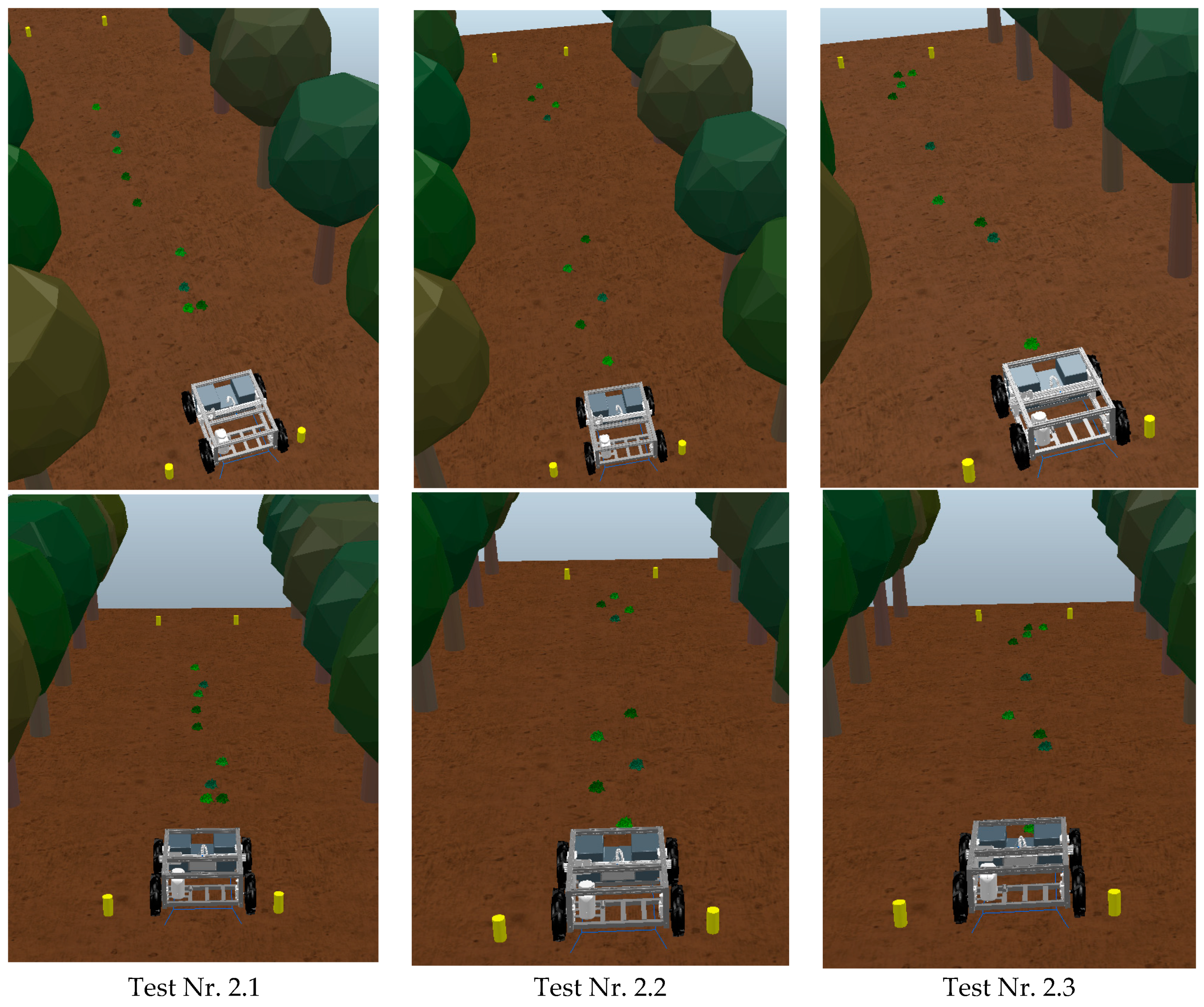

3.3. Case Study Nr. 2—Controlled Spraying

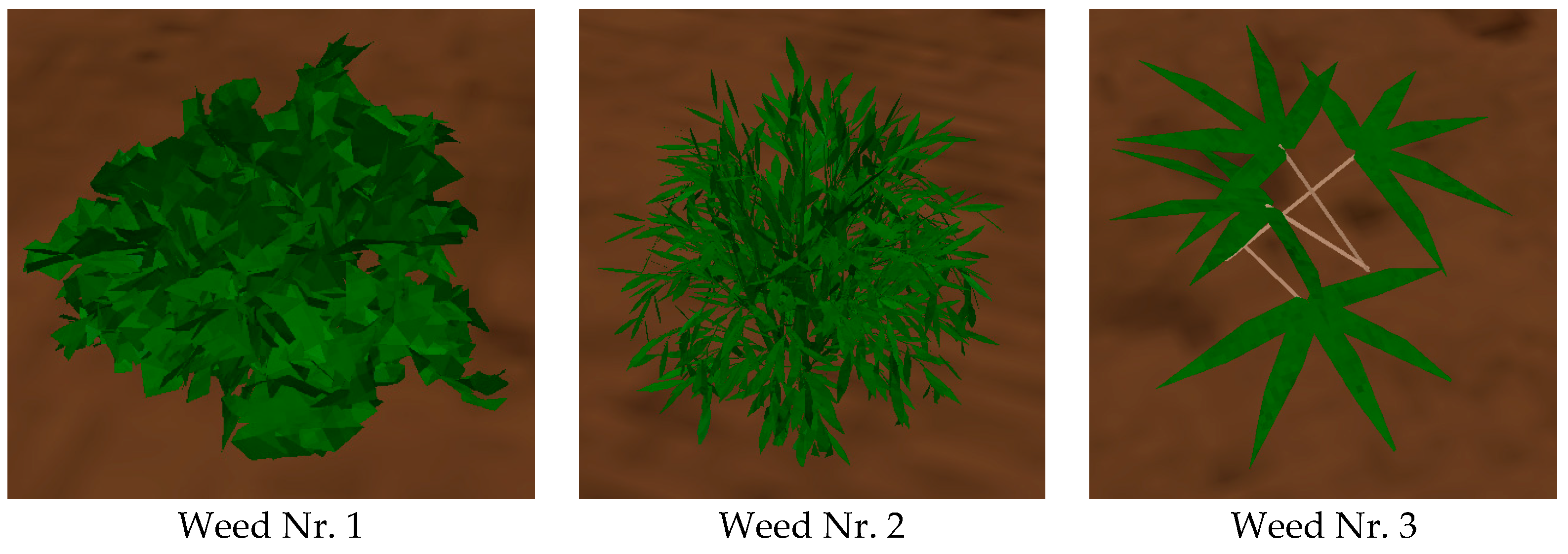

- Scenario Nr. 1: Weeds with identical shapes and colors;

- Scenario Nr. 2: Weeds with the same shape but with different color ranges;

- Scenario Nr. 3: Weeds with different shapes and color ranges.

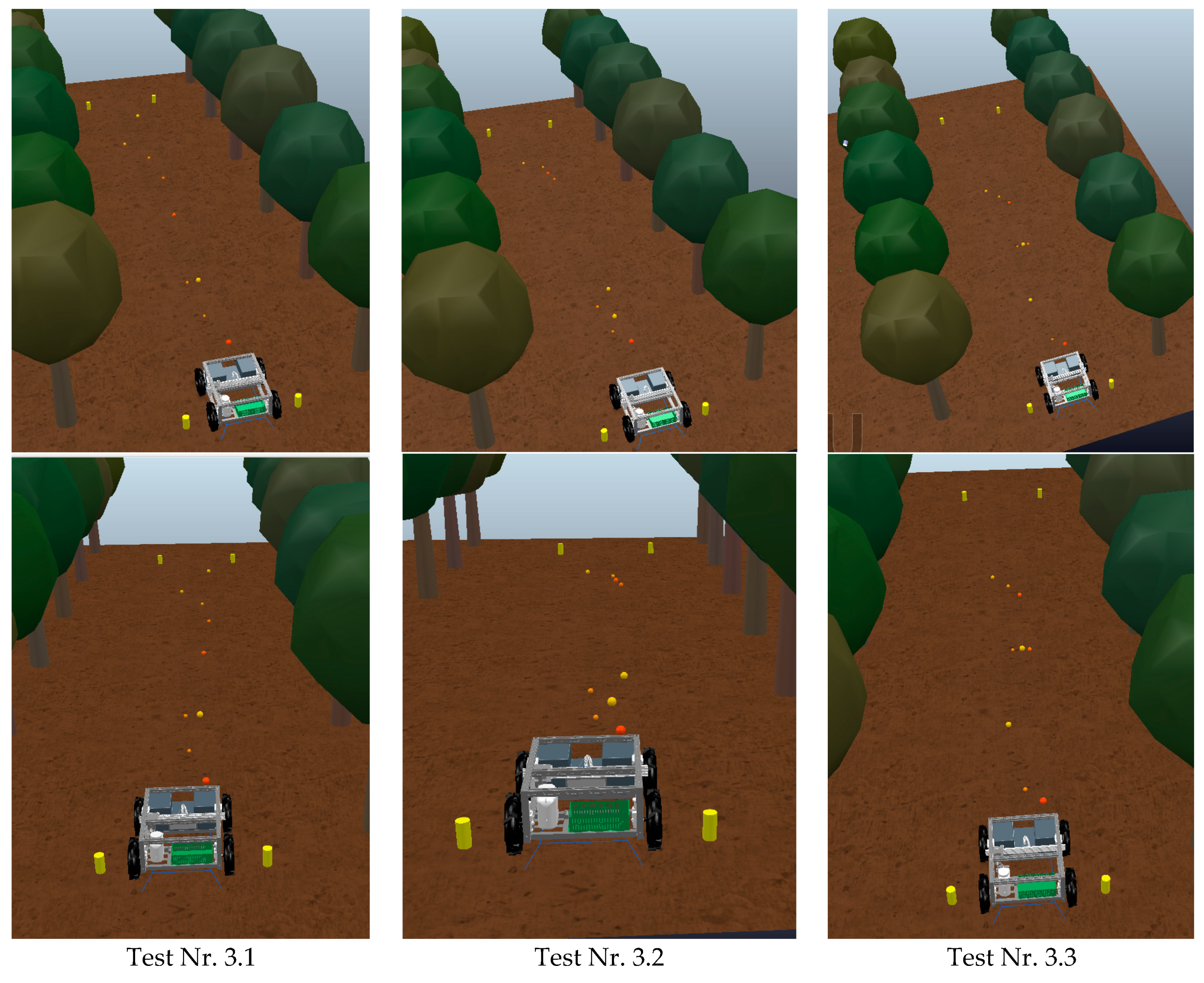

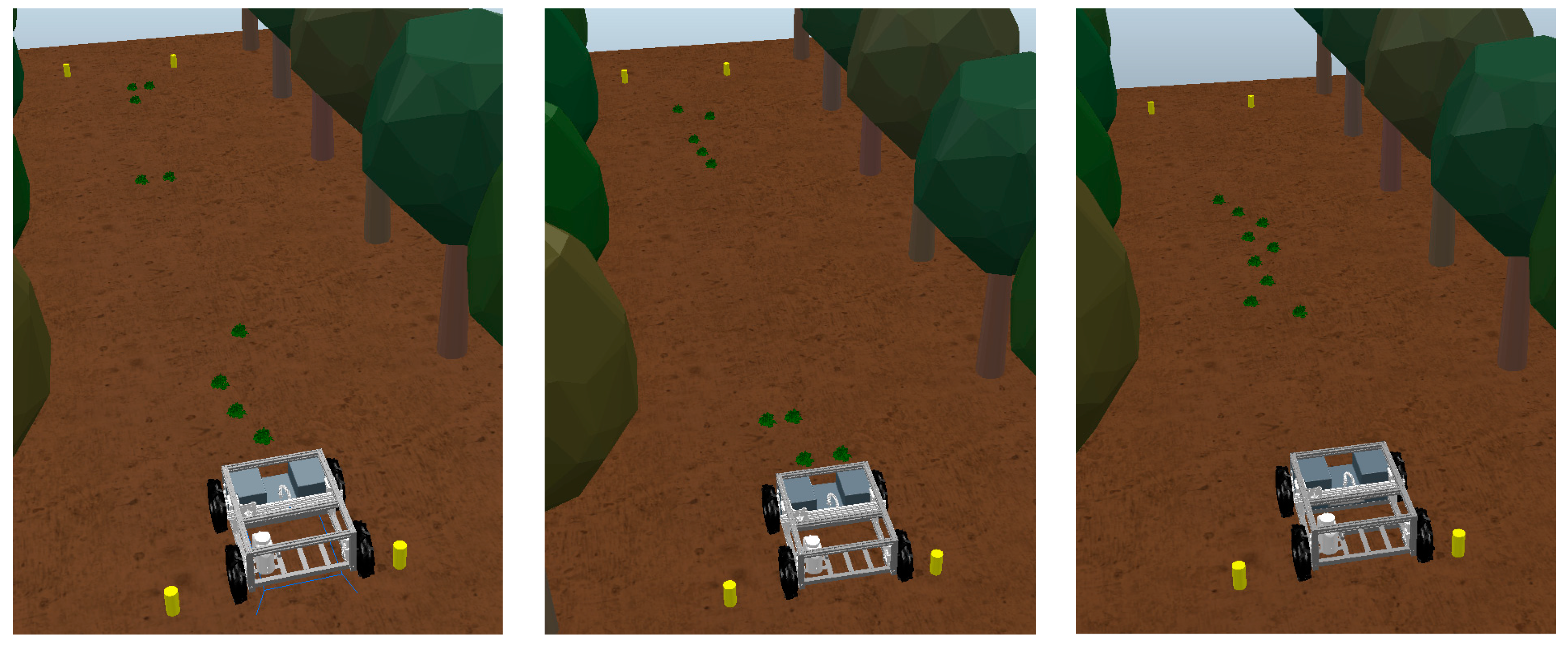

3.3.1. Scenario Nr. 1

3.3.2. Scenario Nr. 2

3.3.3. Scenario Nr. 3

3.4. Results Analysis

3.4.1. Results of Case Study Nr. 1

3.4.2. Results of Case Study Nr. 2

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Figure caption fragment: … object detection; Zone B: picking object; interline: …

| Robot Specification | Value |
|---|---|
| Approximate weight | 90 kg |
| Payload | 15 kg |
| Maximum speed | 1.4 m/s |
| Acceleration | 1 m/s² |
| Length | 1200 mm |
| Width | 1050 mm |
| Height | 500 mm |
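The maximum speed and acceleration in the specification table bound how quickly the rover can move between stops. A minimal sketch, assuming a symmetric trapezoidal velocity profile (braking at the same rate as accelerating, starting and ending at rest), which the table itself does not state:

```python
import math


def travel_time(distance_m: float, v_max: float = 1.4, accel: float = 1.0) -> float:
    """Rest-to-rest time (s) to cover distance_m under an assumed symmetric
    trapezoidal profile. Defaults are the table's maximum speed and acceleration."""
    if distance_m <= 0:
        return 0.0
    # Distance used by the acceleration and braking ramps together.
    d_ramps = v_max ** 2 / accel
    if distance_m >= d_ramps:
        # Trapezoid: two ramps of v_max/accel seconds each, plus a cruise phase.
        return 2 * v_max / accel + (distance_m - d_ramps) / v_max
    # Triangle: v_max is never reached; each half of the move lasts sqrt(d/a).
    return 2 * math.sqrt(distance_m / accel)


if __name__ == "__main__":
    for d in (0.5, 1.96, 10.0):
        print(f"{d:5.2f} m -> {travel_time(d):.2f} s")
```

With the tabulated values, the rover needs 1.4 s and about 0.98 m to reach top speed, so under this assumption moves shorter than about 1.96 m never reach 1.4 m/s.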

| Item | Red | Green | Blue |
|---|---|---|---|
| Use Plan | 0.376 | 0.258 | 0.164 |
| Yellow peach | 0.933 | 0.772 | 0.043 |
| Orange peach | 0.976 | 0.584 | 0.082 |
| Reddish peach | 0.992 | 0.309 | 0.081 |
| Weed shade Nr. 1 | 0.234 | 0.775 | 0.074 |
| Weed shade Nr. 2 | 0.031 | 0.473 | 0.030 |
| Weed shade Nr. 3 | 0.191 | 0.509 | 0.331 |
| Weed shade Nr. 4 | 0.208 | 0.740 | 0.207 |
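The color table lists reference values (RGB components in the 0 to 1 range), but not how pixels are matched against them. As an illustration only, here is a minimal sketch that swaps in a generic technique: each pixel is assigned to its nearest reference color by Euclidean distance in RGB space and rejected if it lies farther than a hypothetical threshold `max_dist`. The matching pixels are then reduced to a count and a centroid. The labels and the threshold are assumptions, not the authors' method.

```python
import math

# Reference colors copied from the table (normalized RGB). Keys are hypothetical labels.
REFERENCE = {
    "use_plan": (0.376, 0.258, 0.164),
    "yellow_peach": (0.933, 0.772, 0.043),
    "orange_peach": (0.976, 0.584, 0.082),
    "reddish_peach": (0.992, 0.309, 0.081),
    "weed_1": (0.234, 0.775, 0.074),
    "weed_2": (0.031, 0.473, 0.030),
    "weed_3": (0.191, 0.509, 0.331),
    "weed_4": (0.208, 0.740, 0.207),
}


def classify(pixel, max_dist=0.15):
    """Label of the nearest reference color, or None if none is within max_dist."""
    best, best_d = None, float("inf")
    for label, ref in REFERENCE.items():
        d = math.dist(pixel, ref)  # Euclidean distance in RGB space
        if d < best_d:
            best, best_d = label, d
    return best if best_d <= max_dist else None


def detect(image, targets, max_dist=0.15):
    """Count pixels whose label is in `targets` and return (count, centroid).

    `image` is a list of rows of (r, g, b) tuples; the centroid is (row, col)
    in pixel units, or None when nothing matches.
    """
    count, row_sum, col_sum = 0, 0.0, 0.0
    for r, row in enumerate(image):
        for c, pixel in enumerate(row):
            if classify(pixel, max_dist) in targets:
                count += 1
                row_sum += r
                col_sum += c
    if count == 0:
        return 0, None
    return count, (row_sum / count, col_sum / count)
```

The matching-pixel count gives a rough apparent size of the object, and the centroid gives its position in the image, which is the kind of information a steering or stopping decision could use.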

| Case Study Nr. 1 | Test | Time without Stop | Time with Stop |
|---|---|---|---|
| Scenario Nr. 1 | Test Nr. 1.1 | 3 min, 10 s | 3 min, 14 s |
| | Test Nr. 1.2 | 3 min, 10 s | 3 min, 16 s |
| | Test Nr. 1.3 | 3 min, 10 s | 3 min, 26 s |
| Scenario Nr. 2 | Test Nr. 2.1 | 3 min, 11 s | 3 min, 19 s |
| | Test Nr. 2.2 | 3 min, 10 s | 3 min, 38 s |
| | Test Nr. 2.3 | 3 min, 08 s | 3 min, 14 s |
| Scenario Nr. 3 | Test Nr. 3.1 | 3 min, 13 s | 3 min, 21 s |
| | Test Nr. 3.2 | 3 min, 10 s | 3 min, 16 s |
| | Test Nr. 3.3 | 3 min, 14 s | 3 min, 20 s |

| Case Study Nr. 2 | Test | Time |
|---|---|---|
| Scenario Nr. 1 | Test Nr. 1.1 | 1 min, 08 s |
| | Test Nr. 1.2 | 1 min, 06 s |
| | Test Nr. 1.3 | 1 min, 05 s |
| Scenario Nr. 2 | Test Nr. 2.1 | 1 min, 12 s |
| | Test Nr. 2.2 | 1 min, 09 s |
| | Test Nr. 2.3 | 1 min, 06 s |
| Scenario Nr. 3 | Test Nr. 3.1 | 1 min, 09 s |
| | Test Nr. 3.2 | 1 min, 05 s |
| | Test Nr. 3.3 | 1 min, 05 s |
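The two timing tables can be summarized per scenario with a few lines of arithmetic. The sketch below copies the tabulated values and computes, for Case Study Nr. 1, the mean extra time caused by stopping (time with stop minus time without stop) and, for Case Study Nr. 2, the mean operation time. The helper names are illustrative.

```python
from statistics import mean


def to_seconds(t: str) -> int:
    """Convert a table entry such as '3 min, 14 s' to seconds."""
    minutes, seconds = t.split(",")
    return int(minutes.split()[0]) * 60 + int(seconds.split()[0])


# Case Study Nr. 1: (time without stop, time with stop) for each test.
CS1 = {
    "Scenario Nr. 1": [("3 min, 10 s", "3 min, 14 s"), ("3 min, 10 s", "3 min, 16 s"), ("3 min, 10 s", "3 min, 26 s")],
    "Scenario Nr. 2": [("3 min, 11 s", "3 min, 19 s"), ("3 min, 10 s", "3 min, 38 s"), ("3 min, 08 s", "3 min, 14 s")],
    "Scenario Nr. 3": [("3 min, 13 s", "3 min, 21 s"), ("3 min, 10 s", "3 min, 16 s"), ("3 min, 14 s", "3 min, 20 s")],
}
# Case Study Nr. 2: operation time for each test.
CS2 = {
    "Scenario Nr. 1": ["1 min, 08 s", "1 min, 06 s", "1 min, 05 s"],
    "Scenario Nr. 2": ["1 min, 12 s", "1 min, 09 s", "1 min, 06 s"],
    "Scenario Nr. 3": ["1 min, 09 s", "1 min, 05 s", "1 min, 05 s"],
}


def stop_overhead(tests):
    """Mean extra time (s) added by stopping, over the tests of one scenario."""
    return mean(to_seconds(with_stop) - to_seconds(without) for without, with_stop in tests)


def mean_time(tests):
    """Mean operation time (s) over the tests of one scenario."""
    return mean(to_seconds(t) for t in tests)


if __name__ == "__main__":
    for name, tests in CS1.items():
        print(f"Case Study Nr. 1, {name}: mean stop overhead {stop_overhead(tests):.1f} s")
    for name, tests in CS2.items():
        print(f"Case Study Nr. 2, {name}: mean time {mean_time(tests):.1f} s")
```

On the tabulated values, the mean stopping overhead is largest in Scenario Nr. 2 of Case Study Nr. 1 (14 s), mostly because of Test Nr. 2.2 (28 s).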

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ribeiro, J.P.L.; Gaspar, P.D.; Soares, V.N.G.J.; Caldeira, J.M.L.P. Computational Simulation of an Agricultural Robotic Rover for Weed Control and Fallen Fruit Collection—Algorithms for Image Detection and Recognition and Systems Control, Regulation, and Command. Electronics 2022, 11, 790. https://doi.org/10.3390/electronics11050790


Chicago/Turabian style: Ribeiro, João P. L., Pedro D. Gaspar, Vasco N. G. J. Soares, and João M. L. P. Caldeira. 2022. "Computational Simulation of an Agricultural Robotic Rover for Weed Control and Fallen Fruit Collection—Algorithms for Image Detection and Recognition and Systems Control, Regulation, and Command" Electronics 11, no. 5: 790. https://doi.org/10.3390/electronics11050790
