MobileNets Can Be Lossily Compressed: Neural Network Compression for Embedded Accelerators

Abstract

1. Introduction

- We present and evaluate ZFPe, a modified ZFP compression algorithm and an accompanying hardware design, both optimized for efficient embedded implementation.

- We present an alternative neural network compression approach using ZFPe, and demonstrate its relative effectiveness, even on models traditionally difficult to quantize with post-training quantization.

- We evaluate the performance impact of ZFPe in the context of embedded neural network acceleration.

2. Background and Related Works

2.1. Embedded Neural Network Acceleration

2.2. Neural Network Compression

2.3. Quantization Effectiveness on MobileNets

2.4. Floating-Point Compression

3. ZFPe: A Novel Lossy Floating-Point Compression for Embedded Accelerators

3.1. Original ZFP Algorithm Analysis

- Fixed-point conversion: All values in the block are aligned to the maximum exponent in the block and converted to fixed point.

- Block transformation: A series of simple convolution operations is applied to the block in order to spatially de-correlate values [18]. After the transform, most integers in the block become small signed values clustered around zero. This is similar to the discrete cosine transform used by JPEG.

- Deterministic reordering: Values in a block are shuffled according to a predetermined sequence, which places them in roughly monotonically decreasing order. This stage is not necessary for compression.

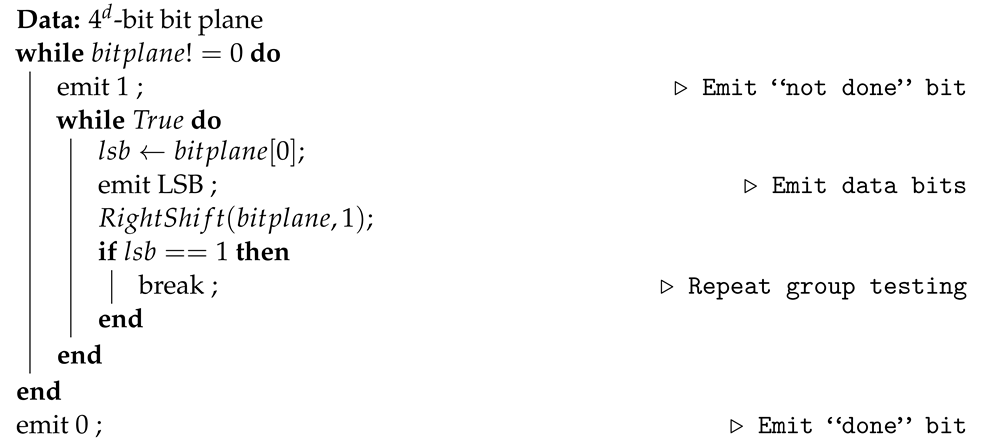

- Embedded coding: A group testing algorithm is used to encode each block in fewer bits. It encodes one bit plane at a time, in order of significance, until either the error tolerance bound is reached or the provided bit budget is exhausted.
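The first two stages listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the ZFPe hardware design: the 30-bit fixed-point precision (`FRAC_BITS`) and the helper names are hypothetical, and the lifting steps in `fwd_lift` are modeled on the 1D forward transform of the open-source ZFP reference implementation, so they should be checked against that code before being relied on.

```python
import math

FRAC_BITS = 30  # assumed fixed-point precision; not taken from the paper


def block_to_fixed(block, frac_bits=FRAC_BITS):
    """Align a block to its largest exponent and convert it to integers."""
    # math.frexp(v) returns (m, e) with v == m * 2**e and 0.5 <= |m| < 1.
    emax = max((math.frexp(v)[1] for v in block if v != 0.0), default=0)
    scale = math.ldexp(1.0, frac_bits - emax)
    # Every |v| < 2**emax, so every integer magnitude stays below 2**frac_bits.
    return emax, [int(v * scale) for v in block]


def fixed_to_block(emax, ints, frac_bits=FRAC_BITS):
    """Inverse of block_to_fixed, up to the truncation error."""
    scale = math.ldexp(1.0, emax - frac_bits)
    return [i * scale for i in ints]


def fwd_lift(x, y, z, w):
    """1D forward lifting step on four integers (additions and shifts only)."""
    x += w; x >>= 1; w -= x
    z += y; z >>= 1; y -= z
    x += z; x >>= 1; z -= x
    w += y; w >>= 1; y -= w
    w += y >> 1; y -= w >> 1
    return x, y, z, w


emax, ints = block_to_fixed([1.0, 0.5, -0.25, 0.0])
print(emax, ints)
# A constant block leaves only the first coefficient non-zero.
print(fwd_lift(8, 8, 8, 8))
```

Only one exponent is stored per block, which is why the conversion is cheap in hardware; the price is that values much smaller than the block maximum lose low-order bits during alignment.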

Algorithm 1: ZFP’s embedded coding stage emits bits one by one from each bit plane.

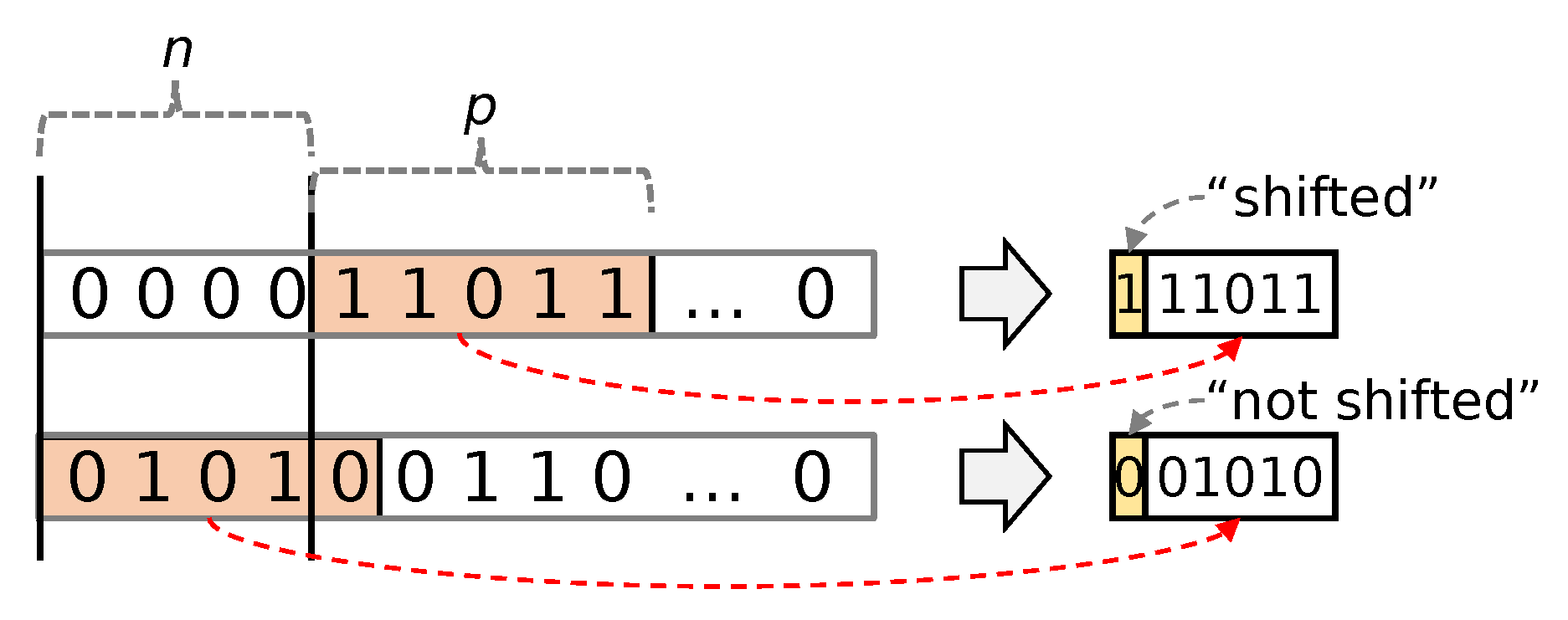
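The bit-plane order of emission can be illustrated with a deliberately simplified sketch. It assumes non-negative integer coefficients and a fixed bit budget, and it omits the group testing step and ZFP's sign handling entirely, so it shows only how truncating the stream at the budget discards the least significant planes first. The function names and parameters are hypothetical.

```python
def encode_bit_planes(values, num_planes, max_bits):
    """Emit bits plane by plane, most significant first, up to max_bits."""
    bits = []
    for plane in range(num_planes - 1, -1, -1):
        for v in values:
            if len(bits) == max_bits:
                return bits  # bit budget consumed: stop mid-stream
            bits.append((v >> plane) & 1)
    return bits


def decode_bit_planes(bits, count, num_planes):
    """Rebuild values from a possibly truncated bit stream."""
    values = [0] * count
    stream = iter(bits)
    for plane in range(num_planes - 1, -1, -1):
        for i in range(count):
            b = next(stream, None)
            if b is None:
                return values  # missing low-order bits are treated as zero
            values[i] |= b << plane
    return values


coeffs = [13, 2, 7, 0]
full = encode_bit_planes(coeffs, num_planes=4, max_bits=16)
half = encode_bit_planes(coeffs, num_planes=4, max_bits=8)
print(decode_bit_planes(full, 4, 4))  # lossless with the full budget
print(decode_bit_planes(half, 4, 4))  # only the top two planes survive
```

Because the most significant planes are written first, cutting the stream at any point still yields a usable approximation, which is what makes a fixed bit budget per block practical.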

3.2. Novel ZFPe Optimizations

4. Neural Network Accelerator Architecture

4.1. ZFPe Accelerator Architecture

4.2. Burst Memory Arbiter

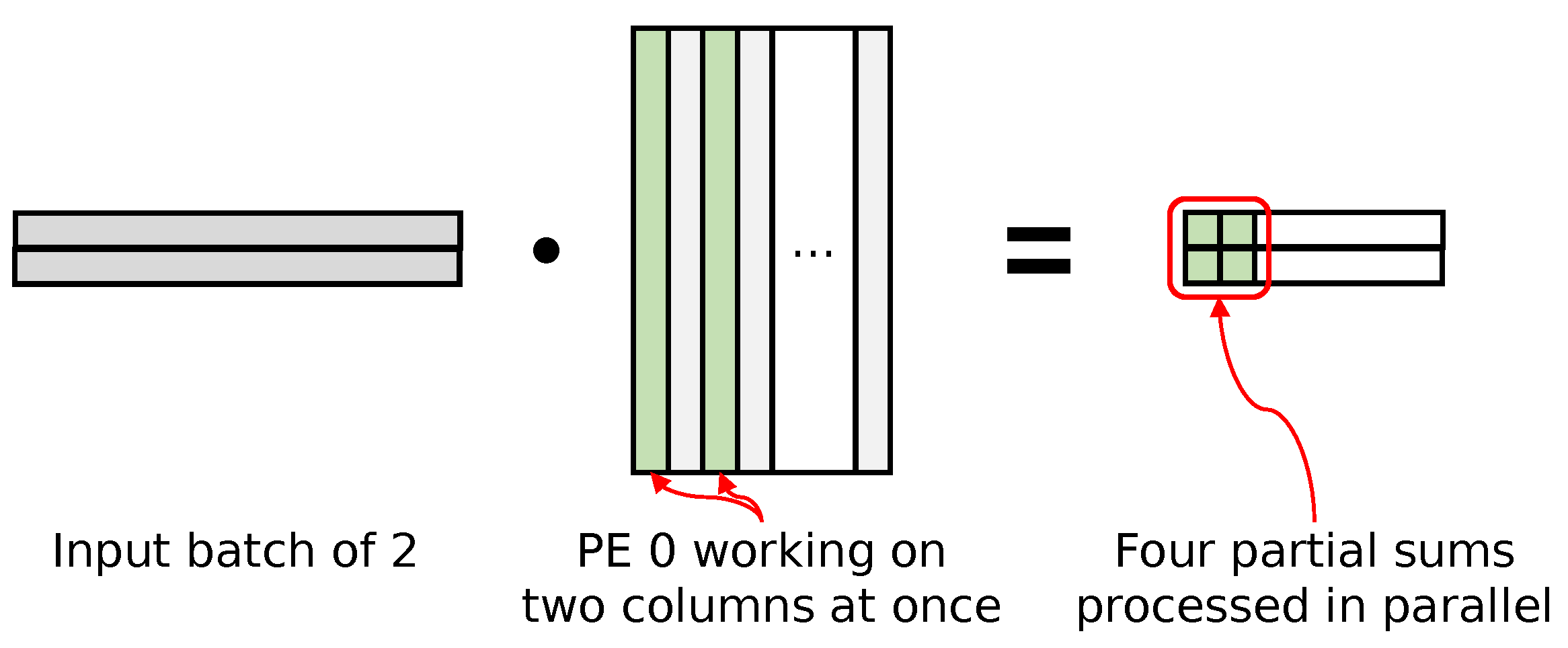

4.3. Neural Network Inference Accelerator

4.4. Simplified Floating-Point Cores

5. Evaluation

5.1. Implementation Detail

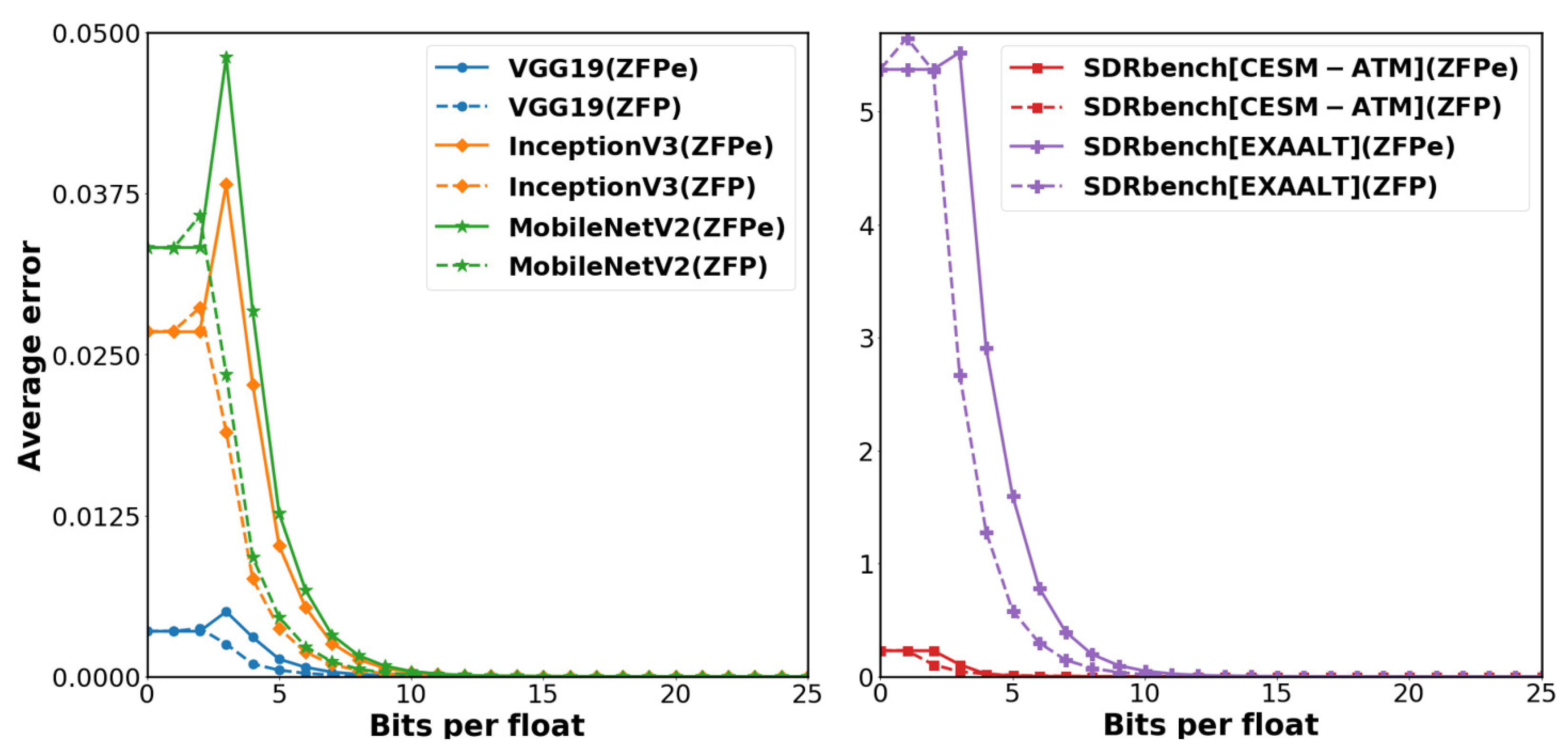

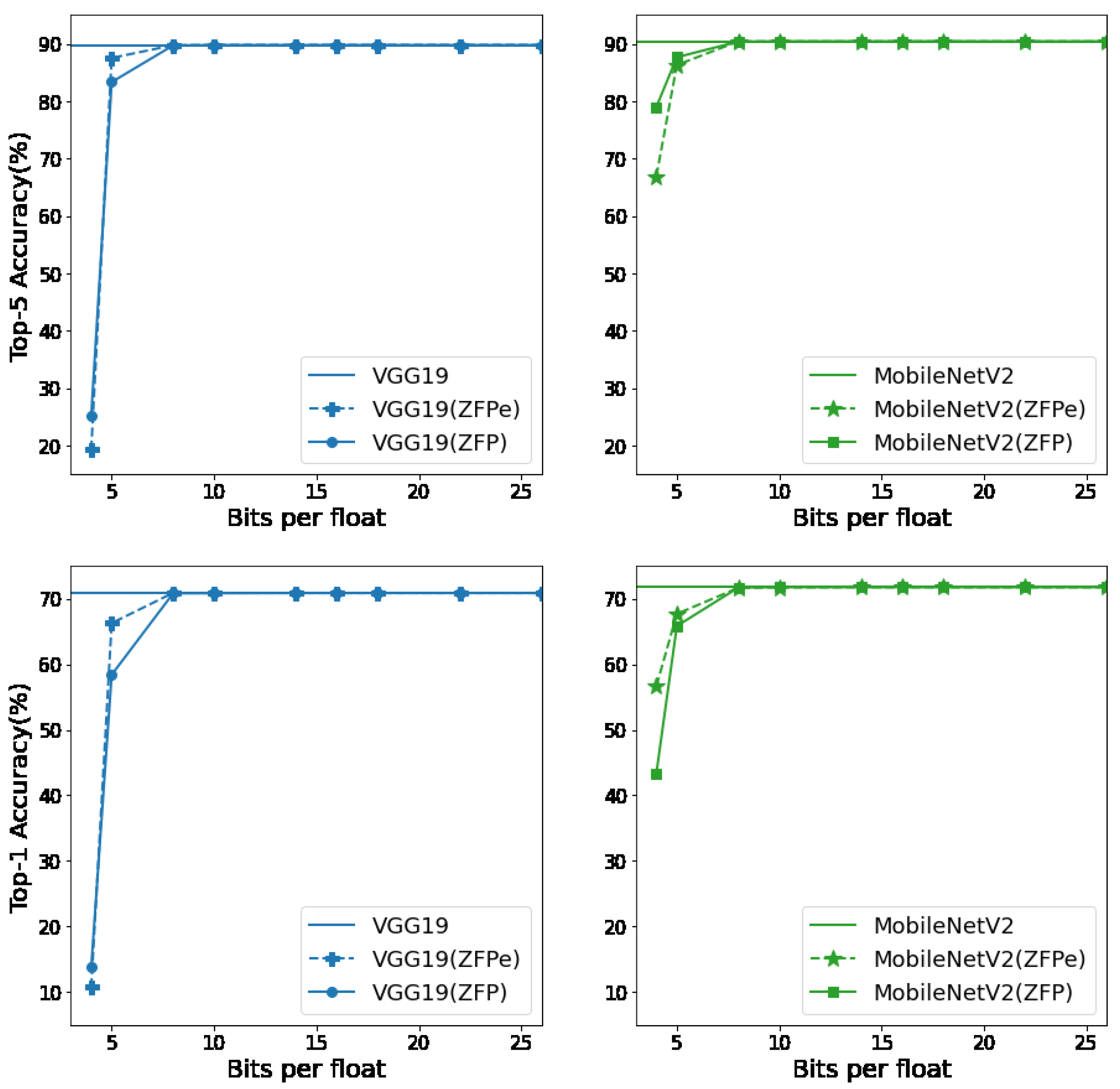

5.2. ZFPe Compression Efficiency Evaluation

5.3. Neural Network Accuracy—Single Dense Layer

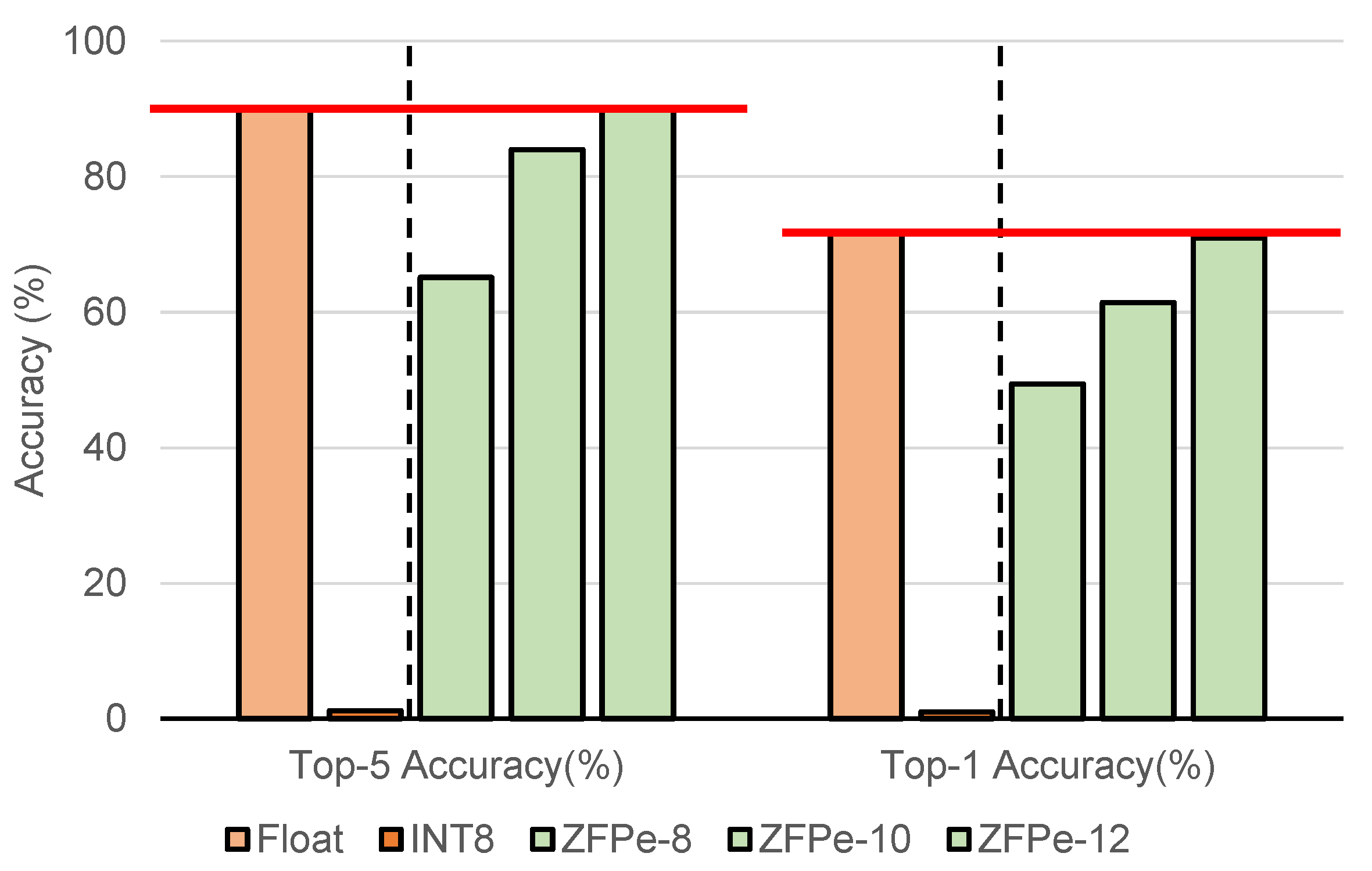

5.4. Neural Network Accuracy—Complete MobileNet V2

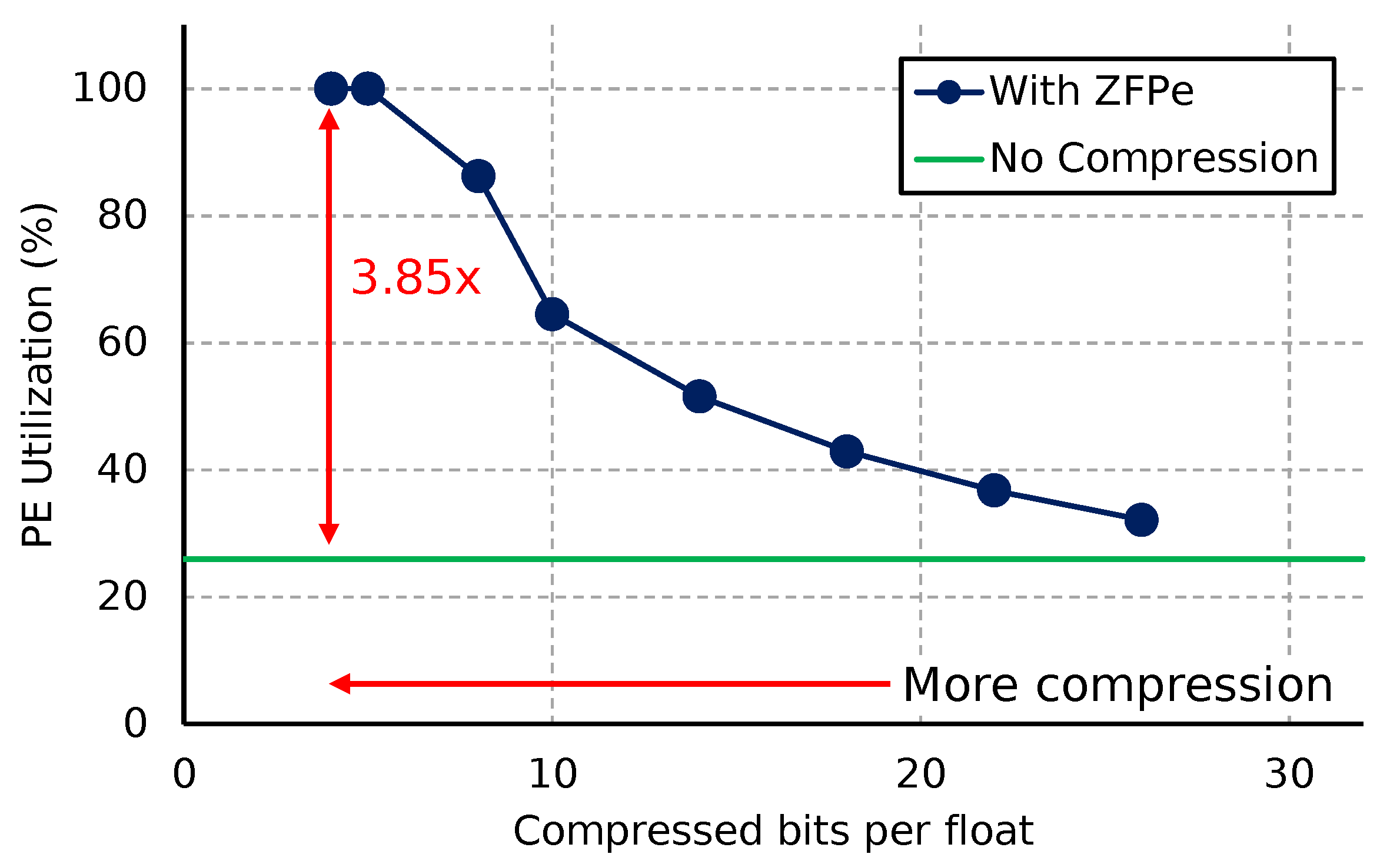

5.5. Accelerator Performance Evaluation

5.6. Comparison against State of the Art

5.7. Power-Performance Evaluation

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Mittal, S. A Survey on optimized implementation of deep learning models on the NVIDIA Jetson platform. J. Syst. Archit. 2019, 97, 428–442.

- Guo, K.; Zeng, S.; Yu, J.; Wang, Y.; Yang, H. A survey of FPGA-based neural network accelerator. arXiv 2017, arXiv:1712.08934.

- Qiu, J.; Wang, J.; Yao, S.; Guo, K.; Li, B.; Zhou, E.; Yu, J.; Tang, T.; Xu, N.; Song, S.; et al. Going deeper with embedded fpga platform for convolutional neural network. In Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 21–23 February 2016; pp. 26–35.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Sanchez-Iborra, R.; Skarmeta, A.F. Tinyml-enabled frugal smart objects: Challenges and opportunities. IEEE Circuits Syst. Mag. 2020, 20, 4–18.

- Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv 2015, arXiv:1510.00149.

- Park, E.; Ahn, J.; Yoo, S. Weighted-Entropy-Based Quantization for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7197–7205.

- Dokic, K.; Martinovic, M.; Mandusic, D. Inference speed and quantisation of neural networks with TensorFlow Lite for Microcontrollers framework. In Proceedings of the 2020 5th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Corfu, Greece, 25–27 September 2020; pp. 1–6.

- Migacz, S. NVIDIA 8-bit inference with TensorRT. In Proceedings of the GPU Technology Conference, San Jose, CA, USA, 8–11 May 2017.

- Kim, J.H.; Lee, J.; Anderson, J.H. FPGA architecture enhancements for efficient BNN implementation. In Proceedings of the 2018 International Conference on Field-Programmable Technology (FPT), Naha, Japan, 10–14 December 2018; pp. 214–221.

- Liang, S.; Yin, S.; Liu, L.; Luk, W.; Wei, S. FP-BNN: Binarized neural network on FPGA. Neurocomputing 2018, 275, 1072–1086.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Krishnamoorthi, R. Quantizing deep convolutional networks for efficient inference: A whitepaper. arXiv 2018, arXiv:1806.08342.

- Jin, S.; Di, S.; Liang, X.; Tian, J.; Tao, D.; Cappello, F. DeepSZ: A novel framework to compress deep neural networks by using error-bounded lossy compression. In Proceedings of the 28th International Symposium on High-Performance Parallel and Distributed Computing, Phoenix, AZ, USA, 24–28 June 2019; pp. 159–170.

- Xiong, Q.; Patel, R.; Yang, C.; Geng, T.; Skjellum, A.; Herbordt, M.C. Ghostsz: A transparent fpga-accelerated lossy compression framework. In Proceedings of the 2019 IEEE 27th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), San Diego, CA, USA, 28 April–1 May 2019; pp. 258–266.

- Sun, G.; Jun, S.W. ZFP-V: Hardware-optimized lossy floating point compression. In Proceedings of the 2019 International Conference on Field-Programmable Technology (ICFPT), Tianjin, China, 9–13 December 2019; pp. 117–125.

- Lindstrom, P. Fixed-rate compressed floating-point arrays. IEEE Trans. Vis. Comput. Graph. 2014, 20, 2674–2683.

- Sze, V.; Chen, Y.H.; Yang, T.J.; Emer, J.S. Efficient processing of deep neural networks: A tutorial and survey. Proc. IEEE 2017, 105, 2295–2329.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Yun, S.; Wong, A. Do All MobileNets Quantize Poorly? Gaining Insights into the Effect of Quantization on Depthwise Separable Convolutional Networks through the Eyes of Multi-scale Distributional Dynamics. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2447–2456.

- Laskaridis, S.; Venieris, S.I.; Almeida, M.; Leontiadis, I.; Lane, N.D. SPINN: Synergistic progressive inference of neural networks over device and cloud. In Proceedings of the 26th Annual International Conference on Mobile Computing and Networking, London, UK, 21–25 September 2020; pp. 1–15.

- Cooke, R.A.; Fahmy, S.A. Quantifying the latency benefits of near-edge and in-network FPGA acceleration. In Proceedings of the Third ACM International Workshop on Edge Systems, Analytics and Networking, Heraklion, Greece, 27 April 2020; pp. 7–12.

- Chen, J.; Hong, S.; He, W.; Moon, J.; Jun, S.W. Eciton: Very Low-Power LSTM Neural Network Accelerator for Predictive Maintenance at the Edge. In Proceedings of the 2021 31st International Conference on Field Programmable Logic and Applications (FPL), Dresden, Germany, 30 August–3 September 2021.

- Chen, Y.H.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J. Solid-State Circuits 2016, 52, 127–138.

- Moons, B.; Uytterhoeven, R.; Dehaene, W.; Verhelst, M. 14.5 Envision: A 0.26-to-10TOPS/W subword-parallel dynamic-voltage-accuracy-frequency-scalable Convolutional Neural Network processor in 28nm FDSOI. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 246–247.

- Shin, D.; Lee, J.; Lee, J.; Yoo, H.J. 14.2 DNPU: An 8.1 TOPS/W reconfigurable CNN-RNN processor for general-purpose deep neural networks. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 240–241.

- Whatmough, P.N.; Lee, S.K.; Brooks, D.; Wei, G.Y. DNN engine: A 28-nm timing-error tolerant sparse deep neural network processor for IoT applications. IEEE J. Solid-State Circuits 2018, 53, 2722–2731.

- Dundar, A.; Jin, J.; Martini, B.; Culurciello, E. Embedded Streaming Deep Neural Networks Accelerator With Applications. IEEE Trans. Neural Networks Learn. Syst. 2017, 28, 1572–1583.

- Jiao, L.; Luo, C.; Cao, W.; Zhou, X.; Wang, L. Accelerating low bit-width convolutional neural networks with embedded FPGA. In Proceedings of the 2017 27th International Conference on Field Programmable Logic and Applications (FPL), Ghent, Belgium, 4–8 September 2017; pp. 1–4.

- Feng, G.; Hu, Z.; Chen, S.; Wu, F. Energy-efficient and high-throughput FPGA-based accelerator for Convolutional Neural Networks. In Proceedings of the 2016 13th IEEE International Conference on Solid-State and Integrated Circuit Technology (ICSICT), Nanjing, China, 25–28 October 2016; pp. 624–626.

- Roukhami, M.; Lazarescu, M.T.; Gregoretti, F.; Lahbib, Y.; Mami, A. Very Low Power Neural Network FPGA Accelerators for Tag-Less Remote Person Identification Using Capacitive Sensors. IEEE Access 2019, 7, 102217–102231.

- Lemieux, G.G.; Edwards, J.; Vandergriendt, J.; Severance, A.; De Iaco, R.; Raouf, A.; Osman, H.; Watzka, T.; Singh, S. TinBiNN: Tiny binarized neural network overlay in about 5000 4-LUTs and 5 mw. arXiv 2019, arXiv:1903.06630.

- Rongshi, D.; Yongming, T. Accelerator Implementation of Lenet-5 Convolution Neural Network Based on FPGA with HLS. In Proceedings of the 2019 3rd International Conference on Circuits, System and Simulation (ICCSS), Nanjing, China, 13–15 June 2019; pp. 64–67.

- Fuhl, W.; Santini, T.; Kasneci, G.; Rosenstiel, W.; Kasneci, E. Pupilnet v2.0: Convolutional neural networks for cpu based real time robust pupil detection. arXiv 2017, arXiv:1711.00112.

- Li, Z.; Eichel, J.; Mishra, A.; Achkar, A.; Naik, K. A CPU-based algorithm for traffic optimization based on sparse convolutional neural networks. In Proceedings of the 2017 IEEE 30th Canadian Conference on Electrical and Computer Engineering (CCECE), Windsor, ON, Canada, 30 April–3 May 2017; pp. 1–5.

- Lattice Semiconductor. Lattice ECP5. Available online: https://www.latticesemi.com/Products/FPGAandCPLD/ECP5 (accessed on 1 April 2021).

- Han, S.; Liu, X.; Mao, H.; Pu, J.; Pedram, A.; Horowitz, M.A.; Dally, W.J. EIE: Efficient inference engine on compressed deep neural network. ACM SIGARCH Comput. Archit. News 2016, 44, 243–254.

- Lin, D.; Talathi, S.; Annapureddy, S. Fixed point quantization of deep convolutional networks. In Proceedings of the 33rd International Conference on Machine Learning, New York City, NY, USA, 19–24 June 2016; pp. 2849–2858.

- Shin, S.; Hwang, K.; Sung, W. Fixed-point performance analysis of recurrent neural networks. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 976–980.

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and training of neural networks for efficient integer-arithmetic-only inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2704–2713.

- Luo, J.H.; Wu, J.; Lin, W. Thinet: A filter level pruning method for deep neural network compression. In Proceedings of the IEEE international conference on computer vision, Venice, Italy, 22–29 October 2017; pp. 5058–5066.

- Pietron, M.; Wielgosz, M. Retrain or Not Retrain?-Efficient Pruning Methods of Deep CNN Networks. In Proceedings of the International Conference on Computational Science, Amsterdam, The Netherlands, 3–5 June 2020; pp. 452–463.

- Fang, J.; Shafiee, A.; Abdel-Aziz, H.; Thorsley, D.; Georgiadis, G.; Hassoun, J.H. Post-training piecewise linear quantization for deep neural networks. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 69–86.

- Lee, D.; Kim, B. Retraining-based iterative weight quantization for deep neural networks. arXiv 2018, arXiv:1805.11233.

- Jin, Q.; Yang, L.; Liao, Z. Adabits: Neural network quantization with adaptive bit-widths. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 2146–2156. Available online: https://openaccess.thecvf.com/content_CVPR_2020/papers/Jin_AdaBits_Neural_Network_Quantization_With_Adaptive_Bit-Widths_CVPR_2020_paper.pdf (accessed on 1 February 2022).

- Gong, J.; Shen, H.; Zhang, G.; Liu, X.; Li, S.; Jin, G.; Maheshwari, N.; Fomenko, E.; Segal, E. Highly efficient 8-bit low precision inference of convolutional neural networks with intelcaffe. In Proceedings of the 1st on Reproducible Quality-Efficient Systems Tournament on Co-Designing Pareto-Efficient Deep Learning, Williamsburg, VA, USA, 24 April 2018; p. 1.

- Mellempudi, N.; Kundu, A.; Mudigere, D.; Das, D.; Kaul, B.; Dubey, P. Ternary neural networks with fine-grained quantization. arXiv 2017, arXiv:1705.01462.

- Zhu, C.; Han, S.; Mao, H.; Dally, W. Trained ternary quantization. arXiv 2016, arXiv:1612.01064.

- Courbariaux, M.; Hubara, I.; Soudry, D.; El-Yaniv, R.; Bengio, Y. Binarized neural networks: Training deep neural networks with weights and activations constrained to +1 or −1. arXiv 2016, arXiv:1602.02830.

- Pouransari, H.; Tu, Z.; Tuzel, O. Least squares binary quantization of neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 698–699.

- Fujii, T.; Sato, S.; Nakahara, H. A threshold neuron pruning for a binarized deep neural network on an FPGA. IEICE Trans. Inf. Syst. 2018, 101, 376–386.

- Nagel, M.; Baalen, M.v.; Blankevoort, T.; Welling, M. Data-free quantization through weight equalization and bias correction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 1325–1334.

- Jain, S.R.; Gural, A.; Wu, M.; Dick, C.H. Trained quantization thresholds for accurate and efficient fixed-point inference of deep neural networks. arXiv 2019, arXiv:1903.08066.

- Di, S.; Cappello, F. Fast error-bounded lossy hpc data compression with sz. In Proceedings of the 2016 IEEE International Parallel and Distributed Processing Symposium (IPDPS), Chicago, IL, USA, 23–27 May 2016; pp. 730–739.

- Tao, D.; Di, S.; Chen, Z.; Cappello, F. Significantly improving lossy compression for scientific data sets based on multidimensional prediction and error-controlled quantization. In Proceedings of the 2017 IEEE International Parallel and Distributed Processing Symposium (IPDPS), Orlando, FL, USA, 29 May–2 June 2017; pp. 1129–1139.

- Liang, X.; Di, S.; Tao, D.; Li, S.; Li, S.; Guo, H.; Chen, Z.; Cappello, F. Error-controlled lossy compression optimized for high compression ratios of scientific datasets. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 438–447.

- Lindstrom, P.; Isenburg, M. Fast and efficient compression of floating-point data. IEEE Trans. Vis. Comput. Graph. 2006, 12, 1245–1250.

- Diffenderfer, J.; Fox, A.L.; Hittinger, J.A.; Sanders, G.; Lindstrom, P.G. Error analysis of zfp compression for floating-point data. SIAM J. Sci. Comput. 2019, 41, A1867–A1898.

- SDRBench. Scientific Data Reduction Benchmarks. Available online: http://sdrbench.github.io (accessed on 1 July 2021).

- Sun, G.; Kang, S.; Jun, S.W. BurstZ: A bandwidth-efficient scientific computing accelerator platform for large-scale data. In Proceedings of the 34th ACM International Conference on Supercomputing, Barcelona, Spain, 29 June–2 July 2020; pp. 1–12.

- Choi, H.; Lee, J.; Sung, W. Memory access pattern-aware DRAM performance model for multi-core systems. In Proceedings of the (IEEE ISPASS) IEEE International Symposium on Performance Analysis of Systems and Software, Austin, TX, USA, 10–12 April 2011; pp. 66–75.

- Cuppu, V.; Jacob, B.; Davis, B.; Mudge, T. A performance comparison of contemporary DRAM architectures. In Proceedings of the 26th annual international symposium on Computer architecture, Atlanta, GA, USA, 1–4 May 1999; pp. 222–233.

- Radiona. ULX3S. Available online: https://radiona.org/ulx3s/ (accessed on 1 April 2021).

- Shah, D.; Hung, E.; Wolf, C.; Bazanski, S.; Gisselquist, D.; Milanovic, M. Yosys+ nextpnr: An open source framework from verilog to bitstream for commercial fpgas. In Proceedings of the 2019 IEEE 27th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), San Diego, CA, USA, 28 April–1 May 2019; pp. 1–4.

- Ehliar, A. Area efficient floating-point adder and multiplier with IEEE-754 compatible semantics. In Proceedings of the 2014 International Conference on Field-Programmable Technology (FPT), Shanghai, China, 10–12 December 2014; pp. 131–138.

- Roesler, E.; Nelson, B. Novel optimizations for hardware floating-point units in a modern FPGA architecture. In International Conference on Field Programmable Logic and Applications; Springer: Berlin/Heidelberg, Germany, 2002; pp. 637–646.

- Zhao, K.; Di, S.; Lian, X.; Li, S.; Tao, D.; Bessac, J.; Chen, Z.; Cappello, F. SDRBench: Scientific Data Reduction Benchmark for Lossy Compressors. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 2716–2724.

- Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 1 April 2021).

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

- Lattice Semiconductor. Lattice Diamond Software. Available online: https://www.latticesemi.com/latticediamond (accessed on 1 April 2021).

- Zhang, C.; Li, P.; Sun, G.; Guan, Y.; Xiao, B.; Cong, J. Optimizing fpga-based accelerator design for deep convolutional neural networks. In Proceedings of the 2015 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2015; pp. 161–170.

| Module | Slices (% of device) | DP16KD BRAMs (% of device) | MULT18X18D Multipliers (% of device) |
|---|---|---|---|
| 1× PE | 2.7 K (6%) | 11 (5%) | 1 (0.5%) |
| 1× Decompressor | 2.8 K (6%) | 0 (0%) | 0 (0%) |
| 1× Compressor | 3 K (7%) | 0 (0%) | 0 (0%) |
| Shell | 2 K (5%) | 3 (1%) | 0 (0%) |
| Total | 32 K (76%) | 91 (43%) | 8 (5%) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lim, S.-M.; Jun, S.-W. MobileNets Can Be Lossily Compressed: Neural Network Compression for Embedded Accelerators. Electronics 2022, 11, 858. https://doi.org/10.3390/electronics11060858
