1. Introduction

When successful, Denial-of-Service (DoS) attacks may stop legitimate users from accessing a specific network resource such as a web server [

1]. Distributed DoS (DDoS) attack [

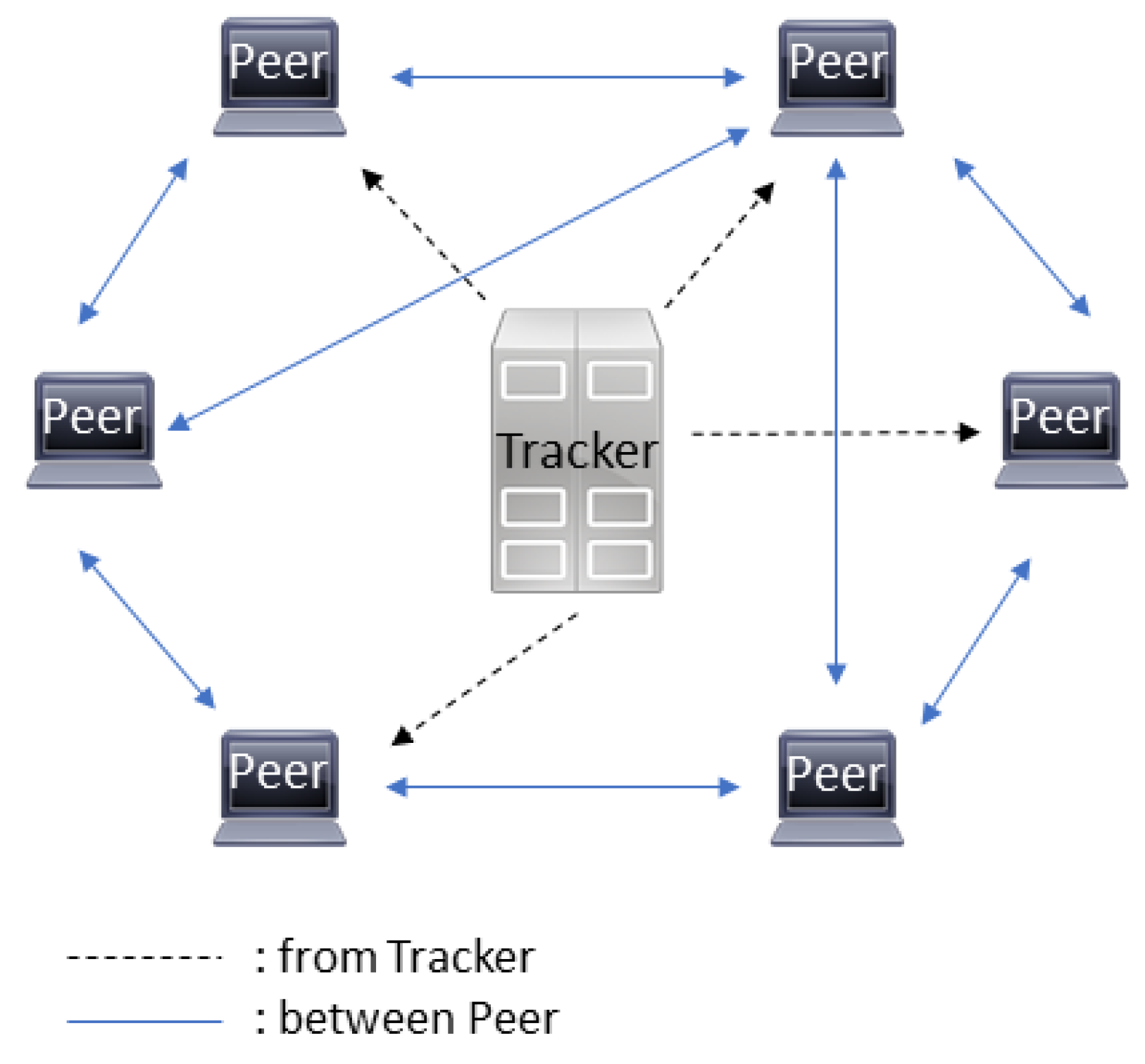

2] is more effective because the attack originates from multiple sources, is more difficult to block, and is more effective in data load. Index poisoning of a torrent file is a type of DDoS attack that uses the BitTorrent protocol. Peer-to-peer file-sharing systems are still the most popular method for any type of content sharing over the internet, especially using the BitTorrent protocol. Like any other technology using the internet, it is vulnerable to attacks, suffering from different kinds of denial-of-service attacks, such as query flooding and pollution [

3]. For example, in the content pollution attack, a malicious user publishes a large number of decoys (same or similar meta-data) so that queries of a given content return predominantly fake/corrupted copies [

4]. This type of attack has been used by media corporations to fight back against their copyrighted material being shared, inserting corrupted files, and making those copies useless. Examples include HBO with the TV show Rome in 2005 and MediaDefender with the movie Sicko in 2007. This work analyses the impact of a flooding attack using torrent index poisoning and aims to enlighten and provide helpful information on mitigating a DDoS attack. It explores reverse proxy features, which provide excellent protection to mitigate these attacks when adequately applied and tuned. The document is organized as follows: After this Introduction, the peer-to-peer and BitTorrent protocols are described in

Section 2. Some types of attacks are presented in

Section 3. Next, in

Section 4, the case study is described and the kind of measures that were used to mitigate the attack. The results are presented in

Section 5. The Discussion is in

Section 6. Finally,

Section 7 gives the Conclusions.

4. Case Study—Attack on a Higher Education Institution

The institution in the case study has over 3500 users, including students and staff. Due to the SARS-CoV-2 pandemic, an e-learning Moodle cluster solution was implemented [11]. To meet the requirements of distance learning, the system was built on the principles of high availability, high performance, and load balancing, as shown in Figure 4. Many of the institution’s teachers had almost one and a half years of experience implementing and using Moodle for online exams and student evaluation. During this time, the system proved to be robust, even during exams with a high number of students, several times with over 500 students simultaneously. On the afternoon of 8 September 2021, while students were taking an exam, an attack hit the network.

In the middle of the afternoon, the network became unresponsive: all connections to the outside were extremely slow or timed out, and the Moodle cluster behaved the same way. Given the way the cluster was designed, two main points were severely affected. First, the perimeter firewalls could not process the high volume of incoming requests and became unresponsive via the management console. Second, the reverse proxy cluster was also unable to respond to all requests (shown in the red area in Figure 4).

4.1. Perimeter Firewalls

The firewall cluster protecting the outside border of the institution was composed of two firewalls configured in high availability. Regular daily traffic is usually around 30 to 50 Mbps, inbound and outbound. As shown in Figure 5, during the attack, the incoming traffic was as high as 800 Mbps for several hours, which is at least 16 times the normal traffic of the institution (800 Mbps against a usual maximum of about 50 Mbps).

4.2. Reverse Proxy Cluster

All incoming traffic to the web servers passes through the firewalls and is directed to the cluster of reverse proxy servers running HAProxy. The cluster is composed of three virtual servers running on a VMware ESXi cluster in high availability. Each of the reverse proxy servers is configured with a different priority level, making one the master, another the first backup, and the last one the second backup. Each node runs the Keepalived software, which uses the Virtual Router Redundancy Protocol (VRRP) and sends multicast messages using IP protocol number 112 [11]. Keepalived checks every two seconds whether HAProxy is running and messages the other nodes.

The high number of requests hitting the master resulted in warnings on ESXi for CPU and memory usage (Figure 6), even on servers with 16 cores and 16 GB of RAM. The master role never moved to one of the backups because the server and the proxy service were still up and running; they were simply unable to provide the service.
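The reason the master role never moved can be illustrated with a small model. The Python sketch below is a deliberate simplification and not Keepalived's actual implementation: the node names and priority values are hypothetical, and the election simply picks the highest-priority node whose process-liveness check passes.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str               # hypothetical node name
    priority: int           # higher priority wins the election (illustrative values)
    process_running: bool   # what a "is HAProxy running?" check observes
    serving_requests: bool  # what clients actually experience


def elect_master(nodes):
    """Return the highest-priority node whose liveness check passes, or None."""
    healthy = [n for n in nodes if n.process_running]
    return max(healthy, key=lambda n: n.priority) if healthy else None


nodes = [
    # Overloaded master: the process is alive, but it cannot keep up with requests.
    Node("proxy1", 150, process_running=True, serving_requests=False),
    Node("proxy2", 100, process_running=True, serving_requests=True),
    Node("proxy3", 50, process_running=True, serving_requests=True),
]

master = elect_master(nodes)
print(master.name, "serving:", master.serving_requests)
```

In this model the overloaded node keeps the master role because the check only looks at whether the process is alive. A check that also measured responsiveness would behave differently, but that is outside what the case study describes.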

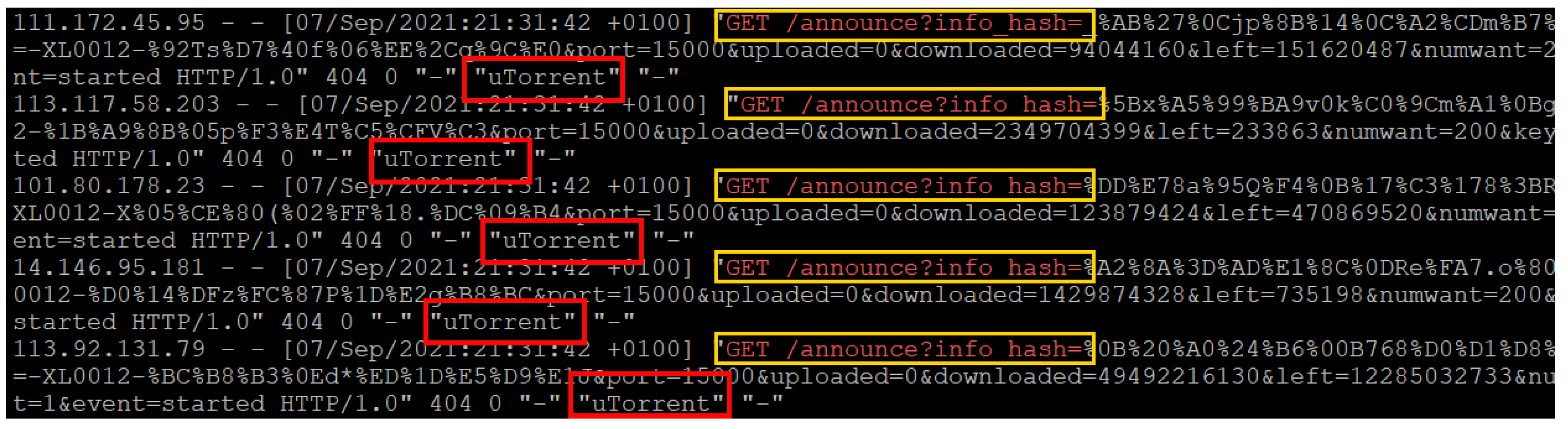

While accessing the reverse proxy server logs for analysis, three things became clear. First, the volume of requests sent to the servers was so large that the log could not be read with a tail command because of the speed at which the text scrolled. Second, all the requests being sent to the server had the path “/announce/” with an info_hash query string in the URL, as shown in Figure 7 with yellow boxes. Third, the user agent accessing the URL did not identify itself as an expected browser, such as Firefox, Chrome, or Edge, but as the BitTorrent client uTorrent, as shown in Figure 7 with red boxes.
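The three signals described above (the announce path, the info_hash parameter, and the BitTorrent user agent) can be combined into a simple check. The following Python sketch assumes the request target and the user-agent string have already been extracted from a log line; the function name and the sample values are hypothetical, since the exact log format of Figure 7 is not reproduced here.

```python
from urllib.parse import parse_qs, urlsplit


def looks_like_announce(request_target: str, user_agent: str) -> bool:
    """Heuristic: True when a request resembles a BitTorrent tracker announce."""
    parts = urlsplit(request_target)
    # Accept both "/announce" and "/announce/".
    on_announce_path = parts.path.rstrip("/") == "/announce"
    # The tracker protocol passes the torrent identifier as "info_hash".
    has_info_hash = "info_hash" in parse_qs(parts.query, keep_blank_values=True)
    # uTorrent and similar clients name themselves in the user agent.
    torrent_client = "torrent" in user_agent.lower()
    return on_announce_path and has_info_hash and torrent_client


# Illustrative inputs only, not taken from the institution's logs.
samples = [
    ("/announce/?info_hash=%12%34&peer_id=abc&port=6881", "uTorrent/3.5.5"),
    ("/PT/Default.aspx", "Mozilla/5.0 (X11; Linux x86_64) Firefox/92.0"),
]
for target, agent in samples:
    print(target, "->", looks_like_announce(target, agent))
```

A check of this kind is only useful for counting and triage during log analysis; it does not block anything by itself.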

This attack continued for several days. The reverse proxy logs are kept to generate charts with the GoAcess-webstat tool, which the institution has configured to automatically generate statistics for all websites. The regular size of the compressed log file for each day was between 300 and 500 KB (shown in Figure 8 in the yellow box). The huge number of requests received during the attack resulted in files of over 300 megabytes. As the log files grew because of the attack, the tool was unable to process them, and the application crashed.

A deeper analysis of the log file sizes for this work revealed information that was unknown at the time. The attack happened again in October, in two different waves. In the list of the ten biggest log files ever recorded for the institution website (Figure 9), all are from October: eight files were between 700 and 950 megabytes, another was near 1.5 gigabytes, and the last one was over 4 gigabytes but uncompressed. This last file was left uncompressed because the log rotation process (the Linux logrotate utility) could not compress the existing file on rotation due to a lack of disk space. This was another consequence of the attack: the disks of the reverse proxy servers filled up with web logs.

4.3. Accepting and Validating the Client Requests

This type of attack relies on the target IP address or server name. The IP address is often registered on a rogue or malicious DNS server to disguise the target in the torrent file. In this case, the IPv4 address of the institutional website was used, so the attackers could list this IP address directly or use a fake server name, for example, server100.trackerlist.something, that resolves to the same IP address. The core requirement is therefore that the server should only accept requests addressed to valid websites that it is actually hosting [12].

SNI—Server Name Indication

Transport Layer Security (TLS) is a cryptographic protocol designed to provide communications security over a computer network, and it supports an extension called Server Name Indication (SNI) [13]. Nowadays, one web server may host multiple websites on a single IP address. When each website has its own SSL certificate, the server must present the correct certificate to the client. SNI is an extension of the TLS/SSL protocol, which is used by HTTPS [14]. It is included in the TLS/SSL handshake so that the client receives the correct SSL certificate for the website it is trying to access. With this extension, the client specifies the hostname or domain name of the website during the TLS handshake rather than when the HTTP connection opens after the handshake negotiation. As a result, this verification is completed at the very beginning of the connection.

4.4. HAProxy

HAProxy [15], which stands for High Availability Proxy, is a popular open-source TCP/HTTP load balancer and proxying solution. Its most common use is to improve the performance and reliability of a server environment by distributing the workload across multiple servers (web servers, applications, and databases). It is used in many high-profile environments, including GitHub, Imgur, Instagram, and Twitter [16]. The configuration of HAProxy is based on defining the interfaces that receive requests (frontends) and those that connect to internal servers (backends), so at least one frontend and one backend must be defined. Since version 1.8, HAProxy has supported SNI and SNI health checks. As a reverse proxy server, HAProxy can work in HTTP or TCP mode, and both may be used with SNI [17].

4.4.1. HAProxy Mode HTTP

The most usual scenario implemented with HAProxy is shown in Figure 10, with HAProxy acting as a reverse proxy server. The clients connect to HAProxy, which decides whether a connection to an internal server is made. The clients can only see the perimeter server, which acts as the web server. In this mode, HAProxy operates at layer 7 towards the backend servers. Operating in HTTP mode, HAProxy can extract the SNI (ssl_fc_sni) from the request or the host header information (hdr(host)) to filter the incoming session and route it to the proper backend server.

4.4.2. HAProxy Mode TCP

When configured in TCP mode (Figure 11), HAProxy operates at layer 4, the TCP layer. In this case, traffic passes through HAProxy to the backends and the HTTPS data are not decrypted, so the reverse proxy server cannot analyze what is being exchanged between the client and the server. Still, at the beginning of the connection, HAProxy can extract the SNI from the TLS/SSL ClientHello message, before the TLS session is even established. This way, it can filter the session using the req_ssl_sni value. Because the traffic is not decrypted, neither the ssl_fc_sni indicator nor the host header information (hdr(host)) is a valid option in TCP mode [18].
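To make concrete why the SNI is readable before any decryption takes place, the following Python sketch parses a ClientHello and returns the server name it carries. It is an illustration of the wire format only, not HAProxy's parser: the function names are hypothetical, it assumes the whole ClientHello fits in a single TLS record, and it does not validate the name type. The builder function exists only to produce test data.

```python
import struct


def build_client_hello(hostname: str) -> bytes:
    """Build a minimal ClientHello record with one SNI entry (test data only)."""
    name = hostname.encode("ascii")
    entry = b"\x00" + struct.pack("!H", len(name)) + name        # name_type 0 = host_name
    sni_data = struct.pack("!H", len(entry)) + entry             # server_name_list
    extension = struct.pack("!HH", 0, len(sni_data)) + sni_data  # extension type 0 = server_name
    body = (
        b"\x03\x03"                            # client version field
        + bytes(32)                            # random (zeros are fine for a test)
        + b"\x00"                              # empty session id
        + struct.pack("!H", 2) + b"\x13\x01"   # a single cipher suite
        + b"\x01\x00"                          # one compression method: null
        + struct.pack("!H", len(extension)) + extension
    )
    handshake = b"\x01" + len(body).to_bytes(3, "big") + body    # handshake type 1 = ClientHello
    return b"\x16\x03\x01" + struct.pack("!H", len(handshake)) + handshake


def extract_sni(record: bytes):
    """Return the SNI host name from a ClientHello record, or None if absent."""
    # Record type 0x16 = handshake; handshake type 0x01 = ClientHello.
    if len(record) < 9 or record[0] != 0x16 or record[5] != 0x01:
        return None
    pos = 9 + 2 + 32  # skip record header, handshake header, version, random
    try:
        pos += 1 + record[pos]                                   # session id
        pos += 2 + int.from_bytes(record[pos:pos + 2], "big")    # cipher suites
        pos += 1 + record[pos]                                   # compression methods
        end = pos + 2 + int.from_bytes(record[pos:pos + 2], "big")
        pos += 2
        while pos + 4 <= min(end, len(record)):
            ext_type, ext_len = struct.unpack("!HH", record[pos:pos + 4])
            pos += 4
            if ext_type == 0:  # server_name extension
                name_len = int.from_bytes(record[pos + 3:pos + 5], "big")
                return record[pos + 5:pos + 5 + name_len].decode("ascii")
            pos += ext_len
    except (IndexError, UnicodeDecodeError, struct.error):
        return None
    return None


print(extract_sni(build_client_hello("www.institution.example")))
```

The host name travels in clear text inside the first handshake message, which is why a layer 4 proxy can filter on it without holding the certificate's private key.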

4.4.3. HAProxy Configuration

In HAProxy, ssl_fc_sni and req_ssl_sni are used with Access Control Lists (ACLs). The objective is to accept only traffic addressed to a server with a valid name, for example, the address of the institutional website. The configuration file for HAProxy contains several sections. When HAProxy is configured as a reverse proxy, two sections have to be defined: frontend and backend. An instance of HAProxy may have multiple frontends and backends. The frontend section has a set of rules that define which client requests should be accepted and how to forward them to the backends. The backend section defines the internal server or servers that will receive the forwarded requests. Although HAProxy is configured in HTTP mode, a small portion of the configuration is related to TCP. The HAProxy documentation states that, if content switching is needed, it is recommended to first wait for a complete client hello (type 1) [19].

Listing 1 is a portion of the frontend and backend configuration used to mitigate the attack. If the SNI matches the name of the institutional website, HAProxy will proxy the request to the backend server. If the SNI does not match, the request is assumed to be an invalid or illegitimate access and should be discarded. In this case, HAProxy will reject the TCP request. This option closes the connection without a response once a session has been created but before the HTTP parser has been initialized.

Listing 1. HAProxy configuration for the institutional website address (relevant parts only).

![Electronics 12 00165 i001]()
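The accept-or-reject decision described in this subsection can also be expressed as a few lines of logic. The Python sketch below is not a translation of the HAProxy configuration; it only models the rule under stated assumptions: the allowed host name and the returned labels are hypothetical, and a missing SNI (for example, a client connecting directly by IP address) is treated as a mismatch.

```python
# Hypothetical allowlist: the names of the websites this proxy actually serves.
ALLOWED_SNI = {"www.institution.example"}


def decide(sni):
    """Return the action taken for a connection, given the SNI it presented."""
    if sni is not None and sni.lower() in ALLOWED_SNI:
        return "proxy_to_backend"
    # Unknown name or no name at all: drop without sending any response.
    return "reject"


for presented in ["www.institution.example", "server100.trackerlist.something", None]:
    print(presented, "->", decide(presented))
```

The fake tracker name resolves to the same IP address as the real website, yet it never matches the allowlist, so the connection is discarded before any HTTP processing happens.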

5. Results

The HAProxy Stats page is a valuable resource for real-time information. However, this page was unresponsive and impossible to access during the attack. Another resource is GoAcess-webstat, a tool that generates statistics that could be useful for understanding the impact on the firewall and the servers. Unfortunately, the size of the logs made the application useless, since it could not process the amount of information recorded in the log files. The tool has another limitation: it can only show statistics from the last 12 months.

5.1. Hits and Requests—Year 2020

When studying the website traffic during 2020, some patterns stand out and deserve careful attention. Traffic peaks occurred during the exam periods at the end of each semester (February and June), in September, and at the beginning of October, when students checked their class schedules. There were four other access peaks during the year, but none exceeded the ones in these periods.

On 6 October, there were 7914 visitors, while the average for the year was 1590. On 2 October, there were 126,232 hits, while the average was 28,748 (Figure 12). The tool also reports other indicators worth pointing out:

The most requested URL/File was /PT/Default.aspx with over 546k hits (4.57% of all accesses).

The total sum of 404 requests was around 562k hits, with 380 MB in responses.

In the context of this type of attack, the impact of 404 status codes [20] is extremely important to understand.

In the year 2020, a total of 1,021,899 requests resulted in a 404 error, which is 8.54% of all requests of that year, as shown in Figure 13.

5.2. Hits and Requests until August 2021

On 6 January, there were 20,275 visitors, whereas the average that year was 1559, compared with 1590 in 2020. The 22nd and 23rd of January were record days in the number of hits, with 142,357 and 203,408, respectively, while the average was 27,913, compared with 28,748 in 2020 (Figure 14).

The chart with the accesses until September 2021 is consistent with that of the year before, and the results are similar to those of 2020. The share of 404 requests also remains stable, at 8.11% of all requests for that year, as shown in Figure 15.

5.3. Hits and Requests—September 2021

With the attack, the record number of hits on the website dwarfed the remaining 11 months, while the maximum number of visitors stayed the same, never exceeding 21 thousand visitors per day. The number of hits reflects the type of attack: the upper limit of the left axis changes from two hundred thousand to five million, as shown in Figure 16.

There were over 5 million hits on the 9th of September. The seven days with the most hits were, in descending order, the 9th, 8th, 7th, 10th, 11th, 12th, and 13th of September.

The 404 requests skyrocketed to over fifteen million, with the share of this type of request rising from 8.54% to 46.89%, as shown in Figure 17. The number of 404 requests is similar to the number of valid requests (2xx) of the last year, an increase of almost 40 percentage points in twelve months.

6. Discussion

Deep log analysis is extremely helpful in understanding the nature of a DDoS attack. Without this knowledge, stopping, blocking, or at least minimizing the impact of this type of attack may be impossible. The design of the torrent network, especially of the websites that share torrent files and magnet links, makes it extremely difficult to report and block files with invalid announce URLs. If this were possible, these kinds of attacks could be blocked at the source, but unfortunately, most of these websites share illegal or copyrighted software and movies. Using open-source reverse proxy software is a reasonable solution for server protection. Other open-source defense mechanisms, such as iptables firewalls, can improve this protection by blocking incoming attackers, limiting the number of connections during a defined period of time, or analyzing the request type or content. There are also open-source web application firewalls, such as ModSecurity, some of which may be used as modules with reverse proxy solutions. In this work, configuring HAProxy with Server Name Indication detection was the approach found to minimize the impact of this kind of attack.

This work implements a solution using HAProxy, but other reverse proxy solutions may be used to obtain the same result. As shown in Figure 4, the network was already designed around reverse proxy servers. In the same scenario, NGINX can be used as a reverse proxy server to block requests to the URL /announce.

NGINX is more than a reverse proxy solution. It is also a web server, meaning that the same configuration may be used on a web server (Listing 2). Other web servers, such as the Apache web server, use a similar configuration and can be used for the same purpose (Listing 3).

Listing 2. NGINX configuration to block the relative URL /announce (relevant parts only).

![Electronics 12 00165 i002]()

Listing 3. Apache configuration to block the relative URL /announce (relevant parts only).

![Electronics 12 00165 i003]()

Even IIS, which is a different solution from the Linux alternatives, has a request filtering feature that allows denying access to specific URLs, returning an HTTP Error 404.5—URL Sequence denied error message.

The statistics charts show that another essential element should be changed. The institution uses a master page template for its website. This template is used across the entire website, including the error pages. This is typical practice: web developers create a single template page and use it as the base for all others. The master page includes the files required by almost all pages, including CSS style files, JavaScript files, font files, etc. Most of these elements are useless for a static web page such as a 404 error page. Using the master page for the error page means that, for each attack request, the server replies with all the included files (CSS, JavaScript), spending bandwidth and server resources on useless information. This example shows that error pages should be trimmed to the bare minimum to minimize the impact of such attacks.

Table 1 shows the size of the initial 404 web page and its current size. The same table compares them with the default 404 pages of other reverse proxy servers or browsers.

The 404 page was changed to its current version at the end of 2018, so the original version had no impact on this attack. Still, taking into account the values in Table 1, the size of the web page could have been optimized further. There were over 13.5 million 404 requests in September. If the original version had still been online, the total data downloaded from the web server would have been 443 GiB. With the current version, the total amounted to 26.9 GiB, while with the default NGINX or HAProxy page, the total would have been 2.74 GiB. That is a difference of about 24.2 GiB between the current version and an optimized version in a single attack.

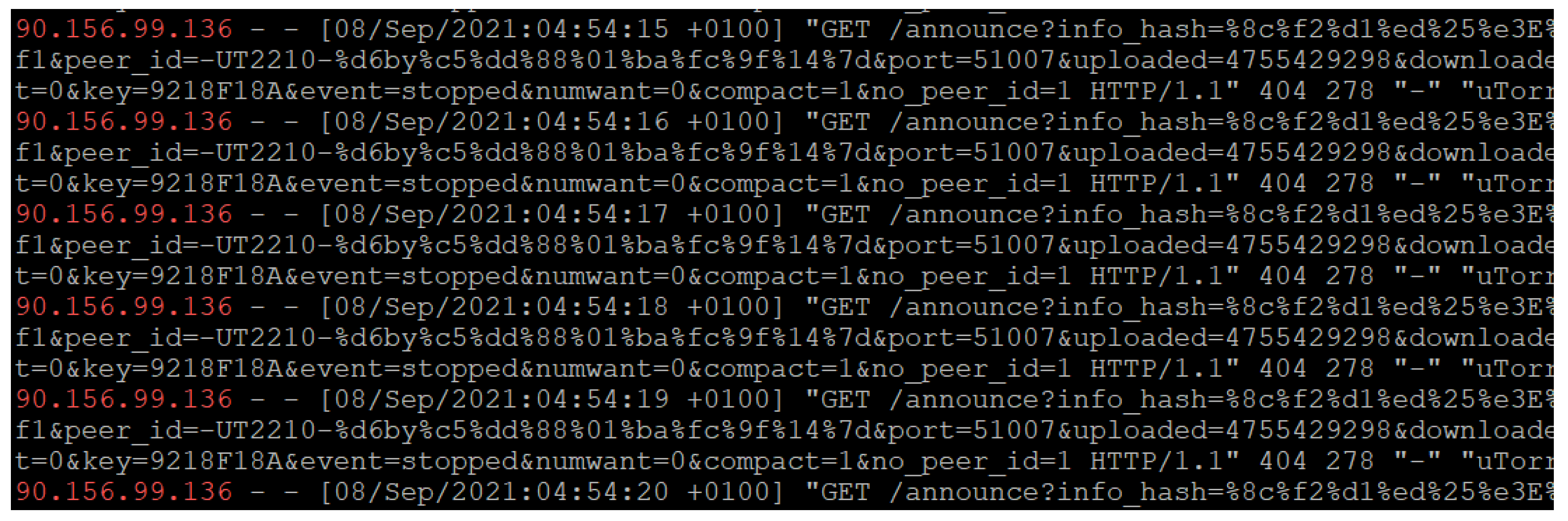
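The totals quoted above follow from multiplying the number of 404 responses by the size of each response. The short Python sketch below reproduces that arithmetic using only the figures given in the text (13.5 million requests and the three totals); the per-response sizes it prints are derived from those totals and are not the values listed in Table 1.

```python
GIB = 1024 ** 3
REQUESTS_404 = 13_500_000  # 404 requests in September, as stated in the text

# Totals quoted in the text, in GiB.
quoted_totals = {
    "original page": 443.0,
    "current page": 26.9,
    "default NGINX/HAProxy page": 2.74,
}


def implied_bytes_per_response(total_gib, requests=REQUESTS_404):
    """Average bytes per 404 response implied by a quoted total."""
    return total_gib * GIB / requests


def total_transfer_gib(bytes_per_response, requests=REQUESTS_404):
    """Total data sent, in GiB, for a given response size."""
    return bytes_per_response * requests / GIB


for label, total in quoted_totals.items():
    size = implied_bytes_per_response(total)
    print(f"{label}: about {size:,.0f} bytes per response")

saving = quoted_totals["current page"] - quoted_totals["default NGINX/HAProxy page"]
print(f"current page versus default page: {saving:.2f} GiB saved")
```

Under these figures, the implied response sizes are roughly 34 KiB, 2 KiB, and 220 bytes, which shows how a difference of a couple of kilobytes per error page grows into tens of gibibytes over millions of requests.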

On the server exposed to the internet, a firewall was configured. The server was running the Debian Linux operating system with Uncomplicated Firewall (UFW), allowing only HTTP, HTTPS, and SSH. An analysis of the log files shows that the same source makes a new request every second, as shown in Figure 18 in red text.

UFW uses iptables and may be configured to limit incoming network connections to a certain number per IP and port or to limit concurrent connections. The institution had a cluster of firewalls protecting the perimeter, but server-level protection should also exist. In this case, the configuration in Listing 4 should have been added to the file /etc/ufw/before.rules.

Listing 4. Limiting the number of connections per IP with ufw.

![Electronics 12 00165 i004]()
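The idea behind a per-IP connection limit can be sketched independently of any firewall syntax. The Python example below is a conceptual model and not how iptables or UFW implement the feature: the class name, the limit of three connections, and the ten-second window are all hypothetical values chosen for illustration.

```python
from collections import defaultdict, deque


class PerIpLimiter:
    """Allow at most max_hits new connections per source IP within a sliding window."""

    def __init__(self, max_hits: int, window_seconds: float):
        self.max_hits = max_hits
        self.window = window_seconds
        self.hits = defaultdict(deque)  # source IP -> timestamps of accepted connections

    def allow(self, ip: str, now: float) -> bool:
        recent = self.hits[ip]
        # Forget accepted connections that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.max_hits:
            return False  # over the limit: drop the new connection
        recent.append(now)
        return True


# A source sending one request per second, as observed in the logs.
limiter = PerIpLimiter(max_hits=3, window_seconds=10.0)
decisions = [limiter.allow("198.51.100.7", float(t)) for t in range(5)]
print(decisions)
```

With these illustrative thresholds, a source that sends one request per second has most of its connections dropped, while a source that connects only occasionally is unaffected.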

There are also other firewall solutions for Linux distributions, such as Config Server Firewall (CSF) or Firewalld, and both allow similar configurations.

7. Conclusions

A configuration similar to the one presented in this work, applied on a reverse proxy server, can partially prevent a torrent index poisoning attack. After this implementation, the attack cannot reach the internal servers, only the perimeter firewall and the reverse proxy. The connections are closed and discarded at the reverse proxy server, and no reply is sent back to the user. This way, the impact on these two elements is minimized, since no connection tracking from the outer firewall to the internal web servers is required. This type of tracking is one of the most demanding features in terms of memory and CPU for network devices and servers.

As with many other DDoS attacks, all that systems administrators can do is try to minimize the impact on their services. Still, in our case study, the perimeter firewalls were almost unresponsive via their administration pages. Furthermore, any internal services open to the internet were practically unreachable, with many timeouts. The implemented solution uses only open-source software, at no cost to the institution.

The size of the 4xx and 5xx error pages may influence the impact of the attack. Using small, optimized error pages may result in tens of gigabytes not being sent from the web servers to the attackers. Since one DDoS attack may result in millions of requests, even small changes make a significant difference.

The lack of protection on the server firewall was an error. The most popular Linux distributions allow the installation of firewall tools, such as UFW or CSF for Debian-based or Ubuntu-based systems. Had one of these tools been used with a limit on the number of connections per IP or on concurrent connections, the attack could have been mitigated on the reverse proxy and web servers.