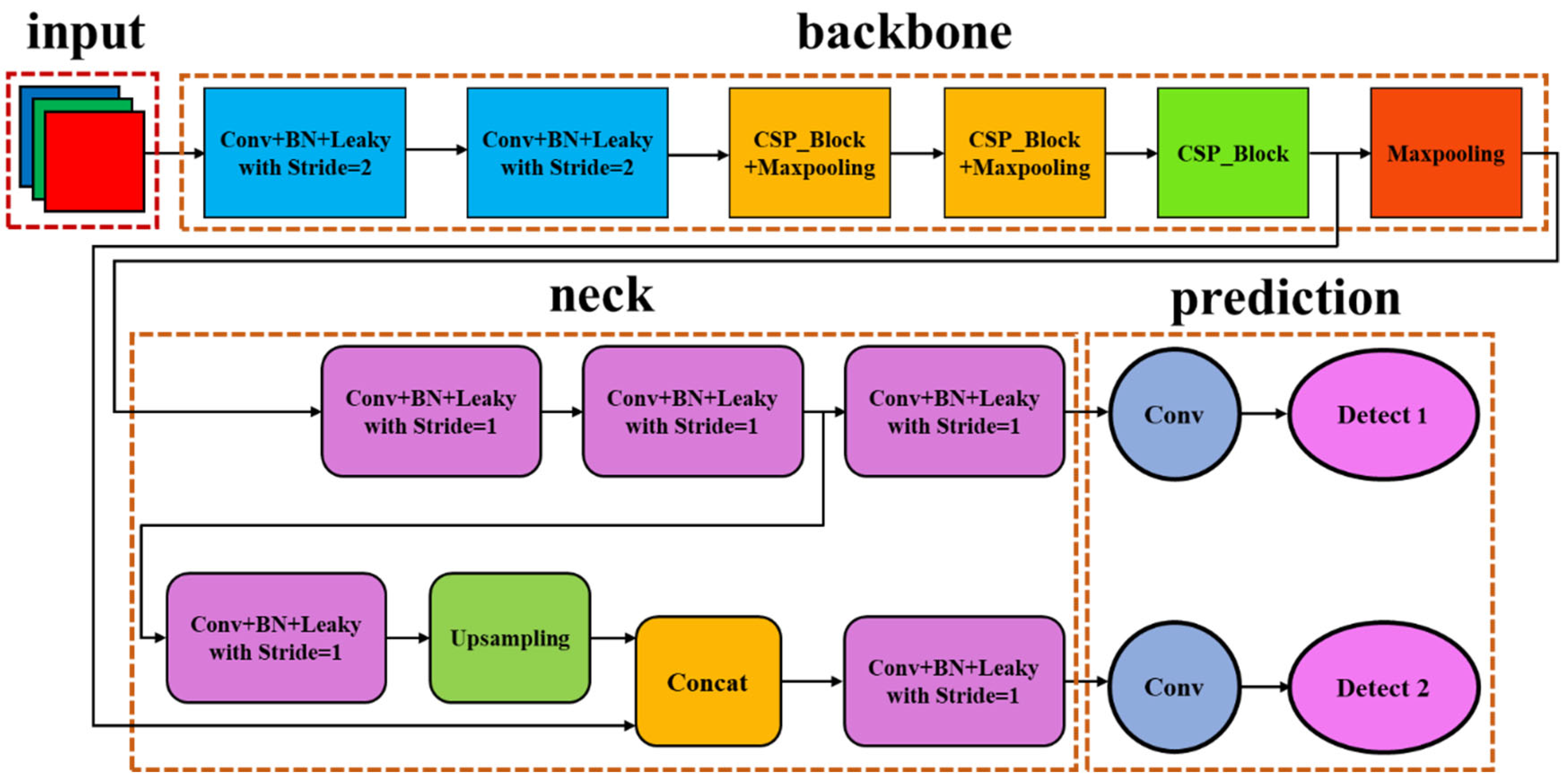

Figure 1.

YOLOv4-tiny architecture. (Different colors indicate different functions performed within a single block.)

Figure 2.

YOLOv5 architecture. (Different colors indicate different functions performed within a single block.)

Figure 3.

YOLOv7 architecture. (Different colors indicate different functions performed within a single block.)

Figure 4.

ResNet18 architecture. (Different colors indicate different functions performed within a single block.)

Figure 5.

Onboard cameras surrounding a JetRacer. (a) Three front cameras, labeled #1, #2, and #3. (b) Left camera, labeled #4. (c) Two rear cameras, labeled #5 and #6. (d) Right camera, labeled #7.

Figure 6.

Planar road map. Yellow lines separate lanes running in different directions, and white lines mark the road edges.

Figure 7.

Planar road map with traffic signs.

Figure 8.

Data collection using the front-end camera and handle controller. (a) Front-end camera; (b) handle controller.

Figure 9.

Traffic signs and roads in the training phase. (a) Speed limit sign. (b) Stop sign. (c) Traffic light.

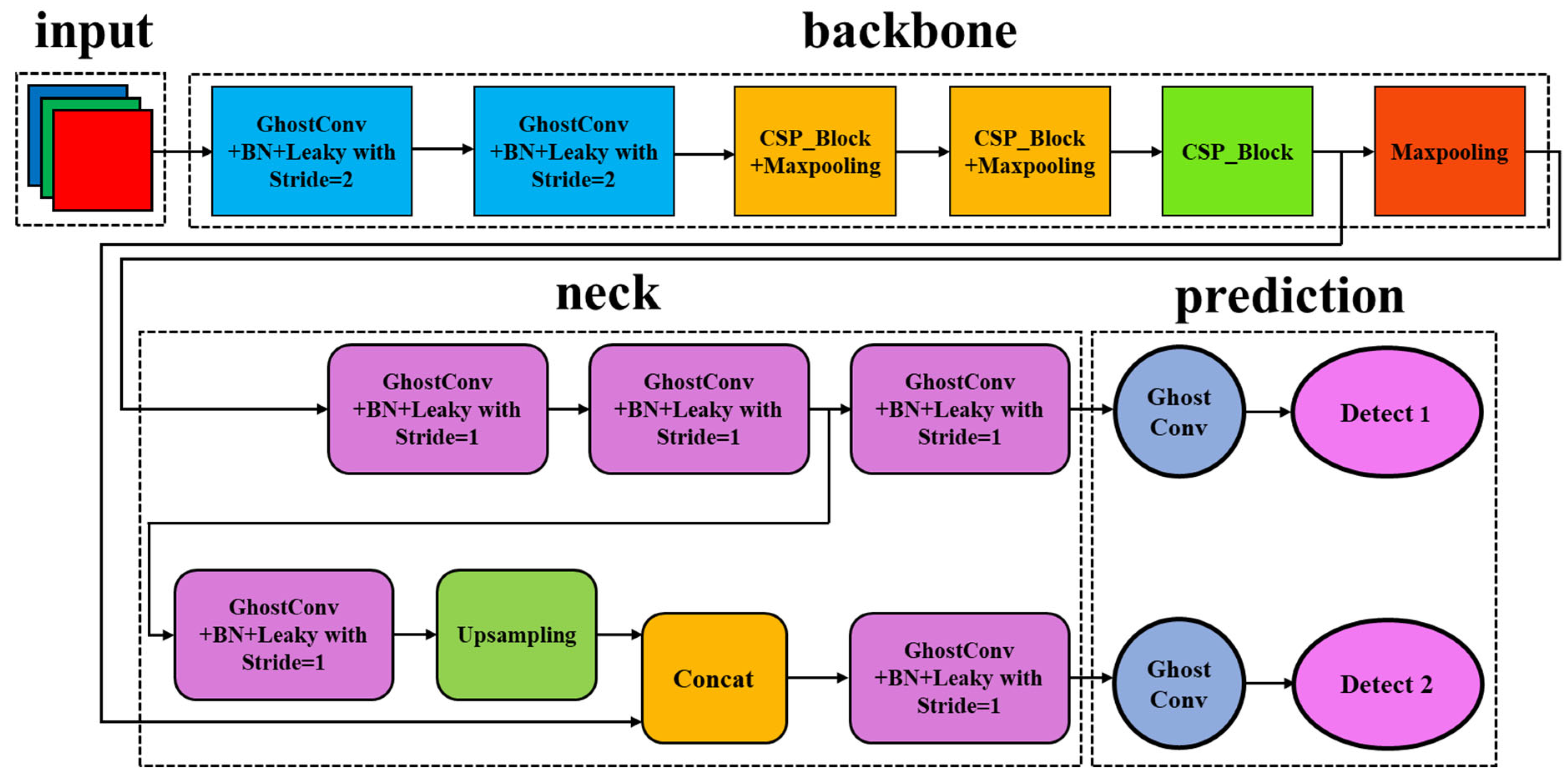

Figure 10.

The architecture of the LW-YOLOv4-tiny model. (Different colors indicate different functions performed within a single block.)

Figure 11.

The architecture of the LW-ResNet18 model. (Different colors indicate different functions performed within a single block.)

Figure 12.

Execution flow of ghost convolution.

Figure 13.

Pixel-wise average pooling.

Figure 14.

Real-time object detection using LW-YOLOv4-tiny. (a) Real-time image of model car one on the outer lane. (b) Real-time object detection of model car one on the outer lane. (c) Real-time image of model car two on the inner lane. (d) Real-time object detection of model car two on the inner lane.

Figure 15.

Real-time vehicle detection using LW-YOLOv4-tiny. (a) Left camera module. (b) Right camera module.

Figure 16.

Measuring distance between the vehicle and the target object.

Figure 17.

Measuring the distance between two JetRacers using dual cameras. (a) Left camera. (b) Right camera.

Figure 18.

Steering angle prediction using LW-ResNet18.

Figure 19.

PID controller. (a) The architecture of the PID controller; (b) going straight; (c) taking a right turn.

Figure 20.

System diagram of the autonomous driving system.

Figure 21.

Information fusion of self-driving.

Figure 22.

A green dotted line serving as visual steering assistance.

Figure 23.

Training and validation losses of the LW-YOLOv4-tiny model. (a) Box training loss. (b) Objectness training loss. (c) Classification training loss. (d) Box validation loss. (e) Objectness validation loss. (f) Classification validation loss.

Figure 24.

Training and validation losses of the YOLOv4-tiny model. (a) Box training loss. (b) Objectness training loss. (c) Classification training loss. (d) Box validation loss. (e) Objectness validation loss. (f) Classification validation loss.

Figure 25.

Training and validation losses of the YOLOv5s model. (a) Box training loss. (b) Objectness training loss. (c) Classification training loss. (d) Box validation loss. (e) Objectness validation loss. (f) Classification validation loss.

Figure 26.

Training and validation losses of the YOLOv5n model. (a) Box training loss. (b) Objectness training loss. (c) Classification training loss. (d) Box validation loss. (e) Objectness validation loss. (f) Classification validation loss.

Figure 27.

Training and validation losses of the YOLOv7 model. (a) Box training loss. (b) Objectness training loss. (c) Classification training loss. (d) Box validation loss. (e) Objectness validation loss. (f) Classification validation loss.

Figure 28.

Training and validation losses of the YOLOv7-tiny model. (a) Box training loss. (b) Objectness training loss. (c) Classification training loss. (d) Box validation loss. (e) Objectness validation loss. (f) Classification validation loss.

Figure 29.

Training and validation losses of the steering angle prediction models. (a) LW-ResNet18. (b) ResNet18. (c) CNN. (d) Nvidia-CNN.

Figure 30.

Precision–recall curves of the object detection models. (a) LW-YOLOv4-tiny; (b) YOLOv4-tiny; (c) YOLOv5s; (d) YOLOv5n; (e) YOLOv7; (f) YOLOv7-tiny.

Figure 31.

Predicted and actual values of the steering angle prediction models. (a) LW-ResNet18; (b) ResNet18; (c) CNN; (d) Nvidia-CNN.

Table 1.

Parameter setting of PID controller.

| Parameter | | | |
|---|---|---|---|
| Value | 0.06 | 0.0015 | 1.52 |
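
As a rough illustration of how three gains like those in Table 1 enter a discrete PID update, the sketch below assumes the values are, in order, the proportional, integral, and derivative gains. The parameter names are missing from the table header in this copy, so that mapping, the class name `PID`, and the unit time step are all assumptions and not the authors' implementation.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (illustrative sketch only)."""
    kp: float  # proportional gain (assumed to be the first value in Table 1)
    ki: float  # integral gain (assumed to be the second value)
    kd: float  # derivative gain (assumed to be the third value)
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, error: float, dt: float = 1.0) -> float:
        """Return the control output for the current error sample."""
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Gains taken from Table 1 under the assumed (Kp, Ki, Kd) ordering.
pid = PID(kp=0.06, ki=0.0015, kd=1.52)
first = pid.step(1.0)   # error appears: the derivative term dominates
second = pid.step(1.0)  # error unchanged: the derivative term vanishes
print(first, second)
```

With these gains, a sudden change in error produces a strong immediate correction, while a steady error is corrected much more gently.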

Table 2.

Hardware specifications.

| Component | GPU Workstation | Jetson Nano |
|---|---|---|
| GPU | NVIDIA GeForce GTX 1080 Ti | NVIDIA Maxwell architecture with 128 NVIDIA CUDA® cores |
| CPU | Intel(R) Core(TM) i7-7700 CPU @ 3.60 GHz | Quad-core ARM Cortex-A57 MPCore processor |
| Memory | 32 GB | 4 GB 64-bit LPDDR4, 1600 MHz, 25.6 GB/s |
| Storage | 256 GB × 1 (SSD), 1 TB × 1 (HDD) | 16 GB eMMC 5.1 |

Table 3.

Software packages and versions.

| Software | Version |
|---|---|
| LabelImg | 1.8 |
| Anaconda® Individual Edition | 4.9.2 |
| Jupyter Notebook | 6.1.4 |
| TensorFlow | 2.2 |
| TensorRT | 7.2.3 |
| PyTorch | 1.6 |
| JetPack | 4.5 |

Table 4.

Training and inference time of object detection models (unit: s).

| Method | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny |
|---|---|---|---|---|---|---|
| Training | 274 | 296 | 497 | 436 | 2124 | 1314 |
| Inference | 4.98 | 5.32 | 6.64 | 6.48 | 10.68 | 5.75 |

Table 5.

Training and inference time of steering angle prediction models (unit: s).

| Method | LW-ResNet18 | ResNet18 | CNN | Nvidia-CNN |
|---|---|---|---|---|
| Training | 2832 | 2880 | 360 | 364 |
| Inference | 23.79 | 25.18 | 21.27 | 21.56 |

Table 6.

Parameters of object detection models.

| Method | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny |
|---|---|---|---|---|---|---|
| Number of parameters | 3,940,751 | 5,892,596 | 7,043,902 | 1,776,094 | 37,239,708 | 6,036,636 |

Table 7.

Parameters of steering angle prediction models.

| Method | LW-ResNet18 | ResNet18 | CNN | Nvidia-CNN |
|---|---|---|---|---|
| Number of parameters | 6,367,975 | 11,180,161 | 776,289 | 1,872,643 |
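
To make the size of the lightweight variants concrete, the following sketch computes the relative parameter reduction of each LW model against its baseline, using only the counts in Tables 6 and 7. The helper name `reduction_percent` is illustrative.

```python
# Parameter counts copied from Tables 6 and 7.
params = {
    "LW-YOLOv4-Tiny": 3_940_751,
    "YOLOv4-Tiny": 5_892_596,
    "LW-ResNet18": 6_367_975,
    "ResNet18": 11_180_161,
}


def reduction_percent(light: str, base: str) -> float:
    """Percentage of parameters removed when going from `base` to `light`."""
    return 100.0 * (1.0 - params[light] / params[base])


yolo_reduction = reduction_percent("LW-YOLOv4-Tiny", "YOLOv4-Tiny")
resnet_reduction = reduction_percent("LW-ResNet18", "ResNet18")
print(f"LW-YOLOv4-Tiny: {yolo_reduction:.1f}% fewer parameters")
print(f"LW-ResNet18:    {resnet_reduction:.1f}% fewer parameters")
```

By these counts, the lightweight models drop roughly a third (YOLOv4-tiny) and somewhat over two fifths (ResNet18) of the baseline parameters.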

Table 8.

Speed and precision of object detection models.

| Metrics | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny |
|---|---|---|---|---|---|---|
| FPS | 56.1 | 46.8 | 30.4 | 41.7 | 22.9 | 43.1 |
| Precision (%) | 97.3 | 97.2 | 99.1 | 97.7 | 98.9 | 97.0 |

Table 9.

Speed and loss of steering angle prediction models.

| Metrics | LW-ResNet18 | ResNet18 | CNN | Nvidia-CNN |
|---|---|---|---|---|
| FPS | 32.6 | 27.0 | 31.9 | 31.5 |
| MSE | 0.0683 | 0.0712 | 0.0849 | 0.0953 |

Table 10.

FPS of integrated models.

| St. A. P. \ O. D. | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny |
|---|---|---|---|---|---|---|
| Nvidia-CNN | 20.4 | 19.5 | 15.6 | 18.1 | 10.8 | 19.0 |
| CNN | 20.6 | 19.6 | 15.8 | 18.2 | 11.0 | 19.1 |
| ResNet18 | 18.9 | 18.4 | 14.5 | 17.1 | 9.7 | 17.8 |
| LW-ResNet18 | 19.4 | 18.9 | 15.1 | 17.8 | 10.5 | 18.6 |
| Average | 19.8 | 19.1 | 15.3 | 17.8 | 10.5 | 18.6 |

Table 11.

The accuracy of integrated models. Each cell lists (MSE of the St. A. P. model, precision in % of the O. D. model).

| St. A. P. \ O. D. | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny |
|---|---|---|---|---|---|---|
| Nvidia-CNN | (0.0953, 97.3) | (0.0953, 97.2) | (0.0953, 99.1) | (0.0953, 97.7) | (0.0953, 98.9) | (0.0953, 97.0) |
| CNN | (0.0849, 97.3) | (0.0849, 97.2) | (0.0849, 99.1) | (0.0849, 97.7) | (0.0849, 98.9) | (0.0849, 97.0) |
| ResNet18 | (0.0712, 97.3) | (0.0712, 97.2) | (0.0712, 99.1) | (0.0712, 97.7) | (0.0712, 98.9) | (0.0712, 97.0) |
| LW-ResNet18 | (0.0683, 97.3) | (0.0683, 97.2) | (0.0683, 99.1) | (0.0683, 97.7) | (0.0683, 98.9) | (0.0683, 97.0) |

Table 12.

FR of integrated models.

| St. A. P. \ O. D. | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny |
|---|---|---|---|---|---|---|
| Nvidia-CNN | 2.1031 | 2.0103 | 1.6082 | 1.8660 | 1.1134 | 1.9588 |
| CNN | 2.1237 | 2.0206 | 1.6289 | 1.8763 | 1.1340 | 1.9691 |
| ResNet18 | 1.9485 | 1.8969 | 1.4948 | 1.7629 | 1.0000 | 1.8351 |
| LW-ResNet18 | 2.0000 | 1.9485 | 1.5567 | 1.8351 | 1.0825 | 1.9175 |
| Average | 2.0438 | 1.9691 | 1.5722 | 1.8351 | 1.0825 | 1.9201 |
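
The FR values above appear to equal each integrated FPS from Table 10 divided by the lowest FPS in that table (9.7, for ResNet18 with YOLOv7). This relation is inferred from the numbers and is not stated in this section, so the sketch below should be read as a consistency check under that assumption.

```python
# Integrated FPS from Table 10; columns follow the table's O. D. order.
fps = {
    "Nvidia-CNN":  [20.4, 19.5, 15.6, 18.1, 10.8, 19.0],
    "CNN":         [20.6, 19.6, 15.8, 18.2, 11.0, 19.1],
    "ResNet18":    [18.9, 18.4, 14.5, 17.1, 9.7, 17.8],
    "LW-ResNet18": [19.4, 18.9, 15.1, 17.8, 10.5, 18.6],
}

# Assumed normalization: divide by the slowest combination.
min_fps = min(v for row in fps.values() for v in row)
fr = {name: [round(v / min_fps, 4) for v in row] for name, row in fps.items()}

for name, row in fr.items():
    print(f"{name:12s}", row)
```

Under this reading, an FR of 2.0 means the combination runs twice as fast as the slowest pairing.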

Table 13.

PR of integrated models.

| St. A. P. \ O. D. | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny | Average |
|---|---|---|---|---|---|---|---|
| Nvidia-CNN | 1.0031 | 1.0021 | 1.0216 | 1.0072 | 1.0196 | 1.0000 | 1.0089 |
| CNN | 1.1260 | 1.1248 | 1.1468 | 1.1306 | 1.1445 | 1.1225 | 1.1325 |
| ResNet18 | 1.3426 | 1.3412 | 1.3674 | 1.3481 | 1.3647 | 1.3385 | 1.3504 |
| LW-ResNet18 | 1.3996 | 1.3982 | 1.4255 | 1.4054 | 1.4226 | 1.3953 | 1.4078 |
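
The PR values above are consistent, to about three decimal places, with the product of two ratios built from Tables 8 and 9: the largest MSE divided by the model's MSE, times the detector's precision divided by the lowest precision. This formula is reverse-engineered from the tabulated numbers and is not stated in this section; small last-digit differences suggest the published values were computed from rounded intermediates.

```python
# MSE per steering angle prediction model (Table 9).
mse = {"Nvidia-CNN": 0.0953, "CNN": 0.0849, "ResNet18": 0.0712, "LW-ResNet18": 0.0683}

# Precision (%) per object detection model (Table 8).
precision = {
    "LW-YOLOv4-Tiny": 97.3, "YOLOv4-Tiny": 97.2, "YOLOv5s": 99.1,
    "YOLOv5n": 97.7, "YOLOv7": 98.9, "YOLOv7-Tiny": 97.0,
}

worst_mse = max(mse.values())              # 0.0953 (Nvidia-CNN)
worst_precision = min(precision.values())  # 97.0 (YOLOv7-Tiny)


def pr(steering: str, detector: str) -> float:
    """Assumed precision ratio: lower MSE and higher precision both raise it."""
    return (worst_mse / mse[steering]) * (precision[detector] / worst_precision)


pr_lw = pr("LW-ResNet18", "LW-YOLOv4-Tiny")
pr_base = pr("Nvidia-CNN", "YOLOv7-Tiny")
print(round(pr_lw, 4), round(pr_base, 4))
```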

Table 14.

PPI of integrated models.

| St. A. P. \ O. D. | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny | Average |
|---|---|---|---|---|---|---|---|
| Nvidia-CNN | 2.1096 | 2.0145 | 1.6429 | 1.8794 | 1.1352 | 1.9588 | 1.7901 |
| CNN | 2.3913 | 2.2728 | 1.8680 | 2.1213 | 1.2979 | 2.2103 | 2.0269 |
| ResNet18 | 2.6161 | 2.5441 | 2.0440 | 2.3766 | 1.3647 | 2.4563 | 2.2336 |
| LW-ResNet18 | 2.7992 | 2.7244 | 2.2191 | 2.5790 | 1.5400 | 2.6755 | 2.4229 |
| Average | 2.4790 | 2.3890 | 1.9435 | 2.2391 | 1.3344 | 2.3252 | |

Table 15.

PI of integrated models.

| St. A. P. \ O. D. | LW-YOLOv4-Tiny | YOLOv4-Tiny | YOLOv5s | YOLOv5n | YOLOv7 | YOLOv7-Tiny | Average |
|---|---|---|---|---|---|---|---|
| Nvidia-CNN | 1.8584 | 1.7746 | 1.4472 | 1.6556 | 1.0000 | 1.7255 | 1.5769 |
| CNN | 2.1065 | 2.0021 | 1.6455 | 1.8687 | 1.1433 | 1.9471 | 1.7855 |
| ResNet18 | 2.3045 | 2.2411 | 1.8006 | 2.0936 | 1.2022 | 2.1638 | 1.9676 |
| LW-ResNet18 | 2.4658 | 2.3999 | 1.9548 | 2.2718 | 1.3566 | 2.3569 | 2.1343 |
| Average | 2.1838 | 2.1044 | 1.7120 | 1.9724 | 1.1755 | 2.0483 | |
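
Numerically, the last two tables seem to chain together as follows: each PPI entry matches the product of the corresponding FR and PR entries, and each PI entry matches the PPI entry divided by the smallest PPI (1.1352, for Nvidia-CNN with YOLOv7). Both relations are inferred from the tabulated values and are not definitions given in this section. The sketch checks one PPI cell and then rebuilds the PI grid.

```python
# PPI grid copied from Table 14; columns follow the table's O. D. order.
ppi = {
    "Nvidia-CNN":  [2.1096, 2.0145, 1.6429, 1.8794, 1.1352, 1.9588],
    "CNN":         [2.3913, 2.2728, 1.8680, 2.1213, 1.2979, 2.2103],
    "ResNet18":    [2.6161, 2.5441, 2.0440, 2.3766, 1.3647, 2.4563],
    "LW-ResNet18": [2.7992, 2.7244, 2.2191, 2.5790, 1.5400, 2.6755],
}

# Assumed PPI = FR x PR, checked for LW-ResNet18 with LW-YOLOv4-Tiny
# using the tabulated FR (Table 12) and PR (Table 13) values.
ppi_check = round(2.0000 * 1.3996, 4)

# Assumed PI = PPI normalized by the weakest combination.
min_ppi = min(v for row in ppi.values() for v in row)
pi = {name: [round(v / min_ppi, 4) for v in row] for name, row in ppi.items()}

best = max(((v, name, col) for name, row in pi.items() for col, v in enumerate(row)))
print(ppi_check, min_ppi, best)
```

Under these assumptions the highest PI falls on LW-ResNet18 paired with LW-YOLOv4-Tiny (first column), in agreement with the table.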