Single-Image Defogging Algorithm Based on Improved Cycle-Consistent Adversarial Network

Abstract

1. Introduction

1.1. Fog Removal Methods

1.1.1. Image Enhancement Defogging Algorithm

1.1.2. Image Restoration Defogging Algorithm

1.2. Deep Learning Defogging Algorithm

2. Theoretical Basis of Generative Adversarial Networks

2.1. Research Status of Generative Adversarial Networks

2.2. Working Principle of Generative Adversarial Networks

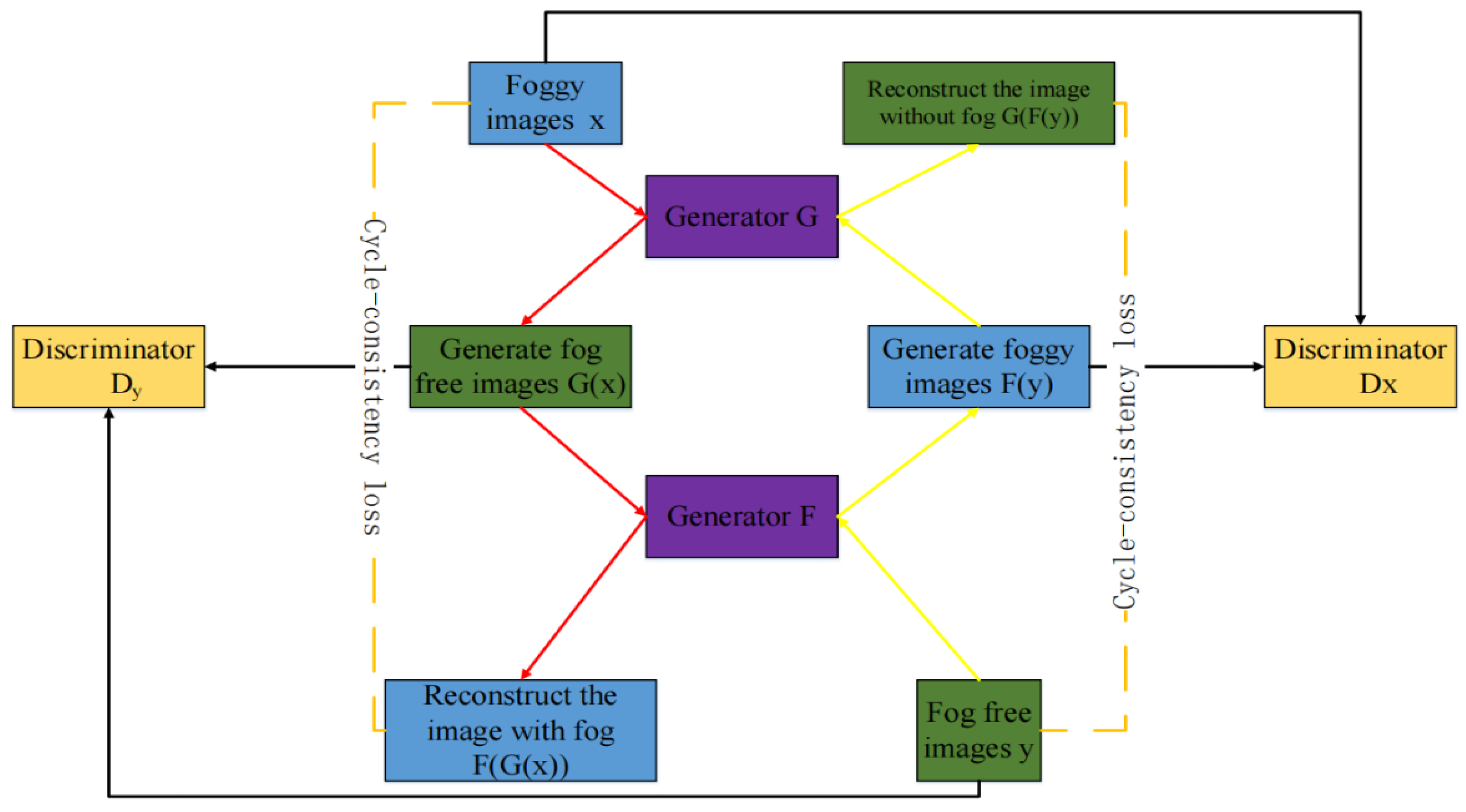

2.3. Principle of Defogging Cycle-Consistent Adversarial Networks

- (1) Fog–fog branch: foggy image → fog-free image → reconstructed foggy image. This branch is shown by the red connecting line in Figure 1;

- (2) No fog–no fog branch: fog-free image → foggy image → reconstructed fog-free image. This branch is shown by the yellow connecting line in Figure 1.
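Each branch composes the two generators, one after the other, in opposite orders. The sketch below is a minimal illustration of that idea. It accepts any pair of callables: `g_a2b` for defogging and `g_b2a` for adding fog. The constant-offset functions `toy_defog` and `toy_add_fog` are hypothetical stand-ins. They were chosen only because they invert each other exactly; they are not the networks used in this paper.

```python
import numpy as np

def cycle_branches(g_a2b, g_b2a, real_a, real_b):
    """Run both cycles of a defogging CycleGAN.

    real_a is a foggy image and real_b a fog-free image (arrays of equal shape).
    Returns the two reconstructions and their mean absolute (L1) errors.
    """
    # Fog-fog branch: foggy -> fog-free -> reconstructed foggy
    recovered_a = g_b2a(g_a2b(real_a))
    # No fog-no fog branch: fog-free -> foggy -> reconstructed fog-free
    recovered_b = g_a2b(g_b2a(real_b))
    err_a = float(np.mean(np.abs(recovered_a - real_a)))
    err_b = float(np.mean(np.abs(recovered_b - real_b)))
    return recovered_a, recovered_b, err_a, err_b

HAZE = 0.3  # hypothetical constant haze offset

def toy_defog(img):
    return img - HAZE  # hypothetical "defogging generator"

def toy_add_fog(img):
    return img + HAZE  # hypothetical "fog-adding generator"

rng = np.random.default_rng(0)
foggy = rng.uniform(0.3, 1.0, size=(4, 4, 3))
clear = rng.uniform(0.0, 0.7, size=(4, 4, 3))
_, _, err_fog_branch, err_clear_branch = cycle_branches(toy_defog, toy_add_fog, foggy, clear)
```

The toy pair is exactly invertible, so both reconstruction errors are zero up to floating-point rounding. Trained generators only approximate this, and the cycle-consistency loss of Section 3.3 penalizes the gap.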

3. Improved Cycle-Consistent Adversarial Network Model

3.1. Improved Generator Structure

| Algorithm 1 Cycle-consistent adversarial network defogging algorithm |
|---|
| A := foggy_image; B := fog_free_image; for epoch in range(0, epochs): for batch in range(0, maxbatch): 1 same_B = Generator_A2B(real_B) 2 same_A = Generator_B2A(real_A) 3 loss_identity = criterion_identity(same_A, real_A) + criterion_identity(same_B, real_B) 4 fake_B = Generator_A2B(real_A) 5 pred_fake_B = Discriminator_B(fake_B) 6 fake_A = Generator_B2A(real_B) 7 pred_fake_A = Discriminator_A(fake_A) 8 loss_GAN = criterion_GAN(pred_fake_A, target_real_A) + criterion_GAN(pred_fake_B, target_real_B) 9 recovered_A = Generator_B2A(fake_B) 10 loss_cycle_ABA = criterion_cycle(recovered_A, real_A) 11 recovered_B = Generator_A2B(fake_A) 12 loss_cycle_BAB = criterion_cycle(recovered_B, real_B) 13 Total_loss = loss_identity + loss_GAN + loss_cycle_ABA + loss_cycle_BAB 14 Total_loss.backward() |
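Algorithm 1 can be turned into a runnable generator update. This is a sketch under stated assumptions, not the authors' implementation:

- The two tiny convolutional networks are hypothetical placeholders for the improved generator and the discriminator.
- `criterion_GAN` is assumed to be a least-squares (MSE) loss.
- The identity and cycle criteria are assumed to be L1.
- The optimizer settings are assumed.
- The discriminator update, which Algorithm 1 does not list, is omitted.

In the identity terms, each generator receives an image that is already in its output domain. Step 11 maps `fake_A` back with `Generator_A2B`.

```python
import torch
import torch.nn as nn

# Hypothetical placeholder networks, NOT the I-CycleGAN architecture of Section 3.
def make_generator() -> nn.Module:
    return nn.Sequential(
        nn.Conv2d(3, 16, 3, padding=1), nn.ReLU(),
        nn.Conv2d(16, 3, 3, padding=1), nn.Tanh())

def make_discriminator() -> nn.Module:
    return nn.Sequential(
        nn.Conv2d(3, 16, 4, stride=2, padding=1), nn.LeakyReLU(0.2),
        nn.Conv2d(16, 1, 4, stride=1, padding=1))

# Domain A = foggy images, domain B = fog-free images (as in Algorithm 1).
Generator_A2B, Generator_B2A = make_generator(), make_generator()
Discriminator_A, Discriminator_B = make_discriminator(), make_discriminator()
# Discriminators are frozen during the generator update; their own update is omitted.
Discriminator_A.requires_grad_(False)
Discriminator_B.requires_grad_(False)

criterion_GAN = nn.MSELoss()       # assumption: least-squares adversarial loss
criterion_cycle = nn.L1Loss()      # L1, matching the cycle-consistency loss of Section 3.3
criterion_identity = nn.L1Loss()   # assumption
optimizer_G = torch.optim.Adam(
    list(Generator_A2B.parameters()) + list(Generator_B2A.parameters()),
    lr=2e-4, betas=(0.5, 0.999))   # assumed hyperparameters

def generator_step(real_A: torch.Tensor, real_B: torch.Tensor) -> float:
    optimizer_G.zero_grad()
    # Steps 1-3: identity loss (each generator should leave its output domain unchanged)
    same_B = Generator_A2B(real_B)
    same_A = Generator_B2A(real_A)
    loss_identity = criterion_identity(same_A, real_A) + criterion_identity(same_B, real_B)
    # Steps 4-8: adversarial loss (generated images should be scored as real, target 1)
    fake_B = Generator_A2B(real_A)
    pred_fake_B = Discriminator_B(fake_B)
    fake_A = Generator_B2A(real_B)
    pred_fake_A = Discriminator_A(fake_A)
    loss_GAN = (criterion_GAN(pred_fake_A, torch.ones_like(pred_fake_A))
                + criterion_GAN(pred_fake_B, torch.ones_like(pred_fake_B)))
    # Steps 9-12: cycle-consistency loss for both branches
    recovered_A = Generator_B2A(fake_B)
    loss_cycle_ABA = criterion_cycle(recovered_A, real_A)
    recovered_B = Generator_A2B(fake_A)
    loss_cycle_BAB = criterion_cycle(recovered_B, real_B)
    # Steps 13-14: unweighted sum, as written in Algorithm 1, then backpropagate
    total_loss = loss_identity + loss_GAN + loss_cycle_ABA + loss_cycle_BAB
    total_loss.backward()
    optimizer_G.step()
    return total_loss.item()
```

Example usage: call `generator_step(real_A, real_B)` on two batches of random tensors of shape `(2, 3, 32, 32)` scaled to [-1, 1]. This performs one update and returns the scalar loss. The terms are summed without weights because Algorithm 1 writes them that way; the original CycleGAN weights the cycle term by 10.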

3.1.1. Coding Module

3.1.2. Self-Attention Module

3.1.3. Residual Network Module

3.1.4. Atrous Convolution Multi-Scale Fusion Module

3.1.5. Decoding Module

3.2. Discriminator Structure

3.3. Improved Loss Function

- (1) Adversarial loss: The generator learns the mapping from the foggy-image domain to the fog-free domain. Its input is a foggy image and its output is a defogged image. The discriminator judges whether its input is real fog-free data or fog-free data produced by the generator. The adversarial loss of the generator and discriminator is expressed as:

- (2) Cycle-consistency loss: CycleGAN introduced the cycle-consistency loss because, with adversarial loss alone, the output distribution cannot be kept consistent with the target distribution. Two generators are trained together: one maps foggy images to fog-free images, and the other maps fog-free images back to foggy ones.
  - Passing the defogged result of every foggy image through the second generator should return the original foggy image.
  - Likewise, passing the foggy image generated from every fog-free image through the defogging generator should return the original fog-free image.

  Each reconstructed image is called the cyclic image of its original, and during training the closer each cyclic image is to its original, the better. To train both generators simultaneously and improve their performance, the cycle-consistency loss imposes these two constraints (foggy → fog-free → foggy, and fog-free → foggy → fog-free). It is therefore computed as the L1 norm between the input of the defogging network and its cyclic image.

- (3) Perceptual feature loss: The cycle-consistency loss and the adversarial loss alone struggle to recover all of the texture information in the image. We therefore use a perceptual loss to make the generated image semantically closer to the real target image. Under the constraints of the two generators, the perceptual loss is defined as:
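The three equations did not survive extraction. For orientation only, the standard forms are given below: the adversarial and cycle-consistency losses from CycleGAN (Zhu et al.) and the perceptual loss used in Cycle-Dehaze (Engin et al.). The notation is assumed:

- $G: X \to Y$ is the defogging generator, where $X$ is the foggy domain and $Y$ the fog-free domain.
- $F: Y \to X$ is the fog-adding generator.
- $D_Y$ is the discriminator for fog-free images.
- $\phi$ is a fixed pretrained feature extractor, such as VGG.

The paper's exact definitions and loss weights may differ.

```latex
\begin{aligned}
\mathcal{L}_{GAN}(G, D_Y, X, Y) &= \mathbb{E}_{y \sim p_{data}(y)}\left[\log D_Y(y)\right]
  + \mathbb{E}_{x \sim p_{data}(x)}\left[\log\left(1 - D_Y(G(x))\right)\right] \\
\mathcal{L}_{cyc}(G, F) &= \mathbb{E}_{x \sim p_{data}(x)}\left[\lVert F(G(x)) - x \rVert_1\right]
  + \mathbb{E}_{y \sim p_{data}(y)}\left[\lVert G(F(y)) - y \rVert_1\right] \\
\mathcal{L}_{per}(G, F) &= \lVert \phi(x) - \phi(F(G(x))) \rVert_2^2
  + \lVert \phi(y) - \phi(G(F(y))) \rVert_2^2
\end{aligned}
```

Here $F(G(x))$ is the cyclic image of a foggy image $x$, and $G(F(y))$ is the cyclic image of a fog-free image $y$.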

4. Experiment Results and Discussion

4.1. Data Description and Experimental Details

4.1.1. Data Description

4.1.2. Experimental Details

4.2. Experimental Evaluation on Synthetic Data Sets

4.2.1. Experimental Evaluation of Indoor Synthetic Data Sets

4.2.2. Experimental Evaluation of Outdoor Synthetic Data Sets

4.3. Experimental Evaluation of Real Data Sets

4.4. Experimental Evaluation of Fog Removal Algorithms in Recent Years

4.5. Ablation Experiment

- (1) The original CycleGAN network (baseline);

- (2) CycleGAN with only the self-attention (SA) module added; comparing it with (4) verifies the effectiveness of the atrous convolution multi-scale (ACMS) feature fusion module;

- (3) CycleGAN with only the atrous convolution multi-scale (ACMS) feature fusion module added; comparing it with (4) verifies the effectiveness of the self-attention (SA) module;

- (4) The I-CycleGAN network designed in this paper, which integrates both the SA module and the ACMS feature fusion module into the original CycleGAN network and is evaluated as a whole.

5. Summary and Prospect

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Garcia-Mateos, G.; Hernandez-Hernandez, J.L.; Escarabajal-Henarejos, D.; Jaen-Terrones, S.; Molina-Martinez, J.M. Study and comparison of color models for automatic image analysis in irrigation management applications. Agric. Water Manag. 2015, 151, 158–166.

- Hernandez-Hernandez, J.L.; Garcia-Mateos, G.; Gonzalez-Esquiva, J.M.; Escarabajal-Henarejos, D.; Ruiz-Canales, A.; Molina-Martinez, J.M. Optimal color space selection method for plant/soil segmentation in agriculture. Comput. Electron. Agric. 2016, 122, 124–132.

- Sanaullah, M.; Akhtaruzzaman, M.; Hossain, M.A. Land-robot technologies: The integration of cognitive systems in military and defense. NDC E-J. 2022, 2, 123–156.

- Currie, G.; Rohren, E. Intelligent imaging in nuclear medicine: The principles of artificial intelligence, machine learning and deep learning. Semin. Nucl. Med. 2021, 51, 102–111.

- Kumar, R.; Kaushik, B.K.; Balasubramanian, R. Multispectral transmission map fusion method and architecture for image dehazing. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 2693–2697.

- Kim, J.Y.; Kim, L.S.; Hwang, S.H. An advanced contrast enhancement using partially overlapped sub-block histogram equalization. IEEE Trans. Circuits Syst. Video Technol. 2001, 11, 475–484.

- Stark, J.A. Adaptive image contrast enhancement using generalizations of histogram equalization. IEEE Trans. Image Process. 2000, 9, 889–896.

- Daubechies, I. The wavelet transform, time-frequency localization and signal analysis. IEEE Trans. Inf. Theory 1990, 36, 961–1005.

- Seow, M.J.; Asari, V.K. Ratio rule and homomorphic filter for enhancement of digital colour image. Neurocomputing 2006, 69, 954–958.

- Nayar, S.K.; Narasimhan, S.G. Vision in bad weather. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 820–827.

- Narasimhan, S.G.; Nayar, S.K. Contrast restoration of weather degraded images. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 713–724.

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Fattal, R. Single image dehazing. ACM Trans. Graph. 2008, 27, 1–9.

- Tarel, J.P.; Hautiere, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the 2009 IEEE International Conference on Computer Vision (ICCV), Kyoto, Japan, 29 September–2 October 2009; pp. 2201–2208.

- He, K.; Sun, J.; Tang, X. Single Image Haze Removal Using Dark Channel Prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Singh, D.; Kumar, V. Dehazing of remote sensing images using improved restoration model based dark channel prior. Imaging Sci. J. 2017, 65, 282–292.

- Anan, S.; Khan, M.I.; Kowsar, M.M.S.; Deb, K. Image defogging framework using segmentation and the dark channel prior. Entropy 2021, 23, 285.

- Zhang, L.; Wang, S.; Wang, X. Single image dehazing based on bright channel prior model and saliency analysis strategy. IET Image Process. 2021, 15, 1023–1031.

- Nishino, K.; Kratz, L.; Lombardi, S. Bayesian defogging. Int. J. Comput. Vis. 2012, 98, 263–278.

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, NSW, Australia, 1–8 December 2013; pp. 617–624.

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the 2016 European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 154–169.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-one dehazing network. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4780–4788.

- Yang, D.; Sun, J. Proximal Dehaze-Net: A prior learning-based deep network for single image dehazing. In Proceedings of the 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 702–717.

- Zhang, H.; Patel, V. Densely connected pyramid dehazing network. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3194–3203.

- Ren, W.; Ma, L.; Zhang, J.; Pan, J.; Cao, X.; Liu, W.; Yang, M.H. Gated fusion network for single image dehazing. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 3253–3261.

- Wang, T.; Zhao, L.; Huang, P.; Zhang, X.; Xu, J. Haze concentration adaptive network for image dehazing. Neurocomputing 2021, 439, 75–85.

- Feng, T.; Wang, C.; Chen, X.; Fan, H.; Zeng, K.; Li, Z. URNet: A U-Net based residual network for image dehazing. Appl. Soft Comput. 2021, 102, 106884.

- Li, S.; Yuan, Q.; Zhang, Y.; Lv, B.; Wei, F. Image dehazing algorithm based on deep learning coupled local and global features. Appl. Sci. 2022, 12, 8552.

- Wang, C.; Chen, R.; Lu, Y.; Yan, Y.; Wang, H. Recurrent context aggregation network for single image dehazing. IEEE Signal Process. Lett. 2021, 28, 419–423.

- Li, B.; Gou, Y.; Gu, S.; Liu, J.; Zhou, J.; Peng, X. You only look yourself: Unsupervised and untrained single image dehazing neural network. Int. J. Comput. Vis. 2021, 129, 1754–1767.

- Sun, Z.; Liu, C.; Qu, H.; Xie, G. A novel effective vehicle detection method based on swin transformer in hazy scenes. Mathematics 2022, 10, 2199.

- Zhu, Z.; Luo, Y.; Qi, G.; Meng, J.; Li, Y.; Mazur, N. Remote sensing image defoging networks based on dual self-attention boost residual octave convolution. Remote Sens. 2021, 13, 3104.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S. Generative adversarial nets. In Proceedings of the International Conference on Neural Information Processing Systems, Cambridge, MA, USA, 8–13 December 2014; pp. 2672–2680.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation using cycle-consistent Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2242–2251.

- Engin, D.; Genc, A.; Kemal Ekenel, H. Cycle-Dehaze: Enhanced CycleGAN for single image dehazing. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–23 June 2018; pp. 9380–9388.

- Zhao, S.; Zhang, L.; Shen, Y.; Zhou, Y. RefineDNet: A weakly supervised refinement framework for single image dehazing. IEEE Trans. Image Process. 2021, 30, 3391–3404.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. Comput. Sci. 2014, 2672–2680.

- Qu, Y.; Chen, Y.; Huang, J.; Xie, Y. Enhanced Pix2pix Dehazing Network. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8152–8160.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5967–5976.

- Liu, W.; Hou, X.; Duan, J.; Qiu, G. End-to-End single image fog removal using enhanced cycle consistent adversarial networks. IEEE Trans. Image Process. 2020, 29, 7819–7833.

- Dong, Y.; Liu, Y.; Zhang, H.; Chen, S.; Qiao, Y. FD-GAN: Generative adversarial networks with Fusion-Discriminator for single image dehazing. Assoc. Adv. Artif. Intell. 2020, 34, 10729–10736.

- Chen, X.; Li, Y.; Kong, C.; Dai, L. Unpaired image dehazing with physical-guided restoration and depth-guided refinement. IEEE Signal Process. Lett. 2022, 29, 587–591.

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of wasserstein GANs. Adv. Neural Inf. Process. Syst. 2017, 30, 5767–5777.

- Huynh-Thu, Q.; Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 2008, 44, 800–801.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

- Ancuti, C.O.; Ancuti, C.; Timofte, R.; De Vleeschouwer, C. O-HAZE: A dehazing benchmark with real hazy and haze-free outdoor images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 754–762.

| Layer | Convolution | Stride | Input Channels | Output Channels |
|---|---|---|---|---|
| Layer 1 | 7 × 7 | 1 | 3 | 64 |
| Layer 2 | 3 × 3 | 2 | 64 | 128 |
| Layer 3 | 3 × 3 | 2 | 128 | 256 |
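The layer table above appears to describe the coding (downsampling) module of Section 3.1.1. It can be checked with standard convolution arithmetic. This sketch makes two assumptions that the surviving text does not state: padding of ⌊k/2⌋ for each kernel size k, and a 256 × 256 input.

```python
# Rows of the table above: (kernel, stride, in_channels, out_channels)
ENCODER = [(7, 1, 3, 64), (3, 2, 64, 128), (3, 2, 128, 256)]

def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a 2-D convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_encoder(layers, size, channels=3):
    """Return (channels, height, width) after each layer for a square input."""
    shapes = []
    for kernel, stride, c_in, c_out in layers:
        assert c_in == channels, "channel counts must chain from layer to layer"
        size = conv_out(size, kernel, stride, padding=kernel // 2)  # assumed padding
        channels = c_out
        shapes.append((channels, size, size))
    return shapes

print(trace_encoder(ENCODER, 256))
# [(64, 256, 256), (128, 128, 128), (256, 64, 64)]
```

Under these assumptions, the two stride-2 layers reduce the spatial size by a factor of 4 while raising the channel count to 256.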

| Layer | Convolution | Stride | Input Channels | Output Channels |
|---|---|---|---|---|
| Layer 1 | 3 × 3 | 2 | 256 | 64 |
| Layer 2 | 3 × 3 | 2 | 64 | 32 |
| Layer 3 | 7 × 7 | 1 | 32 | 3 |
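This table appears to describe the decoding module. For the output to return to the input resolution, its stride-2 layers must upsample. The sketch assumes they are transposed convolutions with padding 1 and output padding 1, the common choice that exactly doubles the size. It also assumes the final 7 × 7 layer uses padding 3. None of these settings is stated in the surviving text.

```python
# Rows of the table above: (kernel, stride, in_channels, out_channels)
DECODER = [(3, 2, 256, 64), (3, 2, 64, 32), (7, 1, 32, 3)]

def transposed_out(size, kernel, stride, padding, output_padding):
    """Spatial output size of a 2-D transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

def conv_out(size, kernel, stride, padding):
    """Spatial output size of a 2-D convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_decoder(layers, size, channels=256):
    """Return (channels, height, width) after each layer for a square input."""
    shapes = []
    for kernel, stride, c_in, c_out in layers:
        assert c_in == channels, "channel counts must chain from layer to layer"
        if stride == 2:
            # assumption: stride-2 layers are transposed convs that double the size
            size = transposed_out(size, kernel, stride, padding=1, output_padding=1)
        else:
            size = conv_out(size, kernel, stride, padding=kernel // 2)
        channels = c_out
        shapes.append((channels, size, size))
    return shapes

print(trace_decoder(DECODER, 64))
# [(64, 128, 128), (32, 256, 256), (3, 256, 256)]
```

Starting from a 64 × 64 map with 256 channels, these assumed settings restore a 3-channel 256 × 256 image.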

| Layer | Convolution | Stride | Input Channels | Output Channels |
|---|---|---|---|---|
| Layer 1 | 4 × 4 | 2 | 3 | 64 |
| Layer 2 | 4 × 4 | 2 | 64 | 128 |
| Layer 3 | 4 × 4 | 2 | 128 | 256 |
| Layer 4 | 4 × 4 | 2 | 256 | 512 |
| Layer 5 | 4 × 4 | 1 | 512 | 1 |
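This table appears to describe the discriminator (Section 3.2). Its final layer has a single output channel, which suggests a patch-level (PatchGAN-style) discriminator that scores a grid of image regions; that reading is an interpretation. The sketch below computes the receptive field of one output unit directly from the table. It also computes the output grid size, assuming padding 1 and a 256 × 256 input. For comparison, the widely used 70 × 70 PatchGAN has three stride-2 layers rather than four.

```python
# Rows of the table above: (kernel, stride, in_channels, out_channels)
DISCRIMINATOR = [(4, 2, 3, 64), (4, 2, 64, 128), (4, 2, 128, 256),
                 (4, 2, 256, 512), (4, 1, 512, 1)]

def receptive_field(layers):
    """Input-pixel extent seen by one output unit, walking the layers backwards."""
    field = 1
    for kernel, stride, _, _ in reversed(layers):
        field = (field - 1) * stride + kernel
    return field

def output_size(layers, size, padding=1):
    """Side length of the output score map (padding is an assumption)."""
    for kernel, stride, _, _ in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size

print(receptive_field(DISCRIMINATOR))    # 94
print(output_size(DISCRIMINATOR, 256))   # 15
```

Each output score therefore judges a 94 × 94 patch. The receptive field does not depend on padding, but the 15 × 15 grid size does.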

| Quantitative Evaluation | DCP | DehazeNet | AOD-Net | GFN | CycleGAN | I-CycleGAN |
|---|---|---|---|---|---|---|
| PSNR | 16.57 | 18.63 | 20.72 | 22.68 | 21.67 | 23.22 |
| SSIM | 0.8022 | 0.8432 | 0.8398 | 0.8753 | 0.8593 | 0.8809 |
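PSNR is computed from the mean squared error (MSE) between the restored image and its fog-free reference: PSNR = 10 · log10(MAX² / MSE) dB. The minimal NumPy sketch below assumes images scaled to [0, 1], so MAX = 1; use 255 for 8-bit images. SSIM is more involved. scikit-image provides both metrics as `skimage.metrics.structural_similarity` and `skimage.metrics.peak_signal_noise_ratio`. The tables do not state which implementation or settings the authors used.

```python
import numpy as np

def psnr(reference: np.ndarray, restored: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    diff = reference.astype(np.float64) - restored.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

clean = np.zeros((8, 8, 3))
noisy = clean + 0.1                  # uniform error of 0.1, so MSE = 0.01
print(round(psnr(clean, noisy), 2))  # 20.0
```

Because the scale is logarithmic, a gain of about 0.5 dB, such as I-CycleGAN over GFN in the first table, corresponds to roughly an 11% lower MSE.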

| Quantitative Evaluation | DCP | DehazeNet | AOD-Net | GFN | CycleGAN | I-CycleGAN |
|---|---|---|---|---|---|---|
| PSNR | 18.54 | 21.13 | 20.19 | 23.51 | 22.98 | 25.72 |
| SSIM | 0.8135 | 0.8509 | 0.8611 | 0.8701 | 0.8445 | 0.8859 |

| Quantitative Evaluation | DCP | DehazeNet | AOD-Net | GFN | CycleGAN | I-CycleGAN |
|---|---|---|---|---|---|---|
| PSNR | 15.74 | 17.78 | 18.17 | 20.58 | 19.89 | 21.02 |
| SSIM | 0.7011 | 0.7689 | 0.7715 | 0.8131 | 0.8045 | 0.8166 |
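The margins of I-CycleGAN can be read directly from the three comparison tables. In every table, GFN is the strongest baseline on both PSNR and SSIM. The sketch below only recomputes the differences from the published numbers. The labels "table 1" to "table 3" are illustrative names for the tables in the order they appear.

```python
# (PSNR, SSIM) copied from the three comparison tables, in order
TABLES = {
    "table 1": {"GFN": (22.68, 0.8753), "CycleGAN": (21.67, 0.8593), "I-CycleGAN": (23.22, 0.8809)},
    "table 2": {"GFN": (23.51, 0.8701), "CycleGAN": (22.98, 0.8445), "I-CycleGAN": (25.72, 0.8859)},
    "table 3": {"GFN": (20.58, 0.8131), "CycleGAN": (19.89, 0.8045), "I-CycleGAN": (21.02, 0.8166)},
}

def gains(rows, baseline):
    """PSNR gain (dB) and SSIM gain of I-CycleGAN over a baseline, rounded."""
    ours, ref = rows["I-CycleGAN"], rows[baseline]
    return round(ours[0] - ref[0], 2), round(ours[1] - ref[1], 4)

for name, rows in TABLES.items():
    print(name, "vs GFN:", gains(rows, "GFN"), "vs CycleGAN:", gains(rows, "CycleGAN"))
```

Over GFN, the PSNR margin is 0.54, 2.21, and 0.44 dB across the three tables. Over the unmodified CycleGAN, it is 1.55, 2.74, and 1.13 dB.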

| Data Set | Quantitative Evaluation | YOLY | I-CycleGAN |
|---|---|---|---|
| Indoor data set | PSNR | 20.51 | 23.22 |
| Indoor data set | SSIM | 0.8691 | 0.8809 |
| Outdoor data set | PSNR | 23.92 | 25.72 |
| Outdoor data set | SSIM | 0.8825 | 0.8859 |

| Network | PSNR | SSIM |
|---|---|---|
| CycleGAN | 21.67 | 0.8593 |
| CycleGAN and SA | 22.83 | 0.8764 |
| CycleGAN and ACMS | 22.43 | 0.8696 |
| CycleGAN, SA, and ACMS | 23.22 | 0.8809 |
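The ablation results can be broken down into the contribution of each module. The sketch below recomputes the differences from the ablation table. Its comparisons mirror the experimental design: variant (2) against (4) isolates ACMS, and variant (3) against (4) isolates SA.

```python
# (PSNR, SSIM) copied from the ablation table
ABLATION = {
    "CycleGAN": (21.67, 0.8593),
    "CycleGAN + SA": (22.83, 0.8764),
    "CycleGAN + ACMS": (22.43, 0.8696),
    "CycleGAN + SA + ACMS": (23.22, 0.8809),
}

def delta(variant, reference="CycleGAN"):
    """PSNR (dB) and SSIM difference between two ablation variants, rounded."""
    (p1, s1), (p0, s0) = ABLATION[variant], ABLATION[reference]
    return round(p1 - p0, 2), round(s1 - s0, 4)

gain_sa = delta("CycleGAN + SA")
gain_acms = delta("CycleGAN + ACMS")
gain_full = delta("CycleGAN + SA + ACMS")
acms_given_sa = delta("CycleGAN + SA + ACMS", "CycleGAN + SA")    # isolates ACMS
sa_given_acms = delta("CycleGAN + SA + ACMS", "CycleGAN + ACMS")  # isolates SA
print(gain_sa, gain_acms, gain_full, acms_given_sa, sa_given_acms)
```

Added alone, SA and ACMS raise PSNR by 1.16 dB and 0.76 dB. Their sum (1.92 dB) exceeds the 1.55 dB gain of the full model. On these numbers, the two modules' benefits partly overlap rather than stacking additively, and SA contributes more in either order.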

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, J.; Sun, X.; Chen, Y.; Duan, Y.; Wang, Y. Single-Image Defogging Algorithm Based on Improved Cycle-Consistent Adversarial Network. Electronics 2023, 12, 2186. https://doi.org/10.3390/electronics12102186
