An Enhanced Deep Learning Model for Obstacle and Traffic Light Detection Based on YOLOv5

Abstract

1. Introduction

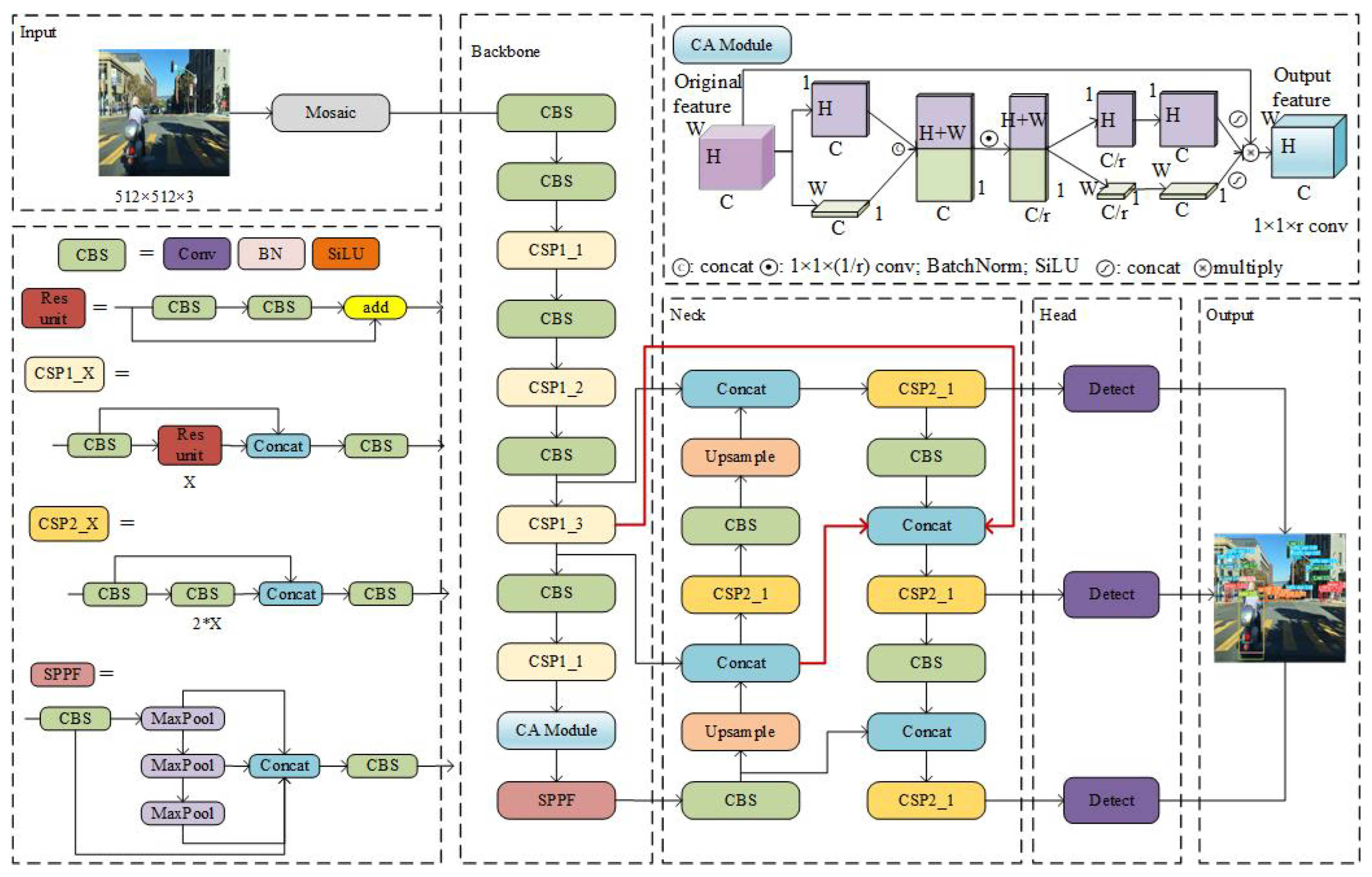

- An improved deep learning model based on YOLOv5 is proposed. A coordinate attention (CA) layer is added to the YOLOv5 backbone to strengthen its ability to extract informative features from the input image. The feature pyramid network in YOLOv5 is replaced with a weighted bidirectional feature pyramid network (BiFPN), which fuses the extracted feature maps of different scales and retains more feature information. The SIoU loss function is adopted. It adds an angle term to the bounding-box regression cost, which speeds up the convergence of box regression and improves the mean average precision.

- The detection performance of the proposed model for pedestrians, vehicles, and traffic lights under different conditions is tested and evaluated on the BDD100K dataset [29]. The results show that the improved model achieves a higher mean average precision (67.97%) and better detection performance, especially for small targets.

2. Methodology

2.1. Coordinate Attention Module

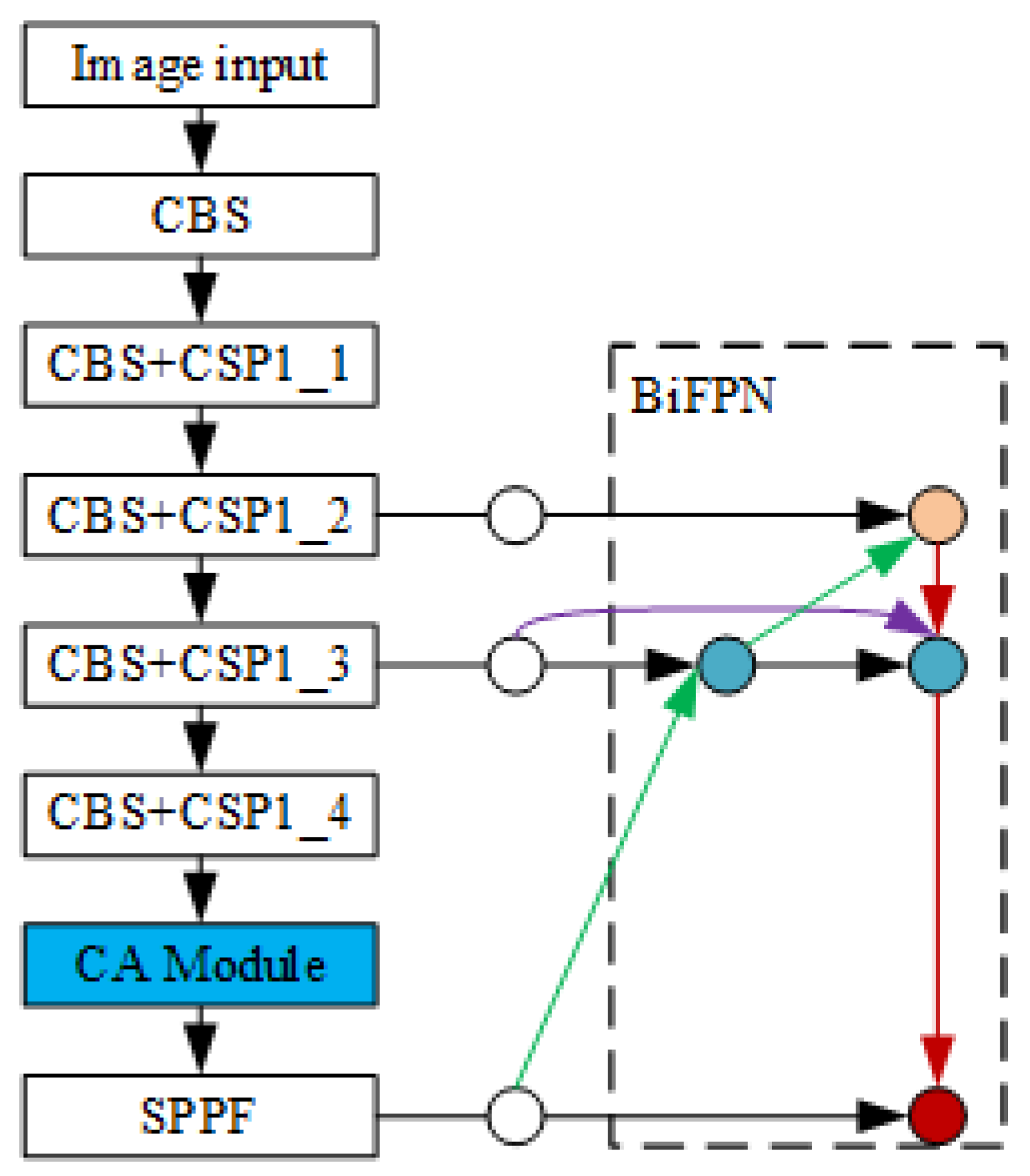
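The coordinate attention layer named in the introduction follows the design of Hou et al. [36]. It pools the feature map separately along the width and height directions, so the attention weights keep positional information along each axis. Below is a minimal pure-Python sketch of that data flow for a single C × H × W feature map. It rests on several simplifying assumptions. The weight matrices `w1`, `w_h`, and `w_w` are hypothetical stand-ins for learned 1×1 convolutions. Biases and batch normalization are omitted. ReLU is used as the non-linearity for simplicity. The sketch does not show where in the YOLOv5 backbone the layer is inserted, because this copy of the paper does not state it.

```python
import math


def _relu(v):
    return max(0.0, v)


def _sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def coordinate_attention(x, w1, w_h, w_w):
    """Sketch of coordinate attention on one feature map x (C x H x W nested lists).

    w1:  C_mid x C matrix, a hypothetical shared 1x1 conv that reduces channels.
    w_h: C x C_mid matrix, a hypothetical 1x1 conv producing height attention.
    w_w: C x C_mid matrix, a hypothetical 1x1 conv producing width attention.
    Biases and batch normalization are omitted; ReLU is an assumed activation.
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])

    # 1) Directional average pooling: averaging along W gives H values per channel,
    #    averaging along H gives W values per channel; concatenate to length H + W.
    z = []
    for c in range(C):
        z_h = [sum(x[c][i]) / W for i in range(H)]
        z_w = [sum(x[c][i][j] for i in range(H)) / H for j in range(W)]
        z.append(z_h + z_w)

    # 2) Shared 1x1 conv (a channel-mixing matrix product) plus non-linearity.
    c_mid = len(w1)
    f = [[_relu(sum(w1[m][c] * z[c][k] for c in range(C))) for k in range(H + W)]
         for m in range(c_mid)]

    # 3) Split into height and width parts, then separate 1x1 convs and a sigmoid.
    g_h = [[_sigmoid(sum(w_h[c][m] * f[m][i] for m in range(c_mid))) for i in range(H)]
           for c in range(C)]
    g_w = [[_sigmoid(sum(w_w[c][m] * f[m][H + j] for m in range(c_mid))) for j in range(W)]
           for c in range(C)]

    # 4) Reweight every position: y_c(i, j) = x_c(i, j) * g_h_c(i) * g_w_c(j).
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(W)] for i in range(H)]
            for c in range(C)]
```

With all-zero weights, every attention value is sigmoid(0) = 0.5, so the output is exactly one quarter of the input. This makes a convenient sanity check. In a trained network, the learned weights make the scaling depend on both the row and the column of each position.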

2.2. Bidirectional Feature Pyramid Network Model
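The introduction describes the BiFPN as a weighted fusion of feature maps of different sizes. In the original BiFPN of Tan et al. [40], each node combines its inputs with learnable non-negative weights using "fast normalized fusion":

O = Σ_i (w_i / (ε + Σ_j w_j)) · I_i, with each w_i kept non-negative by a ReLU and ε = 0.0001.

This copy of the paper does not say which fusion variant is used, so the sketch below assumes fast normalized fusion. It also assumes the inputs have already been resized to a common resolution and flattened to equal-length lists.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """Fuse same-shape feature maps (flattened to lists) with learnable scalar weights.

    Computes O = sum_i (w_i / (eps + sum_j w_j)) * I_i, where each w_i is first
    clipped to be non-negative (ReLU), as in EfficientDet's BiFPN.
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input feature map")
    w = [max(0.0, wi) for wi in weights]  # ReLU keeps every weight >= 0
    norm = eps + sum(w)                     # normalizer; eps avoids division by zero
    return [sum(wi * feat[k] for wi, feat in zip(w, inputs)) / norm
            for k in range(len(inputs[0]))]
```

In the EfficientDet BiFPN, an output node such as P4 fuses three inputs: the original P4 features, the top-down intermediate P4, and the resized P3 output. It does so with one call of this form. The weights let the network learn how much each resolution contributes, instead of summing all inputs equally.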

2.3. Loss Function
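The SIoU loss mentioned in the introduction comes from Gevorgyan [42]. It extends the IoU loss with three costs:

- An angle cost Λ = 1 − 2 sin²(arcsin(x) − π/4), where x is the vertical center offset divided by the center distance.
- A distance cost Δ = Σ_{t=x,y} (1 − e^(−γ ρ_t)), where γ = 2 − Λ and each ρ_t is a center offset normalized by the enclosing box.
- A shape cost Ω = Σ_{t=w,h} (1 − e^(−ω_t))^θ.

These combine as L = 1 − IoU + (Δ + Ω)/2. The scalar sketch below evaluates this for one pair of boxes in (cx, cy, w, h) form. The value θ = 4 is an assumed default. Training code would compute the same quantities with a tensor library so that gradients flow.

```python
import math


def siou_loss(pred, target, theta=4.0, eps=1e-7):
    """SIoU loss for one predicted and one target box, each given as (cx, cy, w, h)."""
    px, py, pw, ph = pred
    tx, ty, tw, th = target

    # IoU of the two axis-aligned boxes
    inter_w = max(0.0, min(px + pw / 2, tx + tw / 2) - max(px - pw / 2, tx - tw / 2))
    inter_h = max(0.0, min(py + ph / 2, ty + th / 2) - max(py - ph / 2, ty - th / 2))
    inter = inter_w * inter_h
    iou = inter / (pw * ph + tw * th - inter + eps)

    # Width and height of the smallest box enclosing both boxes
    enc_w = max(px + pw / 2, tx + tw / 2) - min(px - pw / 2, tx - tw / 2)
    enc_h = max(py + ph / 2, ty + th / 2) - min(py - ph / 2, ty - th / 2)

    # Angle cost: Lambda = 1 - 2 sin^2(arcsin(x) - pi/4), with x = |dy| / sigma
    dx, dy = tx - px, ty - py
    sigma = math.hypot(dx, dy) + eps
    sin_alpha = min(abs(dy) / sigma, 1.0)
    angle_cost = 1 - 2 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2

    # Distance cost, sharpened by gamma = 2 - Lambda
    gamma = 2 - angle_cost
    rho_x = (dx / (enc_w + eps)) ** 2
    rho_y = (dy / (enc_h + eps)) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost from relative width and height mismatch
    omega_w = abs(pw - tw) / (max(pw, tw) + eps)
    omega_h = abs(ph - th) / (max(ph, th) + eps)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (distance_cost + shape_cost) / 2
```

Identical boxes give a loss of essentially zero. Non-overlapping boxes give a loss above 1, because the distance cost still contributes when the IoU is 0. This is what lets the regression make progress even before the boxes overlap.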

3. Experiment Results and Discussion

3.1. BDD100K Dataset

3.2. Evaluation Metrics
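The results are reported as precision, per-class AP50, and mAP. The sketch below shows one common way to compute these quantities:

- Precision is TP / (TP + FP).
- AP50 is the area under the precision-recall curve, where a detection counts as a true positive when it matches a ground-truth box with IoU ≥ 0.5.
- mAP is the mean of the per-class AP values.

The sketch uses all-point interpolation and assumes IoU matching has already been done. YOLOv5's own evaluation code may use a different interpolation scheme, so this illustrates the definitions rather than reproducing the paper's exact numbers.

```python
def average_precision(detections, num_gt):
    """All-point interpolated AP for one class.

    detections: list of (confidence, is_true_positive) pairs; a detection is a
    true positive if it matched an unmatched ground-truth box at IoU >= 0.5.
    num_gt: number of ground-truth boxes of this class.
    """
    if num_gt == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))  # precision = TP / (TP + FP)
        recalls.append(tp / num_gt)         # recall = TP / (TP + FN)

    # Precision envelope: make precision non-increasing from right to left.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Sum rectangle areas under the envelope at each recall step.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    """mAP as the arithmetic mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```

As a check against the reported results, consider the proposed model's per-class AP50 values: 68.10 (pedestrians), 80.60 (vehicles), and 55.20 (traffic lights). Their mean rounds to 67.97, which matches the mAP listed for the model.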

3.3. Results and Discussion

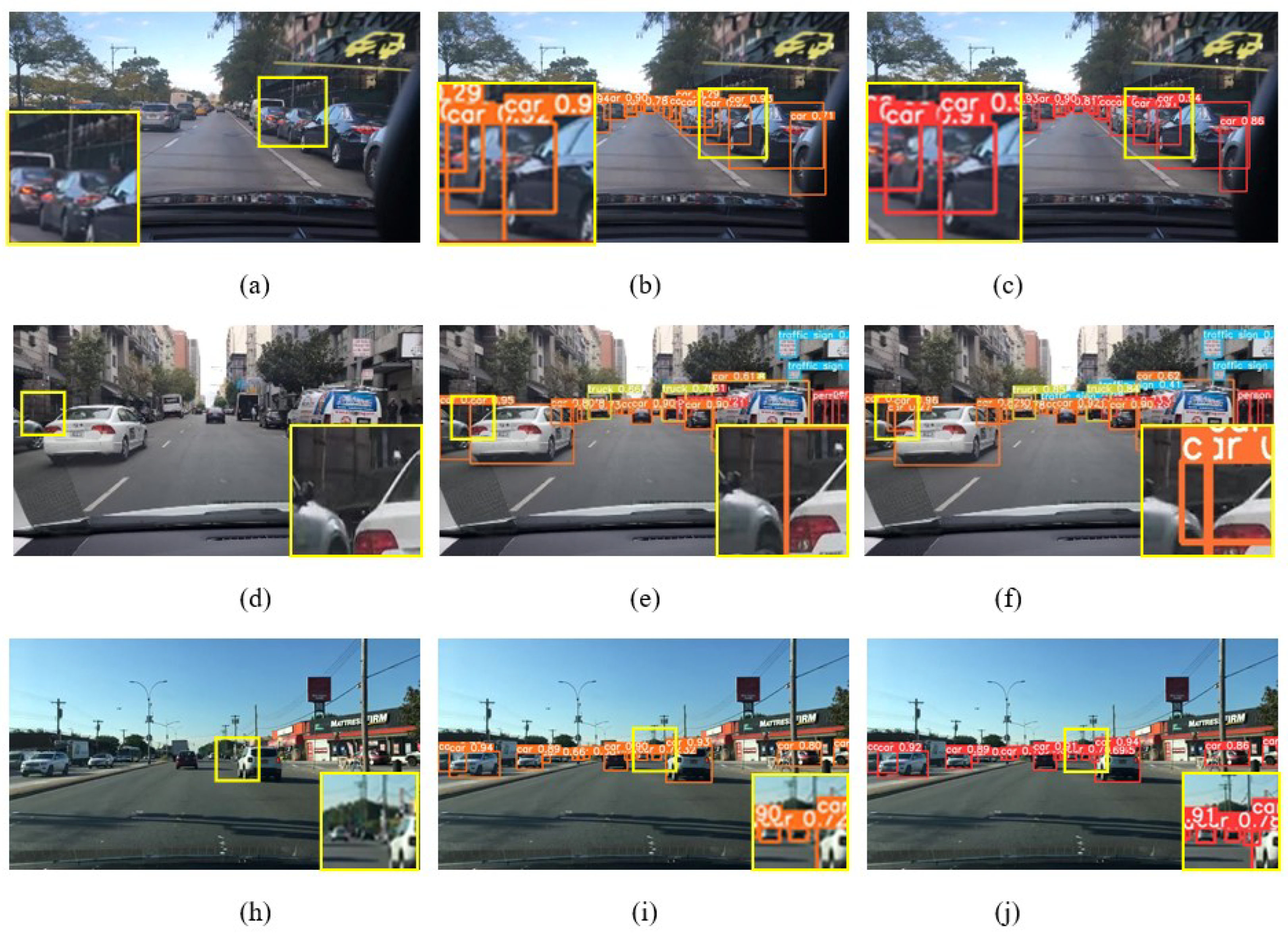

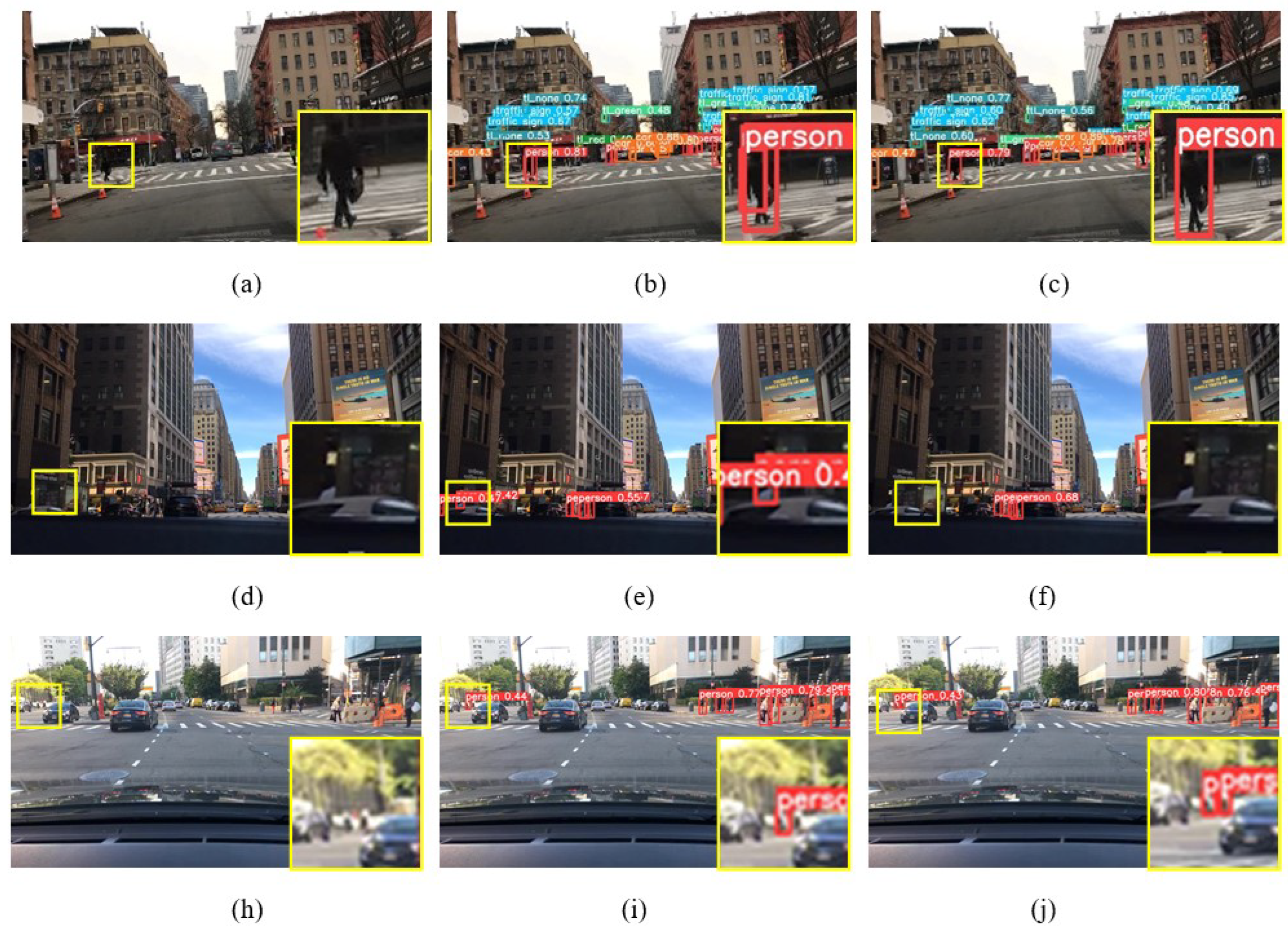

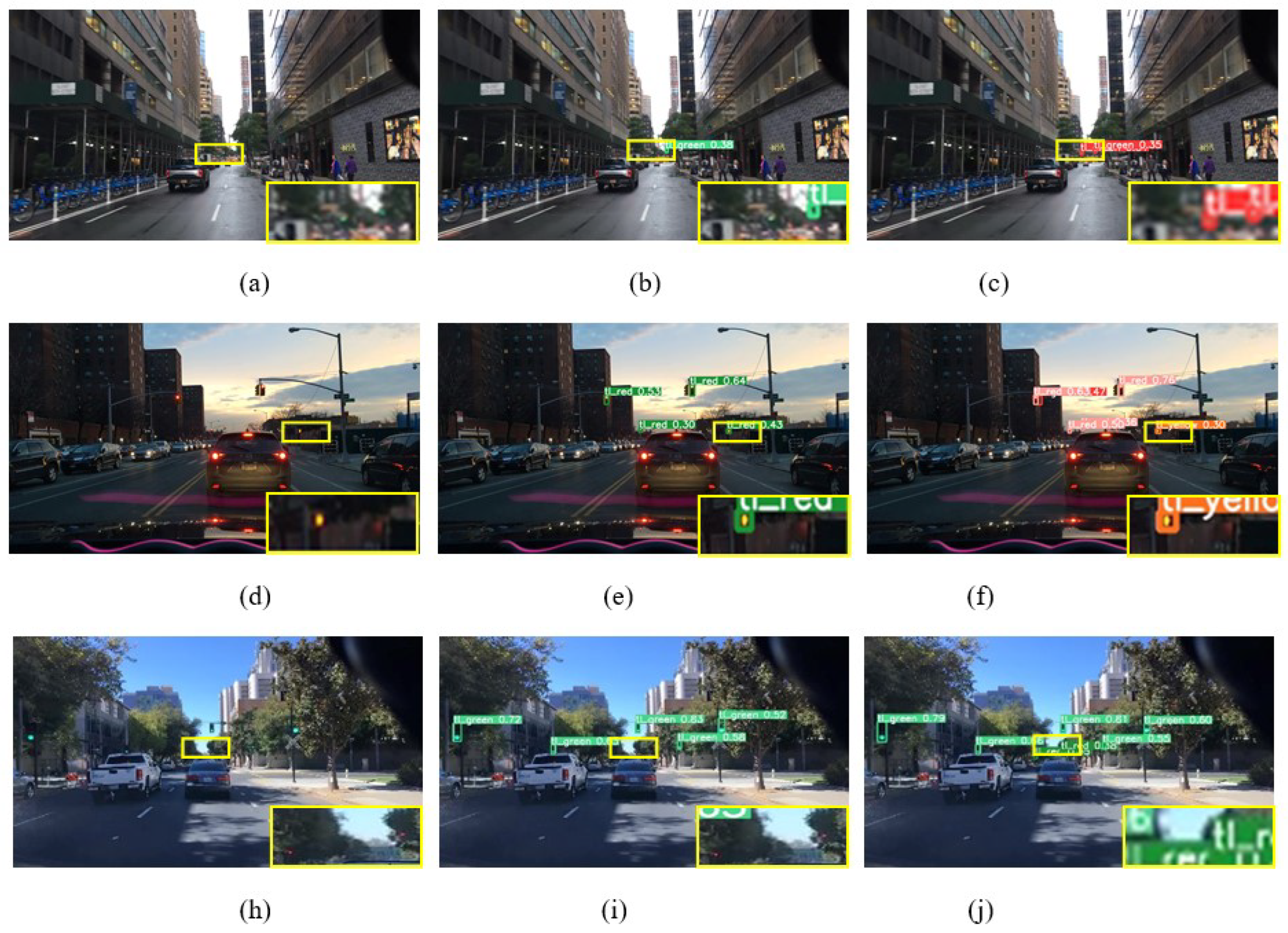

3.3.1. Detection Results

3.3.2. Detection Performance under Special Circumstances

3.3.3. Ablation Experiments

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Mori, H.; Kotani, S.; Saneyoshi, K.; Sanada, H.; Kobayashi, Y.; Mototsune, A.; Nakata, T. The matching fund project for practical use of robotic travel aid for the visually impaired. Adv. Robot. 2004, 18, 453–472.

2. Kumar, K.M.A.; Krishnan, A. Remote controlled human navigational assistance for the blind using intelligent computing. In Proceedings of the Mediterranean Symposium on Smart City Application, New York, NY, USA, 25–27 October 2017; pp. 1–4.

3. Nanavati, A.; Tan, X.Z.; Steinfeld, A. Coupled indoor navigation for people who are blind. In Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 201–202.

4. Keroglou, C.; Kansizoglou, I.; Michailidis, P.; Oikonomou, K.M.; Papapetros, I.T.; Dragkola, P.; Michailidis, I.T.; Gasteratos, A.; Kosmatopoulos, E.B.; Sirakoulis, G.C. A Survey on Technical Challenges of Assistive Robotics for Elder People in Domestic Environments: The ASPiDA Concept. IEEE Trans. Med. Robot. Bionics 2023.

5. Elachhab, A.; Mikou, M. Obstacle Detection Algorithm by Stereoscopic Image Processing. In Proceedings of the Ninth International Conference on Soft Computing and Pattern Recognition (SoCPaR 2017), Marrakech, Morocco, 11–13 December 2018; pp. 13–23.

6. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 23–28 June 2014; pp. 580–587.

7. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

8. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 1440–1448.

9. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28.

10. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

11. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

12. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

13. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

14. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

15. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

16. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 21–37.

17. Qiu, X.; Huang, Y.; Guo, Z.; Hu, X. Obstacle detection and depth estimation using deep learning approaches. J. Univ. Shanghai Sci. Technol. 2020, 42, 558–565.

18. Duan, Z.; Wang, J.; Ding, Q.; Wen, Q. Research on Obstacle Detection Algorithm of Blind Path Based on Deep Learning. Comput. Meas. Control 2021, 29, 27–32.

19. Wang, W.; Jin, J.; Chen, J. Rapid Detection Algorithm for Small Objects Based on Receptive Field Block. Laser Optoelectron. Progress 2020, 57, 021501.

20. Jiang, Y.; Tong, G.; Yin, H.; Xiong, N. A pedestrian detection method based on genetic algorithm for optimize XGBoost training parameters. IEEE Access 2019, 7, 118310–118321.

21. Huang, X.; Ge, Z.; Jie, Z.; Yoshie, O. NMS by representative region: Towards crowded pedestrian detection by proposal pairing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10750–10759.

22. Ouyang, Z.; Niu, J.; Liu, Y.; Guizani, M. Deep CNN-based real-time traffic light detector for self-driving vehicles. IEEE Trans. Mob. Comput. 2019, 19, 300–313.

23. Balaska, V.; Bampis, L.; Kansizoglou, I.; Gasteratos, A. Enhancing satellite semantic maps with ground-level imagery. Robot. Auton. Syst. 2021, 139, 103760.

24. Rangari, A.P.; Chouthmol, A.R.; Kadadas, C.; Pal, P.; Singh, S.K. Deep Learning based smart traffic light system using Image Processing with YOLO v7. In Proceedings of the 2022 4th International Conference on Circuits, Control, Communication and Computing (I4C), Bangalore, India, 21–23 December 2022; pp. 129–132.

25. Zhou, Y.; Wen, S.; Wang, D.; Mu, J.; Richard, I. Object detection in autonomous driving scenarios based on an improved Faster-RCNN. Appl. Sci. 2021, 11, 11630.

26. Hnewa, M.; Radha, H. Multiscale domain adaptive YOLO for cross-domain object detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 3323–3327.

27. Wu, S.; Yan, Y.; Wang, W. CF-YOLOX: An Autonomous Driving Detection Model for Multi-Scale Object Detection. Sensors 2023, 23, 3794.

28. Castelló, V.O.; Igual, I.S.; del Tejo Catalá, O.; Perez-Cortes, J.C. High-Profile VRU Detection on Resource-Constrained Hardware Using YOLOv3/v4 on BDD100K. J. Imaging 2020, 6, 142.

29. Yu, F.; Xian, W.; Chen, Y.; Liu, F.; Liao, M.; Madhavan, V.; Darrell, T. BDD100K: A diverse driving video database with scalable annotation tooling. arXiv 2018, arXiv:1805.04687.

30. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

32. Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 850–855.

33. Haut, J.M.; Paoletti, M.E.; Plaza, J.; Plaza, A.; Li, J. Visual attention-driven hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8065–8080.

34. Santavas, N.; Kansizoglou, I.; Bampis, L.; Karakasis, E.; Gasteratos, A. Attention! A lightweight 2D hand pose estimation approach. IEEE Sens. J. 2020, 21, 11488–11496.

35. Sun, J.; Jiang, J.; Liu, Y. An introductory survey on attention mechanisms in computer vision problems. In Proceedings of the 2020 6th International Conference on Big Data and Information Analytics (BigDIA), Shenzhen, China, 4–6 December 2020; pp. 295–300.

36. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

37. Xie, C.; Zhu, H.; Fei, Y. Deep coordinate attention network for single image super-resolution. IET Image Process. 2022, 16, 273–284.

38. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

39. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

40. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

41. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

42. Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740.

| Detection Category | Precision (%) | AP50 (%) |
|---|---|---|
| Pedestrians | 75.10 | 68.10 |
| Vehicles | 84.60 | 80.60 |
| Traffic lights | 69.30 | 55.20 |

| Attention Mechanism | Parameters | GFLOPs | mAP (%) |
|---|---|---|---|
| SE | 16.0 | 62.95 | |
| CBAM | 16.1 | 62.70 | |
| CA | 16.0 | 63.05 | |

| Model | mAP (%) | AP50, People (%) | AP50, Car (%) | AP50, Traffic Lights (%) | Inference Time (ms) |
|---|---|---|---|---|---|
| AD-Faster-RCNN | 56.15 | 62.00 | 50.30 | - | - |
| SSD | 45.39 | 44.33 | 46.46 | - | - |
| MS-DAYOLO | 55.70 | 45.37 | 73.74 | 48.00 | - |
| YOLOv4-416 | 60.86 | 50.32 | 71.40 | - | - |
| YOLOv4 | 60.45 | 51.70 | 69.20 | - | - |
| YOLOv5 | 66.10 | 56.20 | 76.00 | 47.03 | 3.4 |
| YOLOv6 | 53.00 | 47.00 | 71.20 | 40.80 | 3.15 |
| YOLOv7-tiny | 56.03 | 51.70 | 72.60 | 43.80 | 2.6 |
| Ours | 67.97 | 68.10 | 80.60 | 55.20 | 4.7 |

| Model | CA | BiFPN | SIoU | mAP (%) | AP50, Pedestrians (%) | AP50, Vehicles (%) | AP50, Traffic Lights (%) |
|---|---|---|---|---|---|---|---|
| Experiment 1 | √ | √ | × | 65.50 | 64.60 | 80.30 | 51.60 |
| Experiment 2 | √ | × | √ | 61.38 | 58.20 | 77.40 | 48.53 |
| Experiment 3 | × | √ | √ | 60.97 | 53.70 | 76.90 | 48.20 |
| Experiment 4 | √ | √ | √ | 67.96 | 68.10 | 80.60 | 55.17 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Zhang, W.; Yang, X. An Enhanced Deep Learning Model for Obstacle and Traffic Light Detection Based on YOLOv5. Electronics 2023, 12, 2228. https://doi.org/10.3390/electronics12102228

Li Z, Zhang W, Yang X. An Enhanced Deep Learning Model for Obstacle and Traffic Light Detection Based on YOLOv5. Electronics. 2023; 12(10):2228. https://doi.org/10.3390/electronics12102228

Chicago/Turabian Style: Li, Zhenwei, Wei Zhang, and Xiaoli Yang. 2023. "An Enhanced Deep Learning Model for Obstacle and Traffic Light Detection Based on YOLOv5." Electronics 12, no. 10: 2228. https://doi.org/10.3390/electronics12102228

APA Style: Li, Z., Zhang, W., & Yang, X. (2023). An Enhanced Deep Learning Model for Obstacle and Traffic Light Detection Based on YOLOv5. Electronics, 12(10), 2228. https://doi.org/10.3390/electronics12102228