1. Introduction

In traditional computer networks, the control plane and data plane are tightly coupled, and the overall structure is highly distributed. It is therefore difficult to add new features quickly or to control network behavior precisely. Software-defined networking (SDN) [1] is a new paradigm that enhances network performance and makes monitoring and managing network status and behavior easier. By separating data forwarding from routing control, SDN achieves centralized control with distributed forwarding. SDN is considered a revolution in computer networking, providing a new experimental approach for researching new internet architectures and greatly propelling the development of the next generation of the internet [2].

However, while SDN has brought network flexibility and scalability, it has also introduced new security concerns, as its flattened network structure increases the attack surface [3]. Numerous studies have shown that SDN networks are currently highly vulnerable to various security attacks. Attackers can launch multiple security attacks against SDN’s application plane, control plane, and data plane, posing a significant threat to network security. Of particular concern is the topology attack, which involves tampering with the topology information in the controller [4]. The controller collects topology information of the entire network to create a global view of the network. The accuracy of the controller’s global topology information is crucial, as many applications heavily rely on this information.

Currently, almost all mainstream open-source controllers such as Floodlight, OpenDayLight, Maestro, NOX, and Ryu use the same mechanism to collect topology information, namely the OpenFlow Discovery Protocol (OFDP). Under OFDP, the controller periodically sends LLDP packets to discover the network topology. During topology discovery, when the controller’s LLDP packet reaches a switch, the switch forwards the packet to its adjacent switch, which then sends it back to the controller. Based on the path recorded in each LLDP packet, the controller constructs a topology link between the corresponding switch ports. However, the transmission path of LLDP packets lacks an effective verification mechanism, allowing attackers to inject false links into the controller by relaying LLDP packets. This disrupts the controller’s global view and is known as the Relay Link Forgery Attack (RLFA), which is a type of topology forgery attack against SDN controllers.

Several defensive measures have been proposed in the literature to reduce the risk of topology forgery attacks [5,6,7]. For instance, TopoGuard [5] and SPHINX [7] monitor network packets aimed at the controller to detect topology forgery attacks. TopoGuard+ [6] introduced two novel topology forgery attacks that can bypass the defenses of TopoGuard and SPHINX, and proposed using delay detection to detect RLFA. Subsequently, many detection methods for RLFA [8,9,10,11,12] have also relied on delay measurements. However, these defensive techniques against RLFA depend heavily on the timing information of LLDP frames. In cases where the network is complex or subject to delay attacks that cause significant fluctuations in network latency, these methods may prove ineffective. Moreover, it is challenging to manually extract effective features for such attacks. Detecting Relay Link Forgery Attacks has therefore become a challenging task.

In recent years, transformer models based on self-attention mechanisms [13] have achieved tremendous success in both natural language processing (NLP) [14] and computer vision (CV) [15]. Inspired by sentiment classification tasks in NLP and the application of transformers in network flow classification [16,17], we have found that transformers have great potential in extracting information from network flows. Compared to traditional detection methods that rely solely on delay information, transformer models can better integrate various types of information from network flows. Therefore, we have applied transformer models to the detection of Relay Link Forgery Attacks.

In this paper, we propose a transformer-based detection model (RLFAT) for Relay Link Forgery Attacks in SDN. On the one hand, the attack information is concealed within the network stream of the controller. On the other hand, the attack can cause abnormal network states, and this anomalous information is also included in the network stream. Therefore, we use a transformer to extract features from the network stream of the controller and classify whether the network has been subjected to RLFA. The deployment location of the RLFAT detection module in SDN is illustrated in Figure 1. In our experiments, the proposed model demonstrates excellent performance in terms of accuracy, recall, F1 score, and AUC.

The main contributions of this paper are as follows:

To our knowledge, this article is the first work that uses deep learning methods for RLFA detection. We propose a transformer-based deep learning detection model (RLFAT) that utilizes the powerful feature extraction ability of transformers on sequential data to detect Relay Link Forgery Attacks on SDN.

We created a dataset based on random topology structures, random network states, and random attack initiation, which contains a more diverse range of network states. This enables our model to have good adaptability after learning from the dataset.

We tested RLFAT with different parameters for RLFA detection as well as several commonly used deep learning models. We evaluated these different models based on accuracy, recall rate, F1 score, and AUC. The results indicate that our proposed method has the best performance among all methods.

The structure of the remaining parts of this article is as follows:

Section 2 introduces related works;

Section 3 provides background information on link forgery attacks and defines the threat model;

Section 4 describes the deep learning models used and our proposed detection model;

Section 5 presents the experimental process and evaluates the experimental results;

Section 6 concludes the paper and suggests future work.

2. Related Work

In this section, we will briefly describe the technical development of topology forgery attack detection in SDN networks.

Topology forgery attacks in SDN were first introduced in [5]. It is a protocol-based attack where the adversary does not need to have access to the control plane or know the vulnerabilities of the controller. The two types of security threats in this attack are link forgery attack (LFA) and host location hijacking attack (HLHA). The purpose of LFA is to add a false link between switches in the controller’s topology view. On the other hand, the purpose of HLHA is to manipulate the position of hosts in the network to mislead the flow of network traffic. The RLFA studied in this article is a type of LFA. The TopoGuard proposed in that work protects the network from the impact of LFA through a port label policy. However, the main drawback of TopoGuard is that an adversary can reset the host label of a port and then pose as a switch by relaying LLDP packets, which renders it ineffective against the RLFA studied in this article.

SPHINX [7] is a more general anomaly detector. It also studied topology forgery attacks and discovers potential anomalies by comparing flow graphs built from network traffic. However, the defense mechanism of SPHINX cannot explicitly detect host hijacking attacks or link forgery attacks.

In [6], two new topology attack methods are proposed that can bypass the defense of TopoGuard. The authors developed the TopoGuard+ framework, which includes a Link Latency Inspector (LLI) module to detect false links. LLI calculates the latency of links between switches and compares it with a latency threshold. However, as the network state changes continuously, a single latency threshold can hardly distinguish true links from false ones accurately.
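The threshold comparison described above can be sketched as follows. This is only an illustration: the function names, the subtraction of the two control-link delays, and the choice of the upper quartile plus three interquartile ranges as the threshold are assumptions made for this example, not details given in the text.

```python
import statistics

def link_latency(total_ms, ctrl_to_src_ms, dst_to_ctrl_ms):
    """Estimate switch-to-switch latency by removing the two control-link delays."""
    return total_ms - ctrl_to_src_ms - dst_to_ctrl_ms

def latency_threshold(history_ms, k=3.0):
    """Hypothetical threshold: upper quartile plus k interquartile ranges."""
    q1, _, q3 = statistics.quantiles(history_ms, n=4)
    return q3 + k * (q3 - q1)

def is_suspicious(latency_ms, history_ms):
    """Flag a link whose latency exceeds the threshold derived from past links."""
    return latency_ms > latency_threshold(history_ms)

# Made-up latencies (ms) of previously accepted links
history = [1.0, 1.1, 0.9, 1.2, 1.0, 1.3, 0.8, 1.1]
normal = link_latency(3.1, 1.0, 1.0)    # ordinary switch-to-switch link
relayed = link_latency(12.0, 1.0, 1.0)  # link whose LLDP packet took a detour
```

Because the threshold is derived from whatever latencies were observed earlier, a network whose latencies fluctuate widely, or are inflated on purpose, pushes the threshold up, which is the weakness noted above.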

In [8], a hypothesis testing method is used to perform statistical testing on the link latency for each new link. In [9], it is proposed that with the increase of LLDP packet size, false links produce unusual latency deviations compared to normal links. Large LLDP packets will attempt silent relaying on the attacker’s interface. In [10], it is proposed to combine delay attacks with link forgery attacks to bypass the defense of the TopoGuard+ framework by disrupting the network latency. The authors then propose using machine learning to classify the latency of LLDP packets. However, their machine learning model is trained on a single topology environment, which lacks generality and practicality for large networks.

In their further work [18], they proposed a real-time and scalable defense mechanism to detect attacks. They expanded the topology of the training dataset and used multiple machine learning classification algorithms to train the model. However, their detection performance, as indicated by the low F1 score, was insufficient for detecting delay attacks, highlighting the need to improve detection capability.

In [11], delay-based self-learning monitoring for switches was also used to address link-fabrication attacks in hybrid networks. In [12], a delay threshold was also employed to defend against relay link forgery attacks.

There are also some other detection schemes, but they all have certain implementation issues. In [19], the author proposed a new link discovery protocol that improved the controller discovery method, reduced the controller workload, and enhanced efficiency and security. However, since the existing OFDP protocol is already quite mature, adoption of a new protocol is inevitably hindered by factors such as network deployment, applications, and standardization. In [20], an active detection method called SPV was proposed, which employed a verification method based on covert probing. SPV gradually verified legitimate links and detected false links by sending probing packets that were difficult to distinguish from normal traffic. However, this method is not suitable for controllers with large-scale applications, as large-scale deployment would make covert probing no longer covert.

Based on the above review, we can see that the mainstream approach to combating RLFA in the literature is to analyze the delay information of LLDP packets in various ways, with the aim of filtering out relayed LLDP packets. However, the existence of delay attacks and the complexity of delay in large-scale networks make the detection methods relying solely on delay information unreliable. At the same time, false positives may also be caused by fluctuations in delay on some normal links. Moreover, some other non-mainstream defense methods are also difficult to implement and deploy. Therefore, to achieve comprehensive detection of RLFA, we have adopted the popular Transformer framework and proposed a new detection model called RLFAT, which extracts information from the complete network flow received by the controller, achieving effective detection of RLFA attack information.

3. Relay Link Forgery Attack

This section introduces the background of Relay Link Forgery Attacks (RLFA), including the structure of Software-Defined Networking (SDN), the process of SDN link discovery, and the attack itself. We then define the threat model used in this paper.

3.1. Background Information

3.1.1. The Composition Structure of SDN

Software-Defined Networking (SDN) is a modern network management approach that separates the control plane and data plane in network infrastructure. A typical SDN implementation architecture consists of three planes:

The control plane contains the SDN controller, which serves as the central brain of the network. The SDN controller has a global view of the network and communicates with data plane elements to make centralized, automated decisions about network behavior.

The data plane includes physical and virtual network devices such as switches and routers that forward traffic based on instructions received from the control plane. The data plane devices are responsible for the actual forwarding of network packets.

The application plane includes network applications that run on top of the control plane and interact with the network using SDN APIs. These applications provide network services and functionalities, such as network monitoring, security, and load balancing, and can be customized to meet specific needs.

In summary, the control plane provides centralized control and management, the data plane implements forwarding decisions, and the application plane provides network services and functionalities. SDN encompasses various types of technologies, including functional separation, network virtualization, and automation achieved through programmability.

3.1.2. The Link Discovery Process of SDN

OFDP is the protocol used by OpenFlow controllers to discover the underlying topology. The OpenFlow protocol utilizes OFDP to achieve bi-directional communication between switches and controllers [21]. In SDN, OFDP has undergone minor modifications to perform topology discovery based on LLDP [22]. LLDP is a link layer protocol that allows directly connected devices to declare the physical connection between them. When LLDP is enabled on switches in the network, they periodically broadcast packets containing information such as their device type, name, and other relevant details. Other devices on the same network receive these packets and use the information to construct a graphical representation of the network topology.

The specific process of SDN topology discovery is shown in Figure 2. To initiate the SDN topology discovery process, the controller first creates an LLDP packet and sends it as a packet-out message to switch S1. Upon receiving the packet-out message, switch S1 sends the LLDP packet out of its P2 port. When switch S2 receives the LLDP packet, it writes its own information S2 and the receiving port P3 into the LLDP packet. Subsequently, switch S2 sends a packet-in message carrying the LLDP packet to the controller based on the pre-installed rules in its flow table. Therefore, based on the information in the LLDP packet, the controller can infer the existence of an internal link from (S1, P2) to (S2, P3).
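The discovery steps above can be mimicked with a small simulation. It is a simplified sketch rather than controller code: the `Lldp` record, the `WIRES` table, and the function names are hypothetical, and real OpenFlow messages carry many more fields.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Lldp:
    src_dpid: str  # switch addressed by the controller's packet-out
    src_port: int  # port on which that switch emits the LLDP packet

# Hypothetical physical wiring: (switch, port) -> (neighbor switch, neighbor port)
WIRES = {("S1", 2): ("S2", 3), ("S2", 3): ("S1", 2)}

def packet_out(dpid, port):
    """Controller builds an LLDP packet and asks `dpid` to emit it on `port`."""
    return Lldp(dpid, port)

def deliver(lldp, wires):
    """Follow the wire; return the packet-in (dpid, in_port, lldp), or None
    when no switch is attached to the emitting port."""
    peer = wires.get((lldp.src_dpid, lldp.src_port))
    if peer is None:
        return None
    return (peer[0], peer[1], lldp)

def discover(ports, wires):
    """Probe every (switch, port) pair and collect the directed links inferred."""
    links = set()
    for dpid, port in ports:
        pkt_in = deliver(packet_out(dpid, port), wires)
        if pkt_in is not None:
            dst_dpid, dst_port, lldp = pkt_in
            links.add(((lldp.src_dpid, lldp.src_port), (dst_dpid, dst_port)))
    return links

links = discover([("S1", 2), ("S2", 3), ("S1", 1)], WIRES)
```

The controller learns the source end of a link from the LLDP payload and the destination end from the packet-in metadata; nothing in this exchange verifies the path the packet actually travelled.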

3.1.3. Relay Link Forgery Attack

The purpose of a Relay Link Forgery Attack is to insert an illegitimate link between two legitimate switches in the global view of the controller. The Relay Link Forgery Attack is a type of link forgery attack. Based on the different methods of generating LLDP packets, link forgery attacks can be classified into two types: forgery attacks and relay attacks. Forgery attacks occur when an attacker launches an attack by sending fake LLDP packets to the controller. The relay attack, which is the focus of this article, refers to the attacker launching an attack by relaying genuine LLDP packets between two switches that are not directly connected, using methods such as wireless communication. Compared to forgery attacks, which can be defended against using various signature algorithms, detecting relay attacks is much more difficult.

3.2. Threat Model

The attack discussed in this article is the Relay Link Forgery Attack (RLFA), which is a serious security threat. We assume that an attacker can gain control of any two hosts or virtual machines in an SDN network by infecting them with viruses, trojans, or other malware; in the worst case, the attacker could be an insider. As shown in Figure 3, the attacker listens to the LLDP packets sent from switch S1 and relays them to h2, which ultimately sends the LLDP packet to switch S2. From the controller’s perspective, there seems to be a normal link between switches S1 and S2, but in reality, this link is created by the attacker’s relayed LLDP packets. It is worth noting that the attacker does not forge any packets during the entire attack but only relays genuine ones, which makes RLFA difficult to detect directly.
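The following sketch illustrates why relaying defeats checks that only authenticate the LLDP payload. Everything in it is an assumption for illustration: the HMAC tag stands in for the signature-based defenses mentioned earlier, and the key, the port numbers, and the function names are hypothetical.

```python
import hashlib
import hmac

KEY = b"controller-secret"  # hypothetical key known only to the controller

def sign(dpid, port):
    """Controller creates an LLDP payload with an authentication tag."""
    msg = f"{dpid}:{port}".encode()
    tag = hmac.new(KEY, msg, hashlib.sha256).hexdigest()
    return {"dpid": dpid, "port": port, "tag": tag}

def verify(lldp):
    """Controller checks that the payload was produced with its own key."""
    msg = f"{lldp['dpid']}:{lldp['port']}".encode()
    expected = hmac.new(KEY, msg, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, lldp["tag"])

def relay(lldp, inject_at):
    """One compromised host captures the frame and sends it out of band to the
    other, which re-injects the identical payload at its own switch port."""
    dst_dpid, dst_port = inject_at
    return (dst_dpid, dst_port, dict(lldp))

def infer_link(packet_in):
    """Controller logic: source from the payload, destination from the packet-in."""
    dst_dpid, dst_port, lldp = packet_in
    return ((lldp["dpid"], lldp["port"]), (dst_dpid, dst_port))

# Hypothetical layout: one attacker host on S1 port 1, the other on S2 port 4
genuine = sign("S1", 1)
relayed_in = relay(genuine, inject_at=("S2", 4))
forged_payload = {"dpid": "S1", "port": 1, "tag": "00"}  # attacker-made packet

relayed_ok = verify(relayed_in[2])
forged_ok = verify(forged_payload)
false_link = infer_link(relayed_in)
```

The forged payload fails verification, while the relayed one passes because it is bit-for-bit genuine, so the controller records a link between two ports that are not physically connected.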

Attackers often hide their attack behavior in various covert ways to avoid detection and recognition as much as possible. In the Relay Link Forgery Attack, attackers can use an out-of-band communication channel, i.e., relay LLDP packets through a channel outside the SDN network, to hide their attack behavior. This out-of-band channel may be fiber, a wireless connection, or any other communication method that is not controlled by the SDN network. Moreover, this attack does not generate any false packets; all packets are normal packets from inside the network. Therefore, it can easily bypass methods that detect link forgery attacks by checking for forged LLDP packets. Since the SDN network topology may be dynamic, hosts can be powered off and replaced with switches, and the network should be able to adapt quickly. This means that schemes that aim to prevent relay attacks by adding labels to ports, such as TopoGuard [5], must have a forgetting mechanism. The Relay Link Forgery Attack can easily evade this defense [6] by exploiting this forgetting mechanism. Against detection methods based on delay, attackers can also use delay attacks [10] to increase the delay of real network links and thereby evade detection.

Attackers can use the Relay Link Forgery Attack to fabricate links in an SDN network, which can lead to the routing table and topology structure of the SDN network being tampered with, resulting in network traffic forwarding errors and reduced network performance and availability. In addition, RLFA may also lead to further denial of service attacks. Most SDN controllers provide a spanning tree service to prevent broadcast storms and save energy. When any topology update occurs, the spanning tree service is triggered to shut down some redundant ports to prevent network loops. Attackers can exploit this function to launch denial of service attacks by injecting a fake link into the existing topology, thereby causing normal switch ports to be shut down.

4. Detection Model

In this section, we first briefly introduce the Transformer deep learning model we used and then describe our defensive model.

4.1. Transformer Model

The Transformer architecture, proposed by Ashish Vaswani et al. in their paper “Attention Is All You Need” [14], is primarily used in the field of natural language processing (NLP) and revolutionized the way we handle data sequences. The main idea behind the Transformer model is the self-attention mechanism. Unlike traditional recurrent neural networks (RNNs) and convolutional neural networks (CNNs), the Transformer model uses self-attention to process input sequences. The self-attention mechanism allows the model to weigh the importance of each element in the sequence and leverage this information when making predictions.

Utilizing an architecture that depends solely on attention mechanisms, the Transformer introduces a multi-head attention mechanism as a key innovation [23]. It consists of several components, including encoders and decoders, which are composed of multiple layers of self-attention and feed-forward neural networks. The encoder takes in an input sequence and generates a representation of that sequence, while the decoder takes in that representation and generates an output sequence.

A series of identical layers make up the encoder in the Transformer model. Each of these layers comprises two sub-layers: a multi-head self-attention layer and a fully connected feed-forward neural network layer. The multi-head self-attention layer is used to compute a representation of the input sequence, while the feed-forward network is used to increase the nonlinearity of the representation.

Similar to the encoder, the Transformer’s decoder is composed of multiple identical layers. In addition to the multi-head self-attention and feed-forward network layers similar to the encoder, the decoder also contains an additional multi-head attention layer, which allows the decoder to use the results of the encoder to generate the output sequence.

As a crucial element of the Transformer model, the multi-head attention mechanism partitions the input into query, key, and value segments by mapping the query and key to a high-dimensional space. By calculating similarity in different subspaces of the space, the multi-head attention mechanism is able to weigh the importance of different elements in the input sequence. The mechanism consists of multiple attention heads that can process the input sequence in parallel, and their results are concatenated to obtain the final result.
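To make the mechanism concrete, the sketch below implements scaled dot-product attention, softmax(QKᵀ/√d_k)V, in plain Python and applies it per head. It is a deliberately reduced illustration: the learned query, key, value, and output projection matrices of a real multi-head layer are omitted (treated as identity), and the function names and toy input are our own.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out

def multi_head(x, num_heads):
    """Split each vector into num_heads chunks, attend within each chunk,
    and concatenate the per-head results (projections omitted)."""
    d = len(x[0]) // num_heads
    heads = []
    for h in range(num_heads):
        part = [row[h * d:(h + 1) * d] for row in x]
        heads.append(attention(part, part, part))
    return [sum((head[i] for head in heads), []) for i in range(len(x))]

# Toy sequence of three elements with four features each
x = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
y = multi_head(x, num_heads=2)
```

Each output row is a weighted average of the value vectors, with weights determined by how similar the corresponding query is to every key, which is how the model decides which elements of the sequence to focus on.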

By introducing the multi-head attention mechanism, the Transformer model becomes capable of concentrating on particular elements of the input sequence when making predictions. This enables the model to handle sequences of different lengths better and can process input sequences in parallel, making its training and usage faster. Additionally, by utilizing the self-attention mechanism to capture the relationships between different elements in the sequence, the Transformer is able to handle long sequences of data better than traditional recurrent neural networks.

In summary, the Transformer architecture is a complex deep learning framework designed for processing sequence data, and it leverages self-attention mechanisms to weigh the importance of different elements in the input sequence. The Transformer is a powerful deep learning architecture that has had a significant impact on the field of natural language processing and is widely used in various applications.

4.2. Defensive Model

The most covert aspect of RLFA is that it can make almost all data packets appear legitimate, and LLDP packets cannot be detected during the relay process. Therefore, when facing such attacks, detection needs to comprehensively consider network behavior and network state.

The false link information received by the controller is contained in the network stream, which includes a series of packet-in messages carrying relayed LLDP packets. These packets are individually legitimate, but the behavior represented by their sequence is illegitimate. Only by taking a sequential perspective can the corresponding attack behavior be analyzed. Meanwhile, RLFA disrupts the global view of the SDN network, causing anomalies in the network itself. For example, because Relay Link Forgery Attacks require relays, the delay of the inserted false links differs from that of normal links, which is a commonly used direction for detecting RLFA. In addition, the literature indicates that relay link forgery attacks cause the IP address of the victim host to appear more frequently within a certain range [12] and also cause fluctuations in the number of packet-in and packet-out messages [18]. This indicates that, in addition to abnormal delays, the network stream received by the controller contains a great deal of other attack information that can be mined. Therefore, to detect this type of attack, it is necessary to consider network behavior and network state comprehensively, and both are reflected in the network stream.

Therefore, in this paper, we analyze the data stream received by the controller. We abstract the problem as a binary classification problem, namely whether there is RLFA attack information in the network flow received by the controller. To address this binary classification problem, we use the Transformer model, which has powerful sequence feature extraction capabilities, to automatically extract features from the network flow. This enables us to comprehensively consider both behavioral information and state information in the network flow.

The network flow we are dealing with is a temporal sequence. Compared to RNNs, which also handle temporal information, the Transformer supports parallel training and trains more efficiently on GPUs. When analyzing and predicting longer sequences, the Transformer can also capture longer-range associations between elements. Additionally, the Transformer avoids the vanishing and exploding gradient problems that are frequently encountered in RNNs. Furthermore, the features learned by the Transformer are more robust for transfer learning because of its more structured memory, enabling it to process longer sequences of information [24].

In this paper, we utilized the encoder module of the Transformer model to detect RLFA. The structure of the RLFAT model we used is illustrated in Figure 4. The input of the model is the network flow received by the controller, which is a sequence of packets. The datasets were collected in both normal and attack states, and the details of the dataset collection are described in Section 5.2. Analogous to NLP tasks, we treat each data packet as a word and the entire network flow as a sentence, from which we extract information on the presence of attack behavior. First, the input packet sequence passes through the encoding layer, which incorporates position encoding to indicate the position of each packet in the sequence. Then, the multi-head self-attention layer in each encoder block extracts sequence features, and the resulting output is fed to the next layer through a feed-forward layer. Finally, after several encoder blocks, the extracted features are processed through a fully connected layer, which outputs the probability that the flow was collected in an attack state.

5. Results and Evaluation

In this section, we first introduce the evaluation criteria for our experiments, which is followed by the collection process of the dataset we used in our experiments. Then, we describe the training process of RLFAT and finally evaluate the proposed model based on the experimental results.

5.1. Evaluation Criteria

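We employ a variety of metrics to evaluate our proposed model, such as accuracy, recall, precision, and F1 score. These metrics are commonly used evaluation measures for binary classification problems and are defined as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$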

In this context, true positive (TP) and true negative (TN) represent the correctly predicted values, while false positive (FP) and false negative (FN) indicate the events of misclassification.

To evaluate the ability of our model to identify samples, we used the ROC curve [25] and evaluated it by calculating the AUC (area under the ROC curve). The larger the AUC, the better, as it indicates a higher diagnostic value of the model.

AUC ≈ 1.0: The ideal state of the model’s judgment;

AUC = 0.7–0.9: High accuracy of the model;

AUC = 0.5: The model has no diagnostic value.

Here, M represents the number of positive samples and N represents the number of negative samples.
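As a worked example of these criteria, the sketch below computes the four classification metrics from the confusion counts and the AUC from sample ranks. The AUC expression is not reproduced in the text above; the rank-based form used here, (sum of the ranks of positive samples − M(M+1)/2) / (M·N), is one common formulation that is consistent with the definitions of M and N, and is an assumption on our part. The labels and scores are made-up toy values.

```python
def confusion(labels, preds):
    """Count TP, TN, FP, FN for binary labels and predictions (1 = attack)."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    return tp, tn, fp, fn

def metrics(tp, tn, fp, fn):
    """Return accuracy, precision, recall, and F1 score."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1

def auc_by_rank(labels, scores):
    """Rank-based AUC; tied scores share the average of their ranks."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average 1-based rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    m = sum(labels)        # number of positive samples (M)
    n = len(labels) - m    # number of negative samples (N)
    pos_rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (pos_rank_sum - m * (m + 1) / 2) / (m * n)

# Toy data: true labels and predicted attack probabilities
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]
preds = [1 if s >= 0.5 else 0 for s in scores]

accuracy, precision, recall, f1 = metrics(*confusion(labels, preds))
auc = auc_by_rank(labels, scores)
```

The rank-based value equals the fraction of (positive, negative) pairs in which the positive sample receives the higher score, which is why an AUC of 0.5 corresponds to a model with no diagnostic value.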

5.2. Acquisition of the Dataset

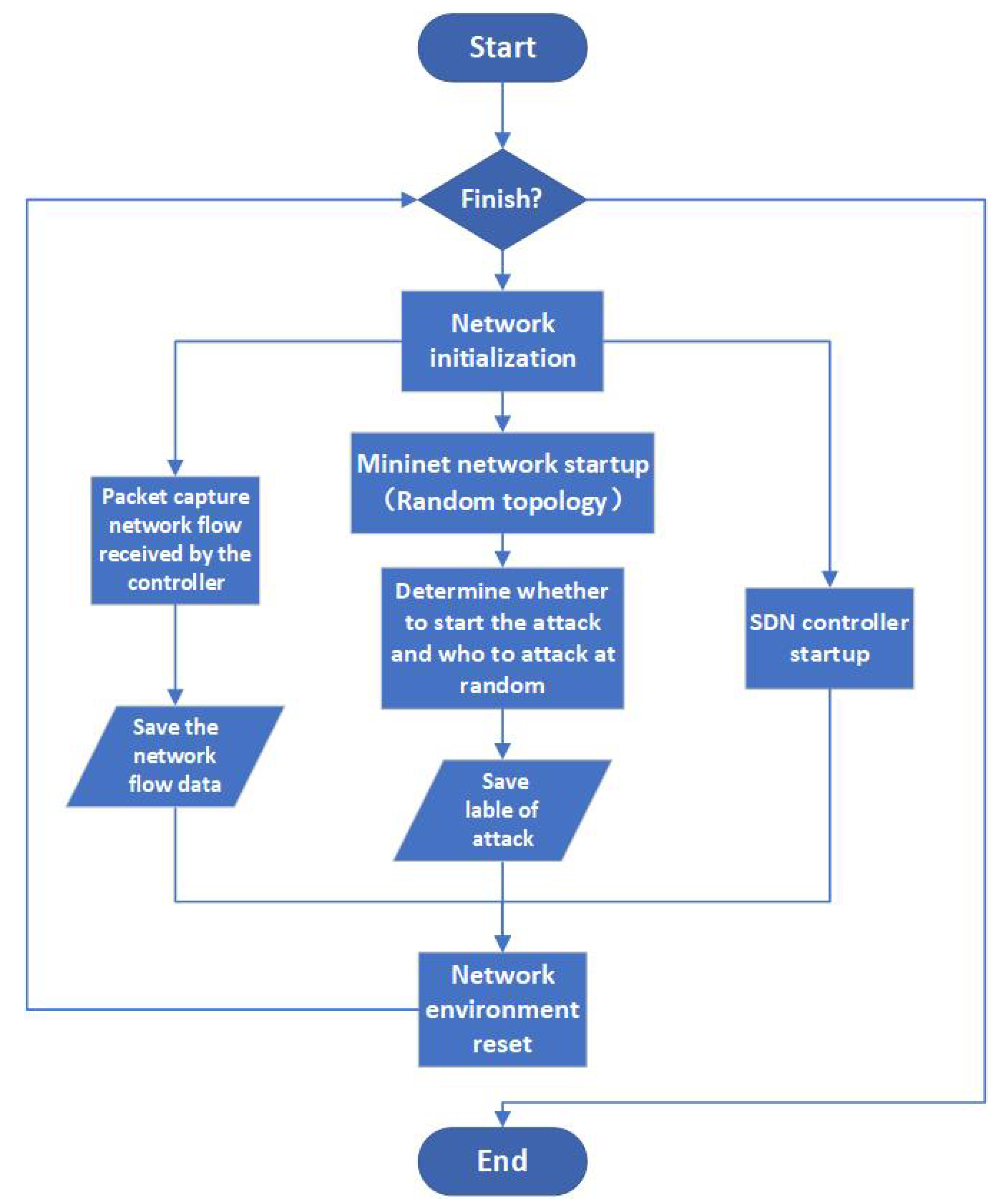

Due to the lack of detailed datasets for RLFA in SDN networks, we developed a script to automatically generate the required training dataset in a simulated environment. The flowchart of the generation process is shown in Figure 5. This script was developed in Python and runs on an Ubuntu virtual machine. The network is simulated using Mininet, the SDN controller is implemented using the Ryu controller, and network flow capture is performed using the pypcap package. The automatic generation script consists of three parallel modules: the controller module, the network flow collection module, and the network activity module. The controller module is responsible for automatically starting and resetting the controller. The network flow collection module listens on the corresponding port of the controller and captures the network flows received by the controller during the attack process. The captured network flows in this study contain 256 complete data packets received by the controller and their corresponding timestamps.

The network activity module is the core of the entire process. To ensure the applicability of the dataset, we randomly generate a neighbor matrix to generate the Mininet simulation network, with a network size of 5 to 10 switches, and simulate normal network activity. Then, we randomly select whether to launch an attack, generate attack labels based on whether an attack is launched, and wait for the network flow collection module to collect network flows. Afterwards, the entire network is reset.
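The random topology and attack decision can be sketched as below. This is not the generation script itself: the function names, the probability of adding extra links, the equal attack probability, and the step that first builds a random spanning tree so that no switch is isolated are all assumptions of the example, and the Mininet and attack-launch steps are left out.

```python
import random

def random_adjacency(min_switches=5, max_switches=10, extra_link_prob=0.2, rng=random):
    """Return a symmetric 0/1 adjacency matrix for a random connected topology."""
    n = rng.randint(min_switches, max_switches)
    adj = [[0] * n for _ in range(n)]
    # Random spanning tree first, so every switch is reachable (assumption)
    for node in range(1, n):
        parent = rng.randrange(node)
        adj[node][parent] = adj[parent][node] = 1
    # Then sprinkle additional links at random
    for i in range(n):
        for j in range(i + 1, n):
            if adj[i][j] == 0 and rng.random() < extra_link_prob:
                adj[i][j] = adj[j][i] = 1
    return adj

def is_connected(adj):
    """Depth-first search from switch 0 to check that all switches are reachable."""
    seen, stack = {0}, [0]
    while stack:
        u = stack.pop()
        for v, linked in enumerate(adj[u]):
            if linked and v not in seen:
                seen.add(v)
                stack.append(v)
    return len(seen) == len(adj)

rng = random.Random(0)       # fixed seed only to make the example repeatable
adj = random_adjacency(rng=rng)
attack = rng.random() < 0.5  # randomly decide whether this run launches an attack
label = 1 if attack else 0   # label stored alongside the captured flow
```

Each run of the real pipeline would then build the simulated network from such a matrix, generate traffic, optionally launch the attack, and reset everything before the next sample.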

The labels generated by the network activity module and the network flows collected by the network flow collection module are used as a data entry in the dataset. Each data entry represents a network flow, which is a sequence of multiple packets. In our simulation experiments, the length of the packet sequence is 256, which contains about 3–5 SDN topology collection cycles. The information of each packet is placed in a single row, with an 8-bit timestamp indicating when the packet was received by the controller. Among the packets received by the controller, more than 95 percent of them have a length of less than 248, and most of the protocol information and network information are contained in the packet headers. Therefore, we align the length of each packet to 248 bits and add the 8-bit timestamp to the front. Thus, each row contains the timestamp of when the packet was received by the controller and the complete hexadecimal data of the packet. A total of 256 such rows form a 256*256 matrix.
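The row layout described above can be illustrated with the following sketch. It assumes that the 8 and 248 figures denote bytes (the text states bits), that the timestamp is stored as a big-endian 64-bit float, and that shorter packets are zero-padded while longer ones are truncated; the function names and the synthetic packets are hypothetical.

```python
import struct

ROW_LEN = 256                    # values per row and rows per flow
TS_LEN = 8                       # timestamp field (assumed to be 8 bytes)
PAYLOAD_LEN = ROW_LEN - TS_LEN   # 248 values of packet data

def encode_row(timestamp, packet):
    """Prefix the packet with its arrival time, then pad or truncate to 256 values."""
    ts = struct.pack(">d", timestamp)  # assumption: big-endian float64
    payload = packet[:PAYLOAD_LEN].ljust(PAYLOAD_LEN, b"\x00")
    return list(ts + payload)

def encode_flow(packets):
    """Turn up to 256 (timestamp, packet) pairs into a 256 x 256 matrix."""
    rows = [encode_row(ts, pkt) for ts, pkt in packets[:ROW_LEN]]
    while len(rows) < ROW_LEN:
        rows.append([0] * ROW_LEN)     # pad short flows with empty rows
    return rows

# Synthetic flow: 256 packets of growing length filled with a nonzero byte
flow = [(1700000000.0 + i * 0.01, bytes([(i % 255) + 1]) * (60 + i)) for i in range(256)]
matrix = encode_flow(flow)
```

Fixing every row to the same width lets a whole flow be handled as a single square matrix, at the cost of discarding the tail of the few packets longer than 248.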

After one month of collection from 10 parallel switches, our script has collected a total of 334,000 records, of which 166,604 were in the attack state and the rest were in the non-attack state. The data are roughly balanced. Furthermore, we have uploaded and open-sourced the dataset on the Kaggle website [26].

5.3. Training Environment and Process

Our experiments were conducted on a 64-bit Linux operating system with an AMD EPYC 7302 processor and an NVIDIA RTX 4090 GPU, with 64 GB of RAM and 24 GB of video memory. The experimental code was written in Python, using the PyTorch deep learning framework and packages such as NumPy, pandas, scikit-learn, and Matplotlib. We used the BCELoss loss function and the AdamW optimizer [27].
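For reference, the binary cross-entropy that PyTorch's BCELoss computes with its default mean reduction can be written as follows. The symbols are chosen here for illustration: B is the batch size, y_i ∈ {0, 1} is the attack label of sample i, and p_i is the attack probability predicted by the model.

```latex
\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{B}\sum_{i=1}^{B}\Bigl[\, y_i \log p_i + (1 - y_i)\log(1 - p_i) \Bigr]
```

The loss is small when the predicted probability is close to the true label and grows without bound as a confident prediction turns out to be wrong.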

We trained our model using the dataset we collected, using 300,000 samples for training and 33,000 samples for testing. We considered models with different numbers of layers and whether to apply dropout to each sublayer during training [28], with a dropout value of 0.1 as suggested in reference [13]. We trained each model for 200 to 500 epochs, until the loss function no longer decreased. Each epoch took about 5 to 10 min, and each model was trained for approximately 1 to 4 days.

5.4. Evaluation of Experimental Results

5.4.1. Comparison of Different Model Parameters

After completing the training, we analyzed the accuracy, precision, F1 score, and recall. The results are shown in Figure 6.

Figure 6a shows the performance of models with different numbers of layers on the training set when Dropout = 0.

Figure 6b shows the performance of models with different numbers of layers on the test set when Dropout = 0.

Figure 6c shows the performance of models with different numbers of layers on the training set when Dropout = 0.1.

Figure 6d shows the performance of models with different numbers of layers on the test set when Dropout = 0.1.

The results show that increasing the number of model layers improves the model’s ability to discriminate between attack and normal flows. Applying dropout to each sub-layer improves the model’s generalization to the test set, although it may slightly reduce performance on the training set. Across all our experimental results, our model detects RLFA effectively.

5.4.2. Comparison of Different Deep Learning Models

Next, to verify the performance of our RLFAT model, we compared it with the classic and commonly used deep learning models CNN [29], LSTM [30], and GRU [31]. For a fair comparison, all models were trained using the same deep learning framework (PyTorch), the same loss function (BCELoss), the same optimizer (AdamW), the same batch size (256), and the same learning rate, and they were trained until the loss no longer decreased. The CNN uses the classic VGG16 model [29], while the LSTM and GRU both use the default LSTM and GRU models of PyTorch. Their input dimensions are 256 × 256 (matching the dimensions of a single sample in the dataset obtained in Section 5.2), and their output is the probability that an attack is present. The training results and ROC curves of the models are shown in Figure 7 and Figure 8 (Acc represents Accuracy, Pre represents Precision, recall represents Recall, and f1 represents F1 score).

Figure 7 demonstrates that our RLFAT outperforms the other models (LSTM, GRU, and CNN) in all metrics, including accuracy, precision, recall, and F1 score. The performance of LSTM and GRU, which also handle sequential data, is comparable, while CNN’s performance is relatively inferior. Similarly, in Figure 8, our RLFAT model shows a performance improvement of 7.3%, 2.2%, and 2.3% over CNN, LSTM, and GRU, respectively. Therefore, our experimental results indicate that the RLFAT model, which detects RLFA by extracting attack information from network flows received by the controller, has the best performance among the models compared.
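A ROC curve such as those in Figure 8 is commonly summarized by the area under the curve (AUC), which equals the probability that a randomly chosen attack sample receives a higher score than a randomly chosen non-attack sample. The sketch below computes AUC through that pairwise interpretation; the function name, labels, and scores are hypothetical and serve only to illustrate the definition.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: 1 for the attack state, 0 for the non-attack state.
    scores: predicted attack probabilities. Ties count as half a correct pair.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one sample of each class")
    correct = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(positives) * len(negatives))


# Hypothetical model outputs for six samples.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.4f}")
```

The pairwise form is quadratic in the number of samples, so it suits small illustrations; for a test set of tens of thousands of samples a rank-based implementation would be the usual choice.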

5.4.3. Comparison with Traditional Methods

To better evaluate the effectiveness of our RLFAT model, we compared it with existing traditional solutions. We chose the classic TopoGuard+ [7] model and the MLLG model proposed in reference [10], a work that uses delay attacks to impact RLFA detection. We collected the corresponding datasets required by each model in our simulation environment and tested their detection effects on RLFA, comparing them with our model. The results are shown in Figure 9 (Acc represents Accuracy, Pre represents Precision, recall represents Recall, f1 represents F1 score, and auc represents AUC).

As shown in the figure, our RLFAT model outperforms the traditional TopoGuard+ model and the machine learning-based MLLG model in detecting RLFA.

Due to the interference of link delay attacks, the detection performance of TopoGuard+ is not ideal. As described in reference [10], link delay attacks prolong the delay of normal links, which raises the LLDP delay threshold that TopoGuard+ calculates from abnormally large delay estimates. The delay of relayed LLDP packets then falls below this threshold and passes TopoGuard+’s check, so RLFA goes undetected.
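The threshold inflation described above can be illustrated with a small numerical sketch. It assumes, purely for illustration, that the detector sets its delay threshold with an upper-fence rule of the form Q3 + 3·IQR over recently measured link latencies; this rule, the function name, and all latency values are assumptions and should not be read as the TopoGuard+ implementation.

```python
import statistics


def upper_fence(latencies_ms, k=3.0):
    """Outlier threshold Q3 + k * IQR over measured link latencies (assumed rule)."""
    q1, _, q3 = statistics.quantiles(latencies_ms, n=4, method="inclusive")
    return q3 + k * (q3 - q1)


# Hypothetical latencies (ms) of genuine links under normal conditions.
clean_links = [1.0, 1.1, 1.2, 1.3, 1.4, 1.5, 1.6, 1.7]

# The same links when a delay attack adds 5 ms to half of them.
delayed_links = [1.0, 1.1, 1.2, 1.3, 6.4, 6.5, 6.6, 6.7]

# Hypothetical latency of an LLDP packet relayed by the attacker.
relayed_lldp_ms = 4.0

clean_threshold = upper_fence(clean_links)
inflated_threshold = upper_fence(delayed_links)

print(f"threshold without delay attack: {clean_threshold:.3f} ms")
print(f"threshold with delay attack:    {inflated_threshold:.3f} ms")
print("relay flagged without delay attack:", relayed_lldp_ms > clean_threshold)
print("relay flagged with delay attack:   ", relayed_lldp_ms > inflated_threshold)
```

Under these assumptions the relayed packet exceeds the threshold computed from clean measurements but stays below the inflated one, which mirrors the failure mode reported for delay-threshold detectors.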

On the other hand, the detection performance of our RLFAT model is about 10% higher than that of the MLLG model. This is because MLLG is a machine learning model based solely on delay features, so link delay attacks can also affect its detection. In addition, the MLLG model is trained in a single topology environment, which may reduce its detection performance in other topology environments. Our RLFAT model, by contrast, uses a self-attention mechanism to extract features. With self-attention, the model dynamically calculates the relative importance of each position to every other position, adaptively adjusting the contribution of each position to the output. This mechanism enables the model to attend to all positions in the input sequence simultaneously and thus to consider the data of the entire network flow more comprehensively. Therefore, our model can better capture the relevance of different features in the network flow sequence to the detection problem, resulting in better performance on network flow sequence data.
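The way self-attention weighs every position against every other position can be seen in a minimal scaled dot-product attention sketch, following the formulation in reference [13]. For brevity it uses the input vectors directly as queries, keys, and values (no learned projections and a single head), which is a simplifying assumption; the toy sequence and function names are hypothetical and do not reproduce the RLFAT architecture.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this position to every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted mix of all value vectors.
        outputs.append([sum(w * v[c] for w, v in zip(row, values))
                        for c in range(len(values[0]))])
    return outputs, weights


# Toy sequence of three positions with two features each.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(sequence, sequence, sequence)

for position, row in enumerate(weights):
    print(position, [round(w, 3) for w in row])
```

Each row of `weights` sums to one and shows how much each position contributes to that output position, so every output depends on the whole sequence rather than on a fixed local window.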

6. Conclusions and Future Work

In SDN environments, due to the invisibility of RLFA and the instability of network latency, a new model is needed that does not rely on latency detection and that comprehensively takes into account network behavior and environmental state. In this article, we introduce the Relay Link Forgery Attack in SDN networks and use an advanced Transformer model to detect it. We simulate the Relay Link Forgery Attack on the SDN controller in different topology network environments and collect the network flows of the controller. We design and develop a deep learning model based on Transformer, which extracts deep features from the network flows received by the controller to detect this attack. Our experiments show that the approach performs well and outperforms the other models compared. However, there are limitations in our work.

First, we use a dataset collected in a simulation environment. This avoids many challenges of collecting data in the real world, such as the high cost and complexity of data collection and the incompleteness or noise found in many real-world datasets. Furthermore, even if we overcame these problems and collected a dataset in a real environment, it would still be difficult to prove that the dataset is valid: in a real environment that is not fully controlled, we cannot guarantee that the collected data are free of malicious samples, which would affect the training effectiveness of our attack detection model. However, using a simulated environment to collect datasets also has its limitations. The most obvious is that, because simulated and real-world data differ, the model may overfit to the simulated data and fail to generalize to the real world. In addition, a dataset collected in the simulation environment may not capture the complexity and diversity of real-world situations, which may degrade performance in the real world. To address this issue, our main strategy is to train and test on multiple datasets collected in simulation environments and to cross-validate the results as much as possible to avoid overfitting. In the future, we plan to extend this approach to address more issues in SDN networks. Finally, considering that our current experiments are all conducted in a simulated environment, we also plan to verify and deploy this approach in actual SDN engineering environments.

Since our main goal is to demonstrate the effectiveness of deep learning methods for detecting RLFA in a controlled experimental environment, our focus is on providing a proof of concept rather than on evaluating performance in larger and more complex networks. Therefore, in the environment we simulate, the number of controllers is only 10, mainly because of the limited computing resources currently available for deep learning in our laboratory. Of course, small-scale topology structures with fewer than 10 switches may not fully represent complex network environments. Therefore, in future work, we plan to extend the research to larger and more complex networks.

In future work, we also plan to gradually improve the classification model of our proposed detection algorithm as deep learning technology develops, and we will continuously update our datasets and trained models accordingly. At the same time, because our research shows that a Transformer deep learning model can extract security-relevant features from SDN network flows, many other attacks on SDN controllers may be detectable using similar methods.

Author Contributions

Conceptualization, T.Z. and Y.W.; methodology, T.Z.; software, T.Z.; validation, T.Z. and Y.W.; formal analysis, T.Z.; investigation, T.Z.; resources, T.Z. and Y.W.; data curation, T.Z.; writing—original draft preparation, T.Z.; writing—review and editing, T.Z. and Y.W.; visualization, T.Z.; supervision, Y.W.; project administration, Y.W.; funding acquisition, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The authors confirm that the data supporting the findings of this study are available within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kreutz, D.; Ramos, F.; Verissimo, P.E.; Rothenberg, C.E.; Uhlig, S. Software-Defined Networking: A Comprehensive Survey. Proc. IEEE 2014, 103, 14–76.

2. Wang, J.; Jiao, H.Y.; Wang, Y.; Chen, S.Y.; Hu, H.X. A method of OpenFlow-based real-time conflict detection and resolution for SDN access control policies. Chin. J. Comput. 2015, 38, 872–883.

3. Lu, Y.Q.; Mao, Z.S.; Cheng, Z.; Qin, J.C.; Jin, D.Z.; Pan, W. Research on SDN Topology Attack and Its Defense Mechanism. J. South China Univ. Technol. (Nat. Sci. Ed.) 2020, 48, 114–122.

4. Abdou, A.R.; Van Oorschot, P.C.; Wan, T. Comparative Analysis of Control Plane Security of SDN and Conventional Networks. IEEE Commun. Surv. Tutor. 2018, 20, 3542–3559.

5. Hong, S.; Xu, L.; Wang, H.; Gu, G. Poisoning Network Visibility in Software-Defined Networks: New Attacks and Countermeasures. In Proceedings of the Network & Distributed System Security Symposium, San Diego, CA, USA, 8–11 February 2015.

6. Skowyra, R.; Lei, X.; Gu, G.; Dedhia, V.; Landry, J. Effective Topology Tampering Attacks and Defenses in Software-Defined Networks. In Proceedings of the 2018 48th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), Luxembourg, 25–28 June 2018.

7. Dhawan, M.; Poddar, R.; Mahajan, K.; Mann, V. SPHINX: Detecting Security Attacks in Software-Defined Networks. In Proceedings of the Network & Distributed System Security Symposium, San Diego, CA, USA, 8–11 February 2015.

8. Smyth, D.; Mcsweeney, S.; O’Shea, D.; Cionca, V. Detecting Link Fabrication Attacks in Software-Defined Networks. In Proceedings of the International Conference on Computer Communication & Networks, Vancouver, BC, Canada, 31 July–3 August 2017.

9. Shrivastava, P.; Agarwal, A.; Kataoka, K. Detection of Topology Poisoning by Silent Relay Attacker in SDN. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking (MobiCom), New Delhi, India, 29 October–2 November 2018; pp. 792–794.

10. Soltani, S.; Shojafar, M.; Mostafaei, H.; Pooranian, Z.; Tafazolli, R. Link Latency Attack in Software-Defined Networks. In Proceedings of the 2021 17th International Conference on Network and Service Management (CNSM), Izmir, Turkey, 25–29 October 2021; pp. 187–193.

11. Shrivastava, P.; Kataoka, K. Topology poisoning attacks and prevention in hybrid software-defined networks. IEEE Trans. Netw. Serv. Manag. 2021, 19, 510–523.

12. Gao, Y.; Xu, M.D. Defense Against Software-Defined Network Topology Poisoning Attacks. Tsinghua Sci. Technol. 2023, 28, 39–46.

13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017.

14. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

15. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

16. He, H.Y.; Yang, Z.G.; Chen, X.N. PERT: Payload Encoding Representation from Transformer for Encrypted Traffic Classification. In Proceedings of the 2020 ITU Kaleidoscope: Industry-Driven Digital Transformation (ITU K), Ha Noi, Vietnam, 7–11 December 2020.

17. Bikmukhamedov, R.F.; Nadeev, A.F. Generative transformer framework for network traffic generation and classification. T-Comm 2020, 14, 64–71.

18. Soltani, S.; Shojafar, M.; Mostafaei, H.; Tafazolli, R. Real-time Link Verification in Software-Defined Networks. IEEE Trans. Netw. Serv. Manag. 2023.

19. Azzouni, A.; Boutaba, R.; Trang, N.T.M.; Pujolle, G. sOFTDP: Secure and efficient topology discovery protocol for SDN. arXiv 2017, arXiv:1705.04527.

20. Alimohammadifar, A.; Majumdar, S.; Madi, T.; Debbabi, M. Stealthy Probing-Based Verification (SPV): An Active Approach to Defending Software Defined Networks Against Topology Poisoning Attacks. In Proceedings of the 23rd European Symposium on Research in Computer Security, ESORICS 2018, Barcelona, Spain, 3–7 September 2018; Proceedings: Part II. Springer: Berlin/Heidelberg, Germany, 2018.

21. Alhiyari, S. SDN-OpenFlow Topology Discovery: An Overview of Performance Issues. Appl. Sci. 2021, 11, 6999.

22. Li, Y.; Cai, Z.P.; Xu, H. LLMP: Exploiting LLDP for Latency Measurement in Software-Defined Data Center Networks. J. Comput. Sci. Technol. 2018, 33, 277–285.

23. Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2014, arXiv:1409.0473.

24. Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://gwern.net/doc/www/s3-us-west-2.amazonaws.com/d73fdc5ffa8627bce44dcda2fc012da638ffb158.pdf (accessed on 26 March 2023).

25. Alaa, T. Classification Assessment Methods: A detailed tutorial. Appl. Comput. Inform. 2018, 17, 168–192.

26. Tianyi, Z. SDN Network Flow Data of RLFA. 2023. Available online: https://www.kaggle.com/datasets/skyztyzxy/sdn-network-flow-data-of-rlfa (accessed on 26 March 2023).

27. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

28. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

29. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

30. Greff, K.; Srivastava, R.K.; Koutnik, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A Search Space Odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2222–2232.

31. Chung, J.; Gulcehre, C.; Cho, K.H.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).