GFAM: A Gender-Preserving Face Aging Model for Age Imbalance Data

Abstract

1. Introduction

2. Related Works

2.1. Face Aging

2.2. Image-to-Image Translation

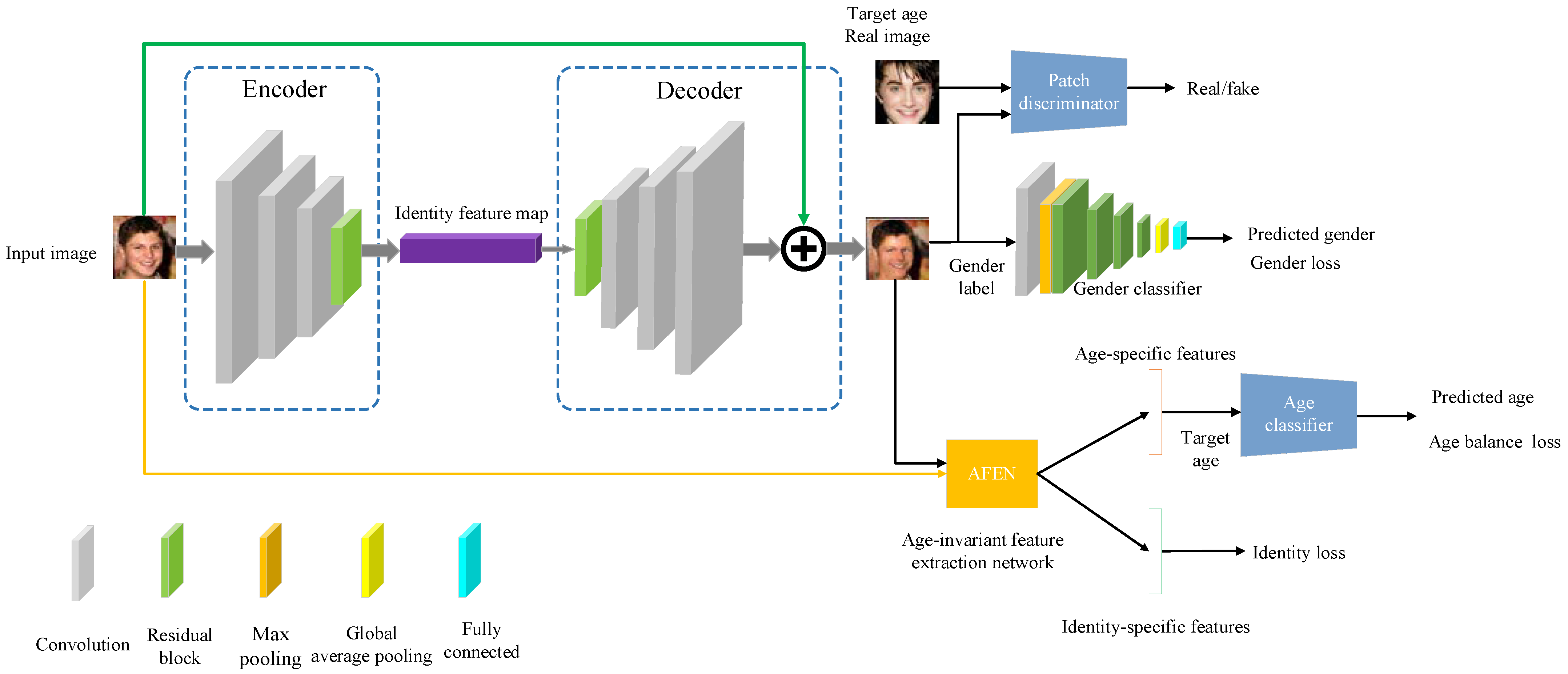

3. Gender-Preserving Face-Aging Model

3.1. Overview

3.2. GAN-Based Architecture

3.3. Gender Classifier

3.4. Identity-Preserving Module

3.5. Optimization

4. Experiments

4.1. Data Collection and Annotation

4.2. Implementation Details

4.3. Qualitative Comparison

4.4. Quantitative Comparison

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, Z.; Song, Y.; Qi, H. Age progression/regression by conditional adversarial autoencoder. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5810–5818.

- Wang, Z.; Tang, X.; Luo, W.; Gao, S. Face aging with identity-preserved conditional generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7939–7947.

- Despois, J.; Flament, F.; Perrot, M. AgingMapGAN (AMGAN): High-resolution controllable face aging with spatially-aware conditional GANs. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 613–628.

- Song, J.; Zhang, J.; Gao, L.; Liu, X.; Shen, H.T. Dual Conditional GANs for Face Aging and Rejuvenation. In Proceedings of the 2018 International Joint Conference on Artificial Intelligence (IJCAI), Stockholm, Sweden, 13–19 July 2018; pp. 899–905.

- Li, Q.; Liu, Y.; Sun, Z. Age progression and regression with spatial attention modules. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11378–11385.

- Yang, H.; Huang, D.; Wang, Y.; Jain, A.K. Learning face age progression: A pyramid architecture of gans. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 31–39.

- Huang, Z.; Chen, S.; Zhang, J.; Shan, H. PFA-GAN: Progressive face aging with generative adversarial network. IEEE Trans. Inf. Forensics Secur. 2020, 16, 2031–2045.

- Liu, Y.; Li, Q.; Sun, Z.; Tan, T. A3GAN: An attribute-aware attentive generative adversarial network for face aging. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2776–2790.

- Todd, J.T.; Mark, L.S.; Shaw, R.E.; Pittenger, J.B. The perception of human growth. Sci. Am. 1980, 242, 132–145.

- Wu, Y.; Thalmann, N.M.; Thalmann, D. A plastic-visco-elastic model for wrinkles in facial animation and skin aging. In Fundamentals of Computer Graphics: World Scientific, Proceedings of the Second Pacific Conference on Computer Graphics and Applications, Beijing, China, 26–29 August 1994; World Scientific Inc.: River Edge, NJ, USA, 1994; pp. 201–213.

- Kemelmacher-Shlizerman, I.; Suwajanakorn, S.; Seitz, S.M. Illumination-aware age progression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3334–3341.

- Wang, W.; Yan, Y.; Cui, Z.; Feng, J.; Yan, S.; Sebe, N. Recurrent face aging with hierarchical autoregressive memory. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 654–668.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

- Li, P.; Hu, Y.; Li, Q.; He, R.; Sun, Z. Global and local consistent age generative adversarial networks. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1073–1078.

- Li, P.; Hu, Y.; He, R.; Sun, Z. Global and local consistent wavelet-domain age synthesis. IEEE Trans. Inf. Forensics Secur. 2019, 14, 2943–2957.

- Yao, X.; Puy, G.; Newson, A.; Gousseau, Y.; Hellier, P. High resolution face age editing. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 8624–8631.

- Park, T.; Liu, M.-Y.; Wang, T.-C.; Zhu, J.-Y. Semantic image synthesis with spatially-adaptive normalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2337–2346.

- He, Z.; Kan, M.; Shan, S.; Chen, X. S2gan: Share aging factors across ages and share aging trends among individuals. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9440–9449.

- Makhmudkhujaev, F.; Hong, S.; Park, I.K. Re-Aging GAN: Toward Personalized Face Age Transformation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3908–3917.

- Liu, L.; Wang, S.; Wan, L.; Yu, H. Multimodal face aging framework via learning disentangled representation. J. Vis. Commun. Image Represent. 2022, 83, 103452.

- Huang, Z.; Zhang, J.; Shan, H. When Age-Invariant Face Recognition Meets Face Age Synthesis: A Multi-Task Learning Framework and A New Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 7917–7932.

- Huang, Z.; Chen, S.; Zhang, J.; Shan, H. AgeFlow: Conditional age progression and regression with normalizing flows. arXiv 2021, arXiv:2105.07239.

- Zhao, Y.; Po, L.; Wang, X.; Yan, Q.; Shen, W.; Zhang, Y.; Liu, W.; Wong, C.-K.; Pang, C.-S.; Ou, W.; et al. ChildPredictor: A Child Face Prediction Framework with Disentangled Learning; IEEE Transactions on Multimedia; IEEE: Piscataway, NJ, USA, 2022.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Choi, Y.; Uh, Y.; Yoo, J.; Ha, J.-W. Stargan v2: Diverse image synthesis for multiple domains. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8188–8197.

- Liu, M.; Ding, Y.; Xia, M.; Liu, X.; Ding, E.; Zuo, W.; Wen, S. Stgan: A unified selective transfer network for arbitrary image attribute editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3673–3682.

- Or-El, R.; Sengupta, S.; Fried, O.; Shechtman, E.; Kemelmacher-Shlizerman, I. Lifespan age transformation synthesis. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 739–755.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8110–8119.

- He, S.; Liao, W.; Yang, M.Y.; Song, Y.-Z.; Rosenhahn, B.; Xiang, T. Disentangled lifespan face synthesis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3877–3886.

- Li, S.; Lee, H.J. Effective Attention-Based Feature Decomposition for Cross-Age Face Recognition. Appl. Sci. 2022, 12, 4816.

- Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.; Wang, Z.; Smolley, S.P. Least squares generative adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2794–2802.

- Chen, B.-C.; Chen, C.-S.; Hsu, W.H. Cross-age reference coding for age-invariant face recognition and retrieval. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 768–783.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ren, J.; Zhang, M.; Yu, C.; Liu, Z. Balanced MSE for Imbalanced Visual Regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7926–7935.

- Eidinger, E.; Enbar, R.; Hassner, T. Age and gender estimation of unfiltered faces. IEEE Trans. Inf. Forensics Secur. 2014, 9, 2170–2179.

- Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Learning face representation from scratch. arXiv 2014, arXiv:1411.7923.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- MEGVII Inc. Face++ Research Toolkit. Available online: https://www.faceplusplus.com/faceid-solution/ (accessed on 21 May 2023).

| Reference | Year | Base Model | Keywords |
|---|---|---|---|
| Wang et al. [12] | 2018 | RNN | Autoencoder |
| CAAE [1] | 2017 | cGAN | Autoencoder |
| IPCGAN [2] | 2018 | cGAN | Identity-Preserved |
| AMGAN [3] | 2020 | cGAN | High Resolution |
| Dual conditional GAN [4] | 2018 | cGAN | Dual Conditional GANs |
| dual AcGAN [5] | 2020 | cGAN | Spatial Attention Mechanism |
| PAG-GAN [6] | 2018 | GAN | Pyramid Architecture |
| Li et al. [15] | 2018 | GAN | Global and Local Features |
| WGLCA-GAN [16] | 2019 | GAN | Wavelet Transform |
| A3GAN [8] | 2021 | GAN | Attention Mechanism |
| Yao et al. [17] | 2020 | GAN | High Resolution |
| S2GAN [19] | 2019 | GAN | Share Aging Trends |
| PFA-GAN [7] | 2020 | GAN | Progressive Neural Networks |
| Re-Aging GAN [20] | 2021 | GAN | Face Age Transformation |
| Liu et al. [21] | 2021 | GAN | Disentangled Representation |
| MTLFace [22] | 2022 | GAN | Multi-task Learning |
| AgeFlow [23] | 2021 | GAN | Flow-based |
| Zhao et al. [24] | 2022 | GAN | Child Face Prediction |

| Dataset | Images (Subjects) | Age Gap | Subject Type | Dataset Distribution (age group: images) |
|---|---|---|---|---|
| AdienceFaces [37] | 16,477 (2284) | 0–60+ | Non-famous | 0–2: 1427; 4–6: 2162; 8–13: 2294; 15–20: 1653; 25–32: 4897; 38–43: 2350; 48–53: 825; 61+: 869 |
| CACD [34] | 163,446 (2000) | 14–62 | Celebrities | 14–20: 7057; 21–30: 39,069; 31–40: 43,104; 41–50: 40,344; 51–60: 30,960; 61+: 2912 |
| UTKFace [1] | 23,699 (20,000+) | 0–116 | Non-famous | 0–15: 3819; 16–20: 1049; 21–30: 7784; 31–40: 4339; 41–50: 2100; 51–60: 2211; 61+: 2397 |
| CASIA-WebFace [38] | 494,414 (10,575) | N/A | Both | N/A |
| Age_FR | 182,004 (9719) | 0–116 | Both | 0–20: 9402; 21–30: 38,849; 31–40: 42,625; 41–50: 40,142; 51–60: 30,700; 61+: 20,106 |
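The age imbalance named in the title can be read directly from the distribution columns above. The following is a minimal sketch, not a procedure taken from the paper: it copies the CACD and Age_FR counts from the table and computes each group's share plus a simple largest-to-smallest ratio. The names `AGE_COUNTS`, `group_shares`, and `imbalance_ratio` are hypothetical, and the ratio is only one illustrative way to summarize imbalance.

```python
# Per-age-group image counts, copied from the dataset table above.
# Group labels use ASCII hyphens instead of en dashes.
AGE_COUNTS = {
    "CACD": {
        "14-20": 7057, "21-30": 39069, "31-40": 43104,
        "41-50": 40344, "51-60": 30960, "61+": 2912,
    },
    "Age_FR": {
        "0-20": 9402, "21-30": 38849, "31-40": 42625,
        "41-50": 40142, "51-60": 30700, "61+": 20106,
    },
}


def group_shares(counts):
    """Return each age group's fraction of the listed images."""
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def imbalance_ratio(counts):
    """Largest group size divided by smallest group size (1.0 = balanced).

    Illustrative metric only; it is an assumption, not defined in the paper.
    """
    return max(counts.values()) / min(counts.values())


if __name__ == "__main__":
    for name, counts in AGE_COUNTS.items():
        shares = group_shares(counts)
        smallest = min(shares, key=shares.get)
        print(f"{name}: ratio={imbalance_ratio(counts):.2f}, "
              f"smallest group={smallest} ({shares[smallest]:.1%})")
```

Under this measure the 61+ group is the smallest in CACD, while in Age_FR the smallest group is 0–20, which matches the counts listed in the table.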

Verification Accuracy (%)

| Method | 20–30 | 31–40 | 41–50 | 51–60 |
|---|---|---|---|---|
| CAAE [1] | - | 13.59 | 8.75 | 2.67 |
| IPCGAN [2] | - | 99.76 | 99.01 | 96.34 |
| PAG-GAN [6] | - | 99.93 | 98.01 | 89.24 |
| WGLCA-GAN [16] | - | 99.90 | 99.88 | 98.89 |
| GFAM | 100.0 | 99.83 | 99.79 | 99.11 |

| | w/o Gender-Preserving Module | With Gender-Preserving Module |
|---|---|---|
| Gender Accuracy (%) | 75.94 | 79.69 |

Face Verification Accuracy (%)

| Age Group | w/o Identity-Preserving Module | With Identity-Preserving Module |
|---|---|---|
| 31–40 | 99.78 | 99.83 |
| 41–50 | 99.01 | 99.69 |
| 51–60 | 98.98 | 99.11 |

Age Classification (%)

| Age Group | w/o Age Classifier | With Age Classifier |
|---|---|---|
| 21–30 (144 images) | 34.53 | 36.36 |
| 31–40 (79 images) | 36.36 | 41.46 |
| 41–50 (46 images) | 30.30 | 36.58 |
| 51–60 (23 images) | 43.90 | 45.70 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, S.; Lee, H.J. GFAM: A Gender-Preserving Face Aging Model for Age Imbalance Data. Electronics 2023, 12, 2369. https://doi.org/10.3390/electronics12112369
