1. Introduction

Many real-world problems in fields such as industrial manufacturing [1], medical applications [2], and wireless sensor networks [3] are usually modeled as optimization problems. With the continuous development of science and technology, these practical optimization problems are often multimodal and high-dimensional, with many local optima, strong nonlinearity, and strict constraints. One challenge is that traditional optimization methods struggle to obtain the optimal solution of such increasingly complex problems: their computational efficiency is low, their computational workload is large, and in many cases they cannot solve the problem at all [4]. Therefore, many researchers have searched extensively for better ways to solve optimization problems. Metaheuristic algorithms are an emerging evolutionary computing technology. Owing to their high precision, simplicity, and flexibility, metaheuristic algorithms have clear advantages in avoiding stagnation in local optima and have strong exploration and exploitation abilities in solving complex optimization problems [5]. They are widely considered an effective technology for solving complex optimization problems [6,7,8,9,10,11,12,13,14].

In recent years, metaheuristic algorithms based on swarm intelligence have been widely studied for their advantages. Swarm intelligence algorithms are mainly inspired by collective biological behavior, i.e., they imitate animals that live in groups, such as wolves, fish, and birds. Their core idea is to simulate group members following the group leader to obtain food or mates. Common swarm intelligence algorithms include particle swarm optimization (PSO) [15], the grey wolf optimizer (GWO) [16], the whale optimization algorithm (WOA) [17], the artificial bee colony (ABC) [18], and the firefly algorithm (FA) [19].

Among the many metaheuristic algorithms available, the sparrow search algorithm (SSA) is a population-based optimization algorithm proposed by Xue et al. [20] in 2020. It simulates the foraging and anti-predation behavior of sparrow populations in nature and treats the search for food sources as the optimization process. SSA has a simple structure, strong flexibility, and few parameters, and it performs well in convergence speed, search accuracy, and stability. It has been applied to various practical problems, such as intrusion detection [21], energy consumption [22], wireless sensor networks [23], feature selection [24], network planning [25], data compression [26], and engineering problems [27,28,29,30,31,32,33]. However, a second challenge is that SSA is highly susceptible to interference from local optima, which reduces its search capability and search accuracy. Therefore, improving SSA is of great significance.

The idea of ensemble learning was put forward in 2010 [34], and researchers have achieved good results by combining optimization algorithms with ensemble learning to solve complex optimization problems [35,36,37,38,39]. Ensemble learning integrates multiple strategies with different characteristics to achieve better results. In practice, however, not all strategies need to be used, so selective ensemble can be adopted for better performance [40,41].

In recent years, more and more swarm intelligence algorithms have been proposed to deal with complex optimization problems. Our team has also proposed new algorithms and achieved good results in engineering applications [3,10,28,42]. However, the no free lunch (NFL) theorem [43] proves that no metaheuristic algorithm is suitable for solving all optimization problems. This motivates the continued development of new metaheuristic algorithms for different problems.

Based on the advantages of SSA in dealing with optimization problems and the idea of selective ensemble in algorithm optimization, a multi-strategy sparrow search algorithm with selective ensemble (MSESSA) is proposed. Selective ensemble is used to choose among strategies so that computational resources are not wasted, and it is combined with three novel learning search strategies to improve the search capability of SSA in different search stages, remove its existing limitations as far as possible, and ultimately improve its search performance. The major contributions and innovations of MSESSA are as follows:

Firstly, according to the search characteristics of SSA in different stages, three search learning strategies with different characteristics are proposed: variable logarithmic spiral saltation learning enhances global search capability, neighborhood-guided learning accelerates the convergence of local search, and adaptive Gaussian random walk coordinates exploration and exploitation. These three strategies can be used in different stages of the sparrow foraging search process to improve the performance of the algorithm;

Secondly, the idea of selective ensemble is adopted to select the most appropriate search strategy from the strategy pool for the current search stage with the aid of the priority roulette selection method;

Thirdly, the boundary processing mechanism of the original algorithm is modified to dynamically adjust the locations of sparrows that cross the boundary. Discoverers and alerters are randomly relocated to continue global search over a large range, while scroungers are relocated based on the optimal and suboptimal positions of the population to conduct better local search;

Finally, MSESSA is tested on the full set of IEEE Congress on Evolutionary Computation 2017 (CEC 2017) test functions. The results of the CEC 2017 function tests, the Wilcoxon rank-sum test, and ablation experiments show that MSESSA achieves better comprehensive performance than 13 other advanced algorithms. The stability, effectiveness, and superiority of MSESSA are further verified on four practical engineering optimization problems.

The remainder of this paper is organized as follows:

Section 2 reviews related work on SSA.

Section 3 briefly describes SSA.

Section 4 describes and analyzes the proposed MSESSA in detail.

Section 5 compares MSESSA with 13 other advanced algorithms on CEC 2017 and verifies its superior performance.

Section 6 applies MSESSA to four classical practical engineering problems.

Section 7 summarizes the research and outlines directions for future work.

2. Related Work

According to the no free lunch theorem [43], no algorithm can excel at solving all optimization problems. SSA is easily disturbed by local optima and tends to converge prematurely into stagnation; it also has insufficient optimization accuracy and low search efficiency. Therefore, many researchers have studied ways to improve SSA. Liu et al. [21] proposed a hybrid strategy improved sparrow search algorithm (HSISSA). They constructed a hybrid circle-piecewise mapping to initialize the population and combined a spiral search method with the Lévy flight formula to update the locations of the discoverers and alerters, which expanded the search range of the population and enhanced its search ability. In addition, the simplex method and the pinhole imaging method were used to optimize the locations of sparrows with poor and optimal fitness values so as to avoid search stagnation and local optima. Finally, the effectiveness of HSISSA was verified in intrusion detection. Zhang et al. [24] proposed a mayfly sparrow search hybrid algorithm (MASSA). The idea of the mayfly algorithm [44] was added to the optimization process of SSA, and circle chaotic mapping, Lévy flight, and a nonlinear inertia coefficient were introduced to balance global and local search. MASSA achieved remarkable results in RFID network planning. Wu et al. [27] proposed a sparrow search algorithm based on quantum computing and multi-strategy enhancement (QMESSA). They adopted an improved circle chaotic mapping, combined quantum computing with a quantum gate mutation mechanism, and constructed an adaptive t-distribution and a new position update formula based on an enhanced search strategy to accelerate convergence and increase variability. The superiority of QMESSA was verified on several classical practical application problems. Ouyang et al. [28] proposed a learning sparrow search algorithm (LSSA). They introduced lens reverse learning and a sine and cosine guidance mechanism and adopted a differential-based local search strategy, which expanded the range of possible solutions and helped the algorithm escape stagnation. They verified the practicability of LSSA in robot path planning. Meng et al. [30] proposed a multi-strategy improved sparrow search algorithm (MSSSA). They introduced chaotic mapping to obtain high-quality initial populations, adopted a reverse learning strategy to increase population diversity, designed an adaptive parameter control strategy to balance exploration and exploitation, and embedded a hybrid disturbance mechanism in the individual update stage. The advantages of MSSSA were verified on engineering optimization problems. Ma et al. [33] proposed an enhanced multi-strategy sparrow search algorithm (EMSSA) based on three strategies. First, in the uniformity-diversification orientation strategy, an adaptive-tent chaos theory was proposed to obtain a more diverse and random initial population. Second, in the hazard-aware transfer strategy, a weighted sine cosine algorithm based on a growth function was constructed to avoid stagnation in local optima. Third, in the dynamic evolutionary strategy, a similarity perturbation function was designed, and triangle similarity theory was introduced to improve the exploration ability. EMSSA performed significantly better than SSA and other advanced algorithms on 23 benchmark functions, CEC2014, and CEC2017.

The above studies propose constructive strategies to address the shortcomings of SSA, and the improved algorithms have been applied in various fields. These studies improve the performance of SSA to a certain extent, verify its effectiveness in applications, and provide guidance for subsequent research. However, they still have some shortcomings in dealing with optimization problems:

In previous work, the improved algorithms still have limitations and uncertainties in practical applications, such as insufficient optimization accuracy, low search efficiency, and unstable performance, leaving room for improvement;

Existing research mainly introduces ideas or formulas from other algorithms, whereas many machine learning ideas could also yield new improvements.

So far, there are still few studies on improving SSA because the algorithm was proposed relatively recently. Although existing research can improve the performance of SSA, the improved algorithms still have limitations and uncertainties in application. Improvement frameworks based on the ideas or formulas of other algorithms are not sufficiently novel, and according to the no free lunch theorem [43], no algorithm can outperform all others in every field. It is therefore necessary to study this promising and important metaheuristic algorithm further, which prompts us to develop a variant with good comprehensive performance for solving optimization problems. This paper combines the ideas of multi-strategy and selective ensemble learning to design three novel strategies that improve SSA and eliminate, as far as possible, its limitations and uncertainties in application. To the best of our knowledge, no previous study has introduced multi-strategy and selective ensemble learning to the improvement of SSA.

3. Sparrow Search Algorithm

The design of SSA is inspired by the foraging and anti-predation behavior of sparrows. During foraging, each sparrow plays a specific role in a clear division of labor. According to their adaptation to the environment, sparrows can be divided into discoverers, scroungers, and alerters. Discoverers usually have high fitness, so they are responsible for searching widely for food, sharing information about the foraging direction with other individuals, and guiding the population toward food. Scroungers follow the discoverer with the best fitness value to obtain food, while alerters perform reconnaissance and early warning: they detect dangers and pass danger information to the other individuals in the population.

The behavior of sparrows is therefore idealized by the following assumptions:

Sparrows with high energy reserves are called discoverers; they are capable of foraging and are responsible for finding areas with rich food sources and providing foraging areas or directions for the scroungers. Sparrows with low energy reserves are called scroungers. The level of an individual's energy reserve is assessed by its fitness value;

Once an alerter detects a predator, it issues an alarm. When the alarm value exceeds the safety threshold, the discoverers must guide the scroungers to a safe area;

Every sparrow can become a discoverer if it finds a better food source, but the proportion of discoverers and scroungers in the whole population remains the same;

The scroungers follow the discoverers that provide the best foraging information. Meanwhile, some scroungers constantly monitor the discoverers and compete with them for food to increase their own foraging rate;

When aware of danger, sparrows at the edge of the population quickly move toward a safe area to obtain a better position, while sparrows in the middle of the population move randomly.

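We can model the positions of the sparrows as follows:

$$
X=\begin{bmatrix}
x_{1,1} & x_{1,2} & \cdots & x_{1,d}\\
x_{2,1} & x_{2,2} & \cdots & x_{2,d}\\
\vdots & \vdots & \ddots & \vdots\\
x_{n,1} & x_{n,2} & \cdots & x_{n,d}
\end{bmatrix}\tag{1}
$$

where $n$ represents the number of sparrows, and $d$ represents the dimension of the variables to be optimized. The fitness values of all sparrows can be modeled as follows:

$$
F_X=\begin{bmatrix}
f\left(\left[x_{1,1}, x_{1,2}, \cdots, x_{1,d}\right]\right)\\
f\left(\left[x_{2,1}, x_{2,2}, \cdots, x_{2,d}\right]\right)\\
\vdots\\
f\left(\left[x_{n,1}, x_{n,2}, \cdots, x_{n,d}\right]\right)
\end{bmatrix}\tag{2}
$$

Each row of $F_X$ represents the fitness value of the corresponding individual.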

In the sparrow population, the fitness values of all sparrows are first calculated and sorted, and the sparrows with the best 20% of fitness values become the discoverers. When the discoverers are within the safe range, they conduct a wide-ranging search. The position update formula of the discoverers is shown in Equation (3):

$$
X_{i,j}^{t+1}=\begin{cases}
X_{i,j}^{t}\cdot\exp\left(\dfrac{-i}{\alpha\cdot \mathrm{iter}_{\max}}\right), & R_2<ST\\[2mm]
X_{i,j}^{t}+Q\cdot L, & R_2\ge ST
\end{cases}\tag{3}
$$

where $X$ represents the positions of the sparrows and $X_{i,j}^{t}$ is the position of the $i$-th sparrow in the $j$-th dimension; $t$ represents the current iteration number; $\mathrm{iter}_{\max}$ is the maximum number of iterations; $\alpha\in(0,1]$ is a random number; $Q\sim N(0,1)$ is a random number obeying the standard normal distribution; $L$ is a $1\times d$ matrix whose elements are all 1; $R_2\in[0,1]$ is the warning value; and $ST\in[0.5,1]$ is the safety threshold. When $R_2<ST$, there is no danger, and the discoverers can search extensively; otherwise, danger is present, and the discoverers need to lead the other sparrows away from their current locations to a safe area.

The scroungers follow the foraging direction provided by the discoverers. The position update formula of the scroungers is shown in Equation (4):

$$
X_{i,j}^{t+1}=\begin{cases}
Q\cdot\exp\left(\dfrac{X_{worst}^{t}-X_{i,j}^{t}}{i^{2}}\right), & i>n/2\\[2mm]
X_{P}^{t+1}+\left|X_{i,j}^{t}-X_{P}^{t+1}\right|\cdot A^{+}\cdot L, & \text{otherwise}
\end{cases}\tag{4}
$$

where $X_P$ is the optimal position occupied by the current discoverers, $X_{worst}$ is the current global worst position, $A$ represents a $1\times d$ matrix whose elements are randomly assigned 1 or −1, and $A^{+}=A^{T}\left(AA^{T}\right)^{-1}$. When $i>n/2$, the $i$-th scrounger has a low fitness value, cannot compete with higher-fitness individuals for food, and needs to fly elsewhere to forage; otherwise, the scrounger forages around the best position $X_P$ found by the discoverers.

The alerters are responsible for detecting danger in the sparrow population. When they become aware of danger, they transmit the danger signal to all individuals, and the population immediately exhibits anti-predation behavior. The position update formula of the alerters is shown in Equation (5):

$$
X_{i,j}^{t+1}=\begin{cases}
X_{best}^{t}+\beta\cdot\left|X_{i,j}^{t}-X_{best}^{t}\right|, & f_i>f_g\\[2mm]
X_{i,j}^{t}+K\cdot\left(\dfrac{\left|X_{i,j}^{t}-X_{worst}^{t}\right|}{\left(f_i-f_w\right)+\varepsilon}\right), & f_i=f_g
\end{cases}\tag{5}
$$

where $X_{best}$ is the current global optimal position; $\beta$ is a step-size control parameter, a random number following the standard normal distribution with mean 0 and variance 1; $K\in[-1,1]$ is a random number; $f_i$ is the fitness value of the current individual; $f_g$ and $f_w$ are the current global best and worst fitness values, respectively; and $\varepsilon$ is a very small constant that prevents the denominator from being zero.
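To make Equations (3) to (5) concrete, the following is a minimal pure-Python sketch of one iteration of the basic SSA for minimization. It is an illustration, not the authors' code. The 20% discoverer share comes from the text above; the alerter share `sd = 0.1`, the threshold `st = 0.8`, drawing $R_2$ once per iteration, a scalar $\beta$ per alerter, choosing alerters as a random subset, and clamping to scalar bounds follow common implementations of the original SSA and are assumptions here. The helper names `clamp` and `ssa_iteration` are hypothetical.

```python
import math
import random


def clamp(x, lb, ub):
    """Basic SSA boundary handling (assumed): clamp every coordinate into [lb, ub]."""
    return [min(max(v, lb), ub) for v in x]


def ssa_iteration(pop, f, iter_max, lb, ub, pd=0.2, sd=0.1, st=0.8):
    """One iteration of the basic SSA, Equations (3)-(5), for minimization.

    pop: list of n positions, each a list of d floats; lb, ub: scalar bounds.
    Returns the new population sorted by fitness and its fitness values.
    """
    n, d = len(pop), len(pop[0])
    old = sorted((p[:] for p in pop), key=f)   # index 0 = best fitness (rank i = 1)
    old_fit = [f(p) for p in old]
    best, worst = old[0], old[-1]
    f_g, f_w = old_fit[0], old_fit[-1]
    n_disc = max(1, int(pd * n))
    new = [p[:] for p in old]

    # Discoverers, Equation (3); the warning value R2 is drawn once per iteration.
    r2 = random.random()
    for i in range(n_disc):
        if r2 < st:
            alpha = 1.0 - random.random()          # alpha in (0, 1]
            new[i] = [x * math.exp(-(i + 1) / (alpha * iter_max)) for x in old[i]]
        else:
            q = random.gauss(0.0, 1.0)             # Q * L adds the same Q to every dimension
            new[i] = [x + q for x in old[i]]
        new[i] = clamp(new[i], lb, ub)

    x_p = min(new[:n_disc], key=f)                 # X_P: best position among updated discoverers

    # Scroungers, Equation (4).
    for i in range(n_disc, n):
        if i + 1 > n / 2:                          # low-fitness scrounger flies elsewhere
            q = random.gauss(0.0, 1.0)
            new[i] = [q * math.exp((w - x) / (i + 1) ** 2) for w, x in zip(worst, old[i])]
        else:                                      # forage around X_P
            a = [random.choice((-1.0, 1.0)) for _ in range(d)]
            # A+ = A^T (A A^T)^-1 = A^T / d, so |X - X_P| A+ L adds one scalar to every dimension.
            s = sum(abs(x - xp) * aj for x, xp, aj in zip(old[i], x_p, a)) / d
            new[i] = [xp + s for xp in x_p]
        new[i] = clamp(new[i], lb, ub)

    # Alerters, Equation (5): a random subset of sd * n sparrows.
    for i in random.sample(range(n), max(1, int(sd * n))):
        if old_fit[i] > f_g:
            beta = random.gauss(0.0, 1.0)
            new[i] = [b + beta * abs(x - b) for x, b in zip(old[i], best)]
        else:                                      # f_i == f_g: already at the best position
            k = random.uniform(-1.0, 1.0)
            denom = (old_fit[i] - f_w) + 1e-50     # epsilon avoids division by zero
            new[i] = [x + k * abs(x - w) / denom for x, w in zip(old[i], worst)]
        new[i] = clamp(new[i], lb, ub)

    new.sort(key=f)
    return new, [f(p) for p in new]
```

Calling `ssa_iteration` repeatedly on a population of random points (for example, with a sphere function and bounds of ±10) runs the basic SSA; this clamping step is the boundary handling that Section 4.5 later modifies.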

4. The Proposed Multi-Strategy Sparrow Search Algorithm with Selective Ensemble

In recent years, many scholars have combined swarm intelligence algorithms with machine learning to solve complex optimization problems, and these combinations have proven effective [45,46,47]. Ensemble learning is a branch of machine learning that integrates multiple strategies to solve problems. Because an algorithm often needs to be improved in more than one search stage, multi-strategy designs have clear advantages in algorithm optimization. However, in a multi-strategy algorithm, knowing when to use each strategy is the key to improving performance. Zhou et al. [40] put forward the concept of selective ensemble, which aims to achieve better performance with a well-chosen subset of strategies rather than with all available strategies. Selective ensemble therefore selects which strategies to apply to an optimization problem, avoiding wasted computational resources. The key research question is how to select the most suitable strategy in each search stage of the algorithm. Roulette wheel selection, the simplest and most commonly used selection method (Section 4.4), favors good candidates while still giving every candidate a chance to be chosen, so it can be used to choose the strategy suited to the current optimization stage.

In this study, MSESSA combines the idea of selective ensemble with multi-strategy. First, three search learning strategies are proposed to improve the algorithm from different aspects: the variable logarithmic spiral saltation learning strategy, suited to global search and to enhancing search ability; the neighborhood-guided learning strategy, suited to local search and to accelerating convergence; and the adaptive Gaussian random walk strategy, suited to balancing global and local search. These three strategies are designed to improve both the global and the local search capability of SSA. All strategies start with the same priority. As the iterations progress, a strategy that suits the current search stage has its selection probability increased, whereas an unsuitable strategy has its selection probability decreased. To this end, a priority roulette selection method is designed so that the algorithm can adjust these selection probabilities across search stages. Finally, MSESSA modifies the boundary processing mechanism to dynamically adjust the locations of sparrows that cross the boundary: discoverers and alerters are randomly relocated to continue global search over a large range, while scroungers are relocated based on the optimal and suboptimal positions of the population to conduct better local search.

4.1. Variable Logarithmic Spiral Saltation Learning

As widely known, if the algorithm has strong search ability in the early stage, it will show superior performance. In the search process of SSA, the discoverers lead the population to conduct extensive search, but the randomness of the search process, search method, and search trajectory makes the scroungers blind to the search direction provided by the discoverers and make it difficult to make a correct judgment, resulting in poor convergence effect. The authors of [

42,

48,

49] use the spiral search method to improve the search ability of the algorithm, achieving good results. However, if the algorithm searches with a spiral curve trajectory, there is a certain possibility that it will find poor solutions or is always limited to a certain range, thus reducing the search accuracy. The learning mechanism is a way of information flow, which is often used in algorithm optimization. By adopting a learning mechanism, better information and positions can be shared with other individuals. Other population individuals learn from the information obtained to achieve the location update. The authors of [

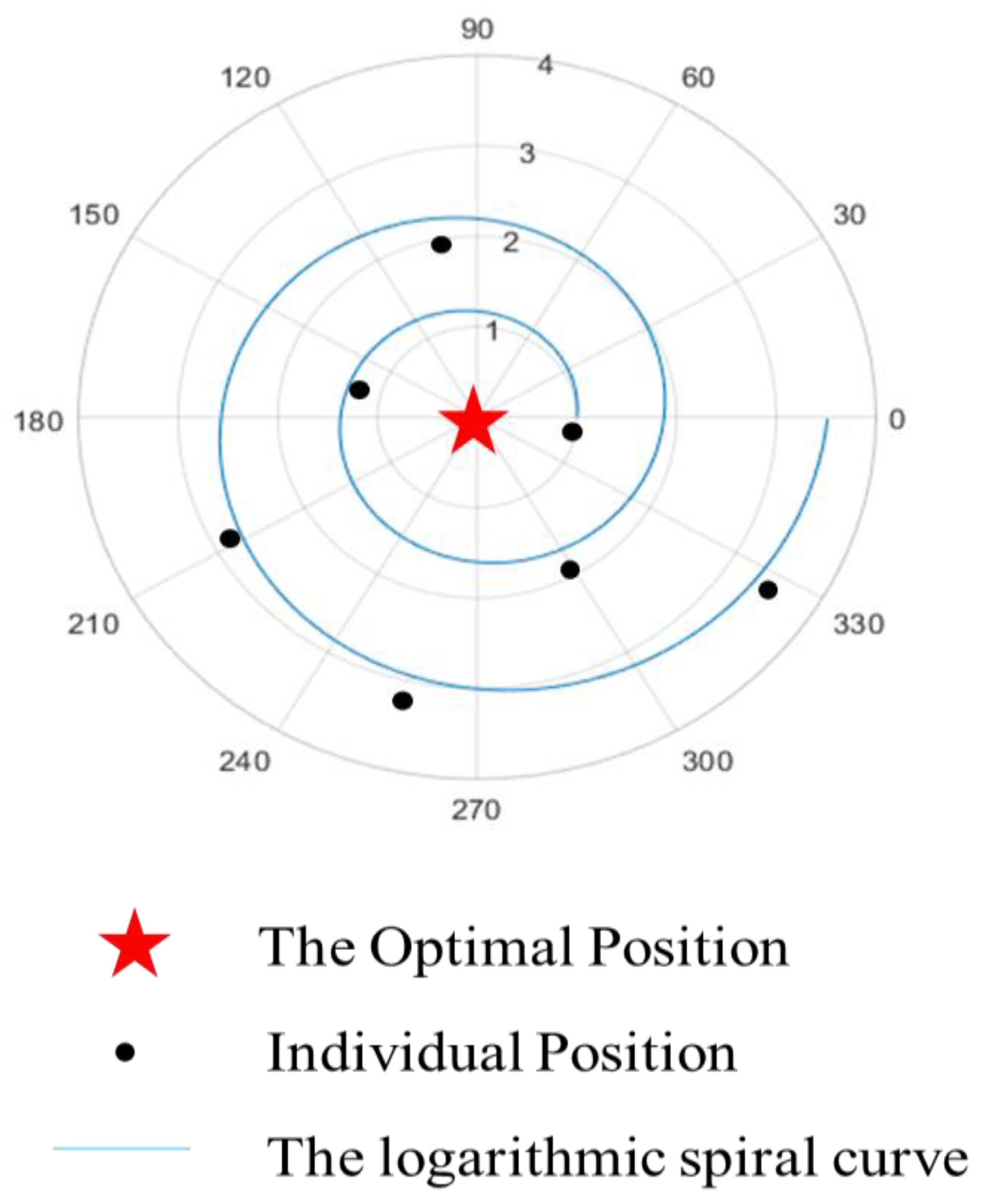

50] proposed a saltation learning strategy based on the optimal positions to improve global search ability, and the algorithm showed better performance. Therefore, this study proposes a variable logarithmic spiral saltation learning strategy to change the search trajectory and search mode of the algorithm and improve the global search ability. The logarithmic spiral curve trajectory shown in

Figure 1 is used to learn the location information of different dimensions of the optimal sparrows, the worst sparrows, and the random sparrows in the population to generate candidate solutions, as well as to further expand the search scope of the algorithm and improve the search ability and accuracy of the algorithm.

Based on the advantages of logarithmic spiral curves and the saltation learning mechanism, we assume that the algorithm performs saltation learning along a variable logarithmic spiral trajectory, as shown in Figure 2. In each generation, each sparrow randomly updates only one dimension, around a random dimension of the current optimal sparrow, and the information used to update this dimension comes from other dimensions. Saltation learning between different dimensions expands the search space and generates candidate solutions that enrich solution diversity. The position update formula under variable logarithmic spiral saltation learning is given by Equation (6), which involves the optimal and worst solutions of the t-th iteration, three mutually distinct randomly selected integers, the dimension, a randomly selected integer, and the population size. Equation (6) also involves a variable factor that determines the shape of the logarithmic spiral and a parameter that decreases from 1 to −1. Two further quantities in Equation (6) are generated by Equations (7) and (8), respectively.

The pseudocode of variable logarithmic spiral saltation learning (VLSSL) is described in Algorithm 1.

| Algorithm 1: Pseudocode of VLSSL |
|---|
| 1: Input: the number of sparrows, the current iteration number t, the maximum number of iterations, the current optimal location, the current worst location |
| 2: Output: the current new location |
| 3: while t ≤ the maximum number of iterations do |
| 4: for each sparrow do |
| 5: Use Equation (6) to update the sparrow's location |
| 6: Get the current new location |
| 7: end for |
| 8: t = t + 1 |
| 9: end while |
| 10: return the current new location |
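Because Equations (6) to (8) are not reproduced in this text, the sketch below is only a hypothetical illustration of the mechanism described in Section 4.1, not Equation (6) itself. It assumes a WOA-style spiral coefficient $e^{bl}\cos(2\pi l)$ with $l$ falling linearly from 1 to −1 and a fixed shape constant `b`; the way the best, worst, and random sparrows are combined, and the names `spiral_factor` and `vlssl_candidate`, are assumptions.

```python
import math
import random


def spiral_factor(t, t_max, b=1.0):
    """Logarithmic-spiral coefficient exp(b * l) * cos(2 * pi * l).

    ASSUMPTION: l falls linearly from 1 (t = 0) to -1 (t = t_max) and b is a
    fixed shape constant; Equations (7) and (8) are not reproduced here.
    """
    l = 1.0 - 2.0 * t / t_max
    return math.exp(b * l) * math.cos(2.0 * math.pi * l)


def vlssl_candidate(x, pop, best, worst, t, t_max, b=1.0):
    """HYPOTHETICAL single-dimension saltation step in the spirit of Section 4.1.

    Only one randomly chosen dimension j of x changes. Its new value is centered
    on a random dimension k1 of the best sparrow and offset, scaled by the spiral
    factor, by values from other dimensions (k2, k3) of a random sparrow and of
    the worst sparrow. Requires at least 3 dimensions. Not Equation (6).
    """
    d = len(x)
    j = random.randrange(d)                   # the one dimension that is updated
    k1, k2, k3 = random.sample(range(d), 3)   # three mutually distinct dimensions
    other = random.choice(pop)                # a random sparrow
    cand = x[:]                               # leave the input position untouched
    cand[j] = best[k1] + spiral_factor(t, t_max, b) * (other[k2] - worst[k3])
    return cand
```

The spiral coefficient starts at $e$, passes through 1 at mid-run, and ends at $1/e$, oscillating in sign in between, so the step size and direction vary over the run; whether the candidate is kept is decided separately by the greedy choice of Section 4.4.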

4.2. Neighborhood-Guided Learning

In general, an algorithm is prone to stagnation at the optimal value and to low search accuracy late in the iterations. The studies in [13,51,52] use a neighborhood-guided strategy to escape stagnation with the help of the neighborhood, which offers certain advantages in improving algorithm performance. Considering the benefits of learning mechanisms for algorithm optimization, this study proposes a neighborhood-guided learning strategy based on the optimal location information of the sparrow population. A neighborhood-guided system is constructed in the sparrow population to establish guiding relationships among individuals, and the optimal location information is learned through these guiding relationships, which helps SSA escape stagnation and improves the accuracy of the final result.

In SSA, the discoverers act as the "bellwether" of the group, leading the sparrow population to forage and providing foraging directions and information. However, the discoverers easily stagnate late in the iterations, so the scroungers that follow them are also easily trapped in local optima, which weakens the search ability of the algorithm in the late iterations. Therefore, considering that sparrows forage in groups, this study assumes that each sparrow has a neighborhood area around it. In Figure 3, the black solid dots represent sparrows in the population, and the blue areas represent their neighborhood areas. Even if a sparrow is trapped in a local optimum, its neighborhood can guide it out of stagnation so that it can continue searching for the optimum.

The neighborhood position generation formula is shown in Equation (9), in which the neighborhood factor determines how each sparrow's neighborhood is updated. The neighborhood factor is given by Equation (10), which involves a uniformly distributed random number, the global optimal solution, and a random position. The random position is generated by Equation (11) from the upper and lower bounds of the search space.

In the local search stage, each sparrow maintains a guiding relationship with its neighborhood to share information, and its location is updated only if the neighborhood location is better than its current location. The position update is given by Equation (12), in which a guiding-relationship term characterizes the relationship between the sparrow and its neighborhood; this term is generated by Equation (13).

In Figure 4, the black solid dots represent individual sparrows, the red five-pointed star represents the global optimal solution of the population, and the blue arrows represent the guiding directions. As the iterations increase, each sparrow keeps moving in the direction guided by its neighborhood, escapes the current stagnation, and approaches the global optimal solution.

The pseudocode of neighborhood-guided learning (NGL) is described in Algorithm 2.

| Algorithm 2: Pseudocode of NGL |
|---|
| 1: Input: the number of sparrows, the current iteration number t, the maximum number of iterations, the optimal location |
| 2: Output: the current new location |
| 3: while t ≤ the maximum number of iterations do |
| 4: for each sparrow do |
| 5: Use Equation (12) to update the sparrow's location |
| 6: Get the current new location |
| 7: end for |
| 8: t = t + 1 |
| 9: end while |
| 10: return the current new location |
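The greedy neighborhood-guided move of Section 4.2 can be illustrated with the hypothetical Python sketch below. Only two parts are taken from the text: a random position drawn uniformly between the lower and upper bounds (the assumed form of Equation (11)), and the rule that a sparrow moves only when its neighborhood point is better. The neighborhood formula itself is a made-up stand-in for Equations (9) and (10), and the names `random_position`, `neighbor_of`, and `ngl_step` are hypothetical.

```python
import random


def random_position(lb, ub):
    """Uniform random point in the box [lb, ub] (assumed form of Equation (11))."""
    return [lo + random.random() * (hi - lo) for lo, hi in zip(lb, ub)]


def neighbor_of(x, best, lb, ub):
    """HYPOTHETICAL neighborhood point standing in for Equations (9)-(10).

    x is shifted by a uniform random fraction r of the difference between the
    global best and a random position, then clamped into the search space.
    """
    r = random.random()
    z = random_position(lb, ub)
    y = [xi + r * (bi - zi) for xi, bi, zi in zip(x, best, z)]
    return [min(max(v, lo), hi) for v, lo, hi in zip(y, lb, ub)]


def ngl_step(x, fx, f, best, lb, ub):
    """Greedy neighborhood-guided move (minimization): keep the neighbor only if it is better."""
    y = neighbor_of(x, best, lb, ub)
    fy = f(y)
    return (y, fy) if fy < fx else (x, fx)
```

Because a neighbor is accepted only when it improves the fitness, repeated calls to `ngl_step` never make a sparrow's fitness worse, which mirrors the update condition stated for Equation (12).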

4.3. Adaptive Gaussian Random Walk

Ideally, SSA conducts an extensive global search early in the iterations and a local search late in the iterations. Researchers have therefore adopted numerous strategies to improve its search capability, with good results [27,28,29,30]. However, most studies focus only on improving global search or local search in the corresponding stage, rather than on coordinating strategies so that the algorithm transitions smoothly from exploration to exploitation. The Gaussian random walk is a classic random walk strategy with strong exploitation ability [50,53]. Therefore, this study proposes an adaptive Gaussian random walk strategy based on an adaptive step size control factor to help SSA coordinate exploration and exploitation and thus obtain better search ability.

The position update formula under the adaptive Gaussian random walk strategy is shown in Equation (14). It draws from a Gaussian distribution with a given expectation and standard deviation and also involves the optimal solution of the t-th iteration and random numbers. The standard deviation is generated by Equation (15), which involves the adaptive step size control factor and the worst solution of the t-th iteration. The adaptive step size control factor adjusts the step size adaptively: in the early stage of the algorithm, an extensive global search is conducted with high probability to cover as much of the solution space as possible, while in the late stage, a local search narrows the search scope and improves the convergence speed. Its expression is shown in Equation (16).

The pseudocode of adaptive Gaussian random walk (AGRW) is described in Algorithm 3.

| Algorithm 3: Pseudocode of AGRW |
|---|
| 1: Input: the number of sparrows, the current iteration number t, the maximum number of iterations, the current optimal location, the current worst location |
| 2: Output: the current new location |
| 3: while t ≤ the maximum number of iterations do |
| 4: for each sparrow do |
| 5: Use Equation (14) to update the sparrow's location |
| 6: Get the current new location |
| 7: end for |
| 8: t = t + 1 |
| 9: end while |
| 10: return the current new location |
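The adaptive Gaussian random walk can be illustrated with the following hypothetical Python sketch. It is not Equations (14) to (16), whose exact forms are not given here. Each coordinate is drawn from a normal distribution centered on the current best solution, with a standard deviation equal to a step factor times the distance to the worst solution. The linear decay of the step factor from 1 to 0, the form of the standard deviation, and the names `step_factor` and `agrw_candidate` are all assumptions.

```python
import random


def step_factor(t, t_max):
    """ASSUMED adaptive step-size control factor: decays linearly from 1 (t = 0)
    to 0 (t = t_max). Stands in for Equation (16), whose form is not reproduced."""
    return 1.0 - t / t_max


def agrw_candidate(x, best, worst, t, t_max):
    """Hypothetical adaptive Gaussian random walk around the current best.

    Each coordinate is drawn from N(best_j, sigma_j) with
    sigma_j = step_factor * |x_j - worst_j| (an ASSUMED stand-in for Eq. (15)),
    so the walk is wide early in the run and collapses onto best at t = t_max.
    """
    w = step_factor(t, t_max)
    return [random.gauss(b, w * abs(xj - wj)) for xj, b, wj in zip(x, best, worst)]
```

The design choice this illustrates is the one stated above: a large step early in the run favors exploration, and a shrinking step late in the run concentrates the search near the best solution.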

4.4. Priority Roulette Selection

Roulette wheel selection is the simplest and most commonly used selection method. In this method, the selection probability of each individual is proportional to its fitness value, so the greater the fitness, the greater the selection probability. In practice, an individual is not selected by comparing a random number with its own probability directly but with the cumulative probabilities: a uniform random number is drawn, and the first individual whose cumulative probability exceeds it is selected. Roulette wheel selection lets good individuals be chosen from the current population with greater probability, so they are more likely to survive and pass their information to the next generation, gradually approaching the optimal solution. Based on these advantages of roulette wheel selection [53,54,55,56], the strategy suited to the current optimization stage can be chosen by roulette selection.
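The cumulative-probability mechanism described above can be sketched in a few lines of Python. The function name `roulette_select` and its optional `rng` parameter are illustrative. The standard library's `random.choices` provides the same behavior through its `weights` argument.

```python
import bisect
import itertools
import random


def roulette_select(weights, rng=random):
    """Return index i with probability weights[i] / sum(weights).

    A uniform number u in [0, total) is compared with the cumulative sums, and
    the first index whose cumulative sum exceeds u is selected.
    """
    cumulative = list(itertools.accumulate(weights))
    u = rng.random() * cumulative[-1]
    # Guard against u rounding up to the total in rare floating-point cases.
    return min(bisect.bisect_right(cumulative, u), len(weights) - 1)
```

For weights `[1, 2, 1]`, the cumulative sums are `[1, 3, 4]`, so a draw landing in [1, 3) selects index 1, which happens half of the time.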

Therefore, in this study, based on the ideas of selective ensemble and multi-strategy, we design a priority roulette selection method that selects search strategies dynamically. Initially, the priority of every strategy is set to 1, and the priorities change as the search phase changes. A suitable strategy is then selected by the roulette wheel according to these priorities: the higher a strategy's priority, the greater its probability of being selected, and vice versa. Following the roulette wheel principle, the selection probability of each strategy is determined by its priority relative to the priorities of the other strategies.

In priority roulette selection, a greedy choice determines whether the solution is updated: the candidate produced by the selected strategy replaces the current solution only if it is better. The greedy choice is described by Equation (19).

The pseudocode of dynamically adjusted strategy priority (DASP) is described in Algorithm 4.

| Algorithm 4: Pseudocode of DASP |
|---|
| 1: Input: the number of sparrows N, the current iteration number t, the maximum number of iterations, the priority of each strategy, the optimal fitness value, and the current fitness value |
| 2: Output: the current new location and the priority of each strategy |
| 3: while t ≤ the maximum number of iterations do |
| 4: for i = 1 to N do |
| 5: Use Equation (19) to determine whether the sparrow’s location needs to be updated |
| 6: Get the current new location |
| 7: Update the priority of the selected strategy |
| 8: end for |
| 9: t = t + 1 |
| 10: end while |
| 11: return the current new location and the priority of each strategy |
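The interplay of priority roulette selection, the greedy rule, and priority updating in Algorithm 4 can be sketched as below. This is a hedged sketch, not the authors' implementation. It assumes minimization and assumes that the selection probability of a strategy is its priority divided by the sum of all priorities, as roulette selection implies. Because the exact priority update rule is not reproduced here, it also assumes that a successful strategy's priority grows by a fixed, hypothetical `reward` of 1. The operators in the demo are toy stand-ins for Equations (6), (12), and (14), not the real VLSSL, NGL, and AGRW updates.

```python
import random
from typing import Callable, Dict, List

Vector = List[float]


def pick_strategy(priorities: Dict[str, float], rng: random.Random) -> str:
    """Roulette wheel over strategies: P(name) = priority / sum of priorities."""
    if not priorities:
        raise ValueError("at least one strategy is required")
    total = sum(priorities.values())
    r = rng.random() * total
    cumulative = 0.0
    name = ""
    for name, priority in priorities.items():
        cumulative += priority
        if r < cumulative:
            return name
    return name  # fallback for floating-point round-off: last strategy


def dasp_pass(
    population: List[Vector],
    fitness: List[float],
    objective: Callable[[Vector], float],
    operators: Dict[str, Callable[[Vector], Vector]],
    priorities: Dict[str, float],
    rng: random.Random,
    reward: float = 1.0,  # hypothetical priority increment
) -> None:
    """One pass over all sparrows; updates population, fitness, priorities in place."""
    for i, x in enumerate(population):
        name = pick_strategy(priorities, rng)
        candidate = operators[name](x)
        new_fitness = objective(candidate)
        if new_fitness < fitness[i]:  # greedy rule: keep only improvements
            population[i] = candidate
            fitness[i] = new_fitness
            priorities[name] += reward  # successful strategy becomes more likely


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)


if __name__ == "__main__":
    rng = random.Random(1)
    ops = {
        "VLSSL": lambda x: [0.5 * v for v in x],                # toy: always improves
        "NGL": lambda x: [v + rng.gauss(0.0, 0.1) for v in x],  # toy: sometimes improves
        "AGRW": lambda x: [2.0 * v for v in x],                 # toy: never improves
    }
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(5)]
    fit = [sphere(x) for x in pop]
    prio = {name: 1.0 for name in ops}  # every strategy starts with priority 1
    for _ in range(20):
        dasp_pass(pop, fit, sphere, ops, prio, rng)
    print(prio)
```

In the demo, the toy "VLSSL" operator always improves the sphere function, so its share of the wheel grows, while the toy "AGRW" operator never does and keeps its initial priority; this mirrors the growing and shrinking pie-chart sectors in Figure 6.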

As shown in Figure 5, the blue area with black solid dots represents the original population, and the blue arrows represent strategy selection for the population. The yellow, purple, and green areas represent VLSSL, NGL, and AGRW, respectively, and the yellow arrows represent the results obtained under the corresponding strategy. The red arrow represents the choice of strategy in the next iteration. If VLSSL changes the original population from black solid dots to yellow solid dots, the previous solution has been successfully updated. This means that VLSSL suits the search in the current period, so the priority of VLSSL and its probability of being selected increase, and the yellow part of the pie chart in Figure 6 grows. Similarly, if NGL changes the original population from black solid dots to purple solid dots, the priority and selection probability of NGL increase, and the purple part of the pie chart grows. If AGRW changes the original population from black solid dots to green solid dots, the priority and selection probability of AGRW increase, and the green part of the pie chart grows.

4.5. Modified Boundary Processing Mechanism

In SSA, a sparrow that crosses the boundary is placed on the boundary. This easily causes sparrows to aggregate at the boundary, reduces population diversity, and hinders the normal search. Therefore, the boundary processing mechanism of SSA should be modified. QMESSA [27] proposed a new boundary control method based on the location information of the optimal and suboptimal individuals, which achieved significant advantages. Therefore, considering the characteristics of the different roles in the sparrow population during the search, this study proposes a modified boundary processing mechanism that dynamically adjusts the locations of transgressive sparrows. A random relocation method is used for discoverers and alerters to further conduct global search over a large range, and a relocation method based on the optimal and suboptimal individuals of the population is used for scroungers to further improve local search.

Since discoverers and alerters need to conduct global search over a large range, randomly relocating transgressive sparrows increases the chance of obtaining the optimal solution. Therefore, the random relocation method is adopted in the discoverer and alerter stages, and the adjustment method is shown in Equation (20):

In Equation (20), the relocated position is drawn at random between the lower bound and the upper bound of the search space.

Moreover, scroungers cross the boundary easily, and they mainly move toward the vicinity of the global optimum. Therefore, transgressive sparrows in this stage are dynamically adjusted based on the optimal and suboptimal sparrows of the population, so that they are replaced with better positions. In the next iteration, the search then starts from locations that are better than the original ones and can obtain better information, which improves the exploitation capability of SSA. The adjustment method is shown in Equation (21):

In Equation (21), the global optimal solution and the global suboptimal solution in the t-th iteration are used together with a random number.
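The two relocation rules can be sketched as follows. The first function follows the random relocation described for Equation (20) and redraws each out-of-bound coordinate uniformly between the lower and upper bounds. Applying the rule per coordinate is an assumption. The exact form of Equation (21) is not reproduced here, so the second function is a hypothetical stand-in. It moves an out-of-bound coordinate to a random point between the global optimal and suboptimal solutions, which stays inside the search space whenever those two solutions do. Both function names are illustrative.

```python
import random
from typing import List, Sequence


def relocate_random(
    x: Sequence[float], lb: Sequence[float], ub: Sequence[float], rng: random.Random
) -> List[float]:
    """Equation (20)-style repair for discoverers and alerters."""
    return [
        lo + rng.random() * (hi - lo) if not (lo <= v <= hi) else v
        for v, lo, hi in zip(x, lb, ub)
    ]


def relocate_near_leaders(
    x: Sequence[float],
    lb: Sequence[float],
    ub: Sequence[float],
    best: Sequence[float],
    second: Sequence[float],
    rng: random.Random,
) -> List[float]:
    """Hypothetical stand-in for Equation (21), used for scroungers."""
    repaired = []
    for v, lo, hi, b, s in zip(x, lb, ub, best, second):
        if lo <= v <= hi:
            repaired.append(v)  # in-bound coordinates are left untouched
        else:
            r = rng.random()
            repaired.append(r * b + (1.0 - r) * s)  # random point between the leaders
    return repaired
```

Following Algorithm 5, `relocate_random` would be applied to discoverers and alerters and `relocate_near_leaders` to scroungers.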

The pseudocode of the modified boundary processing mechanism (MBPM) is described in Algorithm 5.

| Algorithm 5: Pseudocode of MBPM |
|---|
| 1: Input: the number of sparrows N, the current iteration number t, the maximum number of iterations, the number of discoverers, and the number of alerters |
| 2: Output: the current new location |
| 3: while t ≤ the maximum number of iterations do |
| 4: for each discoverer do |
| 5: Use Equation (20) to relocate transgressive sparrows |
| 6: Get the current new location |
| 7: end for |
| 8: for each scrounger do |
| 9: Use Equation (21) to relocate transgressive sparrows |
| 10: Get the current new location |
| 11: end for |
| 12: for each alerter do |
| 13: Use Equation (20) to relocate transgressive sparrows |
| 14: Get the current new location |
| 15: end for |
| 16: t = t + 1 |
| 17: end while |
| 18: return the current new location |

4.6. The Steps and Pseudocode of MSESSA

The steps of MSESSA are as follows, and the pseudocode of MSESSA is given in Algorithm 6:

Step 1: Set the basic parameters, such as the population size, the maximum number of iterations, the numbers of discoverers, scroungers, and alerters, and the dimension of the objective function;

Step 2: Initialize the population, calculate the fitness values of all sparrows, and sort them to find the optimal and worst sparrows;

Step 3: Use Equation (3) to update the locations of the discoverers;

Step 4: Use Equation (20) to dynamically correct the positions of the transgressive sparrows;

Step 5: Use Equation (4) to update the positions of the scroungers;

Step 6: Use Equation (21) to dynamically correct the positions of the transgressive sparrows;

Step 7: Use Equation (5) to update the positions of the alerters;

Step 8: Use Equation (20) to dynamically correct the positions of the transgressive sparrows;

Step 9: Use Equations (17) and (18) and the priority of each strategy (initially 1) to calculate the selection probability of each strategy;

Step 10: Select a strategy by priority roulette selection. If VLSSL is selected, Equation (6) is used to update the sparrow’s position; if NGL is selected, Equation (12) is used; if AGRW is selected, Equation (14) is used;

Step 11: Obtain the current position and use Equation (19) to determine whether to update it. If the current position is better than the previous one, the position is updated, and the priority of the selected strategy is updated;

Step 12: Repeat Steps 3–11 until the maximum number of iterations is reached, and then output the fitness value and position of the optimal sparrow.

| Algorithm 6: Pseudocode of MSESSA |
|---|
| 1: Input: the number of sparrows N, the current iteration number t, the maximum number of iterations, the number of discoverers, the number of alerters, and the priority of each strategy |
| 2: Output: the optimal fitness value and the optimal location |
| 3: Initialize a population of N sparrows |
| 4: while t ≤ the maximum number of iterations do |
| 5: Rank the fitness values and find the current best sparrow and the current worst sparrow |
| 6: for each discoverer do |
| 7: Use Equation (3) to update the discoverers |
| 8: Use Equation (20) to relocate transgressive sparrows |
| 9: Rank the fitness values and update the current best sparrow |
| 10: end for |
| 11: for each scrounger do |
| 12: Use Equation (4) to update the scroungers |
| 13: Use Equation (21) to relocate transgressive sparrows |
| 14: Rank the fitness values and update the current best sparrow |
| 15: end for |
| 16: for each alerter do |
| 17: Use Equation (5) to update the alerters |
| 18: Use Equation (20) to relocate transgressive sparrows |
| 19: Rank the fitness values and update the current best sparrow |
| 20: end for |
| 21: for i = 1 to N do |
| 22: Calculate the probability of each strategy being selected from its priority using Equations (17) and (18) |
| 23: Select a strategy by priority roulette selection |
| 24: if VLSSL is selected then |
| 25: Use Equation (6) to update the sparrow’s location |
| 26: end if |
| 27: if NGL is selected then |
| 28: Use Equation (12) to update the sparrow’s location |
| 29: end if |
| 30: if AGRW is selected then |
| 31: Use Equation (14) to update the sparrow’s location |
| 32: end if |
| 33: Get the current new location |
| 34: Use Equation (19) to decide whether to accept the new location; if it is better than before, update it and update the priority of the selected strategy |
| 35: end for |
| 36: t = t + 1 |
| 37: end while |
| 38: return the optimal fitness value and the optimal location |

4.7. The Time Complexity Analysis of MSESSA

Time complexity measures the efficiency of an algorithm. Suppose that the population size is N, the dimension is D, and the maximum number of iterations is T, and that the discoverers, scroungers, and alerters each make up a fixed proportion of the population.

Macroscopically, the time complexity of SSA is O(N·D·T). Although MSESSA adds the variable logarithmic spiral saltation learning strategy, the neighborhood-guided learning strategy, the adaptive Gaussian random walk strategy, and the modified boundary processing mechanism, it does not change the structure of the algorithm. All of these operations are sparrow location updates: they add loop passes but do not raise the order of the time complexity. Therefore, the time complexity of MSESSA is O(N·D·T), the same as that of SSA.

Microscopically, MSESSA does add some computational cost. In the search stage, MSESSA introduces the variable logarithmic spiral saltation learning strategy, the neighborhood-guided learning strategy, and the adaptive Gaussian random walk strategy, and selects among them according to the search characteristics of the different stages of the algorithm. If the variable logarithmic spiral saltation learning strategy is applied in the discoverer, scrounger, or alerter stage, the cost of that stage grows by an amount proportional to the number of sparrows in that stage multiplied by D; the same holds for the neighborhood-guided learning strategy and the adaptive Gaussian random walk strategy. In boundary processing, MSESSA introduces the modified boundary processing mechanism: transgressive sparrows are randomly relocated in the discoverer and alerter stages and are dynamically adjusted based on the optimal and suboptimal sparrows of the population in the scrounger stage, which again adds cost proportional to the number of sparrows in each stage multiplied by D. None of these strategies increases the order of magnitude of the cost, so the time complexity of MSESSA is O(N·D·T), the same as that of SSA.
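The argument above can be written as a rough worked bound. The notation is ours, not the paper's: let N be the population size, D the dimension, T the maximum number of iterations, and p_d, p_s, p_a the proportions of discoverers, scroungers, and alerters. Assume p_d + p_s = 1 as in the standard SSA and p_a ≤ 1, and measure cost in per-coordinate operations up to a constant factor. Assume further that each position update, boundary check, and fitness evaluation costs O(D) per sparrow, and neglect the per-iteration sorting term. Under these assumptions, a sketch of the bound is:

$$
\begin{aligned}
C_{\text{iter}} &\le \underbrace{(p_d + p_s + p_a)\,ND}_{\text{Eqs. (3)--(5)}}
 + \underbrace{(p_d + p_s + p_a)\,ND}_{\text{Eqs. (20), (21)}}
 + \underbrace{ND}_{\text{Eqs. (6), (12), (14), (19)}} \\
&= \bigl(2(1 + p_a) + 1\bigr)\,ND \;\le\; 5\,ND, \\
C_{\text{total}} &= T \cdot C_{\text{iter}} = O(T\,N\,D).
\end{aligned}
$$

The extra strategies raise the constant factor but not the order, which is consistent with MSESSA and SSA sharing the same asymptotic complexity.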

5. Experiments and Results

In order to test the performance of MSESSA, MSESSA is compared with 13 algorithms on the CEC 2017 test suites (dimension = 30, dimension = 50, and dimension = 100): SSA [20], GWO [16], WOA [17], PSO [15], ABC [18], FA [19], QMESSA [27], LSSA [28], AGWO [7], jWOA [8], VPPSO [9], NABC [13], and MSEFA [41]. These algorithms contain a variety of effective search strategies and frameworks, and most of them are strong algorithms proposed in recent years. Comparing MSESSA with them on the same problems tests whether the proposed algorithm is effective and can contribute to subsequent research. The parameters of the other 13 algorithms are set as in their original literature. The proposed algorithm in this study sets the number of discoverers

, the number of scroungers

, and the number of alerters

. CEC 2017 is one of the most widely used benchmark suites for evaluating algorithm performance. In the CEC 2017 test suites, C01–C03 are unimodal functions, C04–C10 are simple multimodal functions, C11–C20 are mixed functions, and C21–C30 are combined functions. Note that C02 is not tested in this study. CEC 2017 includes classical test functions such as sphere functions, and its test dimensions include 10, 30, 50, and 100. The CEC 2017 unconstrained test problems become considerably harder to solve as the dimension increases. For a fair comparison, the population size is set to 100, the maximum number of iterations is set to 500, and each algorithm runs independently 30 times; the mean values and standard deviations are calculated, and the best value is shown in bold. The test results on the CEC 2017 test suites (dimension = 30, dimension = 50, and dimension = 100) are shown in Table A1, Table A2 and Table A3. The convergence curves and the stacked histograms of ranking for the corresponding test functions are shown in Figure 7, Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12.

5.1. Comparison of Results on CEC 2017 Functions (Dim = 30)

According to the convergence curves of each function in Figure 7, MSESSA achieves better results on C01, C04, C06, C10, C11, C12, C13, C17, C19, C20, C25, C27, C28, C29, and C30 of the CEC 2017 test suites (dimension = 30), covering unimodal, simple multimodal, mixed, and combined functions. Although the results on unimodal function C03, simple multimodal function C07, mixed function C16, and combined functions C22, C26, C28, and C29 are slightly worse than those of MSEFA, NABC, and ABC, the convergence curves show that MSESSA has strong capability in both early global search and late local search, and that its performance is stable over the whole search process.

Figure 8 shows the stacked histogram of ranking for all algorithms on the CEC 2017 test suites (dimension = 30). MSESSA ranks in the top five on all functions: first on 12 functions, second on 4, third on 4, fourth on 6, and fifth on 3, which indicates strong performance. According to Table A1 in Appendix A, MSESSA has the largest number of bold (best) values. It is worth mentioning that on unimodal function C01, only SSA and NABC reach the same order of magnitude as MSESSA, and on simple multimodal function C06 and combined function C30, the result of MSESSA is far ahead of those of the other algorithms. In summary, MSESSA has the best overall performance on the CEC 2017 test suites (dimension = 30).
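Counting ranks for a stacked histogram such as Figure 8 can be sketched as below. The text does not state exactly which statistic the ranks are computed from. This sketch assumes ranking by mean value (lower is better), with tied algorithms sharing the better rank. The function name and the numbers in the demo are made-up placeholders, not results from Table A1.

```python
from collections import Counter
from typing import Dict


def rank_histogram(means: Dict[str, Dict[str, float]]) -> Dict[str, Counter]:
    """Map means[function][algorithm] to a Counter of ranks per algorithm.

    Rank = 1 + number of algorithms with a strictly smaller mean on that
    function, so tied algorithms share the better rank.
    """
    histogram: Dict[str, Counter] = {}
    for by_algorithm in means.values():
        for algorithm, value in by_algorithm.items():
            rank = 1 + sum(1 for other in by_algorithm.values() if other < value)
            histogram.setdefault(algorithm, Counter())[rank] += 1
    return histogram


if __name__ == "__main__":
    toy = {  # placeholder values for illustration only
        "C01": {"A": 1.0, "B": 2.0, "C": 3.0},
        "C03": {"A": 5.0, "B": 4.0, "C": 4.0},
    }
    for algorithm, counts in sorted(rank_histogram(toy).items()):
        print(algorithm, dict(sorted(counts.items())))
```

Each algorithm's Counter gives the height of every segment in its stacked bar (for example, "first on 12 functions" corresponds to a count of 12 for rank 1).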

Figure 7. The convergence curves of MSESSA and the other 13 algorithms on CEC 2017 (Dim = 30).


Figure 8. The stacked histogram of ranking for MSESSA and the other 13 algorithms on CEC 2017 (Dim = 30).


5.2. Comparison of Results on CEC 2017 Functions (Dim = 50)

According to the convergence curves of each function in Figure 9, MSESSA achieves better results on C01, C03, C04, C06, C10, C11, C12, C13, C15, C19, C25, C27, C28, C29, and C30 of the CEC 2017 test suites (dimension = 50), covering unimodal, simple multimodal, mixed, and combined functions. Although the results on simple multimodal function C09, mixed functions C16, C17, C18, and C20, and combined functions C23, C26, and C29 are slightly worse than those of MSEFA and NABC, the convergence curves show that MSESSA has strong capability in both early global search and late local search, and that its performance is stable over the whole search process. Figure 10 shows the stacked histogram of ranking for all algorithms on the CEC 2017 test suites (dimension = 50). MSESSA ranks in the top eight on all functions: first on 12 functions, second on 2, third on 6, fourth on 3, fifth on 4, seventh on 1, and eighth on 1. Its advantage is therefore smaller than on the CEC 2017 test suites (dimension = 30), but compared with the other algorithms in the same dimension, MSESSA still performs strongly. As can be seen from Table A2 in Appendix A, MSEFA has the most bold values in total (18), followed by MSESSA (16). However, MSESSA has the most bold mean values: 12 of its 16 bold values are means, whereas only 5 of MSEFA's 18 are. The mean mainly reflects the central tendency of the results, and the standard deviation mainly reflects their dispersion; that is, MSESSA has better optimization ability, whereas MSEFA is more stable. It is worth mentioning that on unimodal function C01 and combined function C30, the result of MSESSA is far ahead of those of the other algorithms. In summary, MSESSA has the best overall performance on the CEC 2017 test suites (dimension = 50).

Figure 9. The convergence curves of MSESSA and the other 13 algorithms on CEC 2017 (Dim = 50).


Figure 10. The stacked histogram of ranking for MSESSA and the other 13 algorithms on CEC 2017 (Dim = 50).


5.3. Comparison of Results on CEC 2017 Functions (Dim = 100)

According to the convergence curves of each function in Figure 11, MSESSA achieves better results on C03, C04, C06, C10, C11, C12, C13, C15, C17, C19, C21, C22, C25, C27, C29, and C30 of the CEC 2017 test suites (dimension = 100), covering unimodal, simple multimodal, mixed, and combined functions. Although the results on simple multimodal function C09, mixed function C16, and combined function C26 are slightly worse than those of MSEFA, NABC, and GWO, MSESSA performs better than these three algorithms overall, and the convergence curves show that it has strong capability in both early global search and late local search and that its performance is stable over the whole search process. Figure 12 shows the stacked histogram of ranking for all algorithms on the CEC 2017 test suites (dimension = 100). MSESSA ranks in the top four on all functions: first on 12 functions, second on 9, third on 5, and fourth on 3, which indicates strong performance. As can be seen from Table A3 in Appendix A, MSEFA has the most bold values in total (18), followed by MSESSA (15). However, MSESSA has the most bold mean values: 12 of its 15 bold values are means, whereas only 5 of MSEFA's 18 are. The mean mainly reflects the central tendency of the results, and the standard deviation mainly reflects their dispersion; that is, MSESSA has better optimization ability, whereas MSEFA is more stable. It is worth mentioning that on mixed functions C13 and C15 and combined function C30, the result of MSESSA is far ahead of those of the other algorithms. In summary, MSESSA has the best overall performance on the CEC 2017 test suites (dimension = 100).

Figure 11. The convergence curves of MSESSA and the other 13 algorithms on CEC 2017 (Dim = 100).


Figure 12. The stacked histogram of ranking for MSESSA and the other 13 algorithms on CEC 2017 (Dim = 100).


In conclusion, in the CEC 2017 experiments on problems of different dimensions, MSESSA shows good optimization performance and is competitive with the other advanced algorithms. However, its advantage decreases slightly as the dimension increases.

5.4. Wilcoxon Rank-Sum Test

However, evaluating the performance of MSESSA only through the mean and standard deviation on the CEC 2017 tests is one-sided and not persuasive enough. To evaluate the algorithm more comprehensively, this study uses the Wilcoxon rank-sum test to check whether MSESSA differs significantly from the other algorithms. During the experiment, the population size is set to 100, the maximum number of iterations is set to 500, and each algorithm runs independently 30 times.

Table A4, Table A5 and Table A6 show the rank-sum test results on the CEC 2017 test suites (dimension = 30, dimension = 50, and dimension = 100) at the significance level of p = 5%. When p < 5%, the difference between two algorithms is considered significant; when p > 5%, it is not considered significant, and the data are underlined. NaN indicates that the results of the two algorithms are too similar for a significance judgment to be made.
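A self-contained sketch of the two-sided Wilcoxon rank-sum test is given below. It uses the large-sample normal approximation with average ranks for ties and applies no tie or continuity correction, which is the same approximation that SciPy's `scipy.stats.ranksums` uses. The text does not say which implementation produced Tables A4 to A6 (MATLAB's `ranksum`, for example, may apply corrections), so p-values from this sketch may differ slightly near the 5% threshold. The function names and demo data are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2.0 + 1.0  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation). Returns (z, p)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2.0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return z, p


if __name__ == "__main__":
    # Placeholder final errors from 30 runs of two algorithms (illustrative only).
    runs_a = [1.0 + 0.01 * k for k in range(30)]
    runs_b = [2.0 + 0.01 * k for k in range(30)]
    z, p = rank_sum_test(runs_a, runs_b)
    print(f"z = {z:.3f}, p = {p:.2e}, significant at 5%: {p < 0.05}")
```

With 30 independent runs per algorithm, as in this study, the normal approximation is generally considered adequate.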

Table A4, Table A5 and Table A6 in Appendix A show that NaN does not appear in any of the three tables and that few entries are underlined. In most cases, therefore, the search results of MSESSA differ significantly from those of its competitors on the CEC 2017 test suites (dimension = 30, dimension = 50, and dimension = 100). Table A1, Table A2 and Table A3 in Appendix A show that MSESSA has the most prominent overall performance among the compared algorithms. Taken together, these results verify that MSESSA is competitive with the other algorithms and generally performs better.

In conclusion, the Wilcoxon rank-sum test shows that, on problems of different dimensions, MSESSA differs significantly from the other advanced algorithms in most cases and is competitive with them. However, its advantage decreases slightly as the dimension increases.

5.5. Ablation Experiment Test

In addition, an ablation experiment verifies the role of each proposed strategy in the optimization process and the validity of the idea of selective ensemble. The experiment compares MSESSA, SSA, and five variants:

- SSA1 uses only the variable logarithmic spiral saltation learning strategy in the search process.
- SSA2 uses only the neighborhood-guided learning strategy.
- SSA3 uses only the adaptive Gaussian random walk strategy.
- SSA4 uses only the modified boundary processing mechanism.
- MESSA integrates all four strategies in the search process.

In the experiment, the population size is set to 100 and the maximum number of iterations to 500. Each algorithm runs independently 30 times, and the mean value and standard deviation are calculated.

Table 1, Table 2 and Table 3 list the test results on the CEC 2017 test suites (dimension = 30, dimension = 50, and dimension = 100).

Table 1. Ablation experiment tests on CEC 2017 functions (Dim = 30).


| Function | Index | SSA | SSA1 | SSA2 | SSA3 | SSA4 | MESSA | MSESSA |
|---|---|---|---|---|---|---|---|---|
| C01 | Mean | 8.549 × 10³ | 9.951 × 10³ | 7.051 × 10³ | 7.237 × 10³ | 9.967 × 10³ | 6.519 × 10³ | 1.936 × 10³ |
|  | Std | 5.735 × 10³ | 6.598 × 10³ | 4.479 × 10³ | 6.291 × 10³ | 8.048 × 10³ | 5.035 × 10³ | 1.896 × 10³ |
| C03 | Mean | 6.769 × 10³ | 4.134 × 10⁴ | 4.719 × 10⁴ | 4.213 × 10⁴ | 4.885 × 10⁴ | 4.816 × 10⁴ | 2.157 × 10⁴ |
|  | Std | 7.913 × 10³ | 8.848 × 10³ | 7.832 × 10³ | 8.374 × 10³ | 7.468 × 10³ | 7.826 × 10³ | 4.454 × 10³ |
| C04 | Mean | 1.148 × 10² | 1.407 × 10² | 1.092 × 10² | 1.185 × 10² | 8.913 × 10¹ | 9.743 × 10¹ | 8.254 × 10¹ |
|  | Std | 2.961 × 10¹ | 3.219 × 10¹ | 2.651 × 10¹ | 1.883 × 10¹ | 3.194 × 10¹ | 2.819 × 10¹ | 3.139 × 10¹ |
| C05 | Mean | 2.507 × 10² | 2.062 × 10² | 2.292 × 10² | 2.159 × 10² | 2.509 × 10² | 2.298 × 10² | 1.137 × 10² |
|  | Std | 4.983 × 10¹ | 4.880 × 10¹ | 5.641 × 10¹ | 5.672 × 10¹ | 4.779 × 10¹ | 5.426 × 10¹ | 3.044 × 10¹ |
| C06 | Mean | 4.847 × 10¹ | 2.151 × 10¹ | 4.725 × 10¹ | 2.071 × 10¹ | 5.144 × 10¹ | 4.653 × 10¹ | 1.166 × 10⁻² |
|  | Std | 9.471 × 10⁰ | 1.270 × 10¹ | 9.658 × 10⁰ | 1.059 × 10¹ | 8.855 × 10⁰ | 1.030 × 10¹ | 2.600 × 10⁻³ |
| C07 | Mean | 5.360 × 10² | 4.327 × 10² | 5.474 × 10² | 4.681 × 10² | 5.696 × 10² | 5.159 × 10² | 1.904 × 10² |
|  | Std | 6.095 × 10¹ | 1.309 × 10² | 9.250 × 10¹ | 1.405 × 10² | 7.161 × 10¹ | 9.828 × 10¹ | 2.539 × 10¹ |
| C08 | Mean | 1.625 × 10² | 1.483 × 10² | 1.793 × 10² | 1.498 × 10² | 1.724 × 10² | 1.732 × 10² | 1.024 × 10² |
|  | Std | 3.109 × 10¹ | 2.338 × 10¹ | 2.991 × 10¹ | 1.926 × 10¹ | 3.474 × 10¹ | 2.360 × 10¹ | 2.112 × 10¹ |
| C09 | Mean | 4.450 × 10³ | 4.650 × 10³ | 4.520 × 10³ | 4.480 × 10³ | 4.478 × 10³ | 4.366 × 10³ | 1.480 × 10² |
|  | Std | 1.744 × 10² | 5.602 × 10² | 1.751 × 10² | 7.877 × 10² | 3.453 × 10² | 4.465 × 10² | 1.877 × 10¹ |
| C10 | Mean | 4.409 × 10³ | 3.990 × 10³ | 4.435 × 10³ | 4.118 × 10³ | 4.160 × 10³ | 4.331 × 10³ | 3.119 × 10³ |
|  | Std | 6.839 × 10² | 7.098 × 10² | 6.395 × 10² | 7.584 × 10² | 6.188 × 10² | 6.381 × 10² | 5.770 × 10² |
| C11 | Mean | 1.632 × 10² | 1.925 × 10² | 1.535 × 10² | 1.893 × 10² | 1.354 × 10² | 1.596 × 10² | 1.029 × 10² |
|  | Std | 6.461 × 10¹ | 5.870 × 10¹ | 4.381 × 10¹ | 5.115 × 10¹ | 6.354 × 10¹ | 5.066 × 10¹ | 4.095 × 10¹ |
| C12 | Mean | 1.625 × 10⁶ | 7.439 × 10⁶ | 1.781 × 10⁶ | 6.943 × 10⁶ | 2.579 × 10⁶ | 1.861 × 10⁶ | 1.257 × 10⁶ |
|  | Std | 1.592 × 10⁶ | 5.319 × 10⁶ | 1.415 × 10⁶ | 7.040 × 10⁶ | 1.877 × 10⁶ | 1.255 × 10⁶ | 5.376 × 10⁵ |
| C13 | Mean | 1.621 × 10⁴ | 7.841 × 10⁴ | 1.539 × 10⁴ | 5.261 × 10⁴ | 1.008 × 10⁴ | 1.776 × 10⁴ | 8.717 × 10³ |
|  | Std | 1.694 × 10⁴ | 1.575 × 10⁵ | 1.773 × 10⁴ | 1.401 × 10⁵ | 1.139 × 10⁴ | 1.516 × 10⁴ | 1.250 × 10⁴ |
| C14 | Mean | 4.179 × 10⁴ | 8.679 × 10⁴ | 3.264 × 10⁴ | 8.580 × 10⁴ | 6.952 × 10⁴ | 4.410 × 10⁴ | 1.132 × 10⁴ |
|  | Std | 3.943 × 10⁴ | 1.077 × 10⁵ | 2.614 × 10⁴ | 8.212 × 10⁴ | 4.879 × 10⁴ | 4.170 × 10⁴ | 5.591 × 10³ |
| C15 | Mean | 7.314 × 10³ | 2.730 × 10⁴ | 1.082 × 10⁴ | 1.217 × 10⁴ | 4.170 × 10³ | 7.526 × 10³ | 6.737 × 10³ |
|  | Std | 9.864 × 10³ | 1.043 × 10⁵ | 1.317 × 10⁴ | 2.069 × 10⁴ | 4.458 × 10³ | 1.111 × 10⁴ | 7.053 × 10³ |
| C16 | Mean | 1.359 × 10³ | 1.311 × 10³ | 1.403 × 10³ | 1.215 × 10³ | 1.269 × 10³ | 1.333 × 10³ | 9.145 × 10² |
|  | Std | 2.553 × 10² | 2.827 × 10² | 3.696 × 10² | 3.555 × 10² | 3.642 × 10² | 3.388 × 10² | 1.222 × 10² |
| C17 | Mean | 7.865 × 10² | 6.135 × 10² | 7.649 × 10² | 6.690 × 10² | 7.505 × 10² | 7.227 × 10² | 2.226 × 10² |
|  | Std | 1.760 × 10² | 2.523 × 10² | 2.618 × 10² | 2.664 × 10² | 1.998 × 10² | 2.955 × 10² | 4.537 × 10¹ |
| C18 | Mean | 5.350 × 10⁵ | 5.976 × 10⁵ | 5.508 × 10⁵ | 1.136 × 10⁶ | 7.154 × 10⁵ | 5.334 × 10⁵ | 1.491 × 10⁵ |
|  | Std | 5.206 × 10⁵ | 6.950 × 10⁵ | 5.826 × 10⁵ | 9.664 × 10⁵ | 1.066 × 10⁶ | 6.695 × 10⁵ | 6.904 × 10⁴ |
| C19 | Mean | 8.556 × 10³ | 7.216 × 10³ | 4.577 × 10³ | 1.446 × 10⁴ | 5.219 × 10³ | 1.456 × 10⁴ | 2.820 × 10³ |
|  | Std | 1.369 × 10⁴ | 1.000 × 10⁴ | 5.575 × 10³ | 3.023 × 10⁴ | 5.345 × 10³ | 1.701 × 10⁴ | 1.887 × 10³ |
| C20 | Mean | 6.480 × 10² | 5.678 × 10² | 7.652 × 10² | 6.087 × 10² | 7.867 × 10² | 7.333 × 10² | 2.836 × 10² |
|  | Std | 2.353 × 10² | 2.009 × 10² | 2.295 × 10² | 2.251 × 10² | 2.189 × 10² | 2.293 × 10² | 8.310 × 10¹ |
| C21 | Mean | 4.024 × 10² | 3.641 × 10² | 3.773 × 10² | 3.649 × 10² | 4.053 × 10² | 4.113 × 10² | 3.191 × 10² |
|  | Std | 4.792 × 10¹ | 3.581 × 10¹ | 5.079 × 10¹ | 4.498 × 10¹ | 3.623 × 10¹ | 3.934 × 10¹ | 3.130 × 10¹ |
| C22 | Mean | 2.885 × 10³ | 2.635 × 10³ | 2.133 × 10³ | 2.843 × 10³ | 2.868 × 10³ | 3.772 × 10³ | 9.891 × 10² |
|  | Std | 2.207 × 10³ | 2.290 × 10³ | 2.417 × 10³ | 2.470 × 10³ | 2.230 × 10³ | 2.143 × 10³ | 1.682 × 10³ |
| C23 | Mean | 6.088 × 10² | 5.265 × 10² | 6.406 × 10² | 5.157 × 10² | 6.868 × 10² | 6.521 × 10² | 5.018 × 10² |
|  | Std | 7.659 × 10¹ | 5.645 × 10¹ | 9.241 × 10¹ | 4.039 × 10¹ | 1.025 × 10² | 6.949 × 10¹ | 3.754 × 10¹ |
| C24 | Mean | 6.897 × 10² | 6.384 × 10² | 6.826 × 10² | 6.219 × 10² | 8.092 × 10² | 7.008 × 10² | 5.378 × 10² |
|  | Std | 8.497 × 10¹ | 7.641 × 10¹ | 9.012 × 10¹ | 5.309 × 10¹ | 8.801 × 10¹ | 9.221 × 10¹ | 6.224 × 10¹ |
| C25 | Mean | 4.021 × 10² | 4.102 × 10² | 4.003 × 10² | 4.188 × 10² | 3.866 × 10² | 4.013 × 10² | 3.893 × 10² |
|  | Std | 1.444 × 10¹ | 1.842 × 10¹ | 1.728 × 10¹ | 1.957 × 10¹ | 1.286 × 10¹ | 1.798 × 10¹ | 1.612 × 10¹ |
| C26 | Mean | 3.545 × 10³ | 2.628 × 10³ | 3.213 × 10³ | 3.050 × 10³ | 3.184 × 10³ | 4.025 × 10³ | 1.999 × 10³ |
|  | Std | 1.183 × 10³ | 1.229 × 10³ | 1.598 × 10³ | 8.824 × 10² | 1.260 × 10³ | 1.353 × 10³ | 2.124 × 10³ |
| C27 | Mean | 5.754 × 10² | 5.472 × 10² | 5.630 × 10² | 5.395 × 10² | 5.000066 × 10² | 5.750 × 10² | 5.000060 × 10² |
|  | Std | 4.742 × 10¹ | 1.920 × 10¹ | 4.169 × 10¹ | 1.907 × 10¹ | 2.539 × 10⁻⁴ | 3.018 × 10¹ | 3.215 × 10⁻⁴ |
| C28 | Mean | 4.377 × 10² | 4.833 × 10² | 4.323 × 10² | 4.862 × 10² | 4.730 × 10² | 4.398 × 10² | 4.562 × 10² |
|  | Std | 2.243 × 10¹ | 2.166 × 10¹ | 2.268 × 10¹ | 3.206 × 10¹ | 3.315 × 10¹ | 2.492 × 10¹ | 3.926 × 10¹ |
| C29 | Mean | 1.234 × 10³ | 1.074 × 10³ | 1.175 × 10³ | 1.047 × 10³ | 1.004 × 10³ | 1.191 × 10³ | 7.867 × 10² |
|  | Std | 2.555 × 10² | 2.668 × 10² | 2.484 × 10² | 2.479 × 10² | 2.747 × 10² | 2.995 × 10² | 2.168 × 10² |
| C30 | Mean | 1.431 × 10⁴ | 3.537 × 10⁴ | 1.533 × 10⁴ | 1.361 × 10⁴ | 4.575 × 10³ | 1.488 × 10⁴ | 3.068 × 10³ |
|  | Std | 8.584 × 10³ | 9.373 × 10⁴ | 1.089 × 10⁴ | 7.351 × 10³ | 5.438 × 10³ | 7.138 × 10³ | 3.586 × 10³ |

Table 2. Ablation experiment tests on CEC 2017 functions (Dim = 50).


| Function | Index | SSA | SSA1 | SSA2 | SSA3 | SSA4 | MESSA | MSESSA |
|---|---|---|---|---|---|---|---|---|
| C01 | Mean | 1.471 × 10⁶ | 1.479 × 10⁶ | 1.630 × 10⁶ | 1.467 × 10⁶ | 1.715 × 10⁶ | 1.427 × 10⁶ | 6.947 × 10³ |
| | Std | 6.635 × 10⁵ | 6.116 × 10⁵ | 6.910 × 10⁵ | 6.493 × 10⁵ | 7.048 × 10⁵ | 4.971 × 10⁵ | 4.534 × 10³ |
| C03 | Mean | 2.091 × 10⁵ | 1.615 × 10⁵ | 2.166 × 10⁵ | 1.666 × 10⁵ | 2.242 × 10⁵ | 1.963 × 10⁵ | 4.623 × 10⁴ |
| | Std | 4.565 × 10⁴ | 3.446 × 10⁴ | 4.678 × 10⁴ | 3.336 × 10⁴ | 5.252 × 10⁴ | 3.867 × 10⁴ | 1.484 × 10⁴ |
| C04 | Mean | 1.908 × 10² | 2.803 × 10² | 1.828 × 10² | 2.697 × 10² | 1.826 × 10² | 1.834 × 10² | 1.555 × 10² |
| | Std | 5.517 × 10¹ | 8.004 × 10¹ | 4.936 × 10¹ | 5.777 × 10¹ | 3.950 × 10¹ | 4.556 × 10¹ | 5.302 × 10¹ |
| C05 | Mean | 3.665 × 10² | 3.600 × 10² | 3.828 × 10² | 3.628 × 10² | 3.750 × 10² | 3.824 × 10² | 2.441 × 10² |
| | Std | 3.133 × 10¹ | 2.878 × 10¹ | 1.958 × 10¹ | 3.840 × 10¹ | 2.072 × 10¹ | 3.306 × 10¹ | 3.842 × 10¹ |
| C06 | Mean | 6.327 × 10¹ | 4.514 × 10¹ | 6.466 × 10¹ | 4.318 × 10¹ | 6.314 × 10¹ | 6.342 × 10¹ | 2.455 × 10⁰ |
| | Std | 7.087 × 10⁰ | 1.364 × 10¹ | 5.640 × 10⁰ | 1.232 × 10¹ | 4.653 × 10⁰ | 4.813 × 10⁰ | 8.202 × 10⁻¹ |
| C07 | Mean | 1.044 × 10³ | 9.814 × 10² | 1.041 × 10³ | 9.440 × 10² | 1.043 × 10³ | 1.046 × 10³ | 3.668 × 10² |
| | Std | 7.600 × 10¹ | 9.173 × 10¹ | 6.760 × 10¹ | 1.177 × 10² | 6.171 × 10¹ | 6.215 × 10¹ | 3.450 × 10¹ |
| C08 | Mean | 3.955 × 10² | 3.789 × 10² | 3.942 × 10² | 3.904 × 10² | 3.977 × 10² | 3.937 × 10² | 2.288 × 10² |
| | Std | 3.523 × 10¹ | 4.579 × 10¹ | 3.007 × 10¹ | 4.979 × 10¹ | 3.615 × 10¹ | 3.739 × 10¹ | 4.132 × 10¹ |
| C09 | Mean | 1.244 × 10⁴ | 1.501 × 10⁴ | 1.221 × 10⁴ | 1.453 × 10⁴ | 1.239 × 10⁴ | 1.280 × 10⁴ | 8.450 × 10³ |
| | Std | 1.261 × 10³ | 2.386 × 10³ | 1.282 × 10³ | 2.795 × 10³ | 1.047 × 10³ | 1.254 × 10³ | 2.080 × 10³ |
| C10 | Mean | 7.636 × 10³ | 7.382 × 10³ | 7.342 × 10³ | 7.753 × 10³ | 7.488 × 10³ | 7.543 × 10³ | 5.484 × 10³ |
| | Std | 7.927 × 10² | 1.242 × 10³ | 8.090 × 10² | 1.163 × 10³ | 7.868 × 10² | 8.922 × 10² | 6.309 × 10² |
| C11 | Mean | 3.043 × 10² | 4.172 × 10² | 3.360 × 10² | 4.442 × 10² | 3.329 × 10² | 3.098 × 10² | 1.798 × 10² |
| | Std | 8.496 × 10¹ | 1.040 × 10² | 6.493 × 10¹ | 1.100 × 10² | 8.804 × 10¹ | 6.662 × 10¹ | 3.197 × 10¹ |
| C12 | Mean | 1.313 × 10⁷ | 6.711 × 10⁷ | 1.633 × 10⁷ | 6.467 × 10⁷ | 1.599 × 10⁷ | 1.160 × 10⁷ | 6.261 × 10⁶ |
| | Std | 6.892 × 10⁶ | 5.309 × 10⁷ | 8.226 × 10⁶ | 4.173 × 10⁷ | 1.067 × 10⁷ | 6.360 × 10⁶ | 5.043 × 10⁶ |
| C13 | Mean | 2.721 × 10⁴ | 2.830 × 10⁵ | 1.727 × 10⁴ | 2.857 × 10⁵ | 4.220 × 10⁴ | 1.956 × 10⁴ | 4.363 × 10³ |
| | Std | 2.669 × 10⁴ | 8.579 × 10⁵ | 1.260 × 10⁴ | 7.000 × 10⁵ | 3.866 × 10⁴ | 1.360 × 10⁴ | 6.047 × 10³ |
| C14 | Mean | 3.155 × 10⁵ | 6.362 × 10⁵ | 4.905 × 10⁵ | 6.475 × 10⁵ | 4.438 × 10⁵ | 4.255 × 10⁵ | 3.385 × 10⁵ |
| | Std | 1.606 × 10⁵ | 4.901 × 10⁵ | 3.233 × 10⁵ | 5.574 × 10⁵ | 3.453 × 10⁵ | 2.565 × 10⁵ | 2.498 × 10⁵ |
| C15 | Mean | 1.410 × 10⁴ | 1.995 × 10⁴ | 1.627 × 10⁴ | 4.292 × 10⁴ | 1.908 × 10⁴ | 1.511 × 10⁴ | 1.469 × 10⁴ |
| | Std | 1.238 × 10⁴ | 1.592 × 10⁴ | 8.102 × 10³ | 1.529 × 10⁵ | 1.897 × 10⁴ | 1.328 × 10⁴ | 1.528 × 10⁴ |
| C16 | Mean | 2.333 × 10³ | 2.204 × 10³ | 2.099 × 10³ | 2.197 × 10³ | 2.342 × 10³ | 2.454 × 10³ | 2.179 × 10³ |
| | Std | 4.003 × 10² | 5.176 × 10² | 4.334 × 10² | 3.828 × 10² | 5.200 × 10² | 5.927 × 10² | 5.456 × 10² |
| C17 | Mean | 1.875 × 10³ | 1.779 × 10³ | 1.843 × 10³ | 1.693 × 10³ | 1.951 × 10³ | 1.678 × 10³ | 1.649 × 10³ |
| | Std | 4.481 × 10² | 3.634 × 10² | 3.989 × 10² | 4.043 × 10² | 4.353 × 10² | 1.678 × 10³ | 3.443 × 10² |
| C18 | Mean | 2.191 × 10⁶ | 3.682 × 10⁶ | 2.668 × 10⁶ | 4.334 × 10⁶ | 2.922 × 10⁶ | 2.653 × 10⁶ | 1.961 × 10⁶ |
| | Std | 1.277 × 10⁶ | 2.578 × 10⁶ | 1.385 × 10⁶ | 3.166 × 10⁶ | 1.865 × 10⁶ | 2.348 × 10⁶ | 1.048 × 10⁶ |
| C19 | Mean | 1.684 × 10⁴ | 2.897 × 10⁴ | 1.728 × 10⁴ | 1.934 × 10⁴ | 2.474 × 10⁴ | 1.845 × 10⁴ | 1.099 × 10⁴ |
| | Std | 1.131 × 10⁴ | 3.193 × 10⁴ | 1.162 × 10⁴ | 1.385 × 10⁴ | 2.199 × 10⁴ | 1.238 × 10⁴ | 9.587 × 10³ |
| C20 | Mean | 1.532 × 10³ | 1.318 × 10³ | 1.667 × 10³ | 1.432 × 10³ | 1.573 × 10³ | 1.658 × 10³ | 1.297 × 10³ |
| | Std | 3.737 × 10² | 2.580 × 10² | 2.815 × 10² | 3.284 × 10² | 3.519 × 10² | 3.268 × 10² | 2.918 × 10² |
| C21 | Mean | 6.386 × 10² | 5.334 × 10² | 6.680 × 10² | 5.252 × 10² | 6.987 × 10² | 6.947 × 10² | 4.561 × 10² |
| | Std | 7.417 × 10¹ | 5.033 × 10¹ | 9.858 × 10¹ | 4.808 × 10¹ | 9.675 × 10¹ | 9.788 × 10¹ | 6.032 × 10¹ |
| C22 | Mean | 7.932 × 10³ | 8.612 × 10³ | 8.393 × 10³ | 8.492 × 10³ | 8.202 × 10³ | 8.073 × 10³ | 6.188 × 10³ |
| | Std | 1.009 × 10³ | 1.321 × 10³ | 8.335 × 10² | 1.331 × 10³ | 1.170 × 10³ | 1.177 × 10³ | 7.416 × 10² |
| C23 | Mean | 1.093 × 10³ | 8.612 × 10² | 1.055 × 10³ | 8.447 × 10² | 1.068 × 10³ | 1.041 × 10³ | 7.713 × 10² |
| | Std | 1.205 × 10² | 8.154 × 10¹ | 1.414 × 10² | 7.427 × 10¹ | 1.522 × 10² | 1.234 × 10² | 7.208 × 10¹ |
| C24 | Mean | 1.137 × 10³ | 1.017 × 10³ | 1.105 × 10³ | 1.003 × 10³ | 1.467 × 10³ | 1.088 × 10³ | 7.302 × 10² |
| | Std | 1.448 × 10² | 1.423 × 10² | 1.148 × 10² | 1.462 × 10² | 1.487 × 10² | 1.384 × 10² | 9.637 × 10¹ |
| C25 | Mean | 6.141 × 10² | 6.775 × 10² | 6.150 × 10² | 6.756 × 10² | 5.665 × 10² | 6.262 × 10² | 5.499 × 10² |
| | Std | 2.208 × 10¹ | 4.841 × 10¹ | 2.884 × 10¹ | 3.841 × 10¹ | 3.192 × 10¹ | 3.064 × 10¹ | 3.163 × 10¹ |
| C26 | Mean | 6.515 × 10³ | 3.902 × 10³ | 6.812 × 10³ | 3.916 × 10³ | 6.673 × 10³ | 6.324 × 10³ | 3.902 × 10³ |
| | Std | 2.628 × 10³ | 3.137 × 10³ | 2.835 × 10³ | 2.938 × 10³ | 3.008 × 10³ | 3.374 × 10³ | 3.258 × 10³ |
| C27 | Mean | 1.034 × 10³ | 8.149 × 10² | 9.655 × 10² | 8.402 × 10² | 5.00012 × 10² | 1.076 × 10³ | 5.00011 × 10² |
| | Std | 1.281 × 10² | 9.237 × 10¹ | 1.916 × 10² | 1.321 × 10² | 2.090 × 10⁻⁴ | 2.044 × 10² | 3.220 × 10⁻⁴ |
| C28 | Mean | 5.871 × 10² | 6.764 × 10² | 5.848 × 10² | 6.696 × 10² | 5.031 × 10² | 5.921 × 10² | 5.000 × 10² |
| | Std | 4.508 × 10¹ | 5.407 × 10¹ | 4.147 × 10¹ | 5.663 × 10¹ | 1.199 × 10¹ | 3.466 × 10¹ | 3.310 × 10⁻⁴ |
| C29 | Mean | 2.344 × 10³ | 1.779 × 10³ | 2.248 × 10³ | 1.885 × 10³ | 1.954 × 10³ | 2.312 × 10³ | 1.537 × 10³ |
| | Std | 3.690 × 10² | 2.849 × 10² | 3.892 × 10² | 3.093 × 10² | 4.092 × 10² | 3.989 × 10² | 4.108 × 10² |
| C30 | Mean | 1.445 × 10⁶ | 1.461 × 10⁶ | 1.545 × 10⁶ | 1.523 × 10⁶ | 6.387 × 10³ | 1.476 × 10⁶ | 6.158 × 10³ |
| | Std | 5.693 × 10⁵ | 3.585 × 10⁵ | 6.347 × 10⁵ | 7.248 × 10⁵ | 6.987 × 10³ | 4.668 × 10⁵ | 5.932 × 10³ |

Table 3.

Ablation experiment tests on CEC 2017 functions (Dim = 100).

| Function | Index | SSA | SSA1 | SSA2 | SSA3 | SSA4 | MESSA | MSESSA |
|---|---|---|---|---|---|---|---|---|
| C01 | Mean | 3.400 × 10⁸ | 3.134 × 10⁸ | 3.167 × 10⁸ | 3.085 × 10⁸ | 3.544 × 10⁸ | 3.080 × 10⁸ | 1.865 × 10⁷ |
| | Std | 1.320 × 10⁸ | 9.889 × 10⁷ | 9.049 × 10⁷ | 1.048 × 10⁸ | 1.084 × 10⁸ | 1.195 × 10⁸ | 8.154 × 10⁶ |
| C03 | Mean | 5.756 × 10⁵ | 4.860 × 10⁵ | 5.582 × 10⁵ | 4.984 × 10⁵ | 5.123 × 10⁵ | 5.239 × 10⁵ | 2.679 × 10⁵ |
| | Std | 6.883 × 10⁴ | 1.119 × 10⁵ | 1.055 × 10⁵ | 8.873 × 10⁴ | 9.471 × 10⁴ | 1.047 × 10⁵ | 2.607 × 10⁴ |
| C04 | Mean | 6.977 × 10² | 8.945 × 10² | 6.757 × 10² | 8.753 × 10² | 6.970 × 10² | 6.982 × 10² | 5.209 × 10² |
| | Std | 9.500 × 10¹ | 1.404 × 10² | 1.105 × 10² | 1.102 × 10² | 9.570 × 10¹ | 1.083 × 10² | 7.216 × 10¹ |
| C05 | Mean | 8.811 × 10² | 8.727 × 10² | 8.873 × 10² | 8.655 × 10² | 8.888 × 10² | 8.808 × 10² | 6.764 × 10² |
| | Std | 4.877 × 10¹ | 5.506 × 10¹ | 3.581 × 10¹ | 4.758 × 10¹ | 5.217 × 10¹ | 6.030 × 10¹ | 6.819 × 10¹ |
| C06 | Mean | 6.711 × 10¹ | 6.211 × 10¹ | 6.703 × 10¹ | 6.188 × 10¹ | 6.771 × 10¹ | 6.670 × 10¹ | 1.063 × 10¹ |
| | Std | 2.818 × 10⁰ | 4.376 × 10⁰ | 3.212 × 10⁰ | 4.851 × 10⁰ | 2.823 × 10⁰ | 1.661 × 10⁰ | 1.412 × 10⁰ |
| C07 | Mean | 2.561 × 10³ | 2.469 × 10³ | 2.572 × 10³ | 2.505 × 10³ | 2.571 × 10³ | 2.583 × 10³ | 1.086 × 10³ |
| | Std | 1.018 × 10² | 1.525 × 10² | 8.911 × 10¹ | 1.288 × 10² | 1.402 × 10² | 8.535 × 10¹ | 1.005 × 10² |
| C08 | Mean | 1.049 × 10³ | 1.055 × 10³ | 1.067 × 10³ | 1.035 × 10³ | 1.050 × 10³ | 1.056 × 10³ | 6.938 × 10² |
| | Std | 5.063 × 10¹ | 6.933 × 10¹ | 5.054 × 10¹ | 5.002 × 10¹ | 4.331 × 10¹ | 4.541 × 10¹ | 6.579 × 10¹ |
| C09 | Mean | 2.475 × 10⁴ | 2.762 × 10⁴ | 2.467 × 10⁴ | 2.710 × 10⁴ | 2.472 × 10⁴ | 2.465 × 10⁴ | 2.332 × 10⁴ |
| | Std | 1.009 × 10³ | 3.582 × 10³ | 5.579 × 10² | 3.149 × 10³ | 1.096 × 10³ | 8.065 × 10² | 1.059 × 10³ |
| C10 | Mean | 1.669 × 10⁴ | 1.864 × 10⁴ | 1.629 × 10⁴ | 1.837 × 10⁴ | 1.662 × 10⁴ | 1.695 × 10⁴ | 1.353 × 10⁴ |
| | Std | 1.547 × 10³ | 1.882 × 10³ | 1.499 × 10³ | 2.272 × 10³ | 1.547 × 10³ | 1.471 × 10³ | 1.087 × 10³ |
| C11 | Mean | 6.094 × 10⁴ | 5.162 × 10⁴ | 5.474 × 10⁴ | 5.892 × 10⁴ | 6.392 × 10⁴ | 5.076 × 10⁴ | 1.328 × 10⁴ |
| | Std | 1.467 × 10⁴ | 1.536 × 10⁴ | 2.092 × 10⁴ | 1.647 × 10⁴ | 1.931 × 10⁴ | 1.432 × 10⁴ | 5.918 × 10³ |
| C12 | Mean | 2.320 × 10⁸ | 4.300 × 10⁸ | 2.355 × 10⁸ | 4.255 × 10⁸ | 3.355 × 10⁸ | 2.441 × 10⁸ | 8.343 × 10⁷ |
| | Std | 1.010 × 10⁸ | 1.475 × 10⁸ | 7.268 × 10⁷ | 1.424 × 10⁸ | 1.438 × 10⁸ | 9.785 × 10⁷ | 8.426 × 10⁷ |
| C13 | Mean | 6.178 × 10⁴ | 5.989 × 10⁴ | 6.107 × 10⁴ | 6.606 × 10⁴ | 6.628 × 10⁴ | 6.045 × 10⁴ | 8.120 × 10³ |
| | Std | 1.813 × 10⁴ | 2.154 × 10⁴ | 2.079 × 10⁴ | 2.383 × 10⁴ | 2.731 × 10⁴ | 2.352 × 10⁴ | 6.250 × 10³ |
| C14 | Mean | 2.431 × 10⁶ | 3.493 × 10⁶ | 2.086 × 10⁶ | 3.324 × 10⁶ | 2.420 × 10⁶ | 2.209 × 10⁶ | 1.513 × 10⁶ |
| | Std | 1.038 × 10⁶ | 2.268 × 10⁶ | 9.219 × 10⁵ | 3.288 × 10⁶ | 8.656 × 10⁵ | 9.758 × 10⁵ | 5.933 × 10⁵ |
| C15 | Mean | 1.259 × 10⁴ | 1.564 × 10⁵ | 5.131 × 10⁴ | 3.506 × 10⁵ | 1.277 × 10⁵ | 4.509 × 10⁴ | 3.377 × 10³ |
| | Std | 7.276 × 10³ | 5.827 × 10⁵ | 2.126 × 10⁵ | 1.483 × 10⁶ | 2.440 × 10⁵ | 1.912 × 10⁵ | 4.491 × 10³ |
| C16 | Mean | 5.354 × 10³ | 4.802 × 10³ | 5.020 × 10³ | 5.269 × 10³ | 5.245 × 10³ | 5.272 × 10³ | 4.813 × 10³ |
| | Std | 5.545 × 10² | 8.611 × 10² | 8.918 × 10² | 5.629 × 10² | 6.878 × 10² | 6.569 × 10² | 8.047 × 10² |
| C17 | Mean | 4.245 × 10³ | 4.082 × 10³ | 4.299 × 10³ | 4.013 × 10³ | 4.415 × 10³ | 4.317 × 10³ | 3.787 × 10³ |
| | Std | 5.617 × 10² | 6.148 × 10² | 8.194 × 10² | 6.563 × 10² | 8.196 × 10² | 6.173 × 10² | 6.412 × 10² |
| C18 | Mean | 3.163 × 10⁶ | 5.705 × 10⁶ | 3.523 × 10⁶ | 4.218 × 10⁶ | 3.433 × 10⁶ | 3.269 × 10⁶ | 2.034 × 10⁶ |
| | Std | 1.832 × 10⁶ | 4.089 × 10⁶ | 1.551 × 10⁶ | 2.021 × 10⁶ | 1.544 × 10⁶ | 1.574 × 10⁶ | 9.128 × 10⁵ |
| C19 | Mean | 3.131 × 10⁴ | 5.763 × 10⁴ | 5.749 × 10⁴ | 2.548 × 10⁴ | 1.279 × 10⁴ | 2.603 × 10⁴ | 3.723 × 10³ |
| | Std | 2.882 × 10⁴ | 8.447 × 10⁴ | 1.156 × 10⁵ | 2.585 × 10⁴ | 1.504 × 10⁴ | 2.212 × 10⁴ | 3.802 × 10³ |
| C20 | Mean | 3.871 × 10³ | 3.342 × 10³ | 4.124 × 10³ | 3.436 × 10³ | 3.993 × 10³ | 4.001 × 10³ | 3.246 × 10³ |
| | Std | 5.609 × 10² | 6.617 × 10² | 6.076 × 10² | 6.158 × 10² | 6.377 × 10² | 4.628 × 10² | 5.244 × 10² |
| C21 | Mean | 1.663 × 10³ | 1.280 × 10³ | 1.679 × 10³ | 1.251 × 10³ | 1.716 × 10³ | 1.648 × 10³ | 9.012 × 10² |
| | Std | 1.991 × 10² | 1.207 × 10² | 1.928 × 10² | 1.076 × 10² | 2.000 × 10² | 1.965 × 10² | 8.237 × 10¹ |
| C22 | Mean | 1.828 × 10⁴ | 2.022 × 10⁴ | 1.805 × 10⁴ | 2.072 × 10⁴ | 1.773 × 10⁴ | 1.758 × 10⁴ | 1.462 × 10⁴ |
| | Std | 1.400 × 10³ | 1.736 × 10³ | 1.621 × 10³ | 1.908 × 10³ | 1.780 × 10³ | 1.605 × 10³ | 1.177 × 10³ |
| C23 | Mean | 1.957 × 10³ | 1.404 × 10³ | 1.982 × 10³ | 1.419 × 10³ | 2.364 × 10³ | 1.958 × 10³ | 1.089 × 10³ |
| | Std | 1.929 × 10² | 1.053 × 10² | 1.897 × 10² | 1.167 × 10² | 2.312 × 10² | 1.860 × 10² | 8.824 × 10¹ |
| C24 | Mean | 2.847 × 10³ | 2.049 × 10³ | 2.723 × 10³ | 2.059 × 10³ | 3.953 × 10³ | 2.807 × 10³ | 1.665 × 10³ |
| | Std | 2.736 × 10² | 1.556 × 10² | 2.551 × 10² | 1.445 × 10² | 3.417 × 10² | 2.743 × 10² | 1.065 × 10² |
| C25 | Mean | 1.210 × 10³ | 1.338 × 10³ | 1.217 × 10³ | 1.340 × 10³ | 1.127 × 10³ | 1.197 × 10³ | 1.007 × 10³ |
| | Std | 7.953 × 10¹ | 8.471 × 10¹ | 8.076 × 10¹ | 1.175 × 10² | 7.236 × 10¹ | 7.137 × 10¹ | 5.882 × 10¹ |
| C26 | Mean | 2.246 × 10⁴ | 1.711 × 10⁴ | 2.284 × 10⁴ | 1.962 × 10⁴ | 2.425 × 10⁴ | 2.198 × 10⁴ | 1.538 × 10⁴ |
| | Std | 4.939 × 10³ | 6.911 × 10³ | 4.413 × 10³ | 2.977 × 10³ | 2.319 × 10³ | 3.999 × 10³ | 5.236 × 10³ |
| C27 | Mean | 1.287 × 10³ | 1.067 × 10³ | 1.328 × 10³ | 1.068 × 10³ | 5.00024 × 10² | 1.359 × 10³ | 5.00022 × 10² |
| | Std | 3.130 × 10² | 1.700 × 10² | 2.574 × 10² | 1.459 × 10² | 4.031 × 10⁻⁴ | 2.579 × 10² | 3.760 × 10⁻⁴ |
| C28 | Mean | 1.083 × 10³ | 1.226 × 10³ | 1.033 × 10³ | 1.205 × 10³ | 1.060 × 10³ | 1.042 × 10³ | 8.444 × 10² |
| | Std | 7.551 × 10¹ | 1.098 × 10² | 7.998 × 10¹ | 1.262 × 10² | 1.161 × 10² | 7.441 × 10¹ | 3.894 × 10¹ |
| C29 | Mean | 5.832 × 10³ | 4.636 × 10³ | 5.553 × 10³ | 4.693 × 10³ | 4.261 × 10³ | 5.320 × 10³ | 3.627 × 10³ |
| | Std | 7.132 × 10² | 5.812 × 10² | 6.826 × 10² | 6.410 × 10² | 8.352 × 10² | 5.630 × 10² | 5.149 × 10² |
| C30 | Mean | 2.315 × 10⁶ | 2.128 × 10⁶ | 2.145 × 10⁶ | 2.393 × 10⁶ | 2.595 × 10⁵ | 2.429 × 10⁶ | 7.285 × 10³ |
| | Std | 1.148 × 10⁶ | 9.114 × 10⁵ | 1.190 × 10⁶ | 2.061 × 10⁶ | 3.493 × 10⁵ | 1.364 × 10⁶ | 1.160 × 10⁴ |

As can be seen from Table 1, MSESSA obtains the largest number of best values and performs well on the unimodal, simple multimodal, hybrid, and composition functions. The results of SSA1, SSA2, SSA3, and SSA4 also show that each variant clearly improves on SSA for some functions, which confirms that the proposed strategies are effective on the CEC 2017 test suite (Dim = 30). Furthermore, MSESSA outperforms MESSA, which indicates that, compared with the plain ensemble, the selective ensemble further improves optimization performance to some extent.
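The comparison above rests on counting, for each function, which variant attains the lowest mean error. A minimal sketch of that tally follows. It assumes minimization (lower mean is better) and uses four Mean rows copied from Table 2 (Dim = 50) as sample input; the function name `count_best` and the rule of crediting ties to every tied variant are illustrative assumptions, not necessarily the procedure the authors used to mark the best values.

```python
from typing import Dict, List

# Column order follows the header of the ablation tables.
ALGORITHMS: List[str] = ["SSA", "SSA1", "SSA2", "SSA3", "SSA4", "MESSA", "MSESSA"]

# Four "Mean" rows copied from Table 2 (CEC 2017, Dim = 50).
MEANS_DIM50: Dict[str, List[float]] = {
    "C14": [3.155e5, 6.362e5, 4.905e5, 6.475e5, 4.438e5, 4.255e5, 3.385e5],
    "C16": [2.333e3, 2.204e3, 2.099e3, 2.197e3, 2.342e3, 2.454e3, 2.179e3],
    "C27": [1.034e3, 8.149e2, 9.655e2, 8.402e2, 5.00012e2, 1.076e3, 5.00011e2],
    "C30": [1.445e6, 1.461e6, 1.545e6, 1.523e6, 6.387e3, 1.476e6, 6.158e3],
}


def count_best(means: Dict[str, List[float]], names: List[str]) -> Dict[str, int]:
    """Count, per algorithm, the functions on which its mean is the lowest.

    Assumes every function is minimized, so a lower mean is better.
    Ties (hypothetical rule) credit every algorithm that shares the minimum.
    """
    wins = {name: 0 for name in names}
    for row in means.values():
        best = min(row)
        for name, value in zip(names, row):
            if value == best:
                wins[name] += 1
    return wins


if __name__ == "__main__":
    for name, n in count_best(MEANS_DIM50, ALGORITHMS).items():
        print(f"{name}: {n}")
```

On these four rows, MSESSA has the lowest mean on two functions (C27 and C30), while SSA and SSA2 each win one (C14 and C16). A win count therefore summarizes the tables but does not replace the per-function comparison, which is why the mean and standard deviation are both reported.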

As can be seen from