Residual Depth Feature-Extraction Network for Infrared Small-Target Detection

Abstract

1. Introduction

2. Materials and Methods

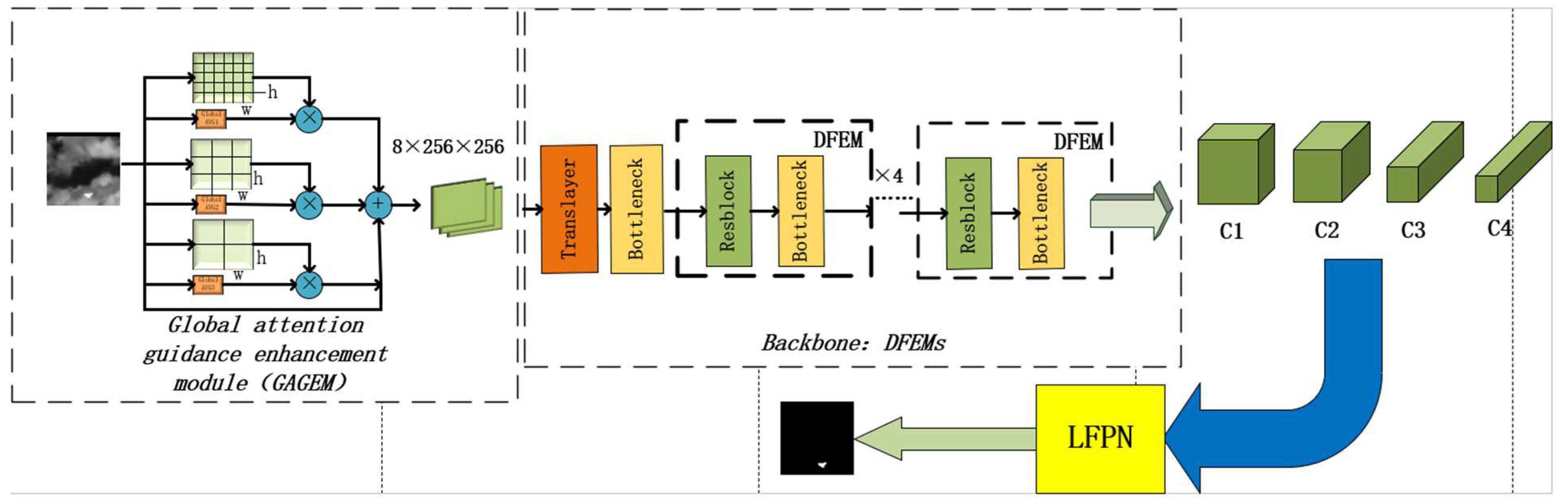

2.1. The Global Attention Guidance Enhancement Module (GAGEM)

2.1.1. Motivation

2.1.2. The Global Attention Guidance Enhancement Module

| Algorithm 1 Implementation of the non-local operation. |

| 1: Input: X |

| 2: Update: Q = conv1×1(X); K = conv1×1(X); V = conv1×1(X) |

| 3: Update: Q = Reshape(Q); K = Reshape(K); Energy = Q × K; Attention = SoftMax(Energy) |

| 4: Update: V = Reshape(V); Out = V × Attention |

| 5: Output: Out = Reshape(Out) |

| Note: conv1×1() denotes a 1 × 1 convolution; SoftMax() denotes torch.softmax(). |
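The steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`conv1x1`, `nonlocal_attention`), the weight matrices, the reduced channel count used for Q and K, and the (C, H, W) tensor layout are all hypothetical, and the output is weighted by the softmax-normalized attention map, as in the standard non-local block.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def conv1x1(x, weight):
    # A 1x1 convolution is a per-pixel linear map over channels.
    # x: (C_in, H, W), weight: (C_out, C_in) -> (C_out, H, W)
    return np.einsum("oc,chw->ohw", weight, x)


def nonlocal_attention(x, w_q, w_k, w_v):
    # x: (C, H, W); w_q, w_k: (C', C); w_v: (C_out, C). Shapes are assumptions.
    _, h, w = x.shape
    n = h * w
    q = conv1x1(x, w_q).reshape(-1, n).T   # (N, C')
    k = conv1x1(x, w_k).reshape(-1, n)     # (C', N)
    v = conv1x1(x, w_v).reshape(-1, n)     # (C_out, N)
    energy = q @ k                         # (N, N) pairwise similarities
    attention = softmax(energy, axis=-1)   # each row sums to 1
    out = v @ attention.T                  # (C_out, N) attention-weighted values
    return out.reshape(-1, h, w), attention


# Usage with random weights (illustrative values only).
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 3, 5))         # C=4, H=3, W=5
w_q = rng.standard_normal((2, 4))
w_k = rng.standard_normal((2, 4))
w_v = rng.standard_normal((4, 4))
out, attention = nonlocal_attention(x, w_q, w_k, w_v)
print(out.shape)        # (4, 3, 5)
print(attention.shape)  # (15, 15)
```

Because the attention matrix has one row and one column per spatial position, its memory cost grows quadratically with H × W, which is worth keeping in mind for 256 × 256 inputs.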

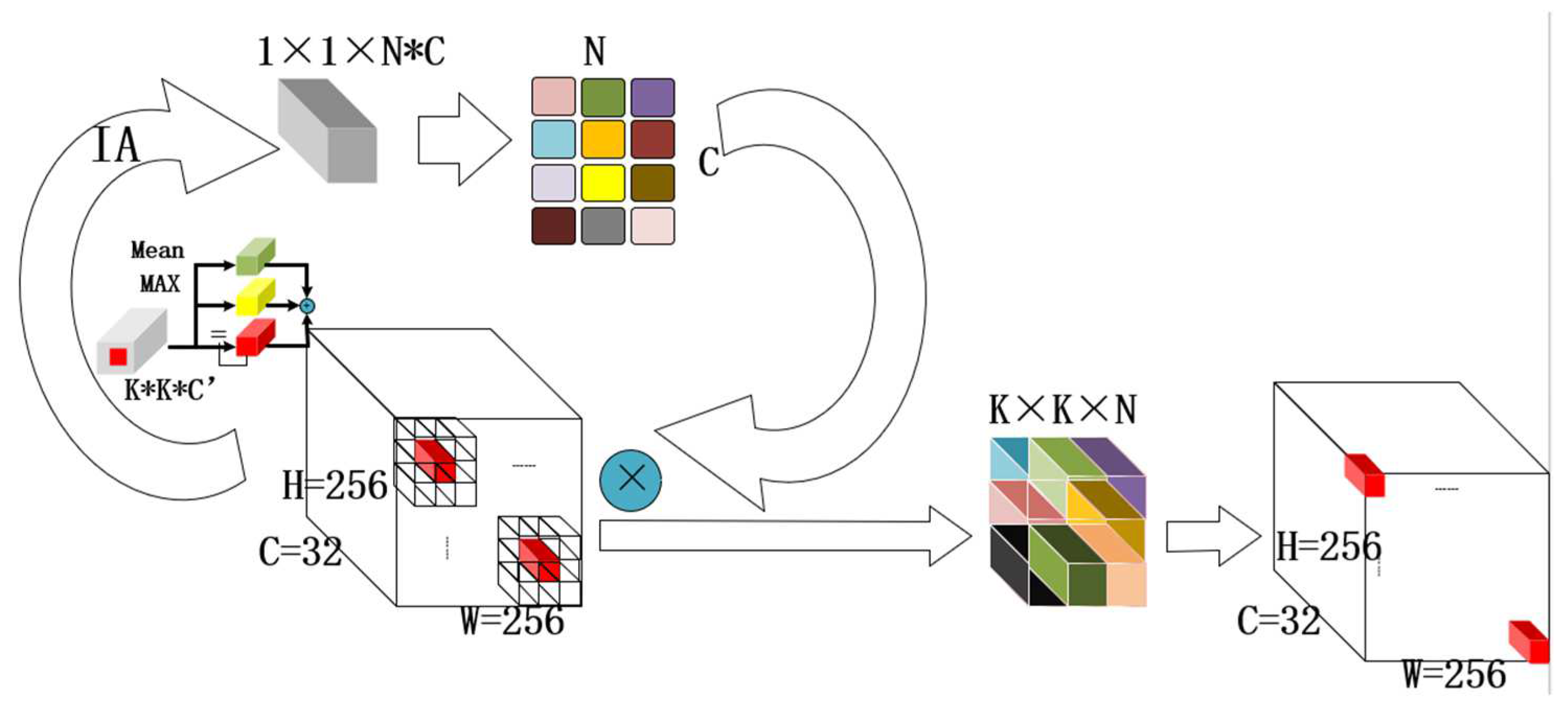

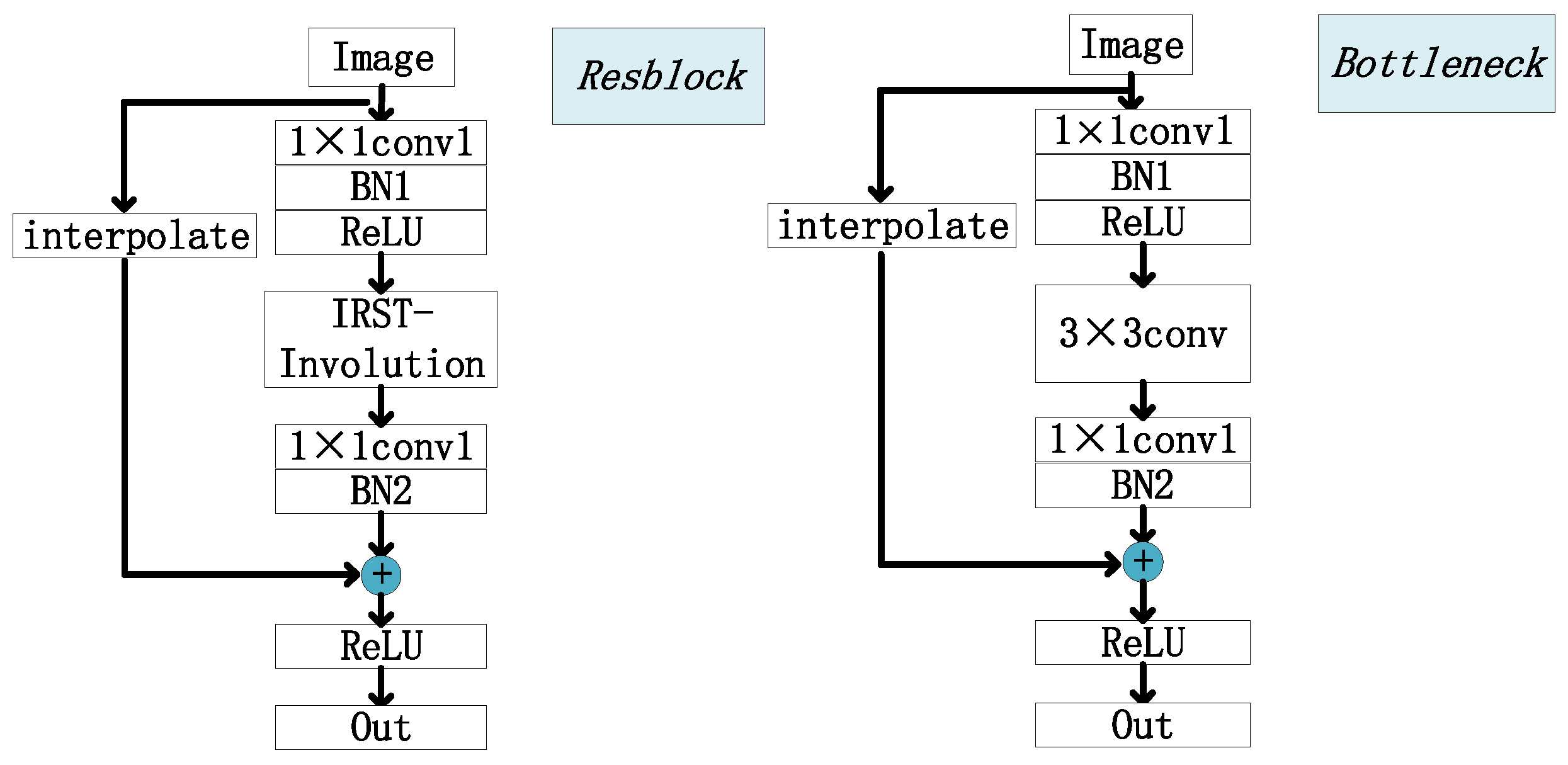

2.2. Depth Feature-Extraction Modules (DFEMs)

2.2.1. Description of IRST-Involution

2.2.2. Depth Feature-Extraction Module

2.2.3. Establishment of Backbone Network

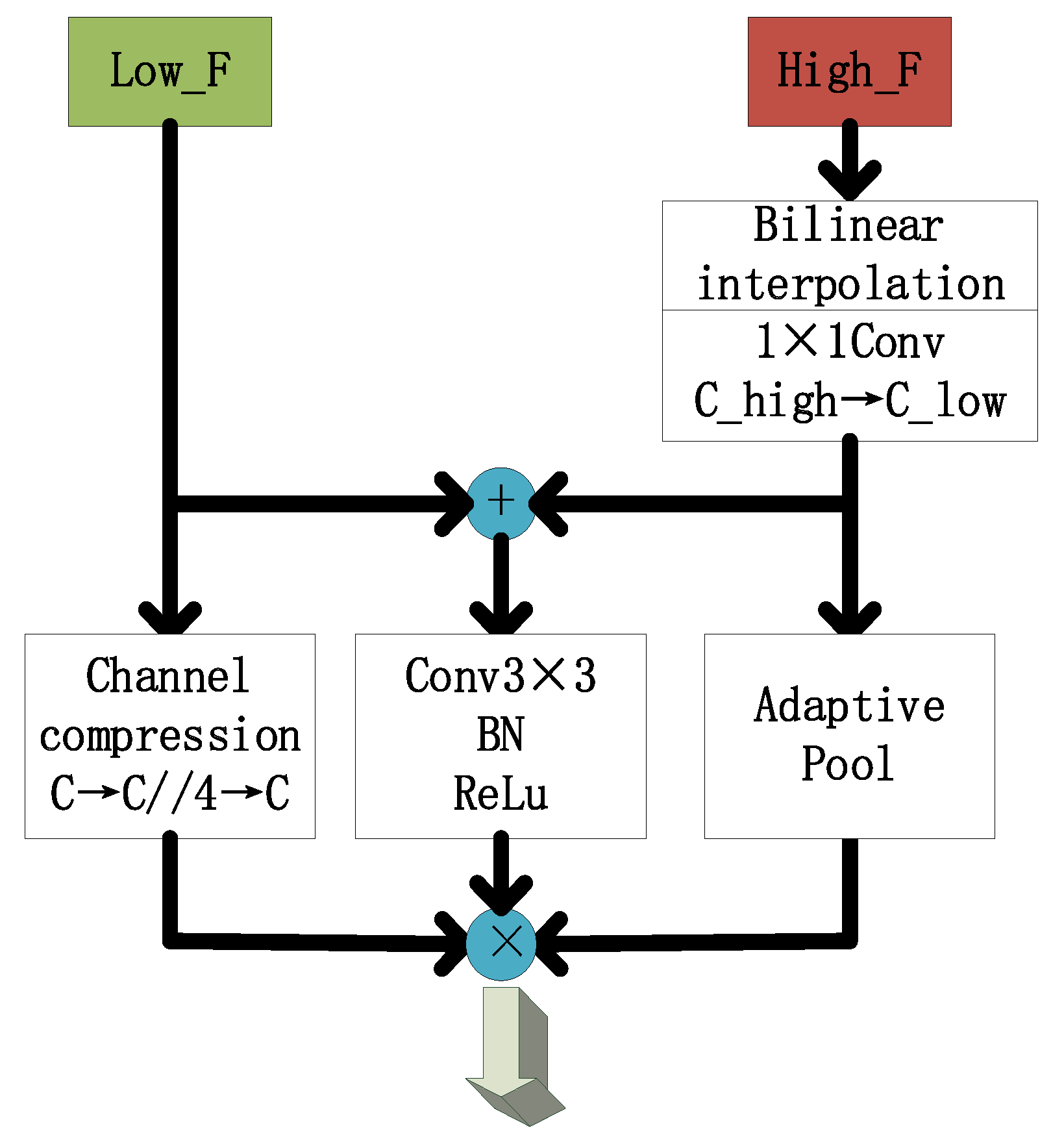

2.3. Feature Fusion

3. Experimental Results

3.1. Dataset Description

3.2. Experimental Configuration

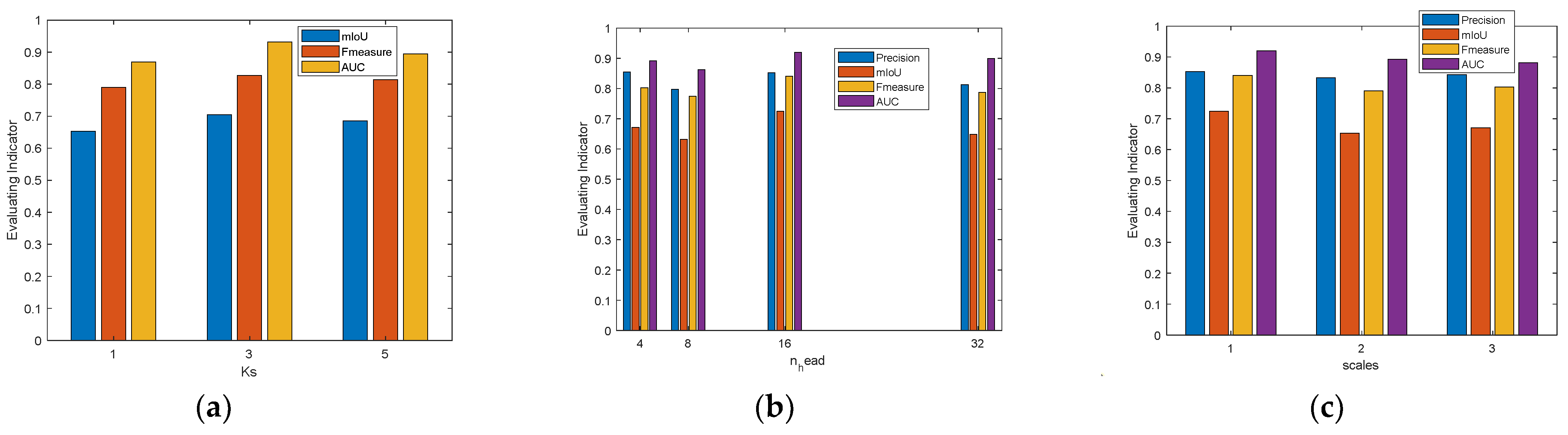

3.3. Parameter Settings

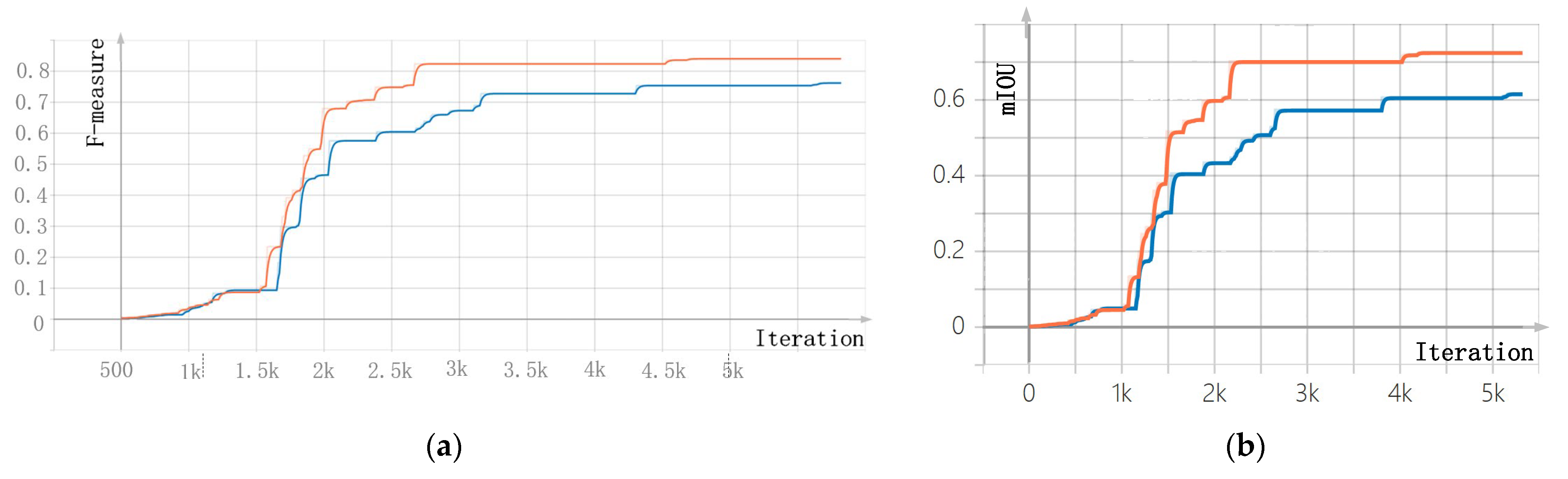

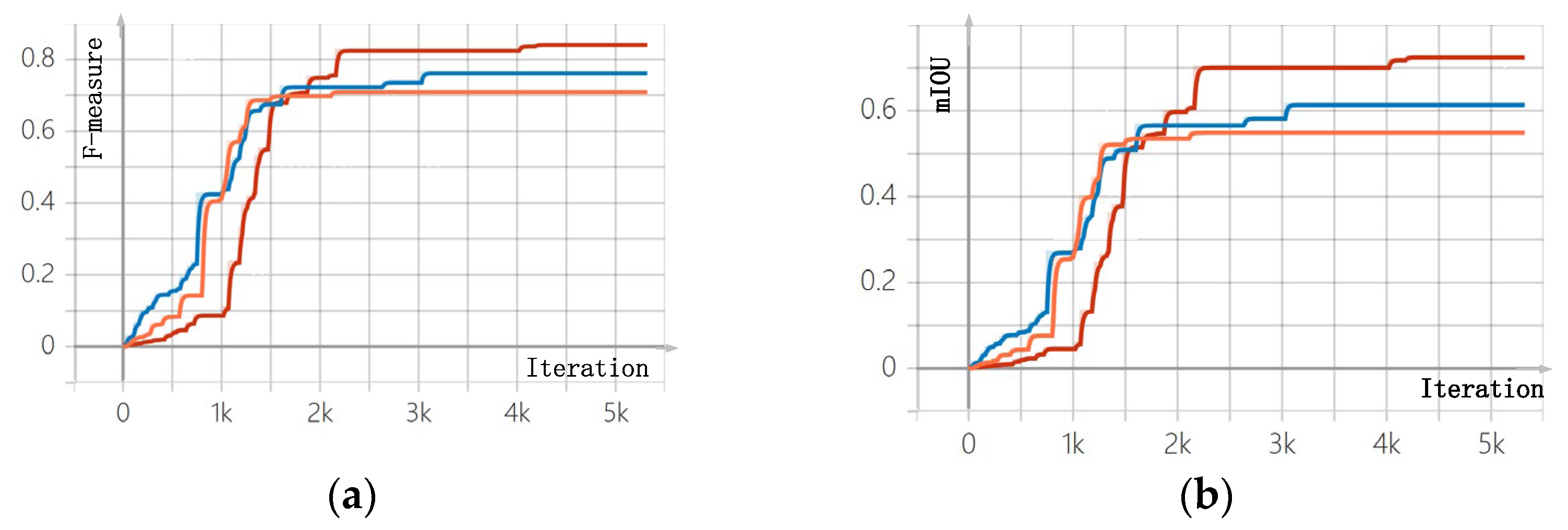

3.4. Ablation Experiment

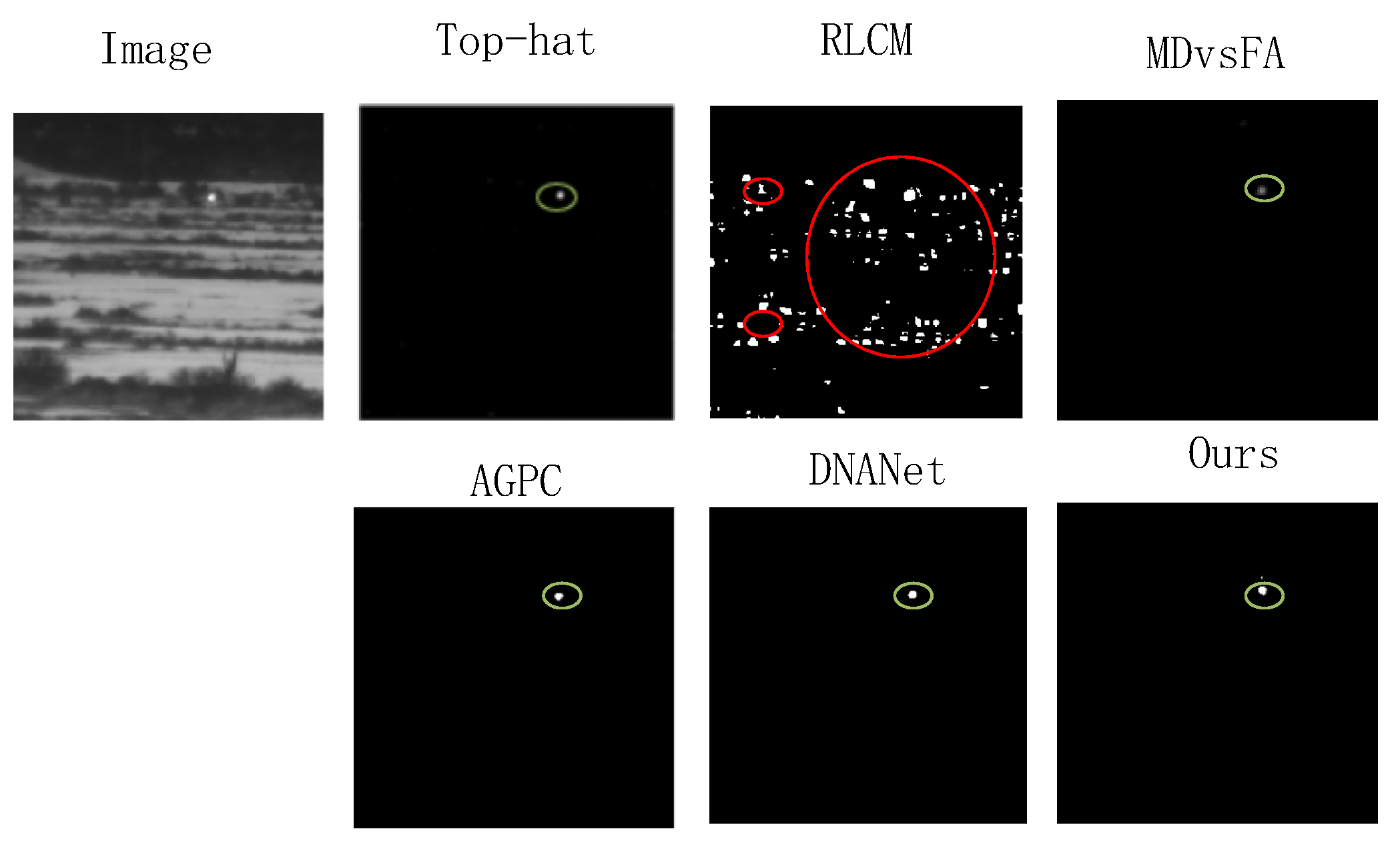

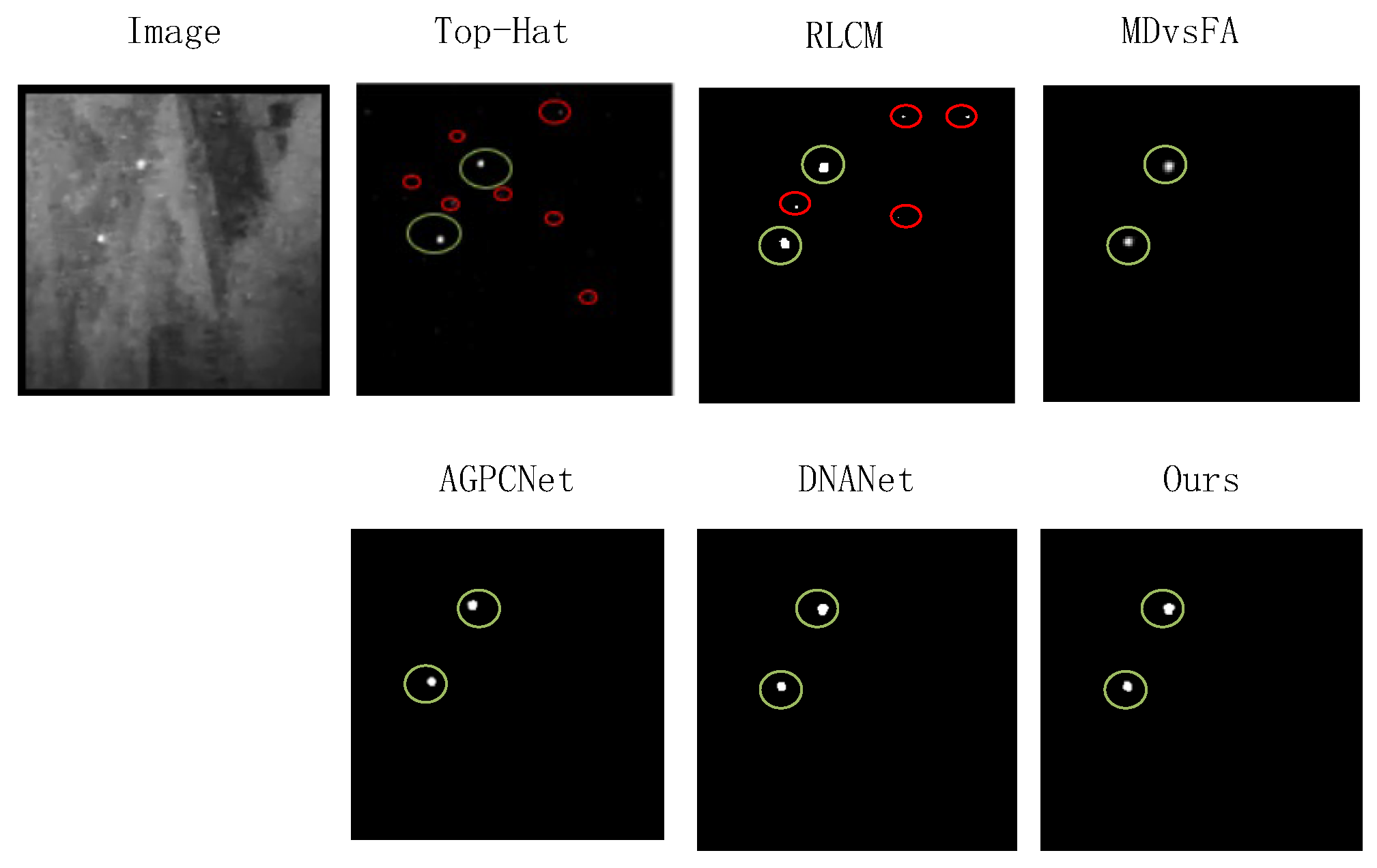

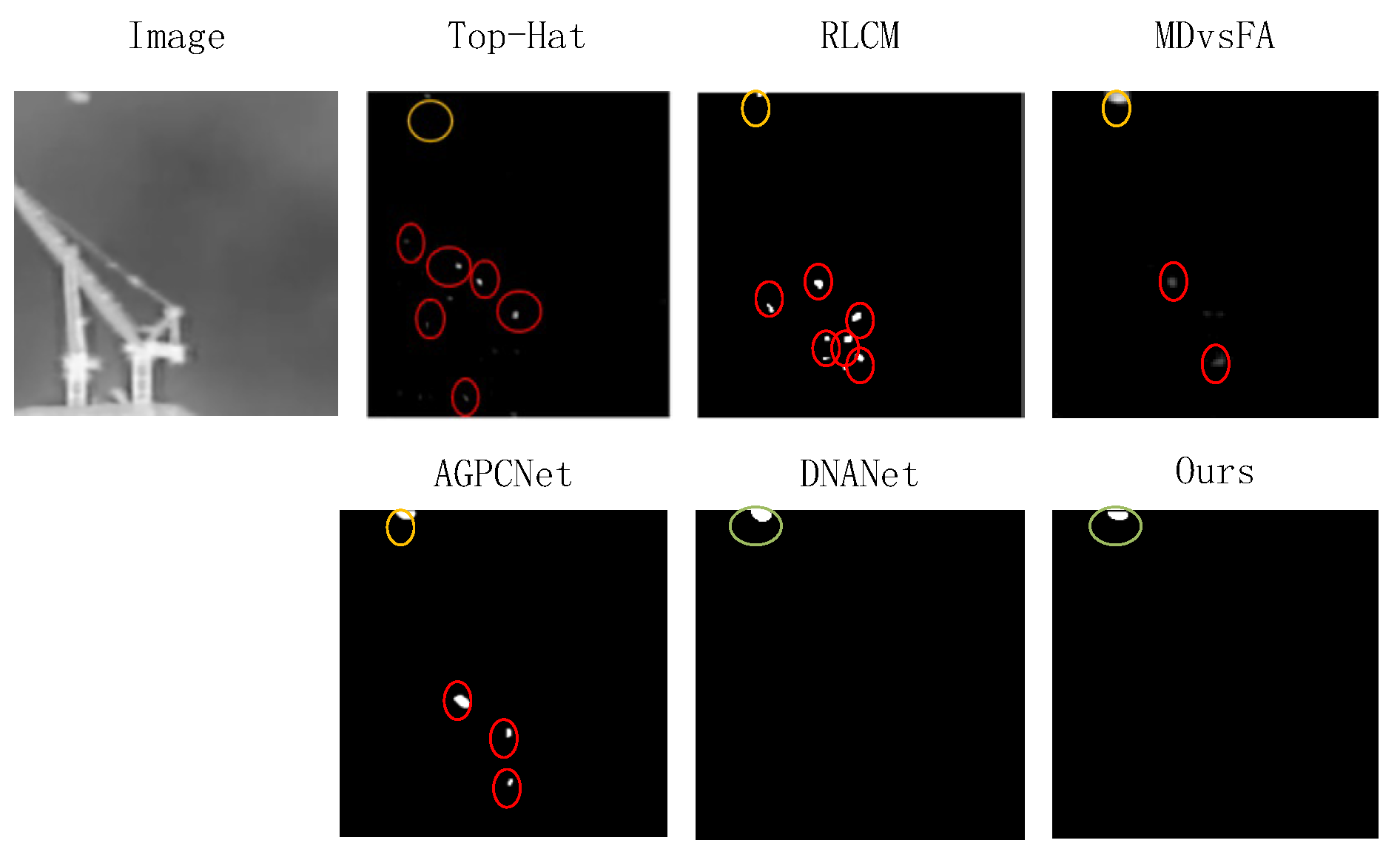

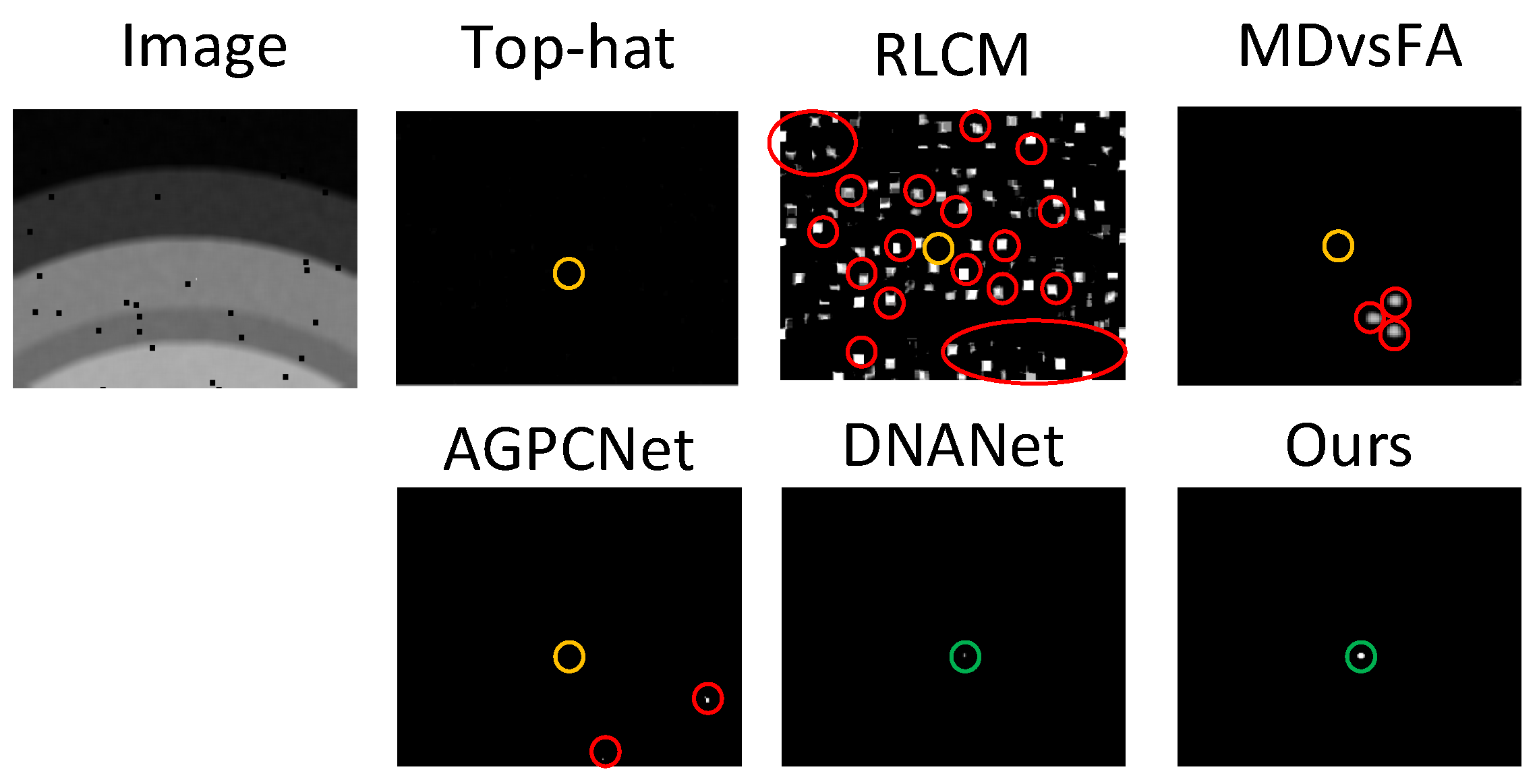

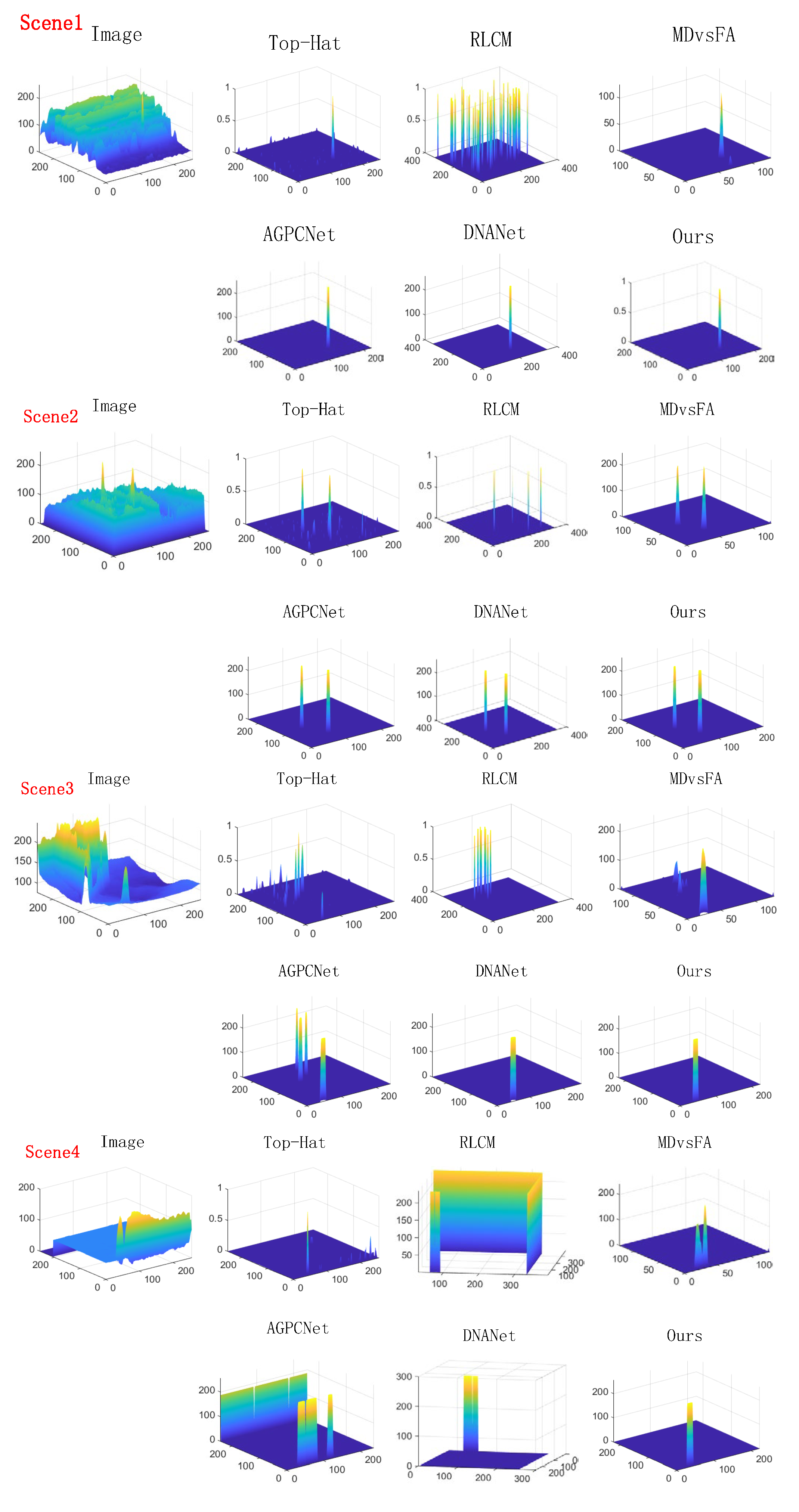

3.5. Comparison with State-of-the-Art Methods

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Layers | Input Channel/Output Channel | Input Size/Output Size |
|---|---|---|
| Translayer | 8/16 | 256 × 256/256 × 256 |
| DFEM1 | 16/64 | 256 × 256/256 × 256 |
| DFEM2 | 64/128 | 256 × 256/128 × 128 |
| DFEM3 | 128/256 | 128 × 128/64 × 64 |
| DFEM4 | 256/512 | 64 × 64/32 × 32 |
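The layer table above can be written down as a small configuration and checked for consistency. The sketch below is only an illustration: the `Stage` dataclass and the helper names are hypothetical, and the numbers are copied from the table rather than from any released code. It verifies that each stage's output channels and spatial size match the next stage's input, and reports the spatial downsampling factor per stage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    in_ch: int
    out_ch: int
    in_size: int    # square feature maps, so one side length is enough
    out_size: int


# Values transcribed from the layer table.
STAGES = [
    Stage("Translayer", 8, 16, 256, 256),
    Stage("DFEM1", 16, 64, 256, 256),
    Stage("DFEM2", 64, 128, 256, 128),
    Stage("DFEM3", 128, 256, 128, 64),
    Stage("DFEM4", 256, 512, 64, 32),
]


def chain_is_consistent(stages):
    # Each stage must consume exactly what the previous stage produces.
    return all(
        prev.out_ch == nxt.in_ch and prev.out_size == nxt.in_size
        for prev, nxt in zip(stages, stages[1:])
    )


def downsample_factors(stages):
    # Ratio of input to output side length for every stage.
    return [s.in_size // s.out_size for s in stages]


print(chain_is_consistent(STAGES))
print(downsample_factors(STAGES))
```

Under this reading of the table, the first two stages keep the full 256 × 256 resolution and only the last three halve it.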

| Convolution Depth | Precision | Recall | mIoU | F-Measure | AUC |
|---|---|---|---|---|---|
| 3 | 0.7458 | 0.7765 | 0.6140 | 0.7608 | 0.9024 |
| 9 | 0.8517 | 0.8283 | 0.7239 | 0.8399 | 0.9193 |
| 13 | 0.8058 | 0.7581 | 0.6410 | 0.7812 | 0.8837 |
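The F-Measure column in the convolution-depth table above can be cross-checked against the Precision and Recall columns. The sketch below assumes the standard F1 definition (the harmonic mean of precision and recall); that definition is an assumption here, and the function and variable names are hypothetical. The precision, recall, and reported values are copied from the table.

```python
def f_measure(precision, recall):
    # Harmonic mean of precision and recall (standard F1, assumed here).
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# depth -> (precision, recall, reported F-measure), transcribed from the table.
ROWS = {
    3: (0.7458, 0.7765, 0.7608),
    9: (0.8517, 0.8283, 0.8399),
    13: (0.8058, 0.7581, 0.7812),
}

for depth, (p, r, reported) in ROWS.items():
    computed = f_measure(p, r)
    print(f"depth={depth}: computed={computed:.4f}, reported={reported:.4f}")
```

For these three rows the recomputed values agree with the reported ones to within rounding of the last digit.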

| Methods | Precision | Recall | mIoU | F-Measure | AUC |
|---|---|---|---|---|---|
| Top-Hat | 0.2568 | 0.5213 | 0.2875 | 0.3441 | 0.8341 |
| RLCM | 0.4513 | 0.6021 | 0.2165 | 0.5159 | 0.8797 |
| MDvsFA | 0.6750 | 0.7266 | - | 0.6999 | - |
| AGPCNet | 0.6881 | 0.9250 | 0.6518 | 0.7892 | 0.9689 |
| DNANet | 0.8174 | 0.7692 | 0.7046 | 0.7926 | - |
| Ours | 0.6948 | 0.9413 | 0.6660 | 0.7995 | 0.9784 |

| Methods | Precision | Recall | mIoU | F-Measure | AUC |
|---|---|---|---|---|---|
| Top-Hat | 0.5972 | 0.0677 | 0.1688 | 0.1451 | 0.7541 |
| RLCM | 0.8456 | 0.1864 | 0.1652 | 0.1984 | 0.8010 |
| MDvsFA | 0.6408 | 0.7982 | - | 0.7109 | - |
| AGPCNet | 0.8057 | 0.8041 | 0.6735 | 0.8049 | 0.9160 |
| DNANet | 0.7772 | 0.7084 | 0.6067 | 0.7412 | - |
| Ours | 0.8517 | 0.8283 | 0.7239 | 0.8399 | 0.9193 |

| Methods | Precision | Recall | mIoU | F-Measure | AUC |
|---|---|---|---|---|---|
| Top-Hat | 0.0486 | 0.1048 | 0.0265 | 0.0664 | 0.7023 |
| RLCM | 0.6329 | 0.1580 | 0.1452 | 0.2529 | 0.8362 |
| MDvsFA | 0.6585 | 0.5297 | - | 0.5623 | 0.9025 |
| AGPCNet | 0.5601 | 0.7017 | 0.4524 | 0.6299 | 0.8429 |
| DNANet | 0.5210 | 0.6782 | 0.4613 | 0.5893 | - |
| Ours | 0.5593 | 0.7480 | 0.4706 | 0.6400 | 0.8748 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, L.; Zhang, Y.; Xu, Y.; Yuan, R.; Li, S. Residual Depth Feature-Extraction Network for Infrared Small-Target Detection. Electronics 2023, 12, 2568. https://doi.org/10.3390/electronics12122568