An Optimal Network-Aware Scheduling Technique for Distributed Deep Learning in Distributed HPC Platforms

Abstract

1. Introduction

2. Background and Related Works

2.1. Container Orchestration

2.2. Distributed HPC

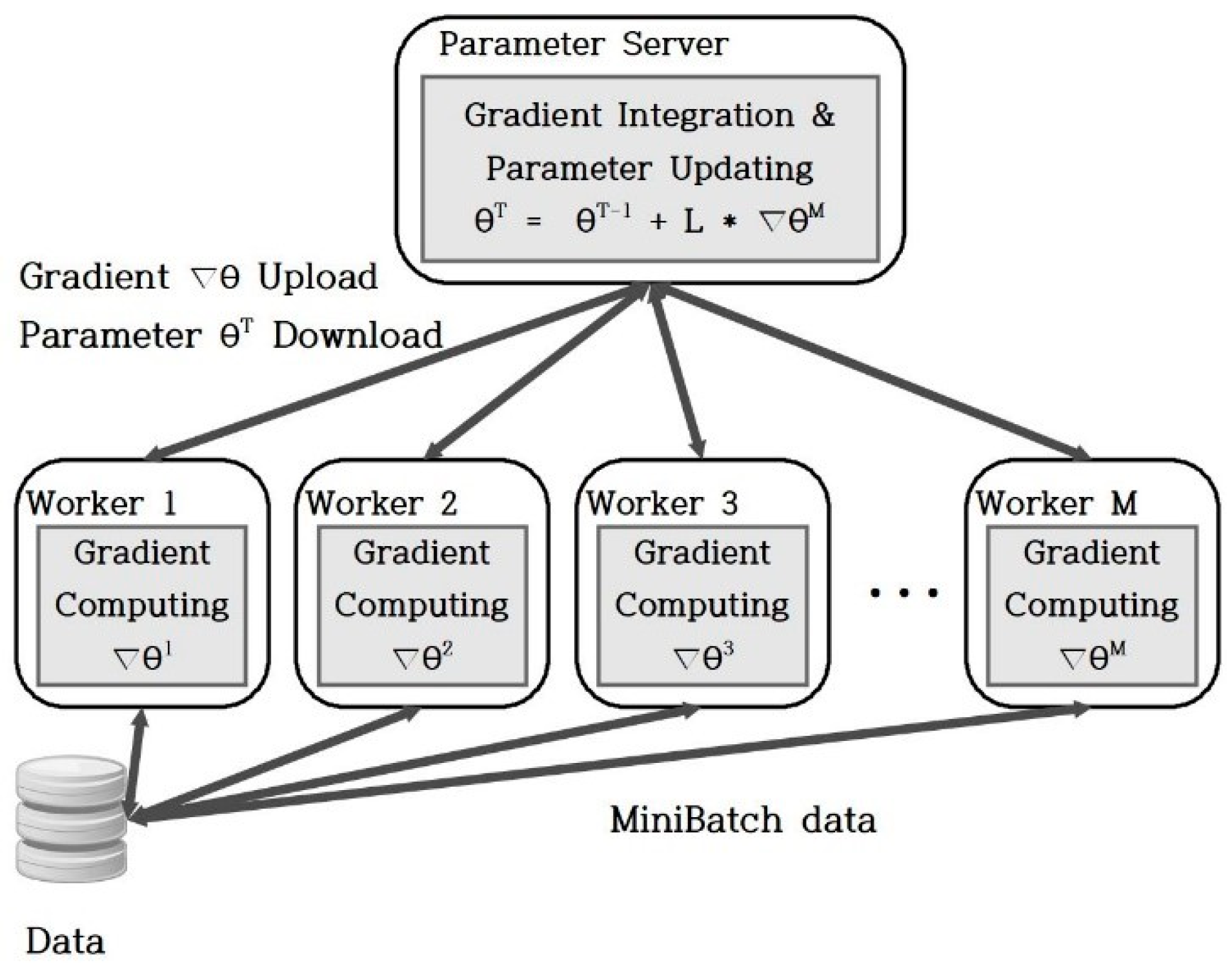

2.3. Distributed Machine/Deep Learning

3. Proposed Solution

3.1. Monitoring Architecture for Distributed HPC Platform

3.2. An Optimal Network-Aware Scheduling Technique

- Determine the requirements of the task in terms of resources such as CPUs, GPUs, memory, etc.

- Filter the zones based on their proximity to the intended users and select the nearest zone.

- Filter the servers within the selected zone based on the required resources and their availability.

- Rank the remaining servers based on the network parameters such as delay, loss, and bandwidth.

- Select the top-ranked servers and assign the task to them.
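The five placement steps above can be sketched as a short filter-and-rank routine. This is a minimal illustration, not the authors' implementation: the `Server` and `Zone` classes, the `distance_km` proximity measure, and the lexicographic ranking key (loss, then delay, then bandwidth) are all assumptions introduced for the example.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    free_cpus: int
    free_gpus: int
    free_mem_gb: int
    delay_ms: float        # measured network delay (lower is better)
    loss_pct: float        # measured packet loss (lower is better)
    bandwidth_gbps: float  # measured bandwidth (higher is better)


@dataclass
class Zone:
    name: str
    distance_km: float     # hypothetical proximity measure to the intended users
    servers: list


def place_task(zones, need, count):
    """Return (zone name, names of the `count` top-ranked servers)."""
    # Step 2: keep only the zone nearest to the intended users.
    zone = min(zones, key=lambda z: z.distance_km)
    # Step 3: keep servers that can satisfy the task's resource requirements.
    fitting = [
        s for s in zone.servers
        if s.free_cpus >= need["cpus"]
        and s.free_gpus >= need["gpus"]
        and s.free_mem_gb >= need["mem_gb"]
    ]
    # Step 4: rank by network parameters (assumed ordering: loss, delay, bandwidth).
    ranked = sorted(fitting, key=lambda s: (s.loss_pct, s.delay_ms, -s.bandwidth_gbps))
    # Step 5: assign the task to the top-ranked servers.
    return zone.name, [s.name for s in ranked[:count]]


# Step 1: the task's requirements (illustrative values).
need = {"cpus": 16, "gpus": 2, "mem_gb": 128}
zones = [
    Zone("far", 900.0, [Server("f1", 64, 8, 512, 1.0, 0.0, 100.0)]),
    Zone("near", 10.0, [
        Server("n1", 32, 4, 256, 5.0, 0.1, 10.0),
        Server("n2", 32, 4, 256, 2.0, 0.0, 40.0),
        Server("n3", 8, 0, 64, 1.0, 0.0, 100.0),   # filtered out: no free GPUs
        Server("n4", 32, 2, 128, 3.0, 0.0, 40.0),
    ]),
]
print(place_task(zones, need, 2))  # ('near', ['n2', 'n4'])
```

A weighted score per server, as in the node-selection algorithm described later in this section, could replace the lexicographic key without changing the overall flow.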

- The algorithm receives the node list of the zone and the required number of nodes as input values.

- Network performance does not need to be checked between a node and itself, so the first node in the node list is added to the exclusion list.

- Check the network performance between all nodes in the zone. Each pair is measured in both directions, and the worse of the two values is used: the higher value for loss and delay, and the lower value for bandwidth. Scores are then obtained using the formula mentioned above.

- The scores are summed into a total score for each node. If a total from a previous round exists, it is added to the new total and the result is stored.

- The stored total then becomes the previous total score for the next round.

- The node with the highest total score is selected, and that node is added to the exclusion node list.

- The remaining nodes are selected by repeating steps 4–6 (summing the scores, storing the total, and selecting the highest-scoring node) until the required number of nodes has been chosen.
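The scoring formula these steps refer to is not reproduced in this section. As a reading aid, the branch conditions in the pseudocode of this section can be condensed into the closed form below. Here v and v′ are the two directional measurements for a node pair and w is the weight of each parameter. The pseudocode does not say which of α and β is loss and which is delay; γ is taken to be bandwidth because it is the only parameter for which the lower value is used. The constants 20, 40, and 40 are copied from the pseudocode.

```latex
\begin{aligned}
t_\alpha &= \max\bigl(0,\; 20 - \max(v_\alpha, v'_\alpha)\, w_\alpha\bigr),\\
t_\beta  &= \max\bigl(0,\; 40 - \max(v_\beta, v'_\beta)\, w_\beta\bigr),\\
t_\gamma &= 40 \,\min(v_\gamma, v'_\gamma)\, w_\gamma,\\
\mathrm{total}_i &= \mathrm{pretotal}_i + t_\alpha + t_\beta + t_\gamma .
\end{aligned}
```

For a node with no previous total, pretotal is taken as zero, which matches the `else` branch of the pseudocode.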

Pseudo code: Scheduling for Selecting Nodes

    scheduleSelectNode(NodeList[], NeedNodeNumber)
        # Get available NodeList[] and NeedNodeNumber
        for j in range NeedNodeNumber
            for i in range nodeinzone
                if NodeList[i] not in ExclusionList
                    if vα ≥ v′α and 20 − vα * wα > 0
                        tα = 20 − vα * wα
                    else if vα < v′α and 20 − v′α * wα > 0
                        tα = 20 − v′α * wα
                    else
                        tα = 0
                    if vβ ≥ v′β and 40 − vβ * wβ > 0
                        tβ = 40 − vβ * wβ
                    else if vβ < v′β and 40 − v′β * wβ > 0
                        tβ = 40 − v′β * wβ
                    else
                        tβ = 0
                    if vγ ≥ v′γ
                        tγ = 40 * v′γ * wγ
                    else
                        tγ = 40 * vγ * wγ
                    if NodeList[i] in pretotal[]
                        total[i] = pretotal[i] + tα + tβ + tγ
                    else
                        total[i] = tα + tβ + tγ
            pretotal[] = total[]
            Node = max(total[])
            # Selected nodes are excluded from scoring for the next selection
            SelectList append Node
            ExclusionList append Node
        return SelectList[]
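A runnable Python sketch of this pseudocode may make the greedy selection easier to follow. It is one possible reading, under stated assumptions: the pseudocode does not say which node each candidate is measured against, so the sketch scores candidates against the most recently excluded node, starting with the first node in the list. The `metrics` dictionary layout, the `weights` tuple, and all sample values are hypothetical.

```python
from typing import Dict, List, Tuple

# One direction of measurement: (v_alpha, v_beta, v_gamma).
# alpha and beta are the penalty metrics (loss and delay, order unspecified in
# the pseudocode); gamma is assumed to be bandwidth.
Metrics = Tuple[float, float, float]


def pair_score(fwd: Metrics, rev: Metrics, w: Metrics) -> float:
    """Score one node pair from measurements taken in both directions."""
    wa, wb, wg = w
    # Penalty metrics: use the worse (higher) direction and floor the score at 0.
    t_alpha = max(0.0, 20 - max(fwd[0], rev[0]) * wa)
    t_beta = max(0.0, 40 - max(fwd[1], rev[1]) * wb)
    # Bandwidth: use the worse (lower) direction.
    t_gamma = 40 * min(fwd[2], rev[2]) * wg
    return t_alpha + t_beta + t_gamma


def schedule_select_nodes(
    node_list: List[str],
    need: int,
    metrics: Dict[Tuple[str, str], Metrics],
    weights: Metrics,
) -> List[str]:
    """Greedily pick `need` nodes with the highest accumulated pair scores."""
    if need > len(node_list) - 1:
        raise ValueError("not enough candidate nodes")
    reference = node_list[0]        # assumption: first node seeds the exclusion list
    excluded = {reference}
    totals: Dict[str, float] = {}   # plays the role of pretotal[] / total[]
    selected: List[str] = []
    for _ in range(need):
        candidates = [n for n in node_list if n not in excluded]
        for node in candidates:
            score = pair_score(metrics[(reference, node)],
                               metrics[(node, reference)], weights)
            totals[node] = totals.get(node, 0.0) + score
        best = max(candidates, key=totals.__getitem__)
        selected.append(best)
        excluded.add(best)          # excluded from scoring in later rounds
        reference = best
    return selected


def both_ways(a, b, fwd, rev=None):
    """Helper for the example: store measurements for a->b and b->a."""
    return {(a, b): fwd, (b, a): rev if rev is not None else fwd}


metrics = {}
metrics.update(both_ways("A", "B", (0, 10, 1.0), (0, 12, 0.9)))
metrics.update(both_ways("A", "C", (5, 30, 0.5)))
metrics.update(both_ways("A", "D", (0, 5, 1.0), (1, 5, 1.0)))
metrics.update(both_ways("D", "B", (0, 20, 0.5)))
metrics.update(both_ways("D", "C", (0, 0, 1.0)))

print(schedule_select_nodes(["A", "B", "C", "D"], 2, metrics, (1, 1, 1)))  # ['D', 'C']
```

In the example, D wins the first round with a score of 94. In the second round C overtakes B (145 against 144) because its link to D is better, which shows how the accumulated total favors nodes that are well connected to every node chosen so far. As in the pseudocode, the seed node is only placed in the exclusion list and is not part of the returned selection.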

4. Experiments and Results

4.1. Experimental Testbed

4.2. Test Case I: Nodes Selection Using Scheduler

4.3. Test Case II: Low Bandwidth Scenario

4.4. Test Case III: Higher Loss Scenario

4.5. Test Case IV: Higher Delay Scenario

4.6. Performance Improvement

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, S.; Raza Shah, S.A.; Seok, W.; Moon, J.; Kim, K.; Raza Shah, S.H. An Optimal Network-Aware Scheduling Technique for Distributed Deep Learning in Distributed HPC Platforms. Electronics 2023, 12, 3021. https://doi.org/10.3390/electronics12143021