1. Introduction

Malware, short for malicious software, is software deliberately created to harm or damage computer systems, typically by attacking, penetrating, or gaining unauthorized access to sensitive digital assets, with unwanted consequences for the affected system [1]. In 2020, an average of 360,000 new malware files were discovered every day, a 5.2% increase over the previous year [2]. The proliferation of malware has been facilitated by the availability of sophisticated, automated malware creation toolkits, such as Zeus and SpyEye, as well as by denial-of-service (DoS) attacks [3]. Emerging blended attacks, which combine multiple attack types for greater impact, pose even more significant threats. Hacking, spoofing, phishing, and spyware incidents are surging, with a noticeable rise in deceptive phishing attacks. The vulnerable Internet of Things (IoT) is exposed to cyberattacks and viruses that lead to data breaches and manipulation, with profound effects on society. Together, these trends highlight the severe threat that malware and cyberattacks pose to our interconnected digital world [4,5,6].

Efficient cybersecurity is vital to safeguarding IoT users from malware threats on connected devices and smart appliances. Malware detection techniques have evolved from labor-intensive manual labeling to advanced hybrid systems [7]. The application of association rule mining and various other techniques in anti-malware software has, in turn, driven the creation of new malware. Malware classification relies on dynamic analysis, which observes malware behavior at runtime, and static analysis, which examines the properties of malware binaries without executing them [8]. Dynamic analysis is widely used, but it is time-consuming and exposes the analysis environment to potential damage from the running malware. New methods [9,10,11,12,13] have been developed to overcome these limitations. Traditional malware detection techniques rely on feature engineering and expert knowledge, and they struggle to keep pace with rapid malware development. Signature-based methods are also becoming insufficient against new automatic malware generation techniques, so new techniques are required to enhance detection capabilities [14,15].

Machine learning models have recently gained popularity in many fields, and the latest platforms categorize malware using image processing methods combined with deep learning or machine learning techniques. The most popular approach to identifying malware from its features is to combine machine learning algorithms and artificial neural networks (ANNs) into more complex designs, such as ensemble learning [8,9,10,11,12,13,14,15]. Several studies have used neural networks (NNs) and support vector machines (SVMs) as popular choices for malware classification and adversarial attacks [16]. Furthermore, nature-inspired and metaheuristic optimization techniques, such as the genetic algorithm (GA), can be used for feature selection and classifier hyperparameter tuning, and they have been applied successfully to malware classification [17,18]. Deep learning and image processing algorithms allow malware to be classified without intensive feature engineering. Converting malware binaries into images helps identify specific malware types, because samples from the same family tend to share similar image structures. In recent years, researchers have improved malware classification accuracy with more complex neural network architectures, combining convolutional neural networks (CNNs) and recurrent neural networks (RNNs), such as long short-term memory (LSTM), with other machine learning models or in hybrid models [19,20,21]. This study employs advanced data augmentation techniques to enhance the performance of the malware detection model. Traditional signature-based and heuristic approaches do not detect novel, previously unseen malware adequately, and this study investigates whether ML techniques can resolve this issue. Advanced deep learning techniques, combined with transfer learning [22] strategies that do not require in-depth security knowledge, are employed to increase the robustness and accuracy of malware detection.
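As an illustration of the binary-to-image conversion mentioned above, the following Python sketch reads a file's raw bytes as 8-bit grayscale pixels and reshapes them into rows of fixed width. The width table follows the file-size heuristic commonly attributed to Nataraj et al. for the Malimg images. Treat it as an assumption here, along with the helper names `choose_width` and `bytes_to_grayscale`. It is not necessarily the preprocessing used in this study.

```python
from typing import Optional
import math

import numpy as np

# Assumed size-to-width heuristic: (file size upper bound in bytes, image width).
WIDTH_TABLE = [
    (10 * 1024, 32),
    (30 * 1024, 64),
    (60 * 1024, 128),
    (100 * 1024, 256),
    (200 * 1024, 384),
    (500 * 1024, 512),
    (1000 * 1024, 768),
]


def choose_width(n_bytes: int) -> int:
    """Return the image width for a file of n_bytes (1024 for very large files)."""
    for upper_bound, width in WIDTH_TABLE:
        if n_bytes < upper_bound:
            return width
    return 1024


def bytes_to_grayscale(data: bytes, width: Optional[int] = None) -> np.ndarray:
    """Reshape raw bytes into a 2-D uint8 image, one pixel per byte.

    The final row is zero-padded so that the array is rectangular.
    """
    if width is None:
        width = choose_width(len(data))
    pixels = np.frombuffer(data, dtype=np.uint8)
    height = math.ceil(len(pixels) / width)
    image = np.zeros(height * width, dtype=np.uint8)
    image[: len(pixels)] = pixels
    return image.reshape(height, width)


if __name__ == "__main__":
    sample = bytes(range(256)) * 4      # 1 KiB of synthetic "binary" data
    img = bytes_to_grayscale(sample)
    print(img.shape)                    # (32, 32)
```

For a real sample, the bytes would come from the file on disk, for example via `pathlib.Path(...).read_bytes()`. The resulting images have varying heights, so they are usually resized to a fixed input size before being passed to a CNN.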

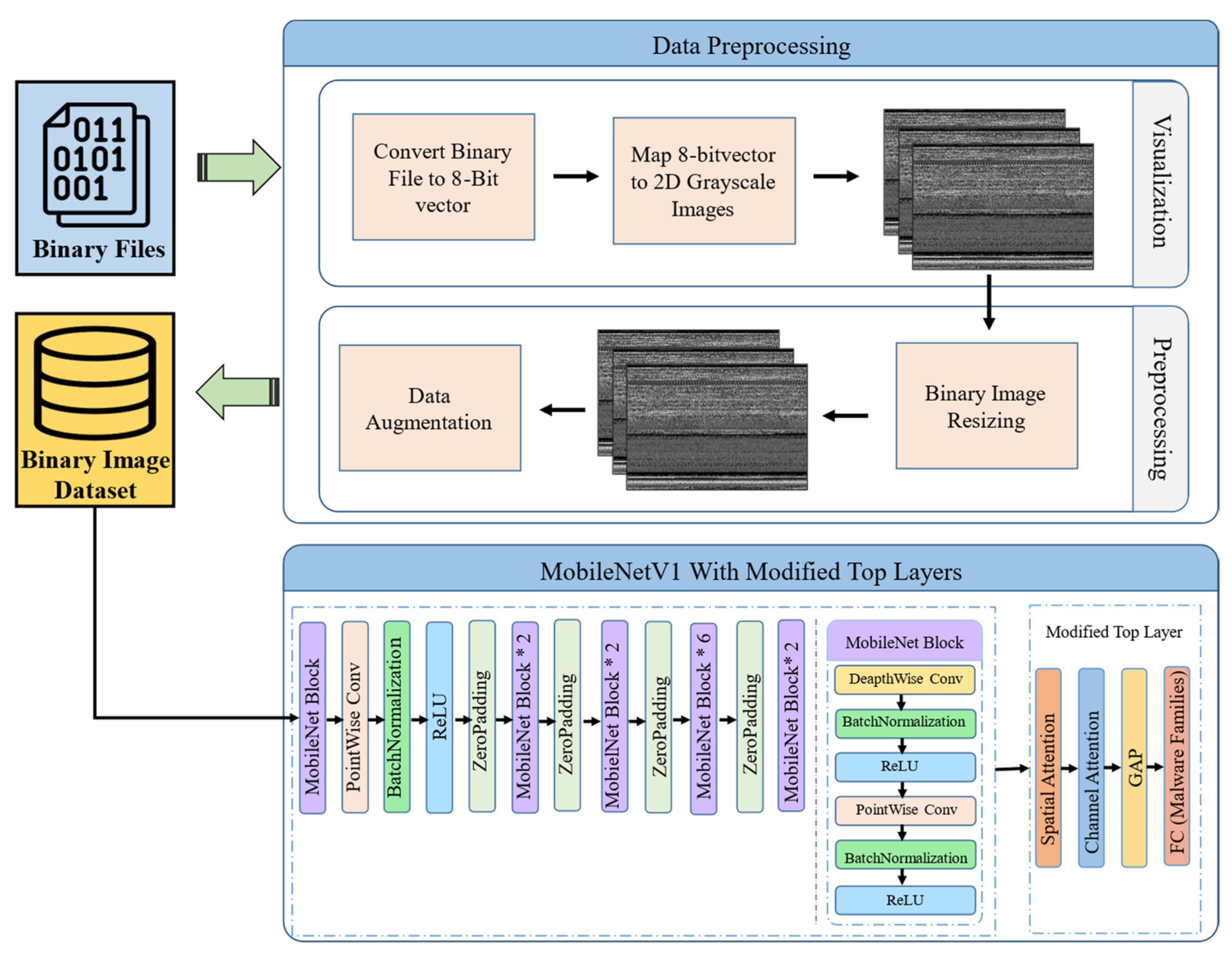

This study aims to develop a state-of-the-art malware recognition solution based on recent deep learning methods. The contributions of this study are outlined below:

Improved Accuracy with Spatial Attention: The proposed model incorporates spatial attention mechanisms to improve malware classification accuracy. By selectively focusing on the most relevant regions of the input, the model extracts more discriminative features and achieves better classification performance.

Novel Combination of Attention Mechanisms: The study introduces a combination of spatial and channel attention within a convolutional neural network, shedding light on the effectiveness of dual-attention mechanisms for malware classification. This approach offers insights into optimizing attention mechanisms for computer vision tasks.

Enhancement of MobileNetv1 Performance: By integrating spatial and channel attention mechanisms, the proposed model enhances the performance of the MobileNetv1 model. This advancement may also help improve the accuracy of computer vision models in applications beyond malware classification.

Practical Application in Malware Detection: The experimental results demonstrate that the proposed model is a practical and reliable method for image-based malware detection. Its high accuracy, comparable to that of more complex solutions, shows its potential for real-world deployment in cybersecurity systems.
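To make the attention mechanisms in the contributions more concrete, the sketch below implements the forward pass of CBAM-style channel and spatial attention (Woo et al., 2018) in NumPy. It is an illustration, not the proposed model. Several details are assumptions: the reduction ratio, the 7 × 7 kernel, the channel-then-spatial ordering, and the random weights standing in for learned parameters. The sketch also does not show where these blocks sit inside MobileNetv1.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """CBAM-style channel attention on a (C, H, W) feature map.

    w1 has shape (C // r, C) and w2 has shape (C, C // r); together they form
    the shared two-layer MLP applied to both pooled descriptors.
    """
    def mlp(v: np.ndarray) -> np.ndarray:
        return w2 @ np.maximum(w1 @ v, 0.0)           # FC -> ReLU -> FC

    avg_desc = feat.mean(axis=(1, 2))                 # (C,) global average pooling
    max_desc = feat.max(axis=(1, 2))                  # (C,) global max pooling
    weights = sigmoid(mlp(avg_desc) + mlp(max_desc))  # (C,) values in (0, 1)
    return feat * weights[:, None, None]              # rescale each channel


def spatial_attention(feat: np.ndarray, kernel: np.ndarray):
    """CBAM-style spatial attention on a (C, H, W) feature map.

    kernel has shape (2, k, k) with odd k; it is applied with 'same' zero
    padding to the stacked channel-wise average and max maps. Returns the
    rescaled features and the (H, W) attention map.
    """
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))
    _, h, w = pooled.shape
    logits = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            # cross-correlation, as in deep learning "convolution" layers
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    attn = sigmoid(logits)                                    # (H, W) in (0, 1)
    return feat * attn[None, :, :], attn


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, H, W, r = 8, 6, 6, 4                # assumed sizes and reduction ratio
    feat = rng.standard_normal((C, H, W))
    refined = channel_attention(feat, rng.standard_normal((C // r, C)),
                                rng.standard_normal((C, C // r)))
    refined, attn = spatial_attention(refined, 0.1 * rng.standard_normal((2, 7, 7)))
    print(refined.shape, attn.shape)       # (8, 6, 6) (6, 6)
```

Channel attention rescales whole feature maps, while spatial attention rescales individual locations. This is how such a model can emphasize informative regions of a malware image.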

The paper is organized as follows. First, related work on malware detection techniques, including feature engineering and attention mechanisms, is discussed; this section reviews existing approaches and highlights the need for novel techniques that address the limitations of traditional methods. Next, the proposed methodology is presented, outlining how spatial attention is integrated with convolutional neural networks for malware classification; the architecture and design choices are explained in detail, emphasizing the advantages of the proposed model. Subsequently, the experimental setup and results are presented, demonstrating the model's performance on the Malimg benchmark dataset. Evaluation metrics such as precision, recall, specificity, and F1 score are used to assess the accuracy and robustness of the model. In addition, an ablation study analyzes the impact of the model's individual components on its performance. Finally, the paper concludes with a summary of its contributions, highlighting the potential of deep learning frameworks and attention mechanisms to strengthen cybersecurity in the face of evolving malware threats.
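The evaluation metrics listed above can all be derived from a multiclass confusion matrix by treating each class one-versus-rest. The sketch below computes per-class precision, recall, specificity, and F1 score. The function name `per_class_metrics` is hypothetical. Averaging each array gives macro-averaged scores, although other averaging schemes (for example, weighting by class support) are equally possible.

```python
import numpy as np


def per_class_metrics(cm) -> dict:
    """Per-class precision, recall, specificity and F1 from a confusion matrix.

    cm[i, j] counts samples whose true class is i and predicted class is j.
    Each metric is a 1-D array with one entry per class (0 where undefined).
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp          # predicted as the class, truly another
    fn = cm.sum(axis=1) - tp          # truly the class, predicted as another
    tn = cm.sum() - tp - fp - fn

    def safe_div(a: np.ndarray, b: np.ndarray) -> np.ndarray:
        return np.divide(a, b, out=np.zeros_like(a), where=b > 0)

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    specificity = safe_div(tn, tn + fp)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {"precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1}


if __name__ == "__main__":
    cm = [[5, 1, 0],
          [2, 3, 0],
          [0, 0, 4]]
    for name, values in per_class_metrics(cm).items():
        print(name, np.round(values, 3), "macro:", round(float(values.mean()), 3))
```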

In a study by Rezende et al. [23], ResNet-50 was used to build a neural network architecture based on transfer learning. Weights were initialized with the Glorot uniform method, and the network input consisted of 224 × 224 RGB images. The model was trained for 750 epochs with the Adam optimizer, reaching a final accuracy of 98.62% under 10-fold cross-validation. With GIST features and the k-nearest neighbors algorithm (kNN) with k = 4, the accuracy was 97.48%; with bottleneck features, it reached 98.0%. Khan et al. [24] performed a thorough analysis of the efficiency of transfer learning for malware classification with ResNet and GoogleNet, covering the data preparation pipeline and the top model. ResNet-18, ResNet-34, ResNet-50, ResNet-101, and ResNet-152 achieved accuracies of 83%, 86.51%, 86.62%, 85.94%, and 87.98%, respectively, whereas GoogleNet achieved 84%. Vasan et al. [25] used an ensemble of fine-tuned VGG16 and ResNet-50 models; the ensemble was fine-tuned for 50 epochs and the CNN model was trained for 100 to 200 epochs, yielding a final accuracy of 99.50%. In addition, in [26], PCA was used to reduce the number of features in the dataset by 90% before they were fed into a one-versus-all multiclass SVM. Yosinski et al. [27] presented a 15-class model developed on a dataset of 7087 samples; they evaluated a variety of feature extraction algorithms, and their best accuracy was 97.47%. Nataraj et al. [25] used machine learning algorithms such as kNN together with feature extraction techniques such as GIST descriptors, achieving an accuracy of 97%. They computed bigram distributions and relied on static feature classification. The key weakness of this method is that an attacker who knows which features are being used can evade detection by implementing countermeasures. Akarsh et al. [28] examined hybrid architectures that integrate LSTMs or CNNs with SVMs, other hybrid SVM architectures, and several deep learning models. In their investigation, the hybrid GRU-SVM and MLP-SVM models outperformed the CNN-SVM model in accuracy, scoring 84.92% and 80.46%, respectively. Akarsh et al. [29] also proposed a hybrid CNN-LSTM model with a novel image processing method. Their architecture consists of a two-layer CNN, an LSTM layer with 70 memory blocks, a fully connected layer with 25 units, and a softmax output layer trained with categorical cross-entropy. The model's accuracies ranged from 96.64% to 96.68% across different data distributions. In a separate study, Akarsh et al. [30] used two layers of 1D CNN and LSTM for feature extraction, with 70 LSTM memory blocks and 0.1% dropout, and adopted a cost-sensitive approach; their best model reached an accuracy of 95.5%. In their study [31], Sudhakar and Kumar improved the ResNet-50 malware classification model by replacing the final layer of the ImageNet-pretrained model with a fully connected dense layer, whose output a softmax layer then used to classify the malware. Ember, an approach proposed by Vinayakumar et al. [32], used domain-specific knowledge, various features extracted from the analyzed PE (portable executable) files, and format-independent features such as a raw byte histogram.
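Two of the static, format-independent representations mentioned above, a raw byte histogram and a byte-bigram distribution, can be sketched in a few lines of NumPy. The normalization choices and function names are assumptions for illustration. They do not reproduce the exact feature extractors of the cited works.

```python
import numpy as np


def byte_histogram(data: bytes) -> np.ndarray:
    """256-bin histogram of byte values, normalised to sum to 1."""
    values = np.frombuffer(data, dtype=np.uint8).astype(np.int64)
    counts = np.bincount(values, minlength=256).astype(float)
    return counts / max(len(values), 1)


def byte_bigram_distribution(data: bytes) -> np.ndarray:
    """256 x 256 distribution of consecutive byte pairs (a, b), summing to 1."""
    values = np.frombuffer(data, dtype=np.uint8).astype(np.int64)
    if len(values) < 2:
        return np.zeros((256, 256))
    pair_ids = values[:-1] * 256 + values[1:]   # encode pair (a, b) as a * 256 + b
    counts = np.bincount(pair_ids, minlength=256 * 256).astype(float)
    return (counts / counts.sum()).reshape(256, 256)   # row a, column b


if __name__ == "__main__":
    data = b"MZ\x90\x00" * 16                   # synthetic bytes standing in for a PE file
    print(byte_histogram(data)[ord("M")])       # 0.25
    print(byte_bigram_distribution(data)[ord("M"), ord("Z")])
```

The flattened histogram or bigram matrix can then serve as a fixed-length feature vector for a classifier such as kNN or an SVM.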

Xiao et al. [33] proposed MalFCS, a malware classification methodology that represents malware binaries as entropy graphs derived from structural entropy. Deep convolutional neural networks were then applied to these entropy graphs to find patterns shared within malware families, and the malware was finally classified with an SVM using the extracted features. Cui et al. [34] proposed using the "Bat Algorithm" for dynamic image resampling to address dataset imbalance; by combining this approach with data augmentation, their convolutional neural network (CNN) model reached 94.5% accuracy. In another study, Cui et al. [35] presented a data-smoothing approach based on the NSGA-II genetic algorithm. Without data smoothing, their approach yielded an accuracy of 92.1%; with a single-objective algorithm, the accuracy increased to 96.1%; and with the multi-objective algorithm, it reached its highest value of 97.1%. Jain et al. [36] presented an ensemble model that coupled extreme learning machines (ELMs) with convolutional neural networks (CNNs). With a single CNN layer, their model achieved 96.30% accuracy, whereas with two CNN layers it achieved 95.7%. Naeem et al. [37] combined a hybrid visualization technique with deep learning for malware detection in the Internet of Things (IoT). By incorporating various image aspect ratios, they built models with accuracies of up to 98.47% and 98.79%. It should be emphasized, however, that this accuracy depended on the images' dynamic features [16]. Venkatraman et al. [38] presented a self-learning system with a hybrid architecture. They combined a CNN with BiLSTM and BiGRU layers to create hybrid models, which they trained using cost-sensitive and cost-insensitive techniques; across various parameters and settings, these models achieved accuracies between 94.48% and 96.3%. Wu et al. [39] proposed a CNN-based architecture and compared GIST-based and CNN-based models on input images produced by several transformations: byte-class, gradient, Hilbert, entropy, and hybrid image transform (HIT). Applying GIST to their grayscale images produced an accuracy of 94.27%, but their most powerful model combined the HIT technique with a CNN. El-Shafai et al. [40] presented a method for classifying malware that combined transfer learning with pre-trained CNN models (AlexNet, DenseNet-201, DarkNet-53, ResNet-50, Inception-V3, VGG16, MobileNet-V2, and Places365-GoogleNet); in their experiments, VGG16 demonstrated the best malware recognition performance. Moussas and Andreatos [41] created a two-layer artificial neural network (ANN) that detected malware using both file and image features. The first level of the ANN categorized malware using file features, and the second level categorized the hard-to-distinguish malware families using malware image features. Roseline et al. [42] employed deep learning methods to recognize and categorize malware. Rather than depending on manually created feature descriptors, their method learned discriminative representations from the data themselves. The authors report that their approach outperforms deep neural networks at malware detection, owing to deep stacking and a simple model.
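Two of the image transformations listed for Wu et al. [39] can be sketched as follows: the entropy transform and the Hilbert-curve layout. The window size, step, and helper names (`sliding_entropy`, `hilbert_d2xy`, `hilbert_image`) are assumptions. The Hilbert mapping follows the standard iterative index-to-coordinate algorithm rather than any code from the cited study. Laying values out along a Hilbert curve keeps bytes that are close together in the file close together in the image.

```python
from typing import Tuple

import numpy as np


def sliding_entropy(data: bytes, window: int = 256, step: int = 256) -> np.ndarray:
    """Shannon entropy (bits per byte, 0..8) of successive byte windows."""
    values = np.frombuffer(data, dtype=np.uint8).astype(np.int64)
    out = []
    for start in range(0, len(values) - window + 1, step):
        counts = np.bincount(values[start:start + window], minlength=256)
        p = counts[counts > 0] / window
        out.append(float(-(p * np.log2(p)).sum()))
    return np.array(out)


def hilbert_d2xy(order: int, d: int) -> Tuple[int, int]:
    """Map index d along a Hilbert curve to (x, y) on a 2**order square grid."""
    n = 1 << order
    x = y = 0
    s, t = 1, d
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:                       # rotate/flip the current quadrant
            if rx == 1:
                x, y = s - 1 - x, s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_image(values, order: int) -> np.ndarray:
    """Place a 1-D sequence on a 2-D grid along a Hilbert curve (zero-padded)."""
    n = 1 << order
    img = np.zeros((n, n))
    for d, v in enumerate(values[: n * n]):
        x, y = hilbert_d2xy(order, d)
        img[y, x] = v
    return img


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    data = bytes(4096) + rng.integers(0, 256, 4096, dtype=np.uint8).tobytes()
    entropy = sliding_entropy(data)           # 32 windows of 256 bytes
    img = hilbert_image(entropy, order=3)     # 8 x 8 grid, zero-padded
    print(entropy.shape, img.shape)           # (32,) (8, 8)
```

Low-entropy regions (for example, zero padding) and high-entropy regions (for example, packed or encrypted sections) then appear as distinct blocks in the resulting image.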

Verma et al. [43] proposed an ensemble-learning-based malware classification technique that integrates first-order and second-order statistical texture features, the latter derived from the grey-level co-occurrence matrix (GLCM). On the Malimg dataset, their kernel-based extreme learning machine (ELM) classifier achieved an accuracy of 94.25%. Çayır et al. [44] employed a CapsNet model for malware categorization. CapsNet takes a straightforward approach to architecture engineering instead of relying on sophisticated CNN architectures and domain-specific feature engineering methodologies. Additionally, CapsNet is easy to train from scratch and does not require transfer learning. Wozniak et al. [45] proposed an RNN-LSTM classifier with the NAdam optimizer for Android malware detection. When evaluated on two benchmark datasets, their strategy achieved a high accuracy of 99%.
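The second-order texture features used by Verma et al. [43] are computed from a grey-level co-occurrence matrix. The sketch below builds a normalized GLCM for a single pixel offset and derives a few Haralick-style statistics, alongside simple first-order statistics. The offset, the selected features, and the function names are assumptions, not the cited method's exact configuration.

```python
import numpy as np


def glcm(img: np.ndarray, dx: int = 1, dy: int = 0, levels: int = 256) -> np.ndarray:
    """Normalised grey-level co-occurrence matrix for one offset (dx, dy >= 0).

    P[i, j] is the fraction of pixel pairs in which a pixel with level i has a
    neighbour at offset (dx, dy) with level j.
    """
    img = np.asarray(img, dtype=np.int64)
    h, w = img.shape
    ref = img[: h - dy, : w - dx]          # reference pixels
    nbr = img[dy:, dx:]                    # their neighbours at the offset
    counts = np.zeros((levels, levels))
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1)
    return counts / counts.sum()


def texture_features(p: np.ndarray) -> dict:
    """A few second-order (Haralick-style) statistics of a normalised GLCM."""
    i, j = np.indices(p.shape)
    return {
        "contrast": float((p * (i - j) ** 2).sum()),
        "angular_second_moment": float((p ** 2).sum()),
        "homogeneity": float((p / (1.0 + np.abs(i - j))).sum()),
    }


def first_order_features(img: np.ndarray) -> dict:
    """First-order statistics computed directly from pixel intensities."""
    img = np.asarray(img, dtype=float)
    return {"mean": float(img.mean()), "std": float(img.std())}


if __name__ == "__main__":
    img = np.array([[0, 0, 1],
                    [0, 0, 1],
                    [2, 2, 3]])
    p = glcm(img, dx=1, dy=0, levels=4)    # horizontal neighbour offset
    print(texture_features(p))
    print(first_order_features(img))
```

For 8-bit malware images, the grey levels are often quantized to a smaller number (for example, 8 or 16) before computing the GLCM, which keeps the matrix small and the statistics less sparse. Treat this as a design option, not a detail taken from [43].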

Nisa et al. [46] used segmentation-based fractal texture analysis (SFTA) to extract features from images of malware code and combined them with features from the pre-trained deep neural networks AlexNet and Inception-v3. Different classifiers, including SVM, kNN, and decision trees (DTs), were employed to categorize the features extracted from malware images. A study by Hemalatha et al. [47] used a weighted class-balanced loss function with the DenseNet model. By addressing the issue of imbalanced data, this method significantly increased the classification accuracy for malware images. Awan et al. [2] proposed an attention-based model built on the pre-trained VGG19 deep neural network, in which an attention module extracts significant features from VGG19. This method performed better, achieving a high accuracy of 97.62%. Depuru et al. [48] proposed a neural network model for classifying malicious attacks by evaluating different combinations of malware representation methods and convolutional neural network (CNN) models; the selected model achieved an accuracy of 96%. Yaseen et al. [49] used deep learning models, specifically convolutional neural networks (CNNs), to classify malware families. By transforming malware binaries into grayscale images and classifying them with CNNs, the proposed method achieved an accuracy of 97.4%. Mallik et al. [50] combined convolutional neural networks with BiLSTM layers (convolutional recurrence) on grayscale images, together with data augmentation, achieving an accuracy of 98.36% in malware classification. Gupta et al. [51] used an artificial neural network architecture to classify malware variants, effectively tackling the challenges posed by obfuscation and compression techniques; the experimental results demonstrated an accuracy of 90.80%.
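The exact form of the weighted class-balanced loss used with DenseNet in [47] is not given here. For this sketch, assume one common formulation: each class is weighted by the inverse of its effective number of samples, (1 - beta^n) / (1 - beta), as proposed by Cui et al. (CVPR 2019, unrelated to [34,35]). Those weights are then applied in a cross-entropy loss. The value of beta and the helper names are illustrative.

```python
import numpy as np


def class_balanced_weights(samples_per_class, beta: float = 0.999) -> np.ndarray:
    """Per-class weights inversely proportional to the effective number of samples.

    The effective number for a class with n samples is (1 - beta**n) / (1 - beta);
    the weights are rescaled so that they sum to the number of classes.
    """
    n = np.asarray(samples_per_class, dtype=float)
    effective = (1.0 - np.power(beta, n)) / (1.0 - beta)
    w = 1.0 / effective
    return w * len(n) / w.sum()


def weighted_cross_entropy(logits, labels, class_weights) -> float:
    """Mean cross-entropy, scaling each sample by the weight of its true class."""
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    shifted = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    nll = -log_probs[np.arange(len(labels)), labels]
    return float((np.asarray(class_weights)[labels] * nll).mean())


if __name__ == "__main__":
    counts = [2000, 400, 80]               # hypothetical imbalanced class sizes
    weights = class_balanced_weights(counts)
    logits = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 1.5]])
    print(weights, weighted_cross_entropy(logits, [0, 2], weights))
```

As beta approaches 1, the weights approach inverse class frequency. With beta = 0, all classes are weighted equally, so beta controls how strongly minority malware families are emphasized.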