Pedestrian Detection Method Based on Two-Stage Fusion of Visible Light Image and Thermal Infrared Image

Abstract

1. Introduction

2. Related Work

2.1. Pixel-Level Fusion of Visible Light Images and Thermal Infrared Images

2.2. Feature-Level Fusion of Visible Light Images and Thermal Infrared Images

2.3. Decision-Level Fusion of Visible Light Images and Thermal Infrared Images

3. The Proposed Method

3.1. Stage I: Pixel-Level Fusion of Visible Light Images and Thermal Infrared Images

3.2. Stage II: Combination of Pixel-Level Fusion and Feature-Level Fusion for Pedestrian Detection

3.2.1. Feature-Level Pedestrian Detection Method Based on the Combination of the Pixel-Level Fusion Image and the Visible Light Image

3.2.2. Feature-Level Pedestrian Detection Method Based on the Combination of the Pixel-Level Fusion Image and the Thermal Infrared Image

4. Experimental Results

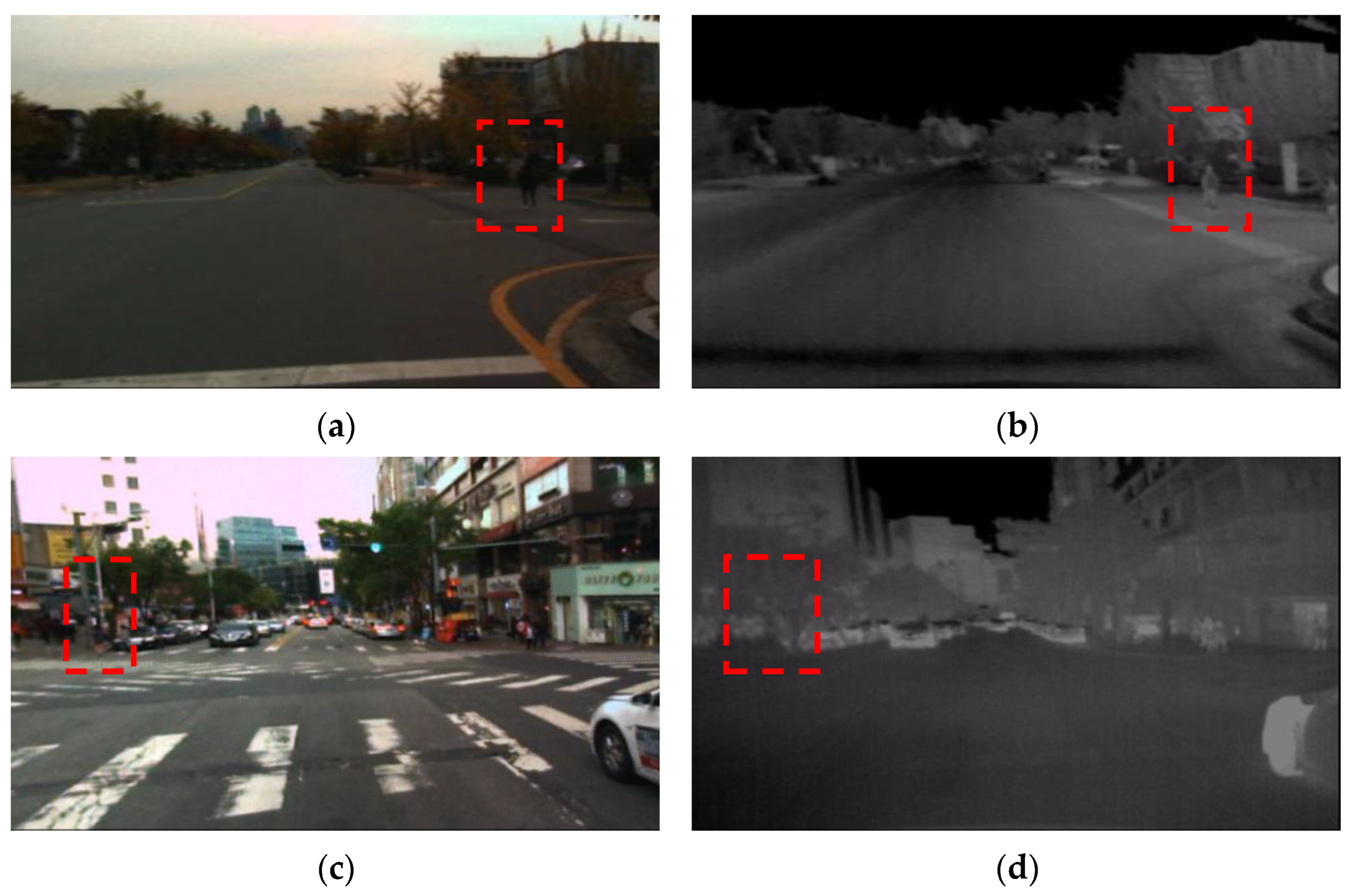

4.1. Introduction of Datasets and Experiments

4.2. Evaluation Metrics

- (1) Evaluation Metrics of the Image Quality

- (2) Evaluation Metrics of the Pedestrian Detection

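- AP@[0.5:0.95] is the AP averaged over IoU thresholds from 0.5 to 0.95 with a step size of 0.05.

- $\mathrm{AP}^{\mathrm{IoU}=0.50}$ is the AP at IoU = 0.50. $\mathrm{AP}^{\mathrm{IoU}=0.75}$ is the AP at IoU = 0.75.

- $\mathrm{AP}^{\mathrm{Small}}$ is the AP for small objects: area < $32^2$ pixels.

- $\mathrm{AP}^{\mathrm{Medium}}$ is the AP for medium objects: $32^2$ < area < $96^2$ pixels.

- $\mathrm{AP}^{\mathrm{Large}}$ is the AP for large objects: area > $96^2$ pixels.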
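The detection metrics listed above can be illustrated with a minimal sketch. It is not the authors' evaluation code. The function names (`box_iou`, `size_bucket`, `ap_over_thresholds`) and the `(x1, y1, x2, y2)` box layout are assumptions made for illustration, and the handling of areas that fall exactly on a boundary is also an assumption.

```python
import numpy as np

# The ten IoU thresholds 0.50, 0.55, ..., 0.95 used by AP@[0.5:0.95].
IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)


def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def size_bucket(area):
    """Assign an object to the small / medium / large group by pixel area.

    Assumption: an area exactly equal to 32**2 counts as medium and an
    area exactly equal to 96**2 counts as large.
    """
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def ap_over_thresholds(ap_per_threshold):
    """AP@[0.5:0.95]: the mean of the AP values computed at each threshold."""
    ap_per_threshold = np.asarray(ap_per_threshold, dtype=np.float64)
    if ap_per_threshold.shape != IOU_THRESHOLDS.shape:
        raise ValueError("expected one AP value per IoU threshold")
    return float(ap_per_threshold.mean())
```

A detection is counted as a true positive at threshold `t` when its IoU with a ground-truth box is at least `t`, so the same detection can be a hit at IoU = 0.50 and a miss at IoU = 0.75.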

4.3. Pedestrian Detection Results Based on the Combination of the Pixel-Level Fusion and the Feature-Level Fusion

- (a) Pedestrian detection results based on the feature-level fusion of the pixel-level fusion image and the visible light image

- (b) Pedestrian detection results based on the feature-level fusion of the pixel-level fusion image and the thermal infrared image

5. Conclusions and Future

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Image | Information Entropy | Average Gradient | Edge Strength |
|---|---|---|---|
| Visible light image | 6.984 | 2.723 | 28.452 |
| Thermal infrared image | 6.645 | 2.028 | 21.317 |
| Pixel-level fusion image of non-brightness-enhanced thermal infrared image and visible light image | 7.198 | 3.611 | 37.648 |
| Pixel-level fusion image of brightness-enhanced thermal infrared image and visible light image | 7.625 | 5.035 | 52.818 |

| Image | Information Entropy | Average Gradient | Edge Strength |
|---|---|---|---|
| Visible light image | 6.692 | 3.556 | 37.292 |
| Thermal infrared image | 5.053 | 0.485 | 5.111 |
| Pixel-level fusion image of non-brightness-enhanced thermal infrared image and visible light image | 6.716 | 3.681 | 38.649 |
| Pixel-level fusion image of brightness-enhanced thermal infrared image and visible light image | 6.979 | 3.940 | 41.368 |
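The image-quality tables above report information entropy and average gradient for each image. The sketch below shows one common way to compute these two quantities for an 8-bit grayscale image. The exact definitions used in the paper are not given in this section, so the formulas here (Shannon entropy of the gray-level histogram, and the mean of sqrt((gx² + gy²)/2) over forward differences) are assumptions, as are the function names. Edge strength is omitted because its definition varies between papers.

```python
import numpy as np


def information_entropy(img, levels=256):
    """Shannon entropy (in bits) of the gray-level histogram.

    Assumes `img` is an integer array with values in [0, levels).
    """
    hist = np.bincount(img.ravel(), minlength=levels).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]  # skip empty bins, since 0 * log(0) is taken as 0
    return float(-(p * np.log2(p)).sum())


def average_gradient(img):
    """Mean local gradient magnitude from forward differences.

    Assumed definition: mean of sqrt((gx^2 + gy^2) / 2) over all pixels
    that have both a right-hand and a lower neighbour.
    """
    f = img.astype(np.float64)
    gx = f[:-1, 1:] - f[:-1, :-1]  # horizontal difference
    gy = f[1:, :-1] - f[:-1, :-1]  # vertical difference
    return float(np.sqrt((gx ** 2 + gy ** 2) / 2.0).mean())
```

Under these definitions, a higher entropy indicates a wider spread of gray levels and a higher average gradient indicates more local detail, which is the direction in which the fused images in the tables improve over the source images.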

| The Ratio of Visible Light Images to Thermal Infrared Images | AP | AP0.5 | AP0.75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| 1:1 | 0.742 | 0.957 | 0.874 | 0.689 | 0.745 | 0.791 |
| 2:1 | 0.742 | 0.957 | 0.872 | 0.687 | 0.744 | 0.794 |
| 3:1 | 0.739 | 0.956 | 0.871 | 0.685 | 0.741 | 0.789 |
| 3:2 | 0.737 | 0.957 | 0.863 | 0.686 | 0.738 | 0.795 |
| 4:1 | 0.739 | 0.957 | 0.871 | 0.686 | 0.740 | 0.794 |
| 5:1 | 0.742 | 0.965 | 0.871 | 0.681 | 0.743 | 0.791 |

| The Ratio of Thermal Infrared Images to Visible Light Images | AP | AP0.5 | AP0.75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| 1:1 | 0.751 | 0.965 | 0.857 | 0.597 | 0.758 | 0.813 |
| 1:2 | 0.751 | 0.965 | 0.858 | 0.598 | 0.760 | 0.802 |
| 1:3 | 0.737 | 0.965 | 0.855 | 0.562 | 0.747 | 0.793 |
| 2:3 | 0.757 | 0.966 | 0.866 | 0.609 | 0.764 | 0.805 |
| 1:4 | 0.749 | 0.965 | 0.857 | 0.577 | 0.758 | 0.803 |
| 1:5 | 0.749 | 0.965 | 0.856 | 0.584 | 0.756 | 0.803 |

| Method | AP | AP0.5 | AP0.75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| Detection of the visible light image | 0.724 | 0.956 | 0.848 | 0.660 | 0.724 | 0.789 |
| Detection of the pixel-level fusion of the visible light image and the thermal infrared image | 0.704 | 0.955 | 0.821 | 0.634 | 0.706 | 0.774 |
| Detection of the feature-level fusion of the visible light image and the thermal infrared image | 0.742 | 0.956 | 0.874 | 0.689 | 0.745 | 0.791 |
| Detection using the proposed method | 0.764 | 0.980 | 0.899 | 0.706 | 0.766 | 0.814 |

| Method | AP | AP0.5 | AP0.75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| Detection of the thermal infrared image | 0.786 | 0.978 | 0.900 | 0.689 | 0.792 | 0.806 |
| Detection of the pixel-level fusion of the visible light image and the thermal infrared image | 0.744 | 0.965 | 0.855 | 0.546 | 0.757 | 0.778 |
| Detection of the feature-level fusion of the visible light image and the thermal infrared image | 0.737 | 0.966 | 0.857 | 0.567 | 0.746 | 0.800 |
| Detection using the proposed method | 0.824 | 0.986 | 0.943 | 0.741 | 0.830 | 0.836 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Zhai, B.; Wang, G.; Lin, J. Pedestrian Detection Method Based on Two-Stage Fusion of Visible Light Image and Thermal Infrared Image. Electronics 2023, 12, 3171. https://doi.org/10.3390/electronics12143171
