Fast Context-Awareness Encoder for LiDAR Point Semantic Segmentation

Abstract

1. Introduction

- (1)

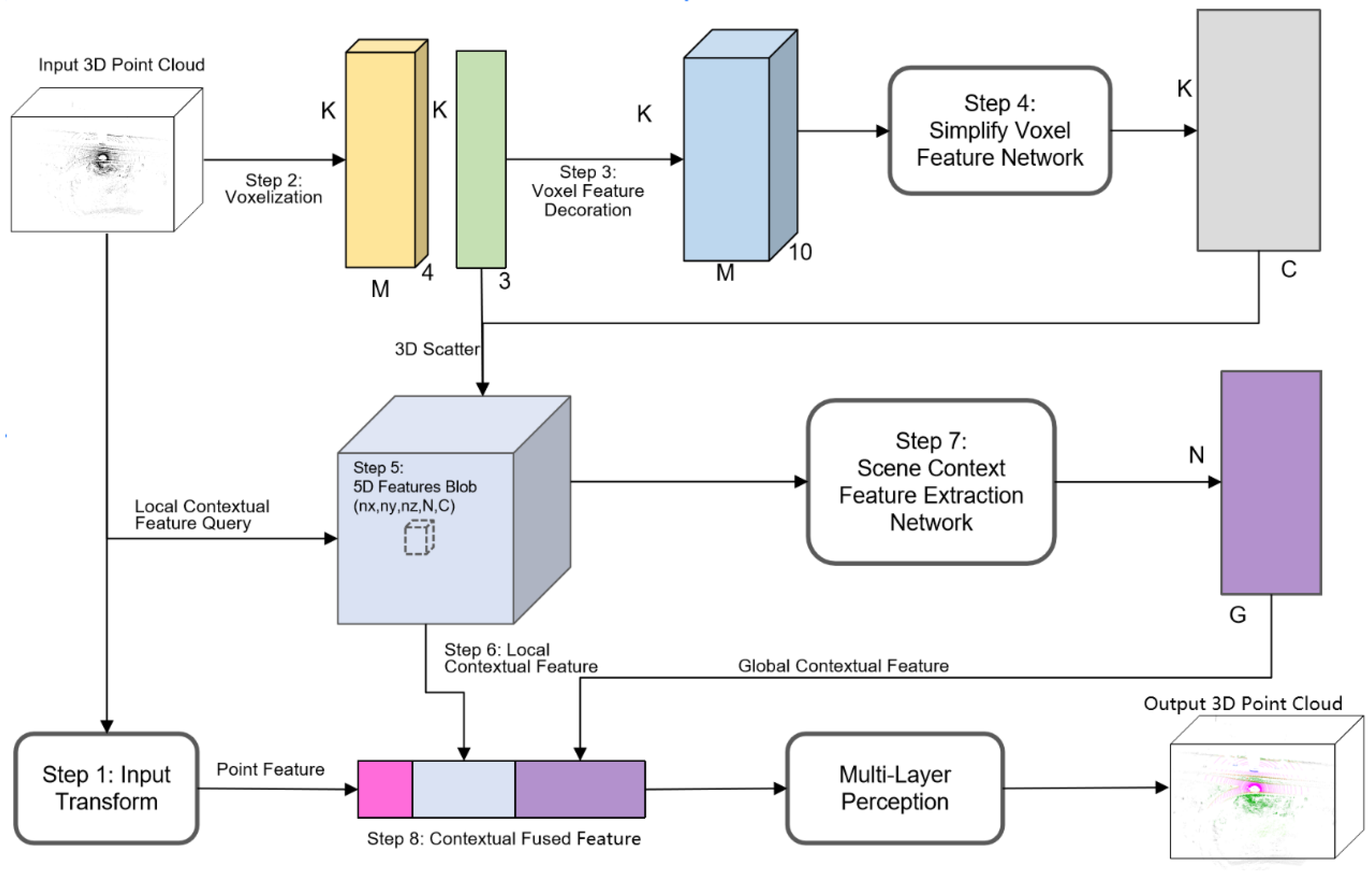

- This paper proposes a novel deep learning-based encoder network. The combination of semantic features involves three aspects: (i) the invariant feature of the point; (ii) the local structural features can be obtained after extracting the position relationship and point characteristics between the scattered points through the context network; and (iii) from the above local features, the network abstracts the initial global feature of these points. By fusing the features of the point cloud at the point level, voxel level, and global level, the network obtains more accurate feature encoding of the point cloud data, which not only retains the fine-grained features of the points but also retains the context and position features. During each feature extraction stage, particularly in the voxel and global feature extraction stages, the network architecture is inspired by feature networks that possess strong expressiveness. The hierarchical design is modified and optimized, and some network layers are simplified in many places to reduce and balance the computational load.

- (2)

- The feature extraction network generates local features by considering the context awareness features of points and their neighbors, while the initial global features are obtained by abstracting the voxel feature and context features at higher levels. By leveraging the dual context relationship of points, the deep neural network can extract and abstract the position relationship and point features between scattered points through both local and global context awareness.

- (3)

- Besides theoretical analysis, this paper proposes a real-time, lightweight CNN network with a mean intersection-over-union (MIoU) score of 68.9% on the proposed semantic KITTI test set in this article. To demonstrate the feasibility and superiority of our method in point cloud segmentation, we conducted a comparison with other models.

2. Related Work

3. Method

3.1. Architecture

| Algorithm 1: Construction steps of the FCAE network | |
|---|---|
| Input: | Points: (N, 4); batch_size: N |
| | Point cloud range: (x_min, y_min, z_min, x_max, y_max, z_max); |
| | Voxel_size: (x_size, y_size, z_size); |
| | Maximum number of points in each voxel: M; Total number of voxels after voxelization: K; |
| Step 1: | Feature transformation of point |
| ① | coords_part = points[:, 0:3]; intensity_part = points[:, 3]; |
| ② | x = ReLU(BatchNorm1d(Conv1d(coords_part))) ▷ repeated three times; max(x, 2, keepdim = True); ReLU(BatchNorm1d(Linear(ReLU(BatchNorm1d(Linear))))); Linear(64, 9); |
| ③ | trans_coords_part = coords_part + bias; coords_part = coords_part × trans_coords_part ▷ T-net; tsn_out = concat(coords_part, intensity_part) ▷ output the transformed point features (N, 4) as the input of Step 8. |
| Step 2: | Feature Voxelization |
| ① | batch_voxels (K, M, 4) = Dense Voxels; features_ls = [batch_voxels] |
| ② | points_mean = sum(batch_voxels[:, :, :3], dim = 1)/(number of points in each voxel); xc, yc, zc = batch_voxels[:, :, :3] − points_mean ▷ the distance between each internal point and the arithmetic mean of the points in the voxel. Feature matrix (K, M, 3); features_ls.append(xc, yc, zc) |
| ③ | Voxel_Coordinates (K, 3) ▷ used as the input of Step 5; xp, yp, zp = batch_voxels[:, :, :3] − (Voxel_Coordinates × (x, y, z)_size + (x, y, z)_offset) ▷ the distance between each point and the center of the voxel. Feature matrix (K, M, 3); features_ls.append(xp, yp, zp). |
| Step 3: | Feature decoration |
| ① | Features (K, M, 10) = concat(features_ls) |
| ② | mask = paddings_indicator(K, M) ▷ marks the padded points so that they can be reset to 0; mask = unsqueeze(mask, −1).type_as(features) ▷ (K, M, 1); features = features × mask ▷ the Feature Decoration matrix (K, M, 10) is used as the input of Step 4. |
| Step 4: | Simplify VFE feature extraction |
| ① | x = ReLU(BatchNorm1d(Linear(10, C))) applied to the Feature Decoration matrix (K, M, 10) ▷ output (K, M, C); C is the output feature dimension, C = 64 in the paper; |
| ② | features = max(x, dim = 1, keepdim = True) ▷ output feature dimension (K, 1, C); |
| ③ | voxel_features = features.squeeze(dim = 1) ▷ remove the redundant dimension; the matrix (K, C) is the input of Step 5. |
| Step 5: | Converting dense features to 5D feature blob |
| ① | nx = (x_max − x_min)/x_size; ny = (y_max − y_min)/y_size; nz = (z_max − z_min)/z_size; |
| ② | for sample_index in range(batch_size): indices = X × ny × nz + Y × nz + Z ▷ global index, where (X, Y, Z) are the indices of the voxel grid along the x-, y-, and z-axes, calculated from Voxel_Coordinates (K, 3); canvas_3d[:, indices] = voxel_features; end for |
| ③ | canvas_3d (batch_size, C, nx × ny × nz); canvas_3d = canvas_3d.view(batch_size, C, nx, ny, nz) ▷ output shape (batch_size, C, nx, ny, nz), C = 64 in the paper; used as the input of Step 6 and Step 7. |
| Step 6: | Local feature extraction |
| ① | for batch_idx in range(batch_size): batch_mask = (batch index of each point == batch_idx); local_feats = canvas_3d[batch_idx, :, x_coords, y_coords, z_coords]; local_feats[batch_mask, :] = local_feats; end for ▷ the local feature (N, C) is the input of Step 8. |
| Step 7: | Scene global feature extraction ▷ canvas_3d (batch_size, C, nx, ny, nz) |
| ① | max_pool3d(relu(Conv3d(C, C/2))) ▷ repeated three times |
| ② | Linear((nx/8) × (ny/8) × (nz/8) × (C/8), G) ▷ global feature (N, G). G is the chosen global feature dimension, which is 1024 in the paper. The matrix is the input of Step 8. |
| Step 8: | Point-Voxel-Global feature fused network |
| ① | fuse_feats = concat([points, local_feats, global_feats], dim = −1) ▷ combine the point features, the local features, and the global features; fuse_feats (N, 4 + C + G); |
| ② | MLP perception: MLP(F) = log_softmax(ReLU(FC3(ReLU(FC2(ReLU(FC1)))))) ▷ shape = (N, D), where D is the number of semantic segmentation classes. |

3.2. Points’ Invariant Feature Network

3.3. Voxel Feature Network

3.3.1. Point Cloud Voxelization

3.3.2. Voxel Feature Decoration
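Steps 2 and 3 of Algorithm 1 decorate every point in a voxel with its offset from the mean of the voxel's points and its offset from the voxel center, then reset the padded slots to zero. The sketch below illustrates this for a single voxel in plain Python. The function name `decorate_voxel`, its arguments, and the choice of offset (half a voxel plus the range minimum) are assumptions made for illustration; this is not the authors' implementation.

```python
def decorate_voxel(points, num_valid, voxel_coord, voxel_size, range_min):
    """Return one 10-value feature row per slot of a single voxel.

    points      : M rows of [x, y, z, intensity]; rows past num_valid are padding
    num_valid   : number of real points stored in the voxel
    voxel_coord : integer grid index (X, Y, Z) of the voxel
    voxel_size  : (x_size, y_size, z_size)
    range_min   : (x_min, y_min, z_min) of the point cloud range
    """
    valid = points[:num_valid]
    # Arithmetic mean of the real points in the voxel (Step 2, item 2).
    mean = [sum(p[a] for p in valid) / num_valid for a in range(3)]
    # Voxel center; offset = half a voxel + range minimum (an assumption).
    center = [
        voxel_coord[a] * voxel_size[a] + voxel_size[a] / 2 + range_min[a]
        for a in range(3)
    ]
    features = []
    for i, p in enumerate(points):
        if i >= num_valid:
            # Step 3: padded slots are reset to zero.
            features.append([0.0] * 10)
            continue
        to_mean = [p[a] - mean[a] for a in range(3)]
        to_center = [p[a] - center[a] for a in range(3)]
        # 4 raw values + 3 mean offsets + 3 center offsets = 10 features.
        features.append(list(p) + to_mean + to_center)
    return features


voxel = [[1.0, 1.0, 0.0, 0.5], [3.0, 1.0, 0.0, 0.7], [0.0, 0.0, 0.0, 0.0]]
decorated = decorate_voxel(voxel, 2, (0, 0, 0), (4.0, 4.0, 4.0), (0.0, 0.0, -2.0))
```

Each row has 4 + 3 + 3 = 10 entries, which matches the (K, M, 10) shape given in Step 3.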

3.3.3. SVFE
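Step 4 of Algorithm 1 applies a shared linear layer with ReLU to each decorated point and then takes the maximum over the M points of a voxel. A minimal sketch for one voxel follows. The names `svfe`, `weight`, and `bias` are hypothetical, and batch normalization is left out for brevity, so this only approximates the layer described in the algorithm.

```python
def svfe(voxel_feats, weight, bias):
    """Simplified voxel feature encoding for one voxel.

    voxel_feats : M rows of decorated point features (length D each)
    weight      : C rows of D weights (one row per output channel)
    bias        : C bias values
    Returns a list of C values: the per-channel maximum over the M points.
    BatchNorm1d from Algorithm 1 is omitted here (an assumption for brevity).
    """
    pooled = []
    for w_row, b in zip(weight, bias):
        # Shared linear layer followed by ReLU, evaluated for every point.
        activations = [
            max(0.0, sum(w * f for w, f in zip(w_row, feat)) + b)
            for feat in voxel_feats
        ]
        # Max pooling over the points of the voxel: (M, C) -> (C,).
        pooled.append(max(activations))
    return pooled


# Toy example with D = 2 input features and C = 2 output channels.
encoded = svfe([[1.0, -2.0], [3.0, -4.0]], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
```

Because the maximum is taken per channel, the result does not depend on the order of the points inside the voxel.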

3.3.4. Generate 5D Spatial Feature Blob
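Step 5 of Algorithm 1 writes each voxel feature into a flat canvas at the index X × ny × nz + Y × nz + Z and then reshapes the canvas to (C, nx, ny, nz). The sketch below shows that index arithmetic for a single sample in plain Python. The helper names `grid_shape`, `scatter_voxels`, and `read_cell` are hypothetical, and the batch dimension is dropped to keep the example short.

```python
def grid_shape(pc_range, voxel_size):
    """Number of voxels along x, y, z for a given range and voxel size."""
    x_min, y_min, z_min, x_max, y_max, z_max = pc_range
    spans = (x_max - x_min, y_max - y_min, z_max - z_min)
    return tuple(round(span / size) for span, size in zip(spans, voxel_size))


def scatter_voxels(voxel_features, voxel_coords, shape):
    """Scatter K voxel features of length C into a C x (nx*ny*nz) canvas."""
    nx, ny, nz = shape
    num_channels = len(voxel_features[0])
    canvas = [[0.0] * (nx * ny * nz) for _ in range(num_channels)]
    for feat, (X, Y, Z) in zip(voxel_features, voxel_coords):
        # Row-major global index, as in Step 5 of Algorithm 1.
        index = X * ny * nz + Y * nz + Z
        for c, value in enumerate(feat):
            canvas[c][index] = value
    return canvas


def read_cell(canvas, shape, c, X, Y, Z):
    """Read channel c at grid cell (X, Y, Z), as the reshaped blob would."""
    _, ny, nz = shape
    return canvas[c][X * ny * nz + Y * nz + Z]


shape = grid_shape((0, 0, 0, 4, 2, 2), (1, 1, 1))
canvas = scatter_voxels([[1.0, 2.0], [3.0, 4.0]], [(0, 0, 1), (3, 1, 0)], shape)
```

The row-major index is what makes the later reshape valid: a cell written at the flat index is found again at position (X, Y, Z) of the reshaped blob. The same lookup is what Step 6 relies on when it reads the local feature of the voxel that contains a point.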

3.4. Scene Context Feature Extraction Network (SCFEN)
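Step 7 of Algorithm 1 ends with a linear layer whose input size is (nx/8) × (ny/8) × (nz/8) × (C/8). That size follows if each of the three stages halves the channel count and its pooling halves every spatial dimension. The pooling kernel is not stated in Algorithm 1, so the factor of two per stage is an assumption here, and `global_linear_in_features` is a hypothetical helper.

```python
def global_linear_in_features(nx, ny, nz, channels, stages=3):
    """Flattened feature size after `stages` rounds of Conv3d + max_pool3d.

    Assumes each stage halves the channels (Conv3d(C, C/2)) and that the
    pooling halves nx, ny, and nz, which reproduces the /8 factors in Step 7.
    """
    for _ in range(stages):
        nx, ny, nz = nx // 2, ny // 2, nz // 2
        channels //= 2
    return nx * ny * nz * channels


# Example grid of 16 x 16 x 8 voxels with C = 64 channels.
in_features = global_linear_in_features(16, 16, 8, 64)
```

For this example grid the linear layer would map 2 × 2 × 1 × 8 = 32 inputs to the G-dimensional global feature.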

3.5. Point-Voxel-Global Feature Fused Network
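Step 8 of Algorithm 1 concatenates the point, local, and global features of each point into a vector of length 4 + C + G and finishes the MLP head with a log-softmax over the classes. The sketch below shows only the concatenation and the log-softmax, with the fully connected layers left out. The names `fuse_features` and `log_softmax` are illustrative, and sharing a single global vector across all points of a scene is an assumption.

```python
import math


def fuse_features(point_feats, local_feats, global_feat):
    """Concatenate per-point, per-voxel, and scene-level features.

    point_feats : N rows of 4 values (transformed x, y, z, intensity)
    local_feats : N rows of C values (feature of the voxel holding each point)
    global_feat : G values shared by every point of the scene (assumption)
    Returns N rows of length 4 + C + G.
    """
    return [
        list(p) + list(l) + list(global_feat)
        for p, l in zip(point_feats, local_feats)
    ]


def log_softmax(scores):
    """Numerically stable log-softmax over one point's class scores."""
    peak = max(scores)
    log_norm = peak + math.log(sum(math.exp(s - peak) for s in scores))
    return [s - log_norm for s in scores]


fused = fuse_features(
    [[0.0, 1.0, 2.0, 0.5], [1.0, 1.0, 1.0, 0.1]],  # N = 2 points
    [[7.0, 8.0], [9.0, 10.0]],                     # C = 2
    [5.0, 6.0, 4.0],                               # G = 3
)
log_probs = log_softmax([1.0, 2.0, 3.0])
```

The predicted class of a point is the index of the largest log-probability.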

4. Experiment Result

4.1. Dataset

4.2. Evaluation

4.2.1. Qualitative Evaluation Metric

4.2.2. Quantitative Evaluation Metric

mAP

MIoU
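MIoU averages the per-class intersection-over-union, IoU = TP / (TP + FP + FN), over the evaluated classes. The sketch below computes it from flat lists of predicted and ground-truth labels. The function name `mean_iou`, the `ignore_index` argument for the unlabeled class, and the choice to skip classes that never occur are assumptions, so the numbers may differ slightly from an official evaluation script.

```python
def mean_iou(pred, target, num_classes, ignore_index=0):
    """Mean intersection-over-union over classes, skipping ignore_index."""
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p, t in zip(pred, target):
        if t == ignore_index:
            continue  # unlabeled ground truth is not evaluated
        if p == t:
            tp[t] += 1
        else:
            fp[p] += 1
            fn[t] += 1
    ious = []
    for c in range(num_classes):
        if c == ignore_index:
            continue
        denom = tp[c] + fp[c] + fn[c]
        if denom:  # classes absent from both lists are skipped (assumption)
            ious.append(tp[c] / denom)
    return sum(ious) / len(ious) if ious else 0.0


# Class 1: TP = 1, FP = 1 -> IoU 1/2; class 2: TP = 2, FN = 1 -> IoU 2/3.
score = mean_iou([1, 1, 2, 2, 1], [1, 2, 2, 2, 0], num_classes=3)
```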

4.2.3. Comparative and Ablation Experiments

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A


| (Ignored) Unlabeled | Car | Bicycle | Motorcycle | Truck | Other-Vehicle | Person | Bicyclist | Motorcyclist | Road | Parking | Sidewalk | Other-Ground | Building | Fence | Vegetation | Trunk | Terrain | Pole | Traffic-Sign |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0,1,52,99 | 10,252 | 11 | 15 | 18,258 | 13,16,20,256,257,259 | 30,254 | 31,253 | 32,255 | 40,60 | 44 | 48 | 49 | 50 | 51 | 70 | 71 | 72 | 80 | 81 |
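The table above groups the raw Semantic KITTI label ids into 20 classes. A lookup like the one sketched below can apply that grouping to a list of raw labels. The training indices 0 to 19 simply follow the column order of the table, which is an assumption, and the names `CLASS_TO_RAW`, `RAW_TO_TRAIN`, and `remap_labels` are hypothetical.

```python
# (class name, raw label ids), in the column order of the table above.
CLASS_TO_RAW = [
    ("unlabeled", [0, 1, 52, 99]),
    ("car", [10, 252]),
    ("bicycle", [11]),
    ("motorcycle", [15]),
    ("truck", [18, 258]),
    ("other-vehicle", [13, 16, 20, 256, 257, 259]),
    ("person", [30, 254]),
    ("bicyclist", [31, 253]),
    ("motorcyclist", [32, 255]),
    ("road", [40, 60]),
    ("parking", [44]),
    ("sidewalk", [48]),
    ("other-ground", [49]),
    ("building", [50]),
    ("fence", [51]),
    ("vegetation", [70]),
    ("trunk", [71]),
    ("terrain", [72]),
    ("pole", [80]),
    ("traffic-sign", [81]),
]

# Raw id -> training index (assumed to be the column position, 0..19).
RAW_TO_TRAIN = {
    raw: index
    for index, (_, raw_ids) in enumerate(CLASS_TO_RAW)
    for raw in raw_ids
}


def remap_labels(raw_labels):
    """Map raw label ids to training indices; unknown ids raise KeyError."""
    return [RAW_TO_TRAIN[label] for label in raw_labels]


train_labels = remap_labels([10, 252, 40, 60, 99, 81])
```

Moving and static variants of a class, such as raw ids 10 and 252 for cars, end up with the same training index.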

| Methods | MIoU (%) | Latency (ms) | Car | Bicycle | Motorcycle | Truck | Other-Vehicle | Person | Bicyclist | Motorcyclist | Road | Parking | Sidewalk | Other-Ground | Building | Fence | Vegetation | Trunk | Terrain | Pole | Traffic-Sign |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointNet++ | 43.9 | 79 | 39.1 | 25.7 | 30.1 | 59.0 | 48.1 | 40.1 | 38.1 | 38.0 | 70.3 | 45.1 | 66.7 | 16.8 | 66.1 | 24.1 | 62.0 | 36.1 | 44.9 | 30.1 | 54.0 |
| Cylinder3D | 63.5 | 127 | 96.5 | 31.9 | 64.2 | 85.3 | 56.5 | 72.5 | 90.0 | 32.2 | 93.3 | 34.2 | 77.7 | 0.9 | 87.0 | 45.9 | 87.5 | 65.2 | 71.2 | 64.4 | 50.8 |
| Point-Voxel-KD | 69.3 | 73 | 89.2 | 76.4 | 63.6 | 73.4 | 65.8 | 79.5 | 70.2 | 88.0 | 87.6 | 60.3 | 72.5 | 23.5 | 89.7 | 56.8 | 88.6 | 72.9 | 71.2 | 44.5 | 43.8 |
| Ours | 68.9 | 90 | 61.8 | 77.8 | 84.8 | 88.8 | 64.7 | 83.2 | 85.1 | 88.3 | 76.5 | 64.1 | 67.8 | 64.5 | 68.5 | 63.3 | 61.5 | 62.2 | 50.2 | 56.3 | 40.3 |
| Ours (voxel size (0.32, 0.32, 0.32)) | 69.5 | | 72.8 | 77.7 | 82.5 | 74.9 | 65.3 | 83.6 | 83.7 | 85.5 | 80.4 | 57.9 | 64.7 | 63.8 | 78.4 | 67.2 | 60.4 | 59.6 | 55.7 | 50.9 | 54.9 |

| Point Feature | Voxel Feature | Global Feature (Dimensions) | MIoU | Car | Bicycle | Motorcycle | Truck | Other-Vehicle | Person | Bicyclist | Motorcyclist | Road | Parking | Sidewalk | Other-Ground | Building | Fence | Vegetation | Trunk | Terrain | Pole | Traffic-Sign |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| √ | 1024 | 64.7 | 74.5 | 69.9 | 72.9 | 67.9 | 78.6 | 69.4 | 76.5 | 49.5 | 80.7 | 58.5 | 73.6 | 36.1 | 66.8 | 67.4 | 59.3 | 64.7 | 57.6 | 55.6 | 49.9 | |
| √ | 1024 | 52.3 | 67.7 | 54.2 | 68 | 37.9 | 70.2 | 53.2 | 64.5 | 35.3 | 53.9 | 40.5 | 65.6 | 40.1 | 56.6 | 57.9 | 52.3 | 38.2 | 47.4 | 51.6 | 37.7 | |
| √ | √ | 512 | 55.6 | 69.1 | 66.2 | 59.8 | 73.5 | 78.3 | 59.3 | 63.8 | 45.9 | 60.7 | 37.8 | 65.2 | 46.9 | 48.2 | 52.8 | 50.2 | 39.5 | 46.4 | 48.7 | 43.2 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Du, T.; Ni, J.; Wang, D. Fast Context-Awareness Encoder for LiDAR Point Semantic Segmentation. Electronics 2023, 12, 3228. https://doi.org/10.3390/electronics12153228
