1. Introduction

With the large-scale commercial deployment of 5G, the concepts and technologies of 6G (Sixth Generation) have attracted significant attention [1,2]. The 6G mobile communication system is envisioned as an intelligent infrastructure that universally connects an exponentially growing number of heterogeneous devices across massive application scenarios, such as smart cities, multi-sensory extended reality, and the tactile internet. The goal of 6G is to establish a seamless global coverage network across space, air, oceans, and land with high transmission rates, low end-to-end latency, and customized service provisioning [1].

The 6G system primarily distinguishes itself from 5G by its endogenous intelligence capability supported by AI (Artificial Intelligence) to meet the constantly changing requirements of users and applications [3]. AI algorithms are ubiquitous from the cloud to the edge and are applied in many aspects of 6G, such as resource management, service orchestration, network security, and semantic communications [4]. However, it is still unclear how AI can benefit the 6G core network architecture. To address this question, we review the long history of mobile communication systems and explore potential evolution strategies for the core network architecture. We further classify these evolution patterns into four basic forms: the emergence of new components [5], the elimination of obsolete functions [6], the separation or merger of different network components [7], and changes in component interactions [8]. We refer to these four forms of macroscopic network evolution as Network Morphology Evolution.

The above morphing of network components has so far been designed and evolved purely through the manual effort of experts and industrial consensus, typically recorded in a series of 3GPP protocols, which is expensive and time-consuming. The previous generations of core networks were generally designed by domain experts under the assumption that users’ requirements were predefined and predictable [9]. Meanwhile, the design process was often characterized by long research cycles and significant investments in manpower. The resulting fixed architecture of core networks struggled to accommodate the dynamic nature of evolving user demands [10]. As a result, this design philosophy makes it hard to guarantee diverse, dynamic, and customized 6G services anywhere and anytime.

Therefore, a 6G core network capable of automatically morphing its architecture according to changing scenarios is promising. Such a core network could explore new architectures without being restricted by traditional design paradigms. Intuitively, AI technology is the natural choice for automatically evolving the architecture of the 6G core network. However, to the best of our knowledge, little thought has been given to how AI can empower the 6G core network architecture with self-evolution capabilities.

Fortunately, research on simulating biological morphology evolution has emerged in the field of machine learning, providing inspiration for our idea of empowering the core network with AI-enabled self-evolution. Reinforcement Learning (RL) is a subfield of machine learning that trains agents to make decisions in an environment by maximizing a cumulative reward signal through trial-and-error interactions, enabling them to adapt and improve performance over time. Gupta et al. [11] introduced Deep Evolutionary Reinforcement Learning (DERL) to evolve diverse agent morphologies that learn challenging locomotion and manipulation tasks in complex environments. Wang et al. [12] proposed the Neural Graph Evolution (NGE) algorithm, using Graph Neural Networks (GNNs) to describe embodied agents and simple mutation primitives to represent continuous evolution in the environment. Yuan et al. [13] proposed the Transform and Control policy (Transform2Act) algorithm, which incorporates the design procedure of an agent into its decision-making process. This approach enables joint optimization of agent design and control as well as experience sharing across different designs.

We consider that the evolution paradigm of the 6G core network closely resembles that of biological evolution, where the core network architecture resembles the form of a biological organism. In biological evolution, organisms evolve in response to varying environments by changing components in a three-level hierarchical structure, with cells forming tissues and tissues forming bodies. In the context of the 6G core network, following the idea of the SBA (Service-based Architecture) framework of 5G [14], there is thought to be a similar three-level hierarchical structure, where Microservices compose Network Functions, and the Network Functions compose the Core Network. To adapt to changing scenarios, varying microservices can compose different network functions and, in turn, different core networks. That is, the automatic decomposition and recomposition of elements at each level, triggered by the dynamic user requirements of the 6G system, provide the fundamental drive of self-evolution.

Similar to biological evolution, here we use the term self-evolution to refer to the intelligent capability of the 6G core network to autonomously adjust and optimize its structure in response to environmental changes during the operation process.

Self-evolution enables networks to better adapt to complex and dynamic communication environments and respond quickly and accurately to different network requirements and application scenarios. The self-evolution paradigm allows the 6G core network to break away from the static architectures standardized by predefined protocols and to explore arbitrary novel structures beyond the forms known to human experts. In comparison to architectural research on 5G, such as the comprehensive machine-learning-based security analysis framework of Saha et al. [15] for the 5G core network, the integration of AI in 6G is inherent rather than an externally attached module.

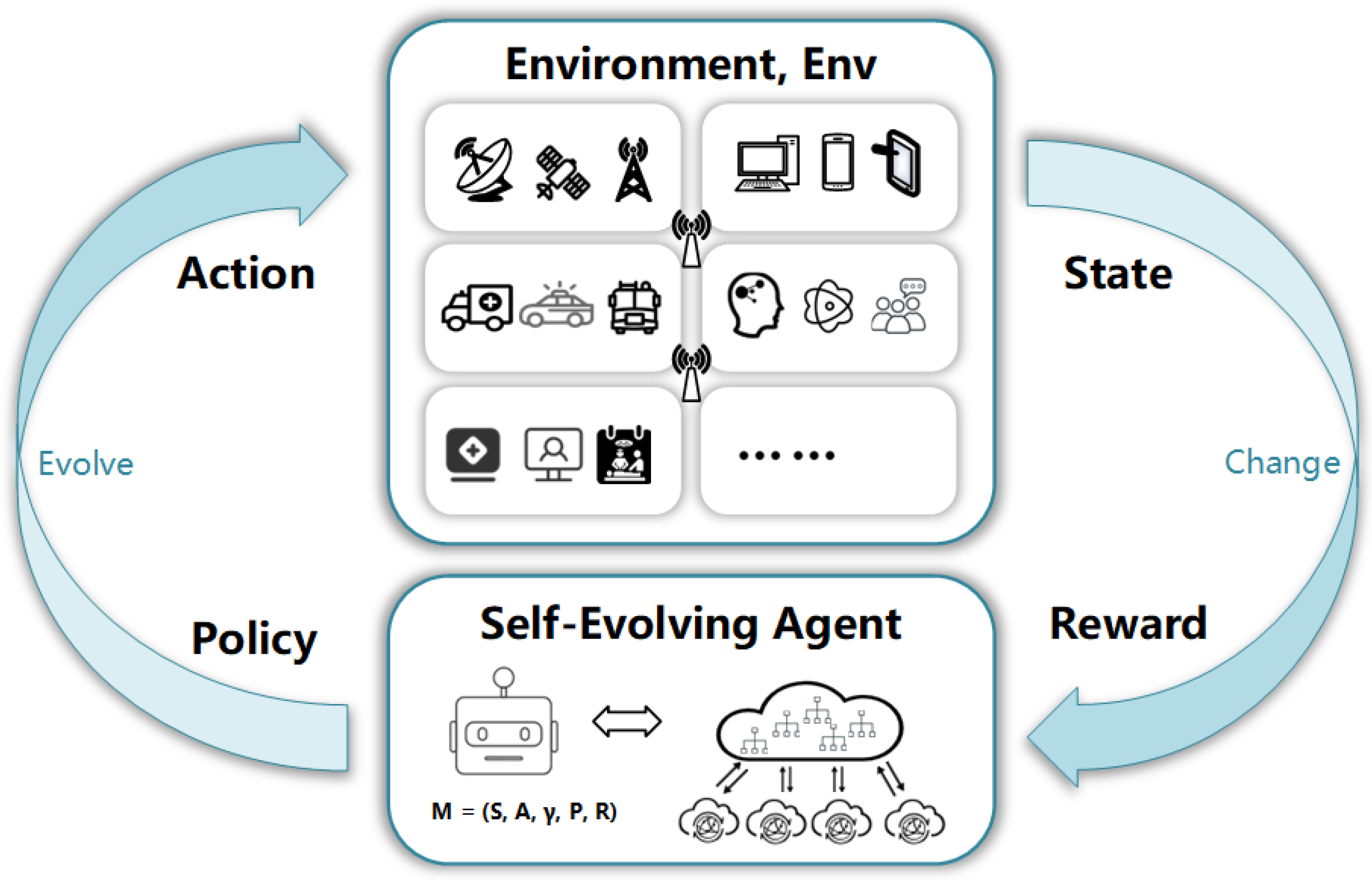

While self-evolution can be achieved under the principles of multiple frameworks, here we specifically adopt the idea of self-generation, which entails the network’s inherent capacity to generate novel structures that adapt effectively to emerging communication environments. As mentioned above, we consider 6G core networks as organic entities, with their network structure resembling the form of a biological organism. As shown in Figure 1, similar to how living organisms interact with and observe the external environment and choose the best body form and behavior for survival and competition, we propose an intelligent entity named Self-Evolving Agent, which follows the basic idea of reinforcement learning for the 6G core network. The agent aims to actively morph the core network architecture to fit the changing environment by maximizing the reward it receives as feedback. This process constitutes a closed loop of perception, reasoning, decision-making, and execution for the agent, enabling networks to autonomously learn, continuously iterate, and optimize their existing forms to meet new network requirements and challenges.

To further clarify the novelty of our self-evolution strategy, we review the literature on AI-integrated mobile network systems. Currently, numerous research works mainly focus on the use of AI for performance optimization [16,17] in topics including wireless network resource allocation, edge computing scheduling, channel allocation, network slicing, and service chain composition. However, they generally assume that the network structure is not allowed to change during optimization. Thus, their optimization results are restricted by the given network architecture. As this type of optimization can typically be represented as a set of learnable and solvable model parameters of AI algorithms [10,18], we refer to this pattern as Network Parameter Evolution, in parallel with the aforementioned pattern of Network Morphology Evolution. While Network Parameter Evolution tends to evolve the network at the micro-level, with partial optimization over a short time span such as seconds or minutes, Network Morphology Evolution focuses on evolving the network at the macro-level, with global changes over a long time span such as days, months, or even years.

In summary, we aim to empower the 6G core network architecture with self-evolution capabilities to enhance its environmental adaptability. This involves enabling the network architecture and communication protocols to support self-learning, self-generation, and self-operation and maintenance, ultimately leading to a new architecture of the 6G core network. Our primary contributions include:

- (1) We propose an architecture for the 6G core network that enables self-evolution based on intelligent decision making. We elaborate the core ideas and mechanisms of network self-evolution and formulate the self-evolution task for the 6G core network.

- (2) We propose a reinforcement learning-based self-evolving agent, which can be applied in Cloud and Edge Core Networks to morph the network architecture in response to changes in the communication environment.

- (3) We validate our self-evolution algorithm through experimental simulations. The results demonstrate that the self-evolving agent can make rational evolutionary decisions and enable the core network to exhibit promising environmental adaptability.

The rest of this paper is organized as follows. In Section 2, we introduce the evolution of core network architecture and discuss relevant works on Network Intelligence. In Section 3, we present the Problem Formulation for Network Self-Evolution. In Section 4, we propose the Self-Evolving Pipeline and a deployment architecture for intelligent agents in the 6G core network. In Section 5, we present the details of our algorithm. Section 6 is dedicated to the validation of our algorithm, where we conduct experiments to assess its performance. Finally, Section 7 concludes our research.

4. Self-Evolving Pipeline

In this section, we propose a cyclical pipeline for the evolving agent to complete the endless evolution of the 6G core network. The design considerations behind the pipeline stem from insights of the biological evolution process. We posit that each subnet SCN experiences a unique environment and evolves through the intelligent modification of its genes and physical structure. In addition to the pipeline for self-evolution, we also propose a deployment architecture for the intelligent agents in the 6G core network.

4.1. Pipeline Cycle of Evolution

Figure 5 illustrates the self-evolving pipeline for the 6G core network. The pipeline is analogous to the biological evolution process, consisting of three distinct stages: (1) gene generation; (2) gene composition to construct the body; and (3) natural selection to identify the optimal composition. In this context, the gene refers to the microservice, the body is the subnet SCN, the optimal composition is the evolved 6G core network adapted to the given application environment, and the natural selection is the self-evolving algorithm detailed in the next section. It is important to note that, because the environment is constantly changing, the three-stage pipeline is run iteratively to maintain the adaptability of the resulting 6G core network. The period of each pipeline cycle is primarily determined by the rate of change in the environment. For instance, when application scenarios change slowly over a long period of time, the pipeline might evolve once every few months or even years. On the other hand, when a new application scenario suddenly emerges, the pipeline tends to quickly modify the core network structure within days or minutes.

The pipeline begins with the generation of network evolution genes, the most fundamental components in a self-evolving 6G core network. These genes, also known as network microservices, serve as the building blocks for the NFs of the core network. Each gene possesses specific attributes, including its function and the data it processes. For instance, a registration microservice handles user registration requests by collecting user identification information.

There are typically two methods for generating genes: manual definition and deployment by human domain experts, or automatic extraction from the current core network using machine learning algorithms. Given the increasing complexity of the 6G core network, manually generating the microservices is usually beyond human ability, so we prefer the latter method. At each time step, t, the evolving agent automatically extracts a set of genes according to the current architecture of the 6G core network to compose the gene library. This library is then updated to its next version by adding new genes or removing old ones. The in-depth details of the machine learning algorithm used for gene extraction will be discussed in the following section.

In the second stage, with the gene library, , the objective of the pipeline is to reconstruct the subnet SCN by appropriately combining genes into various functions to form a set of NFs. Specifically, given the current structure of , the evolving agent aims to learn the composing strategy, , that decides how to select the genes and compose them into special NFs, which in turn affects the overall structure of the next time-step SCNs. That is, , where is the application environment for the subnet . Different would lead to different variants of SCN, each with its own network function, F, communication protocol, P, and topology, T. For a given , the corresponding strategy, , would produce a group of individual microservice composition plans, analogous to the concept of population in the process of natural selection.

In the third stage, the pipeline evaluates the fitness of each individual SCN plan and selects the most suitable one for survival. The evolving agent assesses the composed SCN by assigning corresponding rewards based on metrics such as evolutionary success rate, evolutionary speed, service performance, and resource costs. The evolutionary success rate measures the completeness of the evolved core network’s required functionality. The evolutionary speed evaluates the response time from changing the environment to the convergence time of the evolutionary strategy. Service performance and resource cost are usually used together to measure the trade-off decided by the evolution policy.

With these rewards, the pipeline repeatedly optimizes the gene library, the gene composition plan for SCNs, and the agent’s evolution policy. Once convergence is achieved, the newly produced architecture of the 6G core network is deployed to replace the old one, completing a cycle of evolution.
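The three-stage cycle described above can be illustrated with a toy sketch. It is not the learning algorithm of this paper: composition is random instead of learned, and every name in it (`Plan`, `generate_genes`, `compose`, `fitness`, `evolve_once`, the per-NF cost of 0.05) is a hypothetical choice made only for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """One candidate SCN: a tuple of NFs, each NF a frozenset of gene names."""
    nfs: tuple


def generate_genes(current_nfs):
    # Stage 1 (gene generation): assumed here to be the union of the
    # microservices already present in the current network functions.
    return sorted({g for nf in current_nfs for g in nf})


def compose(genes, n_nfs, rng):
    # Stage 2 (composition): randomly assign each gene to one of n_nfs NFs.
    buckets = [set() for _ in range(n_nfs)]
    for g in genes:
        buckets[rng.randrange(n_nfs)].add(g)
    return Plan(tuple(frozenset(b) for b in buckets if b))


def fitness(plan, required):
    # Stage 3 (selection): toy reward = functional coverage minus a
    # hypothetical resource cost of 0.05 per NF.
    covered = set().union(*plan.nfs)
    return len(covered & required) / len(required) - 0.05 * len(plan.nfs)


def evolve_once(current_nfs, required, population=20, seed=0):
    """Run one pipeline cycle and return the fittest candidate plan."""
    rng = random.Random(seed)
    genes = generate_genes(current_nfs)
    candidates = [compose(genes, rng.randint(1, 3), rng) for _ in range(population)]
    return max(candidates, key=lambda p: fitness(p, required))


current = [{"registration", "auth"}, {"session"}]
required = {"registration", "auth", "session"}
best = evolve_once(current, required)
print(best, fitness(best, required))
```

In a real deployment the winning plan would replace the running architecture and the cycle would restart when the environment changes; here the population plays the role of the candidate composition plans evaluated by natural selection.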

4.2. Deployment Architecture of Pipeline

After discussing the working stages of the pipeline, we propose a distributed architecture to deploy the evolving agent. As depicted in Figure 6, the 6G core network hierarchy comprises a Cloud Core Network (CCN) and multiple Edge Core Networks (ECNs), each catering to customized communication scenarios. The CCN is responsible for managing and coordinating ECNs and does not directly involve the processing of user requirements.

At the top layer of the architecture, the central CCN deploys AI models as its cloud evolving agent. This cloud agent is responsible for gathering information about itself and all ECNs to learn the evolving policy for the CCN. It creates training data and updates its evolving policy to maximize rewards, ensuring that the CCN evolves in line with its agent’s decisions. To optimize resource sharing and reuse the microservices among all ECNs, it is feasible to deploy only a single global gene library in the CCN. The CCN’s agent is responsible for creating, maintaining, and updating this gene library to ensure that all the ECNs can efficiently access and utilize the shared microservices. By doing so, the self-evolving architecture can achieve greater scalability and consistency while minimizing resource consumption. To enhance the intelligence of the cloud agent and ensure automatic maintenance of the gene library, a knowledge graph is often deployed in the CCN, which incorporates domain expert prior knowledge and the evolving preferences of 6G service operators. This allows the cloud agent to learn from the experts’ insights and make informed decisions about how to evolve the network. By incorporating this knowledge into its evolving policy, the cloud agent can improve its performance and help the CCN evolve more efficiently. For instance, we can construct a knowledge graph whose entities and relationships contain knowledge of 3GPP protocols, the Cloud Evolution Agent, and the statistics of the CCN to reduce the decision space of the CCN evolution.

At the bottom layer of the architecture, there are multiple ECNs designed for various application scenarios. Each ECN is equipped with its own evolving agent, which receives edge data, such as the signaling throughput, the distribution of signaling types, and the quality of service (QoS) of UEs, and is responsible for deciding how to evolve its host ECN. Specifically, the edge agent learns how to use the genes from the global gene library residing on the CCN to compose desirable network functions (NFs), and then the Edge Core Network structure. As the application scenario of the ECN changes, as shown in Figure 6, where the applications expand from autonomous vehicles alone to a mixture of services including autonomous vehicles, video surveillance, and the Internet of Things (IoT), the agent decides to reshape the structure of the ECN from two NFs into three NFs, while also altering the internal microservice composition of each NF. In the long term, the evolving policies of different agents will be highly distinct because their ECNs serve different application scenarios. As a result, the architectures of the ECNs will also vary accordingly. Significant changes in the architecture of numerous ECNs will change the environment of the CCN, such as the signaling throughput and signaling distribution of the ECNs. These changes in the environment will alter the composition of microservices and thereby evolve the CCN.

In the hierarchical self-evolution architecture of the 6G core network, the agents in the ECNs and the CCN form a distributed multi-agent system, which means that the evolving policies learned by each agent are generally not independent of the others. Although valuable reinforcement learning algorithms for multi-agent systems have been published in the literature, joint decision making among all agents is complex, and we leave this work for further research. To streamline the deployment process for the evolving agent, MLOps (Machine Learning Operations) techniques would be employed to support agent development, deployment, and operation. In the context of this paper, the agents utilize the RL (Reinforcement Learning) framework and associated algorithms to make decisions for the 6G core network evolution. Because the agent is natively integrated into the core network, it offers a distinct advantage over external AI solutions for the current 5G core network in terms of comprehensive state perception, real-time decision-making, economic interaction, and efficient resource utilization.

5. Self-Evolving Algorithm

As shown in Section 4.1, the self-evolving pipeline is composed of three stages from the genetic and evolutionary point of view. In this section, we provide a clear definition of the three stages from the perspective of algorithm modeling and detail the specific methods for each stage: (1) GLib Generation Stage; (2) Composition Stage; (3) Evaluation Stage.

5.1. Formulation of Self-Evolving Pipeline

As mentioned in Section 3.2, we formulate the Self-Evolving Process of the 6G core network within the framework of Reinforcement Learning, which is usually modeled as a Markov Decision Process (MDP). In particular, each stage of the pipeline is modeled as an individual MDP. On the one hand, the stages have different action spaces and different strategies. On the other hand, the stages are closely related to one another, together forming a self-evolving cycle.

(1) GLib Generation Stage: In this stage, the agent applies actions to automatically generate microservices by splitting the core network functions into reusable building blocks, and then updates the gene library (GLib) with the newly extracted microservices. The strategy in this stage selects actions based on the current environmental information and the gene pool.

(2) Composition Stage: In this stage, the agent applies actions to select existing microservices from the gene library, which was updated in the GLib Generation Stage, and combines them into network elements based on its composition strategy. The output of this stage for each SCN is a set of candidate microservice compositions, constructed by adding microservices to or removing them from existing network elements, together with the connections between network elements that form a static network topology. It is important to note that, at this stage, the agent does not need to directly interact with the application environment.

(3) Evaluation Stage: In this stage, the agent applies actions to organize the network elements and network topology obtained from the Composition Stage into a service function chain for its host SCN, and then evaluates the performance of this arrangement by interacting with the environment. These goals are pursued by a dedicated evaluation strategy.

It is important to note that the time scale,

t, in our algorithm is different from the commonly used training scale, which may represent a long time range. So the update of network policy is not real-time. At each time step,

t,

represents all environmental information of the current application scenarios, overall network latency, and CPU and memory usage of

. The policy of the Self-Evolving agent is denoted as below.

In order to prevent human intervention in network evolution, we do not set reward functions in the GLib Generation and Composition stages, and only interact with the application environment in the Evaluation stage, which helps to fully evaluate how well the self-evolving networks complete the specified tasks. The detailed explanation of the reward function will be provided in Section 5.3.

After we formulate the self-evolving task of the core network, the optimal evolving policy for the agent can be learned with standard RL algorithms. Here, we employ Proximal Policy Optimization (PPO) [48], a popular RL algorithm based on Policy Gradient (PG). PPO is particularly suitable for our approach because it limits the Kullback–Leibler (KL) divergence between the current and old policies, which prevents overly large policy updates and thus avoids catastrophic failure.
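To make the role of the policy constraint concrete, the sketch below shows the two quantities usually associated with PPO: the KL divergence between the old and new action distributions, and the clipped surrogate objective of the PPO-Clip variant. The text does not state which PPO variant is used, so the choice of the clipped form, the function names, and the clipping range `eps=0.2` are assumptions made for illustration.

```python
import math


def kl_divergence(p_old, p_new):
    """KL(p_old || p_new) for two categorical action distributions.

    Assumes every entry of p_new is strictly positive.
    """
    return sum(p * math.log(p / q) for p, q in zip(p_old, p_new) if p > 0)


def clipped_surrogate(ratio, advantage, eps=0.2):
    """Per-sample PPO-Clip objective: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratio is pi_new(a|s) / pi_old(a|s); advantage is the estimated advantage A.
    """
    clipped = max(1.0 - eps, min(1.0 + eps, ratio))
    return min(ratio * advantage, clipped * advantage)


# A large policy change is not rewarded beyond the clipping range.
print(kl_divergence([0.5, 0.5], [0.9, 0.1]))
print(clipped_surrogate(1.5, 1.0))
```

With a positive advantage, increasing the probability ratio beyond `1 + eps` yields no further gain in the objective, which is how large policy changes are discouraged in this variant.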

5.2. GLib Generation Stage

The goal of GLib generation is to decompose the Core Network Functions into multiple microservices. This approach follows the insight of decomposing monolithic software into smaller, more manageable components known as microservices [49,50]. By regarding the SCN at the current time step t as a monolithic software system, we can decompose it into various microservices with distinct functionalities and corresponding data, which are then used as raw materials to compose new network elements in the second stage. The optimal decomposition of the current SCN is learned through the reward principle of higher cohesion within the microservices and lower dependency among them, which offers the advantages of easier maintenance, improved scalability, and reduced coupling risks.

In particular, we employed Go-Callviz [51] to analyze the code structure of the current core network functions. Go-Callviz is an open-source project for visualizing the call graph of Go programs, which shows the relationships between functions and methods, including which functions are called by which others and how many times each function is called. By leveraging this information, Go-Callviz generates an intuitive graphical representation of the call graph, which serves as the input for our gene decomposition algorithm.

We denote the obtained call graph as a directed graph, G = (V, E), where V is a set of entities and each edge in E represents the connection between two entities i and j. We define three distinct types of nodes in graph G: data nodes, function nodes, and module nodes. These nodes correspond to the primary attributes of a microservice, with the first two representing the data and functionality, respectively. The module node, on the other hand, refers to the package under analysis; it captures functional correlation in the graph clustering algorithm, indicating a stronger correlation between functions within the same package. Given the three types of nodes, we define five types of directed weighted edges:

module–function (): if a function, f, belongs to package m, then the weight of the edge , otherwise .

function–function (

): if there are calling relationships between function

and

, then they are connected by edge with weight, as below:

where

and

n represents the depth of function calls. For example, if there is a function call chain

, then

in

, while

in

.

function–data (

): when function

has a read/write operation on data

, the edge weight between them is defined as follows:

where

represents the maximum number of times all functions,

, perform read/write operations on data node

.

represents the number of times function

performs operations on data node

.

data–data (): The weight of edge when data is the member of data , otherwise .

module–function (): The weight of edge when f is in package m, otherwise .

To further clarify call graph G, in Table 2 we present several examples of utilizing Go-Callviz to analyze the source code of Free5GC [52], an open-source project for 5th generation (5G) mobile core networks. Because the number of nodes obtained from Free5GC exceeds a few hundred, Table 2 only shows a few of the source and target nodes along with their respective edge connections. Note that because there are currently no popular projects for 6G core networks, we only use Free5GC as a proof of concept for self-evolution in this gene generation algorithm and in the later experiments.

The nodes in calling graph G provide the fine-grained materials to generate microservices. Generally, the extraction of microservices follows the idea of “high cohesion, low coupling”. High cohesion implies that each microservice is focused on a specific and well-defined functionality, ensuring its internal components work together effectively. Low coupling indicates that the microservices are loosely interconnected, minimizing dependencies and enabling independent development, deployment, and scalability.

To meet this principle, we propose to use the Louvain graph clustering algorithm [53] to cluster the nodes into a set of coarse-grained microservices, each of which has independent functions and data. In particular, the input of the clustering algorithm is calling graph G, and each cluster output by the algorithm is treated as a microservice, that is, a gene. The clustering algorithm ensures that the resulting microservices exhibit higher cohesion within them and lower coupling to one another, which aligns with the desired characteristics of being easily manageable and reusable.
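The Louvain algorithm greedily searches for a partition that maximizes modularity, so the "high cohesion, low coupling" objective can be illustrated by computing modularity for a given partition. The following is a minimal sketch under simplifying assumptions: the graph is treated as undirected and without self-loops (the call graph in the text is directed), and the node names and weights are hypothetical.

```python
from collections import defaultdict


def modularity(edges, community):
    """Modularity of a partition of an undirected weighted graph.

    edges: list of (u, v, weight) tuples, each undirected edge listed once.
    community: dict mapping every node to its cluster label.
    """
    m = sum(w for _, _, w in edges)      # total edge weight
    internal = defaultdict(float)        # edge weight inside each cluster
    degree = defaultdict(float)          # summed weighted degree per cluster
    for u, v, w in edges:
        degree[community[u]] += w
        degree[community[v]] += w
        if community[u] == community[v]:
            internal[community[u]] += w
    return sum(internal[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)


# Two tightly connected groups of functions joined by a single call.
edges = [("a", "b", 1), ("b", "c", 1), ("a", "c", 1),
         ("d", "e", 1), ("e", "f", 1), ("d", "f", 1),
         ("c", "d", 1)]
split = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
merged = {n: 0 for n in split}
print(modularity(edges, split), modularity(edges, merged))
```

The partition that separates the two groups scores higher than the one that merges everything, which is the behavior the clustering step relies on when it turns clusters into microservices.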

In Figure 7, we present an experimental example of six clustered microservices, along with the corresponding nodes and edges in the user traffic control microservice. It is evident that the extracted microservices, such as AMF Session Management, Authentication, and SMF Session Management, are highly consistent with the functional requirements of current 5G core network NFs, including AMF, SMF, and AUSF. This suggests that the clustering strategy on the calling graph of the core network is suitable for achieving the goal of gene generation.

To further quantify the quality of the obtained microservices, we use four evaluation metrics to assess them [54]: (1) Centripetal coupling (Ca): the number of other microservices that depend on the classes within a given microservice. A higher value indicates greater stability of the microservice; (2) Centrifugal coupling (Ce): the number of other microservices that the modules within a given microservice depend on. A higher value indicates a higher dependency and lower stability of the microservice; (3) Instability (I): the level of instability of a microservice, calculated as $I = Ce/(Ca + Ce)$ with $I \in [0, 1]$, where 0 indicates the most stable microservice that does not depend on any other microservices and 1 represents extreme instability; (4) Relational Cohesion (RC): the ratio between the number of internal relationships (function–function, data–data, function–data) and the number of functions and data within a microservice. A higher RC indicates higher cohesion within the microservice. The evaluation results of Free5GC clustering will be shown in Section 6.3. Finally, it is noteworthy that the proposed graph clustering algorithm is not a typical method for solving MDP problems. Nevertheless, it can be viewed in MDP terms: we consider the call graph as the state of the MDP, the assignment of a particular node to a cluster as the action, and the four evaluation metrics as local rewards, R. From this viewpoint, the policy is learned when the clustering algorithm converges.
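The four metrics can be computed directly from a microservice dependency map, as sketched below. The sketch assumes the standard definition of instability, I = Ce / (Ca + Ce), and treats a microservice with no couplings at all as stable (I = 0); the function names and the example dependency map are hypothetical and are not taken from the Free5GC results.

```python
def coupling_metrics(deps):
    """Compute Ca, Ce, and I for every microservice.

    deps: dict mapping a microservice to the set of microservices it depends on.
    """
    services = set(deps) | {d for targets in deps.values() for d in targets}
    metrics = {}
    for s in services:
        # Ce: how many other microservices s depends on.
        ce = len(deps.get(s, set()) - {s})
        # Ca: how many other microservices depend on s.
        ca = sum(1 for other, targets in deps.items() if other != s and s in targets)
        instability = ce / (ca + ce) if (ca + ce) else 0.0
        metrics[s] = {"Ca": ca, "Ce": ce, "I": instability}
    return metrics


def relational_cohesion(n_internal_relations, n_members):
    """RC: internal relationships divided by the number of functions and data."""
    return n_internal_relations / n_members


deps = {
    "session": {"auth", "registration"},
    "registration": {"auth"},
    "auth": set(),
}
for name, m in sorted(coupling_metrics(deps).items()):
    print(name, m)
print(relational_cohesion(6, 4))
```

In this toy map, the microservice that others depend on but that depends on nothing is the most stable one, while the microservice that only consumes others is the least stable.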

5.3. Composition and Evaluation Stage

In the GLib Generation Stage, the gene library is obtained by decomposing the Core Network Functions into multiple microservices, which are the input for the next two stages. The goal of the composition stage is to automatically learn how to compose the microservices generated in the first stage into network elements and to set the connections between these network elements to form a static network topology. In the evaluation stage, the evolving agent organizes the network elements and network topology obtained from the composition stage into a dynamic service function chain and then interacts with the environment to receive a reward as feedback. Although these two stages are modeled as relatively independent MDPs and use different methods, the network elements and network topology generated in the composition stage are not evaluated separately, but as components of the service function chain in the third stage. A training cycle is complete only after both the second and the third stages have finished. The optimal service chain can be obtained through multiple loops of Reinforcement Learning. Therefore, we discuss the two stages together for a more comprehensive treatment.

As mentioned in Section 5.1, we model the composition task as a typical MDP. The state includes the gene library, the application scenarios, and the current set of network elements. At each time step, the agent decides whether to add microservices to or remove them from the network elements and whether to modify the connection topology between them, so the action set consists of three options:

Add-Microservice, which adds a microservice to a selected network element;

Del-MicroService, which deletes a microservice from a selected network element; and

Set-Connection, which sets the connection between a pair of network elements to 0 or 1.

Note that there is no direct reward function, R, at the composition stage; we later use the reward of the evaluation stage to supervise the learning of composition. As a result, the composition policy enables rapid growth and adaptation of the network topology by generating diverse network elements and connections between them. Microservices composed into the same NF are integrated into more compact modules, which eliminates the internal interfaces between them and lets them share data. This reduces resource consumption within the NF.
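The three composition actions can be illustrated with a minimal sketch. The class `CompositionState`, its field names, the undirected-link representation, and the microservice and element names are all assumptions made for illustration; they are not taken from the paper's implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Set


@dataclass
class CompositionState:
    """Hypothetical container for the composition-stage state."""
    gene_library: Set[str]                                   # available microservices
    scenario: str                                            # application scenario label
    elements: Dict[str, Set[str]] = field(default_factory=dict)   # element -> microservices
    links: Set[FrozenSet[str]] = field(default_factory=set)       # assumed undirected links


def add_microservice(state, element, ms):
    """Add-Microservice: place a gene-library microservice on an element."""
    if ms not in state.gene_library:
        raise ValueError(f"{ms!r} is not in the gene library")
    state.elements.setdefault(element, set()).add(ms)


def del_microservice(state, element, ms):
    """Del-MicroService: remove a microservice from an element, if present."""
    state.elements.get(element, set()).discard(ms)


def set_connection(state, a, b, value):
    """Set-Connection: set the link between two existing elements to 0 or 1."""
    if a == b or a not in state.elements or b not in state.elements:
        raise ValueError("both endpoints must be distinct existing elements")
    link = frozenset((a, b))
    if value == 1:
        state.links.add(link)
    else:
        state.links.discard(link)


# Illustrative usage with made-up names.
state = CompositionState(gene_library={"auth", "session", "mobility"}, scenario="demo")
add_microservice(state, "NE1", "auth")
add_microservice(state, "NE2", "mobility")
set_connection(state, "NE1", "NE2", 1)
```

In this sketch the topology is stored as a set of unordered pairs, which is equivalent to a symmetric 0/1 adjacency matrix; a directed variant would store ordered pairs instead.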

After the composition stage, we need to evaluate whether the generated network elements and static network topology can effectively complete specific tasks. We do so by generating dynamic service function chains. The state in this stage includes the application scenarios and the current set of network elements. At each time step, t, the action traverses the existing connections of the current network element and selects one network element for the next time step. The evaluation policy encourages exploration of multiple service chain generation paths.
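The chain-generation step described above can be sketched as a walk over the static topology. The function name `rollout_chain`, the `choose` hook, and the uniform random default are hypothetical stand-ins for the learned evaluation policy.

```python
import random


def rollout_chain(adjacency, start, max_steps, choose=None, rng=None):
    """Walk the static topology to produce one service function chain.

    adjacency: dict mapping each element to the list of elements it connects to.
    choose:    hypothetical policy hook, (current, neighbours) -> next element;
               defaults to a uniform random choice, which is only a stand-in.
    """
    rng = rng or random.Random(0)
    choose = choose or (lambda current, neighbours: rng.choice(neighbours))
    chain = [start]
    for _ in range(max_steps):
        neighbours = adjacency.get(chain[-1], [])
        if not neighbours:          # dead end: no connection to follow
            break
        chain.append(choose(chain[-1], neighbours))
    return chain


# Illustrative topology with made-up element names.
topology = {"NE1": ["NE2"], "NE2": ["NE1", "NE3"], "NE3": []}
chain = rollout_chain(topology, "NE1", max_steps=5)
```

Every consecutive pair in the returned chain is an existing connection, so the walk can only follow links that the composition stage created.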

In dynamic environments, service composition needs to support the recovery of service-based applications from unexpected violations of not only function but also QoS. The key factor in deciding which microservices should be combined is their ability to provide a more modular and scalable service function with high performance and efficient resource consumption. Therefore, the reward for evaluation takes two factors into account: (1) whether the composed network elements can form a complete SCN that meets the functional requirements of the core network; and (2) whether the tasks are completed with high Quality of Service (QoS). In other words, the reward consists of a functional term and a QoS term: the functional term mainly includes a reward for completing a signaling process and a reward for each step taken in the network evaluation stage, while the QoS term is determined by network performance, including CPU occupancy, latency, and specific rewards for different tasks.
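As a rough illustration of how such a two-part reward might be computed, the sketch below adds a functional term and a QoS term. The additive form, the function name `evaluation_reward`, and every weight and default value are assumptions chosen for illustration only; the source does not specify them here.

```python
def evaluation_reward(completed, steps, cpu_occupancy, latency_ms, task_bonus=0.0,
                      completion_reward=10.0, step_reward=-0.1,
                      cpu_weight=1.0, latency_weight=0.01):
    """Hypothetical reward: functional term plus QoS term (all weights assumed)."""
    # Functional term: bonus for finishing the signaling process, plus a per-step term.
    functional = (completion_reward if completed else 0.0) + step_reward * steps
    # QoS term: task-specific bonus minus penalties for CPU occupancy and latency.
    qos = task_bonus - cpu_weight * cpu_occupancy - latency_weight * latency_ms
    return functional + qos


# Illustrative comparison: a completed, faster chain versus an incomplete, slower one.
good = evaluation_reward(completed=True, steps=5, cpu_occupancy=0.5, latency_ms=100)
bad = evaluation_reward(completed=False, steps=12, cpu_occupancy=0.9, latency_ms=400)
```

With a negative per-step term, longer chains are penalized, which is one plausible reading of "each step taken"; a positive per-step term would instead reward progress.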

The pseudocode of the self-evolving algorithm is presented below as Algorithm 1. The algorithm takes the initial strategy, the network elements, the gene pool, and the initial state of the environment as inputs, and outputs an updated strategy after completing three stages of training. In the first stage, the self-evolving agent samples an action through its policy to split microservices and update the gene pool. In the second stage, the agent samples an action through its policy to reorganize microservices into network elements and topologies. In the third stage, the agent samples an action through its policy to generate dynamic service chains and interacts with the environment, which returns the reward. All parameters are stored in memory pool M, and we use Proximal Policy Optimization to update the strategy.
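For reference, the clipped surrogate objective commonly used in Proximal Policy Optimization is given below. The symbols follow the standard PPO formulation rather than this paper's notation: \theta denotes the policy parameters, \hat{A}_t an advantage estimate, and \epsilon the clipping range.

```latex
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)},
\qquad
L^{\mathrm{CLIP}}(\theta) =
\hat{\mathbb{E}}_t\!\left[
  \min\!\Big( r_t(\theta)\,\hat{A}_t,\;
  \operatorname{clip}\big(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\big)\,\hat{A}_t \Big)
\right]
```

The clipping keeps each update close to the policy that collected the samples in M, which is why the samples can be reused for several gradient steps.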

| Algorithm 1 Self-Evolving Algorithm |

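Initialize the self-evolving policy
while not reaching max iterations do
    initialize the environment state
    sample a decomposition action
    apply it to modify the gene pool
    store into M
    while M not reaching batch size do
        for each composition step do
            sample a composition action
            apply it to modify the network elements and topology
        end for
        for each evaluation step do
            sample an evaluation action
            apply it to the environment
        end for
        store into M
    end while
    update the policy with Proximal Policy Optimization using samples in M
end while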

|
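The control flow of Algorithm 1 can be sketched as the following skeleton. It is a structural illustration only: `ToyEnv`, `ToyPolicy`, the stage labels, the per-step storage into the memory pool, and the `ppo_update` hook are all hypothetical stand-ins, and no actual PPO update is implemented.

```python
import random


class ToyEnv:
    """Toy stand-in for the environment; not the paper's simulator."""
    def reset(self):
        self.t = 0
        return self.t

    def apply(self, action):          # structural change, no reward
        self.t += 1
        return self.t

    def step(self, action):           # evaluation step, returns (state, reward)
        self.t += 1
        return self.t, float(action)


class ToyPolicy:
    """Toy stand-in that samples binary actions at random."""
    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.updates = 0

    def sample(self, stage, state):
        return self.rng.randint(0, 1)


def self_evolving_loop(policy, env, max_iterations, batch_size,
                       compose_steps, eval_steps, ppo_update):
    """Three-stage loop; ppo_update(policy, memory) is a caller-supplied hook."""
    if compose_steps + eval_steps <= 0:
        raise ValueError("at least one composition or evaluation step is required")
    for _ in range(max_iterations):
        memory = []                                    # memory pool M (assumed reset per iteration)
        state = env.reset()
        action = policy.sample("decompose", state)     # stage 1: split into microservices
        state = env.apply(action)
        memory.append(("decompose", action, None))
        while len(memory) < batch_size:
            for _ in range(compose_steps):             # stage 2: elements and topology
                action = policy.sample("compose", state)
                state = env.apply(action)
                memory.append(("compose", action, None))
            for _ in range(eval_steps):                # stage 3: service chain and reward
                action = policy.sample("evaluate", state)
                state, reward = env.step(action)
                memory.append(("evaluate", action, reward))
        policy = ppo_update(policy, memory)            # update from samples in M
    return policy
```

Composition transitions are stored with no reward of their own, which mirrors the statement that the composition stage is supervised by the reward of the evaluation stage.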

7. Conclusions

In this paper, we propose a self-evolution architecture for the 6G core network, designed to accommodate diverse and constantly changing scenarios through intelligent decision making. Our contributions include elucidating the core ideas and mechanisms of 6G core network self-evolution, designing a pipeline, and introducing a reinforcement learning-based self-evolving agent along with the corresponding algorithm. Through experimental simulations, we have validated the effectiveness of our self-evolution algorithm, demonstrating that it gives the agent decision-making capabilities and good adaptability to the environment. For future work, we consider three points. First, we have discussed the evolving approaches for the Function, F, and Topology, T, of a network element; however, we have not explicitly addressed the Protocol, P. When the NFs are reconstructed, the corresponding core network protocols, which can be regarded as a conversation between NFs, should also be regenerated automatically. AIGC (AI-Generated Content) provides potential mechanisms for this. Second, considering the physical or virtual environment of the Edge Core Network, the physical deployment of evolved network function instances should also be studied. Finally, a multi-agent evolution algorithm for the joint optimization of the Cloud Core Networks and Edge Core Networks will also be studied.