Efficient and Lightweight Visual Tracking with Differentiable Neural Architecture Search

Abstract

1. Introduction

- We propose TrackNAS, a new Siamese tracker built with single-path one-shot NAS and differentiable NAS, which makes it easier to deploy on resource-constrained devices.

- We design a series of search spaces and search strategies to find promising network architectures for visual tracking, reducing the computational burden while improving tracking performance.

- Extensive experiments on several large-scale datasets demonstrate the efficiency and effectiveness of our approach. TrackNAS achieves state-of-the-art performance while requiring little storage and computation (2.1 M parameters and 0.6 GFLOPs).
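The two search paradigms named in the contributions can be illustrated with a toy example. The following is a minimal sketch, not the TrackNAS implementation: the candidate operations, their names, and the scalar input are hypothetical stand-ins for real convolutional cells. It shows how differentiable NAS blends all candidates with softmax weights over architecture parameters, while single-path one-shot NAS samples one candidate uniformly per step.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidate operations standing in for convolutional cells.
CANDIDATE_OPS = {
    "conv_a": lambda x: 2.0 * x,
    "conv_b": lambda x: x + 1.0,
    "skip": lambda x: x,
}


def mixed_op(x, alphas):
    """Differentiable NAS: softmax-weighted sum of every candidate's output."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, CANDIDATE_OPS.values()))


def single_path_op(x, rng):
    """Single-path one-shot NAS: uniformly sample one candidate per step."""
    name = rng.choice(sorted(CANDIDATE_OPS))
    return name, CANDIDATE_OPS[name](x)


def derive_op(alphas):
    """After the search, keep the candidate with the largest architecture weight."""
    names = list(CANDIDATE_OPS)
    best = max(range(len(alphas)), key=lambda i: alphas[i])
    return names[best]
```

In a real supernet the architecture parameters `alphas` would be trained by gradient descent alongside the network weights; here they are plain lists so the example stays dependency-free.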

2. Related Work

2.1. Neural Architecture Search

2.2. Siamese Trackers

3. Proposed Approach

3.1. Search Space

3.1.1. Basic Convolutional Cell

3.1.2. Search Space for Backbone Network Architecture

3.1.3. Search Space for Head Network Architecture

3.2. Search Strategy

3.2.1. Pretraining of Backbone Supernet

3.2.2. Distributed Search for Backbone and Head Network Architectures

3.2.3. Joint Search for Tracking Network Architecture

3.2.4. Lightweighting of Tracking Network Architecture

3.2.5. Retraining of Tracking Network Architecture

4. Experiments

4.1. Implementation Details

4.2. Tracking Datasets

4.3. Performance Metrics
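The results tables report overlap-based scores such as SR and AO. As a rough illustration of how scores of this kind are commonly computed, the sketch below derives intersection-over-union for `(x, y, w, h)` boxes, the average overlap, and a success rate at a fixed threshold. The box format, the function names, and the threshold of 0.5 are assumptions; each benchmark defines its own exact protocol.

```python
def iou(box_a, box_b):
    """Intersection-over-union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def average_overlap(predictions, ground_truths):
    """Mean IoU over all frames of a sequence."""
    overlaps = [iou(p, g) for p, g in zip(predictions, ground_truths)]
    return sum(overlaps) / len(overlaps)


def success_rate(predictions, ground_truths, threshold=0.5):
    """Fraction of frames whose IoU exceeds the threshold (0.5 is assumed)."""
    overlaps = [iou(p, g) for p, g in zip(predictions, ground_truths)]
    return sum(1 for o in overlaps if o > threshold) / len(overlaps)
```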

4.4. Comparisons with State-of-the-Art Trackers

4.4.1. Results on OTB100

4.4.2. Results on UAV123

4.4.3. Results on LaSOT

4.4.4. Results on GOT-10k

4.4.5. Results on Efficiency Analysis

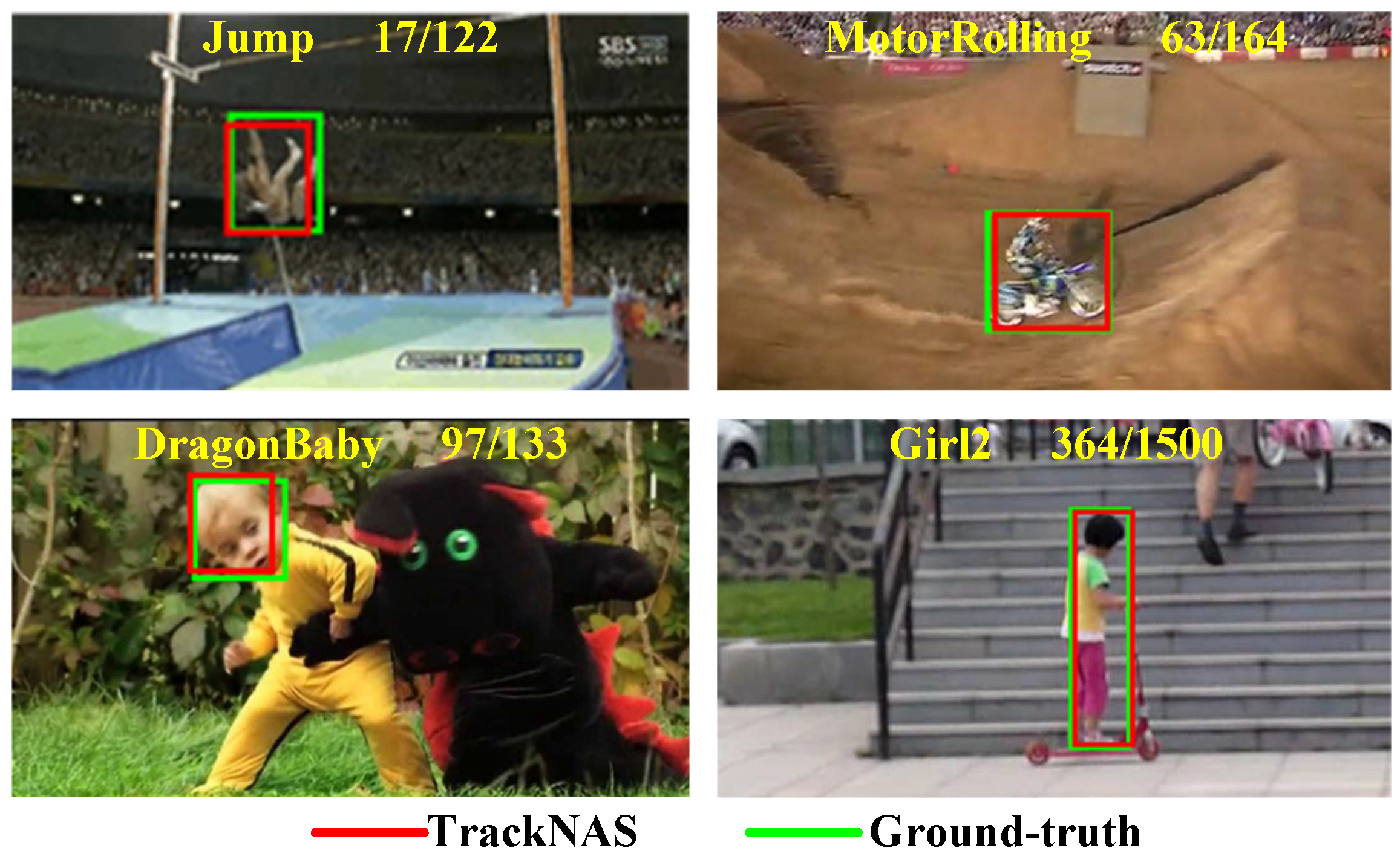

4.4.6. Visualized Results

4.5. Ablation Studies

4.5.1. Methodology

4.5.2. Search Space

4.5.3. Search Strategy

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Javed, S.; Danelljan, M.; Khan, F.S.; Khan, M.H.; Felsberg, M.; Matas, J. Visual object tracking with discriminative filters and siamese networks: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 6552–6574.
2. Marvasti-Zadeh, S.M.; Cheng, L.; Ghanei-Yakhdan, H.; Kasaei, S. Deep learning for visual tracking: A comprehensive survey. IEEE Trans. Intell. Transp. Syst. 2022, 23, 3943–3968.
3. Ondrašovič, M.; Tarábek, P. Siamese visual object tracking: A survey. IEEE Access 2021, 9, 110149–110172.
4. Tao, R.; Gavves, E.; Smeulders, A.W.M. Siamese Instance Search for Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1420–1429.
5. Bertinetto, L.; Valmadre, J.; Henriques, J.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–10 October 2016; pp. 850–865.
6. Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High Performance Visual Tracking With Siamese Region Proposal Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8971–8980.
7. Gao, P.; Ma, Y.; Yuan, R.; Xiao, L.; Wang, F. Learning Cascaded Siamese Networks for High Performance Visual Tracking. In Proceedings of the International Conference on Image Processing, Chinese Taipei, China, 22–25 September 2019; pp. 3078–3082.
8. Bao, J.; Yan, M.; Yang, Y.; Chen, K. SiamFFN: Siamese Feature Fusion Network for Visual Tracking. Electronics 2023, 12, 1568.
9. Gao, P.; Yuan, R.; Wang, F.; Xiao, L.; Fujita, H.; Zhang, Y. Siamese attentional keypoint network for high performance visual tracking. Knowl.-Based Syst. 2020, 193, 105448.
10. Cui, Y.; Guo, D.; Shao, Y.; Wang, Z.; Shen, C.; Zhang, L.; Chen, S. Joint Classification and Regression for Visual Tracking with Fully Convolutional Siamese Networks. Int. J. Comput. Vis. 2022, 130, 550–566.
11. Li, J.; Zhang, K.; Gao, Z.; Yang, L.; Zhuo, L. SiamPRA: An Effective Network for UAV Visual Tracking. Electronics 2023, 12, 2374.
12. Gao, P.; Zhang, Q.; Wang, F.; Xiao, L.; Fujita, H.; Zhang, Y. Learning reinforced attentional representation for end-to-end visual tracking. Inf. Sci. 2020, 517, 52–67.
13. Chen, X.; Peng, H.; Wang, D.; Lu, H.; Hu, H. SeqTrack: Sequence to Sequence Learning for Visual Object Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 14572–14581.
14. Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. Siamfc++: Towards robust and accurate visual tracking with target estimation guidelines. AAAI Conf. Artif. Intell. 2020, 34, 12549–12556.
15. Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. Siamrpn++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4282–4291.
16. Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6182–6191.
17. Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese box adaptive network for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 6668–6677.
18. Guo, D.; Shao, Y.; Cui, Y.; Wang, Z.; Zhang, L.; Shen, C. Graph Attention Tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 9538–9547.
19. Zhang, Z.; Peng, H.; Fu, J.; Li, B.; Hu, W. Ocean: Object-aware anchor-free tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, Scotland, UK, 23–28 August 2020; pp. 771–787.
20. Cheng, S.; Zhong, B.; Li, G.; Liu, X.; Tang, Z.; Li, X.; Wang, J. Learning to filter: Siamese relation network for robust tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 4421–4431.
21. Lin, L.; Fan, H.; Zhang, Z.; Xu, Y.; Ling, H. Swintrack: A simple and strong baseline for transformer tracking. Adv. Neural Inf. Process. Syst. 2022, 35, 16743–16754.
22. Cui, Y.; Jiang, C.; Wang, L.; Wu, G. Mixformer: End-to-end tracking with iterative mixed attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 13608–13618.
23. Song, Z.; Yu, J.; Chen, Y.P.P.; Yang, W. Transformer tracking with cyclic shifting window attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 8791–8800.
24. Lan, J.P.; Cheng, Z.Q.; He, J.Y.; Li, C.; Luo, B.; Bao, X.; Xiang, W.; Geng, Y.; Xie, X. Procontext: Exploring progressive context transformer for tracking. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 4–9 October 2023; pp. 1–5.
25. Zhang, Z.; Peng, H. Deeper and wider siamese networks for real-time visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4591–4600.
26. Cao, Z.; Fu, C.; Ye, J.; Li, B.; Li, Y. Hift: Hierarchical feature transformer for aerial tracking. In Proceedings of the International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2021; pp. 15457–15466.
27. Xing, D.; Evangeliou, N.; Tsoukalas, A.; Tzes, A. Siamese transformer pyramid networks for real-time UAV tracking. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2022; pp. 2139–2148.
28. Zhao, M.; Okada, K.; Inaba, M. Trtr: Visual tracking with transformer. arXiv 2021, arXiv:2105.03817.
29. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.
30. Fu, C.; Lu, K.; Zheng, G.; Ye, J.; Cao, Z.; Li, B. Siamese object tracking for unmanned aerial vehicle: A review and comprehensive analysis. arXiv 2022, arXiv:2205.04281.
31. Zoph, B.; Le, Q. Neural Architecture Search with Reinforcement Learning. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016; pp. 1–16.
32. Pham, H.; Guan, M.; Zoph, B.; Le, Q.; Dean, J. Efficient neural architecture search via parameters sharing. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 4095–4104.
33. Cai, H.; Zhu, L.; Han, S. Proxylessnas: Direct neural architecture search on target task and hardware. arXiv 2018, arXiv:1812.00332.
34. Liu, H.; Simonyan, K.; Yang, Y. Darts: Differentiable architecture search. arXiv 2018, arXiv:1806.09055.
35. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.
36. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.
37. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.
38. Ghimire, D.; Kil, D.; Kim, S.H. A survey on efficient convolutional neural networks and hardware acceleration. Electronics 2022, 11, 945.
39. Song, Y.; Wang, T.; Cai, P.; Mondal, S.K.; Sahoo, J.P. A comprehensive survey of few-shot learning: Evolution, applications, challenges, and opportunities. ACM Comput. Surv. 2023, 55, 271.
40. Cai, H.; Lin, J.; Lin, Y.; Liu, Z.; Tang, H.; Wang, H.; Zhu, L.; Han, S. Enable deep learning on mobile devices: Methods, systems, and applications. ACM Trans. Des. Autom. Electron. Syst. 2022, 27, 1–50.
41. Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8697–8710.
42. Real, E.; Aggarwal, A.; Huang, Y.; Le, Q.V. Regularized evolution for image classifier architecture search. AAAI Conf. Artif. Intell. 2019, 33, 4780–4789.
43. Zheng, X.; Ji, R.; Tang, L.; Zhang, B.; Liu, J.; Tian, Q. Multinomial distribution learning for effective neural architecture search. In Proceedings of the International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1304–1313.
44. Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305.
45. Bergstra, J.; Bardenet, R.; Bengio, Y.; Kégl, B. Algorithms for hyper-parameter optimization. Adv. Neural Inf. Process. Syst. 2011, 24, 1–9.
46. Real, E.; Moore, S.; Selle, A.; Saxena, S.; Suematsu, Y.L.; Tan, J.; Le, Q.V.; Kurakin, A. Large-scale evolution of image classifiers. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2902–2911.
47. Baker, B.; Gupta, O.; Naik, N.; Raskar, R. Designing neural network architectures using reinforcement learning. arXiv 2016, arXiv:1611.02167.
48. Marvasti-Zadeh, S.M.; Khaghani, J.; Cheng, L.; Ghanei-Yakhdan, H.; Kasaei, S. Chase: Robust visual tracking via cell-level differentiable neural architecture search. arXiv 2021, arXiv:2107.03463.
49. Guo, Z.; Zhang, X.; Mu, H.; Heng, W.; Liu, Z.; Wei, Y.; Sun, J. Single path one-shot neural architecture search with uniform sampling. In Proceedings of the European Conference on Computer Vision, Glasgow, Scotland, UK, 23–28 August 2020; pp. 544–560.
50. Cai, H.; Gan, C.; Wang, T.; Zhang, Z.; Han, S. Once-for-all: Train one network and specialize it for efficient deployment. In Proceedings of the International Conference on Learning Representations, Virtual, 26 April–1 May 2020; pp. 1–15.
51. Stamoulis, D.; Ding, R.; Wang, D.; Lymberopoulos, D.; Priyantha, B.; Liu, J.; Marculescu, D. Single-path nas: Designing hardware-efficient convnets in less than 4 hours. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Wurzburg, Germany, 16–20 September 2019; pp. 481–497.
52. Ghiasi, G.; Lin, T.Y.; Le, Q.V. Nas-fpn: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7036–7045.
53. Liu, C.; Chen, L.C.; Schroff, F.; Adam, H.; Hua, W.; Yuille, A.L.; Fei-Fei, L. Auto-deeplab: Hierarchical neural architecture search for semantic image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 82–92.
54. Liu, S.; Kong, W.; Chen, X.; Xu, M.; Yasir, M.; Zhao, L.; Li, J. Multi-scale ship detection algorithm based on a lightweight neural network for spaceborne SAR images. Remote Sens. 2022, 14, 1149.
55. Li, W.; Zhang, L.; Wu, C.; Cui, Z.; Niu, C. A new lightweight deep neural network for surface scratch detection. Int. J. Adv. Manuf. Technol. 2022, 123, 1999–2015.
56. Bromley, J.; Guyon, I.; LeCun, Y.; Säckinger, E.; Shah, R. Signature verification using a “siamese” time delay neural network. Adv. Neural Inf. Process. Syst. 1993, 6, 1–8.
57. Thangavel, J.; Kokul, T.; Ramanan, A.; Fernando, S. Transformers in Single Object Tracking: An Experimental Survey. arXiv 2023, arXiv:2302.11867.
58. He, A.; Luo, C.; Tian, X.; Zeng, W. A twofold siamese network for real-time object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4834–4843.
59. Wang, Q.; Teng, Z.; Xing, J.; Gao, J.; Hu, W.; Maybank, S. Learning attentions: Residual attentional Siamese Network for high performance online visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4854–4863.
60. Zhang, Y.; Wang, L.; Qi, J.; Wang, D.; Feng, M.; Lu, H. Structured siamese network for real-time visual tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 351–366.
61. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.
62. Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-Aware Siamese Networks for Visual Object Tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 103–119.
63. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.
64. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.
65. Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast online object tracking and segmentation: A unifying approach. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1328–1338.
66. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
67. Wang, G.; Luo, C.; Xiong, Z.; Zeng, W. Spm-tracker: Series-parallel matching for real-time visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3643–3652.
68. Fan, H.; Ling, H. Siamese cascaded region proposal networks for real-time visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7952–7961.
69. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.
70. Yan, B.; Peng, H.; Wu, K.; Wang, D.; Fu, J.; Lu, H. LightTrack: Finding lightweight neural networks for object tracking via one-shot architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 15180–15189.
71. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.
72. Ramachandran, P.; Zoph, B.; Le, Q.V. Searching for activation functions. arXiv 2017, arXiv:1710.05941.
73. Real, E.; Shlens, J.; Mazzocchi, S.; Pan, X.; Vanhoucke, V. Youtube-boundingboxes: A large high-precision human-annotated data set for object detection in video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5296–5305.
74. Huang, L.; Zhao, X.; Huang, K. Got-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577.
75. Wu, Y.; Lim, J.; Yang, M.H. Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.
76. Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for uav tracking. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2016; pp. 445–461.
77. Fan, H.; Bai, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Huang, M.; Liu, J.; Xu, Y.; et al. Lasot: A high-quality large-scale single object tracking benchmark. Int. J. Comput. Vis. 2021, 129, 439–461.
78. Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Čehovin, L.; Vojir, T.; Bhat, G.; Lukezic, A.; Eldesokey, A.; et al. The Sixth Visual Object Tracking VOT2018 Challenge Results. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.
79. Wu, Y.; Lim, J.; Yang, M.H. Online object tracking: A benchmark. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–27 June 2013; pp. 2411–2418.
80. Čehovin, L.; Leonardis, A.; Kristan, M. Visual Object Tracking Performance Measures Revisited. IEEE Trans. Image Process. 2016, 25, 1261–1274.
81. Kristan, M.; Matas, J.; Leonardis, A.; Vojíř, T.; Pflugfelder, R.; Fernández, G.; Nebehay, G.; Porikli, F.; Čehovin, L. A Novel Performance Evaluation Methodology for Single-Target Trackers. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2137–2155.

| Basic Cells | Kernel Sizes | Strides |
|---|---|---|
| Standard Conv | , , | 1, 2 |
| MBConv | , , | 1, 2 |

| Basic Cells | Kernel Sizes | Dimensions | Strides |
|---|---|---|---|
| Standard Conv | , , | 128, 192, 256 | 1, 2 |
| DSConv | , | 128, 192, 256 | 1 |
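To make the cost difference between the two head cells concrete, the sketch below counts weights for a standard convolution and a depthwise separable convolution (DSConv) at the channel dimensions listed in the table. The kernel sizes did not survive in the table, so the 3 × 3 kernel used here is an assumption, as is setting the input and output widths equal; biases and normalization layers are ignored.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights of a standard k x k convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def dsconv_params(kernel, c_in, c_out):
    """Weights of a depthwise k x k conv followed by a pointwise 1 x 1 conv."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


KERNEL = 3  # assumed; the kernel sizes are missing from the table

for dim in (128, 192, 256):  # channel dimensions from the head search space
    std = standard_conv_params(KERNEL, dim, dim)
    ds = dsconv_params(KERNEL, dim, dim)
    print(f"dim={dim}: standard={std}, dsconv={ds}, ratio={std / ds:.1f}x")
```

Under these assumptions the separable cell needs roughly an order of magnitude fewer weights than the standard cell at every listed dimension, which is why such cells are attractive in a lightweight search space.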

| Datasets | OTB100 | VOT2018 | UAV123 | LaSOT | GOT-10k |
|---|---|---|---|---|---|
| Video Sequences | 100 | 60 | 123 | Total: 1400; Training: 1120; Test: 280 | Total: 10 k; Training: 9.34 k; Test: 420 |
| Total Video Frames (Annotations) | 58.61 k | 21.356 k | 113.476 k | Total: 3.52 M; Training: 2.8 M; Test: 685 k | Total: 1.5 M; Training: 1.4 M; Test: 56 k |
| Classes | 22 | 41 | 9 | 70 | Total: 563; Training: 480; Test: 84 |
| Minimum Frames | 71 | 24 | 109 | 1000 | 51 |
| Maximum Frames | 3872 | 1500 | 3085 | 11,397 | 920 |
| Average Resolution | 356 × 530 | 758 × 465 | 1231 × 699 | 632 × 1089 | 929 × 1638 |
| Frame Rate | 30 FPS | 30 FPS | 30 FPS | 30 FPS | 10 FPS |

| Trackers | OTB100 [75] SR ↑ | OTB100 [75] PR ↑ | UAV123 [76] SR ↑ | UAV123 [76] PR ↑ | LaSOT [77] SR ↑ | LaSOT [77] PR ↑ | GOT-10k [74] AO ↑ | GOT-10k [74] SR ↑ | # Params (M) ↓ | GFLOPs ↓ |
|---|---|---|---|---|---|---|---|---|---|---|
| SiamFC [5] | 0.582 | 0.771 | 0.461 | 0.691 | 0.336 | 0.339 | 0.392 | 0.426 | 2.3 | 2.6 |
| SiamRPN [6] | 0.637 | 0.851 | 0.527 | 0.796 | 0.433 | - | 0.481 | 0.581 | 7.6 | 4.9 |
| SiamDW (R) [25] | 0.670 | 0.892 | 0.536 | 0.776 | 0.385 | 0.389 | 0.429 | 0.483 | 2.5 | 12.9 |
| SiamRPN++ (R) [15] | 0.696 | 0.915 | 0.642 | 0.840 | 0.496 | 0.491 | 0.518 | 0.618 | 53.9 | 48.9 |
| SiamBAN [17] | 0.696 | 0.910 | 0.631 | 0.833 | 0.514 | 0.521 | - | - | 53.9 | 48.8 |
| SiamFC++ (G) [14] | 0.683 | 0.896 | 0.623 | 0.810 | 0.543 | 0.547 | 0.595 | 0.695 | 13.9 | 17.5 |
| Ocean [19] | 0.684 | 0.920 | 0.621 | 0.823 | 0.560 | 0.566 | 0.611 | 0.721 | 37.2 | 20.2 |
| SiamGAT [18] | 0.710 | 0.916 | 0.646 | 0.843 | 0.539 | 0.530 | 0.627 | 0.488 | 14.2 | 17.3 |
| TrTr [28] | 0.712 | 0.931 | 0.633 | 0.839 | 0.527 | 0.544 | - | - | 11.9 | 21.2 |
| HiFT [26] | 0.614 | 0.814 | 0.589 | 0.787 | 0.451 | 0.421 | - | - | 11.0 | 6.5 |
| SiamTPN [27] | 0.702 | 0.902 | 0.636 | 0.823 | 0.581 | 0.578 | 0.576 | 0.441 | 6.2 | 2.1 |
| TrackNAS (Ours) | 0.685 | 0.893 | 0.633 | 0.816 | 0.538 | 0.532 | 0.603 | 0.696 | 2.1 | 0.6 |
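The parameter and GFLOPs columns can be turned into relative costs with a few lines of arithmetic. The snippet below is an illustrative helper (the `COSTS` dictionary and `relative_cost` function are names introduced here, not part of the paper) that copies a handful of rows from the comparison table and expresses each tracker's cost as a multiple of TrackNAS.

```python
# (parameters in millions, GFLOPs) copied from the comparison table.
COSTS = {
    "SiamFC": (2.3, 2.6),
    "SiamRPN++ (R)": (53.9, 48.9),
    "Ocean": (37.2, 20.2),
    "SiamTPN": (6.2, 2.1),
    "TrackNAS": (2.1, 0.6),
}


def relative_cost(name, baseline="TrackNAS"):
    """Return (parameter ratio, GFLOPs ratio) of a tracker versus the baseline."""
    params, gflops = COSTS[name]
    base_params, base_gflops = COSTS[baseline]
    return params / base_params, gflops / base_gflops


for tracker in COSTS:
    p_ratio, f_ratio = relative_cost(tracker)
    print(f"{tracker}: {p_ratio:.1f}x parameters, {f_ratio:.1f}x GFLOPs")
```

For example, the SiamRPN++ (R) row works out to roughly 26 times the parameters and 82 times the GFLOPs of TrackNAS.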

| Trackers | # Params (M) ↓ | GFLOPs ↓ | EAO ↑ | Accuracy ↑ | Robustness ↓ |
|---|---|---|---|---|---|
| TrackNAS | 3.3 | 0.6 | 0.407 | 0.582 | 0.244 |
| TrackNAS | 2.8 | 0.6 | 0.398 | 0.577 | 0.267 |
| TrackNAS | 2.6 | 0.6 | 0.379 | 0.574 | 0.302 |
| TrackNAS | 2.8 | 0.6 | 0.343 | 0.533 | 0.348 |
| TrackNAS | 2.1 | 0.6 | 0.425 | 0.602 | 0.198 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, P.; Liu, X.; Sang, H.-C.; Wang, Y.; Wang, F. Efficient and Lightweight Visual Tracking with Differentiable Neural Architecture Search. Electronics 2023, 12, 3623. https://doi.org/10.3390/electronics12173623
