Enhanced Infrared Detection Algorithm for Weak Targets in Complex Backgrounds

Abstract

1. Introduction

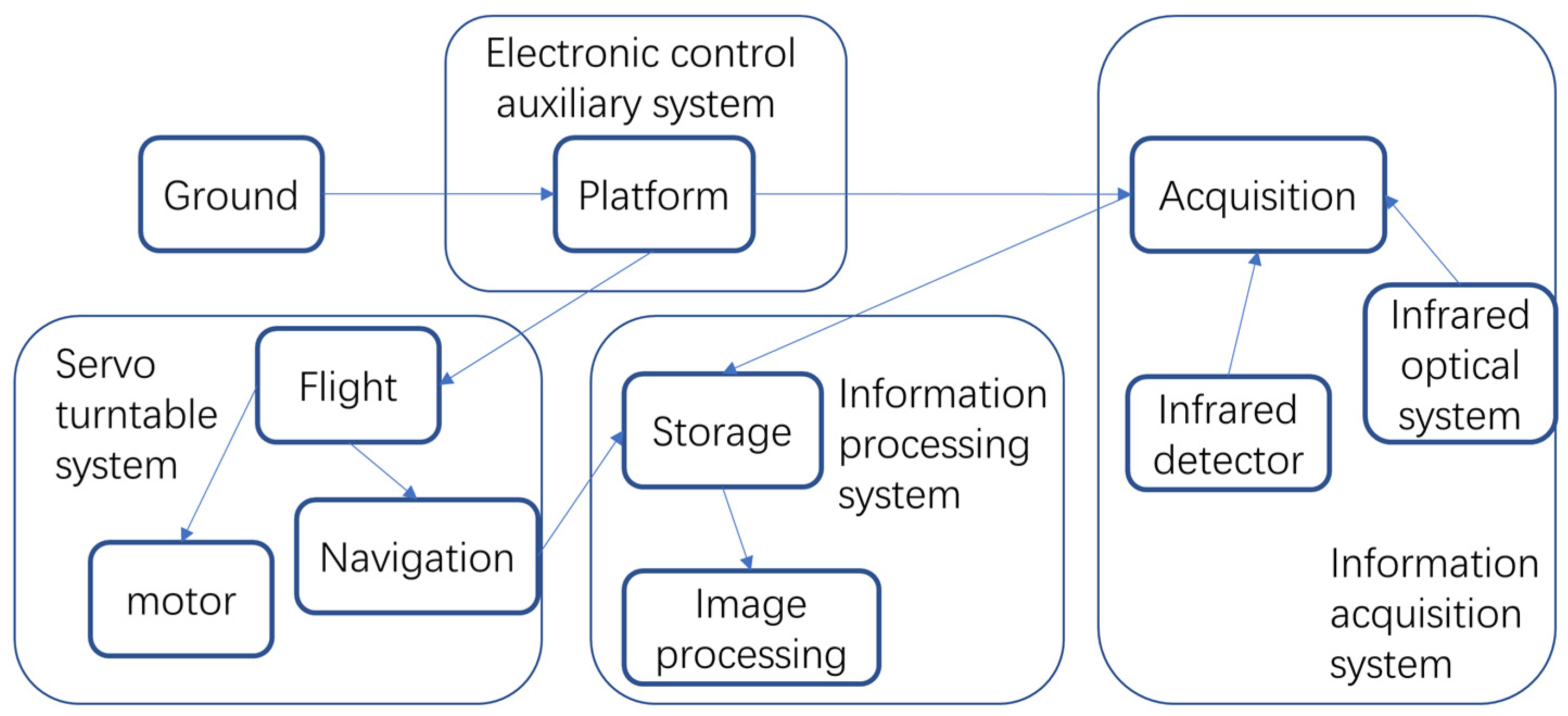

2. Materials and Methods

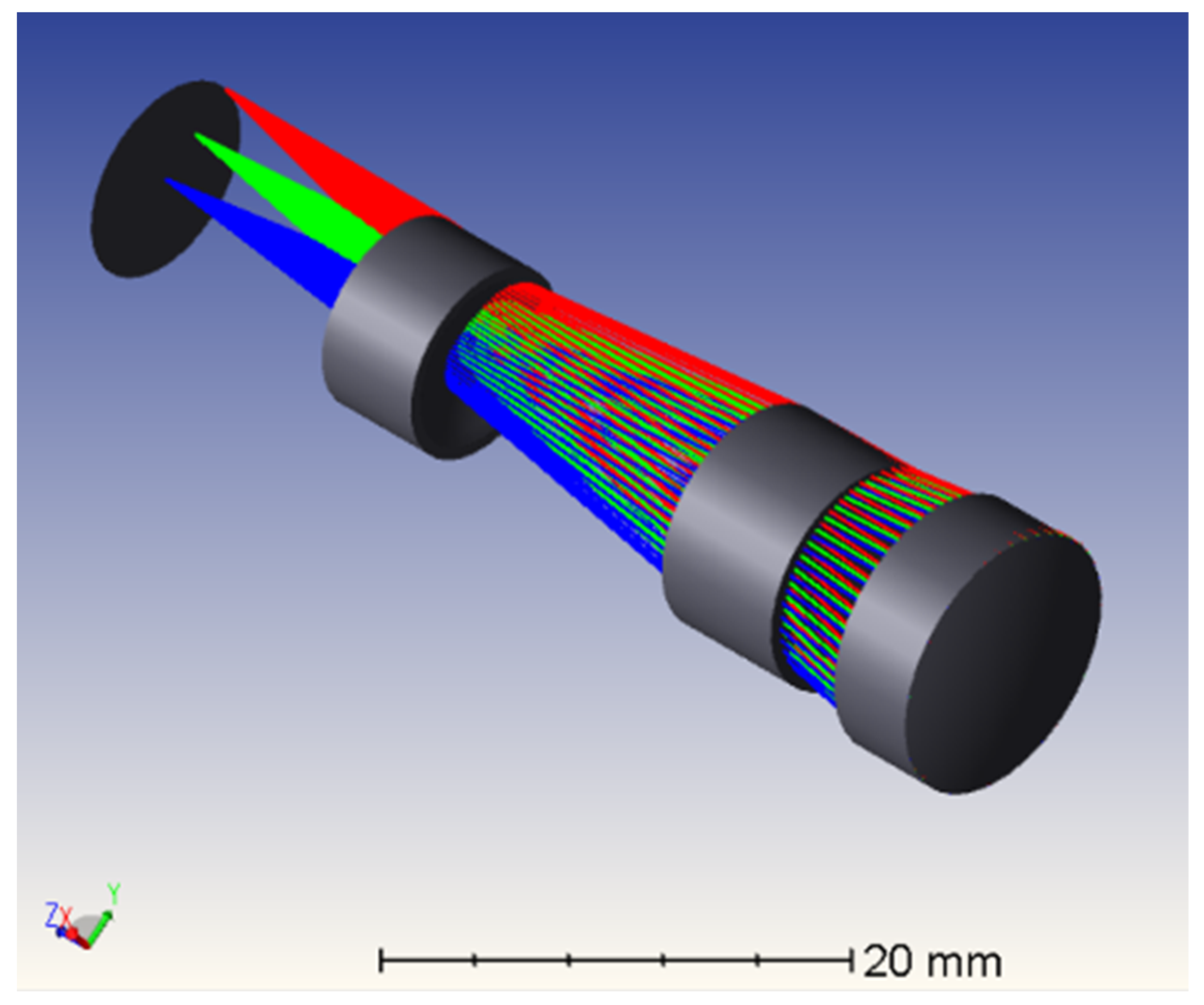

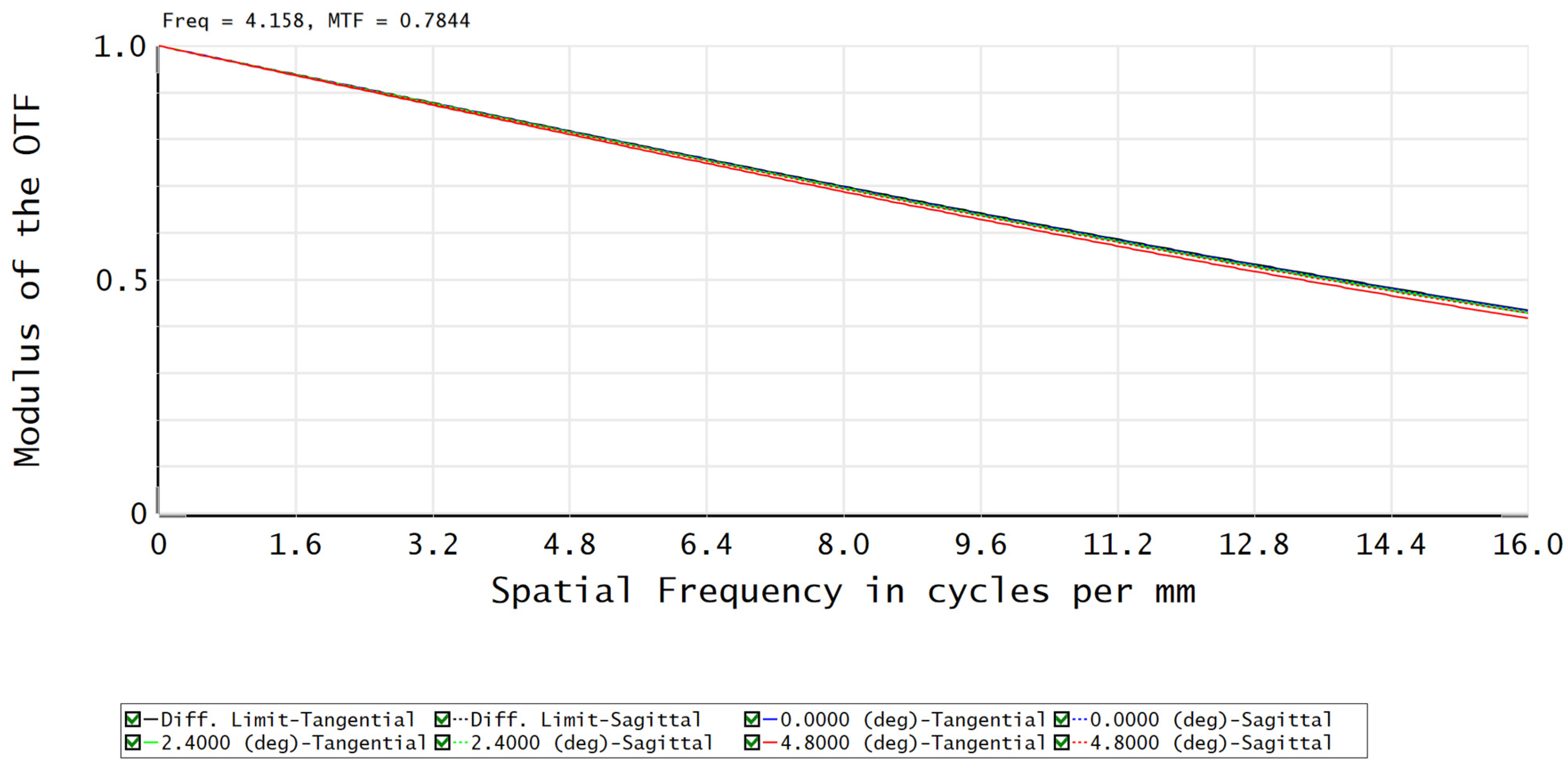

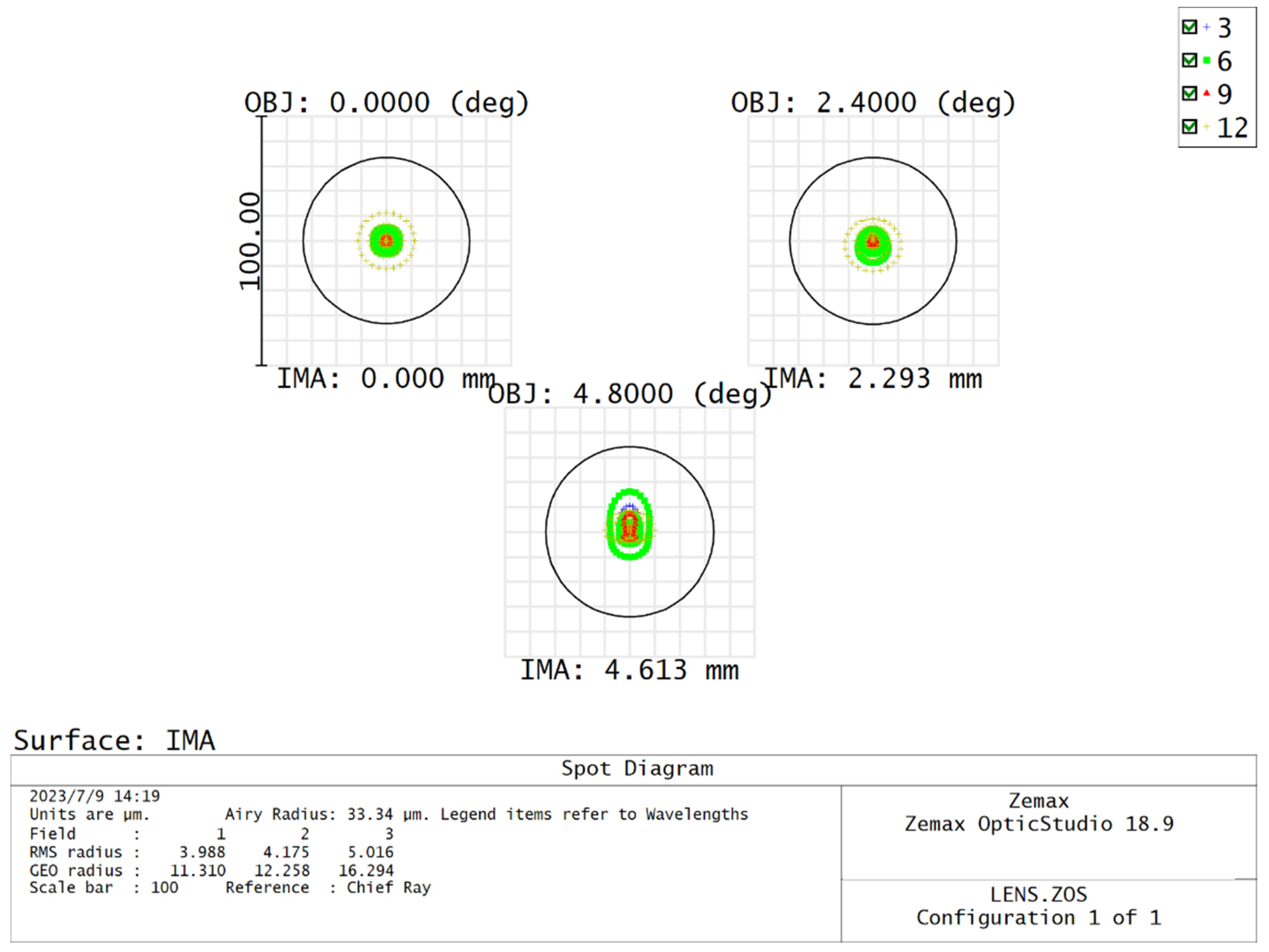

2.1. Infrared Small-Target Acquisition Optical System

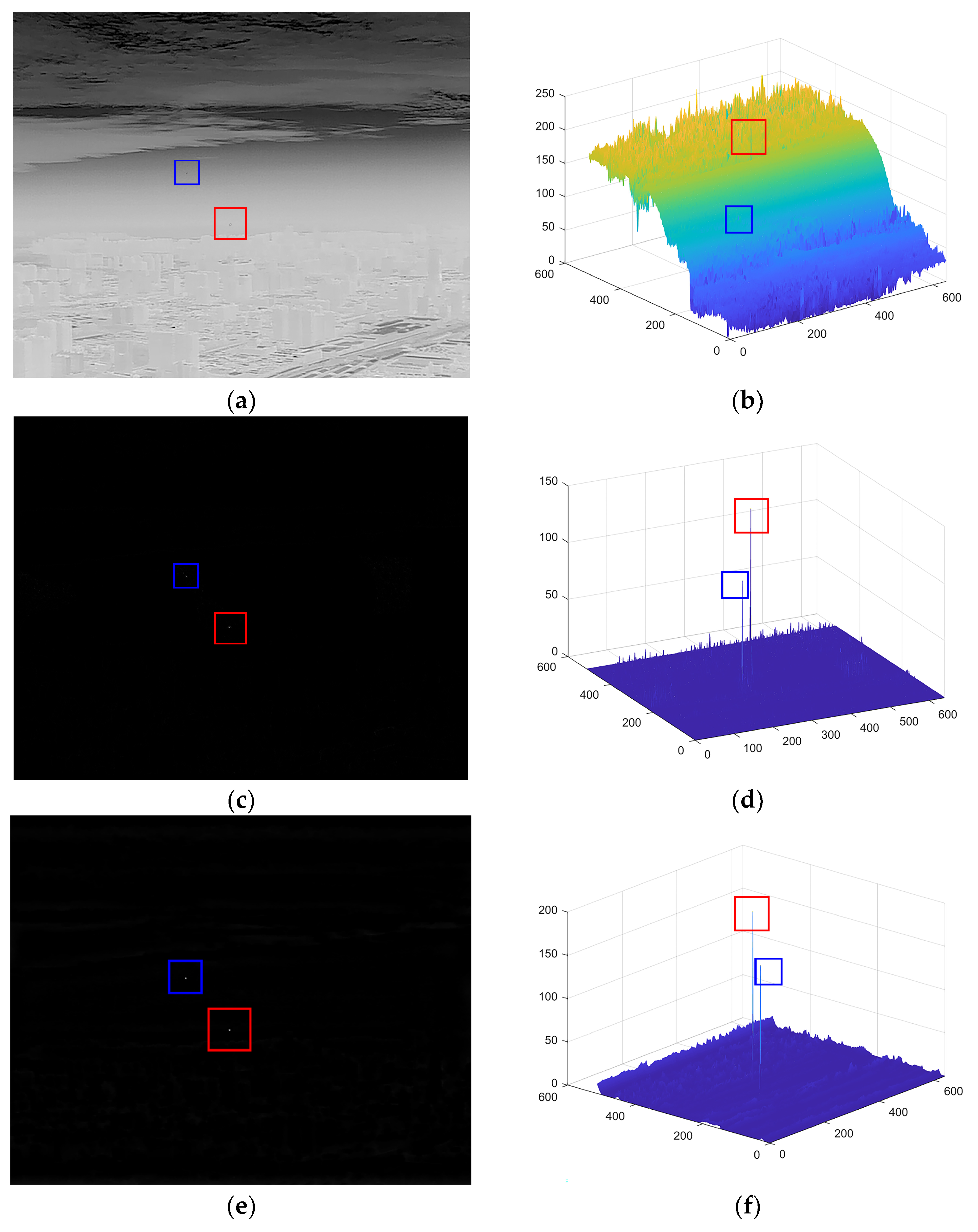

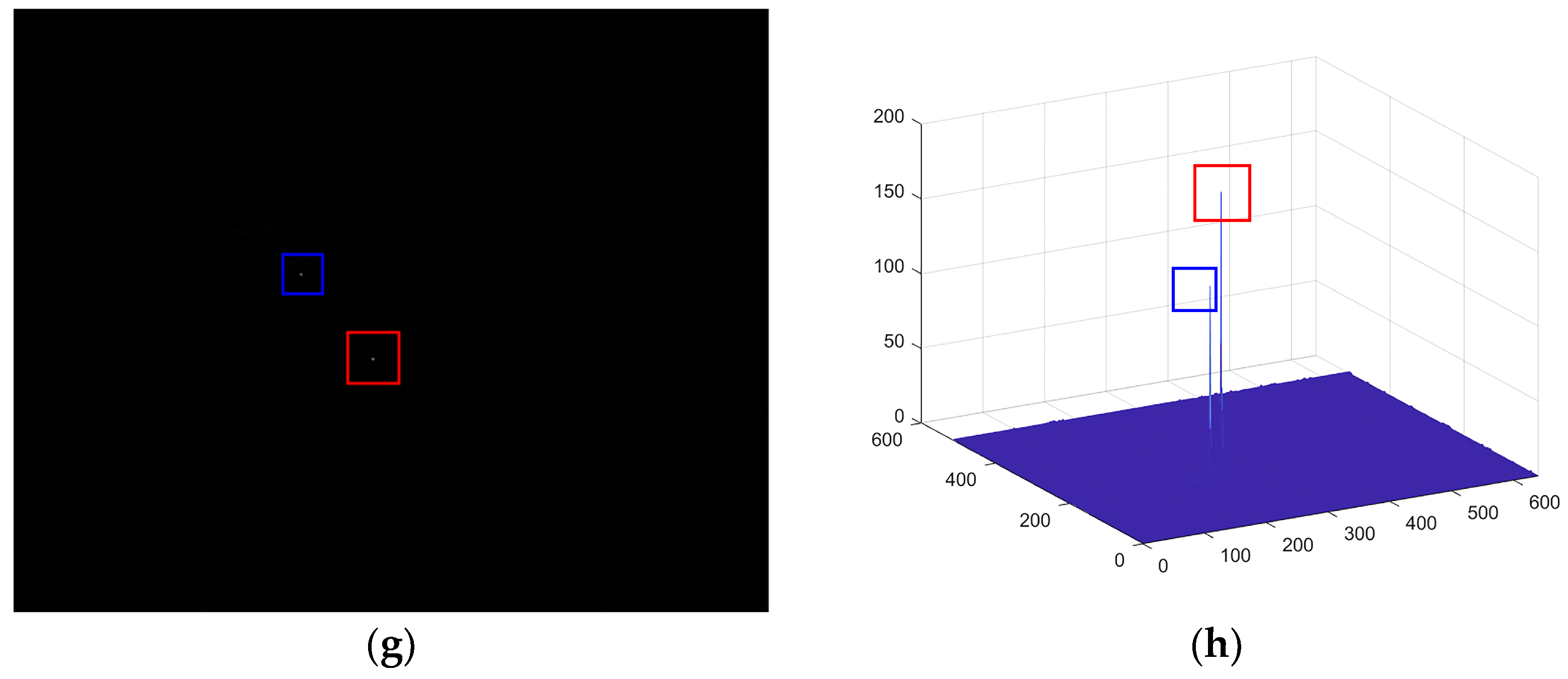

2.2. Infrared Weak Target Enhancement Algorithm

2.2.1. Image Pre-Processing

2.2.2. A-Channel Image Enhancement Methods

Improved Perona–Malik Model
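This heading refers to the Perona–Malik anisotropic diffusion model (Perona and Malik, 1990). The sketch below does not reproduce the authors' improvements. It shows only the classic explicit scheme and assumes a 2-D grayscale NumPy array, the exponential conduction function g(s) = exp(-(s/K)^2), four-neighbour differences and replicated (zero-flux) borders. The names `perona_malik`, `kappa` and `lam` are illustrative and do not come from the paper.

```python
import numpy as np


def perona_malik(image, n_iter=10, kappa=20.0, lam=0.2):
    """Classic Perona-Malik diffusion (illustrative; not the paper's improved model).

    kappa: edge threshold K in the conduction function g(s) = exp(-(s / K)**2).
    lam:   time step; lam <= 0.25 keeps this explicit 4-neighbour scheme stable.
    """
    u = np.asarray(image, dtype=np.float64).copy()
    for _ in range(n_iter):
        # Replicated borders make the difference across the image edge zero (no flux).
        p = np.pad(u, 1, mode="edge")
        dn = p[:-2, 1:-1] - u  # north neighbour minus centre
        ds = p[2:, 1:-1] - u   # south
        de = p[1:-1, 2:] - u   # east
        dw = p[1:-1, :-2] - u  # west
        # Conduction is close to 1 in flat areas and small across strong edges.
        cn = np.exp(-(dn / kappa) ** 2)
        cs = np.exp(-(ds / kappa) ** 2)
        ce = np.exp(-(de / kappa) ** 2)
        cw = np.exp(-(dw / kappa) ** 2)
        u += lam * (cn * dn + cs * ds + ce * de + cw * dw)
    return u
```

Each flux term enters the two pixels it connects with opposite signs, so the scheme conserves the image mean while smoothing low-contrast noise and slowing diffusion across edges whose gradient is large compared with `kappa`.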

Improved Curvature Filtering
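The heading does not say which curvature filter is being improved. For illustration only, the sketch below implements a plain Gaussian-curvature (GC) filter in the style of Gong and Sbalzarini's curvature filters: each pixel is moved onto whichever of eight local tangent planes, built from its 3×3 neighbourhood, requires the smallest change. Pixels are updated in four interleaved subsets so that no two pixels updated together are neighbours. Edge-replicated borders and the names `gc_filter` and `_neighbour` are assumptions.

```python
import numpy as np

# Offsets of the four interleaved pixel subsets (domain decomposition).
_SUBSETS = ((0, 0), (1, 1), (0, 1), (1, 0))


def _neighbour(p, oy, ox, dy, dx, h, w):
    """Neighbour at offset (dy, dx) of every pixel in subset (oy, ox), read from padded p."""
    return p[1 + oy + dy:h + 1 + dy:2, 1 + ox + dx:w + 1 + dx:2]


def gc_filter(image, n_iter=10):
    """Plain Gaussian-curvature filter (illustrative; not the paper's improved filter)."""
    u = np.asarray(image, dtype=np.float64).copy()
    h, w = u.shape
    for _ in range(n_iter):
        for oy, ox in _SUBSETS:
            p = np.pad(u, 1, mode="edge")  # re-pad: earlier subsets have changed u

            def nb(dy, dx):
                return _neighbour(p, oy, ox, dy, dx, h, w)

            c = nb(0, 0)
            n, s, wst, e = nb(-1, 0), nb(1, 0), nb(0, -1), nb(0, 1)
            nw, ne, sw, se = nb(-1, -1), nb(-1, 1), nb(1, -1), nb(1, 1)
            # Signed distances from the pixel to eight local tangent planes.
            d = np.stack([
                (n + s) / 2 - c,
                (wst + e) / 2 - c,
                (nw + se) / 2 - c,
                (ne + sw) / 2 - c,
                n + wst - nw - c,
                n + e - ne - c,
                s + wst - sw - c,
                s + e - se - c,
            ])
            # Apply the smallest-magnitude projection to each pixel in the subset.
            idx = np.argmin(np.abs(d), axis=0)
            u[oy::2, ox::2] += np.take_along_axis(d, idx[None], axis=0)[0]
    return u
```

Planar structure survives this filter. For example, a linear ramp passes through unchanged, but a single bright pixel on a flat background is flattened to the background level in one pass. Keep this behaviour in mind when such a filter is applied to one-pixel targets.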

2.2.3. B-Channel Image Enhancement Methods

Improved Bootstrap Filtering
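"Bootstrap filtering" is assumed here to mean the guided image filter of He, Sun and Tang; this is an interpretation, not something the heading confirms. The sketch shows the classic guided filter, not the improved version. It fits a local linear model q = a·I + b in each (2r+1)×(2r+1) window, with box means computed from an integral image. For simplicity, borders are edge-replicated rather than count-normalised. The names `box_mean`, `guided_filter`, `r` and `eps` are illustrative.

```python
import numpy as np


def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window via an integral image; borders edge-replicated."""
    k = 2 * r + 1
    p = np.pad(np.asarray(x, dtype=np.float64), r, mode="edge")
    s = np.zeros((p.shape[0] + 1, p.shape[1] + 1))
    s[1:, 1:] = p.cumsum(axis=0).cumsum(axis=1)
    total = s[k:, k:] - s[:-k, k:] - s[k:, :-k] + s[:-k, :-k]
    return total / (k * k)


def guided_filter(guide, src, r=4, eps=1e-2):
    """Classic guided filter (illustrative; not the paper's improved filter)."""
    I = np.asarray(guide, dtype=np.float64)
    p = np.asarray(src, dtype=np.float64)
    mean_I = box_mean(I, r)
    mean_p = box_mean(p, r)
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    var_I = box_mean(I * I, r) - mean_I ** 2
    # Per-window linear coefficients of q = a * I + b.
    a = cov_Ip / (var_I + eps)
    b = mean_p - a * mean_I
    # Average the coefficients of all windows that cover each pixel.
    return box_mean(a, r) * I + box_mean(b, r)
```

With the image as its own guide, `eps` controls the trade-off: where local variance is much smaller than `eps` the output approaches a local mean, and where it is much larger, edges are kept. One plausible small-target use is background estimation followed by subtraction, e.g. `residual = img - guided_filter(img, img, r=4, eps=0.01)`.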

Improved Local Contrast Algorithm
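The paper's improved local contrast algorithm is not reproduced here. As a baseline reference, the sketch implements the classic local contrast measure (LCM) of Chen et al. (2014): C = min_i L^2 / m_i, where L is the maximum of the k×k cell centred on a pixel and m_i are the means of the eight surrounding k×k cells. Non-negative intensities, edge-replicated borders, the small `eps` guard and the function name are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_contrast_measure(image, k=3, eps=1e-6):
    """Classic LCM map (illustrative; not the paper's improved algorithm).

    L   : maximum of the k x k cell centred on the pixel.
    m_i : mean of the i-th surrounding k x k cell (offsets of +-k pixels).
    """
    if k % 2 != 1:
        raise ValueError("cell size k must be odd")
    img = np.asarray(image, dtype=np.float64)
    h, w = img.shape
    pad = k + k // 2  # room for the outer ring of cells at the borders
    p = np.pad(img, pad, mode="edge")
    win = sliding_window_view(p, (k, k))  # win[y, x]: k x k block with top-left (y, x)
    cell_max = win.max(axis=(-2, -1))
    cell_mean = win.mean(axis=(-2, -1))
    # The block centred on original pixel (i, j) has top-left (i + k, j + k) in p.
    L = cell_max[k:k + h, k:k + w]
    ratios = []
    for dy in (-k, 0, k):
        for dx in (-k, 0, k):
            if dy == 0 and dx == 0:
                continue  # skip the central cell itself
            m = cell_mean[k + dy:k + dy + h, k + dx:k + dx + w]
            ratios.append(L * L / (m + eps))
    return np.min(ratios, axis=0)
```

In a flat region C equals the local intensity, while a compact bright target surrounded by darker cells gives L^2 / m much larger than L. For instance, a 3×3 blob of value 10 on a background of 1 yields C ≈ 100 at the blob centre and C ≈ 1 on the flat background far from it.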

2.2.4. Two-Channel Fusion with Weighted Superposition
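The heading describes fusing the A-channel and B-channel outputs by weighted superposition, F = w·A + (1 − w)·B. The paper's weights and normalisation are not given here. The sketch therefore makes two assumptions: each channel is first min-max normalised to [0, 1], and the weights sum to 1 so the fused map also stays in [0, 1]. The names `minmax`, `weighted_fusion` and `w_a` are illustrative.

```python
import numpy as np


def minmax(x):
    """Scale an array to [0, 1]; a constant array maps to zeros."""
    x = np.asarray(x, dtype=np.float64)
    span = x.max() - x.min()
    if span == 0:
        return np.zeros_like(x)
    return (x - x.min()) / span


def weighted_fusion(a, b, w_a=0.5):
    """Weighted superposition of two channel outputs (hypothetical weights)."""
    if not 0.0 <= w_a <= 1.0:
        raise ValueError("w_a must lie in [0, 1]")
    if np.shape(a) != np.shape(b):
        raise ValueError("channel outputs must have the same shape")
    return w_a * minmax(a) + (1.0 - w_a) * minmax(b)
```

Normalising before fusion keeps one channel from dominating just because of its numeric range. Moving `w_a` towards 1 favours the A-channel result.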

3. Results

3.1. Sequential Experiments

3.2. Assessment Criteria

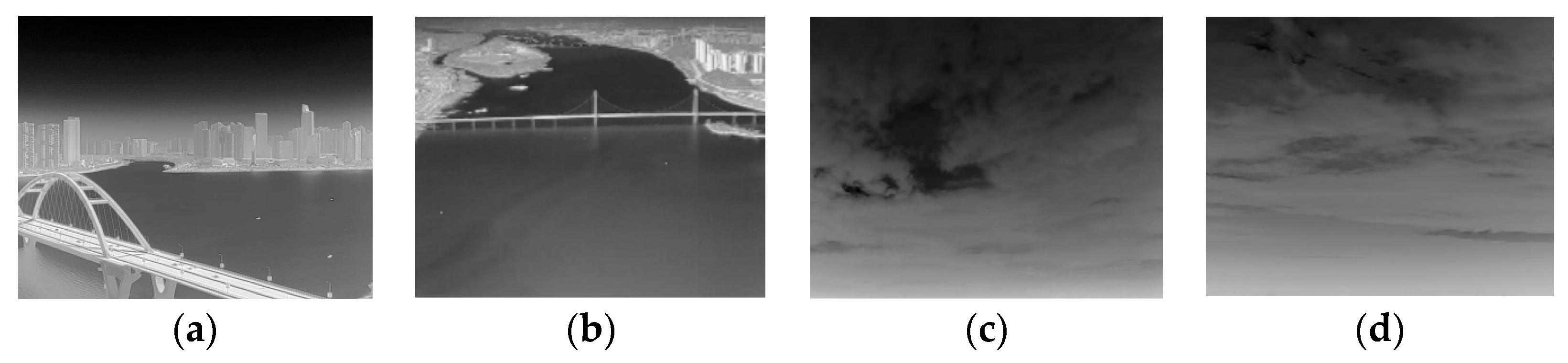

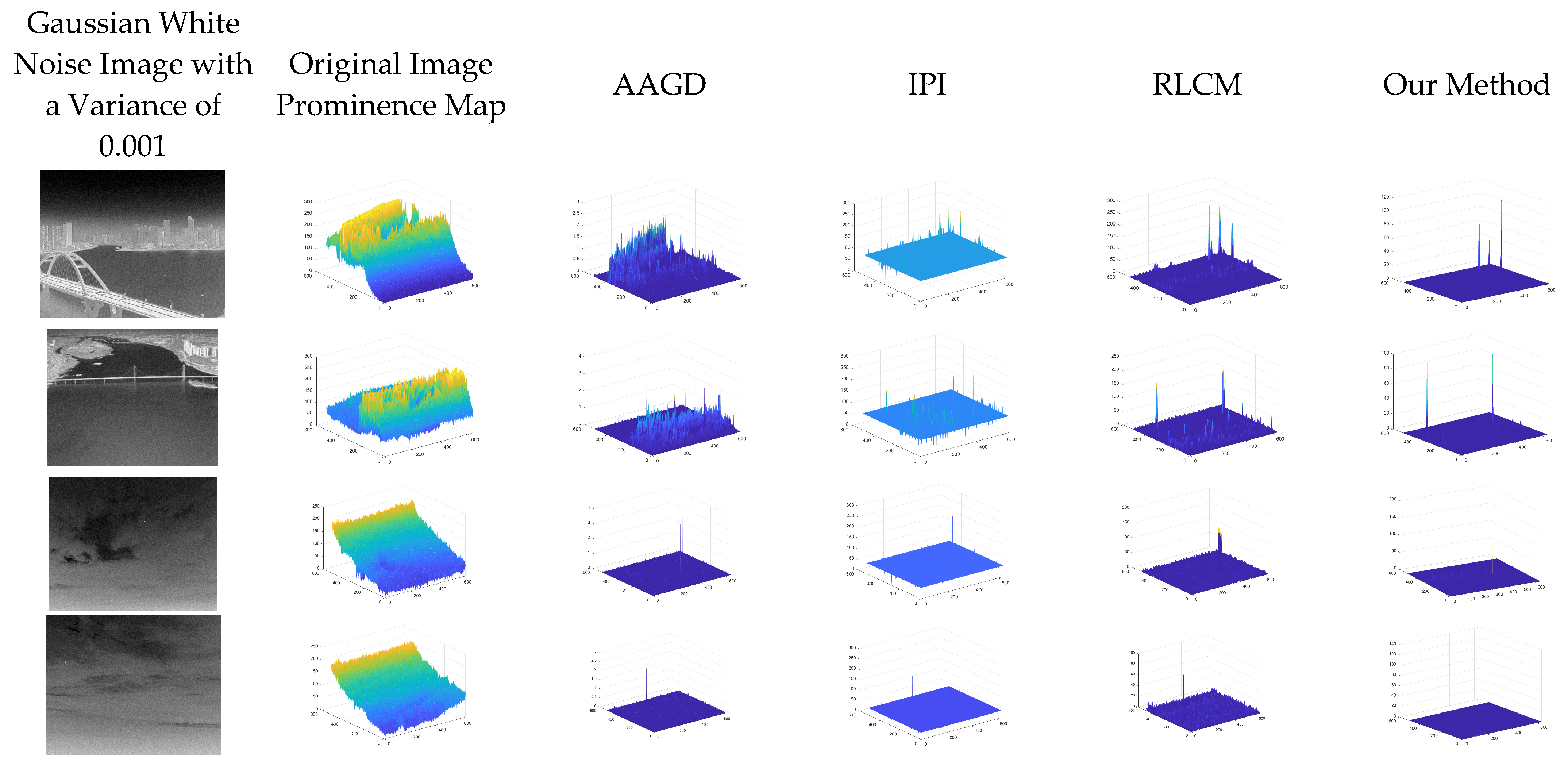

3.3. Comparative Experiments

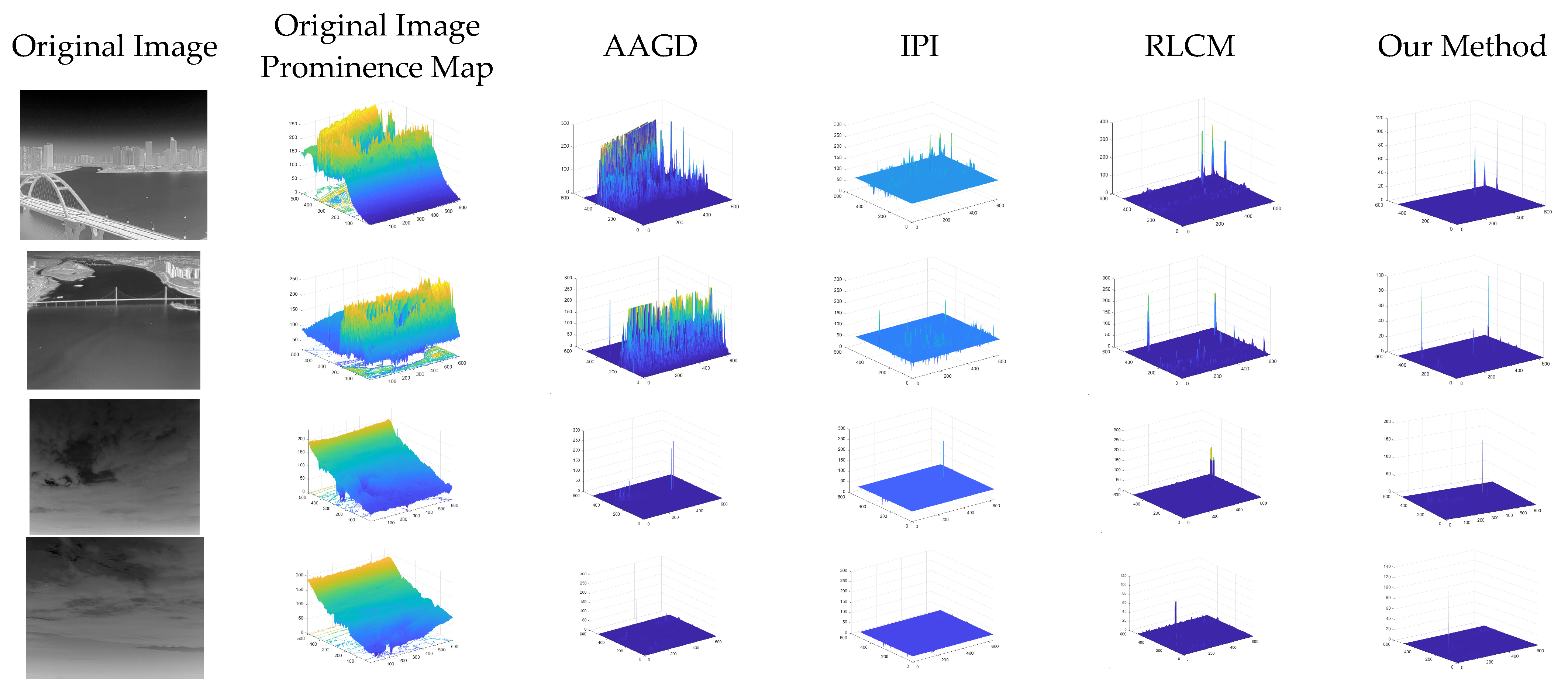

3.4. Ablation Experiments

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| AAGD | IPI | RLCM | Our Method |
|---|---|---|---|
| 0.791 | 0.824 | 0.930 | 0.988 |
| 0.555 | 0.505 | 0.315 | 0.070 |
| 0.135 | 0.323 | 0.576 | 0.893 |
| 0.826 | 0.847 | 0.934 | 0.980 |
| 0.808 | 0.836 | 0.932 | 0.984 |

| Configuration | | | | | |
|---|---|---|---|---|---|
| Improved PM filtering only | 0.957 | 0.063 | 0.798 | 0.952 | 0.954 |
| Improved PM filtering plus improved curvature filtering | 0.957 | 0.063 | 0.903 | 0.952 | 0.954 |
| Improved local contrast algorithm only | 0.939 | 0.069 | 0.695 | 0.943 | 0.941 |
| Improved bootstrap filtering plus improved local contrast algorithm | 0.953 | 0.070 | 0.766 | 0.952 | 0.952 |
| Complete algorithm | 0.993 | 0.079 | 0.893 | 0.980 | 0.984 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zou, Z.; Ma, L.; Yang, S.; Li, Y.; Shi, H.; Fu, Q. Enhanced Infrared Detection Algorithm for Weak Targets in Complex Backgrounds. Electronics 2023, 12, 3671. https://doi.org/10.3390/electronics12173671


Chicago/Turabian Style: Zou, Zhihui, Lianji Ma, Shuai Yang, Yingchao Li, Haodong Shi, and Qiang Fu. 2023. "Enhanced Infrared Detection Algorithm for Weak Targets in Complex Backgrounds" Electronics 12, no. 17: 3671. https://doi.org/10.3390/electronics12173671