A Hierarchical Resource Scheduling Method for Satellite Control System Based on Deep Reinforcement Learning

Abstract

1. Introduction

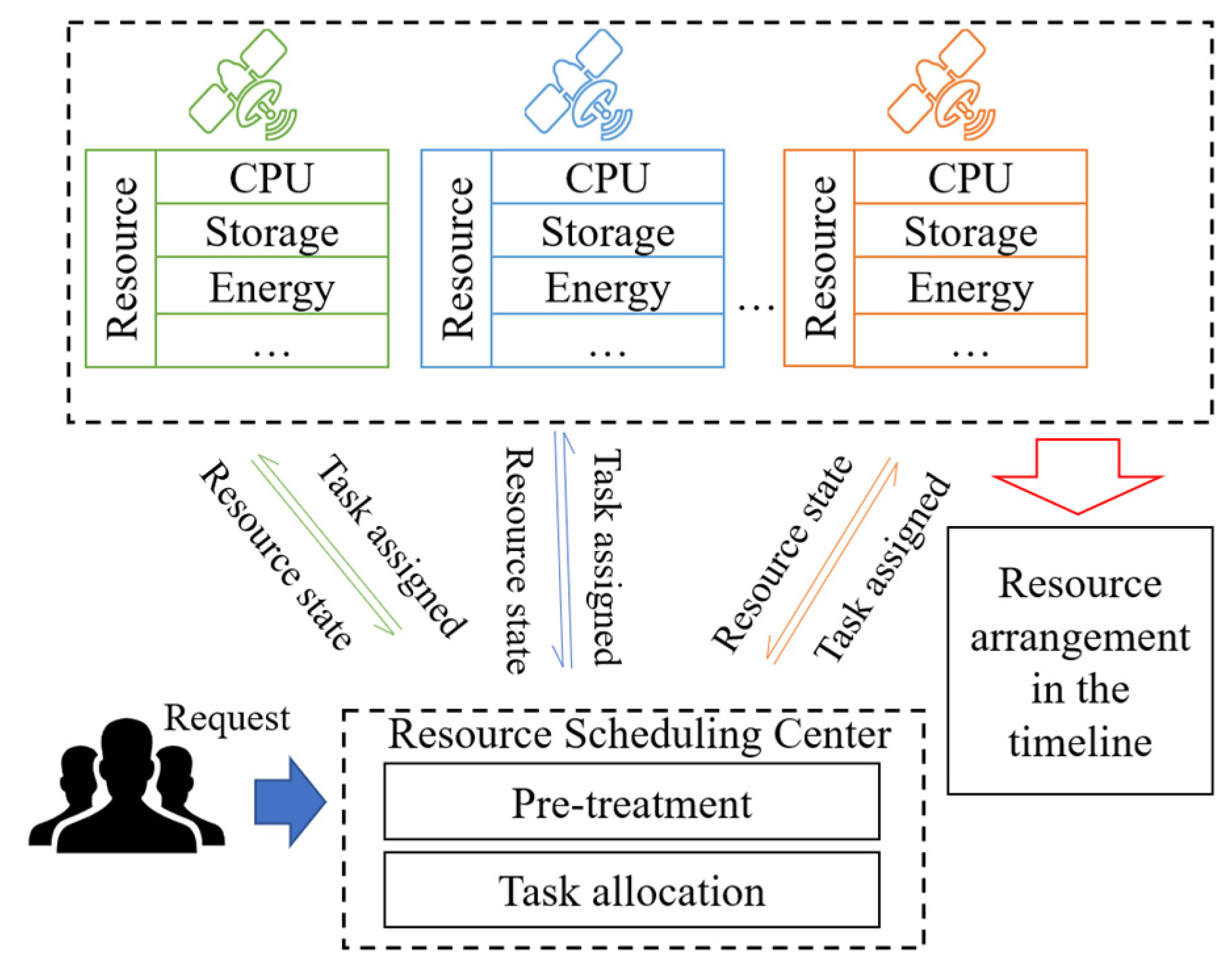

- A hierarchical resource scheduling framework is developed for multi-satellite systems. It decomposes the problem into task allocation and resource arrangement in the timeline, improving the efficiency and effectiveness of resource scheduling. We first design a representation of the satellites and the task requirements. Then, based on the complex constraints among satellites, tasks, and their capabilities, we construct a scheduling model that specifies the optimization objectives, constraints, and assumptions.

- The IACO algorithm is developed to solve the task allocation problem while ensuring load balance across the constellation. Unlike traditional methods that consider the satellite execution time windows, the proposed method considers each satellite’s ability to execute tasks. This reduces the complexity of task allocation and mitigates the challenge of modeling a single comprehensive solution using only one algorithm. Furthermore, the pheromone maximization strategy is combined with a random search method to avoid convergence to a local optimum.

- The IDQN algorithm is used to solve the problem of resource arrangement in the timeline so that the task requirements are met. First, a Markov decision process model is formulated for the resource arrangement problem, and the state space, action space, and reward function are designed. The model integrates several constraints within the environment. The model is then trained to maximize long-term benefits, and the trained model guides the resource scheduling plan.

2. Literature Review

3. Problem Description and Model Construction

3.1. Assumptions

- (1) Each task is executed once; repeated execution is not considered. This is described by Equation (1).

- (2) Once a task starts executing, it cannot be interrupted or replaced.

- (3) A single satellite can perform only one task at a time.

- (4) Situations in which a task cannot continue because of equipment failure are not considered.

- (5) The scheduling horizon is limited, and tasks are regularly collected and rescheduled.

3.2. Constraints

- (1) Each task must be executed within its executable time window, satisfying Equation (3). The start time of task execution must not be earlier than the start time of the visible time window, and the end time of task execution must not be later than the end time of the visible time window.

- (2) The interval between two adjacent tasks must not be less than the required switching time, which includes the startup and preheating time of different loads and the angle switching time of the same load. In other words, the start time of the next task must not be earlier than the sum of the end time of the current task and the transition time.

- (3) The total storage and energy requirements of the tasks assigned to a satellite must not exceed its storage and energy capacity.
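The three constraints above can be checked mechanically for the tasks assigned to one satellite. The following is a minimal sketch under stated assumptions, not the authors' implementation: the `Task` fields mirror the quantities in the symbol table (visible window, execution window, energy, storage), while the class name, the function name `is_feasible`, and the use of a single constant transition time `t_trans` are illustrative choices.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    vtw_start: float  # start of the visible time window (VTWs)
    vtw_end: float    # end of the visible time window (VTWe)
    etw_start: float  # scheduled execution start (ETWs)
    etw_end: float    # scheduled execution end (ETWe)
    energy: float     # energy consumed by the task (e)
    storage: float    # storage consumed by the task (m)


def is_feasible(tasks: List[Task], t_trans: float,
                e_total: float, m_total: float) -> bool:
    """Check constraints (1)-(3) for the tasks assigned to one satellite."""
    ordered = sorted(tasks, key=lambda t: t.etw_start)
    # (1) execution must lie inside the visible time window
    for t in ordered:
        if t.etw_start < t.vtw_start or t.etw_end > t.vtw_end:
            return False
    # (2) adjacent tasks must be separated by at least the transition time
    #     (assumed constant here; the text describes load-dependent switching)
    for prev, nxt in zip(ordered, ordered[1:]):
        if nxt.etw_start < prev.etw_end + t_trans:
            return False
    # (3) total energy and storage must stay within the satellite's capacity
    if sum(t.energy for t in ordered) > e_total:
        return False
    if sum(t.storage for t in ordered) > m_total:
        return False
    return True


if __name__ == "__main__":
    a = Task(0, 100, 10, 30, energy=5, storage=2)
    b = Task(20, 120, 40, 60, energy=5, storage=2)
    print(is_feasible([a, b], t_trans=10, e_total=20, m_total=10))
    print(is_feasible([a, b], t_trans=15, e_total=20, m_total=10))
```

In the usage lines, the second call fails only because the 10-unit gap between the two tasks is shorter than the 15-unit transition time.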

3.3. Objectives

4. Hierarchical Resource Scheduling Framework

4.1. Task Allocation by IACO

4.1.1. The General Framework of IACO Algorithm

Algorithm 1: Improved ant colony optimization algorithm

- Optimized Objective Function:
- Input: original task requests and satellite resource situation
- Output: task allocation results
- Initialization: $Matrix_{phe}$, $Matrix_{task}$, $\alpha$, $\beta$, $antNum$, $Iteration_{max}$
- While $iteration < Iteration_{max}$ do
  - If antNum critical number
    - Adopt the maximum pheromone strategy to allocate tasks
  - Else
    - Adopt the random strategy to allocate tasks
  - Calculate the resource load variance as Equation (7)
  - Update the pheromone matrix as Equation (13) and the task selection matrix
- end
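The loop in Algorithm 1 can be illustrated with a small Python sketch. It is not the authors' IACO: Equations (7) and (13) are not reproduced in this section, so the load variance is taken here as the population variance of each satellite's total load, and the pheromone update is a generic evaporate-then-deposit rule. The comparison `ant < critical`, the deposit form `beta / (1 + variance)`, and all function and parameter names are assumptions made for illustration; `alpha` and `beta` reuse the evaporation and reinforcement rates from the parameter table.

```python
import random
from statistics import pvariance
from typing import List


def load_variance(assign: List[int], loads: List[float], n_sats: int) -> float:
    """Population variance of the total load placed on each satellite."""
    totals = [0.0] * n_sats
    for task, sat in enumerate(assign):
        totals[sat] += loads[task]
    return pvariance(totals)


def allocate(loads: List[float], n_sats: int, n_ants: int = 50,
             n_iter: int = 100, critical: int = 25, alpha: float = 0.5,
             beta: float = 2.0, seed: int = 0) -> List[int]:
    """Return one satellite index per task, aiming at a low load variance."""
    rng = random.Random(seed)
    n_tasks = len(loads)
    # pheromone[t][s]: learned desirability of giving task t to satellite s
    pheromone = [[1.0] * n_sats for _ in range(n_tasks)]
    best, best_var = None, float("inf")
    for _ in range(n_iter):
        iter_best, iter_var = None, float("inf")
        for ant in range(n_ants):
            if ant < critical:  # assumed comparison; not stated in the text
                # maximum-pheromone strategy, ties broken at random
                assign = []
                for t in range(n_tasks):
                    top = max(pheromone[t])
                    ties = [s for s in range(n_sats) if pheromone[t][s] == top]
                    assign.append(rng.choice(ties))
            else:
                # random strategy keeps exploring new allocations
                assign = [rng.randrange(n_sats) for _ in range(n_tasks)]
            var = load_variance(assign, loads, n_sats)
            if var < iter_var:
                iter_best, iter_var = assign, var
        # evaporate everywhere, then reinforce the iteration-best allocation
        for t in range(n_tasks):
            for s in range(n_sats):
                pheromone[t][s] *= (1.0 - alpha)
            pheromone[t][iter_best[t]] += beta / (1.0 + iter_var)
        if iter_var < best_var:
            best, best_var = iter_best, iter_var
    return best


if __name__ == "__main__":
    loads = [4.0, 3.0, 3.0, 2.0, 2.0, 1.0]
    plan = allocate(loads, n_sats=3)
    print(plan, load_variance(plan, loads, 3))
```

Mixing the two strategies inside one iteration is what keeps the search from locking onto the first good allocation: the greedy ants exploit the pheromone matrix while the random ants continue to propose alternatives.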

4.1.2. The Design of the Fitness Evaluation Function

4.1.3. The Update of Pheromone and Search Method

4.2. Resource Arrangement in the Timeline by IDQN

4.2.1. The MDP Modeling Process

- (1) State. The state includes information about task requests and satellite status. Task information comprises visible time windows, profits, geographical coordinates, whether the task has been executed, and other related information. The status of each satellite is determined by its resource utilization level in various aspects. In the Markov decision process, the state is expressed by the geographical coordinates, the task profit, whether the task has been executed, and the time window left in which the task can be executed. Other factors are integrated into the scheduling environment. The state can be expressed as $S = \{lat_i, lon_i, profit_i, x_i, window_{left}\}$, where $x_i$ indicates whether task $i$ has been executed.

- (2) Action. The action is either to choose a task that has not been selected before or to move to the next orbiting cycle. The satellite moves periodically along its orbit, and we define one such period as an orbiting cycle. A task may have more than one visible time window, but within one orbiting cycle it has only one. If the satellite perceives that a task is better arranged in the next orbiting cycle, it does not choose that task in the current cycle. After all tasks that are better executed in the current orbiting cycle have been selected, the satellite moves to the next orbiting cycle.

- (3) Reward. The reward is obtained by interacting with the environment. It is the increment of the total profit after performing the chosen action. The total profit can be calculated by arranging the tasks according to the start time of the visible window under some assumptions. To improve the scheduling process, we first sort the time windows of the tasks by their end times. We then take the task with the earliest end time and evaluate the total profit generated by executing it. The reward can be expressed as $R = F(A + a) - F(A)$, where $A$ is the set of previously executed actions, $a$ is the currently chosen action, and $F(A)$ is the total profit from performing action set $A$.
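The incremental reward can be made concrete with a short sketch. It assumes one satellite, one visible window per task within the current orbiting cycle, and a constant transition time; the evaluation of F(A) schedules the selected tasks in order of window end time, each as early as its window allows, and counts only the tasks that fit. The names `Task`, `total_profit`, and `reward` are illustrative, and the "move to the next orbiting cycle" action is not modeled here.

```python
from typing import FrozenSet, List, NamedTuple


class Task(NamedTuple):
    window_start: float  # start of the visible time window
    window_end: float    # end of the visible time window
    duration: float      # execution duration
    profit: float        # profit gained if the task is executed


def total_profit(selected: FrozenSet[int], tasks: List[Task],
                 t_trans: float) -> float:
    """F(A): profit of the selected tasks that fit when scheduled in order
    of window end time, each as early as its window allows."""
    clock = float("-inf")  # earliest time the satellite is free again
    profit = 0.0
    for i in sorted(selected, key=lambda k: tasks[k].window_end):
        start = max(tasks[i].window_start, clock)
        end = start + tasks[i].duration
        if end <= tasks[i].window_end:
            profit += tasks[i].profit
            clock = end + t_trans
    return profit


def reward(selected: FrozenSet[int], action: int, tasks: List[Task],
           t_trans: float) -> float:
    """R = F(A + a) - F(A) for adding task `action` to the set A."""
    return (total_profit(selected | {action}, tasks, t_trans)
            - total_profit(selected, tasks, t_trans))


if __name__ == "__main__":
    tasks = [Task(0, 10, 5, 3.0), Task(4, 12, 5, 4.0), Task(20, 30, 5, 2.0)]
    print(reward(frozenset(), 0, tasks, t_trans=1))     # first task fits
    print(reward(frozenset({0}), 1, tasks, t_trans=1))  # second still fits
    print(reward(frozenset({0}), 1, tasks, t_trans=3))  # no room left
```

Because the reward is a difference of two evaluations of the same function, an action that cannot be fitted into the timeline simply yields zero rather than requiring a separate penalty term.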

4.2.2. The IDQN Training Process

4.3. Complexity Analysis

4.3.1. Space Complexity

4.3.2. Time Complexity

5. Experiments and Discussion

5.1. Simulation Settings

5.2. Experimental Results

5.3. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kim, M.; Song, H.; Kim, Y. Direct Short-Term Forecast of Photovoltaic Power through a Comparative Study between COMS and Himawari-8 Meteorological Satellite Images in a Deep Neural Network. Remote Sens. 2020, 12, 2357.

2. Li, Z.; Xie, Y.; Hou, W.; Liu, Z.; Bai, Z.; Hong, J.; Ma, Y.; Huang, H.; Lei, X.; Sun, X.; et al. In-Orbit Test of the Polarized Scanning Atmospheric Corrector (PSAC) Onboard Chinese Environmental Protection and Disaster Monitoring Satellite Constellation HJ-2 A/B. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4108217.

3. Barra, A.; Reyes-Carmona, C.; Herrera, G.; Galve, J.P.; Solari, L.; Mateos, R.M.; Azañón, J.M.; Béjar-Pizarro, M.; López-Vinielles, J.; Palamà, R.; et al. From satellite interferometry displacements to potential damage maps: A tool for risk reduction and urban planning. Remote Sens. Environ. 2022, 282, 113294.

4. Sun, F.; Zhou, J.; Xu, Z. A holistic approach to SIM platform and its application to early-warning satellite system. Adv. Space Res. 2018, 61, 189–206.

5. Zhang, B.; Wu, Y.; Zhao, B.; Chanussot, J.; Hong, D.; Yao, J.; Gao, L. Progress and Challenges in Intelligent Remote Sensing Satellite Systems. IEEE J.-STARS 2022, 15, 1814–1822.

6. Hao, Y.; Song, Z.; Zheng, Z.; Zhang, Q.; Miao, Z. Joint Communication, Computing, and Caching Resource Allocation in LEO Satellite MEC Networks. IEEE Access 2023, 11, 6708–6716.

7. Peng, D.; Bandi, A.; Li, Y.; Chatzinotas, S.; Ottersten, B. Hybrid Beamforming, User Scheduling, and Resource Allocation for Integrated Terrestrial-Satellite Communication. IEEE Trans. Veh. Technol. 2021, 70, 8868–8882.

8. Li, Y.; Feng, X.; Wang, G.; Yan, D.; Liu, P.; Zhang, C. A Real-Coding Population-Based Incremental Learning Evolutionary Algorithm for Multi-Satellite Scheduling. Electronics 2022, 11, 1147.

9. Xiong, J.; Leus, R.; Yang, Z.; Abbass, H.A. Evolutionary multi-objective resource allocation and scheduling in the Chinese navigation satellite system project. Eur. J. Oper. Res. 2016, 251, 662–675.

10. He, L.; Li, J.; Sheng, M.; Liu, R.; Guo, K.; Zhou, D. Dynamic Scheduling of Hybrid Tasks With Time Windows in Data Relay Satellite Networks. IEEE Trans. Veh. Technol. 2019, 68, 4989–5004.

11. Lemaître, M.; Verfaillie, G.; Jouhaud, F.; Lachiver, J.-M.; Bataille, N. Selecting and scheduling observations of agile satellites. Aerosp. Sci. Technol. 2002, 6, 367–381.

12. He, P.; Hu, J.; Fan, X.; Wu, D.; Wang, R.; Cui, Y. Load-Balanced Collaborative Offloading for LEO Satellite Networks. IEEE Internet Things J. 2023, 1.

13. Deng, X.; Chang, L.; Zeng, S.; Cai, L.; Pan, J. Distance-Based Back-Pressure Routing for Load-Balancing LEO Satellite Networks. IEEE Trans. Veh. Technol. 2023, 72, 1240–1253.

14. Gao, Y.; Yang, H.; Wang, X.; Chen, Y.; Li, C.; Zhang, X. A Fuzzy-Logic-Based Load Balancing Scheme for a Satellite-Terrestrial Integrated Network. Electronics 2022, 11, 2752.

15. Kumar, P.; Kumar, R. Issues and Challenges of Load Balancing Techniques in Cloud Computing: A Survey. ACM Comput. Surv. (CSUR) 2019, 51, 120.

16. Gures, E.; Shayea, I.; Saad, S.A.; Ergen, M.; El-Saleh, A.A.; Ahmed, N.M.O.S.; Alnakhli, M. Load balancing in 5G heterogeneous networks based on automatic weight function. ICT Express 2023, in press.

17. Liu, J.; Zhang, G.; Xing, L.; Qi, W.; Chen, Y. An Exact Algorithm for Multi-Task Large-Scale Inter-Satellite Routing Problem with Time Windows and Capacity Constraints. Mathematics 2022, 10, 3969.

18. Liu, Y.; Jin, S.; Zhou, J.; Hu, Q. A branch-and-bound algorithm for the unit-capacity resource constrained project scheduling problem with transfer times. Comput. Oper. Res. 2023, 151, 106097.

19. Chen, X.; Reinelt, G.; Dai, G.; Spitz, A. A mixed integer linear programming model for multi-satellite scheduling. Eur. J. Oper. Res. 2019, 275, 694–707.

20. Haugen, K.K. A Stochastic Dynamic Programming model for scheduling of offshore petroleum fields with resource uncertainty. Eur. J. Oper. Res. 1996, 88, 88–100.

21. Chu, X.; Chen, Y.; Tan, Y. An anytime branch and bound algorithm for agile earth observation satellite onboard scheduling. Adv. Space Res. 2017, 60, 2077–2090.

22. Song, Y.; Xing, L.; Chen, Y. Two-stage hybrid planning method for multi-satellite joint observation planning problem considering task splitting. Comput. Ind. Eng. 2022, 174, 108795.

23. Niu, X.; Tang, H.; Wu, L. Satellite scheduling of large areal tasks for rapid response to natural disaster using a multi-objective genetic algorithm. Int. J. Disaster Risk Reduct. 2018, 28, 813–825.

24. He, Y.; Chen, Y.; Lu, J.; Chen, C.; Wu, G. Scheduling multiple agile earth observation satellites with an edge computing framework and a constructive heuristic algorithm. J. Syst. Archit. 2019, 95, 55–66.

25. Huang, Y.; Mu, Z.; Wu, S.; Cui, B.; Duan, Y. Revising the Observation Satellite Scheduling Problem Based on Deep Reinforcement Learning. Remote Sens. 2021, 13, 2377.

26. Wei, L.; Xing, L.; Wan, Q.; Song, Y.; Chen, Y. A Multi-objective Memetic Approach for Time-dependent Agile Earth Observation Satellite Scheduling Problem. Comput. Ind. Eng. 2021, 159, 107530.

27. He, Y.; Xing, L.; Chen, Y.; Pedrycz, W.; Wang, L.; Wu, G. A Generic Markov Decision Process Model and Reinforcement Learning Method for Scheduling Agile Earth Observation Satellites. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 1463–1474.

28. Kim, H.; Chang, Y.K. Mission scheduling optimization of SAR satellite constellation for minimizing system response time. Aerosp. Sci. Technol. 2015, 40, 17–32.

29. Zhou, Z.; Chen, E.; Wu, F.; Chang, Z.; Xing, L. Multi-satellite scheduling problem with marginal decreasing imaging duration: An improved adaptive ant colony algorithm. Comput. Ind. Eng. 2023, 176, 108890.

30. Song, Y.; Wei, L.; Yang, Q.; Wu, J.; Xing, L.; Chen, Y. RL-GA: A Reinforcement Learning-based Genetic Algorithm for Electromagnetic Detection Satellite Scheduling Problem. Swarm Evol. Comput. 2023, 77, 101236.

31. Wen, Z.; Li, L.; Song, J.; Zhang, S.; Hu, H. Scheduling single-satellite observation and transmission tasks by using hybrid Actor-Critic reinforcement learning. Adv. Space Res. 2023, 71, 3883–3896.

32. Li, J.; Wu, G.; Liao, T.; Fan, M.; Mao, X.; Pedrycz, W. Task Scheduling under A Novel Framework for Data Relay Satellite Network via Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2022, 72, 6654–6668.

33. Ortiz-Gomez, F.G.; Lei, L.; Lagunas, E.; Martinez, R.; Tarchi, D.; Querol, J.; Salas-Natera, M.A.; Chatzinotas, S. Machine Learning for Radio Resource Management in Multibeam GEO Satellite Systems. Electronics 2022, 11, 992.

34. Wu, D.; Sun, B.; Shang, M. Hyperparameter Learning for Deep Learning-based Recommender Systems. IEEE Trans. Serv. Comput. 2023, 16, 2699–2712.

35. Bai, R.; Chen, Z.-L.; Kendall, G. Analytics and machine learning in scheduling and routing research. Int. J. Prod. Res. 2023, 61, 1–3.

36. Wang, X.; Chen, S.; Liu, J.; Wei, G. High Edge-Quality Light-Field Salient Object Detection Using Convolutional Neural Network. Electronics 2022, 11, 1054.

37. Lee, Y.-L.; Zhou, T.; Yang, K.; Du, Y.; Pan, L. Personalized recommender systems based on social relationships and historical behaviors. Appl. Math. Comput. 2023, 437, 127549.

38. Nguyen, D.H.; Sun, X.; Tretiak, I.; Valverde, M.A.; Kratz, J. Automatic process control of an automated fibre placement machine. Compos. Part A Appl. Sci. Manuf. 2023, 168, 107465.

39. Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Driessche, G.v.d.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

40. Oroojlooyjadid, A.; Nazari, M.; Snyder, L.V.; Takáč, M. A Deep Q-Network for the Beer Game: Deep Reinforcement Learning for Inventory Optimization. Manuf. Serv. Oper. Manag. 2022, 24, 285–304.

41. Albaba, B.M.; Yildiz, Y. Driver Modeling Through Deep Reinforcement Learning and Behavioral Game Theory. IEEE Trans. Control Syst. Technol. 2022, 30, 885–892.

42. Pan, J.; Zhang, P.; Wang, J.; Liu, M.; Yu, J. Learning for Depth Control of a Robotic Penguin: A Data-Driven Model Predictive Control Approach. IEEE Trans. Ind. Electron. 2022, 70, 11422–11432.

43. Cui, K.; Song, J.; Zhang, L.; Tao, Y.; Liu, W.; Shi, D. Event-Triggered Deep Reinforcement Learning for Dynamic Task Scheduling in Multisatellite Resource Allocation. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 3766–3777.

44. Ren, L.; Ning, X.; Wang, Z. A competitive Markov decision process model and a recursive reinforcement-learning algorithm for fairness scheduling of agile satellites. Comput. Ind. Eng. 2022, 169, 108242.

45. Hu, X.; Wang, Y.; Liu, Z.; Du, X.; Wang, W.; Ghannouchi, F.M. Dynamic Power Allocation in High Throughput Satellite Communications: A Two-Stage Advanced Heuristic Learning Approach. IEEE Trans. Veh. Technol. 2023, 72, 3502–3516.

46. Qin, Z.; Yao, H.; Mai, T.; Wu, D.; Zhang, N.; Guo, S. Multi-Agent Reinforcement Learning Aided Computation Offloading in Aerial Computing for the Internet-of-Things. IEEE Trans. Serv. Comput. 2022, 16, 976–1986.

47. Lin, Z.; Ni, Z.; Kuang, L.; Jiang, C.; Huang, Z. Multi-Satellite Beam Hopping Based on Load Balancing and Interference Avoidance for NGSO Satellite Communication Systems. IEEE Trans. Commun. 2023, 71, 282–295.

48. Dorigo, M. Optimization, Learning and Natural Algorithms. Ph.D. Thesis, Politecnico Di Milano, Milano, Italy, 1992.

49. Elloumi, W.; El Abed, H.; Abraham, A.; Alimi, A.M. A comparative study of the improvement of performance using a PSO modified by ACO applied to TSP. Appl. Soft Comput. 2014, 25, 234–241.

50. Jia, Y.H.; Mei, Y.; Zhang, M. A Bilevel Ant Colony Optimization Algorithm for Capacitated Electric Vehicle Routing Problem. IEEE Trans. Cybern. 2022, 52, 10855–10868.

51. Zhang, Z.; Zhang, N.; Feng, Z. Multi-satellite control resource scheduling based on ant colony optimization. Expert Syst. Appl. 2014, 41, 2816–2823.

52. Saif, M.A.N.; Karantha, A.; Niranjan, S.K.; Murshed, B.A.H. Multi Objective Resource Scheduling for Cloud Environment using Ant Colony Optimization Algorithm. J. Algebr. Stat. 2022, 13, 2798–2809.

53. Sutton, R.; Barto, A. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

54. Danino, T.; Ben-Shimol, Y.; Greenberg, S. Container Allocation in Cloud Environment Using Multi-Agent Deep Reinforcement Learning. Electronics 2023, 12, 2614.

55. He, G.; Feng, M.; Zhang, Y.; Liu, G.; Dai, Y.; Jiang, T. Deep Reinforcement Learning Based Task-Oriented Communication in Multi-Agent Systems. IEEE Wirel. Commun. 2023, 30, 112–119.

56. Hao, J.; Yang, T.; Tang, H.; Bai, C.; Liu, J.; Meng, Z.; Liu, P.; Wang, Z. Exploration in Deep Reinforcement Learning: From Single-Agent to Multi-agent Domain. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–21.

57. Gao, J.; Kuang, Z.; Gao, J.; Zhao, L. Joint Offloading Scheduling and Resource Allocation in Vehicular Edge Computing: A Two Layer Solution. IEEE Trans. Veh. Technol. 2023, 72, 3999–4009.

58. Chen, G.; Shao, R.; Shen, F.; Zeng, Q. Slicing Resource Allocation Based on Dueling DQN for eMBB and URLLC Hybrid Services in Heterogeneous Integrated Networks. Sensors 2023, 23, 2518.

59. Nov, Y.; Weiss, G.; Zhang, H. Fluid Models of Parallel Service Systems Under FCFS. Oper. Res. 2022, 70, 1182–1218.

60. Liu, X.; Laporte, G.; Chen, Y.; He, R. An adaptive large neighborhood search metaheuristic for agile satellite scheduling with time-dependent transition time. Comput. Oper. Res. 2017, 86, 41–53.

| Algorithm type | Representative algorithms | Advantages | Disadvantages |
|---|---|---|---|
| Exact algorithms | Branch and bound [18], integer programming [19], dynamic programming [20] | 1. Optimality | 1. Complex modeling process; 2. Large-scale solving leads to dimensionality explosion |
| Heuristic algorithms | HADRT [24] | 1. Short computation time; 2. Optimal or near-optimal solutions | 1. Cannot guarantee optimality |
| Metaheuristic algorithms | Genetic algorithm [43], ant colony algorithm [44] | 1. Global optimization performance; 2. Parallel processing | 1. Large-scale solving leads to dimensionality explosion |
| Artificial intelligence | Reinforcement learning [28,29,30,45] | 1. No need for accurate modeling; 2. Ability to solve large-scale problems | 1. High computational power requirements; 2. Hard to design reward functions |
| Our approach | IACO-IDQN | 1. Reduced difficulty in solving the problem in a hierarchical method; 2. Less time-consuming solution process using models; 3. Easy to migrate algorithms | 1. Long training time required before deploying models |

| Symbol | Definition |
|---|---|
| $task_i$ | The $i$th task to execute |
| $VTWs_i$ | Start time of the $i$th visible time window for task |
| $VTWe_i$ | End time of the $i$th visible time window for task |
| $ETWs_i$ | Start execution time of the $i$th task |
| $ETWe_i$ | End execution time of the $i$th task |
| $T_{trans}$ | The preparation time for task switch |
| $Dur_i$ | Task duration of task $i$ |
| $profit_i$ | Profit of task $i$ |
| $lat_i$ | Latitude of task target $i$ |
| $lon_i$ | Longitude of task target $i$ |
| $E_{total}$ | Total available energy |
| $M_{total}$ | Total data storage available |
| $e_i$ | Energy consumed for task $i$ |
| $m_i$ | Storage consumed for task $i$ |
| $CPU_i$ | CPU consumed for task $i$ |
| $load_i$ | Resource consumed for task $i$ |

| Algorithm | Space Complexity | Time Complexity |
|---|---|---|
| IACO-IDQN | $O(M \times N)$ | $O(N^4)$ |
| HADRT | $O(N)$ | $O(N^2)$ |
| HAW | $O(N)$ | $O(N^2)$ |

| Parameters | SAT1 | SAT2 | SAT3 |
|---|---|---|---|
| Semi-major axis (km) | 7200 | 7200 | 7200 |
| Orbital eccentricity | 0 | 0 | 0 |
| Inclination (°) | 95 | 95 | 95 |
| Argument of perigee (°) | 0 | 0 | 0 |
| RAAN (°) | 180 | 150 | 120 |
| Mean anomaly (°) | 0 | 45 | 90 |
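The orbiting cycle used in the action definition can be related to these orbital elements through Kepler's third law, $T = 2\pi\sqrt{a^3/\mu}$. The sketch below assumes the semi-major axis is given in kilometres (the unit is not stated in the table) and uses the standard value of Earth's gravitational parameter; the function name is illustrative. Under those assumptions, each of the three satellites completes one cycle in roughly 101 minutes.

```python
import math

# Earth's standard gravitational parameter in km^3/s^2
MU_EARTH_KM3_S2 = 398600.4418


def orbital_period_s(semi_major_axis_km: float) -> float:
    """Kepler's third law: T = 2*pi*sqrt(a^3 / mu), in seconds."""
    return 2.0 * math.pi * math.sqrt(semi_major_axis_km ** 3 / MU_EARTH_KM3_S2)


if __name__ == "__main__":
    period = orbital_period_s(7200.0)  # assumes the table value is in km
    print(f"{period:.0f} s, about {period / 60:.1f} min")
```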

| Algorithm | Parameters | Value |
|---|---|---|
| IACO | Pheromone evaporation rate α | 0.5 |
| IACO | Pheromone reinforcement rate β | 2 |
| IACO | Ant number in each iteration | 50 |
| IACO | Number of iterations | 500 |
| IDQN | Exploration rate | 0.05 |
| IDQN | Buffer size | 10,000 |
| IDQN | Batch size | 128 |
| IDQN | Gamma | 0.9 |
| IDQN | Target update | 50 |
| IDQN | Learning rate | 0.001 |
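The IDQN settings in the table map onto the usual ingredients of a deep Q-network training loop. The sketch below shows a plain DQN reading of them, not the paper's specific improvements: the values come from the table, while the class and function names are illustrative, and interpreting "Target update" as the number of steps between target-network synchronizations is an assumption.

```python
import random
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class IDQNConfig:
    exploration_rate: float = 0.05  # epsilon for epsilon-greedy selection
    buffer_size: int = 10_000       # replay buffer capacity
    batch_size: int = 128           # transitions sampled per update
    gamma: float = 0.9              # discount factor
    target_update: int = 50         # assumed: steps between target syncs
    learning_rate: float = 0.001


def select_action(q_values: Sequence[float], cfg: IDQNConfig,
                  rng: random.Random) -> int:
    """Epsilon-greedy: explore with probability epsilon, else take argmax Q."""
    if rng.random() < cfg.exploration_rate:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def td_target(reward: float, next_q_values: Sequence[float],
              done: bool, cfg: IDQNConfig) -> float:
    """Standard DQN target: r + gamma * max over next-state Q-values."""
    if done:
        return reward
    return reward + cfg.gamma * max(next_q_values)


if __name__ == "__main__":
    cfg = IDQNConfig()
    rng = random.Random(0)
    print(select_action([0.1, 0.7, 0.3], cfg, rng))
    print(td_target(1.0, [2.0, 3.0], done=False, cfg=cfg))
```

With an exploration rate of 0.05, about one action in twenty is chosen at random, so the agent mostly follows its current value estimates while still occasionally trying tasks it would otherwise skip.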

| Task Number | IACO-IDQN | FCFS | HADRT’ | HAW |
|---|---|---|---|---|
| 150 | 7.25 | 0.54 | 12.63 | 12.69 |
| 300 | 14.42 | 1.04 | 25.36 | 25.07 |
| 450 | 21.82 | 1.59 | 42.36 | 42.15 |

| Task Number | IACO | IDQN | FCFS | HADRT’ | HAW |
|---|---|---|---|---|---|
| 150 | 11.22 | 1009.00 | 6187.56 | 10,310.22 | 16,154.89 |
| 300 | 22.69 | 462.30 | 15,340.67 | 30,147.56 | 27,106.89 |
| 450 | 24.33 | 562.33 | 3417.56 | 8112.67 | 5660.67 |

| Task Number | IACO-IDQN | FCFS | HADRT’ | HAW |
|---|---|---|---|---|
| 150 | 658 | 554 | 768 | 768 |
| 300 | 1584 | 848 | 1611 | 1611 |
| 450 | 2329 | 1243 | 2431 | 2431 |

| Metrics | Task Number | IACO-IDQN | FCFS | HADRT’ | HAW |
|---|---|---|---|---|---|
| CPU time | 150 | 7.25 | 0.54 | 12.63 | 12.69 |
| CPU time | 300 | 14.42 | 1.04 | 25.36 | 25.07 |
| CPU time | 450 | 21.82 | 1.59 | 42.36 | 42.15 |
| Variance of resources | 150 | 1009.00 | 6187.56 | 10,310.22 | 16,154.89 |
| Variance of resources | 300 | 462.30 | 15,340.67 | 30,147.56 | 27,106.89 |
| Variance of resources | 450 | 562.33 | 3417.56 | 8112.67 | 5660.67 |
| Total rewards | 150 | 658 | 554 | 768 | 768 |
| Total rewards | 300 | 1584 | 848 | 1611 | 1611 |
| Total rewards | 450 | 2329 | 1243 | 2431 | 2431 |
| Task profit rate (%) | 150 | 85.68 | 72.14 | 100 | 100 |
| Task profit rate (%) | 300 | 98.32 | 56.98 | 100 | 100 |
| Task profit rate (%) | 450 | 95.80 | 51.13 | 100 | 100 |
| Task completion rate (%) | 150 | 91.33 | 73.33 | 100 | 100 |
| Task completion rate (%) | 300 | 97.33 | 54.33 | 100 | 100 |
| Task completion rate (%) | 450 | 95.56 | 51.56 | 100 | 100 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Guo, X.; Meng, Z.; Qin, J.; Li, X.; Ma, X.; Ren, S.; Yang, J. A Hierarchical Resource Scheduling Method for Satellite Control System Based on Deep Reinforcement Learning. Electronics 2023, 12, 3991. https://doi.org/10.3390/electronics12193991
