55 nm CMOS Mixed-Signal Neuromorphic Circuits for Constructing Energy-Efficient Reconfigurable SNNs

Abstract

1. Introduction

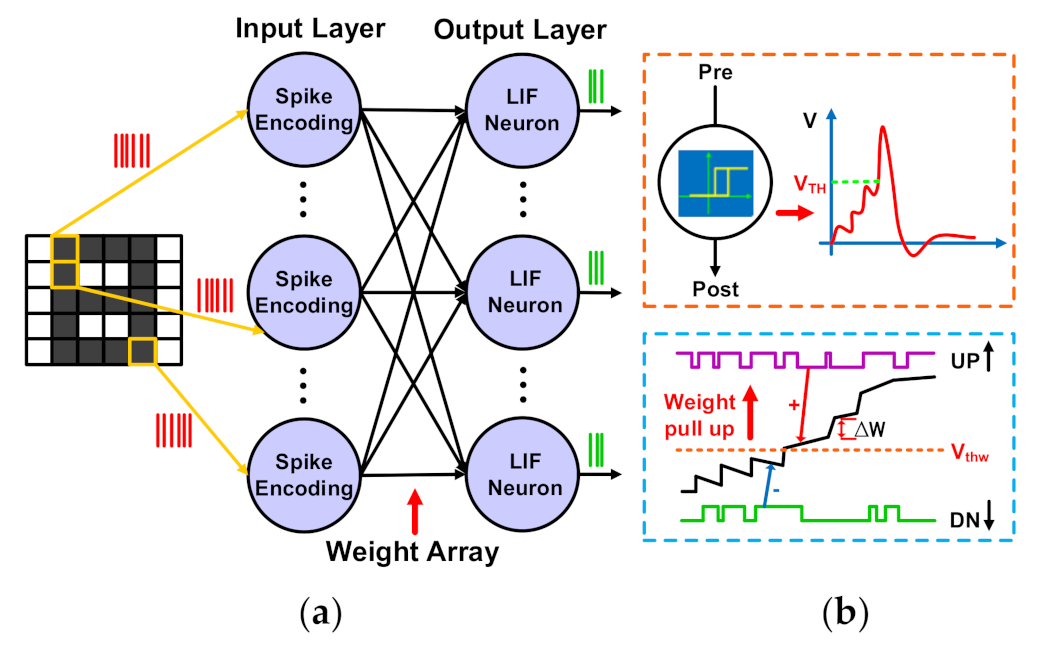

2. Proposed Neuromorphic Circuits and SNN Implementation

2.1. Analog LIF Neuron

2.2. Configurable Synapse and SDSP Learning Algorithm

2.3. Reconfigurable SNN Implementation

3. Chip Measurement Results

3.1. LIF Neuron Behavior Testing

3.2. SDSP Learning Algorithm Testing

3.3. Pavlov Associative Learning Experiment and Binary Classification Task Testing

3.4. Energy per Spike

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Grollier, J.; Querlioz, D.; Camsari, K.Y.; Everschor-Sitte, K.; Fukami, S.; Stiles, M.D. Neuromorphic spintronics. Nat. Electron. 2020, 3, 360–370.
2. Indiveri, G.; Liu, S.-C. Memory and Information Processing in Neuromorphic Systems. Proc. IEEE 2015, 103, 1379–1397.
3. Monroe, D. Neuromorphic computing gets ready for the (really) big time. Commun. ACM 2014, 57, 13–15.
4. Milde, M.B.; Blum, H.; Dietmüller, A.; Sumislawska, D.; Conradt, J.; Indiveri, G.; Sandamirskaya, Y. Obstacle Avoidance and Target Acquisition for Robot Navigation Using a Mixed Signal Analog/Digital Neuromorphic Processing System. Front. Neurorobotics 2017, 11, 28.
5. Liu, B.-C.; Yu, Q.; Gao, J.-W.; Zhao, S.; Liu, X.-C.; Lu, Y.-F. Spiking Neuron Networks based Energy-Efficient Object Detection for Mobile Robot. In Proceedings of the 2021 China Automation Congress, Beijing, China, 22–24 October 2021.
6. Furber, S.B.; Galluppi, F.; Temple, S.; Plana, L.A. The SpiNNaker Project. Proc. IEEE 2014, 102, 652–665.
7. Davies, M.; Srinivasa, N.; Lin, T.-H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99.
8. Kuang, Y.; Cui, X.; Zhong, Y.; Liu, K.; Zou, C.; Dai, Z.; Wang, Y.; Yu, D.; Huang, R. A 64K-Neuron 64M-1b-Synapse 2.64pJ/SOP Neuromorphic Chip with All Memory on Chip for Spike-Based Models in 65 nm CMOS. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 2655–2659.
9. Frenkel, C.; Lefebvre, M.; Legat, J.-D.; Bol, D. A 0.086-mm² 12.7-pJ/SOP 64k-Synapse 256-Neuron Online-Learning Digital Spiking Neuromorphic Processor in 28 nm CMOS. IEEE Trans. Biomed. Circuits Syst. 2018, 20, 425.
10. Pu, J.; Goh, W.L.; Nambiar, V.P.; Wong, M.M.; Do, A.T. A 5.28-mm² 4.5-pJ/SOP Energy-Efficient Spiking Neural Network Hardware with Reconfigurable High Processing Speed Neuron Core and Congestion-Aware Router. IEEE Trans. Circuits Syst. I Regul. Pap. 2021, 68, 5081–5094.
11. Thakur, C.S.; Molin, J.L.; Cauwenberghs, G.; Indiveri, G.; Kumar, K.; Qiao, N.; Schemmel, J.; Wang, R.; Chicca, E.; Hasler, J.O.; et al. Large-Scale Neuromorphic Spiking Array Processors: A Quest to Mimic the Brain. Front. Neurosci. 2018, 12, 891.
12. Merolla, P.A.; Arthur, J.V.; Alvarez-Icaza, R.; Cassidy, A.S.; Sawada, J.; Akopyan, F.; Jackson, B.L.; Imam, N.; Guo, C.; Nakamura, Y.; et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 2014, 345, 668–673.
13. Wu, X.; Saxena, V.; Zhu, K. Homogeneous Spiking Neuromorphic System for Real-World Pattern Recognition. IEEE J. Emerg. Sel. Top. Circuits Syst. 2015, 5, 254–266.
14. Blubaugh, D.J.; Atamian, M.; Babcock, G.T.; Golbeck, J.H.; Cheniae, G.M. Photoinhibition of hydroxylamine-extracted photosystem II membranes: Identification of the sites of photodamage. Biochemistry 1991, 30, 7586–7597.
15. Diehl, P.U.; Neil, D.; Binas, J.; Cook, M.; Liu, S.-C.; Pfeiffer, M. Fast-classifying, high-accuracy spiking deep networks through weight and threshold balancing. In Proceedings of the International Joint Conference on Neural Networks, Killarney, Ireland, 11–17 July 2015; pp. 1–8.
16. Brader, J.M.; Senn, W.; Fusi, S. Learning Real-World Stimuli in a Neural Network with Spike-Driven Synaptic Dynamics. Neural Comput. 2007, 19, 2881–2912.
17. Joubert, A.; Belhadj, B.; Temam, O.; Heliot, R. Hardware spiking neurons design: Analog or digital? In Proceedings of the 2012 International Joint Conference on Neural Networks, Brisbane, QLD, Australia, 10–15 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–5.
18. Aamir, S.A.; Muller, P.; Kiene, G.; Kriener, L.; Stradmann, Y.; Grubl, A.; Schemmel, J.; Meier, K. A Mixed-Signal Structured AdEx Neuron for Accelerated Neuromorphic Cores. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 1027–1037.
19. Qiao, N.; Mostafa, H.; Corradi, F.; Osswald, M.; Stefanini, F.; Sumislawska, D.; Indiveri, G. A reconfigurable on-line learning spiking neuromorphic processor comprising 256 neurons and 128K synapses. Front. Neurosci. 2015, 9, 141.
20. Aamir, S.A.; Stradmann, Y.; Muller, P.; Pehle, C.; Hartel, A.; Grubl, A.; Schemmel, J.; Meier, K. An Accelerated LIF Neuronal Network Array for a Large-Scale Mixed-Signal Neuromorphic Architecture. IEEE Trans. Circuits Syst. I Regul. Pap. 2018, 65, 4299–4312.
21. Qiao, N.; Indiveri, G. Scaling mixed-signal neuromorphic processors to 28 nm FD-SOI technologies. In Proceedings of the 2016 IEEE Biomedical Circuits and Systems Conference (BioCAS), Shanghai, China, 17–19 October 2016; pp. 552–555.
22. Folowosele, F.; Etienne-Cummings, R.; Hamilton, T.J. A CMOS switched capacitor implementation of the Mihalas-Niebur neuron. In Proceedings of the 2009 IEEE Biomedical Circuits and Systems Conference, Beijing, China, 26–28 November 2009; pp. 105–108.
23. Demirkol, A.S.; Ozoguz, S. A low power VLSI implementation of the Izhikevich neuron model. In Proceedings of the 2011 IEEE 9th International New Circuits and Systems Conference, Bordeaux, France, 26–29 June 2011; pp. 169–172.
24. Livi, P.; Indiveri, G. A current-mode conductance-based silicon neuron for address-event neuromorphic systems. In Proceedings of the 2009 IEEE International Symposium on Circuits and Systems, Taipei, Taiwan, 24–27 May 2009; pp. 2898–2901.
25. Cruz-Albrecht, J.M.; Yung, M.W.; Srinivasa, N. Energy-Efficient Neuron, Synapse and STDP Integrated Circuits. IEEE Trans. Biomed. Circuits Syst. 2012, 6, 246–256.
26. Indiveri, G.; Stefanini, F.; Chicca, E. Spike-based learning with a generalized integrate and fire silicon neuron. In Proceedings of the 2010 IEEE International Symposium on Circuits and Systems, Paris, France, 30 May–2 June 2010; pp. 1951–1954.
27. Indiveri, G.; Linares-Barranco, B.; Hamilton, T.J.; van Schaik, A.; Etienne-Cummings, R.; Delbruck, T.; Liu, S.-C.; Dudek, P.; Häfliger, P.; Renaud, S.; et al. Neuromorphic Silicon Neuron Circuits. Front. Neurosci. 2011, 5, 73.
28. Granizo, J.; Garvi, R.; Garcia, D.; Hernandez, L. A CMOS LIF neuron based on a charge-powered oscillator with time-domain threshold logic. In Proceedings of the 2023 IEEE International Symposium on Circuits and Systems (ISCAS), Monterey, CA, USA, 21–25 May 2023; pp. 1–5.
29. Song, J.; Shim, J.; Kim, H.; Choi, W.-S. Energy-Efficient High-Accuracy Spiking Neural Network Inference Using Time-Domain Neurons. In Proceedings of the 2022 IEEE 4th International Conference on Artificial Intelligence Circuits and Systems (AICAS), Incheon, Republic of Korea, 13–15 June 2022; pp. 5–8.
30. Chicca, E.; Stefanini, F.; Bartolozzi, C.; Indiveri, G. Neuromorphic Electronic Circuits for Building Autonomous Cognitive Systems. Proc. IEEE 2014, 102, 1367–1388.
31. Srivastava, S.; Sahu, S.; Rathod, S. Computation and Analysis of Excitatory Synapse and Integrate & Fire Neuron: 180nm MOSFET and CNFET Technology. IOSR J. VLSI Signal Process. 2022, 8, 60–72.
32. Shaik, N.; Malik, P.K.; Ravipati, S.; Oduru, S.; Munnangi, A.; Boda, S.; Singh, R. Static Excitatory Synapse with an Integrate Fire Neuron Circuit. In Proceedings of the 2023 International Conference on Artificial Intelligence and Smart Communication (AISC), Greater Noida, India, 27–29 January 2023; pp. 383–389.
33. Brette, R.; Gerstner, W. Adaptive Exponential Integrate-and-Fire Model as an Effective Description of Neuronal Activity. J. Neurophysiol. 2005, 94, 3637–3642.
34. Bartolozzi, C.; Indiveri, G. Synaptic Dynamics in Analog VLSI. Neural Comput. 2007, 19, 2581–2603.
35. Wang, J.; Yu, T.; Akinin, A.; Cauwenberghs, G.; Broccard, F.D. Neuromorphic synapses with reconfigurable voltage-gated dynamics for biohybrid neural circuits. In Proceedings of the 2017 IEEE Biomedical Circuits and Systems Conference (BioCAS), Turin, Italy, 19–21 October 2017; pp. 1–4.
36. Noack, M.; Krause, M.; Mayr, C.; Partzsch, J.; Schuffny, R. VLSI implementation of a conductance-based multi-synapse using switched-capacitor circuits. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Melbourne, Australia, 1–5 June 2014.
37. Ramakrishnan, S.; Hasler, P.E.; Gordon, C. Floating Gate Synapses with Spike-Time-Dependent Plasticity. IEEE Trans. Biomed. Circuits Syst. 2011, 5, 244–252.
38. Sumislawska, D.; Qiao, N.; Pfeiffer, M.; Indiveri, G. Wide dynamic range weights and biologically realistic synaptic dynamics for spike-based learning circuits. In Proceedings of the 2016 IEEE International Symposium on Circuits and Systems (ISCAS), Montreal, QC, Canada, 22–25 May 2016; pp. 2491–2494.
39. Gautam, A.; Kohno, T. A Conductance-Based Silicon Synapse Circuit. Biomimetics 2022, 7, 246.
40. Abbott, L.F.; Nelson, S.B. Synaptic plasticity: Taming the beast. Nat. Neurosci. 2000, 3, 1178–1183.
41. Beyeler, M.; Dutt, N.D.; Krichmar, J.L. Categorization and decision-making in a neurobiologically plausible spiking network using a STDP-like learning rule. Neural Networks 2013, 48, 109–124.
42. Gütig, R.; Sompolinsky, H. The tempotron: A neuron that learns spike timing–based decisions. Nat. Neurosci. 2006, 9, 420–428.
43. Lisman, J.; Spruston, N. Questions about STDP as a General Model of Synaptic Plasticity. Front. Synaptic Neurosci. 2010, 2, 140.
44. Billings, G.; van Rossum, M. Memory retention and spike-timing-dependent plasticity. J. Neurophysiol. 2009, 101, 2775–2788.
45. Fusi, S.; Annunziato, M.; Badoni, D.; Salamon, A.; Amit, D.J. Spike-Driven Synaptic Plasticity: Theory, Simulation, VLSI Implementation. Neural Comput. 2000, 12, 2227–2258.
46. Chicca, E.; Fusi, S. Stochastic synaptic plasticity in deterministic aVLSI networks of spiking neurons. In Proceedings of the World Congress on Neuroinformatics 2001, Vienna, Austria, 24–29 September 2001; pp. 468–477.
47. Bichler, O.; Zhao, W.; Alibart, F.; Pleutin, S.; Lenfant, S.; Vuillaume, D.; Gamrat, C. Pavlov’s Dog Associative Learning Demonstrated on Synaptic-Like Organic Transistors. Neural Comput. 2013, 25, 549–566.
48. Indiveri, G.; Corradi, F.; Qiao, N. Neuromorphic architectures for spiking deep neural networks. In Proceedings of the Electron Devices Meeting (IEDM), 2015 IEEE International, Washington, DC, USA, 7–9 December 2015; pp. 4.2.1–4.2.4.
49. Moradi, S.; Qiao, N.; Stefanini, F.; Indiveri, G. A Scalable Multicore Architecture With Heterogeneous Memory Structures for Dynamic Neuromorphic Asynchronous Processors (DYNAPs). IEEE Trans. Biomed. Circuits Syst. 2017, 12, 106–122.
50. Benjamin, B.V.; Gao, P.; McQuinn, E.; Choudhary, S.; Chandrasekaran, A.R.; Bussat, J.-M.; Alvarez-Icaza, R.; Arthur, J.V.; Merolla, P.A.; Boahen, K. Neurogrid: A Mixed-Analog-Digital Multichip System for Large-Scale Neural Simulations. Proc. IEEE 2014, 102, 699–716.
51. Mayr, C.; Partzsch, J.; Noack, M.; Hanzsche, S.; Scholze, S.; Hoppner, S.; Ellguth, G.; Schuffny, R. A Biological-Realtime Neuromorphic System in 28 nm CMOS Using Low-Leakage Switched Capacitor Circuits. IEEE Trans. Biomed. Circuits Syst. 2016, 10, 243–254.
52. Yang, Z.; Han, Z.; Huang, Y.; Ye, T.T. 55 nm CMOS Analog Circuit Implementation of LIF and STDP Functions for Low-Power SNNs. In Proceedings of the 2021 IEEE/ACM International Symposium on Low Power Electronics and Design (ISLPED), Boston, MA, USA, 26–28 July 2021; pp. 1–6.

| Work | [48] | [52] | [51] | This Work |
|---|---|---|---|---|
| Technology | 180 nm CMOS | 55 nm CMOS | 28 nm CMOS | 55 nm CMOS |
| Supply voltage | 1.8 V | 1 V | 0.7–1.0 V | 1.2 V |
| Area of neuron | 1188 μm² | 482 μm² | 64.6 μm² | 264 μm² |
| Area of synapse | 128.4 μm² | - | 13 μm² | 54 μm² |
| Frequency | 30 Hz–1 kHz | - | 10 Hz–350 Hz | 30 Hz–1 kHz |
| Energy per spike | 883 pJ @ 30 Hz | 1.099 nJ @ 10 Hz | 2.3 nJ @ 10 Hz; 30 nJ @ 350 Hz | 18.4 pJ @ 30 Hz; 483 pJ @ 1 kHz |
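
The energy-per-spike row of the comparison table can be explored numerically. The following is a minimal sketch in Python, not part of the original paper: the dictionary simply restates the table values, and the helper names `energy_ratio` and `implied_average_power` are hypothetical. The power estimate assumes that average power equals energy per spike multiplied by firing rate, which the table itself does not state. Only [48] and this work are reported at the same 30 Hz rate, so ratios against other columns compare different operating points.

```python
# Energy-per-spike values restated from the comparison table, in joules.
# Keys are the table's column labels; inner keys are firing rates in Hz.
ENERGY_PER_SPIKE = {
    "[48]": {30: 883e-12},
    "[52]": {10: 1.099e-9},
    "[51]": {10: 2.3e-9, 350: 30e-9},
    "This Work": {30: 18.4e-12, 1000: 483e-12},
}


def energy_ratio(other: str, other_rate_hz: int, this_rate_hz: int) -> float:
    """Return how many times more energy per spike `other` uses than this work.

    The two rates may differ; the caller decides whether the comparison is fair.
    """
    other_energy = ENERGY_PER_SPIKE[other][other_rate_hz]
    this_energy = ENERGY_PER_SPIKE["This Work"][this_rate_hz]
    return other_energy / this_energy


def implied_average_power(work: str, rate_hz: int) -> float:
    """Return energy per spike times firing rate, in watts.

    Assumption (not from the paper): the reported energy per spike accounts
    for all energy drawn between consecutive spikes at that rate.
    """
    return ENERGY_PER_SPIKE[work][rate_hz] * rate_hz


if __name__ == "__main__":
    # Same-rate comparison: both figures are reported at 30 Hz.
    print(f"[48] / this work at 30 Hz: {energy_ratio('[48]', 30, 30):.1f}x")
    # Implied power of this work at both ends of its frequency range.
    for rate in (30, 1000):
        power = implied_average_power("This Work", rate)
        print(f"This work at {rate} Hz: {power * 1e9:.3f} nW")
```

Keeping the rate as an explicit key makes it visible when a ratio mixes operating points, for example [52] at 10 Hz against this work at 30 Hz.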

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Quan, J.; Liu, Z.; Li, B.; Zeng, C.; Luo, J. 55 nm CMOS Mixed-Signal Neuromorphic Circuits for Constructing Energy-Efficient Reconfigurable SNNs. Electronics 2023, 12, 4147. https://doi.org/10.3390/electronics12194147