In each of the sub-sections below, the methodologies presented are compared and contrasted against a common set of criteria. The relevant advantages and disadvantages of the methodologies discussed are outlined for each criterion (Crit.). The criteria used to assess the methodologies are as follows:

3.1. Object Detection Enhancements

One of the fundamental flaws in a typical mmWave tracking system is the reliance on static noise filtering. In the context of radar imaging, as opposed to tracking, there have been advancements towards adaptive background filtering, with recent work in the mmWave domain presented in [16]. The authors of [16] present a novel approach to adaptive background noise suppression that remains computationally cost-effective. The approach ultimately relies on the ability to observe the operating background environment without any targets in the field of view. This allows a background image to be constructed, which in turn is used to derive a background power map. The work presented in [16] demonstrates an adaptive background filtering approach that can be used when imaging a single target with mmWave. Although not practically tested, the principles that the authors of [16] rely on for adaptive background subtraction are also present in the context of multi-object tracking with mmWave. This therefore serves as an interesting approach towards reducing the reliance on static noise filtering in the mmWave tracking domain.
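As a rough illustration of the background power map idea, the sketch below averages the power of each cell over target-free frames and then suppresses cells that do not exceed that background by a margin. The function names, the averaging rule and the 6 dB margin are assumptions made for illustration; they are not taken from [16].

```python
def background_power_map(empty_frames):
    """Average the power of each cell over frames captured with no targets present."""
    n = len(empty_frames)
    rows, cols = len(empty_frames[0]), len(empty_frames[0][0])
    return [[sum(f[r][c] for f in empty_frames) / n for c in range(cols)]
            for r in range(rows)]


def suppress_background(frame, bg_map, margin_db=6.0):
    """Zero every cell whose power is not at least margin_db above the background."""
    factor = 10 ** (margin_db / 10)  # dB margin expressed as a linear power ratio
    return [[p if p > factor * b else 0.0 for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, bg_map)]


# Two target-free frames (linear power) and one frame with a target at cell (0, 1).
empty = [[[1.0, 2.0], [3.0, 1.0]],
         [[1.0, 4.0], [1.0, 1.0]]]
bg = background_power_map(empty)
frame = [[1.2, 40.0], [2.5, 1.1]]
cleaned = suppress_background(frame, bg)  # only the target cell survives
```

In a tracking context the background map would need to be refreshed as the environment changes, which is the adaptive aspect this sketch leaves out.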

The reliance on static noise filtering ultimately spawns challenges related to the reliable tracking of a stationary object. As a result, a large focus on methodologies and strategies to alleviate these challenges can be seen in the literature. The two overarching themes that encompass the research direction for addressing these challenges are sensor fusion and micro-Doppler feature analysis.

Sensor fusion, in the context of this paper, refers to the combination of data from one or more additional sensors with that of a mmWave sensor. A common approach in the literature is to fuse camera data with the data obtained from the mmWave sensor to achieve a more coherent and comprehensive object detection algorithm, whilst alleviating challenges associated with illumination in the vision domain. One of the primary challenges with fusing camera and mmWave radar detections is that they are a heterogeneous pair of sensors [17]. The plane in which the radar detections are aligned differs from that of the camera detections, which can make associating the detections between the two sensors quite difficult [17]. The research presented in [17] demonstrates a novel approach to solving the association challenge. In the methodology presented in [17], the authors define the concept of error bounds to assist with data association and gating within a fusion-extended Kalman filter. The error bounds provide a criterion to define the behavior of the individual sensors before and after the sensor fusion [17].
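The plane mismatch between the two sensors is commonly bridged with a planar homography that maps image pixels onto the ground plane, which is also the transformation [17] relies on. The sketch below only applies a given 3x3 homography to a pixel; the matrix values and the function name are hypothetical and do not represent the calibration or estimation procedure used in [17].

```python
def apply_homography(H, u, v):
    """Map an image pixel (u, v) to world-plane coordinates (x, y) using a 3x3 homography H."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if w == 0:
        raise ValueError("pixel maps to a point at infinity")
    return x / w, y / w  # divide by the homogeneous coordinate


# Hypothetical homography (illustrative values only).
H = [[0.01, 0.0, -3.2],
     [0.0, 0.02, -1.0],
     [0.0, 0.001, 1.0]]

# Bottom-centre of a camera bounding box, taken here as the ground contact point.
x, y = apply_homography(H, 320, 400)
```

Once camera detections are expressed in world coordinates, they can be compared against radar cluster centroids in the same plane.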

In the fusion-extended Kalman filter presented in [17], the radar point cloud clusters are formed with DBSCAN, using an approach similar to the typical architecture discussed in Section 2 of this paper. Similarly, the bounding boxes on the image plane are initially formed in isolation from the radar and then sent to the fusion-extended Kalman filter to be associated and tracked with the radar clusters. The camera data points are transformed from the image plane to a world plane using a homography estimation method [17]. A warped bird’s eye view of the camera data points can then be estimated using the world coordinates, and compared and associated with the radar point cloud data points [17]. In the fusion-extended Kalman filter presented by [17], the error bounds are updated using data points from both sensors (as opposed to independently) and the warped bird’s eye view of the image plane is calculated for each sample point. The authors of [17] demonstrate that although this yields a higher association accuracy, it introduces a time synchronization challenge between the sensors. This challenge is resolved by ensuring timeline alignment between the sensors, with a synchronization strategy that compares certain regions of the fusion-extended Kalman filter output with the error bounds [17]. The experimental results presented in [17] appear to demonstrate higher reliability in real-time target detection and track persistence compared to radar alone.

Another approach to mmWave sensor fusion seen in the literature is track-to-track association. The authors of [18] demonstrate an implementation of track-to-track association between a mmWave radar and a thermal camera. In [18], it is assumed that the independent sensors are co-located, whereby the two sensors share the same position and orientation. Under this operating condition, the targets in the field of view are tracked independently by the mmWave sensor and the thermal camera. The independent tracks are then associated by solving a combinatorial cost minimization problem. In the research presented by [18], the components involved in this problem are identified as:

Exploiting micro-Doppler in mmWave radar systems is actively being pursued as another angle from which to devise methodologies that resolve the challenge of static object detection and localization. Specifically in the context of human detection, bio-metric information such as heartbeat and breathing is being explored as a source of features that are measurable through micro-Doppler. A study performed by [19] demonstrates an algorithm designed to localize multiple static humans using their individual breathing patterns. The authors of [19] highlight that the time of flight of a signal is minimally impacted by the small movements of a breathing chest cavity. As a result, the sub-millimeter movements are lost when performing static background removal between two consecutive frames, which are 12.5 ms apart in the experiment performed by [19]. To counter this loss of information, the authors of [19] suggest subtracting the static background using a frame that is a few seconds apart (2.5 s in [19]). In doing this, the sub-millimeter movements are exaggerated in comparison to a truly stationary object and are therefore left intact when performing a removal of static data points.
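The effect of the subtraction window can be reproduced with a toy signal model. The sketch below simulates a static clutter term plus a chest reflection whose phase follows a breathing motion, then compares the residual left after subtracting the previous frame (12.5 ms earlier) with the residual left after subtracting a frame 2.5 s earlier. The wavelength, displacement amplitude, breathing rate and clutter level are assumed values chosen for illustration, and the signal model is a simplification rather than the processing chain of [19].

```python
import cmath
import math

FRAME_PERIOD_S = 0.0125      # 12.5 ms between consecutive frames, as in [19]
WAVELENGTH_MM = 4.0          # assumed carrier wavelength
BREATH_AMPLITUDE_MM = 0.5    # assumed sub-millimeter chest displacement
BREATH_RATE_HZ = 0.25        # assumed breathing rate (15 breaths per minute)


def chest_return(t):
    """Complex return: static clutter plus a chest whose range varies with breathing."""
    d = BREATH_AMPLITUDE_MM * math.sin(2 * math.pi * BREATH_RATE_HZ * t)
    phase = 4 * math.pi * d / WAVELENGTH_MM   # two-way path, hence 4*pi*d/lambda
    return 5.0 + cmath.exp(1j * phase)        # 5.0 models the static clutter


def mean_residual(lag_frames, n_frames=1600):
    """Mean magnitude left after subtracting the frame lag_frames earlier."""
    frames = [chest_return(k * FRAME_PERIOD_S) for k in range(n_frames)]
    diffs = [abs(frames[k] - frames[k - lag_frames])
             for k in range(lag_frames, n_frames)]
    return sum(diffs) / len(diffs)


short_window = mean_residual(1)     # consecutive frames, 12.5 ms apart
long_window = mean_residual(200)    # frames 2.5 s apart
```

In both cases the static clutter cancels exactly; only the long window leaves a residual large enough to distinguish the breathing target from a truly stationary reflector.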

The authors of [19] note that removing static background points using a frame that is a few seconds apart does not work for a non-stationary object, such as a person walking. Because movements appear exaggerated when compared to a frame a few seconds apart, [19] notes that walking appears ‘smeared’. Based on this differing outcome for static and dynamic objects, the algorithm presented in [19] employs two independent background removal strategies: one for static objects using a long window and one for dynamic objects using a short window. The experimental results presented in [19] demonstrate a high accuracy of 95%. It should be noted that the experiments performed by [19] do not appear to quantify the success of localizing both moving and static individuals simultaneously within the scene. The radar architecture used in [19] is slightly different to the mmWave tracking system that has been discussed in this paper. However, the research illustrates the potential to use vital signs as a means of detecting a static object. It would be of interest to assess the range potential of implementing a static localization algorithm of this nature using a mmWave tracking system architecture.

The literature explored in this paper indicates that vision sensor fusion and bio-metric micro-Doppler feature analysis are viable approaches to enhancing traditional object detection techniques so that objects can be tracked as they transition between dynamic and static movement states. Table 1 outlines the advantages and disadvantages of the two methodologies with respect to the comparison criteria. Although both methodologies individually prove viable, it would be interesting to consider a combination of the two so that they complement each other. Specifically, incorporating a micro-Doppler feature analysis component into the vision system could in turn remove the need to utilize the universal background subtraction algorithm [20] for identifying moving objects in the image. This could potentially be considered a three-component sensor fusion approach, where camera data points, static radar data points and dynamic radar data points are fused.

3.2. Sensing Methodologies

Sensing is not typically considered a usual aspect of an object tracking system. However, it is a stream of research that has been investigated independently and, when integrated with a tracking system, has the potential to enhance the tracking system’s sensitivity and reliability. Such an enhancement would stem from the additional features that the sensing solution extracts, granting more data points that can be incorporated into the tracking estimation and prediction. The advanced sensing methodologies explored in this paper can be classified as either general activity recognition or specialized estimation methodologies.

General activity recognition can be considered a class of sensing methodologies with the underlying objective of classifying a broad set of movements or activities that a given object in the field of view might exhibit. One stream of research that dominates this class is human activity recognition (HAR). Traditionally, radar-based HAR systems have relied on machine learning techniques such as random forest classifiers [21], dynamic time warping [22] and support vector machines (SVM) [23]. In comparison to deep learning-based approaches, these techniques are usually computationally less taxing due to their lower complexity. However, relying solely on conventional machine learning techniques for HAR presents several limitations. A survey conducted by the authors of [24] provides a thorough critical analysis of the evolution of radar-based HAR. In [24], a conventional machine learning approach is considered to make optimization and generalization of the HAR solution difficult, and three fundamental limitations are highlighted. The first concerns the way in which feature extraction takes place: a manual procedure based on heuristics and domain knowledge, which is ultimately subject to the practitioner’s experience [24]. The second relates to the fact that manually selected features tend to be accompanied by specific statistical algorithms that are dependent on the training dataset. As a result, when the trained model is applied to a new dataset, performance is typically worse than on the dataset used for training. Lastly, the authors of [24] highlight that the conventional machine learning approaches used in radar-based HAR systems primarily learn from discrete static data, whereas the data the model is subject to during real-time testing are principally continuous and dynamic in nature. The survey conducted by [24] explores the potential for deep learning to assist in alleviating these limitations in machine learning radar-based HAR systems.

Although there are some limitations with using conventional machine learning approaches, it should also be acknowledged that there has been successful applications of radar-based HAR using these techniques. The research presented in [

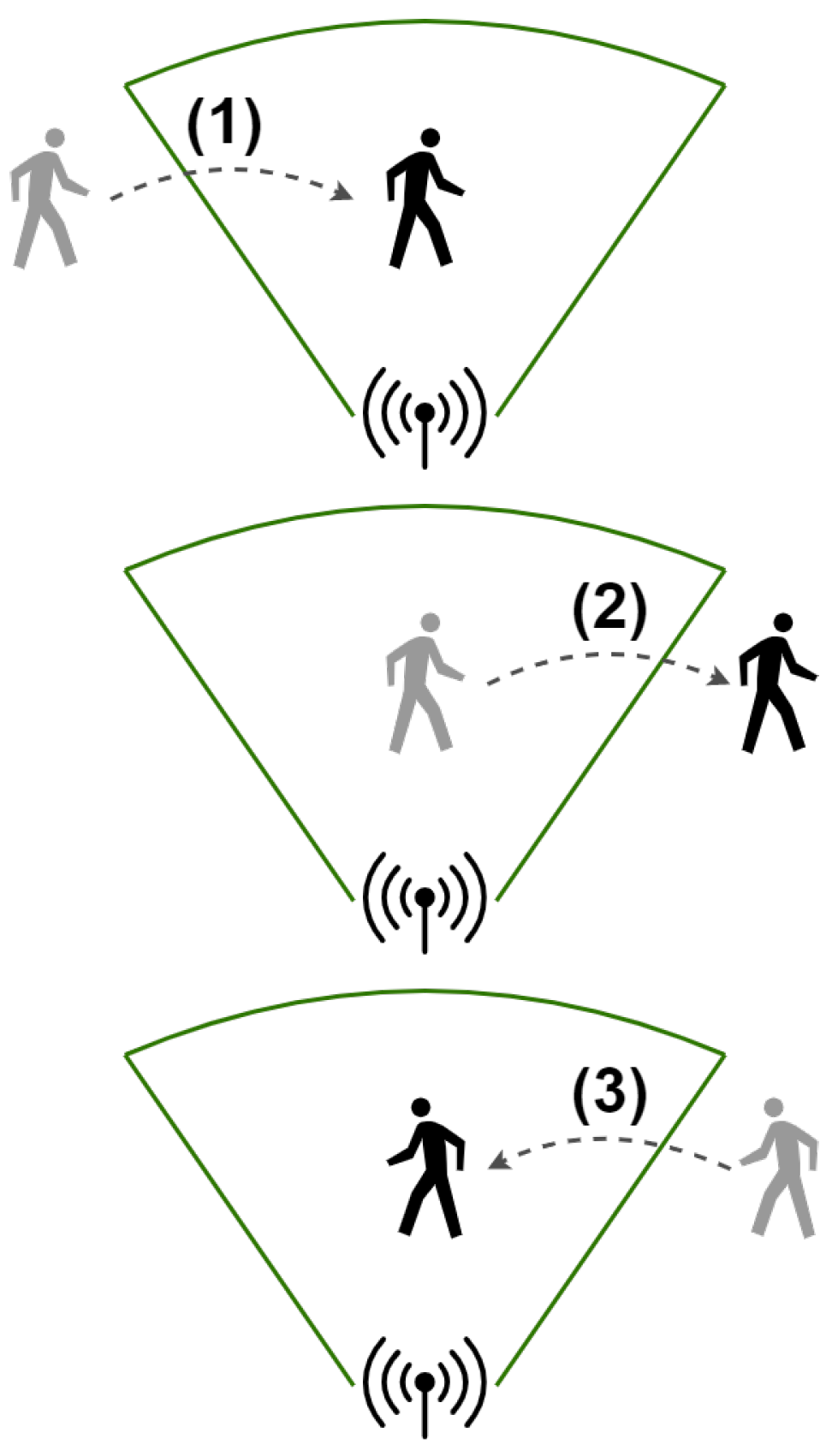

25] identifies recent work that attempts to classify three different walking/movement patterns:

The authors of [25] attempt to classify these walking patterns and compare the performance of k-Nearest Neighbor (k-NN) and SVM classifiers. The four system designs explored in [25] are illustrated in Figure 7. In [25], both the range-Doppler and Doppler-time data are incorporated into feature extraction, and the impact of each walking pattern on the range-Doppler and Doppler-time maps is illustrated in the form of a heat-map. This illustration shows that a change in walking speed (the difference between slow and fast walking) results in a dramatic change in the range-Doppler and Doppler-time maps, whereas maintaining a consistent walking speed with hands in the pockets produces a less notable difference.

To extract the features, the authors of [25] explore and compare two potential approaches: Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE), both of which are unsupervised transform algorithms. Each feature extraction method is applied with each of the two aforementioned classification methods, and the resulting permutations are shown in Figure 7. The results obtained in [25] for each of the system designs in Figure 7 demonstrate the capability of classifying fast and slow walking with high accuracy. Using the feature extraction methods and classification algorithms explored in [25], the authors note a 72% accuracy in classifying slow walking with hands in the pockets.

Another piece of leading research in radar-based HAR is RadHAR, presented in [26]. In [26], the authors explore a range of classification approaches, including both conventional machine learning algorithms and deep learning-based algorithms. The primary objective of the RadHAR system is to classify five human movement activities: walking, jumping, jumping jacks, squats and boxing.

Unlike the research presented in [25], the data used for classification in [26] originate from a point cloud. The point cloud data are first voxelized to ensure a uniform frame size, regardless of the number of points, before being fed to the classification algorithm. Using the voxelized point cloud data, an SVM, a multi-layer perceptron (MLP), a Long Short-Term Memory (LSTM) network and a convolutional neural network (CNN) combined with an LSTM were trained and compared against each other.
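Voxelization of this kind can be sketched as counting points per cell of a fixed grid, so that frames with different numbers of points produce inputs of identical shape. The bounds, grid resolution and function name below are illustrative assumptions rather than the settings used in [26].

```python
def voxelize(points, bounds, grid_shape):
    """Count points per voxel so that every frame yields a grid of the same shape.

    points: iterable of (x, y, z) tuples
    bounds: ((xmin, xmax), (ymin, ymax), (zmin, zmax))
    grid_shape: (nx, ny, nz); points outside the bounds are ignored
    """
    nx, ny, nz = grid_shape
    grid = [[[0] * nz for _ in range(ny)] for _ in range(nx)]
    for point in points:
        index = []
        for value, (lo, hi), n in zip(point, bounds, grid_shape):
            if not lo <= value < hi:
                break  # point lies outside the grid on this axis
            # Clamp to guard against floating-point rounding at the upper edge.
            index.append(min(int((value - lo) / (hi - lo) * n), n - 1))
        else:
            i, j, k = index
            grid[i][j][k] += 1
    return grid


bounds = ((-1.0, 1.0), (0.0, 4.0), (0.0, 2.0))
shape = (2, 2, 2)
frame_a = [(0.5, 1.0, 0.5), (0.6, 1.2, 0.4), (-0.5, 3.0, 1.5)]  # three points
frame_b = [(0.1, 0.1, 0.1)]                                      # one point
grid_a = voxelize(frame_a, bounds, shape)
grid_b = voxelize(frame_b, bounds, shape)
```

Both frames produce a 2x2x2 grid even though they contain different numbers of points, which is the property a fixed-input classifier needs.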

The results of the research conducted in [26] demonstrate that the classification algorithm with the highest accuracy, 90.47%, is a combined time-distributed CNN and bi-directional LSTM. The authors of [26] hypothesize that the high accuracy of this approach can be attributed to the time-distributed CNN learning the spatial features of the point cloud data, whilst the bi-directional LSTM learns the time-dependent component of the activities being performed.

Another more recent piece of research, presented in [27], demonstrates a mmWave sensing framework that is capable of recognizing gestures, fundamentally using micro-Doppler and AoA (both elevation and azimuth) data to form a set of feature maps. Features are then extracted using an empirical feature extraction method and used to train an MLP to classify gestures [27]. An important aspect to consider is that the approach presented in [27] is for a field of view in which only a single human performing gestures is present (i.e., not multi-object). This same limitation can also be seen in a similar piece of research presented in [28]. The authors of [28] demonstrate a mmWave system capable of performing 3D finger joint tracking using the vibrations and distortions evident on the forearm as a consequence of finger movements. However, as previously mentioned, this specialized estimation approach is also subject to the challenge of operating in a multi-person environment. Despite this limitation, the authors of [27] have designed their approach so that the underlying assumptions about the number of people in the field of view are abstracted from the core gesture recognition methodology. Instead, the field of view constraint has been isolated to being a data formation challenge. The authors of [27] acknowledge that range data have not been taken into account in their presented approach, but would be beneficial in extending their design to handle multiple people simultaneously performing their own sequences of gestures. Putting the specific classification task aside, the abstracted methodology presented in [27] could serve as a framework for incorporating generalized activity recognition into a mmWave multi-object tracking system, ultimately enriching the tracking profile maintained for an individual. As the authors of [27] did not have multi-object support within scope, extending the methodology to operate on each range bin to satisfy multi-object support raises concerns around whether real-time processing is still feasible.

Specialized estimation, as opposed to general activity recognition, is a class of sensing that has a primary focus on a single objective that can be measured. Measurement of this nature should of course be considered an estimation. This class of sensing overlaps with features that can be used as criteria for identifying a specific object. More details on features with the potential to be used as an identification strategy are addressed in Section 3.3 of this paper. The primary driver behind research in radar-based specialized estimation methodologies originates from a human health perspective. The ability to determine human vital signs passively is an area in which mmWave radar is being explored as a viable solution. A study performed in [29] demonstrates a solution named ‘mBeats’, which aims to implement a moving mmWave radar system that is capable of measuring the heartbeat of an individual. The proposed ‘mBeats’ system implements a three-module architecture. The first module is a user tracking module, for which the authors of [29] state that the system utilizes a standard point cloud-based tracking system, as described in Section 2 of this paper. The purpose of this module is to find the target in the room. It should be noted that [29] assumes there will only be one target in the field of view. The second module proposed in [29] is termed the ‘mmWave Servoing’ module. The purpose of this module is to optimize the angle at which the target is situated relative to the mmWave radar to give the best heartbeat measurement. To achieve this, the authors of [29] specify the ultimate goal of this module as obtaining peak signal reflections for the target’s lower limbs, since the mmWave radar is situated on a robot at ground level. Using the Peak To Average value as a determinant of the reflected signal strength, the authors define an observation variable which is used by a feedback Proportional-Derivative controller to orient the radar in the direction that yields the highest signal strength.

The last module is the heart rate estimation module, responsible for determining the target’s heart rate from a set of different poses. The poses consist of various sitting and lying down positions. The authors of [29] acknowledge that heartbeats lie in the frequency band of 0.8∼4 Hz, and therefore implement a biquad cascade infinite impulse response (IIR) filter to eliminate unwanted frequencies and extract the heartbeat waveform. A CNN is selected in [29] as the predictor because heartbeat detection is treated as a regression problem. The authors state that a key challenge with using a CNN for this problem is estimating the uncertainty of the result. Uncertainty in this problem is ultimately caused by measurement inaccuracies, sensor biases and noise, environment changes, multipath and inadequate reflections [29]. To overcome this, the authors of [29] cast the problem into a Bayesian model, defining the likelihood between the prediction and ground truth as a probability following a Gaussian distribution. This results in the loss function given in Equation (11), where the CNN predicts a mean and a variance. Using this approach, the authors of [29] compare the outcome of their model with three other common signal processing approaches (FFT, Peak Count (PK) and Auto-correlation (XCORR)), with accuracy as the comparison metric.
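When a network predicts both a mean and a variance under a Gaussian likelihood, the usual training objective is the Gaussian negative log-likelihood. The sketch below shows that standard form as an illustration; the exact loss in [29] may differ in constants or parameterization (for example, predicting a log-variance), and the function name and sample values are assumptions.

```python
import math


def gaussian_nll(y_true, mu, var):
    """Mean negative log-likelihood of the targets under per-sample Gaussians N(mu, var)."""
    total = 0.0
    for y, m, v in zip(y_true, mu, var):
        if v <= 0:
            raise ValueError("variance must be positive")
        total += 0.5 * math.log(2 * math.pi * v) + (y - m) ** 2 / (2 * v)
    return total / len(y_true)


heart_rates = [72.0, 65.0]                                       # illustrative ground truth (bpm)
exact = gaussian_nll(heart_rates, [72.0, 65.0], [1.0, 1.0])      # correct and confident
overconfident = gaussian_nll(heart_rates, [77.0, 70.0], [1.0, 1.0])   # wrong but confident
hedged = gaussian_nll(heart_rates, [77.0, 70.0], [25.0, 25.0])   # wrong but uncertain
```

The same 5 bpm error is penalized far less when the predicted variance is large, which is how the variance output comes to represent the uncertainty of the estimate.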

In the results presented in [29], it can be seen that the other approaches fail to maintain an accuracy above 90% in all poses, whereas the CNN presented in [29] does maintain a high accuracy for the selected poses. The authors acknowledge that in the current system the target must remain static whilst the heartbeat measurement is performed, and that future work will focus on measuring a moving target. It would also be interesting to assess the viability and challenges of this approach in a multi-person scene.

The underlying theme of the sensing methodologies explored in this paper is that independently they are successful in the goal they aim to achieve. However, there is a lack of acknowledgment in the literature regarding the suitability of these methodologies in a combined holistic tracking and sensing architecture. It would not only be interesting to assess their suitability in such a system, but also how they may contribute to enhancing the sophistication and reliability of such a tracking system. Table 2 outlines the advantages and disadvantages of the explored sensing methodologies with respect to the comparison criteria. It can be seen in this table that both methodologies explored fail to address the challenges of operating in a multi-object environment. In order to achieve a tracking system that completes a target profile with sensing characteristics, the challenge of sensing multiple objects and associating the acquired information with a detected target must be solved.

3.3. Identification Strategies

The development of identification methodologies is a natural direction in the evolution of mmWave tracking systems. Identification can be considered a more specific type of specialized estimation sensing, with the key focus on being able to reliably and uniquely correlate the sensed information to a tracked object. There are a number of challenges that need to be considered and overcome in identification approaches, such as the feasible range, separation of multiple objects/people and generalization of the approach. This section aims to explore the leading identification methodologies of radar-based tracking systems.

Gait identification approaches rely on the differing gait characteristics between individuals. Gait-based identification strategies are the most common passive approach to identifying people in a radar or WiFi-based tracking system. They fundamentally leverage the fact that each person typically has a unique pattern in the way they walk; this pattern is most often identified through a deep learning-based technique. Gait recognition can pose its own challenges, such as inconsistent and unpredictable upper limb movements that influence the lower limb signal reflections. This interference can reduce the reliability of obtaining a consistent lower limb gait pattern for a given individual. A recent study performed in [30] attempts to overcome the challenges associated with upper limb movement interference by narrowing the vertical field of view and focusing attention on the finer-grained movements of the lower limbs. The research presented in [30] proposes a system that comprises three phases:

Signal processing and feature extraction

Multi-user identification

CNN-based gait model training

In the first phase, the authors of [30] construct a range-Doppler map following the traditional methodology described in Section 2 of this paper. The stationary interference in the range-Doppler map is then removed following a technique similar to the approach described in Section 2.3 of this paper, whereby the stationary reflections are subtracted from each frame of the range-Doppler frequency responses. The authors of [30] observe that a cumulative deviation of the range-Doppler data occurs due to dynamic background noise, which is not eliminated when subtracting the static interference. To overcome this, a threshold-based high-pass filter is implemented with a threshold of 10 dBFS. This filter is described in Equation (12), which is applied to the range-Doppler domain frequency response of each frame at range i and velocity j.
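A threshold-based filter of this kind can be sketched as keeping each range-Doppler cell whose response reaches the threshold and zeroing the rest. Zeroing the rejected cells, the inclusive comparison and the function name are assumptions made for illustration; the 10 dB default mirrors the threshold reported for [30].

```python
def threshold_high_pass(frame, threshold=10.0):
    """Keep range-Doppler cells at or above the threshold and zero the rest.

    frame[i][j] is the response at range bin i and velocity (Doppler) bin j.
    """
    return [[cell if cell >= threshold else 0.0 for cell in row] for row in frame]


# Illustrative frame: 2 range bins x 3 Doppler bins.
frame = [[3.0, 12.5, 9.9],
         [10.0, 0.5, 25.0]]
filtered = threshold_high_pass(frame)
```

Applying the filter frame by frame removes the weak, fluctuating background responses that static subtraction alone leaves behind.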

The authors of [30] identify that the dominant velocity can be used to describe the target’s lower limb velocity in each frame. In [30], this is expressed as Equation (13) in terms of the normalized frequency response, the velocity corresponding to each frequency response, and the number of range-FFT and Doppler-FFT points.
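One plausible reading of a dominant velocity is a response-weighted mean velocity per range bin, computed from the normalized range-Doppler response. The sketch below implements that reading only as an illustration; the normalization by the frame peak, the per-range-bin weighting and the function name are assumptions and may not match the exact expression used in [30].

```python
def dominant_velocity(frame, velocities):
    """Response-weighted mean velocity for each range bin of one range-Doppler frame.

    frame[i][j] is the non-negative response at range bin i and Doppler bin j;
    velocities[j] is the velocity associated with Doppler bin j.
    """
    peak = max(max(row) for row in frame)
    result = []
    for row in frame:
        # Normalize by the frame peak (assumed normalization).
        weights = [cell / peak for cell in row] if peak > 0 else [0.0] * len(row)
        total = sum(weights)
        if total == 0:
            result.append(0.0)  # no energy in this range bin
        else:
            result.append(sum(w * v for w, v in zip(weights, velocities)) / total)
    return result


velocities = [-1.0, 0.0, 1.0, 2.0]          # m/s per Doppler bin (illustrative)
frame = [[0.0, 0.0, 0.0, 0.0],
         [0.0, 1.0, 3.0, 0.0],
         [2.0, 0.0, 0.0, 2.0]]
per_range = dominant_velocity(frame, velocities)
```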

The authors of [30] illustrate the composition of these gait characteristics as a heat-map corresponding to the actual gait captured with a camera. Using these extracted gait features, the authors of [30] identify that multiple targets can be differentiated firstly by range and secondly (if the range is the same) by leveraging distinct spatial positions. This is done by projecting each point in a given frame to a point in the two-dimensional spatial Cartesian coordinate system. To differentiate the data points in the spatial Cartesian coordinate system, Ref. [30] implements a K-means clustering algorithm. Each individual gait feature can then be generated as a range-Doppler map by negating the frequency responses that were not correlated in the K-means clustering [30]. After differentiating the gait features, the authors of [30] identify a challenge regarding the segmentation of the actual step. In [30], this is overcome by using an unsupervised learning technique to detect the silhouette of the steps.
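The clustering step that separates targets by spatial position can be illustrated with a plain implementation of Lloyd's K-means algorithm on two-dimensional points. The farthest-point initialization, the iteration count and the sample coordinates are choices made for this sketch and are not details reported in [30].

```python
import math


def k_means(points, k, iterations=20):
    """Plain Lloyd's algorithm on 2D points; returns (centroids, labels)."""
    # Farthest-point initialization keeps the example deterministic.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid for every point.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = (sum(x for x, _ in members) / len(members),
                                sum(y for _, y in members) / len(members))
    return centroids, labels


# Two people at a similar range but distinct positions (illustrative coordinates in metres).
points = [(0.0, 1.0), (0.2, 1.1), (0.1, 0.9),
          (2.0, 3.0), (2.1, 3.2), (1.9, 2.9)]
centroids, labels = k_means(points, k=2)
```

Responses whose projected points fall outside a given cluster can then be discarded to obtain a per-person range-Doppler map.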

Finally, a CNN-based classifier from the image recognition domain is used to identify the patterns associated with the gait feature maps. The classifier is assessed with multiple users and varying numbers of steps to determine the overall accuracy of the system. Overall, the system demonstrates a high accuracy that decreases marginally as the number of users increases, a decrease that is recovered as the number of steps increases.

Another overarching class of identification strategies being explored is tagging-based approaches. Unlike the others mentioned in this paper, this is not a passive approach; it involves incorporating a tag on the object so that it can be uniquely identified. The literature focuses on two directions for identification of this nature. The first is radio frequency identification (RFID). In a chipless RFID system, data must be encoded in the signal by altering the time domain, the frequency domain, the spatial domain or a combination of two or more of these domains. An example of RFID implemented as an identification strategy in mmWave can be seen in the ‘FerroTag’ research presented in [31], which is a paper-based RFID system. Although FerroTag is intended for inventory management, it could potentially be adapted as a tagging strategy for a tracking-based system. FerroTag is based on ferrofluidic ink, a colloidal liquid that contains magnetic nanoparticles. The ferrofluidic ink can be printed onto surfaces, which in turn embeds frequency characteristics in the response of a signal. The shape, arrangement and size of the printed ferrofluidic ink influence the frequency tones that are applied to the response signal. In order to identify and differentiate the signal characteristics caused by the chipless RFID surface, the solution presented in [31] utilizes a random forest classifier to identify the corresponding tags present in the field of view. The second approach to tagging as a means of identification is through re-configurable reflective surfaces (RIS). To the best of our knowledge, no system has been presented in the literature that demonstrates a practical RIS solution for identification purposes in a mmWave tracking system. Research regarding RIS with respect to mmWave is predominantly in the communication domain. The challenges and opportunities in designing an RIS-based identification system for a mmWave tracking system are yet to be detailed.

Shape profiling has been implemented in previous mmWave research to identify an object by the properties of the object’s shape. For example, if the object being tracked is a human, the height and curvature of the human body can influence the way in which the mmWave signal is reflected [32]. The authors of [32] demonstrate how a human being tracked and represented in point cloud form can be identified based on the shape profile of their body. Using a fixed-size tracking window, the points related to the particular human are voxelized to form an occupancy grid [32]. This is then sequenced through a Long Short-Term Memory network to classify the particular human [32]. This identification method is abstracted from the tracking aspect of the process, making it suitable for identifying objects in an environment where multiple object tracking is taking place.

The research presented in [33] differs from that presented in [32] in that the tracking data are not used during the identification stage. Instead, the authors of [33] propose a strategy where, once the human has been tracked, the radar steers its transmit and receive beams towards the tracked human. By doing so, the granularity of the feature set available from the human body is increased. In other words, more specific profiling can be performed on the individual. The research presented in [33] demonstrates the ability to characterize the human body by its outline, surface boundary and vital signs. This granular feature set and tailored profiling provide stronger grounds to positively identify an individual. However, this method comes at the cost of directing the beam solely for identification purposes. Additionally, the research presented in [33] does not make any remarks regarding the suitability of this method for real-time applications.

The various identification strategies explored in this section each have their own complexities when incorporated into a tracking system. Table 3 aims to assist in comparing the various identification methodologies, in order to understand their suitability and limitations when implementing them in a tracking system.