Task Offloading and Resource Allocation for Tasks with Varied Requirements in Mobile Edge Computing Networks

Abstract

1. Introduction

- To prevent tasks with very large data sizes from monopolizing system resources, we introduce an emergency factor that helps tasks with small data sizes compete for system resources. The joint optimization of offloading decisions and edge resource allocation for tasks whose data sizes differ significantly is formulated as a mixed-integer nonlinear programming (MINLP) problem.

- We decompose the MINLP problem into two subproblems and propose a linear-search-based coordinate descent method and a bisection-search-based resource allocation algorithm to address the offloading decision and resource allocation subproblems, respectively.

- Simulation results demonstrate that the proposed scheme effectively regulates offloading decisions and resource allocation when the data sizes of the offloaded tasks differ significantly. When the tasks are of regular size, our scheme achieves the same minimum delay as the compared baseline scheme.

2. Related Work

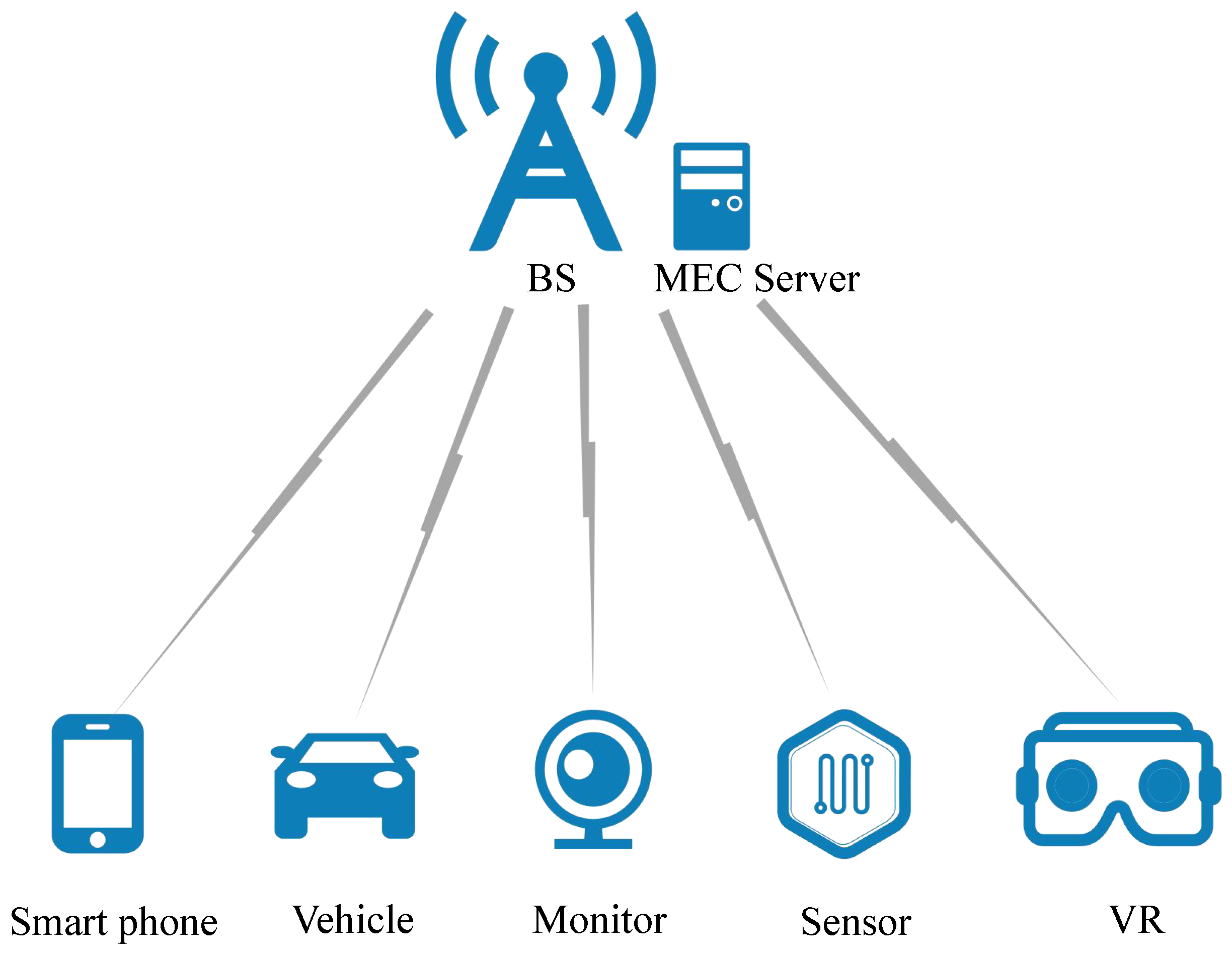

3. System Model

3.1. Local Computing

3.2. Edge Computing

3.3. Problem Formulation

4. Decoupled Computation Offloading and Resource Allocation with Coordinate Descent (CD)

| Algorithm 1: Linear CD-Aided Optimal Resource Allocation |
|---|
| Input: in ascending order |
| Output: offloading decision and corresponding resource allocation scheme |
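
The body of Algorithm 1 did not survive extraction. As a rough illustration of the coordinate descent idea it builds on (flipping one task's binary offloading decision at a time and keeping the flip only if the objective improves, as in [16]), here is a minimal Python sketch. The delay model, the constants `F_LOCAL` and `F_EDGE`, and the helpers `total_delay` and `coordinate_descent` are hypothetical; the paper's emergency factor, reward, and linear-search initialization are not reproduced. The starting vector `x0` is a parameter, so passing a random `x0` mimics how the NCD baseline in Section 5 starts.

```python
import math
from typing import List, Sequence

# Hypothetical toy model (not the paper's): task i has input size d[i] (bits),
# workload c[i] (CPU cycles) and uplink rate r[i] (bit/s). The device computes at
# F_LOCAL cycles/s; the edge server shares F_EDGE cycles/s among offloaded tasks.
F_LOCAL = 1e9
F_EDGE = 10e9


def total_delay(x: Sequence[int], d: Sequence[float], c: Sequence[float],
                r: Sequence[float]) -> float:
    """Sum of task delays for binary offloading vector x (1 = offload)."""
    off = [i for i, xi in enumerate(x) if xi == 1]
    local = sum(c[i] / F_LOCAL for i, xi in enumerate(x) if xi == 0)
    upload = sum(d[i] / r[i] for i in off)
    # Splitting F_EDGE with f_i proportional to sqrt(c_i) minimises sum c_i / f_i,
    # and that minimum equals (sum sqrt(c_i))^2 / F_EDGE.
    edge = sum(math.sqrt(c[i]) for i in off) ** 2 / F_EDGE
    return local + upload + edge


def coordinate_descent(x0: Sequence[int], d, c, r, max_rounds: int = 100) -> List[int]:
    """Flip one task's decision at a time, keeping a flip only if the delay drops."""
    x = list(x0)
    best = total_delay(x, d, c, r)
    for _ in range(max_rounds):
        improved = False
        for i in range(len(x)):
            x[i] ^= 1  # try the opposite decision for task i
            val = total_delay(x, d, c, r)
            if val < best:
                best, improved = val, True
            else:
                x[i] ^= 1  # no gain: revert the flip
        if not improved:  # no single flip helps: local optimum reached
            break
    return x


# Hypothetical example instance with four tasks.
d = [1e6, 4e6, 2e7, 5e7]
c = [1e8, 5e8, 2e9, 6e9]
r = [2e7, 2e7, 1e7, 5e6]
x = coordinate_descent([0] * 4, d, c, r)
print(x, total_delay(x, d, c, r))
```

Each accepted flip strictly lowers the total delay, so the loop stops at a vector that no single flip can improve. That is only a local optimum, which is why the choice of starting point (linear search in Algorithm 1 versus random initialization in NCD) can matter.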

| Algorithm 2: Bisection-Search-Based Resource Allocation |
|---|
| Input: |
| Output: the optimal |
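
The steps of Algorithm 2 are likewise missing. The sketch below shows one standard way a bisection search enters edge resource allocation together with the Lagrange multiplier method, which the comparison table lists for this work. It assumes a hypothetical objective, the weighted edge computing delay `sum(w[i] * c[i] / f[i])` under a total capacity `F` and per-task caps `f_max`; the objective, the weights `w`, and the function name `allocate_edge_cpu` are illustrative assumptions, not the paper's formulation. The KKT conditions give each task's share as a function of the multiplier, and bisection finds the multiplier at which the capacity is exactly used.

```python
import math
from typing import List, Sequence


def allocate_edge_cpu(w: Sequence[float], c: Sequence[float],
                      f_max: Sequence[float], F: float,
                      iters: int = 200) -> List[float]:
    """Minimise sum_i w[i]*c[i]/f[i] s.t. sum_i f[i] <= F, 0 < f[i] <= f_max[i].

    Hypothetical subproblem. Assumes every w[i]*c[i] > 0. KKT conditions give
    f[i](lam) = min(f_max[i], sqrt(w[i]*c[i]/lam)); the multiplier lam is found
    by bisection so that the capacity F is used up.
    """
    def alloc(lam: float) -> List[float]:
        return [min(fm, math.sqrt(wi * ci / lam)) for wi, ci, fm in zip(w, c, f_max)]

    if sum(f_max) <= F:  # capacity constraint is inactive
        return list(f_max)
    n = len(w)
    lo = 0.0  # as lam -> 0 every task hits its cap, and sum(f_max) > F
    # At lam = hi every sqrt term is <= F / n, so the total is <= F.
    hi = max(wi * ci for wi, ci in zip(w, c)) * n * n / (F * F)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if sum(alloc(mid)) > F:
            lo = mid  # too much demand: raise the "price" lam
        else:
            hi = mid
    return alloc(hi)  # the hi side is always within capacity


# Hypothetical example: the first task is capped at 0.5 GHz, the rest share the remainder.
f = allocate_edge_cpu([1.0, 1.0, 1.0], [1e9, 4e9, 9e9], [5e8, 1e10, 1e10], 6e9)
print([round(fi / 1e9, 3) for fi in f])
```

The total allocation decreases monotonically in the multiplier, so bisection always converges, and returning the `hi` side keeps the result feasible.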

4.1. Local Processing Part

4.2. Edge Processing Part

5. Simulation and Results

- All offload (AO): all tasks are processed at the edge server.

- All local (AL): all tasks are processed locally.

- Random offload (RO): the offloading decision is randomly generated and the resource allocation decisions are obtained with Algorithm 2.

- Brute-force search method (BF): exhaustively searches all possible offloading decisions and selects the one with the highest reward as the final solution.

- Naive coordinate descent (NCD): enters the “while loop” of Algorithm 1 [16] directly, starting from a randomly initialized offloading decision.

- Deep-reinforcement-learning-based scheme (DRL): uses the channel conditions and task data sizes to make offloading decisions, and uses its critic module to obtain the resource allocation scheme with the minimum delay; it is a slightly modified version of the scheme in [29].
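
Once a reward for a binary offloading vector is available, the AL, AO, RO and BF baselines above each reduce to a few lines. In this sketch the reward (negative total delay, with the edge CPU split optimally in proportion to the square root of each task's cycles) and all numbers are hypothetical stand-ins; the paper's reward, and Algorithm 2 for the resource allocation of RO, are not reproduced. BF evaluates all 2^N vectors for N tasks, which is why it serves only as a small-scale benchmark.

```python
import itertools
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical toy instance (not from the paper): per-task local delay (s),
# upload delay (s) and edge CPU cycles; the edge server has F_EDGE cycles/s.
LOCAL_DELAY = [0.1, 0.5, 2.0, 4.0]
UPLOAD_DELAY = [0.05, 0.2, 1.5, 0.8]
EDGE_CYCLES = [1e8, 5e8, 2e9, 6e9]
F_EDGE = 5e9


def reward(x: Sequence[int]) -> float:
    """Toy reward: negative total delay of binary offloading vector x."""
    off = [i for i, xi in enumerate(x) if xi == 1]
    local = sum(LOCAL_DELAY[i] for i, xi in enumerate(x) if xi == 0)
    upload = sum(UPLOAD_DELAY[i] for i in off)
    # The optimal split of F_EDGE for sum c_i / f_i gives (sum sqrt(c_i))^2 / F_EDGE.
    edge = sum(EDGE_CYCLES[i] ** 0.5 for i in off) ** 2 / F_EDGE
    return -(local + upload + edge)


def all_local(n: int) -> List[int]:
    return [0] * n  # AL: every task runs on its own device


def all_offload(n: int) -> List[int]:
    return [1] * n  # AO: every task is processed at the edge server


def random_offload(n: int, rng: random.Random) -> List[int]:
    return [rng.randint(0, 1) for _ in range(n)]  # RO: random decision


def brute_force(n: int, score: Callable[[Sequence[int]], float]) -> Tuple[List[int], float]:
    """BF: score all 2**n offloading vectors and keep the highest-reward one."""
    best = max(itertools.product((0, 1), repeat=n), key=score)
    return list(best), score(best)


if __name__ == "__main__":
    n = len(LOCAL_DELAY)
    x_bf, r_bf = brute_force(n, reward)
    rng = random.Random(0)
    for name, x in [("AL", all_local(n)), ("AO", all_offload(n)),
                    ("RO", random_offload(n, rng)), ("BF", x_bf)]:
        print(name, x, round(reward(x), 3))
```

Because BF scores every vector, its reward upper-bounds the other baselines on the same instance, which is what makes it useful as a reference for the CD-based scheme.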

5.1. Simulation Setting

5.2. Result Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

References

- [1] Mach, P.; Becvar, Z. Mobile edge computing: A survey on architecture and computation offloading. IEEE Commun. Surv. Tutor. 2017, 19, 1628–1656.

- [2] Wang, X.; Han, Y.; Wang, C.; Zhao, Q.; Chen, X.; Chen, M. In-edge AI: Intelligentizing mobile edge computing, caching and communication by federated learning. IEEE Netw. 2019, 33, 156–165.

- [3] Chen, Y.; Zhang, N.; Zhang, Y.; Chen, X. Dynamic computation offloading in edge computing for internet of things. IEEE Internet Things J. 2018, 6, 4242–4251.

- [4] Wu, Y.; Ni, K.; Zhang, C.; Qian, L.P.; Tsang, D.H. NOMA-assisted multi-access mobile edge computing: A joint optimization of computation offloading and time allocation. IEEE Trans. Veh. Technol. 2018, 67, 12244–12258.

- [5] Raza, S.; Wang, S.; Ahmed, M.; Anwar, M.R.; Mirza, M.A.; Khan, W.U. Task offloading and resource allocation for IoV using 5G NR-V2X communication. IEEE Internet Things J. 2021, 9, 10397–10410.

- [6] Yousefpour, A.; Ishigaki, G.; Gour, R.; Jue, J.P. On reducing IoT service delay via fog offloading. IEEE Internet Things J. 2018, 5, 998–1010.

- [7] Yang, B.; Cao, X.; Xiong, K.; Yuen, C.; Guan, Y.L.; Leng, S.; Qian, L.; Han, Z. Edge intelligence for autonomous driving in 6G wireless system: Design challenges and solutions. IEEE Wirel. Commun. 2021, 28, 40–47.

- [8] Qiu, T.; Chi, J.; Zhou, X.; Ning, Z.; Atiquzzaman, M.; Wu, D.O. Edge computing in industrial internet of things: Architecture, advances and challenges. IEEE Commun. Surv. Tutor. 2020, 22, 2462–2488.

- [9] Peng, K.; Huang, H.; Liu, P.; Xu, X.; Leung, V.C. Joint Optimization of Energy Conservation and Privacy Preservation for Intelligent Task Offloading in MEC-Enabled Smart Cities. IEEE Trans. Green Commun. Netw. 2022, 6, 1671–1682.

- [10] Ren, J.; Yu, G.; He, Y.; Li, G.Y. Collaborative cloud and edge computing for latency minimization. IEEE Trans. Veh. Technol. 2019, 68, 5031–5044.

- [11] Ren, J.; Yu, G.; Cai, Y.; He, Y. Latency optimization for resource allocation in mobile-edge computation offloading. IEEE Trans. Wirel. Commun. 2018, 17, 5506–5519.

- [12] Kai, C.; Zhou, H.; Yi, Y.; Huang, W. Collaborative cloud-edge-end task offloading in mobile-edge computing networks with limited communication capability. IEEE Trans. Cogn. Commun. Netw. 2020, 7, 624–634.

- [13] Saleem, U.; Liu, Y.; Jangsher, S.; Li, Y.; Jiang, T. Mobility-aware joint task scheduling and resource allocation for cooperative mobile edge computing. IEEE Trans. Wirel. Commun. 2020, 20, 360–374.

- [14] El Haber, E.; Nguyen, T.M.; Assi, C. Joint optimization of computational cost and devices energy for task offloading in multi-tier edge-clouds. IEEE Trans. Commun. 2019, 67, 3407–3421.

- [15] Naouri, A.; Wu, H.; Nouri, N.A.; Dhelim, S.; Ning, H. A novel framework for mobile-edge computing by optimizing task offloading. IEEE Internet Things J. 2021, 8, 13065–13076.

- [16] Bi, S.; Zhang, Y.J. Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading. IEEE Trans. Wirel. Commun. 2018, 17, 4177–4190.

- [17] Xing, H.; Liu, L.; Xu, J.; Nallanathan, A. Joint task assignment and resource allocation for D2D-enabled mobile-edge computing. IEEE Trans. Commun. 2019, 67, 4193–4207.

- [18] Zhao, C.; Cai, Y.; Liu, A.; Zhao, M.; Hanzo, L. Mobile edge computing meets mmWave communications: Joint beamforming and resource allocation for system delay minimization. IEEE Trans. Wirel. Commun. 2020, 19, 2382–2396.

- [19] Ning, Z.; Dong, P.; Kong, X.; Xia, F. A cooperative partial computation offloading scheme for mobile edge computing enabled Internet of Things. IEEE Internet Things J. 2018, 6, 4804–4814.

- [20] Li, J.; Zhang, X.; Zhang, J.; Wu, J.; Sun, Q.; Xie, Y. Deep reinforcement learning-based mobility-aware robust proactive resource allocation in heterogeneous networks. IEEE Trans. Cogn. Commun. Netw. 2019, 6, 408–421.

- [21] Chen, M.; Hao, Y. Task offloading for mobile edge computing in software defined ultra-dense network. IEEE J. Sel. Areas Commun. 2018, 36, 587–597.

- [22] Tang, M.; Wong, V.W. Deep reinforcement learning for task offloading in mobile edge computing systems. IEEE Trans. Mob. Comput. 2020, 21, 1985–1997.

- [23] You, C.; Huang, K.; Chae, H.; Kim, B.H. Energy-efficient resource allocation for mobile-edge computation offloading. IEEE Trans. Wirel. Commun. 2016, 16, 1397–1411.

- [24] Chen, J.; Xing, H.; Lin, X.; Nallanathan, A.; Bi, S. Joint resource allocation and cache placement for location-aware multi-user mobile edge computing. IEEE Internet Things J. 2022, 9, 25698–25714.

- [25] Dai, Y.; Zhang, K.; Maharjan, S.; Zhang, Y. Edge intelligence for energy-efficient computation offloading and resource allocation in 5G beyond. IEEE Trans. Veh. Technol. 2020, 69, 12175–12186.

- [26] Chen, J.; Chen, S.; Wang, Q.; Cao, B.; Feng, G.; Hu, J. iRAF: A deep reinforcement learning approach for collaborative mobile edge computing IoT networks. IEEE Internet Things J. 2019, 6, 7011–7024.

- [27] Yan, J.; Bi, S.; Zhang, Y.J.A. Offloading and resource allocation with general task graph in mobile edge computing: A deep reinforcement learning approach. IEEE Trans. Wirel. Commun. 2020, 19, 5404–5419.

- [28] Chen, Y.; Li, Z.; Yang, B.; Nai, K.; Li, K. A Stackelberg game approach to multiple resources allocation and pricing in mobile edge computing. Future Gener. Comput. Syst. 2020, 108, 273–287.

- [29] Huang, L.; Bi, S.; Zhang, Y.J.A. Deep reinforcement learning for online computation offloading in wireless powered mobile-edge computing networks. IEEE Trans. Mob. Comput. 2019, 19, 2581–2593.

- [30] Bi, S.; Huang, L.; Wang, H.; Zhang, Y.J.A. Lyapunov-guided deep reinforcement learning for stable online computation offloading in mobile-edge computing networks. IEEE Trans. Wirel. Commun. 2021, 20, 7519–7537.

- [31] Fang, C.; Liu, C.; Wang, Z.; Sun, Y.; Ni, W.; Li, P.; Guo, S. Cache-assisted content delivery in wireless networks: A new game theoretic model. IEEE Syst. J. 2020, 15, 2653–2664.

- [32] Fang, C.; Yao, H.; Wang, Z.; Wu, W.; Jin, X.; Yu, F.R. A survey of mobile information-centric networking: Research issues and challenges. IEEE Commun. Surv. Tutor. 2018, 20, 2353–2371.

- [33] Fang, C.; Xu, H.; Yang, Y.; Hu, Z.; Tu, S.; Ota, K.; Yang, Z.; Dong, M.; Han, Z.; Yu, F.R.; et al. Deep-reinforcement-learning-based resource allocation for content distribution in fog radio access networks. IEEE Internet Things J. 2022, 9, 16874–16883.

| Work | Offloading Mode | Optimization Variables | Objective | Methodology |
|---|---|---|---|---|
| [10] | Partial | , , , | D | Decomposition and Karush–Kuhn–Tucker conditions |
| [11] | Partial | , , | D | Lagrange multiplier method |
| [12] | Partial | , , | D | Successive convex approximation |
| [14] | Binary | , , , | E | Branch-and-bound |
| [16] | Binary | , , | R | Alternating direction method of multipliers (ADMM) and CD |
| [25] | Binary | , , | E | Deep deterministic policy gradient (DDPG) |
| [26] | Binary | , , | D, E | Monte Carlo tree search, DNN and replay memory |
| [27] | Binary | , | D+E | Actor–critic-based DRL |
| [30] | Binary | , , | R | Lyapunov optimization and DRL |
| Our work | Binary | , , , | Revenue maximization | CD and Lagrange multiplier method |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, L.; He, W.; Yao, H. Task Offloading and Resource Allocation for Tasks with Varied Requirements in Mobile Edge Computing Networks. Electronics 2023, 12, 366. https://doi.org/10.3390/electronics12020366