1. Introduction

Imagine a day when autonomous vehicles (AVs) travel across cities with ease: pedestrians are thrust into a complex world of uncertainty. Although AVs are a compelling part of society’s vision for the future, their ability to radically alter society depends on good human–machine interaction. AVs are key players in this closely integrated society, empowered by cutting-edge technology that enables smooth communication and data sharing between vehicles and infrastructure components [

1]. Establishing consistent real-time communication channels between AVs and people, particularly during interactions at crosswalks, represents the greatest barrier [

2].

Certain ideas appear hazy in this environment, particularly those dealing with complex human decision-making processes. Deciphering pedestrians’ intentions and behaviors can be difficult due to the complex factors influencing them. Decision making for pedestrians is challenging due to the intricacy of their cognitive processes [

3]. Behavioral data from pedestrians are also fuzzy in the sense used in fuzzy logic, which adds to the complexity. This inherent ambiguity makes decisions harder, and it becomes difficult to determine pedestrians’ complex actions and intents from such hazy data sources [

4].

A novel strategy that promotes the optimization of pedestrian decision making through the use of virtual reality (VR) technology has been developed in response to these complex issues. This method involves filming and studying pedestrian behavior in a regulated virtual reality setting to provide insights into human decision making. This methodology seeks to close the gap between complex human behavior and the realities of AV–pedestrian interactions through precisely planned trials [

5]. As researchers unravel the enigma of human behaviors, particularly at crosswalks, integrating external human–machine interface (eHMI) technologies has gained prominence [

6]. The designs and interactive components explored range from light bars and emoticon-like expressions to eye displays on moving vehicles. These initiatives seek to make communication between AVs and pedestrians more effective and clear, promoting safer encounters. However, there are many significant obstacles to good AV–pedestrian communication. A major challenge is the need for standardized eHMI communication protocols, which calls for solutions that account for different street designs, restricted pedestrian movement, and shared spaces. Overcoming these obstacles is consistent with the larger goal of developing mobility services while putting pedestrian safety first on busy roads [

7].

The National Highway Traffic Safety Administration (NHTSA) in the United States has identified technological challenges that must be overcome before eHMI can improve pedestrian safety around autonomous cars. These challenges include creating universally understandable eHMI signals, applying human-centric design concepts, ensuring technical compatibility with the multiplicity of AV sensors, cameras, and systems, and carrying out stringent testing and validation procedures [

8]. This research explores the complexities of human behavior and decision making to achieve seamless AV–pedestrian coexistence while utilizing the power of VR and cutting-edge eHMI systems. Untangling these hazy ideas is essential to creating a safer and more peaceful future for pedestrians and AVs as the autonomous mobility landscape changes. Fuzzy sets make it possible to represent uncertainty and ambiguity in data: membership functions quantify the degree to which an element belongs to a fuzzy set, and rules govern how input variables are processed to generate conclusions or actions in a fuzzy inference system. These ideas are widely applied to modeling and solving problems that involve imprecise or incomplete information in many domains, including pedestrian decision making at a crosswalk.
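To make the fuzzy-set terminology concrete, the following is a minimal sketch of a two-rule fuzzy inference step for a crossing decision. It is only an illustration: the input names (`gap_s`, `speed_kmh`), the membership breakpoints, and the two rules are assumptions chosen for the example and are not taken from this study.

```python
def shoulder_up(x, a, b):
    """Rising membership function: 0 below a, 1 above b, linear in between."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def shoulder_down(x, a, b):
    """Falling membership function, the complement of shoulder_up."""
    return 1.0 - shoulder_up(x, a, b)


def crossing_tendency(gap_s, speed_kmh):
    """Return a value in [0, 1]; higher means a stronger tendency to cross.

    gap_s: time gap to the approaching vehicle in seconds (assumed input).
    speed_kmh: vehicle speed in km/h (assumed input).
    """
    # Fuzzification with hypothetical breakpoints.
    gap_large = shoulder_up(gap_s, 2.0, 6.0)
    gap_small = shoulder_down(gap_s, 2.0, 6.0)
    speed_low = shoulder_down(speed_kmh, 10.0, 30.0)
    speed_high = shoulder_up(speed_kmh, 10.0, 30.0)

    # Rule evaluation: AND is modeled as min, OR as max.
    cross = min(gap_large, speed_low)   # IF gap is large AND speed is low THEN cross
    wait = max(gap_small, speed_high)   # IF gap is small OR speed is high THEN wait

    # Weighted-average defuzzification with "cross" = 1 and "wait" = 0.
    total = cross + wait
    return 0.5 if total == 0 else cross / total


if __name__ == "__main__":
    print(crossing_tendency(8.0, 5.0))    # large gap, slow vehicle
    print(crossing_tendency(4.0, 20.0))   # borderline case
    print(crossing_tendency(1.0, 40.0))   # small gap, fast vehicle
```

In this sketch a borderline input belongs partly to both "large gap" and "small gap", so the output falls between the two crisp decisions rather than switching abruptly at a threshold.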

1.1. Autonomous Vehicle

The development of AVs is a noteworthy technological accomplishment changing how we move. AVs represent a substantial technical advance and a potential answer to many transportation problems, changing how people view mobility (Howard and Dai, 2014) [

9]. The numerous benefits of AVs include better accessibility, improved efficiency, increased safety, and less environmental impact. Increased safety is a significant advantage: the NHTSA estimates that human error accounts for around 90% of vehicle collisions, a factor that AVs could largely reduce.

One of the key benefits of AV technology is that it enables the development of a new form of transportation that combines the best aspects of private and public transit: collectively owned, shared vehicles. Private vehicle ownership has increased since the development of the automobile. The research demonstrates the methodology for acquiring comprehensive data to conduct an in-depth attribute analysis of various 3D LiDARs designed for autonomous vehicles. The data collected allow for evaluating LiDAR performance in key areas, including measurement range, accuracy, data density, object detection capabilities, mapping and localization efficiency, and resilience to harsh weather conditions and interference. Shared vehicle systems have been developed due to the unaffordability of individually owned vehicles and the need for similar mobility options. The study report developed a cooperative localization integrated cyber physical system in which vehicles close by share local dynamic maps. It decentralized a fusion architecture for system consistency to handle LDMs with ambiguous error correlations. The paper also suggested a consistent method for predicting relative vehicle poses using communicated polygonal shape models, a 2D LiDAR, and a point-to-line metric within an iterative closest-point method [

10]. Many of the obstacles to shared vehicle systems, from both the providers’ and users’ perspectives, will be removed by the development of completely autonomous vehicles, which will also improve the designs. Among these are moving vehicles to meet demand better and enhancing consumers’ access to the vehicles. In several research studies, the integration of V2X communication into particular AD stack modules, such as perception [

11,

12] and planning [

13], is the main focus. Applications for perception, planning, and control are described in [

14], and a V2X communication-enhanced autonomous driving system for automobiles is also suggested.

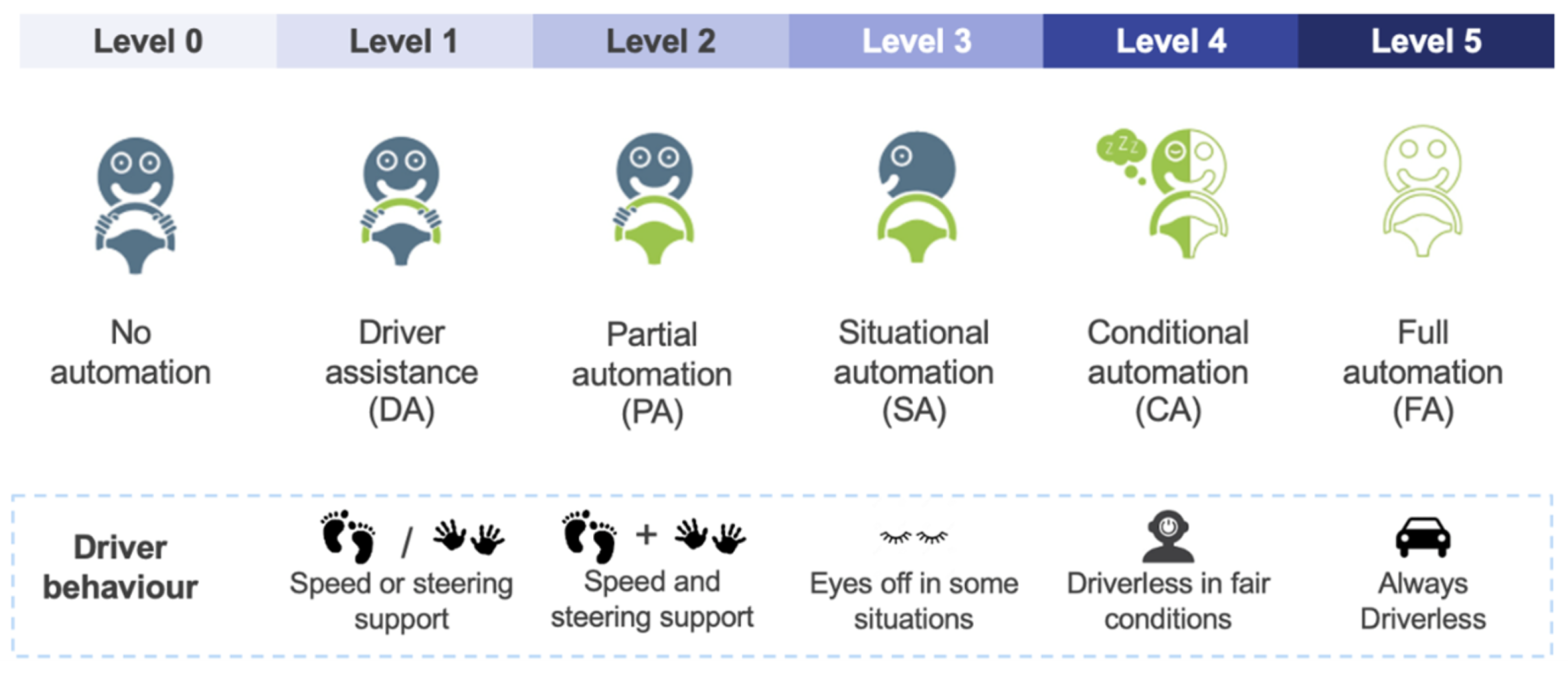

The Society of Automotive Engineers (SAE) [

15] has developed a vehicular automation classification system with six autonomy tiers. According to the complexity and level of sophistication of the automation technology used, these levels are depicted in

Figure 1 and are used to classify vehicles. In a related direction, the connected autonomous vehicle (CAV) data gathering and analytics platform for autonomous driving systems (ADSs) focuses on extracting, reconstructing, and analyzing vehicle trajectories through cooperative perception [

16].

1.2. eHMI

The eHMI describes the emotional interactions between people and machines, especially AVs. The usage of eHMI in AVs intends to improve communication between the vehicle and its users or occupants as well as the overall user experience. AVs can better understand and react to the emotions and intents of passengers or other road users by including emotional cues and reactions in the interaction [

18]. This may involve the vehicle expressing emotions visually or audibly through voice modulation, facial expressions, or other means of expression, as shown in

Figure 2. There are various possible advantages of eHMI integration in AVs. Increased trust, comfort, and user pleasure may result from a more natural and intuitive relationship between people and vehicles. As the car can modify its behavior and responses based on the emotional state of the occupants or other drivers on the road, eHMI can also help to create a safer and more individualized driving experience [

19,

20]. Overall, eHMI in AVs seeks to develop a more engaging and emotionally intelligent relationship between people and AVs, enhancing safety at crosswalks and personalization while making driving more fun and effective [

21].

The growing adoption of eHMI technology in autonomous vehicles (AVs) necessitates increased standardization. This is essential for regulatory compliance, industry-wide consistency, and a seamless user experience. Challenges arise from varied approaches to expressing emotions in AVs. To ensure interoperability, additional standardization is vital. Collaboration among industry stakeholders, researchers, and regulators is pivotal in establishing global eHMI standards, simplifying processes, and promoting uniformity in AVs.

1.3. Necessity of eHMI

The integration of AVs into smart cities and their presence on roadways are becoming increasingly common as automotive technology develops. Fully autonomous or semi-autonomous vehicles lack a driver interface, which raises safety and security concerns among pedestrians and cyclists at crossings, in contrast to conventional manually driven cars, where pedestrians and cyclists can communicate with the driver [

23]. To improve interactions with pedestrians and ensure the safety and security of all road users, independent automotive firms like Mercedes, Waymo, and Zoox actively search for the best solutions globally [

24].

The external human–machine interface, often known as eHMI, is a crucial idea that has evolved to address this problem. eHMI focuses on developing strategies that aid dialogue and comprehension between autonomous cars and cyclists or pedestrians. It seeks to offer a smooth and effective interaction environment that promotes confidence and trust [

25,

26].

eHMI comprises various elements that help to create a safer and more productive environment for interactions between AVs and pedestrians, and it is not limited to vehicle design. Among these elements, vehicles may employ particular technologies, visual signals, and movement patterns to communicate their awareness and intentions to pedestrians, particularly in crosswalk and pedestrian crossing scenarios [

27]. Interactions between vehicles and pedestrians are complex and varied. At crosswalks and during pedestrian crossing decisions, AVs may give pedestrians considerable information about their awareness and intentions through visible movement patterns such as speed, acceleration, and stopping distance [

28].

As eHMI research advances, it is anticipated to significantly improve the general safety and effectiveness of autonomous cars on the road, leading to a more peaceful cohabitation between autonomous technology and human road users, as depicted in

Figure 3.

1.4. Pedestrian Interaction with Automated Vehicles

Recent technological developments have contributed significantly to the development of AVs and to the emergence of self-driving vehicles as a prominent aspect of our society. Well-known developers of autonomous vehicles include the Google self-driving car project, Zoox, and Lyft, among others [

30].

A novel concern arises: despite rigorous testing and data-driven enhancements for pedestrian safety in autonomous vehicles (AVs), how can we instill confidence in pedestrians, especially when there is no human driver? The focus now shifts to the interaction between pedestrians, cyclists, and AVs. The concept of eHMI emerges as a solution to this challenge, bolstering system understanding. Additionally, we are exploring the potential development of smart pole interaction units (SPIUs) for shared spaces, aiming to enhance the interaction between AVs and their surroundings. A general overview of how AVs, their interaction with pedestrians, and the types of communication fit into one societal solution is shown in

Figure 4.

Improving pedestrian safety in autonomous driving also requires the use of cooperative perception [

31]. Real-world autonomous driving perception-related projects have significantly advanced this subject. First, integrating inertial sensors and LiDAR technology has increased the precision of autonomous vehicle positioning and orientation for map-matching localization in various situations [

32]. This development guarantees that autonomous vehicles can accurately map and track moving objects, such as pedestrians, even in difficult situations like crowded cities or bad weather. Second, the “Hydro-3D” system focuses on hybrid object identification and tracking for cooperative perception and uses 3D LiDAR sensors. Finally, creating a data collection and analysis framework for automated driving systems is essential for cooperative automation. With real-time connectivity and advanced data processing, this technology enables effective data sharing between vehicles, enhancing safety and autonomous decision making [

33].

Figure 4.

Pedestrian interaction with shuttle AV while crossing the crosswalk [

34].


1.4.1. Importance of Pedestrian Safety at Crosswalks

Walking, a sustainable and health-conscious mode of transportation, is crucial in reducing traffic congestion in urban areas. It contributes to energy conservation and fosters a pleasant environment by minimizing noise and air pollution [

35]. Furthermore, it offers long-term environmental advantages. By improving pedestrian infrastructure and expanding access to public transportation, cities can enhance liveability and contribute meaningfully to the fight against climate change [

36]. While vulnerable road users (VRUs), including pedestrians, motorcyclists, and cyclists, lack protective barriers against accidents [

37,

38,

39], pedestrians are particularly at risk. According to the World Health Organization (WHO) assessment of global road safety, approximately 275,000 pedestrian fatalities occur worldwide each year due to car collisions [

40]. This report underscores that pedestrians and cyclists account for 26% of all road accident deaths globally [

40]. In the United States and the European Union, pedestrian fatalities comprise more than 14% and over 21% of traffic fatalities, respectively [

41]. Research shows that most pedestrian–vehicle collisions occur at intersections [

42,

43].

1.4.2. AV Intentions in Unsignaled Shared Spaces

A shared space, also known as a naked street, aims to create an environment where different road users can interact and share the space equitably. Traditional traffic control tools like traffic signals, signs, and road markings are purposely minimized or removed in shared spaces to encourage more socially engaged and cooperative behavior among users [

44,

45]. The concept of shared space emphasizes negotiation and human dialogue over strict adherence to traffic laws and regulations. It aims to highlight the needs of cyclists and pedestrians while allowing for automotive traffic, promoting social contact, enhancing safety as depicted in

Figure 5, and establishing a sense of place [

46,

47]. In the future, AVs will play a prominent role in our daily lives, performing various tasks such as autonomous delivery carts that operate in shared spaces. However, the interactions between AVs and pedestrians in these open shared environments have yet to be studied extensively. Most existing research has focused on traditional traffic settings with traffic lights, overlooking this crucial traffic scenario [

48,

49]. Recognizing the importance of addressing AV-to-pedestrian interactions in shared spaces, we have devised an innovative approach, moving beyond sole reliance on eHMI displays on individual AVs. We encounter several challenges: firstly, clarifying the standard right-of-way definition in shared environments is crucial. In the absence of traffic lights or designated crossings, pedestrians may need guidance to determine safe crossing points, potentially leading to confusion and conflicts with vehicles. Secondly, heightened vulnerability is observed in shared spaces, where pedestrians are more exposed than vehicles.

The novelty of this research lies in introducing the smart pole interaction unit (SPIU) concept for shared spaces, which aids pedestrians in making decisions when interacting with autonomous vehicles (AVs) in the absence of traditional traffic signs and lights. Our findings show that the SPIU significantly improved pedestrian–vehicle interactions, reducing cognitive load and improving response times, especially in multiple-vehicle scenarios. While our study provides valuable insights, future research will expand the participant pool and validate the prototype in real-world settings.

2. Related Works

This section presents the background and related work on three topics: (i) the framework of developing eHMI, (ii) the evaluation of pedestrian interaction with AVs, and (iii) shared space.

2.1. The Framework of Developing eHMI

One of the significant goals of eHMI and automated vehicle development, shared by researchers and the European Commission, is to enhance road safety by minimizing fatalities and improving interactions between vehicles and pedestrians. This objective becomes particularly important as we strive for a future where AVs operate on our roads [

50]. A concise design chart summary has been developed and tested. Regarding safety improvements, effective communication and interaction between vehicles and pedestrians play a crucial role. It is important to consider the various vulnerable road users, including pedestrians, cyclists, and wheelchair users. In fully autonomous vehicles, following the levels defined by the Society of Automotive Engineers (SAE), the driver is no longer actively engaged in the driving activity. Consequently, the highest risk lies in the interaction between pedestrians and AVs. Research interest in this field has grown significantly, as evidenced by the rise in scholarly articles discussing pedestrian interaction with AVs, which increased from 3250 in 2011 to over 10,000 in 2020, according to Google Scholar [

50]. To address this challenge, the eHMI development holds considerable promise as a potential solution for pedestrian communication in general [

51].

There are many aspects involved in the pedestrian decision input process when facing AVs as depicted in

Figure 6. eHMI patterns can be classified based on their characteristics, including text, audio, light bars, one- and two-dimensional signals, road projections, symbols, eye movements, tracking lights, smiles, infrastructure components, and mobile devices. These patterns have been identified and tested through extensive investigation. However, the ethical and privacy issues raised by eHMI interactions with pedestrians make finding the best solution difficult. Although most research has concentrated on pedestrian interactions with AVs approaching from one direction, our research examines new scenarios involving interactions with smart poles. This wider perspective lets us bring a new approach to the eHMI field, especially when two AVs approach from different directions [

7].

Pedestrian Interaction with AVs Evaluation

Pedestrian interaction with AVs is crucial for ensuring road safety. While low-automation AVs have demonstrated their validity over the years, examining the most recent statistics on AV crashes, particularly regarding crash severity, is essential [

53]. As AV technology has advanced, with improved sensors and accessibility, the question of pedestrian interaction in complex scenarios and situations without traffic lights or signboards remains a significant challenge [

54,

55].

In manual driving, pedestrians can interact with drivers and make decisions, especially in shared spaces where traffic lights and signboards are absent. However, the absence of a driver in an AV can lead to bottlenecks and confusion, potentially resulting in severe accidents [

56]. Therefore, finding solutions to improve pedestrian interaction with AVs is crucial. Numerous research studies focus on AV–pedestrian interaction from the perspective of the AV, which is responsible for responding quickly and prioritizing safety [

57]. The “eyes on a car” concept is a well-known line of research showing how eyes mounted on AVs help pedestrians understand the AVs’ intentions [

58]. These studies often delve into deep learning, sensor technology, and algorithms to assist AVs in detecting pedestrians in different environments, including crosswalks and traffic signals [

19,

59].

However, simulation-based approaches have limitations when examining various parameters related to AV–pedestrian safety. A few studies, such as Day (2019) [

60], have found no statistically significant effect of AVs on pedestrian safety compared to conflicts with manually driven vehicles [

61,

62]. This raises the question of investigating pedestrian behavior in real-life environmental conditions or implementing real-time management systems that effectively convey intentions to pedestrians [

63,

64].

Researchers in the human factors field have proposed various eHMIs for high-level AVs to bridge the communication gap between pedestrians and AVs. These interfaces aim to improve the understanding and predictability of AV behavior, enhancing pedestrian safety and trust in autonomous systems. The role of human drivers changes as automation levels rise: they become passive bystanders who no longer actively engage in traffic encounters. As a result, there is a gap in the interaction between pedestrians and AVs. As depicted in

Figure 7, this break in conventional communication might cause traffic to become unclear and tense.

Careful consideration has been given to what information should be presented on the eHMI and how to present it [

66]. To handle this, four information types were proposed as a classification for eHMI design: information about the AV’s intentions, its perception of the surrounding area, its cooperative capabilities, and its driving state [

67]. Several comparison studies were carried out to evaluate the effects of various eHMI setups. The impact of having no eHMI, status eHMI, status + perception eHMI, status + intent eHMI, and status + perception + intent eHMI was investigated. Among these setups, the status + intent eHMI was the most beneficial in improving the user experience [

67,

68,

69]. Furthermore, studies showed that pedestrians found egocentric eHMI signals more understandable than allocentric ones. Whenever the message was unclear, pedestrians tended to interpret it from their own perspective. To further describe the eHMI notion, an 18-dimensional categorization was suggested [

70]. Physical qualities, usability, and realism are the three main categories covered by this categorization, which covers the important eHMI aspects from both viewpoints [

71]. Many studies have also been conducted regarding the internal cabin interaction aspect, such as a study that helps in the development of HCI in smart car interiors and verifies the viability of glazing projection displays [

72].

2.2. Shared Space

The theories of the Dutch traffic engineer Hans Monderman are the foundation of the shared space notion, also known as a naked street. He suggested a different strategy for controlling urban traffic that emphasizes the variety of human activities. Contrary to 1980s traffic control strategies, shared space does not prioritize limiting automobile traffic and speeds [

44]. Instead, it promotes voluntary behavioral adjustments among all road users, backed by suitable public space design and organization. By substituting informal social norms for official traffic regulations like traffic signs and other traditional traffic engineering components, the intention is to encourage better traffic behavior. The absence of traffic signals, signage, and directional indicators, together with the clean and open design of public places, characterizes shared space [

73].

The goal is to promote the shared space by using available traffic space within a defined region while maintaining basic traffic. It is a design idea that aims to make the road safe and agreeable for all users, including automobiles, bikes, and pedestrians [

47]. Traditional traffic management devices like lights and signage are purposefully reduced or completely removed in shared spaces. Another goal is to encourage genuine human interaction and compromise among drivers [

45]. The eHMI is essential in shared spaces because it enables good coordination and decision making by giving pedestrians and AVs clear and simple communication in places where there are no traffic lights or signboards, as depicted in

Figure 8, which shows a picture of a shared space in the Netherlands. According to [

74] shared space requires creating public spaces where motorists are treated as transient visitors. They realize that not everyone will connect with the other users of the shared area and might not act socially responsibly. However, because only around 10% of people engage in this activity, it is considered appropriate in a wider context. The bulk of automobile drivers and their behavior are the main emphasis. This presumption might not be true. First, people walking through the shared space could feel uncomfortable and disconnected. Second, hazards increase when a sizable percentage of drivers act recklessly. The link between reckless behavior and mishaps is more complicated, though. Accidents are uncommon, unusual events. According to studies like Verschuur (2003), those who break traffic laws (about 10–15% of automobile drivers) are more likely to get in collisions than people who scrupulously abide by the rules and show respect for the skills and hazards of others.

There are some earlier studies on shared space, with interactions between AVs and pedestrians [

76]. Effective communication between AVs and pedestrians has received much attention in recent years [

77]. Recently, the focus has switched to how AVs may clearly and understandably communicate their intentions to pedestrians, empowering pedestrians to make judgments. In this regard, several approaches have been investigated, including using eye-on-car-like characteristics on the front of AVs, incorporating smiling features by Semcon on the front of the vehicle, and using light bars on AVs [

58,

78,

79]. However, most of these studies have focused on how a single vehicle interacts with its users. Particularly around 2010, shared space growth brought fresh difficulties to the fore. According to reports from New Zealand, there is more uncertainty for pedestrians in public places since there are no obvious channels of engagement and communication, especially for youngsters and older people [

46,

73,

80]. Previous research on this subject has mostly concentrated on conventional road segments, and the idea of shared spaces has provoked conflicting responses from various road user groups. Beyond the technological features of AVs, building trust and comprehending AV intentions when approaching pedestrians in shared environments is paramount. Using eHMI concepts to increase societal acceptability is a potential strategy. The current study intends to address this issue by supporting the responsibilities of AVs in shared spaces and resolving the aforementioned communication difficulty between pedestrians and AVs. It should be noted that significant research is needed to improve the feasibility of AVs in shared spaces with pedestrians [

81,

82].

Tokyo has many shared spaces. In several spots we have visited in Tokyo, as depicted in

Figure 9, we can observe the interactions between automobiles, bicycles, and pedestrians without the usual traffic signs or signals. We captured this occurrence in Bunkyo (a) and Arakawa (b), Tokyo, Japan, where traffic passes, particularly during rush hours. Looking into the future, with the introduction of AVs, it becomes crucial to consider putting in place an efficient eHMI system in these shared spaces to emphasize pedestrian safety. By using aspects of eHMI technology, we may provide an organized and secure environment that guarantees the safety and effectiveness of these interactions. As AVs become prevalent in urban planning, new social challenges arise. They must navigate through crowds and handle interactions formerly managed by human drivers. In response, scientists and engineers are diligently creating algorithms for AVs to navigate public spaces while adhering to social norms regarding proximity and motion [

80]. The absence of human drivers prompts questions about AVs’ ability to navigate diverse environments and select appropriate interaction methods. Despite ongoing AV technology improvements, shared spaces need more insights into real pedestrian–vehicle interactions. Additionally, robust data are essential to support relevant external interaction design [

83].

These uncertainties raise important issues, such as how AVs might interact with pedestrians in a shared space. As researchers, we stress the need for further study and for empirical data to understand how AVs may successfully navigate shared spaces and interact with pedestrians safely and respectfully [

73]. This information will be crucial for refining AV algorithms, choosing suitable engagement strategies, and eventually promoting the incorporation of AVs into our urban settings. Researchers, urban planners, engineers, and politicians must work together to overcome these issues and realize the full potential of AVs in common areas. It is crucial to remember that AVs have more safety measures and interaction resources to afford a higher-risk situation [

84].

We can summarize the issues that we have in shared spaces and the interaction of AVs with pedestrians.

1. Lack of communication in the shared space area.

2. Uncertainty and trust.

3. Right of way.

4. Communication in case of multiple AVs from opposite directions.

Our approach is to design a VR simulation environment for a shared space in order to examine the interaction of AVs with pedestrians, study one-way (one AV) and two-way (two AVs approaching from opposite directions) interactions, and evaluate the outcome and effectiveness of the proposed approach, which combines a smart pole interaction unit (SPIU) with an eHMI on AVs for shared spaces.

3. Proposed Method: SPIU and Virtual Environment

As depicted in

Figure 10, our suggested solution includes a VR environment that simulates a shared space with congested roadways. It was created on the Unreal Engine platform. We have placed AVs without human drivers in this setting, giving the impression that the vehicles are moving independently. To heighten the realism, we included a crosswalk so that participants can see the AVs in one-way and two-way scenarios.

We have included two SPIUs, one at either end of the crossing, to facilitate efficient communication between the AVs and pedestrians. As the AVs approach, these devices use illumination to let pedestrians know what the AVs intend to do. This concept enables pedestrians to understand when it is safe to pass and when to stop as AVs approach, based on the illuminated signals emitted by the SPIU and the eHMI on the AVs. By employing the Unreal Engine and VR technologies, our system offers an immersive experience that imitates the dynamics of a shared space setting with lanes. Adding virtual AVs and the SPIU improves realism and makes it easier to examine pedestrian responses and interactions in a safe and controlled virtual environment.
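One way to picture how an SPIU could combine the intentions of one or two approaching AVs into a single illuminated signal is sketched below. This is a hypothetical illustration, not the logic implemented in the study: the names `AvIntent`, `SpiuSignal`, and `spiu_signal`, and the conservative rule that the pole shows "pass" only when every approaching AV is yielding, are assumptions made for the example.

```python
from enum import Enum


class AvIntent(Enum):
    """Intention communicated by an approaching AV (hypothetical labels)."""
    YIELDING = "yielding"          # the AV intends to stop for the pedestrian
    NOT_YIELDING = "not_yielding"  # the AV intends to continue


class SpiuSignal(Enum):
    """Signal shown by the smart pole (hypothetical labels)."""
    PASS = "pass"
    STOP = "stop"


def spiu_signal(approaching):
    """Combine the intents of all approaching AVs into one pole signal.

    approaching: list of AvIntent, one entry per approaching AV
    (one entry in the one-way scenario, two in the two-way scenario).
    Assumption: an empty list means no AV is approaching, so PASS is shown.
    """
    if all(intent is AvIntent.YIELDING for intent in approaching):
        return SpiuSignal.PASS
    return SpiuSignal.STOP


if __name__ == "__main__":
    # One-way scenario: a single yielding AV.
    print(spiu_signal([AvIntent.YIELDING]))
    # Two-way scenario: one AV yields, the other does not.
    print(spiu_signal([AvIntent.YIELDING, AvIntent.NOT_YIELDING]))
```

Under this assumed rule, the two-way case is where the pole adds information beyond a single vehicle's eHMI, because a pedestrian watching only the yielding AV would otherwise miss the second vehicle's intention.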

The envisioned scenario takes place in stages. First, we purposefully exclude traffic signs and lights to create a specific real-world traffic scenario within a shared space context. The goal is to see how participants make decisions around AVs in such an environment. We use the Unreal Engine and VR headsets to portray this scenario, allowing participants to fully immerse themselves in the digital world. We then add a pressure-based rating system that uses the trigger button of the VR controller. Participants indicate their confidence in their choice to cross the road on a scale from 0 to 1, where 0 means low confidence and 1 means high confidence. By examining the confidence value together with the corresponding time, and identifying the precise second at which confidence reaches a value of 1, we can gauge how soon participants decide to cross. This knowledge sheds light on how participants make decisions.
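The decision-time measure described above can be sketched as follows. The sketch assumes that the trigger pressure is logged as time-ordered `(time_s, confidence)` pairs with confidence in the range 0 to 1; this logging format and the function name `time_to_full_confidence` are hypothetical and chosen only for illustration.

```python
def time_to_full_confidence(samples, threshold=1.0):
    """Return the first time at which confidence reaches the threshold.

    samples: iterable of (time_s, confidence) pairs ordered by time,
             where confidence is the trigger pressure in [0, 1].
    Returns None if the threshold is never reached in the trial.
    """
    for time_s, confidence in samples:
        if confidence >= threshold:
            return time_s
    return None


if __name__ == "__main__":
    # Hypothetical trial: the participant gradually presses the trigger.
    trial = [(0.0, 0.0), (0.5, 0.3), (1.0, 0.8), (1.5, 1.0), (2.0, 1.0)]
    print(time_to_full_confidence(trial))

    # Hypothetical trial in which full confidence is never reached.
    hesitant = [(0.0, 0.0), (1.0, 0.4), (2.0, 0.6)]
    print(time_to_full_confidence(hesitant))
```

Returning `None` for trials that never reach full confidence keeps them distinguishable from fast decisions when response times are later averaged.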

3.1. Smart Pole Interaction

3.1.1. Unit Design Sketch

The primary objective of this concept is to leverage VR technology to visualize the SPIU and test its effectiveness. This approach will allow us to implement these designs in real-world shared spaces more efficiently and precisely. The conceptual SPIU design is depicted in

Figure 11. The motivation behind this research stems from the challenges posed by traditional traffic lights in shared spaces. Because space is limited and traffic lights and signboards are unsuitable in such areas, an alternative interaction system is needed to cater to high pedestrian traffic. The proposed smart pole design seeks to address these limitations. While we envision a future dominated by AVs, we acknowledge that manual drivers will remain a part of the transportation landscape. Hence, the SPIU concept presents a future-proof solution that serves as an interaction unit and also conveys vital information about approaching AVs through messages, illumination, sounds, advertisements, and real-time interaction. This functionality is intended to improve pedestrian safety and efficiency in shared spaces. Our current participant study tests the effectiveness of the SPIU concept using VR simulations. By exploring different illumination-based designs, we can evaluate its performance in one-way and two-way scenarios; the current work focuses on its effectiveness in conveying the intention of approaching AVs. This evaluation process will allow us to refine the concept further and assess its practicality before it is implemented in real-world settings.

3.1.2. Unit in Virtual Reality

This subsection will describe each scenario of the proposed SPIU method. The SPIU and virtual reality concept is described in

Section 3.1.3. The participants' tasks are described in

Section 3.2. The usability of the smart pole interaction unit is described in

Section 3.2.1. In

Section 3.2.2, we explain why SPIU is more effective in shared space than traditional traffic lights. The description of the Unreal Engine is given in

Section 3.3. We also include the scenarios used in VR, which are played during testing.

3.1.3. Proposed Method: SPIU

We have developed a virtual SPIU design with a vertical cylindrical shape. In the VR simulation scenarios, the SPIU notifies pedestrians before an AV arrives at the crosswalk. The SPIU has a height of 150 cm and a width of 35 cm. Its key feature is a cylindrical screen, which displays an eHMI to communicate intentions to pedestrians. We used two colors, cyan and red, to convey different messages. In our study, cyan represents the Pass situation for the AV: the AV will continue through the crosswalk, so pedestrians should halt their movement. Cyan is considered a neutral color for communicating the AV's intention, here that the AV will Pass. In contrast, red represents the Stop situation for the AV: the AV will stop, so pedestrians can start their movement, as described in

Figure 12. These color assignments were based on a previous study by Dey et al. [

85]. Our main objective is to examine how pedestrians interact with the SPIU when AVs approach the crosswalks. To accomplish this, we designed various scenarios in virtual reality; the test setup for the participants is shown in

Figure 13 and

Figure 14.
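Because the color coding describes the AV's intention rather than a command to the pedestrian, the mapping is easy to misread. The sketch below spells it out; the enum and dictionary names are hypothetical and serve only to illustrate the convention described above.

```python
from enum import Enum


class AVIntention(Enum):
    """Intention of the approaching AV, as signaled by the eHMI and the SPIU."""
    PASS = "pass"  # AV continues through the crosswalk
    STOP = "stop"  # AV stops before the crosswalk


# Color shown on both the AV eHMI and the SPIU for each intention.
SIGNAL_COLOR = {AVIntention.PASS: "cyan", AVIntention.STOP: "red"}

# Action expected from the pedestrian (hypothetical labels).
PEDESTRIAN_ACTION = {AVIntention.PASS: "wait", AVIntention.STOP: "cross"}


def spiu_display(intention: AVIntention) -> tuple:
    """Return (color, expected pedestrian action) for a given AV intention."""
    return SIGNAL_COLOR[intention], PEDESTRIAN_ACTION[intention]


for intention in AVIntention:
    print(intention.name, spiu_display(intention))
```

Note that red, which signals that the AV will Stop, corresponds to the pedestrian being able to cross.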

These scenarios enabled us to evaluate the effectiveness of conveying intention cues through the SPIU and eHMI. In the VR scenarios, we install the SPIU beside the crosswalk and close to the stopping line, a position chosen to ease participants' decision making. When an AV approaches the crosswalk, the SPIU responds by illuminating with a solid color, signaling the AV's intention to the pedestrian. This eliminates the need for pedestrians to check the eHMI on each AV individually, fostering a more organic and cohesive shared space experience. The SPIU also ensures effective communication when the eHMI of an approaching vehicle is not visible, especially in cases where it is obstructed by other vehicles in front. Pedestrians waiting to cross can rely on the SPIU to indicate the intentions of the upcoming vehicles. The SPIU thus introduces a unique approach to facilitating dynamic interactions between pedestrians and AVs in one-way and two-way scenarios, which enhances safety on the shared road.

We ran the experiment on a single machine with an Intel i7 CPU and a high-end graphics card (NVIDIA GeForce RTX 3070), the only platform the program supports. This configuration delivers a smooth user experience, sustaining a frame rate between 90 and 120 Hz.

3.2. Participants Tasks

A participant study was conducted to assess the effectiveness of the SPIU and AV eHMI, as depicted in

Figure 13 and

Figure 14. Participants in the study viewed the scenarios while wearing VR headsets, and their responses were captured using the VR trigger. Eight scenarios were included, both with and without the SPIU, for a total of 64 trials per participant. The scenarios were presented to the participants in random order to avoid order effects and keep the procedure consistent. The study aimed to determine how well the SPIU and AV eHMI performed in a virtual reality setting.
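A randomized trial order of this kind can be sketched as follows. The sketch assumes that the eight scenarios are the combinations of direction (one-way or two-way), AV intention (Pass or Stop), and SPIU presence, and that the 64 trials per participant are split evenly into eight repetitions per scenario; the even split, the condition labels, and the function name are assumptions for illustration.

```python
import random
from collections import Counter
from itertools import product

DIRECTIONS = ("one-way", "two-way")
AV_INTENTIONS = ("pass", "stop")
SPIU_OPTIONS = (True, False)


def build_trial_schedule(repetitions=8, seed=None):
    """Return a shuffled list of (direction, intention, with_spiu) trials.

    Every scenario appears exactly `repetitions` times (assumed: 8),
    giving 8 scenarios x 8 repetitions = 64 trials.
    """
    conditions = list(product(DIRECTIONS, AV_INTENTIONS, SPIU_OPTIONS))
    trials = conditions * repetitions
    # A seeded generator makes a participant's order reproducible.
    random.Random(seed).shuffle(trials)
    return trials


schedule = build_trial_schedule(seed=1)
counts = Counter(schedule)
print(len(schedule), len(counts))
```

Seeding the generator per participant would allow the exact presentation order to be reconstructed during analysis.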

We invited 16 participants to take part in this study. Their task involved making decisions about crossing the road with and without the SPIU under two different conditions: (1) with the AV eHMI coming from one direction only (one-way scenarios) and (2) with the AV eHMI approaching from two different directions (two-way scenarios). These scenarios are described in

Section 3.3.1 and

Section 3.3.2, respectively. Participants used the VR trigger button to indicate their intention, which allowed us to measure their decision-making time. They were instructed not to press the trigger button if they had not reached a decision. The meanings of the different colors on the eHMI were not disclosed to the participants. By wearing the VR headset and using the trigger button, the participants actively engaged with the virtual environment and provided valuable feedback based on their understanding over multiple trials. The VR scenarios represented approaching AVs and allowed participants to interpret the intentions conveyed by the eHMI. Stadler et al. report considerable differences in decision times between the control group and several HMI (human–machine interface) concepts. Specifically, the HMI concepts showed average reaction times between 2.0 and 3.0 s, whereas the control group's average reaction time was 4.8 s. They discuss these results in detail in their study [

86]. Furthermore, virtual reality (VR) technology was used to explore pedestrian safety in a separate study by Deb et al. [

87] in 2017, providing a safe option for test subjects. We also utilize VR technology in our research and intend to broaden our experiments in follow-up studies, building on their knowledge. Our study includes methods addressing real-life challenges, including vehicles coming from opposite directions. The participants considered information from the SPIU and the eHMI displayed on the AV to make precise decisions.

3.2.1. Usability of Smart Pole Interaction Unit

The SPIU’s main goal is to promote interactions in shared space areas without traditional traffic signals or lights, as depicted in

Figure 15. Because traditional traffic control infrastructure may not be present in these places, it is essential to allow for adequate communication between AVs and pedestrians to prevent traffic jams and reduce the likelihood of accidents in a future where AVs, such as delivery carts, share these roads.

We have taken several pictures around Tokyo showing suitable sites for placing the smart pole. Residential neighborhoods, college campuses and their pedestrian crossings, and shared roadways in Bunkyo are a few examples of these places. By implementing the SPIU in these areas, pedestrians could make decisions confidently, and AV technology could gain more widespread acceptance in society, as depicted in

Figure 15.

By incorporating the SPIU, AVs can engage with pedestrians in these shared space environments dependably and effectively. The SPIU would present pedestrians with a visual interface or signals indicating that the AV has detected them and intends to concede the right-of-way. This improves safety while also fostering trust between AVs and pedestrians. Additionally, the SPIU's use in many contexts illustrates its adaptability and potential for wide adoption, which helps to advance the acceptance and integration of AVs in general.

The SPIU is an inventive response to the particular difficulties presented by shared spaces without conventional traffic lights. By putting it into practice, we can make the environment shared by pedestrians and AVs safer and calmer, paving the way for the seamless integration of autonomous technology into our urban environments.

3.2.2. Reason for Using SPIU at Shared Space

We summarize four points concerning traditional traffic lights in shared spaces:

1. Limited Space and Aesthetics: Shared spaces often have limited area and complex road layouts, making it challenging to install traditional traffic lights. The SPIU concept offers a compact and flexible design with a vertical cylindrical shape, enabling it to fit into these crowded environments without obstructing pedestrian movement.

2. Easy Understanding and Communication: The proposed SPIU and eHMI offer a more user-friendly and efficient communication system. Unlike traditional traffic lights, which do not communicate with AVs, the SPIU's cylindrical screen with color-coded messages (e.g., cyan for Pass and red for Stop) provides pedestrians with clear, real-time, easy-to-understand intentions of approaching AVs.

3. Future-Proof Solution: As we transition into a future with both autonomous and manually driven vehicles on the road, the SPIU offers a future-proof solution. It can serve as a dynamic communication platform, adaptable to display messages, sounds, advertisements, and vehicle intentions, making it versatile for different scenarios and technologies.

4. Improved Safety and Interaction: The SPIU ensures effective communication even when the eHMI of an approaching vehicle is not clearly visible. By removing the need for pedestrians to check each AV's eHMI individually, the SPIU fosters more organic and cohesive shared space experiences. It enhances safety and interaction between pedestrians and AVs, making the shared space more harmonious and secure.

3.3. System Implementation with Unreal Engine

Unreal Engine is a source-available platform that provides researchers with a practical approach to creating and prototyping ideas and generating scenarios as needed. Unreal Engine can be obtained from the company website at

https://www.unrealengine.com (accessed on 1 October 2022). Once downloaded, the Epic Games Launcher starts and allows one to install Unreal Engine on a computer.

The Unreal Engine blueprint capability is one of its best qualities. Thanks to this tool, VR prototypes can be quickly changed and iterated upon. Blueprints offer a straightforward approach for connecting processes and streamlining the workflow pipeline, as shown in

Figure 16. We also used the C++ programming language during development to implement the functionality that the workflow required. Objects such as houses, vehicles, trees, roads, and many more can be included in VR experiences from the Unreal Engine's extensive library. These pre-existing assets greatly accelerate the creation process, enabling one to concentrate on developing immersive worlds. To store data during VR simulations using a headset, we also developed blueprints and included scripts for each scenario.

As depicted in

Figure 16, in this part of our study, we have built a pipeline for VR simulation, which forms the basis for our testing. The car model, BPCar (blueprint of the car), is an essential part of this pipeline since it is used to simulate numerous situations. We ensure consistency and modularity by using the same car model in all settings when executing the pipeline, as depicted in

Figure 17, making it simpler for participants to follow the user testing procedure. The scenarios that we study cover both one-way and two-way cases, in which either one AV or two AVs are present. In the following parts, we detail each scenario, explaining its characteristics and goals. Additionally, we can independently set each design's vehicle motion and speed while keeping that speed constant across simulations to guarantee fairness and comparability.

We have placed the SPIU next to the crossing in the VR simulation. The AV can interact with its surroundings through this fixed device. Additionally, a static eHMI bar integrated into the AV conveys important information to pedestrians. The HTC Vive Pro 2 headset was used to render the various views needed by the one-way and two-way scenarios, allowing all participants to experience the simulation from the same point of view. Last, we continuously modify the blueprints and simulations based on target instructions and testing. This iterative approach keeps the simulation adaptive while maintaining viability and usefulness. Using this full pipeline, we want to provide an immersive and realistic VR environment that allows for in-depth testing and study of eHMI systems in AVs.

3.3.1. SPIU and AV eHMI 1-Way Scenarios for Pass and Stop

In our study, we carried out a thorough testing phase in which participants engaged with eight scenarios. The SPIU was used in half of these scenarios and left out of the other half. Each participant went through 64 trials in total, covering scenarios with and without the SPIU. We documented participant responses, thought processes, and comprehension of the given circumstances throughout the trials. Scenarios 1 to 4 are shown in

Figure 18.

Scenario 1: In this case, an AV communicates its intention through both the SPIU and the eHMI on the vehicle as it approaches a crosswalk in a shared space area. The eHMI shows the AV's intention with a cyan signal, informing pedestrians that the vehicle will keep moving forward. To provide pedestrians with a clear indication, the SPIU simultaneously displays the same cyan indicator. Because of the coordinated communication, pedestrians can anticipate the AV's path and plan accordingly. When pedestrians notice the cyan signal, they can discern that the AV is about to Pass and that crossing the street is unsafe, and they can take appropriate action.

Scenario 2: In this case, an AV communicates its intention through both the SPIU and the eHMI on the vehicle as it approaches a crosswalk in a shared space area. This time, the eHMI shows a red signal, which indicates the AV's intention to Stop. The SPIU simultaneously lights the same red signal to give pedestrians a visual cue. Pedestrians may confidently cross the street when they see the red signal since they know the AV will Stop for them. This coordinated communication between the AV and the SPIU fosters safety and effective traffic flow by establishing a clear and consistent understanding between pedestrians and the AV.

Scenario 3: In this case, an AV uses only its eHMI to express its intention as it approaches a crosswalk in a shared space area. The eHMI visibly communicates the AV's intention to pedestrians with a cyan indicator, signaling that it intends to go forward. However, without coordinated communication between the AV and an SPIU, there may be decreased trust and predictability regarding the AV's planned course. To cross the street safely, pedestrians may require more information than just the cyan signal since they must determine whether the AV is genuinely about to Pass or whether there is still time for them to cross.

Scenario 4: In this case, an AV uses only its eHMI to express its intention as it approaches a crosswalk in a shared space area. The eHMI visibly communicates the AV's intention to pedestrians with a red indicator, signaling that it intends to Stop. However, without coordinated communication between the AV and an SPIU, there may be decreased trust and predictability regarding the AV's planned course. To cross the street safely, pedestrians may require more information than just the red signal since they must determine whether the AV is genuinely about to Stop and whether there is enough time for them to cross.

These scenarios highlight the SPIU's contribution to improving the interaction between AVs and pedestrians in shared space VR scenarios. By working with the AV's eHMI, the SPIU conveys the AV's intention, whether to Pass (shown in cyan) or to Stop (shown in red). This visual cue encourages safer and more amicable interactions between AVs and pedestrians in shared environments while assisting pedestrians in making informed judgments.

3.3.2. SPIU and AV eHMI 2-Way Scenarios for Pass and Stop

In the two-way scenario, we will have the AVs approach from two opposite directions. First, we will conduct experiments with only the eHMI on the vehicles. Next, we will introduce both eHMI and SPIU illumination, allowing us to record and analyze which setup enables users to make more effective decisions, as depicted in

Figure 19 and

Figure 20.

Scenario 1: In this case, two AVs are approaching the participant from different directions but only have an eHMI in the front of the vehicle. Participants must choose whether to Pass or Stop based on the AV eHMI intention. The color cyan on the eHMI means that the vehicle will Pass.

Scenario 2: In this case, two AVs are approaching from different directions but are only equipped with an eHMI. Participants must choose whether to Pass or Stop based only on the AV eHMI intention. Here, the eHMI interpretation of red means that the AV will Stop.

Scenario 3: In this case, AVs are coming from both sides, and an eHMI on the AVs and an SPIU are used to communicate the intentions of the vehicles to pedestrians simultaneously. The eHMI on the AV and the SPIU flash the same cyan indicator before the AV gets close to the crossing, indicating that the AV will Pass.

Scenario 4: In this case, AVs are coming from both sides, and an eHMI on the AVs and an SPIU are used to communicate the intentions of the vehicles to pedestrians simultaneously. The eHMI on the AV and the SPIU flash the same red color indicator before the AV gets close to the crossing, indicating that the AV will Stop.

These examples emphasize the value of clear and constant communication in shared environments via the eHMI and SPIU. These interfaces help users to make knowledgeable decisions and promote safer interactions between AVs, pedestrians, and other AVs by communicating intents and behaviors.

4. Results and Evaluation

4.1. Procedure of User Study Session with Participants

We conducted trials in this study with 16 individuals aged 18 to 27: four women and twelve men. The average age of the participants was 24.5. Before the experiments, they received instructions and information to familiarize them with the goals and procedures, and we began once the participants were prepared. The total number of participants is N; therefore, N = 16.

We used VR simulations to collect more detailed and qualitative data. After the VR simulation, participants were given a survey to obtain their thoughts and ideas. These responses were an important source of information for examining and evaluating the experiment results. We wanted to improve the study's overall quality by incorporating participant input to gain a deeper understanding of their experiences and viewpoints.

The participant pool and the questionnaire-based feedback reflect our aim of providing a thorough study. These measures allow us to collect various viewpoints and assess the usefulness and usability of the procedures put into place. We seek to contribute to eHMI research and advance developments for shared spaces through careful design and study in VR.

To help the participants in our experiment navigate the research process, we created the flow chart seen in

Figure 21. The user study starts with a briefing and introduction phase in which we provide the participants with detailed instructions about the research goal and timeline. In the second phase, we introduce the SPIU without expressly specifying the meaning of the various colors, to prevent bias. We then go on to the third phase, in which VR scenarios are presented to each participant in random order. This set includes both one-way and two-way scenarios, with and without the SPIU. Sixty-four trials are completed, with data recorded concurrently for all sessions.

After the VR experience, participants are given a link to a survey via a barcode, allowing them to reply to 11 questions about their opinions and experiences. We record their replies for further examination. Additionally, we speak with each participant in person to address any issues that they might have had with the experiment and to obtain their feedback on how to improve it. Using this flow chart and various methods, including briefings, VR scenarios, data collection, questionnaires, and interviews, we aim to understand how participants experienced and perceived the SPIU. With this strategy, the efficacy and user experience of the interaction unit are assessed methodically.

4.2. Control Setup with VR Devices and Trigger

Four keys comprise the fundamental control scheme of the VR simulation, each with a distinct purpose. These keys are used to interact with the virtual environment during the simulation. The first key, the space bar on the keyboard, stops the simulated vehicle; pressing it halts the virtual AV, enabling a brief pause or an inspection of the environment. The second key, labeled moving, sets the virtual AVs in motion again, which helps us to provide a stable and realistic driving scenario within the virtual world. The third key controls the eHMI system. When it is enabled, it unlocks several interactive features and controls within the simulation: we can change various settings, obtain information, or perform certain virtual activities related to the simulation goals. The fourth key, SPIU, controls the virtual SPIU in the simulated world. Manipulating the SPIU with this key enables dynamic exchanges and creates more options for immersive experiences.

A VR viewing system complements the control system. It provides users with a fully immersive visual perspective, allowing them to perceive the simulated environment in a three-dimensional and interactive manner, which enhances the simulation's overall realism and engagement. Together, the control system and the VR viewing setup let users navigate and interact with the VR simulation effectively. For data recording, we gathered data on the participants' decision-making processes and response times. This was achieved by tracking the time at which each participant pressed the trigger button, as shown in

Figure 22, and whether they decided to cross. We may learn more about participants' decision-making speed and effectiveness by examining the time spent. Additionally, we recorded the pressure applied to the VR trigger button, which ranges from 0 to 1. Based on the pressure data, we can estimate how confident participants are in their choices.

The reaction time and the pressure applied to the trigger button are the two pieces of data that were captured and kept for later examination. With this method, we can probe deeper into the participants' cognitive processes and degree of certainty in their choices. By looking at these recorded data points, we can learn about the variables affecting decision making in the context of our research. In the end, this knowledge assists in creating an effective and efficient eHMI for the scenarios and adds to a more thorough understanding of the pedestrians' intention to Pass or Stop at the crosswalk.
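As a minimal sketch of how these two measurements could be stored per trial, the example below writes trial records to CSV. The record fields, identifiers, and values are hypothetical and chosen for illustration; they do not reproduce the logging scripts used in our Unreal Engine scenarios.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class TrialRecord:
    """One recorded trial (field names are illustrative assumptions)."""
    participant_id: int
    scenario: str
    with_spiu: bool
    reaction_time_s: float  # time from scenario start to trigger press
    peak_pressure: float    # trigger pressure in [0, 1], used as confidence


def write_trials(records, stream):
    """Write trial records as CSV rows with a header line."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(TrialRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Made-up example values, not study data.
records = [
    TrialRecord(1, "one-way-pass", True, 2.4, 1.0),
    TrialRecord(1, "one-way-pass", False, 3.1, 0.8),
]
buffer = io.StringIO()
write_trials(records, buffer)
print(buffer.getvalue())
```

Writing to any file-like stream means the same function can target a file on disk or an in-memory buffer during testing.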

4.3. VR Scenario Simulation

We investigated the effectiveness of an SPIU and AV eHMI within a VR simulation environment. The task of the participants involved making decisions about crossing the road with and without an SPIU under two different conditions: (1) with the AV eHMI coming from one direction only (one-way scenarios), and (2) with the AV eHMI approaching from two different directions (two-way scenarios). Participants used the VR trigger button to indicate their intention, which allowed us to measure their decision-making time. Participants were instructed not to press the trigger button if they had not reached a decision. If a participant unintentionally pressed the button, we excluded those data. We continuously monitored the scenario through on-screen data. If participants made erroneous decisions during data recording, we paused and excluded those results from further consideration. The meanings of the different colors on the eHMI were not disclosed to the participants. By wearing the VR headset and using the trigger button, the participants actively engaged with the virtual environment and provided valuable feedback based on their understanding over multiple trials. The VR scenarios represented approaching AVs and allowed participants to interpret the intentions conveyed by the eHMI. Our study includes methods addressing real-life challenges, including vehicles coming from opposite directions. The participants can consider the information from the SPIU and the eHMI displayed on the AVs to make precise decisions.

4.4. VR Device Specification

We used the HTC Vive Pro 2 to test and run the VR simulations. Its adaptability for testing and simulations is one of its main advantages. Thanks to its comprehensive features and specifications, this VR system can be used for various purposes, from scientific research and professional training to gaming and leisure activities. The HTC Vive Pro 2 is distinguished by its high-resolution display, which considerably improves the visual experience. The device has a pixel density of 615 pixels per inch (PPI) with a combined resolution of 2880 × 1600 pixels. These specifications produce crisp, vivid, and clear visuals, which are essential for building realistic simulations and giving users an engaging virtual environment. The HTC Vive Pro 2 also provides broad support for applications and development environments. Developers can construct specialized simulations suited to their needs by connecting with well-known game engines such as Unity and Unreal Engine; in our case, we used Unreal Engine. The device also supports several VR frameworks and application programming interfaces (APIs), making it possible for programs of many kinds to integrate with it. To make the most of the HTC Vive Pro 2 capabilities, a computer that satisfies the hardware requirements is essential. During testing and development, we used a computer with a powerful graphics card (NVIDIA GeForce RTX 3070), a fast processor (Intel Core i7), and sufficient RAM and storage capacity to guarantee fluid performance and handle large volumes of simulation data.

Measurement

We conducted our research to determine the effectiveness of the SPIU on the road by evaluating the participants' decision-making time, which we use as an indicator of cognitive load, for one-way scenarios vs. two-way scenarios, both with and without an SPIU. The decision-making time was recorded when participants pressed the trigger on a remote controller while wearing the VR headset. They used this button to indicate whether they would Pass or Stop on the crosswalk in the different scenarios. Additionally, we implemented a pressure-based rating on the trigger button, ranging from 0 to 1, to evaluate participants' confidence in their decision to cross the road. We used the confidence value and time to identify how quickly a participant decides to cross; that is, the time instance at which the confidence reaches the value 1. During the experiment, we took measures to exclude error trials by continuously monitoring the computer screen. When participants unintentionally pressed the trigger, we excluded these trials from our calculations. Throughout the experiment, we only considered the correct decisions made by the participants. To further explore the impact of the eHMI, we compared the cognitive load between scenarios with and without the SPIU. Our hypothesis was that AV eHMI interaction combined with the SPIU would reduce cognitive load. The findings obtained during the user study are summarized in the following results section. We aimed to address any concerns associated with its implementation and provide valuable insights to the research community. Our interactive presentation concisely conveys information and enables the audience to understand the significance of our work.
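The exclusion and aggregation steps described above can be sketched as follows. The dictionary keys (`valid`, `direction`, `with_spiu`, `decision_time_s`) and the sample values are hypothetical and serve only to illustrate the procedure; they are not our recorded data.

```python
from collections import defaultdict
from statistics import mean


def mean_decision_time(trials):
    """Average decision time per (direction, with_spiu) condition.

    Trials flagged as invalid (e.g., unintentional trigger presses or
    erroneous decisions) and trials without a decision are skipped.
    """
    grouped = defaultdict(list)
    for trial in trials:
        if not trial["valid"] or trial["decision_time_s"] is None:
            continue
        key = (trial["direction"], trial["with_spiu"])
        grouped[key].append(trial["decision_time_s"])
    return {key: mean(times) for key, times in grouped.items()}


# Made-up trials for illustration only.
trials = [
    {"direction": "one-way", "with_spiu": True, "decision_time_s": 2.0, "valid": True},
    {"direction": "one-way", "with_spiu": True, "decision_time_s": 3.0, "valid": True},
    {"direction": "one-way", "with_spiu": True, "decision_time_s": 0.2, "valid": False},
    {"direction": "one-way", "with_spiu": False, "decision_time_s": 4.0, "valid": True},
    {"direction": "two-way", "with_spiu": False, "decision_time_s": None, "valid": True},
]
summary = mean_decision_time(trials)
print(summary)
```

Conditions whose trials were all excluded simply do not appear in the summary, which avoids reporting an average over zero samples.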

4.5. Evaluation Scenario Results

4.5.1. Male Participants Result Graph

With N = 12 male volunteers, we performed the study, and after thorough analysis, we obtained some notable findings, as shown in

Figure 23.

Our data show that male participants had more difficulties in the Pass scenario than in the Stop scenario. The occurrence of a greater number of outliers in the Pass situations is the main cause of this divergence. A participant’s overall performance and experience might be considerably impacted by the existence of outliers, which are data points that differ significantly from the rest of the total data.

The predominance of outliers in the Pass scenario may make it harder for male participants to obtain positive results. Therefore, this performance discrepancy deserves further research and careful attention when designing and implementing interactive systems, particularly when male users are present.

For interactive systems to be inclusive and user-friendly, it is essential to comprehend these subtleties. Our study provides an opportunity to investigate potential remedies for the difficulties experienced by male participants and paves the way for more equal and effective interactions between various user groups.

In conclusion, we obtain the following understanding:

1. Male participants faced more difficulties in the Pass scenario than the Stop scenario.

2. The presence of a higher number of outliers in the Pass situations is the primary reason for this difference in performance.

3. Outliers are data points that significantly deviate from the rest of the data and can considerably impact a participant’s overall performance and experience.

4. Upon comparing response times during trials with and without the SPIU, a statistically significant improvement was observed when utilizing the SPIU, with a p-value of less than 0.001.
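The text does not state how outliers were identified. As one common convention, the sketch below flags response times that fall more than 1.5 interquartile ranges outside the quartiles (Tukey’s rule). The rule, the function name `tukey_outliers`, and the response times are assumptions for illustration only, not the study’s method or data.

```python
import statistics
from typing import List, Sequence


def tukey_outliers(values: Sequence[float], k: float = 1.5) -> List[float]:
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # statistics.quantiles with n=4 returns the three quartile cut points.
    q1, _median, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical response times (seconds) for one scenario.
pass_times = [1.8, 1.9, 2.0, 2.1, 2.2, 2.3, 2.4, 4.9]
print(tukey_outliers(pass_times))
```

Counting the flagged points per scenario would give a simple way to compare the Pass and Stop conditions on the number of outliers.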

4.5.2. Female Participants Result Graph

We now discuss the findings of the study with female participants as the sample. After thoroughly analyzing the data, we found some notable results. The study measured how quickly female participants responded to different scenarios using the SPIU. Our results show that female participants’ response times decreased consistently across all scenarios. This finding is consistent with the average outcomes across all scenarios, as depicted in Figure 24, which should be consulted for a fuller understanding of these results. These unexpected findings suggest that the SPIU might improve female users’ response times, which could positively affect productivity and efficiency. Additional studies with a larger and more varied sample would help to validate and generalize these findings. Because this analysis included only female participants, it is worthwhile to compare these trends with those of the male participants. Understanding potential gender disparities in response times when using an SPIU can help in creating inclusive and efficient user interfaces. In conclusion, our findings show that female participants’ response times were reduced across a range of scenarios when using the SPIU.

In conclusion, we obtain the following understanding:

1. Across all circumstances, the female participants exhibited a decrease in response times, which aligns with the average outcomes observed in all scenarios.

2. However, this improvement in response time did not reach statistical significance at the 0.05 level, so it could not be established due to the limited sample size.

3. The unexpected findings suggest that implementing the SPIU could enhance female users’ response times, increasing productivity and efficiency.

4. To validate and extend these results, additional studies with a larger and more diverse sample size are planned for our future work.

4.5.3. Average Response Time for All the Participants

In Table 1, we examine the average response time for the participants across different scenarios. Our analysis reveals notable findings when an SPIU is used. In the two-way scenarios with an SPIU, we observed a 21% improvement in response time for the two-way Stop scenario and a 9% improvement for the two-way Pass scenario. This indicates that the SPIU can enhance interaction efficiency both when pedestrians need to Stop and when they need to Pass.

In the one-way Pass scenario with an SPIU, we observed a more modest 2.19% improvement in response time, which still indicates some positive impact on interaction dynamics. The more noteworthy one-way result emerged in the one-way Stop scenario, where response time decreased by 9.12% with an SPIU. This indicates that the SPIU can considerably expedite interactions where pedestrians must stop and yield to vehicles.
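The percentage improvements reported above are relative reductions in mean response time. A minimal sketch of that calculation follows; the function name `percent_improvement` and the example times are hypothetical and are not the values from Table 1.

```python
def percent_improvement(baseline: float, with_spiu: float) -> float:
    """Relative reduction in response time, in percent.

    `baseline` is the mean response time without the SPIU and `with_spiu`
    is the mean response time with it (both in the same unit).
    A positive result means responses were faster with the SPIU.
    """
    if baseline <= 0:
        raise ValueError("baseline response time must be positive")
    return (baseline - with_spiu) / baseline * 100.0


# Hypothetical mean response times in seconds (not taken from Table 1).
print(f"{percent_improvement(2.00, 1.58):.2f}%")
```

Because the improvement is expressed relative to the baseline, scenarios with different absolute response times can be compared on the same scale.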

Moreover, our study highlighted that participants in the two-way scenario performed notably better with the SPIU when compared to the one-way scenario. This suggests that SPIU effectiveness is more pronounced in scenarios involving interactions with multiple vehicles, such as crosswalks and intersections. The SPIU’s ability to convey intentions that closely emulate real environmental cues contributes to this improved performance, allowing pedestrians to interact more naturally and confidently with the traffic around them.

Hence, our research demonstrates the significant benefits of SPIUs in enhancing pedestrian–vehicle interactions. By leveraging SPIU technology, we can create more efficient and safer pedestrian environments, particularly in multiple-vehicle scenarios. These findings have important implications for developing advanced eHMI interaction systems that closely resemble natural and intuitive interactions in the real world.

5. Discussions

In our research, we conducted a paired t-test for statistical analysis. We observed a significant improvement (p = 0.001) when utilizing an AV eHMI with an SPIU in the one-way Stop, two-way Pass, and two-way Stop scenarios. However, in the one-way Pass scenario, the advantage of the SPIU could not be statistically distinguished. We also investigated the effect of the SPIU on the cognitive load of pedestrians in different scenarios. To do so, we used the confidence value and time to identify how quickly a participant understands the method and decides to cross; that is, when the confidence reaches the value 1. To summarize this information, we plotted timestamps for the different scenarios with and without an SPIU, as depicted in Figure 25. For the one-way scenarios in Figure 25, although the response time for one-way Stop was already better than that for one-way Pass with only an eHMI, using an SPIU further decreased the response time for both the Pass and Stop scenarios. A similar benefit of the SPIU holds for the two-way Pass and Stop scenarios, supporting the effectiveness of the SPIU in reducing cognitive load. Furthermore, we examined the difficulty of deciding between Pass and Stop in one-way vs. two-way scenarios with only an eHMI on the AV. Our data analysis revealed a significant delay in decision making in the two-way scenarios; participants therefore had difficulty making decisions in two-way scenarios when relying solely on an eHMI. In conclusion, our study highlights the advantages of the SPIU in facilitating quicker decision making, thus reducing the cognitive load of participants, and highlights the challenges associated with relying solely on an eHMI in complex traffic scenarios, such as multiple AVs in a shared space.
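The paired t-test mentioned above compares each participant’s response time without and with the SPIU. The sketch below computes the test statistic and degrees of freedom using only the standard library; the function name `paired_t_statistic` and the sample times are hypothetical. Obtaining the p-value additionally requires the t distribution, for example through a statistics package such as SciPy’s `scipy.stats.ttest_rel`.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_statistic(
    without_spiu: Sequence[float], with_spiu: Sequence[float]
) -> Tuple[float, int]:
    """Return (t, degrees_of_freedom) for a paired t-test.

    The two sequences must hold one value per participant, in the same order.
    Assumes the paired differences are not all identical (non-zero spread).
    """
    if len(without_spiu) != len(with_spiu):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(without_spiu, with_spiu)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two pairs are required")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation of the differences
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical per-participant response times in seconds.
no_spiu = [2.0, 2.4, 2.2, 2.6]
spiu = [1.8, 2.0, 2.1, 2.1]
t_value, dof = paired_t_statistic(no_spiu, spiu)
print(round(t_value, 3), dof)
```

A positive t value here means that response times were, on average, shorter with the SPIU than without it.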

We conducted a survey to assess the performance and user experience of the SPIU at pedestrian crossings. Sixteen people who had recently engaged with the SPIU were interviewed for the survey. One of the important questions was whether the participants had any prior experience using SPIUs at pedestrian crossings; 93.8% of the respondents said that this interactive system was their first experience. These data show that SPIUs are still new to most users, which marks them as novel tools for traffic control and pedestrian safety. To test the efficacy of the SPIU in shared space scenarios, we divided the question into one-way and two-way situations. A total of 62.5% of users gave favorable responses in one-way scenarios when the SPIU presented information unidirectionally, indicating their approval of its efficiency. However, a substantially greater share, 80%, found it beneficial in two-way instances when the SPIU engaged in interactive dialogue. This implies that SPIUs have a greater impact when they can include users in a dynamic information exchange, improving their overall experience and feeling of safety. We also examined the participants’ perceptions of safety when utilizing an SPIU at pedestrian crossings. A total of 93.8% of those polled said that SPIUs gave them peace of mind when deciding whether to cross the street. This outcome indicates that SPIUs can influence pedestrian behavior favorably and support safe crossing.

We also asked about the potential effects of SPIUs in reducing accidents at pedestrian crossings. All respondents indicated confidence and agreed that the SPIU idea might help to lower accidents at these crucial places. This unanimous endorsement highlights the potential influence of SPIUs on AV interaction and underscores the usefulness of these devices in planning the infrastructure of smart cities and shared spaces.

After carefully examining the data shown in the graphs for the one-way Pass, one-way Stop, two-way Pass, and two-way Stop scenarios, we reached the following conclusions about the benefits of the SPIU:

1. In the case of one-way and two-way Stop scenarios, SPIUs have a significant advantage.

2. In the case of the one-way Pass scenario, we found no appreciable benefits above the baseline.

3. The two-way Pass scenario showed considerable gains in reaction time.

4. Participants had more difficulties during the two-way Pass scenario than during the one-way Pass scenario, as seen by the longer average reaction times.

In conclusion, the two-way Pass scenario proved more difficult for participants than the one-way Pass scenario, yet it also showed larger gains in response time with the SPIU. These results underline the need for more research into the variables affecting participant performance and the potential benefits of SPIUs in particular traffic circumstances.

6. Conclusions and Future Works

This research investigates the shared space environment, specifically how pedestrians use the proposed SPIUs for decision making in the absence of traditional traffic signs and lights, in the context of AVs. Our focus lies in understanding how individuals interpret SPIU cues to make well-informed choices when deciding whether to cross or halt at crosswalks. We explored eight scenarios, covering one-way and two-way traffic, with 16 participants. Each participant completed 64 trials to evaluate decision making while crossing crosswalks. While our current study provides valuable insights, we recognize the need to increase the number of participants in future research, particularly to achieve gender balance.

This will allow us to investigate potential significant differences in decision making across various scenarios. Another limitation is that our results have so far been obtained only in the VR environment; we aim to validate our prototype through real-world testing with AVs in shared spaces, in which participants will encounter the same scenarios so that the results can be compared with those of the VR study. A further limitation of the current study is its sole reliance on color coding to convey information. To address this, we plan to explore textual and sound presentation methods with SPIUs. By doing so, we hope to contribute to the development of more effective and inclusive pedestrian safety measures in shared spaces, ensuring that individuals from diverse linguistic backgrounds can easily comprehend and respond to the cues provided by AVs and SPIUs. Future research will also be conducted in a more dynamic and complex environment before we draw firm conclusions about effective interaction with AVs in shared spaces.