VNF Migration in Digital Twin Network for NFV Environment

Abstract

1. Introduction

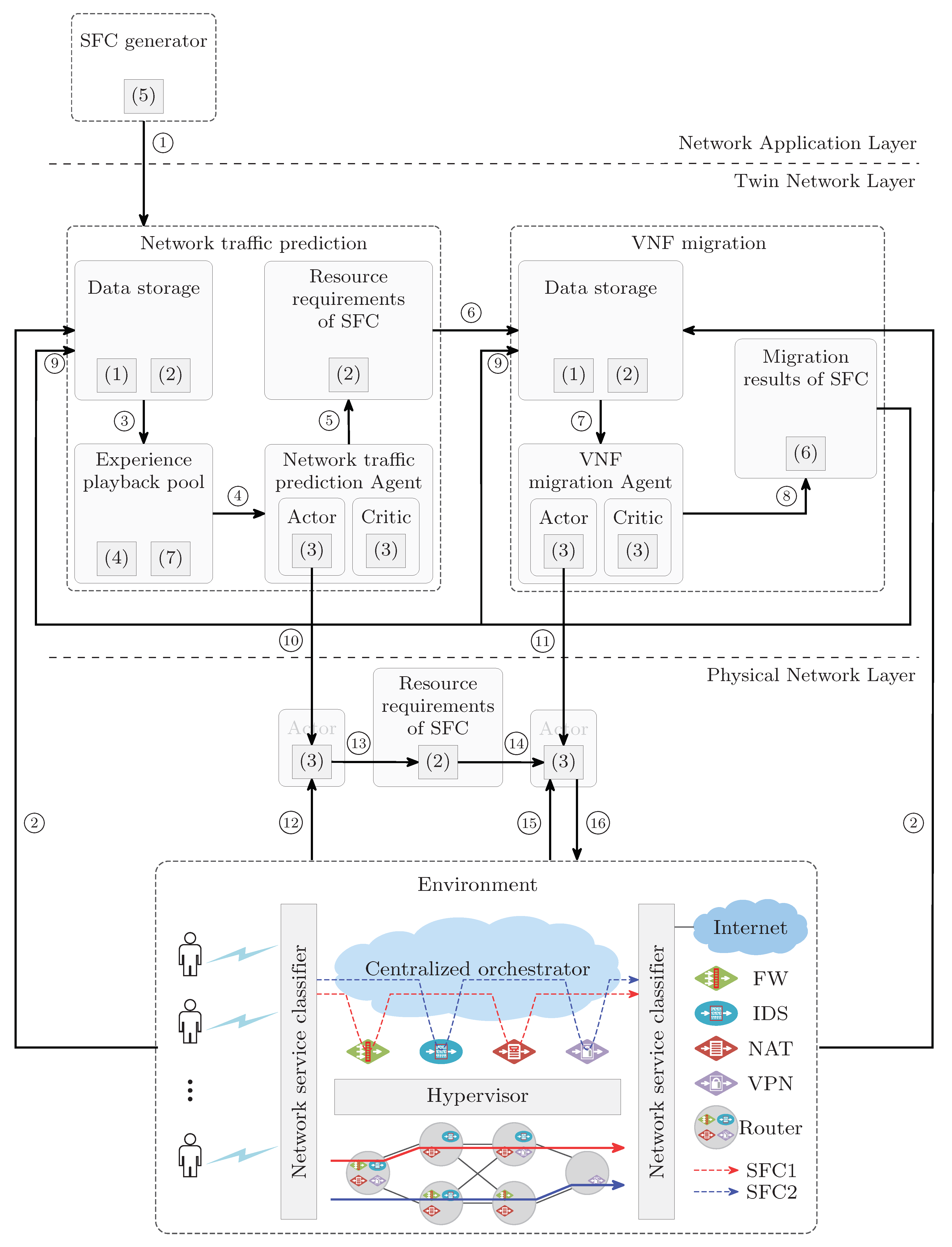

- Introducing the DT network architecture: In our approach, we integrate DT technology to construct a network architecture that faithfully simulates the real-time state and dynamic characteristics of the physical network. By mapping the physical network’s entities into the virtual realm, we design a DT network architecture tailored to handle time-varying network traffic efficiently.

- Addressing dynamic VNF migration: We tackle the challenge of VNF migration in a dynamic NFV network environment, where migration is triggered by fluctuating network traffic. To improve VNF migration decisions, we propose an agent based on the Actor–Critic model and a Graph Convolution Network (AC_GCN).

- DRL algorithm for efficient VNF migration: We introduce a DRL algorithm based on AC_GCN to determine the migration targets and formulate VNF migration strategies. The primary goal is to maximize the request acceptance rate while reducing migration frequency and energy consumption as much as possible.

- Performance analysis and key factors evaluation: In our study, we thoroughly analyze the performance of the proposed algorithms.

2. Related Work

2.1. VNF Migration

2.2. Traffic-Aware VNF Migration

2.3. Digital Twin Network

3. Network Architecture Design and Problem Statement

3.1. The Architecture of the DT Network in NFV Environment

3.2. Problem Model

3.2.1. System Model

- (1) Physical Network

- (2) SFC request

3.2.2. Problem Formulation

4. AC and GCN-Based Agent

4.1. Framework of Markov Decision Process (MDP)

4.1.1. State

4.1.2. Action

4.1.3. Reward

- (1) Penalty: The cost encompasses penalties associated with energy consumption, VNF migration frequency, and SFC request rejection.

- (a) The first scenario: When the mapping of SFC request i succeeded in the previous time slot but failed in the next time slot, the penalty covers the failure of the SFC request mapping in the next time slot, the number of failed nodes and links associated with the SFC request, and a punitive term for a mapping that was originally successful in the previous time slot. The penalty is expressed in terms of a constant coefficient, the penalty for the failed nodes within SFC request i in time slot q, and the penalty for the failed links within SFC request i. The node penalty is calculated from the number of VNFs in SFC request i and the penalty for each failed VNF j of SFC request i in time slot q. The penalty for a failed VNF depends on a constant coefficient for the failed mapping of the node, the requested CPU of VNF j in SFC request i, the physical node to which VNF j is mapped, and the maximum and residual CPU capacity of that physical node. The link penalty in Equation (10) is calculated from an intermediate term that itself involves a constant coefficient.

- (b) The second scenario: When the mapping of SFC request i failed in both the previous and the next time slot, the penalty is associated with the failure of the SFC request mapping in the next time slot, as well as with the failed nodes and links.

- (c) The third scenario: When the mapping of SFC request i failed in the previous time slot but succeeded in the next time slot, the penalty is associated with energy consumption and the failed mapping of SFC request i in the previous time slot. It involves a constant coefficient for energy consumption and the energy consumption of SFC request i in the next time slot q, which is calculated from the maximum and minimum amounts of energy consumed by the physical node.

- (d) The fourth scenario: When the mapping of SFC request i succeeded in both the previous and the next time slot, the penalty takes into account both energy consumption and the number of migrated nodes. It involves a constant coefficient for node migration and the total number of migrated physical nodes in SFC request i. This total is calculated from an indicator of whether VNF j in SFC request i is migrated from physical node m to physical node n in time slot q, taken over the set of physical nodes in the physical network and the set of virtual nodes in SFC request i.

- (2) Award: The award value for the action taken in the next time slot is determined by comparing the award in the previous time slot with the award in the next time slot.

- (a) The first scenario: When the mapping of SFC request i succeeded in the previous time slot but failed in the next time slot, the award is the negative value of the successful mapping award, which involves a constant coefficient for successful mapping.

- (b) The second scenario: When the mapping of SFC request i failed in both the previous and the next time slot, the award is defined by Equation (21).

- (c) The third scenario: When the mapping of SFC request i failed in the previous time slot but succeeded in the next time slot, the award is defined by Equation (22).

- (d) The fourth scenario: When the mapping of SFC request i succeeded in both the previous and the next time slot, the award is defined by Equation (23).

- (3) Reward: In the mapping scheme of SFC request i, the reward is calculated as the difference between the award and the penalty.
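The case structure of the reward can be illustrated with a minimal Python sketch. It only covers what the text states directly: the four scenarios are distinguished by whether the mapping of an SFC request succeeded in the previous and in the next time slot, the migration count records VNFs whose host node changed, and the reward is the award minus the penalty. The names `Scenario`, `classify`, `count_migrations`, and `reward` are hypothetical, and representing a placement as a dictionary from VNF to physical node is an assumption made for illustration; the scenario-specific penalty and award formulas are not reproduced here.

```python
from enum import Enum
from typing import Dict, Hashable


class Scenario(Enum):
    """Outcome of mapping one SFC request in two consecutive time slots."""
    SUCCESS_THEN_FAIL = "a"      # first scenario
    FAIL_THEN_FAIL = "b"         # second scenario
    FAIL_THEN_SUCCESS = "c"      # third scenario
    SUCCESS_THEN_SUCCESS = "d"   # fourth scenario


def classify(prev_ok: bool, next_ok: bool) -> Scenario:
    """Select the scenario from the mapping results of both time slots."""
    if prev_ok:
        return Scenario.SUCCESS_THEN_SUCCESS if next_ok else Scenario.SUCCESS_THEN_FAIL
    return Scenario.FAIL_THEN_SUCCESS if next_ok else Scenario.FAIL_THEN_FAIL


def count_migrations(prev_placement: Dict[Hashable, Hashable],
                     next_placement: Dict[Hashable, Hashable]) -> int:
    """Count VNFs hosted on a different physical node in the next slot.

    Assumption: a placement maps each VNF of one SFC request to the
    physical node that hosts it; this plays the role of the migration
    indicator described for the fourth scenario.
    """
    return sum(
        1
        for vnf, node in prev_placement.items()
        if vnf in next_placement and next_placement[vnf] != node
    )


def reward(award: float, penalty: float) -> float:
    """Reward of a mapping scheme: award minus penalty."""
    return award - penalty
```

For example, `count_migrations({"vnf1": "n3", "vnf2": "n5"}, {"vnf1": "n3", "vnf2": "n7"})` counts one migrated VNF, which would then enter the penalty of the fourth scenario through the node migration coefficient.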

4.2. Structure of the Agent for VNF Migration

4.2.1. Interactions among Components

4.2.2. Structure of the AC_GCN Agent

4.2.3. Structure of Actor Network

4.2.4. Structure of Critic Network

4.3. Update Methods for Parameters of the Neural Networks

4.4. Key Processes of the AC_GCN Agent

4.4.1. Predicting Process of the AC_GCN Agent

4.4.2. Training Process of the AC_GCN Agent

4.4.3. Predicting Process of GCN

4.4.4. Training Process of GCN

4.4.5. Function

5. Performance Evaluation

5.1. Simulation Setting

- Node CPU capacity range: [7, 10];

- Link bandwidth range: [400, 1000];

- Link latency range: [1, 4].
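As a rough illustration of how these ranges could be turned into a simulated physical network, the following Python sketch draws node and link attributes at random. Only the three ranges come from the settings above. The ring topology, the uniform integer sampling, and the names `make_physical_network`, `CPU_RANGE`, `BW_RANGE`, and `LATENCY_RANGE` are assumptions introduced for the example and are not taken from the paper.

```python
import random
from typing import Dict, Optional, Tuple

# Ranges from the simulation setting (units are not stated in the text).
CPU_RANGE = (7, 10)
BW_RANGE = (400, 1000)
LATENCY_RANGE = (1, 4)

Link = Tuple[int, int]


def make_physical_network(
    num_nodes: int, seed: Optional[int] = None
) -> Tuple[Dict[int, int], Dict[Link, Dict[str, int]]]:
    """Build a ring-shaped physical network with random attributes.

    Assumptions: ring topology and uniform integer sampling; the source
    specifies only the value ranges.
    """
    rng = random.Random(seed)
    # CPU capacity of every physical node.
    nodes = {n: rng.randint(*CPU_RANGE) for n in range(num_nodes)}
    links: Dict[Link, Dict[str, int]] = {}
    for n in range(num_nodes):
        m = (n + 1) % num_nodes
        if n == m:
            continue  # a single node has no links
        key = (min(n, m), max(n, m))  # undirected link, stored once
        links[key] = {
            "bandwidth": rng.randint(*BW_RANGE),
            "latency": rng.randint(*LATENCY_RANGE),
        }
    return nodes, links
```

Passing a fixed `seed` makes the generated network reproducible across runs, which is convenient when several algorithms are compared on the same topology.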

5.2. Compared Algorithms

5.3. Experiment and Simulation Results

5.3.1. Convergence of AC_GCN during Training

5.3.2. Comparison of Lagrangian and Other Metrics during Performance

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Liu, Q.; Tang, L.; Wu, T.; Chen, Q. Deep Reinforcement Learning for Resource Demand Prediction and Virtual Function Network Migration in Digital Twin Network. IEEE Internet Things J. 2023. early access.

- Ren, B.; Gu, S.; Guo, D.; Tang, G.; Lin, X. Joint Optimization of VNF Placement and Flow Scheduling in Mobile Core Network. IEEE Trans. Cloud Comput. 2022, 10, 1900–1912.

- Liu, Y.; Lu, Y.; Li, X.; Yao, Z.; Zhao, D. On Dynamic Service Function Chain Reconfiguration in IoT Networks. IEEE Internet Things J. 2020, 7, 10969–10984.

- Li, B.; Cheng, B.; Liu, X.; Wang, M.; Yue, Y.; Chen, J. Joint Resource Optimization and Delay-Aware Virtual Network Function Migration in Data Center Networks. IEEE Trans. Netw. Serv. Manag. 2021, 18, 2960–2974.

- Qu, K.; Zhuang, W.; Ye, Q.; Shen, X.; Li, X.; Rao, J. Dynamic Flow Migration for Embedded Services in SDN/NFV-Enabled 5G Core Networks. IEEE Trans. Commun. 2020, 68, 2394–2408.

- Wang, F.Y.; Qin, R.; Li, J.; Yuan, Y.; Wang, X. Parallel Societies: A Computing Perspective of Social Digital Twins and Virtual–Real Interactions. IEEE Trans. Comput. Soc. Syst. 2020, 7, 2–7.

- Sun, C.; Bi, J.; Meng, Z.; Yang, T.; Zhang, X.; Hu, H. Enabling NFV Elasticity Control With Optimized Flow Migration. IEEE J. Sel. Areas Commun. 2018, 36, 2288–2303.

- Eramo, V.; Miucci, E.; Ammar, M.; Lavacca, F.G. An Approach for Service Function Chain Routing and Virtual Function Network Instance Migration in Network Function Virtualization Architectures. IEEE/ACM Trans. Netw. 2017, 25, 2008–2025.

- Badri, H.; Bahreini, T.; Grosu, D.; Yang, K. Energy-Aware Application Placement in Mobile Edge Computing: A Stochastic Optimization Approach. IEEE Trans. Parallel Distrib. Syst. 2020, 31, 909–922.

- Cziva, R.; Anagnostopoulos, C.; Pezaros, D.P. Dynamic, Latency-Optimal vNF Placement at the Network Edge. In Proceedings of the IEEE INFOCOM 2018—IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 693–701.

- Song, S.; Lee, C.; Cho, H.; Lim, G.; Chung, J.M. Clustered Virtualized Network Functions Resource Allocation based on Context-Aware Grouping in 5G Edge Networks. IEEE Trans. Mob. Comput. 2020, 19, 1072–1083.

- Kumar, N.; Ahmad, A. Machine Learning-Based QoS and Traffic-Aware Prediction-Assisted Dynamic Network Slicing. Int. J. Commun. Netw. Distrib. Syst. 2022, 28, 27–42.

- Jalalian, A.; Yousefi, S.; Kunz, T. Network slicing in virtualized 5G Core with VNF sharing. J. Netw. Comput. Appl. 2023, 215, 103631.

- Bu, C.; Wang, J.; Wang, X. Towards delay-optimized and resource-efficient network function dynamic deployment for VNF service chaining. Appl. Soft Comput. 2022, 120, 108711.

- Xie, Y.; Wang, S.; Wang, B.; Xu, S.; Wang, X.; Ren, J. Online algorithm for migration aware Virtualized Network Function placing and routing in dynamic 5G networks. Comput. Netw. 2021, 194, 108115.

- Qin, Y.; Guo, D.; Luo, L.; Zhang, J.; Xu, M. Service function chain migration with the long-term budget in dynamic networks. Comput. Netw. 2023, 223, 109563.

- Chintapalli, V.R.; Adeppady, M.; Tamma, B.R.; Franklin, A. RESTRAIN: A dynamic and cost-efficient resource management scheme for addressing performance interference in NFV-based systems. J. Netw. Comput. Appl. 2022, 201, 103312.

- Shen, X.; Gao, J.; Wu, W.; Li, M.; Zhou, C.; Zhuang, W. Holistic Network Virtualization and Pervasive Network Intelligence for 6G. IEEE Commun. Surv. Tutor. 2022, 24, 1–30.

- Lu, Y.; Huang, X.; Zhang, K.; Maharjan, S.; Zhang, Y. Low-Latency Federated Learning and Blockchain for Edge Association in Digital Twin Empowered 6G Networks. IEEE Trans. Ind. Inform. 2021, 17, 5098–5107.

- Wang, W.; Tang, L.; Wang, C.; Chen, Q. Real-Time Analysis of Multiple Root Causes for Anomalies Assisted by Digital Twin in NFV Environment. IEEE Trans. Netw. Serv. Manag. 2022, 19, 905–921.

- Dai, Y.; Zhang, K.; Maharjan, S.; Zhang, Y. Deep Reinforcement Learning for Stochastic Computation Offloading in Digital Twin Networks. IEEE Trans. Ind. Inform. 2021, 17, 4968–4977.

- Yi, B.; Wang, X.; Li, K.; Das, S.K.; Huang, M. A comprehensive survey of Network Function Virtualization. Comput. Netw. 2018, 133, 212–262.

- Wu, Y.; Zhang, K.; Zhang, Y. Digital Twin Networks: A Survey. IEEE Internet Things J. 2021, 8, 13789–13804.

- Wang, H.; Wu, Y.; Min, G.; Xu, J.; Tang, P. Data-driven dynamic resource scheduling for network slicing: A Deep reinforcement learning approach. Inf. Sci. 2019, 498, 106–116.

- Wang, Q.; Alcaraz-Calero, J.; Ricart-Sanchez, R.; Weiss, M.B.; Gavras, A.; Nikaein, N.; Vasilakos, X.; Giacomo, B.; Pietro, G.; Roddy, M.; et al. Enable Advanced QoS-Aware Network Slicing in 5G Networks for Slice-Based Media Use Cases. IEEE Trans. Broadcast. 2019, 65, 444–453.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks; MIT Press: Cambridge, MA, USA, 2014; pp. 3104–3112.

- Geursen, I.L.; Santos, B.F.; Yorke-Smith, N. Fleet planning under demand and fuel price uncertainty using actor-critic reinforcement learning. J. Air Transp. Manag. 2023, 109, 102397.

- Grondman, I.; Busoniu, L.; Lopes, G.A.D.; Babuska, R. A Survey of Actor-Critic Reinforcement Learning: Standard and Natural Policy Gradients. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2012, 42, 1291–1307.

- Sutton, R.S.; Barto, A.G. Introduction to Reinforcement Learning; MIT Press: Cambridge, MA, USA, 1998.

- Solozabal, R.; Ceberio, J.; Sanchoyerto, A.; Zabala, L.; Blanco, B.; Liberal, F. Virtual Network Function Placement Optimization With Deep Reinforcement Learning. IEEE J. Sel. Areas Commun. 2020, 38, 292–303.

- Kumaraswamy, S.; Nair, M.K. Bin packing algorithms for virtual machine placement in cloud computing: A review. Int. J. Electr. Comput. Eng. (IJECE) 2019, 9, 512.

| Subject | Related Work | Scope | Problem | Objective | Algorithm or Method |
|---|---|---|---|---|---|
| VNF migration | Ref. [7] | NFV | NFV elasticity control | Reduce migration cost | heuristic |
| | Ref. [8] | NFV | VNF migration according to changing workload | Save energy | heuristic |
| | Ref. [9] | NFV | SFC placement in Mobile Edge Computing | Save energy | heuristic |
| | Ref. [10] | NFV | Time selection for Edge VNF placement | Reduce end-to-end latency | heuristic |
| | Ref. [11] | NFV | VNF placement in the edge network | Reduce end-to-end latency | heuristic |
| | Ref. [4] | NFV | VNF Migration in Data Center Networks | Resource optimization and delay reduction | heuristic |
| Traffic-aware VNF Migration | Ref. [12] | NFV | VNF migration in dynamic traffic | Dynamic network slicing | machine learning |
| | Ref. [13] | NFV | Request prediction in dynamic traffic | Resource reduction | CNN+LSTM+DRL |
| | Ref. [14] | NFV | VNF migration | Delay-optimized and resource-efficient | Ant Colony Optimization |
| | Ref. [15] | NFV | VNF migration in dynamic 5G networks | Time-average and cost-minimizing | Lyapunov optimization |
| | Ref. [16] | NFV | VNF migration in mobile edge network | Balance between the SFC latency and the migration cost | Markov approximation |
| | Ref. [17] | NFV | Resource allocation based on dynamic traffic load | Ensure performance isolation between VNFs | heuristic |
| Digital Twin Network | Ref. [18] | Network virtualization in 6G networks | Conceptual architecture for the 6G network | AI integration | Apply digital twin network |
| | Ref. [19] | Industrial Internet of Things | Instant wireless connectivity | Reliability and security | Apply digital twin network |
| | Ref. [20] | NFV | Root cause analysis | Availability and superiority | Digital twin network and hidden Markov model |
| | Ref. [21] | Industrial Internet of Things | Stochastic computation offloading and resource allocation | Long-term energy efficiency | Digital twin network and Lyapunov optimization |
| | Ref. [1] | NFV enabled Internet of Things | Network traffic prediction and VNF migration | Reduce the number of migrated VNFs and save energy | Digital twin network, DRL, and federated learning |

| VNF ID | CPU Cores Required | Bandwidth Required | Processing Latency |
|---|---|---|---|
| 1 | 1 | 10 | 1 |
| 2 | 2 | 10 | 1 |
| 3 | 2 | 10 | 1 |
| 4 | 2 | 20 | 2 |
| 5 | 2 | 20 | 2 |
| 6 | 2 | 20 | 2 |
| 7 | 1 | 20 | 2 |
| 8 | 1 | 20 | 2 |

| Equation ID | Constant Coefficient | Value |
|---|---|---|
| (10) | | 1500 |
| (12) | | 60 |
| (14) | | 60 |
| (16) | | 5 |
| (17) | | 300 |
| (17) | | 200 |
| (18) | | 10 |
| (20) | | 15,000 |
| (26) | B | 300 |
| (28) | | 0.9 |

| Hyperparameter | Value |
|---|---|
| Learning Rate of Actor Network | 0.1 |
| Learning Rate of Critic Network | 0.0001 |
| Number of Time Slots | 12 |
| Number of Layers in LSTM | 2 |
| Number of Hidden Dimensions in LSTM | 100 |
| Discard Rate | 0.2 |
| Training Times | 201 |

| Algorithm | Request Acceptance | Availability of Node | Availability of Link | Requirement Satisfied | Energy Consumption | Migration |
|---|---|---|---|---|---|---|
| DRL | no | yes | yes | yes | yes | no |
| AC_GCN | yes | yes | yes | yes | yes | yes |
| FF | no | no | no | yes | no | no |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, Y.; Min, G.; Li, J.; Li, Z.; Cai, Z.; Zhang, J. VNF Migration in Digital Twin Network for NFV Environment. Electronics 2023, 12, 4324. https://doi.org/10.3390/electronics12204324
