GBSG-YOLOv8n: A Model for Enhanced Personal Protective Equipment Detection in Industrial Environments

Abstract

1. Introduction

- To improve the performance of the PPE detection model, we built a new dataset, PPES, which consists of images captured by cameras in industrial settings and provides a data resource for further research.

- We embed the global attention mechanism (GAM) in the model's backbone network to strengthen the focus on PPE targets and suppress interference from non-target background information, which improves the backbone's feature extraction capability.

- To integrate feature information from different scales and prevent the loss of PPE feature details, we optimized the PANet structure in the neck network. The optimized structure supports efficient bidirectional cross-scale connections and weighted feature fusion, which further improves detection accuracy.

- We designed the SimC2f structure to improve the performance of the C2f module, so that image features are processed more efficiently and overall detection efficiency improves.

- To meet the requirements of real-time PPE detection and a lightweight model, we use GhostConv to optimize the convolution operations in the backbone network, which reduces the model's computation and parameter count while maintaining high detection accuracy.

2. Related Work

3. Method

3.1. YOLOv8n Model Analysis

3.2. Improved Model

3.2.1. Global Attention Mechanism

3.2.2. Bidirectional Feature Pyramid Network

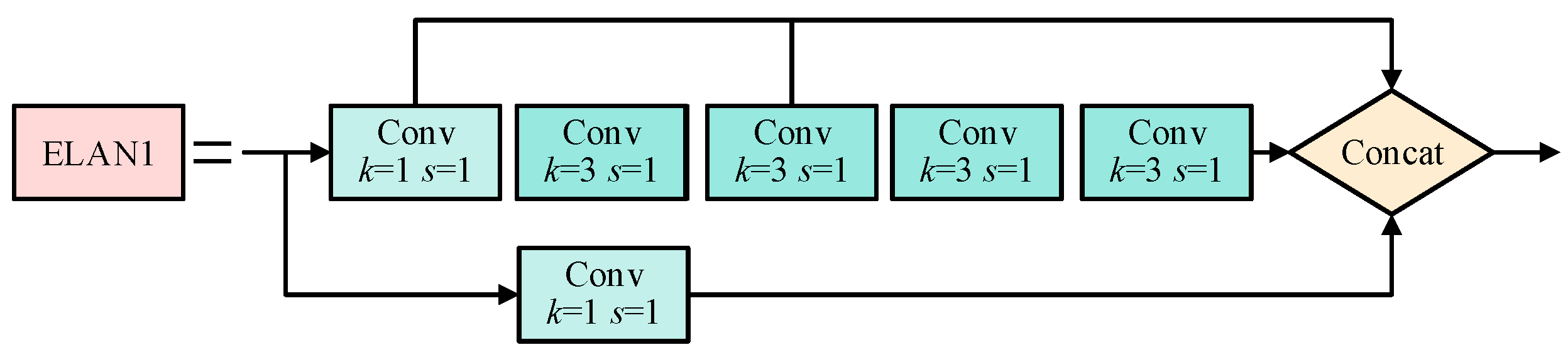

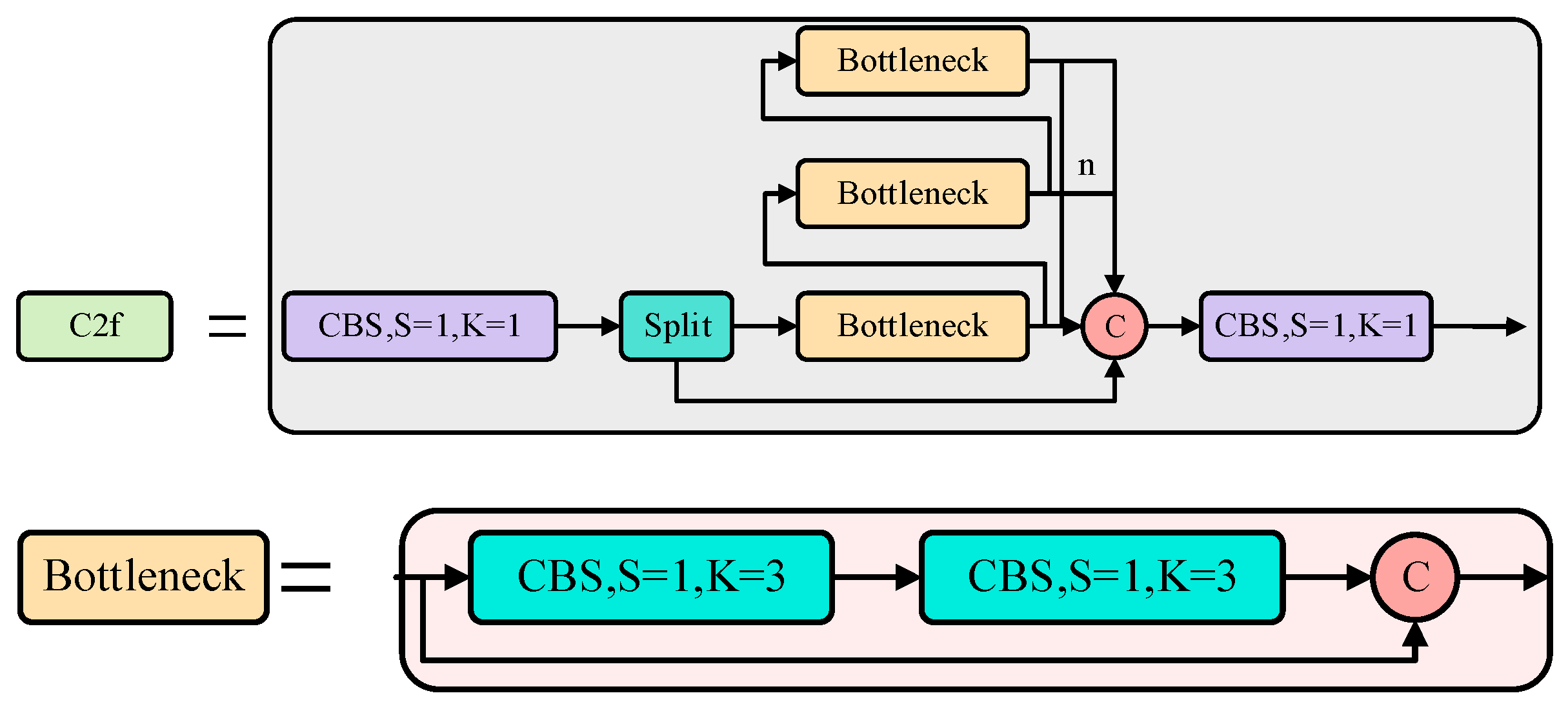

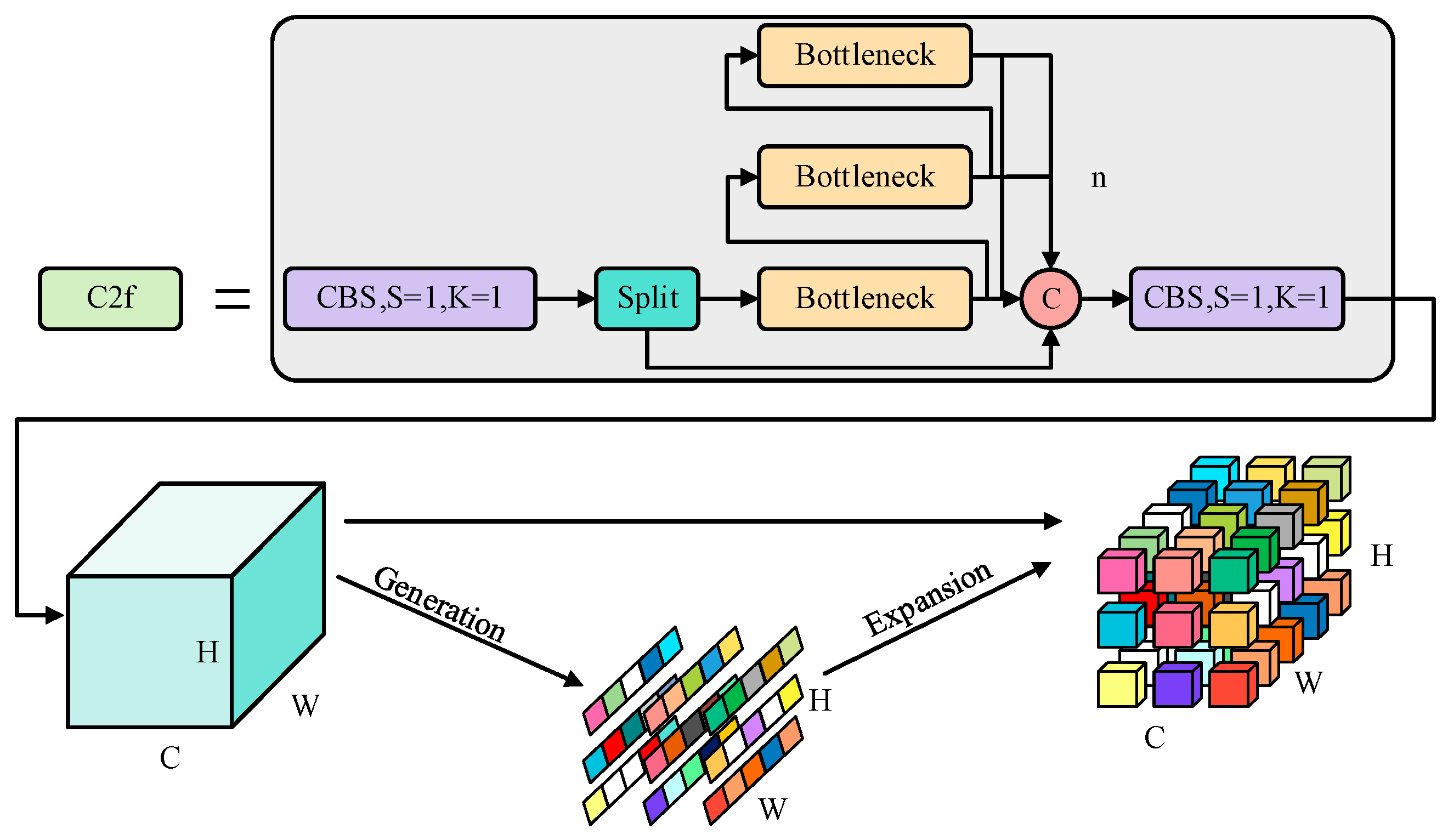
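
The weighted feature fusion used in bidirectional feature pyramid networks is commonly implemented as "fast normalized fusion": each input feature map receives a non-negative learnable weight, and the weighted sum is divided by the sum of the weights. The sketch below illustrates that rule on flat Python lists. It assumes this fusion variant is the one intended here; the function name `fast_normalized_fusion` and the `eps` default are illustrative choices, not taken from the article.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-sized feature vectors with non-negative normalized weights.

    features: list of equally long lists of floats (one per input branch).
    weights:  one raw (learnable) scalar per branch; negatives are clamped to 0.
    eps:      small constant that keeps the denominator away from zero.
    """
    if len(features) != len(weights):
        raise ValueError("need exactly one weight per feature vector")
    length = len(features[0])
    if any(len(f) != length for f in features):
        raise ValueError("all feature vectors must have the same length")

    # ReLU on the raw weights keeps every contribution non-negative.
    clamped = [max(0.0, w) for w in weights]
    denom = sum(clamped) + eps

    # Weighted sum per element, normalized by the total weight.
    return [
        sum(w * f[i] for w, f in zip(clamped, features)) / denom
        for i in range(length)
    ]


# Two branches with equal weight: the result is close to the element-wise mean.
fused = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0])
```

Compared with a softmax over the weights, this normalization avoids the exponential while still keeping the fused output on the same scale as the inputs.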

3.2.3. SimC2f Design
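
As a rough illustration only: the sketch below assumes that SimC2f builds on the parameter-free SimAM attention module (an inference from the name; the design itself is not spelled out in this text). It applies SimAM-style reweighting to a single channel, where each activation is scaled by a sigmoid of its inverse energy, so activations that deviate strongly from the channel mean receive a larger gate. The function name `simam_channel` and the default `e_lambda` are illustrative.

```python
import math


def simam_channel(channel, e_lambda=1e-4):
    """Apply SimAM-style attention to one channel given as a 2D list."""
    values = [v for row in channel for v in row]
    n = len(values) - 1  # number of "other" neurons in the channel
    if n < 1:
        raise ValueError("channel needs at least two elements")

    mean = sum(values) / len(values)
    sq_dev = [(v - mean) ** 2 for v in values]
    variance = sum(sq_dev) / n

    width = len(channel[0])
    out = []
    for v, d in zip(values, sq_dev):
        # Inverse energy: larger for activations far from the channel mean.
        inv_energy = d / (4.0 * (variance + e_lambda)) + 0.5
        gate = 1.0 / (1.0 + math.exp(-inv_energy))  # sigmoid
        out.append(v * gate)

    # Restore the original row layout.
    return [out[i:i + width] for i in range(0, len(out), width)]


# The single outlier (4.0) receives a larger gate than the other positions.
weighted = simam_channel([[0.0, 0.0], [0.0, 4.0]])
```

Because the gate is computed from channel statistics alone, this kind of attention adds no learnable parameters to the block that hosts it.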

3.2.4. GhostConv
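
To see why a Ghost-style convolution is cheaper, the sketch below compares weight counts for a standard convolution and a Ghost-style layer. It assumes one common formulation: half of the output channels come from an ordinary k×k convolution, and the other half are generated from those by a 5×5 depthwise convolution. Biases and normalization parameters are ignored, and the function names and the 5×5 kernel size are assumptions for illustration rather than details confirmed by this text.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (no bias)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in, c_out, k, cheap_k=5):
    """Weights in a Ghost-style convolution (no bias).

    Assumes c_out is even: half the channels come from a k x k convolution,
    the other half from a depthwise cheap_k x cheap_k convolution on them.
    """
    if c_out % 2 != 0:
        raise ValueError("c_out must be even for this sketch")
    hidden = c_out // 2
    primary = c_in * hidden * k * k      # ordinary convolution
    cheap = hidden * cheap_k * cheap_k   # depthwise: one filter per channel
    return primary + cheap


std = standard_conv_params(64, 128, 3)   # 73728 weights
ghost = ghost_conv_params(64, 128, 3)    # 38464 weights
ratio = ghost / std                      # about 0.52
```

Under these assumptions the Ghost-style layer needs roughly half the weights of the standard convolution, since the depthwise part is small compared with the primary convolution.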

4. Experiments and Results

4.1. Experimental Datasets

4.2. Experimental Environments

4.3. Evaluation Metrics

4.4. Experimental Results and Analysis

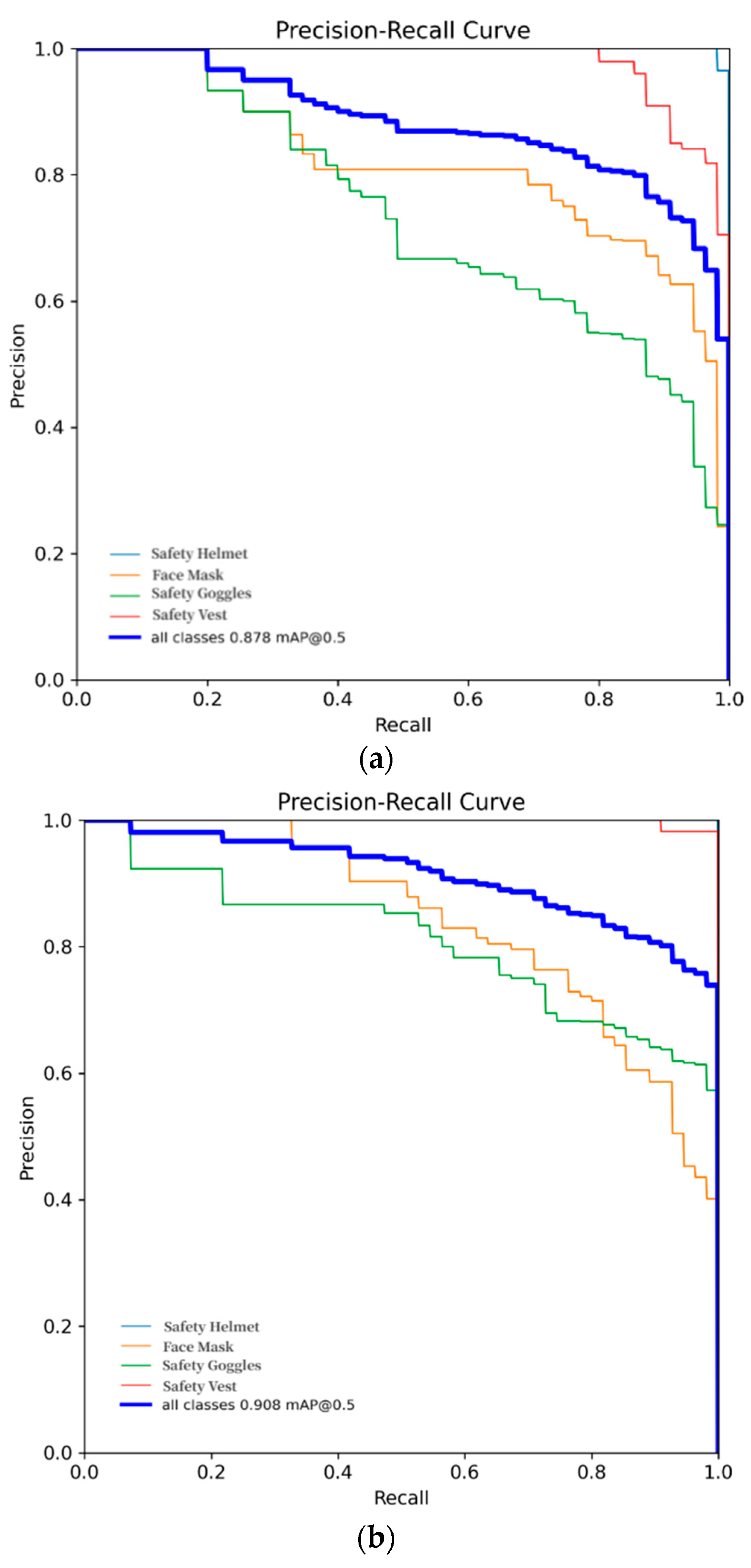

4.4.1. Performance Analysis of the GBSG-YOLOv8n Model

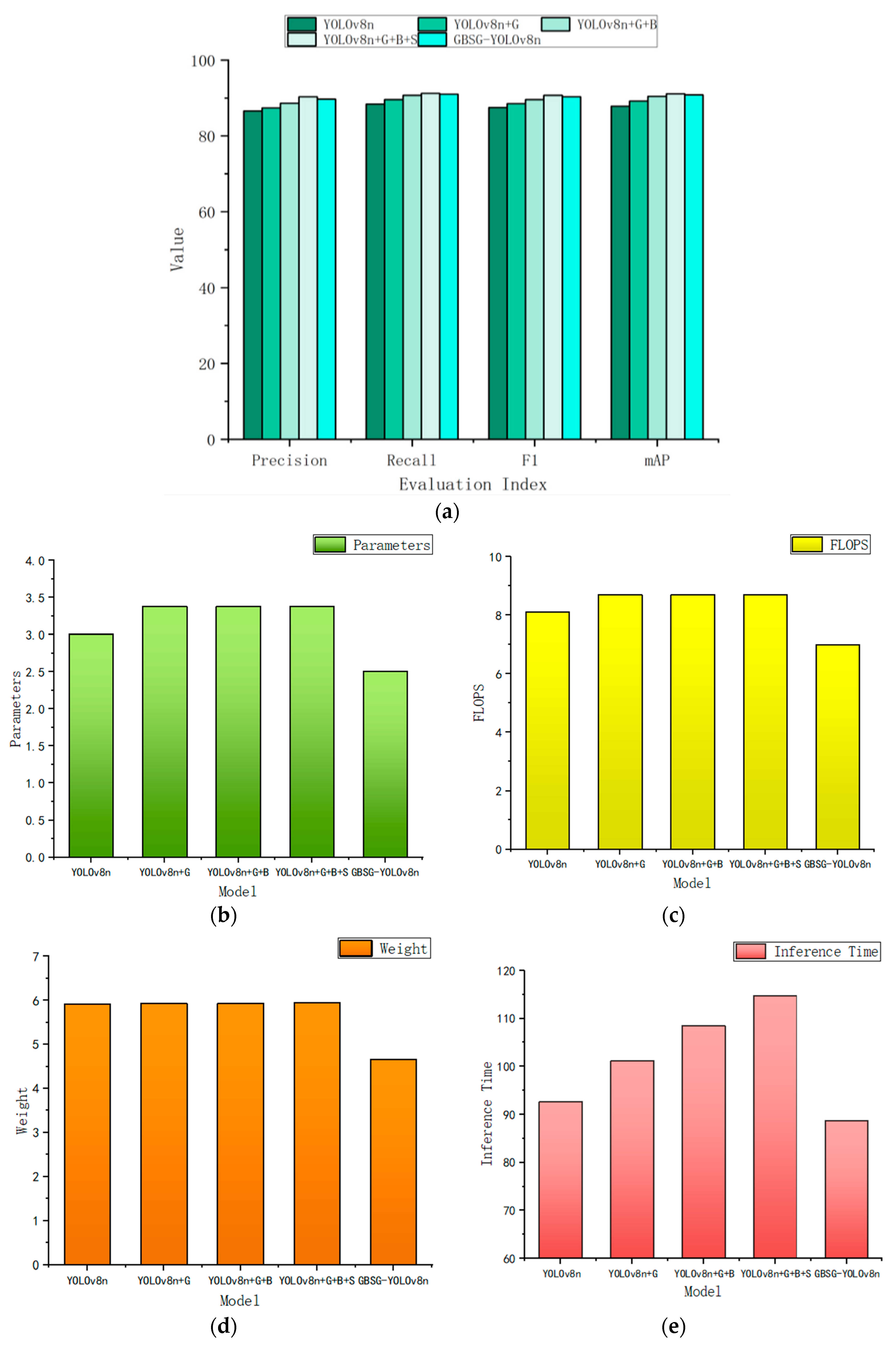

4.4.2. Ablation Experiment

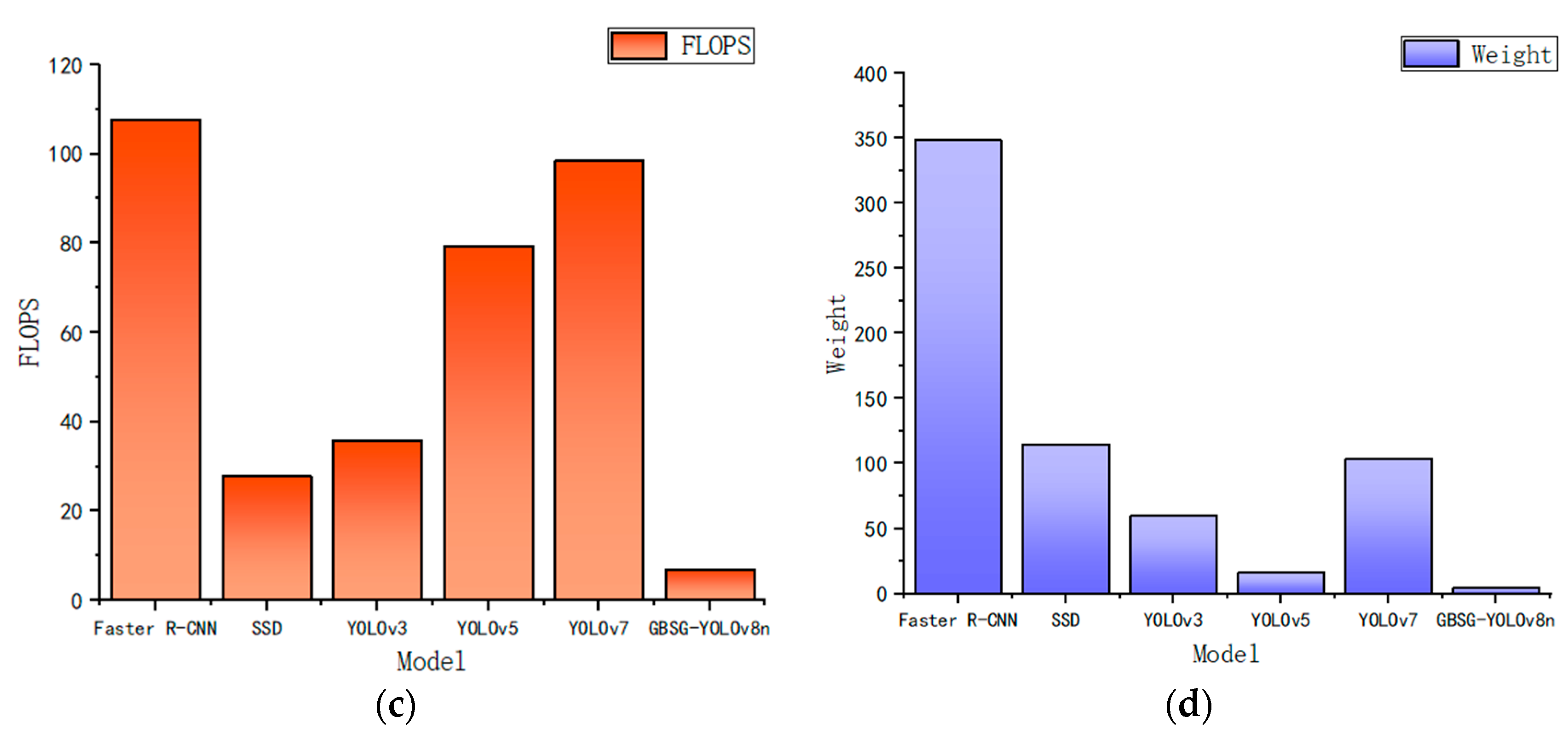

4.4.3. Experiments Comparing GBSG-YOLOv8n to Other Models

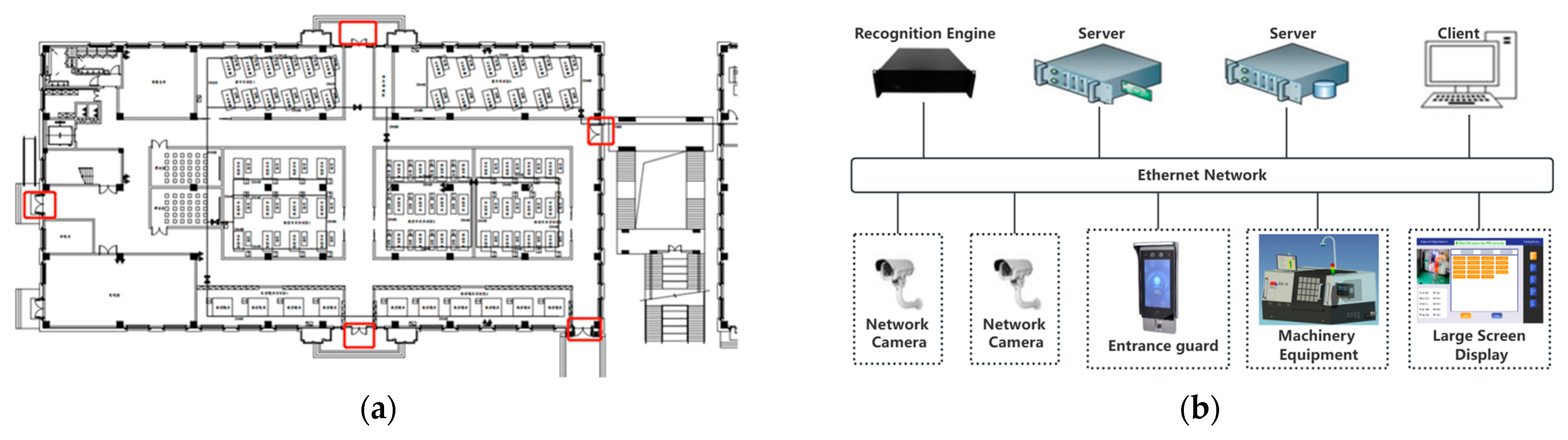

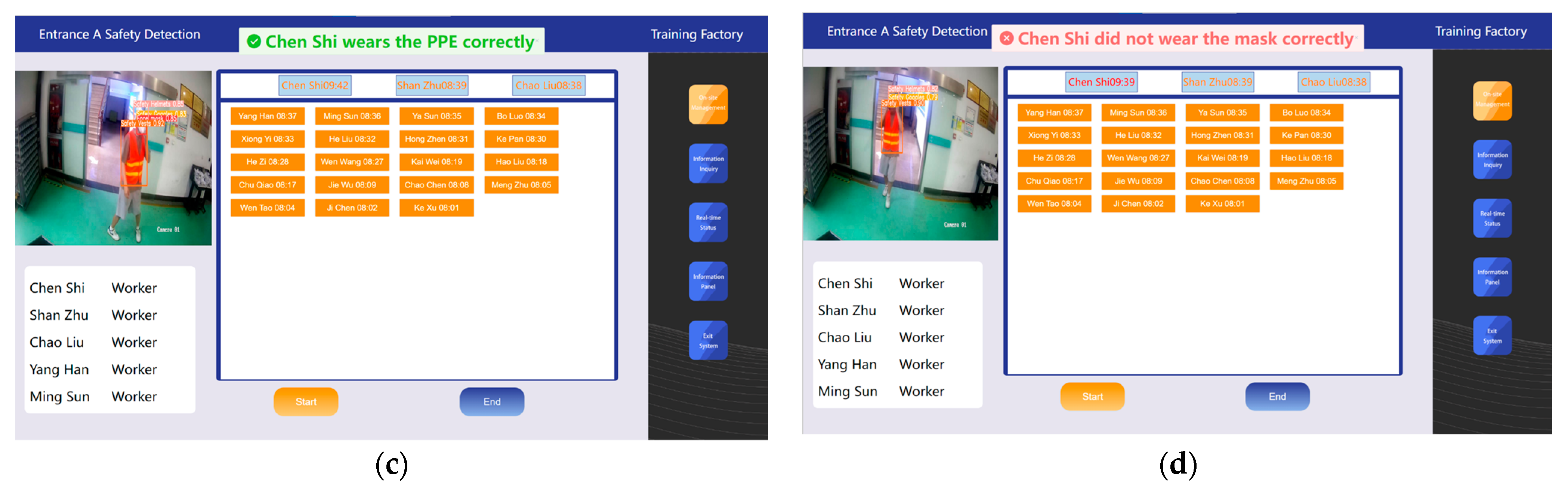

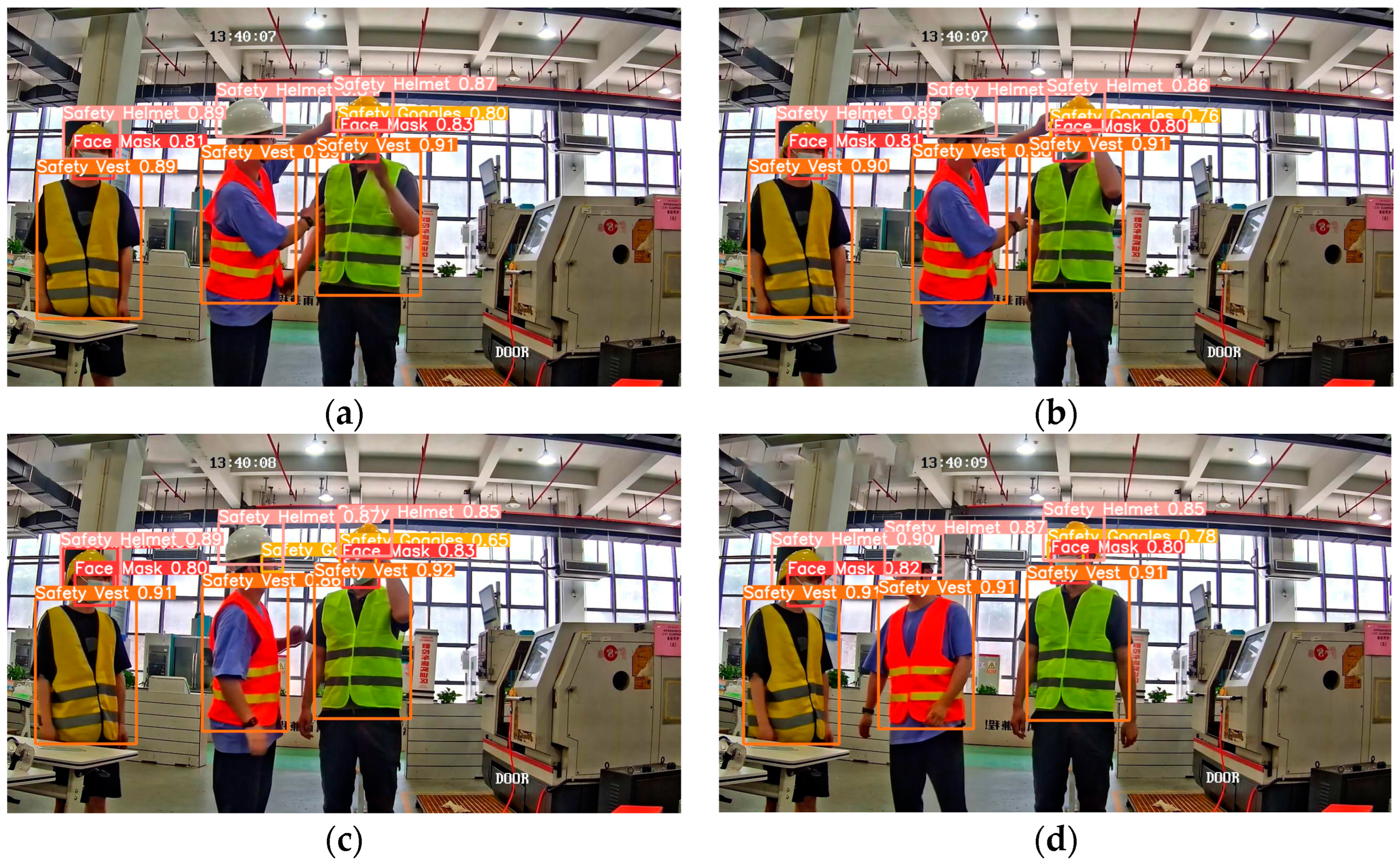

4.4.4. Practical Applications of GBSG-YOLOv8n in Industrial Environments

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Algorithm Description | Pros | Cons |
|---|---|---|---|
| Faster R-CNN | Faster R-CNN is an enhanced iteration within the R-CNN series that improves processing speed and precision. It extracts features with a convolutional neural network, generates region proposals with the region proposal network (RPN), and then performs region classification and bounding box regression. | Faster R-CNN uses convolutional neural networks to extract features, capturing a wide range of visual attributes of PPE objects, such as their size, shape, and appearance. Its architecture, which relies on RPN and classification networks, enhances its accuracy in recognizing PPE categories. | Faster R-CNN is slower in real-time PPE detection in complex industrial environments, primarily due to its high computational demands. It also shows reduced accuracy for small-sized PPE, potentially resulting in missed detections. |
| SSD | Compared to two-stage methods such as Faster R-CNN, SSD is faster and well-suited for real-time applications. It achieves this speed by incorporating multi-scale feature maps and advanced convolutional structures. | SSD performs well in real-time PPE detection by leveraging multi-scale feature maps to handle complex scenarios and detect PPE objects of various sizes. | In the detection of small targets, SSD exhibits relatively lower accuracy, is susceptible to interference from complex backgrounds, and may miss detections. |
| YOLOv3 | YOLOv3 uses detection heads at three scales and employs Darknet-53 as the backbone network, improving feature extraction capabilities. | YOLOv3 supports multi-scale PPE detection and is suitable for real-time detection of different PPE categories, which makes it a good fit for industrial environments. | Compared to newer YOLO versions, YOLOv3 may differ in detection accuracy and computational resource requirements. It shows reduced accuracy in complex environments, possibly necessitating additional training data. |
| YOLOv4 | YOLOv4 introduces enhancements such as CIoU loss, SAM, and PANet, improving detection accuracy. It also adopts the more powerful CSPDarknet53 backbone and additional data augmentation techniques, enhancing the model's robustness. | YOLOv4 introduces improved loss functions and network structures, leading to enhanced accuracy in PPE detection. Model optimization and a lightweight design further improve the speed and robustness of PPE detection, making it suitable for industrial environments with complex backgrounds. | Compared to more recent YOLO versions, the YOLOv4 model is more complex, requiring more computational resources and longer training and deployment times. |
| YOLOv5 | YOLOv5 introduces adaptive feature selection and model pruning, reducing model complexity. Through a lightweight design and model optimization, it also accelerates inference and enhances detection accuracy. | YOLOv5 balances speed and accuracy in PPE detection by using streamlined model pruning techniques that reduce model complexity. It is particularly suited to application scenarios that demand real-time PPE detection. | In specific scenarios, YOLOv5 exhibits slightly diminished accuracy, particularly in small object detection, where further improvement is needed. Compared to low-complexity models, it still demands more computational resources. |
| YOLOv7 | YOLOv7 inherits the high performance of the YOLO series while prioritizing a balance between performance and speed. It introduces enhancements to model design, including the backbone network and loss functions. With a more advanced backbone network, YOLOv7 improves feature extraction capabilities, and optimizations in network structure and model design balance detection speed and accuracy. | YOLOv7 performs well in PPE object detection, maintaining high accuracy while improving detection speed, making it suitable for real-time applications. Its more advanced backbone network and model design further enhance the efficiency and accuracy of PPE detection. | YOLOv7 demands more computational resources and is unsuitable for resource-constrained situations. Although it performs well in speed and accuracy, there is still room for improvement in PPE detection, which requires both high precision and real-time performance, and more training data are needed to improve performance further. |

| Model | Precision (%) | Recall (%) | F1 (%) | mAP (%) |
|---|---|---|---|---|
| YOLOv8n | 86.6 | 88.4 | 87.5 | 87.8 |
| GBSG-YOLOv8n | 89.7 | 91.0 | 90.3 | 90.8 |
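
The F1 column can be cross-checked against the precision and recall columns, since F1 is the harmonic mean of the two. The short sketch below recomputes it for both rows; the helper name `f1_score` is illustrative, and the inputs are the percentages reported in the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same unit as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) as reported for the two models.
reported = {
    "YOLOv8n": (86.6, 88.4),
    "GBSG-YOLOv8n": (89.7, 91.0),
}

for model, (p, r) in reported.items():
    print(f"{model}: F1 = {f1_score(p, r):.1f}%")
# YOLOv8n: F1 = 87.5%
# GBSG-YOLOv8n: F1 = 90.3%
```

Both recomputed values agree with the reported F1 scores to one decimal place.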

| Model | Parameters (M) | FLOPs (G) | Weight (MB) | Inference Time (ms) |
|---|---|---|---|---|
| YOLOv8n | 3.01 | 8.1 | 5.92 | 92.7 |
| GBSG-YOLOv8n | 2.51 | 7.0 | 4.66 | 88.7 |
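
To put the table in relative terms, the sketch below computes the percentage reduction of each cost metric with respect to the YOLOv8n baseline. The helper name `relative_reduction` and the dictionary keys are illustrative; the numbers are the ones reported in the table.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline * 100


# (YOLOv8n, GBSG-YOLOv8n) values from the table.
costs = {
    "parameters_M": (3.01, 2.51),
    "flops_G": (8.1, 7.0),
    "weight_MB": (5.92, 4.66),
    "inference_ms": (92.7, 88.7),
}

reductions = {
    name: round(relative_reduction(base, new), 1)
    for name, (base, new) in costs.items()
}
print(reductions)
# {'parameters_M': 16.6, 'flops_G': 13.6, 'weight_MB': 21.3, 'inference_ms': 4.3}
```

By this calculation the improved model has about 16.6% fewer parameters, 13.6% fewer FLOPs, a 21.3% smaller weight file, and a 4.3% shorter inference time than the baseline.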

| Model | Precision (%) | Recall (%) | F1 (%) | mAP (%) | Parameters (M) | FLOPs (G) | Weight (MB) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|
| YOLOv8n | 86.6 | 88.4 | 87.5 | 87.8 | 3.01 | 8.1 | 5.92 | 92.7 |
| YOLOv8n+G | 87.4 | 89.6 | 88.5 | 89.2 | 3.38 | 8.7 | 5.93 | 101.2 |
| YOLOv8n+G+B | 88.6 | 90.7 | 89.6 | 90.4 | 3.38 | 8.7 | 5.93 | 108.5 |
| YOLOv8n+G+B+S | 90.3 | 91.2 | 90.7 | 91.1 | 3.38 | 8.7 | 5.94 | 114.7 |
| GBSG-YOLOv8n | 89.7 | 91.0 | 90.3 | 90.8 | 2.51 | 7.0 | 4.66 | 88.7 |
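
One way to read the ablation table is to look at the change that each added component brings relative to the previous row. The sketch below computes these step-wise differences for mAP and inference time. It assumes the rows are cumulative in the order listed, with the final row adding GhostConv to the G+B+S configuration; the names `ABLATION` and `stepwise_changes` are illustrative.

```python
# (configuration, mAP in %, inference time in ms), in table order.
ABLATION = [
    ("YOLOv8n", 87.8, 92.7),
    ("YOLOv8n+G", 89.2, 101.2),
    ("YOLOv8n+G+B", 90.4, 108.5),
    ("YOLOv8n+G+B+S", 91.1, 114.7),
    ("GBSG-YOLOv8n", 90.8, 88.7),
]


def stepwise_changes(rows):
    """Return (configuration, delta mAP, delta inference time) per added step."""
    changes = []
    for (_, prev_map, prev_ms), (name, cur_map, cur_ms) in zip(rows, rows[1:]):
        changes.append(
            (name, round(cur_map - prev_map, 1), round(cur_ms - prev_ms, 1))
        )
    return changes


for name, d_map, d_ms in stepwise_changes(ABLATION):
    print(f"{name}: mAP {d_map:+.1f} points, inference {d_ms:+.1f} ms")
# YOLOv8n+G: mAP +1.4 points, inference +8.5 ms
# YOLOv8n+G+B: mAP +1.2 points, inference +7.3 ms
# YOLOv8n+G+B+S: mAP +0.7 points, inference +6.2 ms
# GBSG-YOLOv8n: mAP -0.3 points, inference -26.0 ms
```

Read this way, the first three components each raise mAP at the cost of longer inference, while the final step gives up 0.3 mAP points and shortens inference by 26.0 ms.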

| Model | Precision (%) | Recall (%) | F1 (%) | mAP (%) | Parameters (M) | FLOPs (G) | Weight (MB) |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | 80.4 | 84.7 | 82.5 | 82.7 | 137.38 | 107.54 | 348.65 |
| SSD | 81.6 | 85.3 | 83.4 | 83.4 | 26.23 | 27.83 | 114.75 |
| YOLOv3 | 83.9 | 86.7 | 85.3 | 84.9 | 60.53 | 35.78 | 59.62 |
| YOLOv5 | 86.2 | 88.1 | 87.1 | 86.4 | 7.12 | 79.42 | 15.91 |
| YOLOv7 | 87.1 | 89.7 | 88.4 | 87.3 | 36.52 | 98.34 | 103.24 |
| GBSG-YOLOv8n | 89.7 | 91.0 | 90.3 | 90.8 | 2.51 | 7.0 | 4.66 |

| Model | Precision (%) | Recall (%) | F1 (%) | mAP (%) |
|---|---|---|---|---|
| RetinaNet | 85.6 | 86.4 | 86.0 | 85.1 |
| CornerNet | 87.5 | 88.1 | 87.8 | 86.4 |
| DETR | 88.7 | 89.3 | 89.0 | 88.6 |
| DINO | 89.3 | 90.4 | 89.8 | 89.3 |
| GBSG-YOLOv8n | 89.7 | 91.0 | 90.3 | 90.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, C.; Zhu, D.; Shen, J.; Zheng, Y.; Zhou, C. GBSG-YOLOv8n: A Model for Enhanced Personal Protective Equipment Detection in Industrial Environments. Electronics 2023, 12, 4628. https://doi.org/10.3390/electronics12224628
