Intelligent Scheduling Based on Reinforcement Learning Approaches: Applying Advanced Q-Learning and State–Action–Reward–State–Action Reinforcement Learning Models for the Optimisation of Job Shop Scheduling Problems

Abstract

1. Introduction

2. Literature Review

2.1. Job Shop Scheduling Problems

2.2. Scheduling Using Learning-Based Methods and Reinforcement Learning (RL)

3. Problem Description

3.1. Single Machine Benchmarks with Tardiness and Earliness

3.2. Flexible Benchmarks, Including Tardiness and Earliness Penalties

- : The index number of jobs.

- : The index number of operations.

- : Number of jobs in the benchmark.

- : Number of machines in the benchmark.

- : Execution time for operation of job on machine .

- (1) One machine at a time is used to complete each task.

- (2) Each machine performs one task; it cannot perform several tasks at once.

- (3) For each machine, the setup time between operations is zero if two subsequent operations are from the same job.
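As an illustration of how a schedule could be evaluated under the assumptions above, the following minimal Python sketch computes a weighted earliness/tardiness penalty for the operation sequence of one machine, waiving the setup time between consecutive operations of the same job. The `Op` record, the due dates, the penalty weights and the single `setup` value are hypothetical and are not taken from the benchmarks in this paper; the sketch also assumes no setup before the first operation.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Op:
    job: int         # index of the job this operation belongs to
    duration: float  # execution time on this machine
    due: float       # hypothetical due date for this operation


def evaluate_sequence(ops: List[Op], setup: float,
                      w_early: float = 1.0,
                      w_tardy: float = 1.0) -> Tuple[float, float]:
    """Return (finish time of the last operation, total penalty)."""
    t = 0.0
    penalty = 0.0
    prev_job: Optional[int] = None
    for op in ops:
        # Assumption (3): setup is charged only when the job changes.
        if prev_job is not None and op.job != prev_job:
            t += setup
        # Assumption (2): operations run strictly one after another.
        t += op.duration
        earliness = max(0.0, op.due - t)
        tardiness = max(0.0, t - op.due)
        penalty += w_early * earliness + w_tardy * tardiness
        prev_job = op.job
    return t, penalty


ops = [Op(job=0, duration=3, due=5),
       Op(job=0, duration=2, due=5),
       Op(job=1, duration=4, due=8)]
finish, penalty = evaluate_sequence(ops, setup=1)
print(finish, penalty)
```

In this toy sequence, the first operation finishes two time units early, the second finishes exactly on time, and the third finishes two time units late after one unit of setup.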

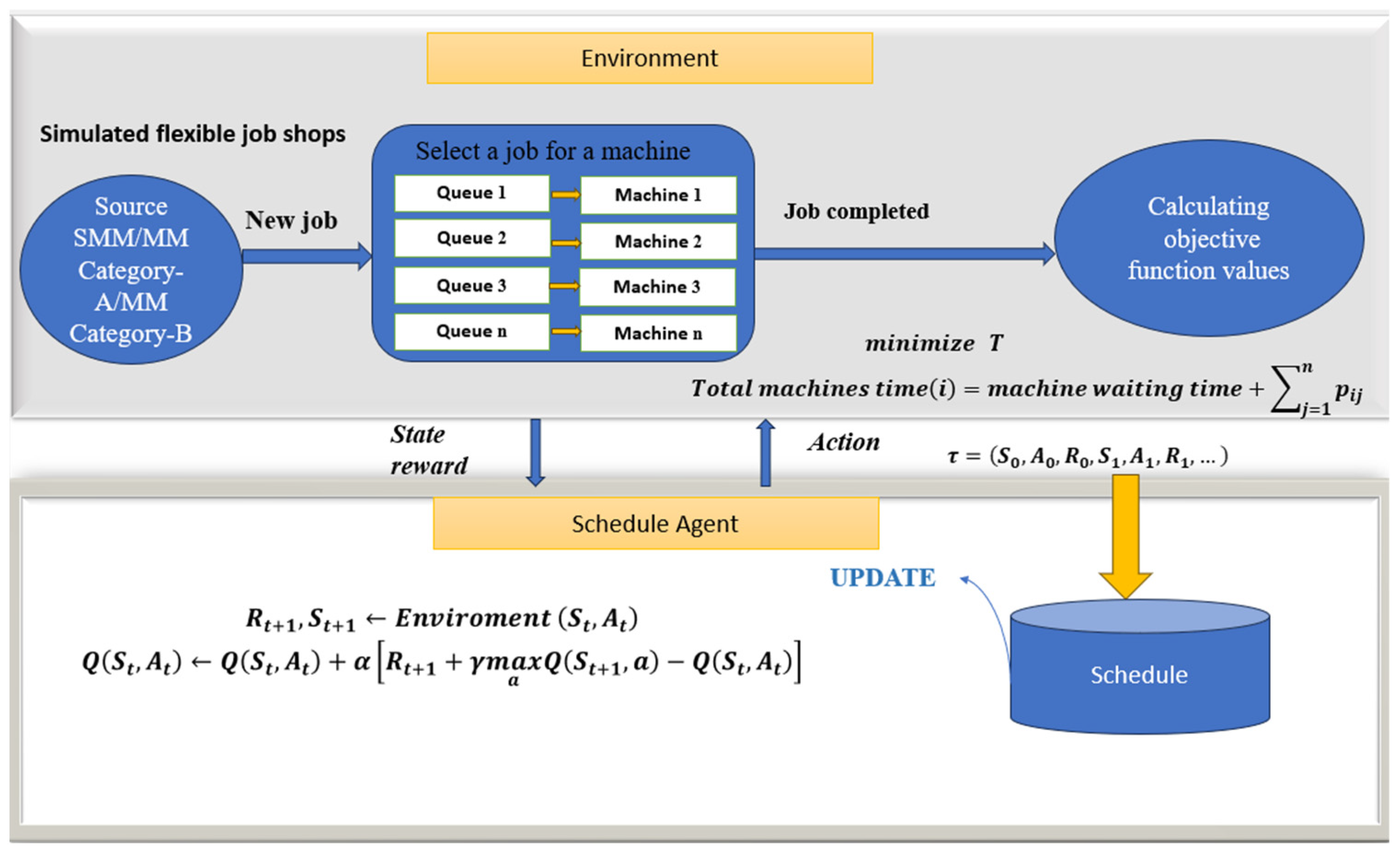

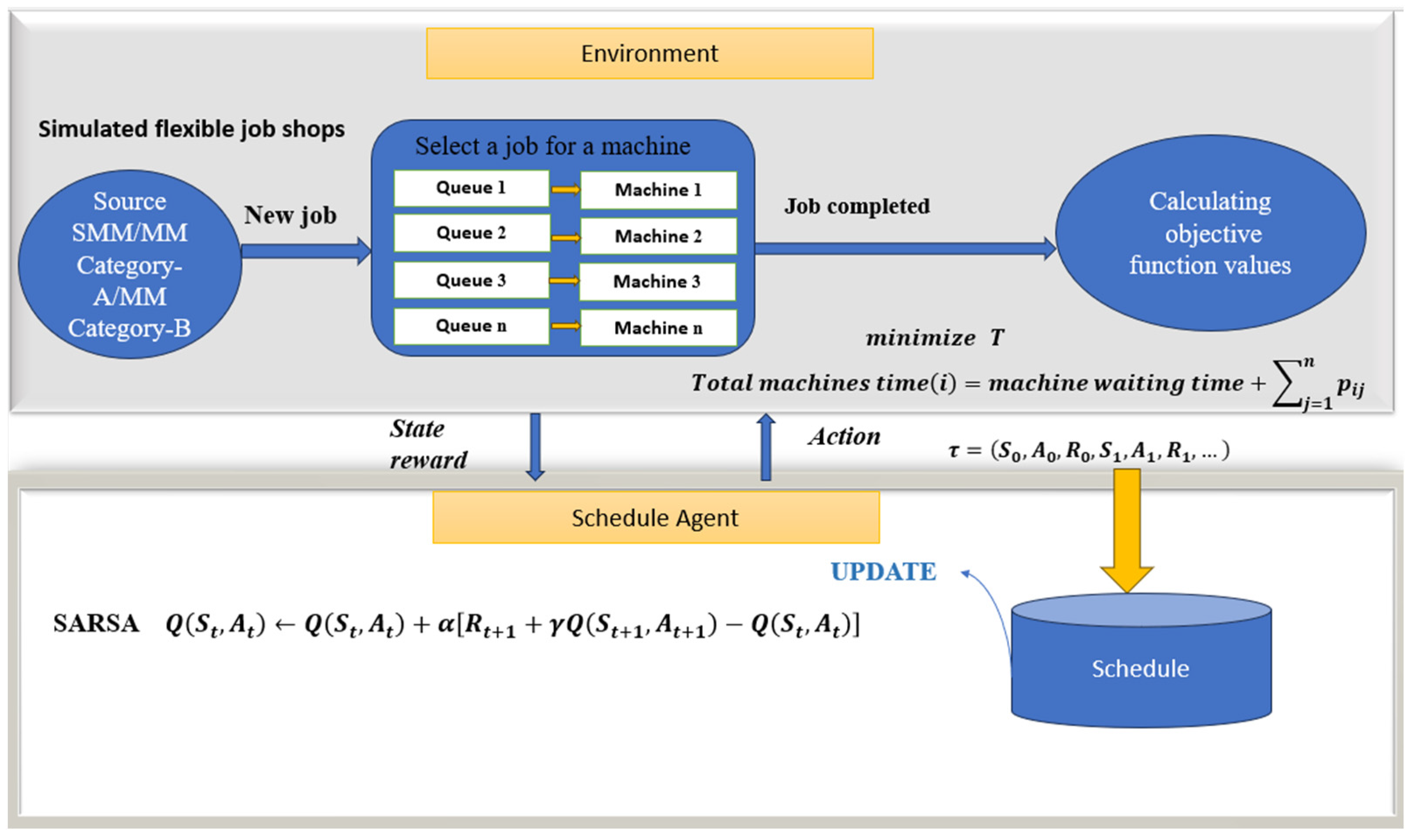

4. Reinforcement Learning Framework

5. Research Methodology

5.1. Simulated Job Shops

5.2. Application of QRL

| Algorithm 1. QRL algorithm |

| in the current episode, do |

| End for |

| End for |
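The episode and step loops of a tabular Q-learning scheduler can be sketched as follows. This is a minimal illustration of standard Q-learning on a toy single-machine sequencing problem, not the authors' QRL implementation: the state encoding (a bitmask of already scheduled jobs), the negated-tardiness reward, the function name `q_learning_sequence` and all hyperparameter values are assumptions made for the example.

```python
import random
from collections import defaultdict


def q_learning_sequence(durations, due, episodes=2000, alpha=0.1,
                        gamma=1.0, epsilon=0.2, seed=0):
    """Learn a job order for one machine with tabular Q-learning."""
    rng = random.Random(seed)
    n = len(durations)
    Q = defaultdict(float)  # Q[(state, action)]; state = bitmask of scheduled jobs

    def actions(state):
        return [j for j in range(n) if not state & (1 << j)]

    def elapsed(state):
        return sum(durations[j] for j in range(n) if state & (1 << j))

    for _ in range(episodes):              # for each episode
        state = 0
        while actions(state):              # for each step in the episode
            acts = actions(state)
            if rng.random() < epsilon:     # epsilon-greedy exploration
                a = rng.choice(acts)
            else:
                a = max(acts, key=lambda j: Q[(state, j)])
            finish = elapsed(state) + durations[a]
            reward = -max(0, finish - due[a])      # negated tardiness
            nxt = state | (1 << a)
            best_next = max((Q[(nxt, j)] for j in actions(nxt)), default=0.0)
            # Off-policy update: bootstrap from the best next action.
            Q[(state, a)] += alpha * (reward + gamma * best_next - Q[(state, a)])
            state = nxt

    # Read out the learned schedule greedily.
    state, order = 0, []
    while actions(state):
        a = max(actions(state), key=lambda j: Q[(state, j)])
        order.append(a)
        state |= 1 << a
    return order


def total_tardiness(order, durations, due):
    t, total = 0, 0
    for j in order:
        t += durations[j]
        total += max(0, t - due[j])
    return total


durations, due = [4, 2, 3], [9, 2, 5]
order = q_learning_sequence(durations, due)
print(order, total_tardiness(order, durations, due))
```

For this three-job instance, enumeration of the six possible orders shows that only the sequence job 1, job 2, job 0 has zero total tardiness, so it serves as a small sanity check for the learned policy.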

- State, Action and Reward

5.3. Application of SARSA Model

- Agent: In this model, an agent interacts with the environment, gains knowledge and makes choices. When a job becomes available, it is selected from the local waiting queue of the machine (sometimes referred to as the job-candidate set) and processed. When its current operation is completed, each job chooses a machine to handle its subsequent operation. At this point, the job becomes a job candidate for that machine and is assigned an agent for task routing; this keeps the vast action space of flexible job shop scheduling problems (FJSPs) manageable. Thus, the agent decides which action is appropriate given the current state of the environment.

- States: The state represents the environment through various machine- and job-related aspects. These include the productivity of the machines and how they interact with one another, the number of machines active and available for use in each operation, and the workloads of the jobs completed or in progress at the different operations. Given this state, the agent aims to maximise the return (the sum of discounted rewards), which corresponds to minimising the makespan, i.e., the number of time steps required to complete all jobs.

- Reward: The reward is derived from the makespan, which ultimately serves as the objective function. As the agent moves from job to job, it calculates the time incurred and adds it to the reward, thereby accumulating the objective function and the execution time for the entire benchmark (job shop). The sign of the reward is reversed so that the minimum time is obtained: the agent increases the reward while the total time is minimised.
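The sign reversal described for the reward can be made concrete with a short sketch. It assumes, purely for illustration, that each decision is a `(job, machine, duration)` triple dispatched in order, that an operation starts as soon as both its job and its machine are free, and that the step reward is the negated growth of the makespan; the function name `rollout_rewards` is hypothetical.

```python
from typing import Dict, List, Tuple


def rollout_rewards(decisions: List[Tuple[int, int, float]]
                    ) -> Tuple[List[float], float]:
    """Return the per-step rewards and the final makespan."""
    machine_free: Dict[int, float] = {}  # time at which each machine is free
    job_free: Dict[int, float] = {}      # time at which each job is free
    makespan = 0.0
    rewards: List[float] = []
    for job, machine, duration in decisions:
        # An operation waits for both its machine and its job's previous step.
        start = max(machine_free.get(machine, 0.0), job_free.get(job, 0.0))
        finish = start + duration
        machine_free[machine] = finish
        job_free[job] = finish
        new_makespan = max(makespan, finish)
        # Reversed sign: any growth of the makespan is a negative reward.
        rewards.append(makespan - new_makespan)
        makespan = new_makespan
    return rewards, makespan


decisions = [(0, 0, 3.0), (1, 1, 2.0), (0, 1, 4.0), (1, 0, 1.0)]
rewards, makespan = rollout_rewards(decisions)
print(rewards, makespan)
```

Because the step rewards sum to the negated makespan, maximising the undiscounted return in this sketch is the same as minimising the makespan.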

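| Algorithm 2. Standard SARSA |

| Initialise $Q(s, a)$ for all state-action pairs. For each episode, do |

| Initialise the state $s$ and choose an action $a$ from $s$ using a policy derived from $Q$ (e.g., $\varepsilon$-greedy) |

| For each step in the current episode, do |

| Take action $a$; observe the reward $r$ and the next state $s'$ |

| Choose the next action $a'$ from $s'$ using the policy derived from $Q$ |

| $Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma Q(s', a') - Q(s, a) \right]$ |

| $s \leftarrow s'$; $a \leftarrow a'$ |

| End for |

| End for |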
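A minimal sketch of the standard SARSA loop, applied to a toy machine-routing task, is given below. It is not the authors' implementation: the state encoding (index of the next job plus the current machine loads), the reward (negated growth of the makespan), the function name `sarsa_routing` and the hyperparameter values are all assumptions chosen to keep the example small.

```python
import random
from collections import defaultdict


def sarsa_routing(proc, episodes=3000, alpha=0.1, gamma=1.0,
                  epsilon=0.1, seed=0):
    """proc[j][m] is the processing time of job j on machine m."""
    rng = random.Random(seed)
    n_jobs, n_machines = len(proc), len(proc[0])
    Q = defaultdict(float)  # Q[(state, machine)]

    def choose(state, greedy=False):
        if not greedy and rng.random() < epsilon:
            return rng.randrange(n_machines)
        return max(range(n_machines), key=lambda m: Q[(state, m)])

    def step(state, m):
        j, loads = state
        new_loads = list(loads)
        new_loads[m] += proc[j][m]
        reward = max(loads) - max(new_loads)  # negated makespan growth
        return (j + 1, tuple(new_loads)), reward

    start = (0, (0,) * n_machines)
    for _ in range(episodes):                 # for each episode
        state = start
        action = choose(state)
        while state[0] < n_jobs:              # for each step in the episode
            nxt, reward = step(state, action)
            terminal = nxt[0] == n_jobs
            nxt_action = None if terminal else choose(nxt)
            # On-policy update: bootstrap from the action actually chosen next.
            bootstrap = 0.0 if terminal else gamma * Q[(nxt, nxt_action)]
            Q[(state, action)] += alpha * (reward + bootstrap - Q[(state, action)])
            state, action = nxt, nxt_action

    # Read out the learned routing greedily.
    state, routing = start, []
    while state[0] < n_jobs:
        m = choose(state, greedy=True)
        routing.append(m)
        state, _ = step(state, m)
    return routing, max(state[1])


proc = [[3, 5], [4, 2], [6, 3]]
routing, makespan = sarsa_routing(proc)
print(routing, makespan)
```

The only difference from a Q-learning update is the bootstrap term: SARSA uses the value of the next action selected by the same epsilon-greedy policy rather than the maximum over all next actions.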

6. Results and Experimental Discussion

7. Case Study: Reheating Furnace Model

- : Time [min];

- : Dead time [min];

- : Total fuel flow rate in the heating furnace ;

- : The out-strip temperature ;

- : Non-negative integer;

- : Out-strip temperature ;

- : Strip temperature at the inlet of the furnace (constant) ;

- : Furnace temperature ;

- : Strip width and thickness ;

- : Line speed ;

- : Average line speed during is the heating time of the strip) [35].

8. Conclusions and Future Work

- Q-learning, a fundamental reinforcement learning (RL) model, outperforms the advanced genetic algorithm (GA), NMHPGA, because GAs are more complex and time-consuming and require additional program functions.

- Most of the QRL results show strong performance, except for a few of the larger job shops. The proposed SARSA model was used to improve on these results.

- Despite its poor performance in scenarios involving large-scale job shops, SARSA is still a highly recommended model because it is simpler than GAs; it requires less execution time and involves fewer functions, which makes it a preferred option for optimising job shop scheduling problems (JSSPs).

- Additionally, the proposed SARSA model outperformed NMHPGA and QRL when the RL models were applied to optimise the furnace model. It can be concluded that SARSA is sufficiently fast and accurate to optimise industrial production lines with moderate-sized schedules.

- Although the limitations and difficulties of the outlined models preclude the complete application of RL in complex real production systems, ongoing research efforts could address these issues and improve the use of RL in industrial applications.

- Future work on deep reinforcement learning (DRL)-based models and on additional applicable benchmarks would be beneficial; this expansion can be achieved by integrating advanced optimisation techniques.

- Furthermore, as GA methods are predominantly characterised by their complexity and slow performance, integrating various RL models with advanced GA models may lead to significant performance improvement.

- Finally, RL approaches can address more complex problems, extending their applicability to encompass not only multi-objective JSSPs but also the more demanding FJSPs.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Jamwal, A.; Agrawal, R.; Sharma, M.; Kumar, A.; Kumar, V.; Garza-Reyes, J.A.A. Machine Learning Applications for Sustainable Manufacturing: A Bibliometric-Based Review for Future Research. J. Enterp. Inf. Manag. 2022, 35, 566–596.

- Machado, C.G.; Winroth, M.P.; Ribeiro da Silva, E.H.D. Sustainable Manufacturing in Industry 4.0: An Emerging Research Agenda. Int. J. Prod. Res. 2019, 58, 1462–1484.

- Malek, J.; Desai, T.N. A Systematic Literature Review to Map Literature Focus of Sustainable Manufacturing. J. Clean. Prod. 2020, 256, 120345.

- Pezzella, F.; Morganti, G.; Ciaschetti, G. A Genetic Algorithm for the Flexible Job-Shop Scheduling Problem. Comput. Oper. Res. 2008, 35, 3202–3212.

- Liu, Z.; Guo, S.; Wang, L. Integrated Green Scheduling Optimization of Flexible Job Shop and Crane Transportation Considering Comprehensive Energy Consumption. J. Clean. Prod. 2019, 211, 765–786.

- Yuan, Y.; Xu, H. Multi-objective Flexible Job Shop Scheduling Using Memetic Algorithms. IEEE Trans. Autom. Sci. Eng. 2015, 12, 336–353.

- Park, I.B.; Huh, J.; Kim, J.; Park, J. A Reinforcement Learning Approach to Robust Scheduling of Semiconductor Manufacturing Facilities. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1420–1431.

- Gao, K.; Cao, Z.; Zhang, L.; Chen, Z.; Han, Y.; Pan, Q. A Review on Swarm Intelligence and Evolutionary Algorithms for Solving Flexible Job Shop Scheduling Problems. IEEE/CAA J. Autom. Sin. 2019, 6, 904–916.

- Momenikorbekandi, A.; Abbod, M. A Novel Metaheuristic Hybrid Parthenogenetic Algorithm for Job Shop Scheduling Problems: Applying Optimisation Model. IEEE Access 2023, 11, 56027–56045.

- Brucker, P.; Schlie, R. Job-Shop Scheduling with Multi-Purpose Machines. Computing 1990, 45, 369–375.

- Brandimarte, P. Routing and Scheduling in a Flexible Job Shop by Tabu Search. Ann. Oper. Res. 1993, 41, 157–183.

- Jiang, E.d.; Wang, L. Multi-Objective Optimization Based on Decomposition for Flexible Job Shop Scheduling under Time-of-Use Electricity Prices. Knowl. Based Syst. 2020, 204, 106177.

- Li, Y.; Huang, W.; Wu, R.; Guo, K. An Improved Artificial Bee Colony Algorithm for Solving Multi-Objective Low-Carbon Flexible Job Shop Scheduling Problem. Appl. Soft Comput. 2020, 95, 106544.

- Li, J.Q.; Song, M.X.; Wang, L.; Duan, P.Y.; Han, Y.Y.; Sang, H.Y.; Pan, Q.K. Hybrid Artificial Bee Colony Algorithm for a Parallel Batching Distributed Flow-Shop Problem with Deteriorating Jobs. IEEE Trans. Cybern. 2020, 50, 2425–2439.

- Mahmoodjanloo, M.; Tavakkoli-Moghaddam, R.; Baboli, A.; Bozorgi-Amiri, A. Flexible Job Shop Scheduling Problem with Reconfigurable Machine Tools: An Improved Differential Evolution Algorithm. Appl. Soft Comput. 2020, 94, 106416.

- Zhang, S.; Li, X.; Zhang, B.; Wang, S. Multi-Objective Optimisation in Flexible Assembly Job Shop Scheduling Using a Distributed Ant Colony System. Eur. J. Oper. Res. 2020, 283, 441–460.

- Yang, Y.; Huang, M.; Yu Wang, Z.; Bing Zhu, Q. Robust Scheduling Based on Extreme Learning Machine for Bi-Objective Flexible Job-Shop Problems with Machine Breakdowns. Expert Syst. Appl. 2020, 158, 113545.

- Zhang, G.; Shao, X.; Li, P.; Gao, L. An Effective Hybrid Particle Swarm Optimisation Algorithm for Multi-Objective Flexible Job-Shop Scheduling Problem. Comput. Ind. Eng. 2009, 56, 1309–1318.

- Mihoubi, B.; Bouzouia, B.; Gaham, M. Reactive Scheduling Approach for Solving a Realistic Flexible Job Shop Scheduling Problem. Int. J. Prod. Res. 2021, 59, 5790–5808.

- Wu, X.; Peng, J.; Xiao, X.; Wu, S. An Effective Approach for the Dual-Resource Flexible Job Shop Scheduling Problem Considering Loading and Unloading. J. Intell. Manuf. 2021, 32, 707–728.

- Momenikorbekandi, A.; Abbod, M.F. Multi-Ethnicity Genetic Algorithm for Job Shop Scheduling Problems. 2021. Available online: https://ijssst.info/Vol-22/No-1/paper13.pdf (accessed on 16 November 2023).

- Bellman, R. A Markovian Decision Process. Indiana Univ. Math. J. 1957, 6, 679–684.

- Oliff, H.; Liu, Y.; Kumar, M.; Williams, M.; Ryan, M. Reinforcement Learning for Facilitating Human-Robot-Interaction in Manufacturing. J. Manuf. Syst. 2020, 56, 326–340.

- Watkins, C.J.C.H.; Dayan, P. Q-Learning. Mach. Learn. 1992, 8, 279–292.

- Shahrabi, J.; Adibi, M.A.; Mahootchi, M. A Reinforcement Learning Approach to Parameter Estimation in Dynamic Job Shop Scheduling. Comput. Ind. Eng. 2017, 110, 75–82.

- Shen, X.N.; Minku, L.L.; Marturi, N.; Guo, Y.N.; Han, Y. A Q-Learning-Based Memetic Algorithm for Multi-Objective Dynamic Software Project Scheduling. Inf. Sci. 2018, 428, 1–29.

- Lin, C.C.; Deng, D.J.; Chih, Y.L.; Chiu, H.T. Smart Manufacturing Scheduling with Edge Computing Using Multiclass Deep Q Network. IEEE Trans. Industr. Inform. 2019, 15, 4276–4284.

- Shi, D.; Fan, W.; Xiao, Y.; Lin, T.; Xing, C. Intelligent Scheduling of Discrete Automated Production Line via Deep Reinforcement Learning. Int. J. Prod. Res. 2020, 58, 3362–3380.

- Luo, S. Dynamic Scheduling for Flexible Job Shop with New Job Insertions by Deep Reinforcement Learning. Appl. Soft Comput. 2020, 91, 106208.

- Chen, R.; Yang, B.; Li, S.; Wang, S. A Self-Learning Genetic Algorithm Based on Reinforcement Learning for Flexible Job-Shop Scheduling Problem. Comput. Ind. Eng. 2020, 149, 106778.

- Panzer, M.; Bender, B. Deep Reinforcement Learning in Production Systems: A Systematic Literature Review. Int. J. Prod. Res. 2021, 60, 4316–4341.

- Sivanandam, S.N.; Deepa, S.N. Genetic Algorithms. In Introduction to Genetic Algorithms; Springer: Berlin/Heidelberg, Germany, 2008; pp. 15–37.

- Dong, H.; Ding, Z.; Zhang, S. Deep Reinforcement Learning: Fundamentals, Research and Applications; Springer: Singapore, 2020; pp. 1–514.

- Sewak, M. Policy-Based Reinforcement Learning Approaches: Stochastic Policy Gradient and the REINFORCE Algorithm. In Deep Reinforcement Learning; Springer: Singapore, 2019; pp. 127–140.

- Yoshitani, N.; Hasegawa, A. Model-Based Control of Strip Temperature for the Heating Furnace in Continuous Annealing. IEEE Trans. Control Syst. Technol. 1998, 6, 146–156.

| Job Shop-Type | Number of Machines | Number of Jobs |

|---|---|---|

| SM1 | 1 | 32 |

| SM2 | 1 | 40 |

| SM3 | 1 | 60 |

| SM4 | 1 | 80 |

| SM5 | 1 | 100 |

| SM6 | 1 | 120 |

| SM7 | 1 | 150 |

| SM8 | 1 | 200 |

| SM9 | 1 | 250 |

| SM10 | 1 | 300 |

| Job Shop-Type | Number of Machines | Number of Jobs |

|---|---|---|

| MM1 | 4 | 8 |

| MM2 | 4 | 8 |

| MM3 | 4 | 8 |

| MM4 | 4 | 8 |

| MM5 | 4 | 8 |

| MM6 | 4 | 8 |

| MM7 | 4 | 8 |

| MM8 | 4 | 8 |

| MM9 | 4 | 8 |

| MM10 | 4 | 8 |

| MM11 | 4 | 10 |

| MM12 | 4 | 20 |

| MM13 | 4 | 30 |

| MM14 | 4 | 40 |

| MM15 | 4 | 100 |

| MM16 | 4 | 150 |

| MM17 | 4 | 200 |

| MM18 | 4 | 250 |

| MM19 | 4 | 300 |

| Benchmark Type | No. of Jobs | NMHPGA | QRL | SARSA | SARSA Performance Differential Relative to NMHPGA (%) | QRL Performance Differential Relative to NMHPGA (%) |

|---|---|---|---|---|---|---|

| SM1 | 32 | 39,444 | 42,016 | 23,006 | 71.45 | 6.12 |

| SM2 | 40 | 160,871 | 297,088 | 156,270 | 2.94 | 45.85 |

| SM3 | 60 | 474,325 | 816,604 | 507,100 | 6.46 | 41.91 |

| SM4 | 80 | 1,120,440 | 1,662,800 | 1,082,100 | 3.54 | 32.61 |

| SM5 | 100 | 2,297,979 | 4,452,100 | 2,446,362 | 6.06 | 48.38 |

| SM6 | 120 | 11,009,721 | 11,283,000 | 8,310,500 | 32.47 | 2.42 |

| SM7 | 150 | 8,549,596 | 12,201,000 | 8,051,900 | 6.18 | 29.92 |

| SM8 | 200 | 15,952,550 | 26,355,000 | 20,215,000 | 21.08 | 39.47 |

| SM9 | 250 | 30,845,566 | 58,246,000 | 39,822,000 | 22.54 | 47.04 |

| SM10 | 300 | 52,260,543 | 90,327,000 | 65,091,000 | 19.71 | 42.14 |

| Benchmark Type | NMHPGA | QRL | SARSA | SARSA Performance Differential Relative to NMHPGA (%) | QRL Performance Differential Relative to NMHPGA (%) |

|---|---|---|---|---|---|

| MM1 | 7758 | 6711 | 7230 | 7.30 | 15.59 |

| MM2 | 9465 | 5420 | 7490 | 26.37 | 74.62 |

| MM3 | 7913 | 13,558 | 13,187 | 39.99 | 41.63 |

| MM4 | 7758 | 6711 | 7230 | 7.30 | 15.59 |

| MM5 | 7039 | 10,647 | 11,542 | 39.01 | 33.88 |

| MM6 | 7798 | 12,863 | 13,500 | 42.23 | 39.37 |

| MM7 | 9181 | 8055 | 8652 | 6.11 | 13.98 |

| MM8 | 8727 | 17,695 | 16,094 | 45.77 | 50.68 |

| MM9 | 6195 | 5379 | 5766 | 7.44 | 15.16 |

| MM10 | 7758 | 6711 | 7230 | 7.30 | 15.59 |

| Benchmark Type | (No. Machine × No. Jobs) | NMHPGA | QRL | SARSA | SARSA Performance Differential Relative to NMHPGA (%) | QRL Performance Differential Relative to NMHPGA (%) |

|---|---|---|---|---|---|---|

| MM11 | 4 × 10 | 16,421 | 17,700 | 17,882 | 8.17 | 7.22 |

| MM12 | 4 × 20 | 131,115 | 124,270 | 125,296 | 4.64 | 5.50 |

| MM13 | 4 × 30 | 461,538 | 429,230 | 443,460 | 4.07 | 7.52 |

| MM14 | 4 × 40 | 1,236,658 | 1,061,652 | 1,143,600 | 8.13 | 16.48 |

| MM15 | 4 × 100 | 17,432,983 | 15,698,000 | 17,035,000 | 2.33 | 11.05 |

| MM16 | 4 × 150 | 62,354,613 | 54,567,000 | 60,006,000 | 3.91 | 14.27 |

| MM17 | 4 × 200 | 131,840,576 | 126,430,000 | 105,410,000 | 25.07 | 4.27 |

| MM18 | 4 × 250 | 270,317,679 | 248,160,000 | 270,810,000 | 0.18 | 8.92 |

| MM19 | 4 × 300 | 475,082,286 | 417,290,000 | 420,790,000 | 12.90 | 13.84 |

| Furnace Model | NMHPGA | Q-Learning | SARSA |

|---|---|---|---|

| Furnace objective 1 | 133.7500 | 133.9333 | 133.9333 |

| Furnace objective 2 | 134.0000 | 137.9667 | 133.9333 |

| Furnace objective 3 | 133.7500 | 135.6333 | 133.9333 |

| Furnace Model | NMHPGA | Q-Learning | SARSA |

|---|---|---|---|

| Furnace objective 1 | 83.9666 | 84.1481 | 84.1481 |

| Furnace objective 2 | 84.0847 | 86.2792 | 84.1481 |

| Furnace objective 3 | 83.9666 | 85.2274 | 84.1481 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Momenikorbekandi, A.; Abbod, M. Intelligent Scheduling Based on Reinforcement Learning Approaches: Applying Advanced Q-Learning and State–Action–Reward–State–Action Reinforcement Learning Models for the Optimisation of Job Shop Scheduling Problems. Electronics 2023, 12, 4752. https://doi.org/10.3390/electronics12234752
