3.2. Remarks and Results of Step 2—Input Datasets Selection and Analysis Specification

Given that Newtonian kinematics governs the braking of a vehicle, its equations were chosen as the source to generate synthetic datasets for use throughout the remainder of the BCS lifecycle. The equations employed for this purpose are based on the safe braking model of IEEE 1474.1:2004 [64] for the braking of trains in metro and rail domains.

The following guidelines were followed to produce the datasets that were used in the remainder of the case study:

The algorithm that describes the dataset generation is represented by the flowchart in Figure 8. It indicates that data records are tuples of the type (d, s, gd, bcp) generated by varying d, s, and gd within their domains, with steps of 10 m for d and 0.5 m/s steps for s, and exhaustively including all plausible combinations. Within the domains of d, s, and gd, 57,286 tuples are generated.

The brake command prediction (bcp) is then set to “1” (apply brakes) if the vehicle’s braking curve reaches the obstacle under the given conditions of d, s, and gd, or to “0” (not apply brakes) otherwise. Afterward, positive or negative random noise of up to twice the standard deviation (σ) of the distance and speed sensors’ tolerances is added to the figures of d and s in order to represent their intrinsic uncertainties.

Finally, another aspect of the dataset generation algorithm is the possibility of configuring the proportion of data records with bcp = 0, hereinafter referred to as “pb0”. This proportion can be set by choosing a setting “pb0_thres” within the half-open interval [0%; 90%). The justification for the configurable “pb0” is to allow the fine-tuning of AI instances better specialized in braking, since data records with bcp = 1 are scarcer than those with bcp = 0. By decreasing pb0, data records with bcp = 0 are discarded and replaced by additional records with bcp = 1. The upper limit of “pb0”, which is no higher than 90%, has a twofold justification: (i.) a noise-free dataset has an 89.9% proportion of records with bcp = 0, and (ii.) given the abundance of records with bcp = 0, it was not deemed relevant to increase pb0 even further for the BCS-related AI design.
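The generation guidelines above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the generator used in the case study: the value domains, the constant-deceleration braking rule (standing in for the IEEE 1474.1 safe braking model), the reading of gd as a track gradient, the uniform noise distribution, the sensor standard deviations, and the resampling of existing bcp = 1 records are all hypothetical choices, as are the function names.

```python
import itertools
import random

# Placeholder settings (assumptions for illustration, not the case study's values).
D_VALUES = [10.0 * k for k in range(1, 21)]   # distances d in 10 m steps
S_VALUES = [0.5 * k for k in range(0, 41)]    # speeds s in 0.5 m/s steps
GD_VALUES = [-0.02, 0.0, 0.02]                # gd, treated here as a track gradient
DECELERATION = 1.0                            # assumed braking rate in m/s^2
GRAVITY = 9.81                                # m/s^2
SIGMA_D, SIGMA_S = 1.0, 0.1                   # assumed sensor standard deviations


def brake_needed(d, s, gd):
    """Label a noise-free record: 1 if a simplified braking curve reaches the obstacle."""
    effective_deceleration = DECELERATION + GRAVITY * gd
    stopping_distance = s * s / (2.0 * effective_deceleration)
    return 1 if stopping_distance >= d else 0


def generate_records(seed=0):
    """Sweep the grid exhaustively, label each point, then perturb d and s."""
    rng = random.Random(seed)
    records = []
    for d, s, gd in itertools.product(D_VALUES, S_VALUES, GD_VALUES):
        bcp = brake_needed(d, s, gd)  # label comes from the noise-free inputs
        # Assumption: noise is drawn uniformly within +/- 2 sigma.
        noisy_d = d + rng.uniform(-2.0 * SIGMA_D, 2.0 * SIGMA_D)
        noisy_s = max(0.0, s + rng.uniform(-2.0 * SIGMA_S, 2.0 * SIGMA_S))
        records.append((noisy_d, noisy_s, gd, bcp))
    return records


def rebalance(records, pb0_thres, rng):
    """Cap the share of bcp = 0 records at pb0_thres while keeping the dataset size."""
    zeros = [r for r in records if r[3] == 0]
    ones = [r for r in records if r[3] == 1]
    max_zeros = int(pb0_thres * len(records))
    if len(zeros) <= max_zeros or not ones:
        return list(records)
    kept_zeros = rng.sample(zeros, max_zeros)
    # Assumption: the replacement bcp = 1 records are resampled from existing ones.
    extra_ones = [rng.choice(ones) for _ in range(len(zeros) - max_zeros)]
    return kept_zeros + ones + extra_ones
```

Calling `rebalance(generate_records(), 0.5, random.Random(1))` returns a dataset of the same size in which at most half of the records carry bcp = 0.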

A Python application was developed in accordance with the previous specification, and its results were redundantly verified by another member of the Safety ArtISt team who employed Microsoft Excel 2013 as a dual tool to perform the same processing. With this procedure, the developed dataset generator was considered functionally correct, thus leading the datasets generated with it to be adequate for the case study’s end application.

Another aspect that is worthy of highlighting in Step 2 is the identification of potential corner cases in the datasets generated with the aforementioned tool. Two categories of corner cases were considered relevant for the case study:

Corner Cases (0, 1): This group includes data records classified with bcp = 0 based on gd and the noise-free d and s but that would shift to bcp = 1 with their actual noisy d and s figures. Data records of this corner case group are relevant in assessing whether SR 4.2 is indeed met by the BCS;

Corner Cases (1, 0): This group is the opposite of the previous one. It comprises data records whose noise-free input data led to bcp = 1 and that would rather be classified with bcp = 0 based on the actual noisy d and s figures. As a result, the records of this group are important to evaluate the BCS compliance with the SR 4.1.

The percentage of records of both corner case groups varies with the proportion “pb0”. For instance, datasets with pb0 in its upper limit of 89.9% feature shares of circa 0.18% to 0.25% of their 57,286 records for each corner case group depending on vehicle-related settings (e.g., mass). These proportions shift to 0.94% for (1, 0) and to 0.11% for (0, 1) if pb0 is reduced to 50%. The increase in corner cases of the group (1, 0) and the decrease in those of the group (0, 1) as pb0 is reduced are indeed expected. Given that more records with bcp = 1 and fewer records with bcp = 0 will be within the dataset as pb0 is lowered, the chance for meeting the criteria of the corner case group (1, 0) goes up whereas that for the corner case group (0, 1) diminishes.
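The two corner case groups can be identified by labelling each record twice, once with its noise-free inputs and once with its noisy inputs, and keeping the records whose labels disagree. The sketch below assumes a hypothetical labelling callable `label_fn(d, s, gd)` and uses a toy constant-deceleration rule purely for demonstration.

```python
def corner_case_group(label_fn, clean, noisy):
    """Return "(0, 1)", "(1, 0)", or None for a single record.

    label_fn(d, s, gd) -> 0 or 1 is the labelling rule (hypothetical signature);
    clean and noisy are the (d, s, gd) tuples before and after sensor noise.
    """
    bcp_clean = label_fn(*clean)
    bcp_noisy = label_fn(*noisy)
    if bcp_clean == bcp_noisy:
        return None  # noise did not move the record across the braking boundary
    return f"({bcp_clean}, {bcp_noisy})"


def toy_label(d, s, gd):
    """Toy rule (assumption): brake if s^2 / (2 * 1 m/s^2) reaches the distance d."""
    return 1 if s * s / 2.0 >= d else 0


print(corner_case_group(toy_label, (50.0, 9.9, 0.0), (49.0, 10.0, 0.0)))
print(corner_case_group(toy_label, (50.0, 10.0, 0.0), (51.0, 9.9, 0.0)))
```

The first call falls into group (0, 1) and the second into group (1, 0); records far from the braking boundary return `None`.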

At the end of Step 2, 19 different datasets were generated for the remainder of the case study. All of them share the same end application operational conditions (i.e., no changes to vehicle and environment-related parameters) and differ in two main aspects: pb0 and data randomness due to the inclusion of noise in d and s.

Seven datasets were reserved for 10-fold CV and AI training purposes: two different datasets with pb0 = 89.9% (DatasetT&V and DatasetT&V2), two different datasets with pb0 = 80% (DatasetStratified80v3 and DatasetStratified80v2), one dataset with pb0 = 65% (DatasetStratified65), one dataset with pb0 = 55% (DatasetStratified55), and one dataset with pb0 = 50% (DatasetStratified50).

Three additional datasets with pb0 = 89.9% were also generated to support the analysis of corner cases: DatasetT1, DatasetT2, and DatasetT3. This proportion was chosen for this analysis because it is deemed that, since pb0 approximates the rate of BCS processing cycles at which brakes are not applied, utilizing it would indicate that the BCS triggers the brake commands in circa 10% of its processing cycles. This allows a reasonably better approximation of an actual BCS for rail applications than with datasets with lower pb0.

The datasets DatasetT1, DatasetT2, and DatasetT3 are also used along with a set of nine other datasets with other pb0 rates for two other objectives: performing the holdout tests of the trained AI instances and providing supporting performance indicators for the quantitative safety analysis. The nine datasets that were generated and used in addition to DatasetT1, DatasetT2, and DatasetT3 are as follows: DatasetTestZ01 (pb0 = 1%), DatasetTestZ10 (pb0 = 10%), DatasetTestZ20 (pb0 = 20%), DatasetTestZ30 (pb0 = 30%), DatasetTestZ40 (pb0 = 40%), DatasetTestZ50 (pb0 = 50%), DatasetTestZ60 (pb0 = 60%), DatasetTestZ70 (pb0 = 70%), and DatasetTestZ80 (pb0 = 80%). The objective of using datasets with different pb0 rates is to assess to what extent the SRs are affected by different operational conditions, ranging from extremely pessimistic scenarios at which the brakes are frequently requested (pb0 = 1%) up to scenarios at which the brake request rate is lower (pb0 = 89.9%).

3.3. Remarks and Results of Step 3—AI Preliminary Design

Activity 3-Pre was initially performed to assess the most promising AI base models for the end application among all candidates raised in Step 1. For this purpose, these AI base models were initially exercised in software with the infrastructure provided by the Python-coded scikit-learn library (version 1.3.1) [67]. By means of CV and holdout tests with the datasets defined in Step 2 (Section 3.2), it was verified that DTs outperformed kNN, SVMs, and ANNs in accuracy, precision, recall, and specificity regardless of pb0. These performance metrics are utilized in this research as per their definitions by James et al. [68]. Moreover, since each DT was specialized in producing braking commands as per the corresponding pb0 of its training dataset, building RFs by joining DTs trained with datasets of different pb0 settings was deemed an appropriate way to leverage the overall BCS performance.
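For reference, the four performance metrics named above follow the standard confusion-matrix definitions. The sketch below takes bcp = 1 (apply brakes) as the positive class; the function name and the counts in the example are hypothetical and are not taken from the case study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary metrics from confusion-matrix counts (positive class: bcp = 1)."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,   # share of correct predictions
        "precision": tp / (tp + fp),     # brake commands that were really needed
        "recall": tp / (tp + fn),        # needed brake commands that were issued
        "specificity": tn / (tn + fp),   # no-brake situations correctly left alone
    }


# Hypothetical counts, for illustration only.
print(classification_metrics(tp=90, fp=10, tn=890, fn=10))
```

In the braking context, low recall means missed brake applications, whereas low precision or specificity means unnecessary ones.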

Activity 3-A started by assessing whether the direct HDL design or HLS would be used in the target implementation of DTs and RFs for FPGAs. Given that HLS tools such as Conifer [69,70] and LeFlow [71,72] are able to translate DTs and RFs coded in Python into equivalent HDL models targeted to FPGAs, an HLS approach was chosen for the case study. As a result, the initial BCS architecture of Figure 7 (Section 3.1) was refined considering the three-level abstraction model advocated in the Safety ArtISt method and detailed in Section 2.2.

Since the software and HLS levels are direct instantiations of the templates presented in Figure 4 and Figure 5 (Section 2.2) with BCS-specific datasets and AI features, special attention is given to the final hardware architecture, achievable with the HLS byproducts and depicted in Figure 9. The results obtained in 3-Pre allow defining that the BCS comprises m = 2 independent FPGAs for AI processing and that the number of AI instances for both FPGAs (n1 and n2) is set to three, which means that each FPGA includes an RF with three DTs. In this setup, the majority voter, referred to as “voting” in Figure 9, averages the braking probabilities retrieved by both FPGAs, represented by “prob_mean”, and sets bcp to the “active brakes” state whenever this probability is at least 0.5. Given that “voting” tends to require a simple design, it better suits a Complex PLD (CPLD) than an FPGA.

The architecture in Figure 9 also reveals the following refinements related to FPGA independence, processing stability, and trustworthiness:

1. Each FPGA/CPLD has its own clock signal (“clock_1”, “clock_2”, and “clock_voting”);

2. In addition to bcp, the BCS has two other outputs: “prob_mean”, which indicates the probability that supported the bcp decision, and “prob_valid_mean”, which is detailed in item “4”;

3. Each FPGA/CPLD enforces a safe state (by applying brakes) via Power-On Reset (POR) and reaches an absorbing safe state whenever faults related to loss of communication with inputs (i.e., no periodic enabling of “input_valid”) and/or partner FPGAs/CPLD are detected. These directives eliminate the need for a reset signal for all three programmable components in Figure 9, since only a cold restart allows system recovery from an availability perspective;

4. In order to cascade control flow data from the inputs up to the final “voting” stage, all three programmable devices provide validity signals that indicate whether their outputs have been recently updated. These include “valid_1.1” to “valid_1.3” for each DT on FPGA 1, “valid_2.1” to “valid_2.3” for each DT on FPGA 2, “prob_valid_1” for the FPGA 1 RF as a whole, “prob_valid_2” for the FPGA 2 RF as a whole, and “prob_valid_mean” for “voting”. These signals allow not only detecting and mitigating the lack of input activity on “input_valid” but also potential random faults that prevent the proper operation of both FPGAs and the “voting” CPLD.
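The behavior described for “voting” can be modelled in software as follows. This is a simplified behavioral sketch in Python, not the VHDL design: the class and method names are hypothetical, a single invalid sample is assumed to be enough to latch the safe state (the actual design monitors periodic activity), and the value reported for “prob_mean” in the safe state is an arbitrary choice.

```python
class VotingModel:
    """Behavioral model of the voter: average two RF probabilities, brake at >= 0.5."""

    def __init__(self):
        # Absorbing safe state: once latched, only a new instance ("cold restart") clears it.
        self.safe_state_latched = False

    def step(self, prob_1, prob_valid_1, prob_2, prob_valid_2):
        """Return (bcp, prob_mean, prob_valid_mean) for one processing cycle."""
        if not (prob_valid_1 and prob_valid_2):
            # Assumption: one missing validity flag is treated as a communication loss.
            self.safe_state_latched = True
        if self.safe_state_latched:
            return 1, None, False  # brakes applied, output flagged as not valid
        prob_mean = (prob_1 + prob_2) / 2.0
        bcp = 1 if prob_mean >= 0.5 else 0
        return bcp, prob_mean, True


voter = VotingModel()
print(voter.step(0.25, True, 0.5, True))   # mean below 0.5: brakes not applied
print(voter.step(0.5, True, 0.5, True))    # mean equal to 0.5: brakes applied
print(voter.step(0.1, False, 0.1, True))   # lost validity: safe state is latched
print(voter.step(0.1, True, 0.1, True))    # still braking after the fault
```

Modelling the safe state as a latched flag mirrors the absence of a reset signal: recovery requires building a new `VotingModel`, which plays the role of a cold restart.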

In activity 3-B, the tools and resources that are applicable to the remainder of the BCS lifecycle are selected. These include the following items:

Anaconda 22.9.0 (with Python 3.9.15) to design the BCS DTs and RFs in software;

Conifer 0.3 to perform the HLS of DTs and RFs. Conifer was preferred over LeFlow because it has straightforward, native support for translating RFs coded as instances of Python’s scikit-learn class RandomForestClassifier into VHDL;

AMD/Xilinx Vivado 2022.2 to provide the needed infrastructure for built-in tests and simulations performed by Conifer during the HLS process;

Intel/Altera Quartus Prime Lite 22.1 Std 0.915 for additional VHDL design (e.g., “voting” CPLD), timing analyses, and the programming of Intel/Altera devices for tests;

Intel ModelSim Starter Edition 20.1.1.720 for simulations. By using it along with AMD/Xilinx Vivado, the performance of the VHDL-coded AI generated on the HLS can be cross-checked, thus improving the confidence of the results;

Digilent AnalogDiscovery and Digilent Waveforms 3.20.1 to generate input signals during physical tests.

Other relevant settings and practices defined throughout activities 3-B to 3-D, in turn, comprise the following items:

Ensuring compliance with VHDL 2008 [31,62];

Ensuring that the final VHDL code, including the HLS-generated one, adheres to the fault-tolerant design practices defined by Lange et al. [62] and Silva Neto et al. [31]. Since Conifer was not designed with fault tolerance in mind, manual code changes might be needed;

For Intel/Altera Quartus Prime, turning off optimizations at compilation, synthesis, and routing to avoid unwanted changes to the as-designed fault-tolerant design.

3.4. Remarks and Results of Step 4—AI Detailed Design

Based on the design decisions in Step 3, the AI instances were initially developed in software by using the scikit-learn Python library (version 1.3.1). Since its RandomForestClassifier only allows building DTs with the same depth, the approach that was used to build RFs with DTs of different depths followed two steps: (i.) designing each DT as an instance of scikit-learn’s DecisionTreeClassifier class and (ii.) creating instances of the scikit-learn RandomForestClassifier class with the DTs mapped onto each RF based on their respective performance metrics. The second step is needed to allow the HLS via Conifer, since Conifer does not support HLS straight from instances of the DecisionTreeClassifier class. A Python function called “convert_tree_to_random_fores” was developed for this purpose [65].

The design of the DTs was carried out with 10-fold CV with the corresponding datasets of Step 2 (Section 3.2) and by performing an exhaustive grid search on the domains of eight hyperparameters: criterion, splitter, max_depth, min_samples_split, min_samples_leaf, min_weight_fraction_leaf, max_leaf_nodes, and max_features [73]. Special attention was given to limiting the depth of the DTs to up to 10 in such a way as to convey DTs that remain explainable and with reasonable resource requirements for FPGA synthesis after the HLS. Preliminary tests with Conifer also indicated that, on a computer with Intel i7-7700 (3.6 GHz × 4 cores; 8 threads) and 12 GB RAM, the HLS succeeded only for RFs with DTs no deeper than 15 levels; otherwise, Conifer crashed.

A total of eight different DTs, shown in Table 7, were designed with the previous workflow by advancing activity 5-B to Step 4. DTs 1 and 5 were iteratively discarded due to overly high depths and were ultimately replaced by DT 6, which had a similar performance [65] for the same “pb0” setting. Furthermore, Table 7 also indicates that, by means of software-based holdout tests with DatasetT3 (“pb0” = 89.9%), the DTs designed with datasets of lower “pb0” have higher recall than those designed with datasets of higher “pb0”. This indicates that the former DTs tend to apply brakes more frequently than the ones with higher “pb0”, which is expected given the higher abundance of training data records leaning towards the need for brakes. On the other hand, and for a complementary reason, the DTs of higher “pb0” are significantly better in precision, which plays a relevant role in avoiding the unneeded brake applications posed by the lower “pb0” counterparts.

Based on the results presented in Table 7, the RFs of each of the two BCS FPGAs depicted in Figure 9 (Section 3.3) were designed with the principle of maximizing the overall recall while still achieving good precision. This was reached through experimentation and led to making each RF comprise one DT with high recall and low precision, one DT with average recall and average precision, and one DT with low recall and high precision. Therefore, the RF of FPGA 1, hereinafter referred to as “RF 1”, includes DTs 2, 3, and 4, whereas the RF of FPGA 2, hereinafter referred to as “RF 2”, comprises DTs 6, 7, and 8.

Holdout tests with DatasetT3 yielded a precision of 90.4% and a recall of 99.4% for RF 1, as well as a precision of 94.2% and a recall of 98.8% for RF 2. These results indicate that the recall of both RFs is significantly close to that of the DTs with the highest recalls, whereas the precision approached that of the middling DT.

Step 4 also comprised the VHDL design of the “voting” CPLD in accordance with the functions and safety principles detailed for it in Step 3 (Section 3.3). However, since the design of voting is neither based on AI nor contains any other cutting-edge techniques except for classic safety-critical design for CPLDs, it is not further detailed in this paper.

3.5. Remarks and Results of Step 5—AI Training and AI Preliminary V&V

Step 5 started with the HLS of the RFs generated in Step 4. The HLS was performed with the aid of Conifer’s “Python to VHDL” module, which translates Python-coded RFs into VHDL and simulates the resulting VHDL code with AMD/Xilinx Vivado with a given input dataset so that both implementations can be compared.

In order to feed Conifer with the BCS RFs coded as instances of scikit-learn’s RandomForestClassifier class, one of the needed settings for Conifer is the fixed-point precision employed to translate the numeric inputs and outputs into VHDL numerical and logical types. The notation hereinafter utilized for the fixed-point precision is ap_fixed<n,i>, with n representing the sum of bits for both the integer and decimal parts and i depicting the integer part’s bit width. As a result, the width of the decimal part equals n-i.
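The effect of an ap_fixed<n,i> setting on a numeric value can be approximated in software as shown below. This is a sketch under assumptions: the sign bit is counted within the i integer bits, values are truncated towards minus infinity, and out-of-range values saturate. The source does not state how Conifer rounds or handles overflow, so both behaviors are illustrative choices, and the function name is hypothetical.

```python
import math


def ap_fixed_quantize(value, n, i):
    """Map value onto a signed fixed-point grid with n total bits and i integer bits."""
    fractional_bits = n - i
    step = 2.0 ** -fractional_bits        # resolution of the fractional part
    lowest = -(2.0 ** (i - 1))            # the sign bit is one of the i integer bits
    highest = 2.0 ** (i - 1) - step
    quantized = math.floor(value / step) * step   # assumption: truncation
    return min(max(quantized, lowest), highest)   # assumption: saturation on overflow


print(ap_fixed_quantize(2000.0, 24, 12))  # 12 integer bits cover 2000 m
print(ap_fixed_quantize(2000.0, 23, 11))  # 11 integer bits do not: the value saturates
print(ap_fixed_quantize(0.3, 18, 12))     # 6 fractional bits: resolution of 1/64
```

With i = 12 the representable range is [-2048, 2048), so a 2000 m distance fits, whereas i = 11 would cap it just below 1024.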

Conifer imposes two restrictions in setting ap_fixed<n,i>: the most significant bit of the integer part represents the number’s sign (i.e., positive or negative), and the settings of n and i are the same for every variable. Based on these restrictions and on the domains of all BCS inputs and outputs, the integer part mandatorily features i = 12 bits, since 11 bits are needed to represent the upper range of the distance (d) measurements within [0 m; 2000 m] and an additional bit is needed for the sign. The decimal part n-i, in turn, was defined with the aid of a sensitivity analysis at which it was varied within the range [6; 12] in order to analyze the following performance indicators:

Number of Wrong Predictions (#WP): This indicator represents the number of predictions for which the HLS-generated VHDL produced an outcome that differs from the originating software implementation;

Percentage of Wrong Predictions (%WP): This indicator represents the percentage of the test dataset records that were added to #WP, as defined in Equation (1):

The 75th Percentile Absolute Error (AE75): This indicator represents the 75th percentile of the absolute errors in the braking output probability generated by the RF. It was chosen with the objective of assessing the degree to which the RF output probability is affected at a given n-i decimal bit width.
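The three indicators can be computed from paired software and VHDL outputs as sketched below. The function name and the sample values are hypothetical, %WP follows the textual definition given above (wrong predictions over the number of test records), and the percentile interpolation rule is an assumption because the source does not specify one.

```python
import statistics


def hls_indicators(sw_pred, hw_pred, sw_prob, hw_prob):
    """Return (#WP, %WP, AE75) for paired software and HLS-generated outputs."""
    wrong = sum(1 for s, h in zip(sw_pred, hw_pred) if s != h)
    pct_wrong = 100.0 * wrong / len(sw_pred)
    abs_errors = [abs(s - h) for s, h in zip(sw_prob, hw_prob)]
    # Third quartile cut point; "inclusive" interpolation is an assumption.
    ae75 = statistics.quantiles(abs_errors, n=4, method="inclusive")[2]
    return wrong, pct_wrong, ae75


# Hypothetical outputs for five test records.
sw_pred = [0, 1, 1, 0, 1]
hw_pred = [0, 1, 0, 0, 1]
sw_prob = [0.0, 0.5, 0.75, 0.25, 1.0]
hw_prob = [0.0, 0.375, 0.5, 0.625, 0.5]
print(hls_indicators(sw_pred, hw_pred, sw_prob, hw_prob))
```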

The results presented in Table 8 by exercising RF 1 with DatasetT3 indicate that ap_fixed<24,12> provides no prediction errors at minimum AE75 ratings and, thus, represents an optimal setting in achieving the same performance of its software counterpart while keeping the needed resources for FPGA synthesis at a minimum for this performance. With all other ap_fixed<n,i> settings, #WP is not null, thus indicating that the HLS introduced additional errors with regard to the original software implementation.

The analysis of ap_fixed<n,i> also allowed identifying that the quantity of FPGA Look-Up Tables (LUTs) and registers increases linearly with n for i = 12, as shown in Figure 10 with synthesis data generated with AMD/Xilinx Vivado for the FPGA AMD/Xilinx Spartan 7 XC7S100FGGA484. Furthermore, Intel Quartus Prime also evidenced that the Intel/Altera FPGA Cyclone V 5CEBA4F23C7N, available on terasIC’s DE0-CV experimental board, would suffice in fitting RF 1, RF 2, and voting with ap_fixed<24,12>, given the sufficiently low occupancy rates presented in Table 9.

After the HLS was performed with ap_fixed<24,12>, the source code generated by Conifer was inspected in order to assess its functional adequacy and its robustness in dealing with fault tolerance. Each RF was structured in the seven VHDL entities depicted in Figure 11. In this diagram, an arrow directed from “A” to “B” indicates that “A” instantiates or refers to “B” at least once within its design.

The RF design’s top entity is “BDTTop”, which instantiates two other entities: “BDT” (Boosted Decision Tree) and “Arrays0”. “BDT” describes the overall structure of the RF, whereas “Arrays0” comprises the numeric parameters that characterize each DT of the RF (e.g., interconnections among nodes, input thresholds for decision-making per node, and outputs per node). “BDT”, in turn, depends on two other VHDL entities, called “Tree” and “AddReduce”. “Tree” represents a DT and is then instantiated three times per BCS RF along with the corresponding parameterization obtained via “Arrays0”. “AddReduce” combines the outputs of each DT and generates the RF ensemble outputs. Finally, all aforementioned entities also depend on the entities “Constants” and “Types”. “Constants” provides the top-level hyperparameters of the RF, and both also contain the definition of data types.

A binary comparison of both RFs’ VHDL codes showed that RF 1 and RF 2 only differ in the parameterization within the entities “Arrays0” and “Constants”. In addition to the overlapping of five out of seven entities, the VHDL code of all entities is not overly long or complex, thus allowing all safety assurance activities to be performed straight at the VHDL level, as opposed to adding an intermediate step at the software level.

In activity 5-A, code inspection allowed confirming the correctness of the VHDL code of both RFs and obtaining a set of 790 explainable decision rules that define the behavior of the BCS as a whole: 329 for RF 1 and 461 for RF 2. It is worth noting that these rules were only partially optimized and still have overlapping domains that give room for further summarization. Moreover, the compliance with fault tolerance practices [31,62] was checked in the entities “Tree” and “AddReduce”, which were chosen due to their core roles within the RFs. The results indicate that, despite coding issues such as unconnected inputs/outputs, feedthrough, unused hardware components, and unnamed processes, these do not pose safety-critical concerns.

Since activity 5-B was performed in advance during Step 4, Step 5 proceeded with activity 5-C. It started with analyzing the maximum clock frequencies for each component of Figure 9 (Section 3.3) for the Intel/Altera FPGA Cyclone V 5CEBA4F23C7N after turning off synthesis and routing optimizations on Intel Quartus Prime and considering the most pessimistic scenario of its Timing Analysis tool, called “SLOW 1100 mV 85C”. The obtained clock frequencies were 101 MHz for voting, 38 MHz for RF 1, and 24 MHz for RF 2 after full BCS integration. Since the VHDL code generated by Conifer requires a total of “max_depth + 5” clock cycles to update its outputs after reading a set of valid inputs, the lower bound constraint of RF 2 would still allow the BCS to meet its response time RSR.
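The latency implied by the “max_depth + 5” rule can be checked with simple arithmetic. The sketch below assumes the depth limit of 10 adopted in Step 4 and the slowest clock reported above (24 MHz for RF 2); the function name is hypothetical.

```python
def rf_latency_seconds(max_depth, clock_hz):
    """Time for the HLS-generated RF to update its outputs after valid inputs."""
    cycles = max_depth + 5          # cycle count stated for the Conifer-generated VHDL
    return cycles / clock_hz


latency = rf_latency_seconds(max_depth=10, clock_hz=24e6)
print(f"{latency * 1e6:.3f} microseconds")
```

Under these assumptions the RF needs 15 clock cycles, i.e., well under one microsecond, which is several orders of magnitude shorter than the 500 ms BCS processing cycle mentioned later in this section.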

Activity 5-C also included a series of experiments to assess the overall performance of the BCS by integrating both RFs and voting. These experiments were carried out in two major steps. The first one was by means of simulations aided by Intel ModelSim, for which the BCS is provided with DatasetT1, DatasetT2, and DatasetT3 so that the overall BCS dynamics and performance ratings can be analyzed. The experiments revealed proper functional and safety dynamics, and the performance ratings presented in Table 10 show that, in comparison with the results of each RF (Section 3.4), the BCS reached an average recall that nears that of the highest scoring RF (RF 2), while achieving a precision that is the average of the indices reached by both RFs. Specificity is also significantly high and consistent, at 99.1%.

The impact of pb0 was also assessed by repeating the same simulated experiments with the datasets DatasetTestZ01 (pb0 = 1%), DatasetTestZ10 (pb0 = 10%), DatasetTestZ20 (pb0 = 20%), DatasetTestZ30 (pb0 = 30%), DatasetTestZ40 (pb0 = 40%), DatasetTestZ50 (pb0 = 50%), DatasetTestZ60 (pb0 = 60%), DatasetTestZ70 (pb0 = 70%), and DatasetTestZ80 (pb0 = 80%). The results are plotted in Figure 12 along with the “average” results in Table 10, therein referred to as scenario pb0 = 89.9%.

Figure 12 shows that recall achieved the smallest drifts with the different pb0 settings, with a difference no higher than roughly 0.1% across experiments. Specificity also showed differences of approximately 0.1% from pb0 = 89.9% down to pb0 = 20% and experienced an increase of circa 0.2% in the two lowest pb0 settings (10% and 1%). These results indicate that the BCS has good confidence in effectively classifying data records that are distant from corner cases, i.e., scenarios that certainly require brakes to be applied (recall) and scenarios in which the brakes are certainly not needed (specificity).

On the other hand, accuracy, precision, and f1-score increased more than the other performance metrics as pb0 decreased. The accuracy growth was fairly constant as pb0 reduced, peaking at an improvement of roughly 0.3% from pb0 = 89.9% down to pb0 = 1%. The changes in precision, in turn, were not only greater in value, with an increase of approximately 7.3% from pb0 = 89.9% down to pb0 = 1%, but also uneven across pb0, as the precision had a steeper increase when transitioning between two high pb0 settings than between two low pb0 settings. Finally, the f1-score followed a trend similar to that of the precision: since it depends on both precision and recall [68], and the latter remained virtually unchanged, precision dominated its change rate with pb0. The behavior of all three performance metrics is expected: since datasets with lower pb0 contain more records indicating the need for brakes, and these are known to be typically well classified as per the high recall ratings across all pb0, accuracy, precision, and f1-score are also likely to increase.
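The dependence of precision and f1-score on pb0 can be reproduced with a short calculation. The sketch below is an idealization: it holds recall and specificity fixed at 99.4% and 99.1% (the overall figures quoted in this section) for every pb0, whereas the measured values drift slightly, and the function names are hypothetical.

```python
def precision_from_rates(recall, specificity, pb0):
    """Precision implied by recall, specificity, and the share pb0 of bcp = 0 records."""
    true_positives = recall * (1.0 - pb0)
    false_positives = (1.0 - specificity) * pb0
    return true_positives / (true_positives + false_positives)


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)


RECALL, SPECIFICITY = 0.994, 0.991  # assumed constant across pb0 (idealization)
for pb0 in (0.899, 0.8, 0.5, 0.2, 0.1, 0.01):
    p = precision_from_rates(RECALL, SPECIFICITY, pb0)
    print(f"pb0 = {pb0:.3f}  precision = {p:.4f}  f1 = {f1_score(p, RECALL):.4f}")
```

Under these assumptions, precision rises from roughly 92.5% at pb0 = 89.9% to above 99.9% at pb0 = 1%, with most of the gain occurring between the high pb0 settings. This is the same order of magnitude and the same shape as the trend described above, and the f1-score follows precision because recall is constant.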

The second experimental step involved a physical test session with two main objectives: (i.) assessing whether the simulated results are indeed observed in programmed FPGAs and (ii.) evaluating systems-level safety-critical mechanisms unrelated to AI, such as the partial and permanent loss of communication with at least one of the RFs. This was achieved with a built-in test bench whose test scenarios include not only a subset of DatasetT3 but also fault injection means that emulate communication faults related to the signals input_valid, prob_valid_1, and prob_valid_2.

The physical tests were performed on a terasIC DE0-CV board (with Intel/Altera FPGA Cyclone V 5CEBA4F23C7N), whose programming included the entire BCS and the aforementioned automated test bench, along with Digilent AnalogDiscovery and Digilent Waveforms as clock-generating tools. The physical tests’ results corroborate those obtained in simulations. It is also worth highlighting that, given the objectives of the physical tests, it is not deemed that the usage of a single FPGA voids the validity of the collected evidence for the distributed architecture of Figure 9 (Section 3.3).

Finally, additional simulations were also performed with the objective of assessing how the BCS performance is affected when it is strictly subject to processing the corner case groups “Corner Cases (0, 1)” and “Corner Cases (1, 0)”. For that purpose, subsets of DatasetT1, DatasetT2, and DatasetT3 for each corner case category were generated and utilized as input stimuli for the same test benches utilized in the holdout tests’ simulations.

The performance metrics of the corner case simulations are presented in Table 11, which is structured in three columns: the first one for the category “Corner Cases (0, 1)”, the second one for the category “Corner Cases (1, 0)”, and the third one for the merging of both categories. For readability purposes, the results therein presented correspond to the weighted averages of the corner cases from the three datasets (DatasetT1, DatasetT2, and DatasetT3). Performance metrics signaled as “N/A” (not applicable) correspond to indices that cannot be mathematically calculated due to the features of the corner case categories.

The indices presented in Table 11 are highly suggestive of potentially unsafe behavior for the BCS when processing input tuples (d, s, gd) that are near the boundaries of either applying or releasing brakes. On the one hand, the low specificity with Corner Cases (0, 1) indicates that brakes might be frequently applied when they are still not necessary. On the other hand, the recall with Corner Cases (1, 0) suggests that the probability of not applying brakes immediately when they are needed (24.3%) is nearly 40 times higher than that of the overall scenarios of Table 10 (0.6%).

Taking into account that assessing whether the previous indices indeed translate into unsafe behavior depends on how frequently the BCS is requested, a quantitative safety analysis is performed in advance to assess whether the BCS is indeed unsafe. Since this analysis does not take into account the unsafe events stemming from hardware failure modes, as these would only be assessed in Step 6, the quantitative safety model herein explored the lower bounds of the unsafe failure rates of each SR with the systematic unsafe behavior of AI in misclassifying bcp.

Two unsafe failure rates are relevant for safety assurance purposes:

Unsafe AI Failure Rate for Not Applying Brakes: This failure rate, expressed by Equation (2), indicates how frequently the BCS fails to apply brakes when needed. It is thus needed to evaluate the BCS compliance with SR 4.1;

Unsafe AI Failure Rate for Applying Unnecessary Brakes: This failure rate, described by Equation (3), quantifies how frequently the BCS applies brakes when they are not needed. It is thus important to evaluate whether SR 4.2 is met.

In both equations, “recall” and “specificity” refer to the homonymous performance metrics at a given operational scenario. Moreover, a further parameter indicates the number of wrong brake control predictions (bcp) that is tolerated by the BCS at each operating hour. This parameter was added to Equations (2) and (3) so that, if the two failure rates do not meet the safety requirements SR 4.1 and SR 4.2 when it is set to 0, it is possible to assess to what extent a higher value would be acceptable from a risk perspective. Finally, a last parameter indicates the number of outputs generated by the BCS per hour. It equals the inverse of its 500 ms processing cycle, i.e., 7200 outputs per hour.

Therefore, Equations (2) and (3) were initially exercised by setting the number of tolerated wrong predictions per hour to 0 and utilizing the recall and specificity figures of all pb0 and corner case scenarios depicted in Figure 12 and Table 11. In no case were the two unsafe failure rates lower than the corresponding target THRs of SR 4.1 and SR 4.2, respectively. This result corroborates the initial remarks raised during the corner case analyses.

The minimum numbers of tolerated wrong predictions per hour that allow meeting the target THRs of both SR 4.1 and SR 4.2 are presented in Table 12, along with their corresponding unsafe failure rates. They indicate that the BCS is potentially safe only if at least six classification errors of each type are tolerated per operating hour regardless of pb0. A minor exception occurs for SR 4.2 and pb0 = 1%, for which the target THR is achieved by tolerating one less error.

Finally, in a pessimistic operational scenario in which the BCS is strictly subject to corner case data, there is a significant leap in the number of errors that must be tolerated. At least 20 hourly classification errors of not applying brakes when needed must be tolerated to meet SR 4.1, whereas a minimum of 253 misapplications of brakes per hour must be tolerated to accomplish SR 4.2.

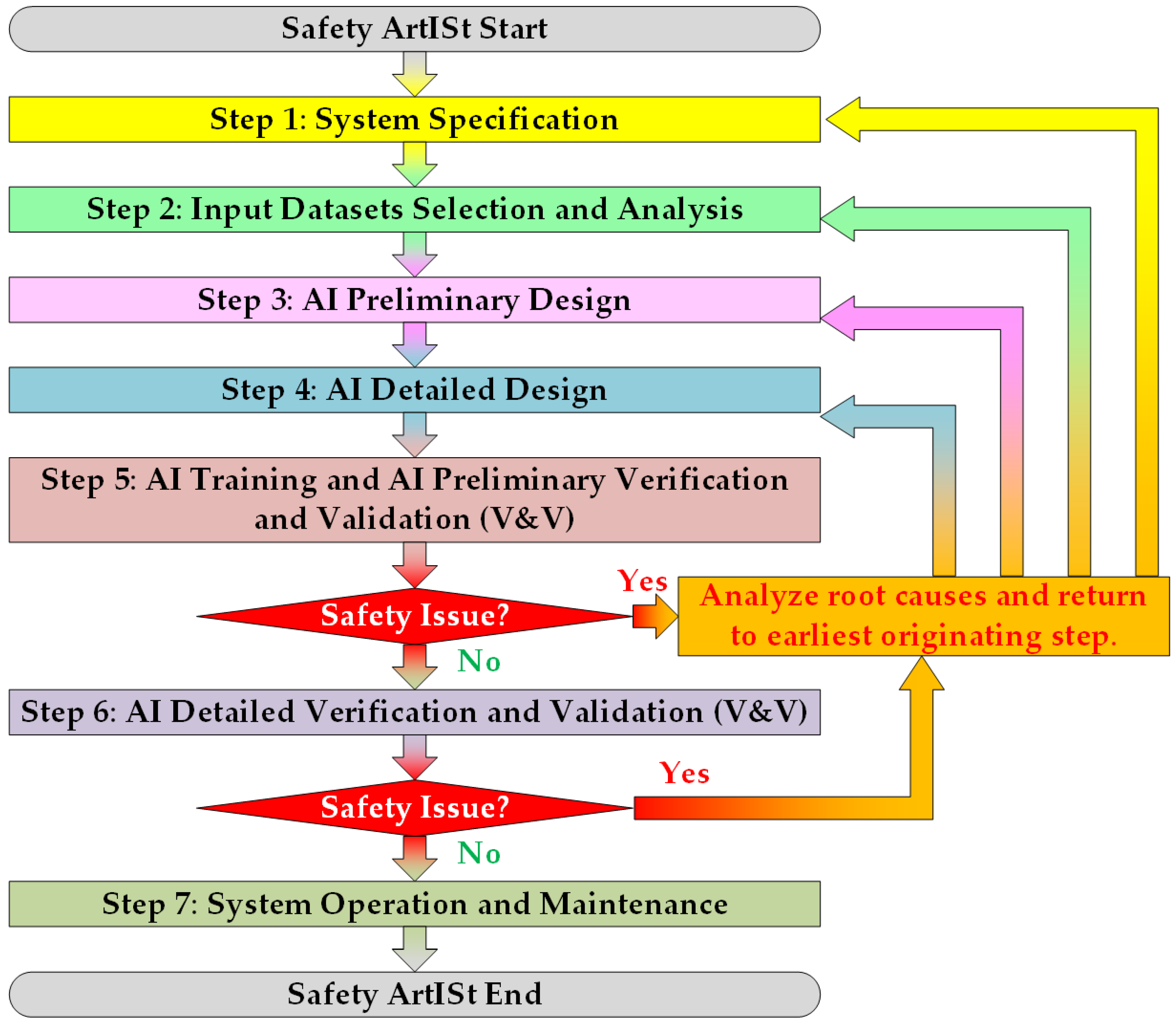

Given that these thresholds of tolerated wrong predictions are not deemed acceptable from a safety perspective, notably considering that Newtonian-based BCSs implemented in commercial train control systems that adhere to IEEE 1474.1:2004 [64] are able to achieve their safety goals without tolerating faults on braking commands, the AI-based BCS explored in this case study is deemed unsafe. This closes the iteration of the Safety ArtISt method and triggers the assessment of the safety issues’ root causes so that the design is reviewed in a new iteration. This discussion is covered, along with other themes, in Section 4.