System Architecture for Diagnostics and Supervision of Industrial Equipment and Processes in an IoE Device Environment

Abstract

1. Introduction

2. Systematic Review

- Proposing a new architecture model for collecting and processing data in an IoE device environment.

- Proposing a new technology stack model for the proposed architecture model.

- Developing two architectures for the diagnostics and supervision of industrial equipment and processes in an IoE device environment that meet the assumptions of the proposed architecture model and technology stack model.

- Implementing the architecture as a prototype (technology readiness level TRL 7) to confirm that it functions correctly in an environment containing real elements of IoT systems and, thus, to verify the assumptions of the developed models.

3. Initial Research Works

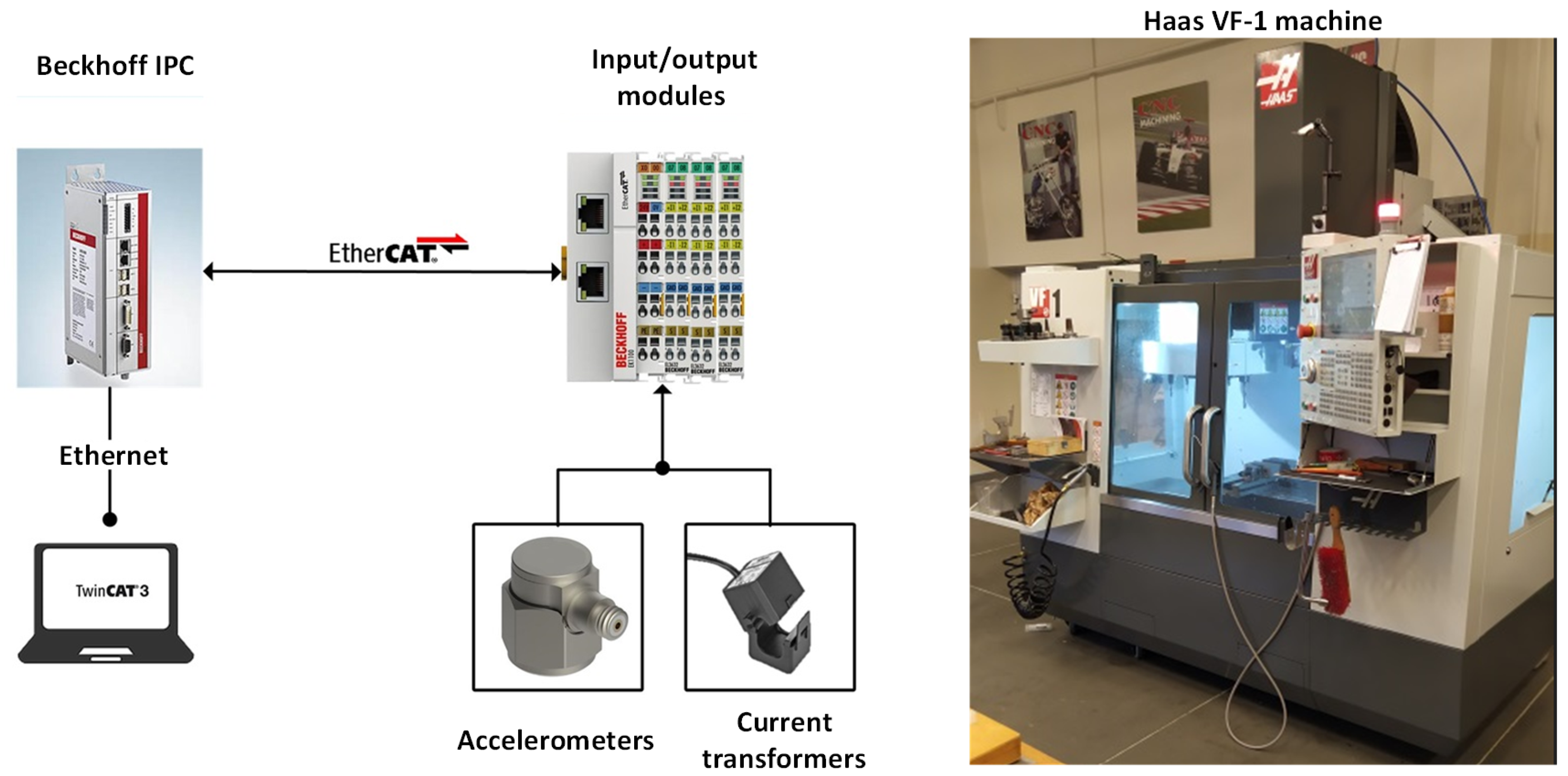

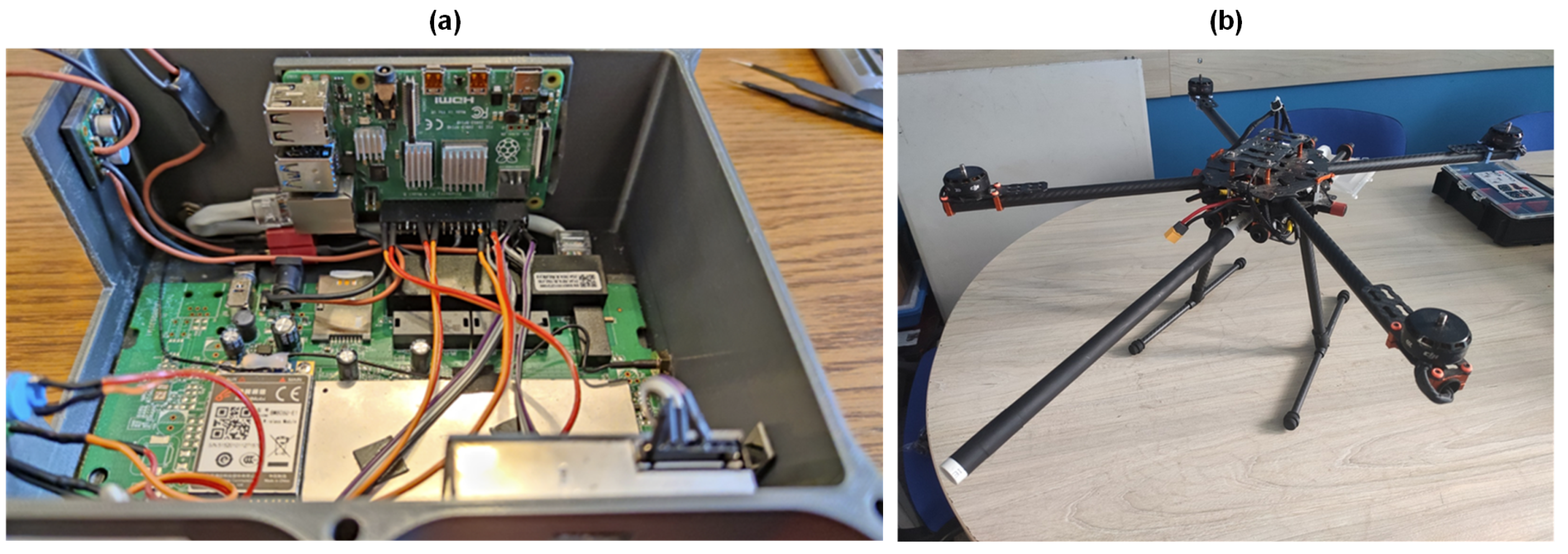

3.1. Sources of Measurement Data

3.2. Categories of Data Sources

- Category 1 (W2, W3, W4, W5): simple objects, e.g., IoT sensors. As a rule, they return data of low complexity and do not require complex data processing to be performed locally on remote nodes. In this case, nodes neither aggregate data nor store them in local databases or other repositories.

- Category 2 (W3, W6, W8): facilities that require local data processing (aggregation, cleaning, local control, etc.) but are relatively simple, with little computing power and limited hardware resources. This group includes, e.g., Computer Numerical Control (CNC) machines, manufacturing components, embedded systems, controllers, and industrial PCs (IPCs). These systems have limited resources for data storage (small databases or, in extreme cases, low-performance servers). Edge and IIoT objects of low complexity belong in this category.

- Category 3 (W1, W7): complex systems, including entire factory floors, factories, smart city systems, etc. They include information systems that communicate with the rest of the system via events, aggregated data, or APIs, such as Manufacturing Execution Systems (MES) and Enterprise Resource Planning (ERP) systems. These systems have dedicated processing resources, including server systems, database systems, application systems, directory services, etc. Complex IIoT objects fall into this category.
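To make the category boundaries concrete, the sketch below encodes the three categories and a rule for assigning a source to one of them. It is a minimal illustration only: the `SourceProfile` fields and the `categorize` rule are assumptions that reduce the prose criteria to two yes/no questions, not part of the proposed architecture.

```python
from dataclasses import dataclass
from enum import Enum


class SourceCategory(Enum):
    """The three data-source categories described above."""
    SIMPLE = 1       # e.g., IoT sensors: no local aggregation or storage
    CONSTRAINED = 2  # e.g., CNC machines, controllers, IPCs: local processing, limited resources
    COMPLEX = 3      # e.g., factory floors, MES/ERP: dedicated processing resources


@dataclass
class SourceProfile:
    """Hypothetical description of a data source (not defined in the paper)."""
    name: str
    needs_local_processing: bool  # aggregation, cleaning, local control
    has_dedicated_servers: bool   # server, database, or application systems


def categorize(profile: SourceProfile) -> SourceCategory:
    """Assign a category using an assumed rule of thumb derived from the list above."""
    if profile.has_dedicated_servers:
        return SourceCategory.COMPLEX
    if profile.needs_local_processing:
        return SourceCategory.CONSTRAINED
    return SourceCategory.SIMPLE


if __name__ == "__main__":
    for p in [
        SourceProfile("DHT22 temperature sensor", False, False),
        SourceProfile("CNC machine controller", True, False),
        SourceProfile("MES of a factory floor", True, True),
    ]:
        print(p.name, "->", categorize(p).name)
```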

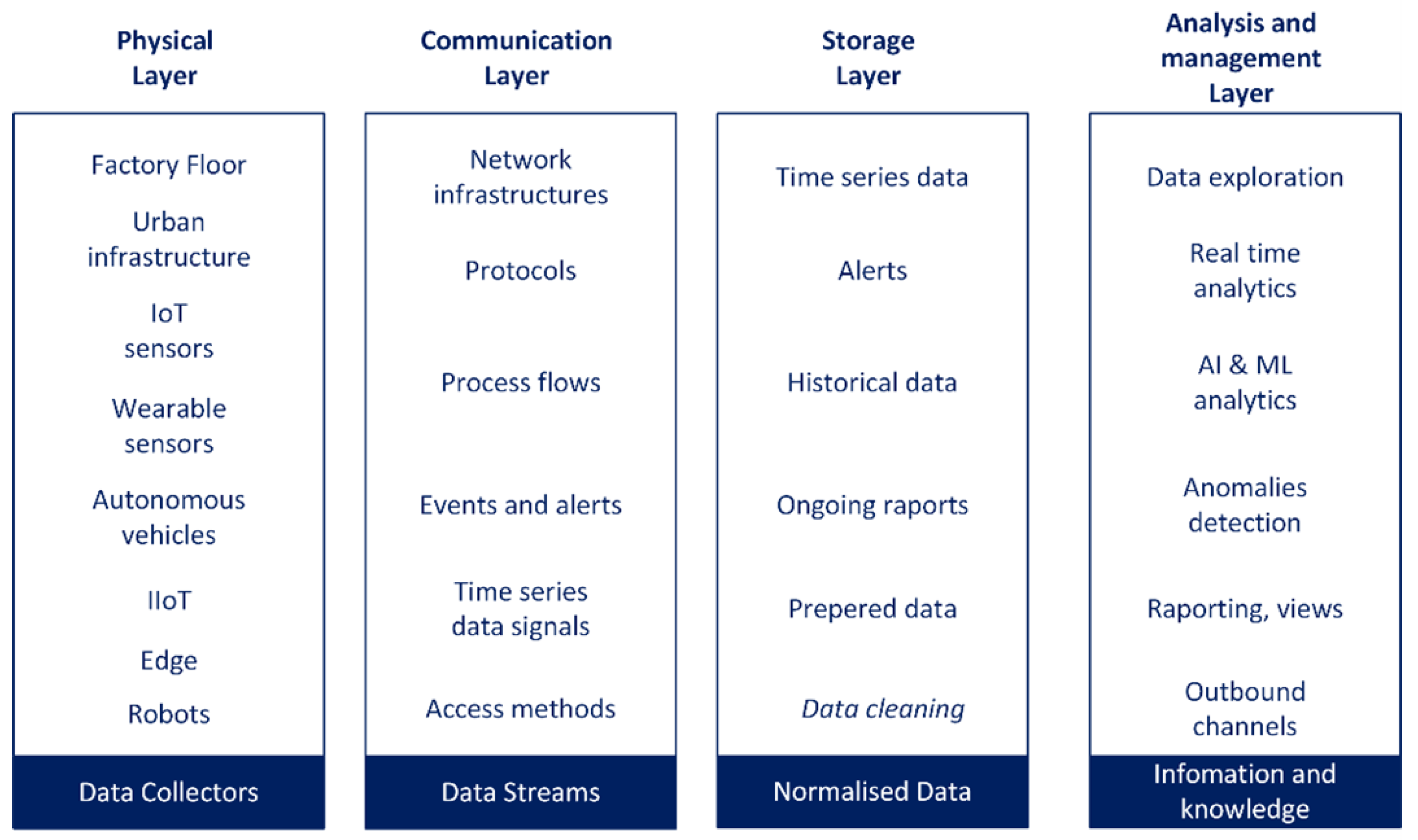

4. Data-Acquisition, -Collection, and -Processing Architecture with Technology Stack Assumptions

- Currently, there is no consistent, uniform, universal interface available to acquire data from various IoT/IIoT devices and send control messages to them.

- There is no framework-type environment in which new functionalities and algorithms could be implemented without having to implement them directly, and time-consumingly, on every type of end device.
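One way to picture the missing uniform interface is a single abstract adapter that every device type implements, so that higher layers read data and send control messages without knowing the underlying protocol. The sketch below assumes this adapter pattern; `DeviceAdapter`, `FakeSensorAdapter`, and `collect` are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from typing import Any


class DeviceAdapter(ABC):
    """Hypothetical uniform interface: one implementation per device type/protocol."""

    @abstractmethod
    def read(self) -> dict[str, Any]:
        """Return the latest measurements as a flat name -> value mapping."""

    @abstractmethod
    def send_control(self, command: str, value: Any) -> None:
        """Deliver a control message to the device."""


class FakeSensorAdapter(DeviceAdapter):
    """Stand-in for a real protocol driver; returns a fixed reading."""

    def __init__(self, temperature: float) -> None:
        self.temperature = temperature
        self.commands: list[tuple[str, Any]] = []  # control messages received so far

    def read(self) -> dict[str, Any]:
        return {"temperature": self.temperature}

    def send_control(self, command: str, value: Any) -> None:
        self.commands.append((command, value))


def collect(adapters: dict[str, DeviceAdapter]) -> dict[str, dict[str, Any]]:
    """Poll every device through the same interface, regardless of its protocol."""
    return {name: adapter.read() for name, adapter in adapters.items()}
```

New device types would then be supported by adding one adapter class, while the code that calls `collect` stays unchanged.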

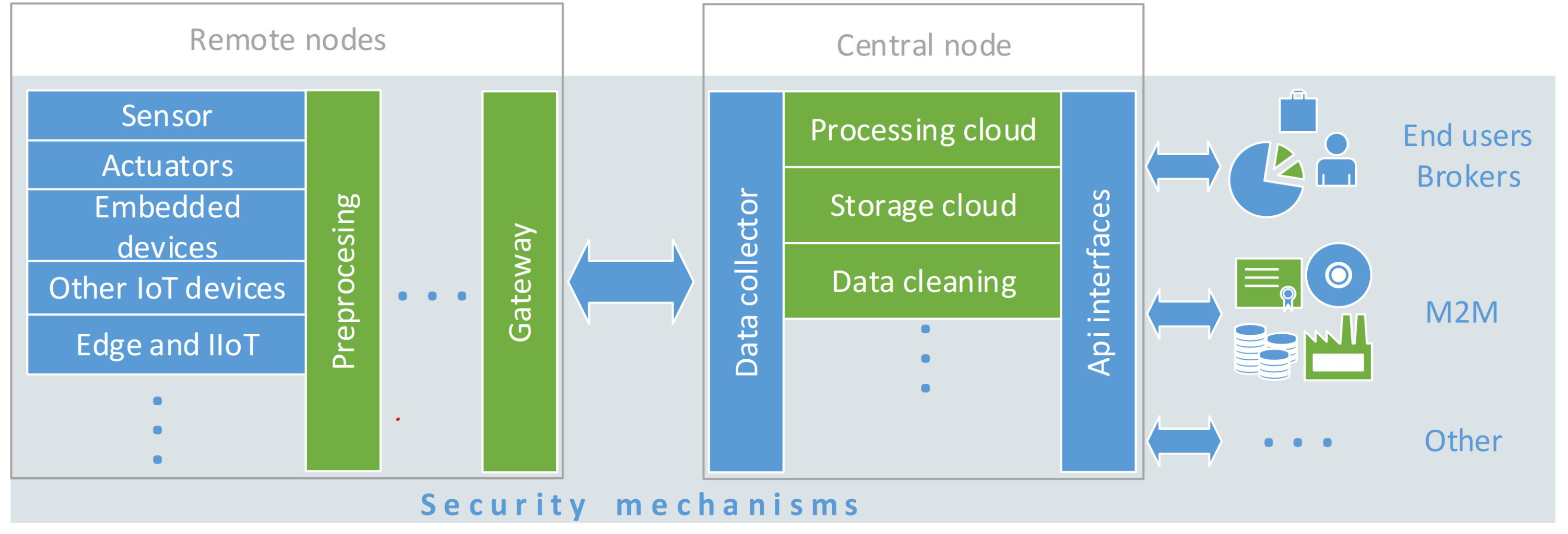

4.1. Assumptions of IoE Data Collection and Processing System: Architecture I

- Raw data: All data will be sent to the CMM directly from sensors and device elements (input device: WX). The CMM inference module will treat these data as input to its algorithms and make decisions based on them.

- Event data: In this case, the algorithm will be executed on the auxiliary module (W1) using the data obtained from the device, and only its results (events) will be returned to the CMM in the form of control messages.
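The difference between the two modes can be sketched as follows, assuming a simple threshold detector as a stand-in for the (unspecified) algorithm run on the auxiliary module. In raw mode every sample reaches the central module; in event mode only state changes do, which reduces the traffic to a handful of messages. All function names, message fields, and the threshold logic are hypothetical.

```python
from typing import Iterable, Iterator


def raw_mode(samples: Iterable[float]) -> Iterator[dict]:
    """Raw data: every sample is forwarded unchanged to the central module."""
    for i, value in enumerate(samples):
        yield {"type": "raw", "index": i, "value": value}


def event_mode(samples: Iterable[float], threshold: float) -> Iterator[dict]:
    """Event data: the auxiliary module runs the algorithm locally and forwards
    only its results. Here the algorithm is a hypothetical threshold detector
    that emits one event each time the threshold is crossed."""
    above = False
    for i, value in enumerate(samples):
        if value > threshold and not above:
            above = True
            yield {"type": "event", "name": "threshold_exceeded", "index": i, "value": value}
        elif value <= threshold and above:
            above = False
            yield {"type": "event", "name": "threshold_cleared", "index": i, "value": value}


if __name__ == "__main__":
    samples = [20.0, 22.0, 31.0, 33.0, 25.0]
    print(len(list(raw_mode(samples))), "raw messages")
    for event in event_mode(samples, threshold=30.0):
        print(event)
```

With the sample series above, raw mode produces five messages, while event mode produces two events (at indices 2 and 4).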

4.2. Assumptions of IoE Data Collection and Processing System: Architecture II

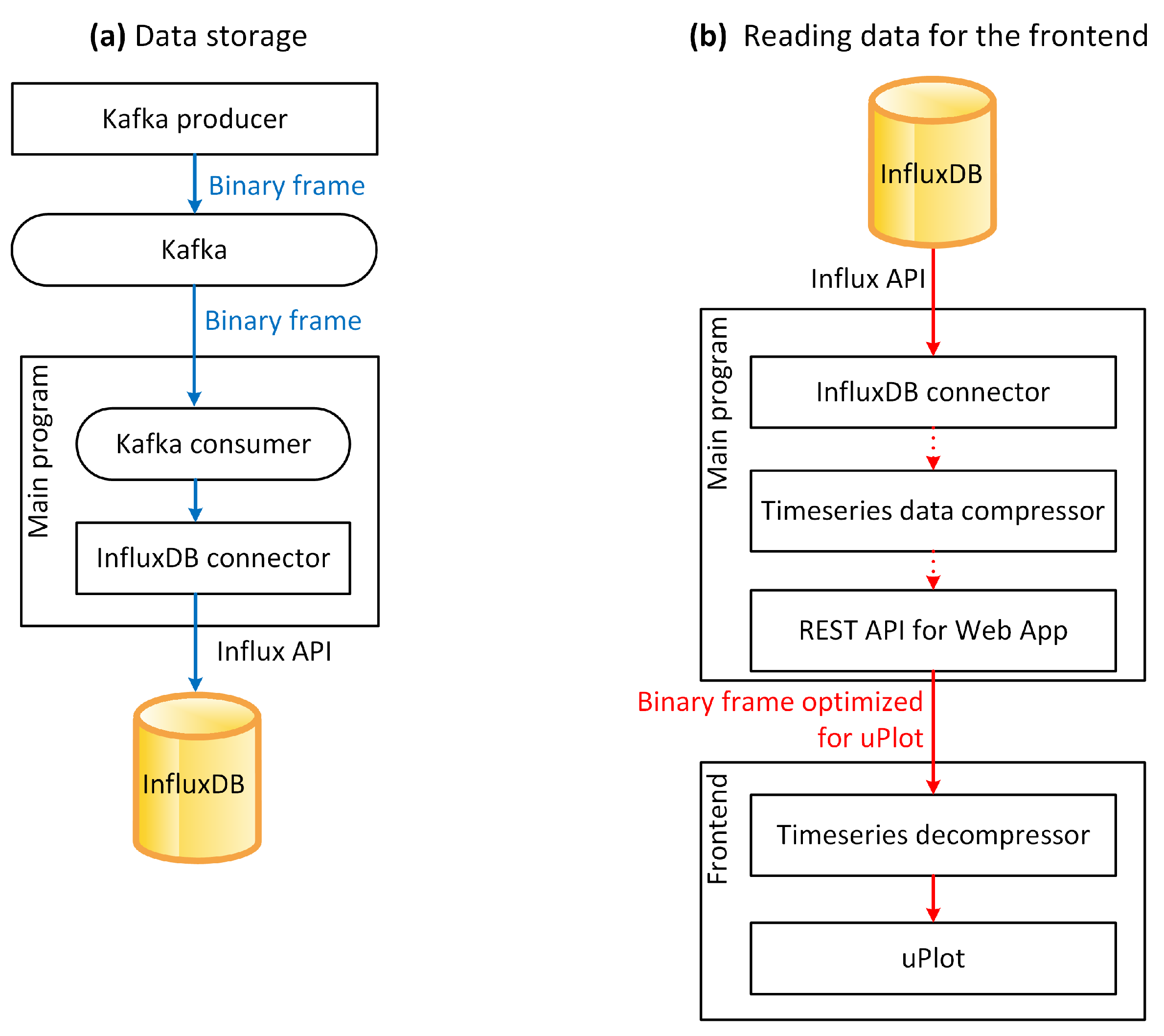

- Receiving data from Kafka;

- Saving data in the database;

- Serving the frontend;

- Communication with the scripting subsystem;

- Recording and processing of events.

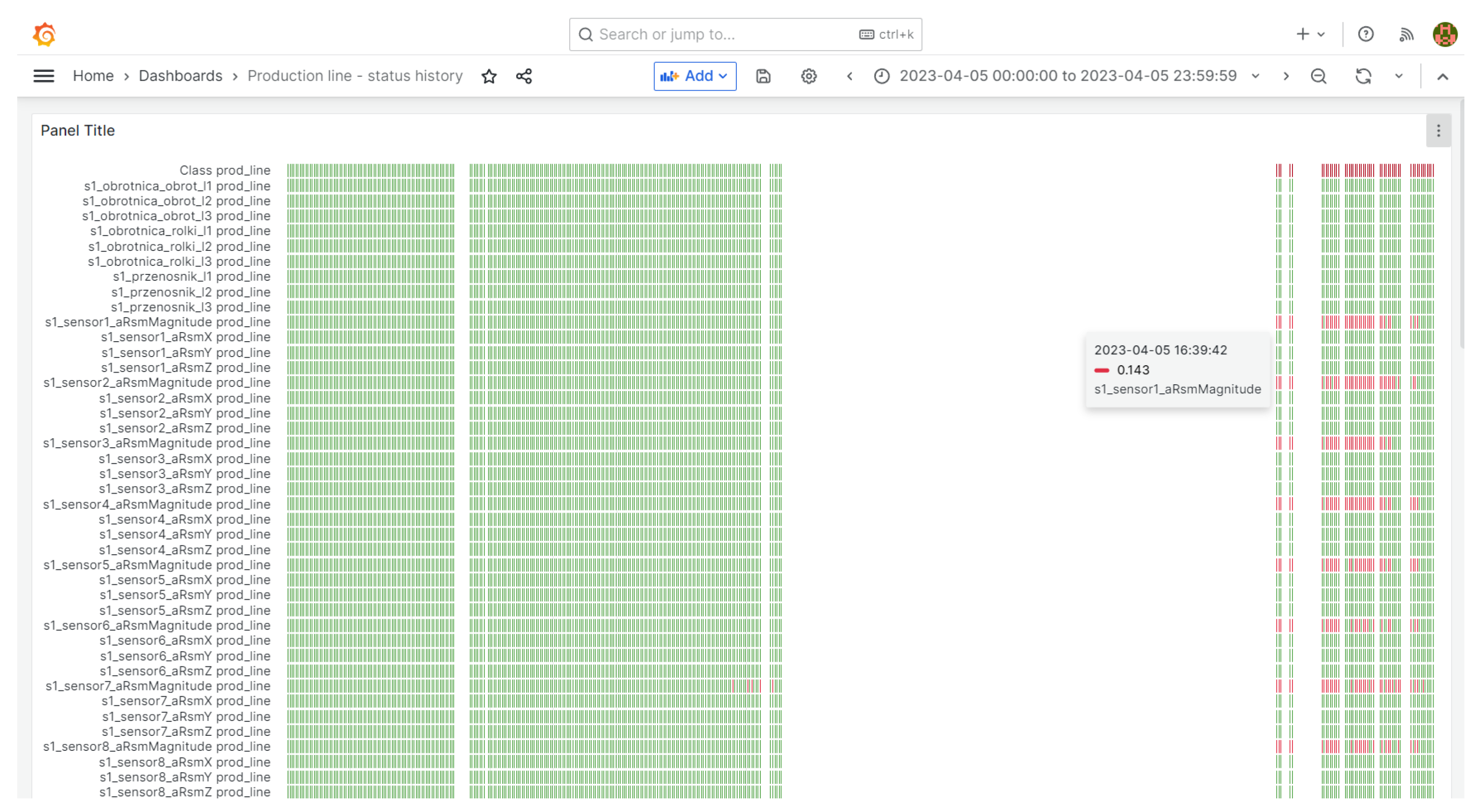

5. Implementation of the System and Discussion of the Results Obtained

- NodeRED allows input data flows to be created graphically and enables integration with various data sources. The main task of this service is to retrieve data from IoT devices and send them to Kafka.

- REST API: a functionality that makes it possible to send data to the system simply, via standard HTTP requests.

- Kafka is a service used to temporarily store and queue data from various data sources.

- InfluxDB is a popular database optimized specifically for storing time series data; it was chosen for its high schema flexibility and its additional computation functions for time series. In addition, it has its own interface for displaying data in graphs, which makes it possible to view all stored data quickly.

- The Kafka Consumer for InfluxDB retrieves data from the relevant Kafka topics, manages database connections, and, for efficiency, writes data to the database in batches.
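The consumer's two main jobs, converting records for InfluxDB and writing them in batches, can be illustrated with a standard-library sketch. It assumes that points are written in InfluxDB line protocol (`measurement,tag=value field=value timestamp`) and that a batch is flushed either when it reaches a size limit or when its oldest point exceeds an age limit; `to_line`, `BatchWriter`, and the default limits are illustrative assumptions, not the authors' implementation.

```python
import time
from typing import Callable, List, Mapping, Optional, Union

FieldValue = Union[bool, int, float, str]


def _escape_measurement(name: str) -> str:
    # Commas and spaces in measurement names must be escaped.
    return name.replace(",", r"\,").replace(" ", r"\ ")


def _escape_key(text: str) -> str:
    # Tag keys, tag values, and field keys: escape commas, equals signs, spaces.
    return text.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def _format_field(value: FieldValue) -> str:
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, int):
        return f"{value}i"  # integer fields carry an "i" suffix
    if isinstance(value, float):
        return repr(value)
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'  # string fields are double-quoted


def to_line(measurement: str, tags: Mapping[str, str],
            fields: Mapping[str, FieldValue], ts_ns: Optional[int] = None) -> str:
    """Format one point in InfluxDB line protocol (tags sorted by key)."""
    head = ",".join([_escape_measurement(measurement)] +
                    [f"{_escape_key(k)}={_escape_key(v)}" for k, v in sorted(tags.items())])
    body = ",".join(f"{_escape_key(k)}={_format_field(v)}" for k, v in fields.items())
    line = f"{head} {body}"
    return line if ts_ns is None else f"{line} {ts_ns}"


class BatchWriter:
    """Buffers lines and passes them to `write` as one newline-joined payload
    when the batch is full or its oldest line is older than `max_age` seconds."""

    def __init__(self, write: Callable[[str], None], max_size: int = 500,
                 max_age: float = 1.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.write = write
        self.max_size = max_size
        self.max_age = max_age
        self.clock = clock
        self.buffer: List[str] = []
        self.started: Optional[float] = None  # time the oldest buffered line arrived

    def add(self, line: str) -> None:
        if not self.buffer:
            self.started = self.clock()
        self.buffer.append(line)
        if len(self.buffer) >= self.max_size or self.clock() - self.started >= self.max_age:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.write("\n".join(self.buffer))
            self.buffer = []
            self.started = None
```

In a real consumer, `write` would send the payload to InfluxDB's HTTP write API or a client library, and `flush()` would also be called on poll timeouts and at shutdown, because in this sketch the age limit is checked only when a new line arrives.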

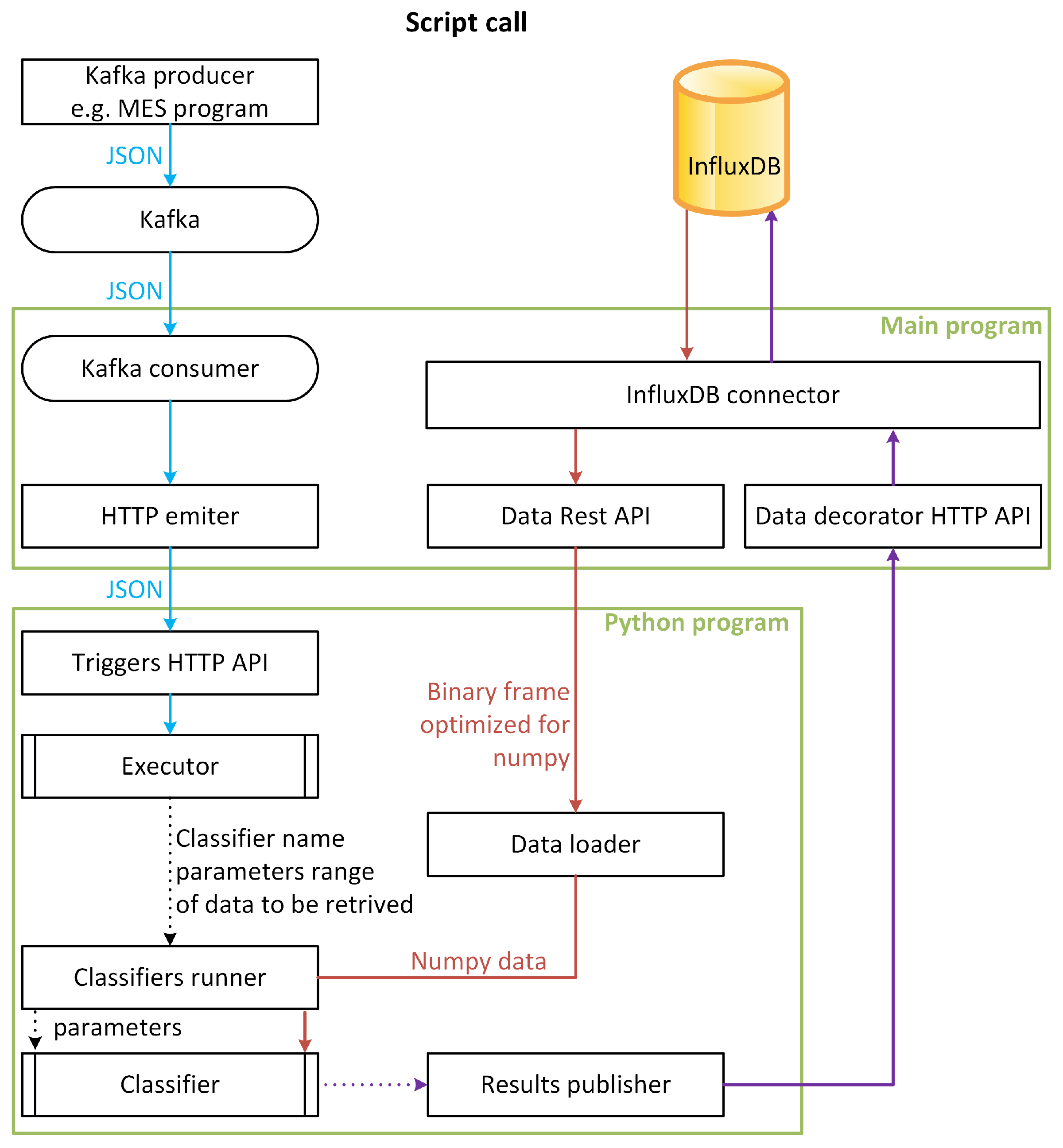

- The analytical scripting module reacts to events and can observe individual time series.
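As an illustration of a script that observes a time series and reacts to it, the sketch below raises an alarm when the moving average of the last few samples exceeds a limit. The moving-average rule, the class name, and the callback signature are assumptions; the paper does not specify which algorithms the scripting module runs.

```python
from collections import deque
from typing import Callable, Deque


class MovingAverageWatcher:
    """Hypothetical analytical script: observes one time series and calls
    `on_alarm(timestamp, average)` whenever the moving average over the last
    `window` samples exceeds `limit`."""

    def __init__(self, window: int, limit: float,
                 on_alarm: Callable[[float, float], None]) -> None:
        self.values: Deque[float] = deque(maxlen=window)
        self.limit = limit
        self.on_alarm = on_alarm

    def observe(self, timestamp: float, value: float) -> None:
        self.values.append(value)  # the deque drops the oldest sample automatically
        if len(self.values) == self.values.maxlen:  # wait for a full window
            average = sum(self.values) / len(self.values)
            if average > self.limit:
                self.on_alarm(timestamp, average)


if __name__ == "__main__":
    alarms = []
    watcher = MovingAverageWatcher(window=3, limit=50.0,
                                   on_alarm=lambda t, a: alarms.append((t, a)))
    for t, v in enumerate([40.0, 50.0, 60.0, 70.0, 80.0]):
        watcher.observe(t, v)
    print(alarms)  # [(3, 60.0), (4, 70.0)]
```

Averaging over a window, rather than testing single samples, makes the reaction less sensitive to isolated measurement noise, at the cost of a delay of up to `window` samples.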

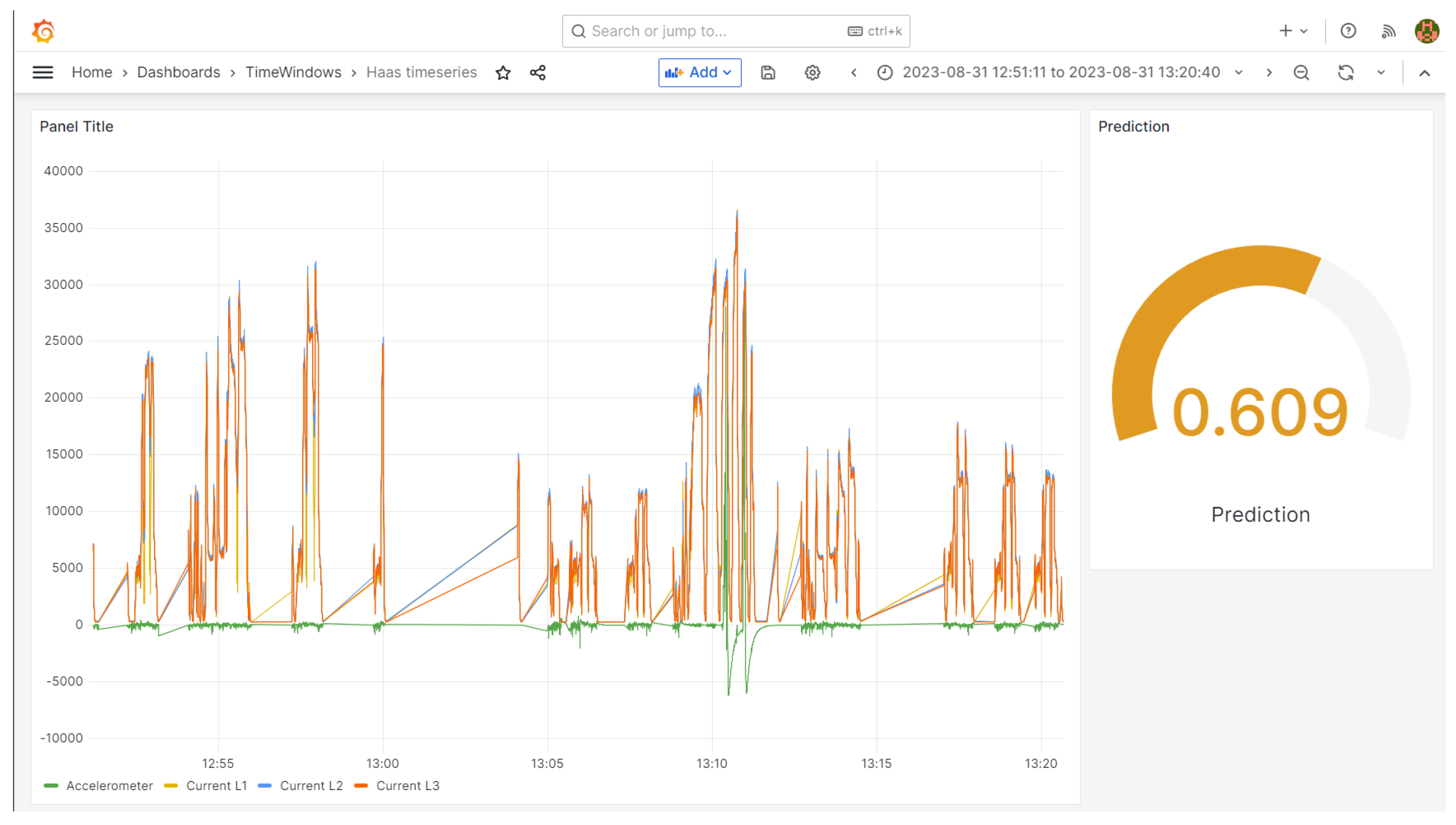

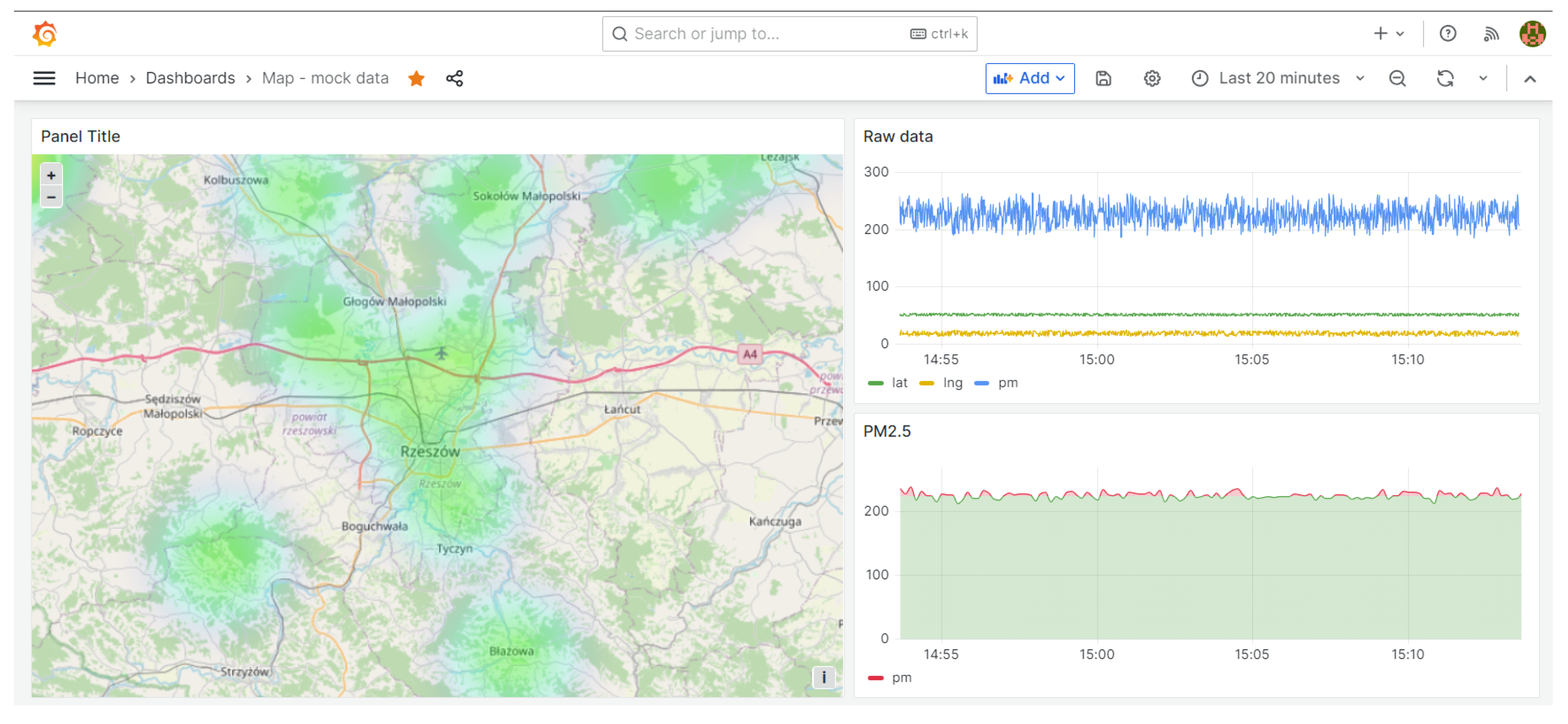

- Grafana is used to create visualizations from data retrieved from many different sources, including InfluxDB and PostgreSQL. It constitutes the frontend of the system and, if necessary, can be extended with custom plugins of the data source, panel, or application type.

- Sending directly to a Kafka topic: This requires the client to use a dedicated client library and to configure the connection properly. This is the most efficient option and may be required when large amounts of data are transferred.

- Sending via HTTP requests (REST API): This is intended for less-advanced devices that transmit data no more often than once per second. The advantage of this solution is its simple configuration.

- Using NodeRED: With its library of add-ons, NodeRED can support a wide variety of protocols for communicating with devices and can pre-filter and recalculate raw data.
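For the REST path, a device-side client only needs to build a JSON POST request and respect the rate of at most one message per second. The sketch below uses only the Python standard library; the endpoint URL, the payload fields (`device`, `values`), and the `MinIntervalGate` throttle are hypothetical, since the paper does not document the API schema.

```python
import json
import time
import urllib.request
from typing import Callable, Dict, Optional


def build_measurement_request(url: str, device_id: str,
                              values: Dict[str, float]) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request; the payload schema is hypothetical."""
    body = json.dumps({"device": device_id, "values": values}).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST",
                                  headers={"Content-Type": "application/json"})


class MinIntervalGate:
    """Client-side throttle: allows at most one transmission per `interval` seconds."""

    def __init__(self, interval: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.interval = interval
        self.clock = clock
        self.last: Optional[float] = None  # time of the last allowed transmission

    def allow(self) -> bool:
        now = self.clock()
        if self.last is None or now - self.last >= self.interval:
            self.last = now
            return True
        return False


if __name__ == "__main__":
    gate = MinIntervalGate(interval=1.0)
    # Hypothetical endpoint; replace with the address of the deployed REST service.
    req = build_measurement_request("http://localhost:8080/api/measurements",
                                    "W2", {"temperature": 21.5})
    if gate.allow():
        print(req.get_method(), req.full_url, req.data)
        # urllib.request.urlopen(req, timeout=5) would actually send the request.
```

For higher data rates, the direct Kafka path would replace this client with a Kafka producer library.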

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | DHT11 | BME280 | DHT22 |
|---|---|---|---|
| Temperature range | 0 to 50 °C | −40 to 85 °C | −40 to 80 °C |
| Humidity range (RH) | 20–90% | 10–100% | 0–100% |
| Supply voltage | 3.3–5.5 V | 3.3 V | 3.3–6 V |
| Current consumption | 0.2 mA | Unknown | 0.2 mA |
| Accuracy (temperature) | ±2 °C | ±1 °C | ±0.5 °C |
| Accuracy (humidity) | ±4% RH | ±3% RH | ±2% RH |

| Platform | Communication Protocols | Functions | Possibility to Install a Separate Instance on Local Resources | Time Required for Initial System Implementation |
|---|---|---|---|---|
| Google IoT | HTTP, MQTT | Connectivity, device management | n/a | short |
| Amazon Web Services IoT Platform | HTTP, MQTT, WebSockets | AWS IoT Core, connectivity, authentication, rules engine, development environment | n/a | short |
| Microsoft Azure IoT | MQTT and AMQP (both also over WebSockets), HTTPS | Azure IoT Hub, connectivity, authentication, device monitoring, device management, IoT Edge | n/a | short |
| IBM Watson IoT | HTTP, MQTT | IBM Watson IoT Platform, connectivity, device management, real-time analytics, blockchain | n/a | short/medium |
| Proposed solution | HTTP, MQTT, and any others | Any functionality depending on customer needs; possibility to integrate with existing cloud solutions | yes | medium/long |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bolanowski, M.; Paszkiewicz, A.; Żabiński, T.; Piecuch, G.; Salach, M.; Tomecki, K. System Architecture for Diagnostics and Supervision of Industrial Equipment and Processes in an IoE Device Environment. Electronics 2023, 12, 4935. https://doi.org/10.3390/electronics12244935
