TransAttention U-Net for Semantic Segmentation of Poppy

Abstract

1. Introduction

- Poppy is not a common crop, so images are difficult to collect and the number of samples is too small to support training a high-accuracy network.

- RGB images retain most of the morphological features of poppy and other crops, but they also contain interference. Similar-looking tree crowns or other plants can easily cause segmentation errors.

- Changes in shooting height introduce complex multi-scale features.

- The plant morphology of opium poppy is distinctive: there is often a long distance between the fruit and the stem of the same plant, which can lead to loss of edge information.

Because traditional methods struggle to process the rich semantic information in images, much work on agricultural yield estimation has turned to deep learning. Convolutional neural networks (CNNs), an important tool for image processing, can make full use of this semantic information. However, convolution operations remain limited in modeling long-distance relationships. As a result, purely convolutional architectures usually perform worse, especially on poppy images with large differences in structural texture and shape. We therefore use a CNN as the backbone and integrate a transformer, whose attention mechanism can relate distant parts of the image. Combining the transformer with a CNN avoids the high computational cost of a network built from transformers alone.

- We process the original image with ExG (Excess Green index), CIVE (Color Index of Vegetation Extraction), and the combined vegetation index COM (Combination index), and feed the result to the network instead of the raw RGB image, in order to emphasize the distinctive features of poppy (leaf texture, fruit shape) [8]. This helps with semantic segmentation of poppy when samples are few and inter-class interference is present.

- A U-shaped network, TAU-Net, is improved with a Transformer for semantic segmentation of poppy images captured by UAVs. The backbone combines CNN layers with self-attention mechanisms. Poppy features vary widely across scales. Unlike networks built from self-attention modules only or convolution modules only, TAU-Net uses the transformer to perceive the whole image, whereas the original U-Net has a restricted receptive field. The improved network is more robust to scale changes without imposing a high computational cost.

- Poppy images collected by UAVs have high resolution, and their pixels have strong spatial structure. There is a long-range dependency between the fruit and the rootstock of a poppy plant. In this paper, the number of tokens is very large when the transformer is used in the encoding stage. Relative position encoding learns the relationships between tokens and thereby maintains more accurate position information.
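The last contribution can be illustrated with a small, dependency-free sketch. It shows one common form of relative position encoding: a learned bias, indexed only by the offset between two tokens, that is added to the attention logits before the softmax. The source does not state which variant TAU-Net uses, so the function name `attention_with_relative_bias`, the 1D token layout, and the single attention head are all assumptions made for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_with_relative_bias(q, k, v, rel_bias):
    """Single-head self-attention over n tokens with a relative position bias.

    q, k, v  : lists of n vectors (lists of floats).
    rel_bias : list of 2n - 1 scalars (hypothetical learned parameters);
               rel_bias[(i - j) + n - 1] is added to the logit between
               query i and key j, so it depends only on the offset i - j.
    """
    n, d = len(q), len(q[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for i in range(n):
        logits = [
            scale * sum(a * b for a, b in zip(q[i], k[j]))
            + rel_bias[i - j + n - 1]
            for j in range(n)
        ]
        weights = softmax(logits)
        # Weighted sum of the value vectors for query token i.
        out.append(
            [sum(weights[j] * v[j][c] for j in range(n)) for c in range(len(v[0]))]
        )
    return out
```

Because the bias is shared by every pair of tokens with the same offset, a sequence of n tokens needs only 2n − 1 bias values rather than one per pair, which matters when the token count is large. For a 2D feature map the same idea is usually applied per axis or over a (2H − 1) × (2W − 1) offset table.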

2. Related Works

2.1. Plant Image Enhancement

2.2. Artificial Neural Networks for Vegetation Image Segmentation

2.3. Attentive Mechanisms

3. Methodology

3.1. Input Presentation
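The contributions in the introduction describe replacing the raw RGB input with the ExG, CIVE, and COM indices. A minimal sketch of that preprocessing follows, assuming the commonly used definitions ExG = 2g − r − b on chromatic coordinates and CIVE = 0.441R − 0.811G + 0.385B + 18.78745 on raw channel values. The source does not give the weights of COM, so `weighted_combination` and its default weights are placeholders, not the authors' formula; the helper name `index_channels` is likewise hypothetical.

```python
def excess_green(r, g, b):
    """ExG = 2g' - r' - b', where each primed value is channel / (R + G + B)."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: chromatic coordinates are undefined
    return (2 * g - r - b) / total


def cive(r, g, b):
    """CIVE on raw 0-255 channel values; lower values indicate greener pixels."""
    return 0.441 * r - 0.811 * g + 0.385 * b + 18.78745


def weighted_combination(values, weights):
    """Generic weighted sum of index values (stand-in for a COM-style index)."""
    return sum(w * v for w, v in zip(weights, values))


def index_channels(image, weights=(0.5, 0.5)):
    """Convert an RGB image into three index channels per pixel.

    image   : list of rows, each row a list of (R, G, B) tuples in 0-255.
    weights : ASSUMED placeholder weights for the combined channel.
    Returns rows of (ExG, CIVE, combined) tuples with the same layout.
    """
    out = []
    for row in image:
        out_row = []
        for r, g, b in row:
            exg = excess_green(r, g, b)
            cv = cive(r, g, b)
            out_row.append((exg, cv, weighted_combination((exg, cv), weights)))
        out.append(out_row)
    return out
```

ExG and CIVE live on very different numeric scales, so in practice each channel would likely be normalized before being combined or passed to the network; that step is omitted here.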

3.2. Network Architecture

4. Experimental Evaluation and Discussion

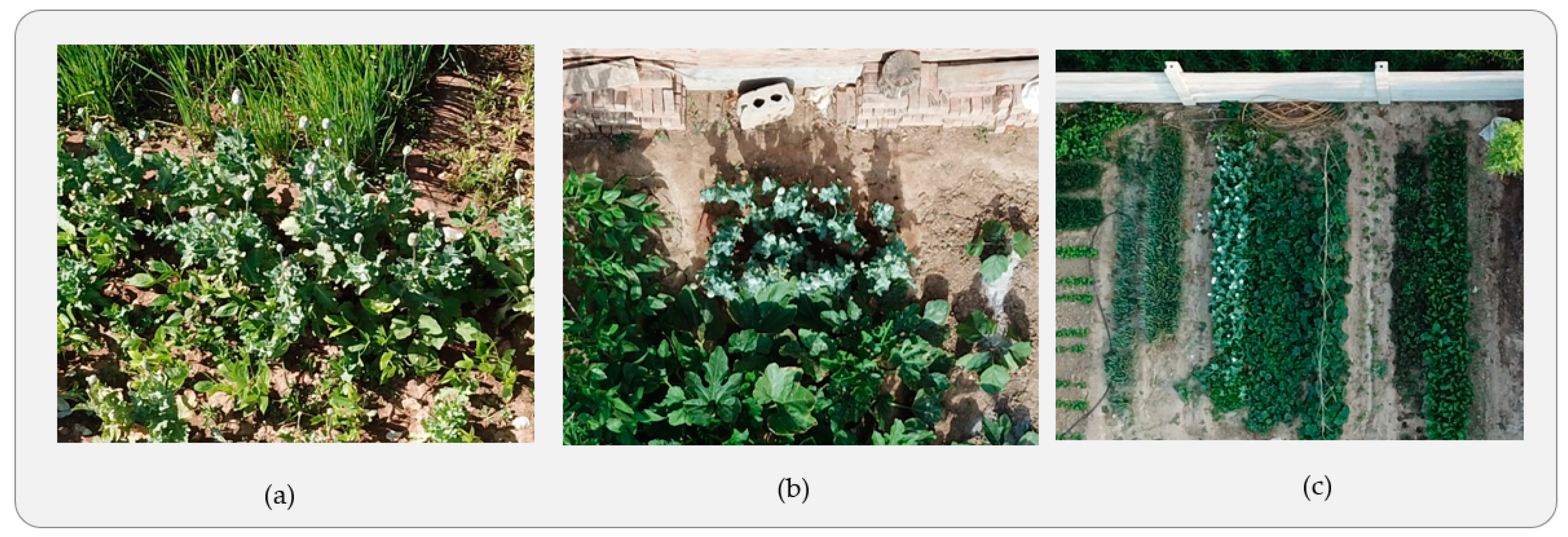

4.1. Experimental Conditions and Dataset

4.2. Evaluation Metrics

4.3. Comparison Experiment

- When the poppy occupies only a very small area of an image, the foreground and background are extremely imbalanced. If a 512 × 512 image contains only a 10 × 10 segmentation target, BCE cannot handle this extreme imbalance, whereas Dice Loss is not affected by the foreground size.

- When an image contains a large poppy region and a small poppy region at the same time, the segmentation targets themselves are imbalanced. If a 512 × 512 image contains a 10 × 10 and a 200 × 200 segmentation target, Dice Loss tends to learn the large region and ignore the small one, whereas BCE still learns the small one.
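The two cases above suggest that BCE and Dice Loss are complementary. The sketch below implements both on flattened probability maps and combines them with a weighted sum. The weighted sum and its default weight `bce_weight=0.5` are assumptions for illustration, as are the helper names; the source does not state how the losses are combined.

```python
import math


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy over all pixels."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(probs)


def dice_loss(probs, targets, smooth=1.0):
    """1 - soft Dice coefficient; `smooth` avoids division by zero."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def combined_loss(probs, targets, bce_weight=0.5):
    """Weighted sum of BCE and Dice Loss (the weight is an assumed value)."""
    return (bce_weight * bce_loss(probs, targets)
            + (1.0 - bce_weight) * dice_loss(probs, targets))


def square_mask(size, boxes):
    """Flattened size x size mask with 1.0 inside each (top, left, side) box."""
    mask = [0.0] * (size * size)
    for top, left, side in boxes:
        for y in range(top, top + side):
            for x in range(left, left + side):
                mask[y * size + x] = 1.0
    return mask


if __name__ == "__main__":
    # First case from the text: a 10 x 10 target in a 512 x 512 image,
    # scored against a prediction that misses the target entirely.
    targets = square_mask(512, [(0, 0, 10)])
    all_background = [0.01] * len(targets)
    print("BCE :", bce_loss(all_background, targets))
    print("Dice:", dice_loss(all_background, targets))
```

For the all-background prediction, BCE stays close to zero because the 100 missed foreground pixels are averaged over 262,144 pixels, while Dice Loss stays close to one. That is the imbalance the first case describes.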

4.4. Ablation Study

5. Conclusions

- The experimental results contain false positives, which reduce accuracy.

- The proposed method is weak in handling intercropping.

- Image acquisition in a natural environment is affected by lighting conditions. The appearance of poppy features varies greatly under different light intensities, which has a large impact on the learning ability of the network.

- The robustness of the network to changes in shooting angle also needs to be improved.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Mavridou, E.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Machine vision systems in precision agriculture for crop farming. J. Imaging 2019, 5, 89.

2. Narin, O.G.; Abdikan, S. Monitoring of phenological stage and yield estimation of sunflower plant using Sentinel-2 satellite images. Geocarto Int. 2022, 37, 1378–1392.

3. Aslan, M.F.; Durdu, A.; Sabanci, K.; Ropelewska, E.; Gültekin, S.S. A comprehensive survey of the recent studies with UAV for precision agriculture in open fields and greenhouses. Appl. Sci. 2022, 12, 1047.

4. Rehman, A.; Saba, T.; Kashif, M.; Fati, S.M.; Bahaj, S.A.; Chaudhry, H. A revisit of internet of things technologies for monitoring and control strategies in smart agriculture. Agronomy 2022, 12, 127.

5. Hassan, M.A.; Javed, A.R.; Hassan, T.; Band, S.S.; Sitharthan, R.; Rizwan, M. Reinforcing Communication on the Internet of Aerial Vehicles. IEEE Trans. Green Commun. Netw. 2022, 6, 1288–1297.

6. Hassan, M.A.; Ali, S.; Imad, M.; Bibi, S. New Advancements in Cybersecurity: A Comprehensive Survey. In Big Data Analytics and Computational Intelligence for Cybersecurity; Springer: Cham, Switzerland, 2022; pp. 3–17.

7. Lateef, S.; Rizwan, M.; Hassan, M.A. Security Threats in Flying Ad Hoc Network (FANET). In Computational Intelligence for Unmanned Aerial Vehicles Communication Networks; Springer: Cham, Switzerland, 2022; pp. 73–96.

8. Kitzler, F.; Wagentristl, H.; Neugschwandtner, R.W.; Gronauer, A.; Motsch, V. Influence of Selected Modeling Parameters on Plant Segmentation Quality Using Decision Tree Classifiers. Agriculture 2022, 12, 1408.

9. Kamilaris, A.; Prenafeta-Boldú, F.X. A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 2018, 156, 312–322.

10. Yuan, Y.; Chen, L.; Wu, H.; Li, L. Advanced agricultural disease image recognition technologies: A review. Inf. Process. Agric. 2021, 9, 48–59.

11. Milioto, A.; Lottes, P.; Stachniss, C. Real-time semantic segmentation of crop and weed for precision agriculture robots leveraging background knowledge in CNNs. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 2229–2235.

12. Mardanisamani, S.; Eramian, M. Segmentation of vegetation and microplots in aerial agriculture images: A survey. Plant Phenome J. 2022, 5, 20042.

13. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

14. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional Encoder-Decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

15. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

16. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

17. Peng, H.; Xue, C.; Shao, Y.; Chen, K.; Xiong, J.; Xie, Z.; Zhang, L. Semantic segmentation of litchi branches using DeepLabV3+ model. IEEE Access 2020, 8, 164546–164555.

18. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision, ECCV, Munich, Germany, 8–14 September 2018; pp. 801–818.

19. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

20. Zou, K.; Chen, X.; Wang, Y.; Zhang, C.; Zhang, F. A modified U-Net with a specific data argumentation method for semantic segmentation of weed images in the field. Comput. Electron. Agric. 2021, 187, 106242.

21. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; LGB: Los Angle, CA, USA, 2017; Volume 30.

22. Mou, L.; Zhao, Y.; Chen, L.; Cheng, J.; Gu, Z.; Hao, H.; Qi, H.; Zheng, Y.; Frangi, A.; Liu, J. CS-Net: Channel and spatial attention network for curvilinear structure segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2019; pp. 721–730.

23. Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

24. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

25. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

26. Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual, 13–14 August 2021; pp. 10347–10357.

| Model | Dice Score | Run Time (s) |
|---|---|---|
| U-Net | 0.74 | 1.40 |
| DeepLabV3+ | 0.66 | 2.03 |
| TAU-Net | 0.77 | 1.87 |

| Method | Dice Score | Run Time (s) |
|---|---|---|
| Without relative position encoding | 0.74 | 1.84 |
| With relative position encoding | 0.77 | 1.87 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, Z.; Yang, W.; Gou, R.; Yuan, Y. TransAttention U-Net for Semantic Segmentation of Poppy. Electronics 2023, 12, 487. https://doi.org/10.3390/electronics12030487
