1. Introduction

Sentiment analysis (SA) is a popular research topic in natural language processing (NLP). It has recently gained a lot of attention due to the large volume of online opinions on services and products and the growth of social media data. Sentiment analysis operates at three levels: document, sentence, and aspect. At the document and sentence levels, the opinion concerns the given content as a whole (document or sentence), which is frequently insufficient to capture opinions on the particular issues raised in the text. Aspect-based sentiment analysis (ABSA) is a fine-grained approach to predicting sentiment that considers specific entities, such as goods, services, events, or organizations, in a particular domain. This type of analysis goes beyond the capabilities of traditional sentiment analysis by providing a more nuanced understanding of the sentiment expressed in a text [1]. High-level NLP conferences and workshops, including SemEval, have made ABSA one of their primary topics due to its importance and impact on the field.

The ABSA task was divided into four subtasks in SemEval 2014 task 4. The first is aspect term extraction (ATE), which aims to extract the features or aspects of services, goods, or topics discussed in a phrase or a sentence. For example, in “This phone’s camera is quite powerful”, the reviewer assesses the phone’s camera. Like named entity recognition (NER), ATE is treated as a sequence-labelling problem. Hidden Markov models (HMM) [2] and conditional random fields (CRF) [3] are popular techniques for extracting aspects from text. These methods typically rely on hand-crafted features, bigram analysis, and part-of-speech tagging to identify aspects. The second is aspect polarity detection (APD), which identifies the semantic orientation (positive, negative, neutral, or conflicted) of each aspect assessed in a sentence. For example, “The voice on this phone is great” expresses a positive judgment of the assessed aspect, the voice. The third is aspect category detection (ACD), which relies on a predefined list of aspect categories and aims to identify the aspect category discussed in a given statement. For example, in “The pizza was particularly tasty”, the aspect category is food, which can be regarded as the hypernym of the aspect term “pizza”. The fourth is aspect category polarity (ACP), which identifies the sentiment polarity of the aspect categories discussed in a given sentence. For instance, in “The foods were wonderful, but the music was terrible”, the author expresses a positive opinion of the food category but a negative opinion of the ambience category.

Recently, there has been a growing interest in using transfer learning (TL) techniques for NLP tasks [4]. TL refers to the process of adapting a model pre-trained on a particular task to a new task, typically by fine-tuning the model on a new dataset. One advantage of TL is that it can significantly reduce the amount of labelled data and computational resources needed to train a model for the new task, especially when the new task is related to the original task [5,6].

The proposed model is based on pre-trained language models, which have achieved state-of-the-art performance on various NLP tasks. These models are fine-tuned on a large annotated dataset of Arabic product reviews in order to adapt them to the tasks at hand, ATE and APD. To the best of our knowledge, research on the application of TL to NLP tasks in Arabic is scarce. This is particularly true for ATE and APD, which are crucial for understanding and summarizing customer reviews. Therefore, we hope not only to demonstrate the effectiveness of TL for these tasks in Arabic, but also to gain insights into how the characteristics of the pre-trained models and the amount of fine-tuning data influence the performance of the TL-based model.

In particular, in this paper, we use TL techniques based on several well-performing pre-trained language models to perform ATE and APD in Arabic reviews simultaneously. The following is a summary of this paper’s core contributions:

Upgrade the Human Annotated Arabic Dataset (HAAD) so that its annotation combines the two tasks of aspect term extraction and aspect polarity detection.

Combine fine-tuned Arabic base BERT [7] and CRF to obtain a better representation of words for the ATE task on an Arabic dataset. To the best of our knowledge, this is the first Arabic ABSA work of this kind.

Utilize a cutting-edge approach for fine-tuning the BERT model to enhance the results obtained from fine-tuned ATE and APD tasks.

The rest of this paper is structured as follows. Section 2 offers a literature review of ABSA and Arabic ABSA. Section 3 presents the proposed models. Section 4 presents the specifics of the experiments and the findings of the evaluation. Section 5 concludes the paper and outlines future directions for this research.

2. Related Work

ABSA is a fine-grained SA task that aims to extract aspects and their associated polarity from users’ reviews. The Arabic ABSA task debuted in 2015 with the work of Al-Smadi et al. [8], who also created a set of baseline models for each ABSA task. ATE and APD both had an F1 score of 23.4%, whereas APD and ACP received precisions of 29.7% and 42.6%, respectively.

Compared to other NLP tasks, deep learning (DL) approaches are still at an early stage of development for ABSA [9], particularly for Arabic, which is harder than, for example, English ABSA [10]. Al-Smadi et al. [11] proposed two different models using long short-term memory (LSTM), aiming to improve the results on a hotel reviews dataset [12,13] in slots 2 and 3. The best results for slot 2 were achieved with BiLSTM-CRF (FastText), an improvement of 39 percentage points over the baseline (F1 = 69.9% vs. 30.9%). Slot 3, on the other hand, gave results comparable to the best model of SemEval 2016 task 5 [13] (accuracy = 82.6%).

Al-Dabet et al. [10] further improved the previous opinion target expression extraction model with additional CNN layers for character-level feature extraction, concatenated with word-level vectors, as well as an attention mechanism to capture the main parts of the sentence. With CBOW embeddings trained on the Wikipedia dataset, the model reached an F1 score of 72.8%. Various tests were also carried out both with and without the CNN model. They demonstrated that performance is positively impacted by the character-level vectors extracted by the CNN.

Language models have recently produced state-of-the-art results in several downstream tasks, including SA. After being pre-trained on a huge amount of unlabelled text, these models can be fine-tuned for downstream NLP tasks using only a small amount of labelled data. They can therefore compensate for the dearth of annotated datasets in low-resource languages such as Arabic and save the time and resources required to build a new model from scratch. OpenAI GPT [14], XLNET [15], and BERT [7] are the three most popular pre-trained language models.

To represent Arabic words more accurately, Fadel et al. [1] concatenated the embeddings of BERT and Flair. AraBERT, the contextualized Arabic language model, and Flair embeddings were combined to create the Arabic ATE model. An extended layer, BiLSTM-CRF or BiGRU-CRF, was used for sequence tagging, giving the two proposed models, BF-BiLSTM-CRF and BF-BiGRU-CRF. The experimental findings demonstrated that the BF-BiLSTM-CRF configuration outperformed the baseline and the other models, reaching an F1 score of 79.7% on the dataset of Arabic hotel reviews.

Al-Smadi et al. [11] used Bi-LSTM and CRF at the word and character levels, respectively, for Arabic ABSA (AABSA). Their results outperformed the baseline on both tasks, by 39% in task one and by 6% in task two. Moreover, Al-Dabet et al. [10] used an attention mechanism for ATE and reported an F1 score of 72.7% in their experiments. Similar results were obtained using BiGRU instead of Bi-LSTM for ATE [10]. In addition, Bensoltane and Zaki [5] proposed a BERT-BiLSTM-CRF model for ATE on a news dataset.

Abdelgwad et al. [16] proposed two DL-based methods for ABSA using gated recurrent unit (GRU) neural networks. The first method is a combination of bidirectional GRU, convolutional neural network (CNN), and CRF called the BGRU–CNN–CRF model, which extracts the main opinionated aspects (OTE). The second method is an interactive attention network based on bidirectional GRU (IAN-BGRU), which is used to identify the sentiment polarity towards the extracted aspects. Gao et al. [17] present a short-text aspect-based sentiment analysis method using a hybrid model of CNN and BiGRU. The model takes corpus sentences and feature words as input and outputs the emotional polarity. Finally, Alqaryouti et al. [18] present a method for analysing the sentiments in reviews of smart apps that takes into account important aspects mentioned in the reviews. This approach combines domain-specific vocabulary and rules to extract the relevant aspects and classify the sentiments. It employs various language processing techniques and makes use of predetermined rules and lexicons to address difficulties in sentiment analysis and produce a summary of the results.

3. Proposed Model Architecture

This section starts with a reminder of the Arabic BERT architecture and CRF. Then, we explain the proposed approach for ABSA.

3.1. BERT Models for Arabic Language

BERT is built on the transformer, an architecture that processes an input sequence using an encoder and generates a prediction for the task using a decoder. BERT implements only the encoder portion in order to create a language representation model. As input, BERT accepts a single sequence for embedding and tagging, or a pair of sequences for classification. Two special tokens are added at the start ([CLS]) and end ([SEP]) of the tokenized sentence before it is fed to BERT. By adding a further layer (or layers) on top of BERT and fine-tuning all of the layers together, BERT can be adapted to downstream NLP tasks while using fewer resources. The fundamental BERT architecture has been trained for a variety of languages.

There are several reasons why the use of BERT would be justified in the proposed study. Firstly, BERT has been specifically designed to handle the complexities of NLP and has achieved state-of-the-art results on a wide range of NLP tasks. This makes it a strong candidate for use in the study, particularly given that the study is focused on Arabic language processing. Moreover, BERT has multiple Arabic versions that have been trained on large Arabic language corpora, which gives it the ability to capture the nuances and characteristics of the Arabic language. This is important because the specific characteristics of a language can significantly impact the performance of a language model, and using a model that has been trained on a similar language can improve its performance.

As highlighted above, there are a number of different BERT versions dedicated to the Arabic language. With 768 hidden dimensions, 12 transformer blocks, 12 attention heads, and a maximum sequence length of 512 tokens, Arabic BERT [19] employed the standard setup of BERT. CAMeLBERT [20] was developed as a collection of BERT models pre-trained on Arabic texts of various sizes and types (modern standard Arabic (MSA), dialectal Arabic (DA), classical Arabic (CA), and a mix of the three). Similarly, the BERT base configuration was utilized by AraBERT [21]. MARBERT [22] is another large masked and pre-trained language model focused on both MSA and DA. mBERT [7] is a multilingual extension of BERT, which is trained on Wikipedia monolingual corpora in 104 languages, including Arabic.

3.2. Conditional Random Fields (CRF) Layer

Strong dependencies between labels must be taken into account in sequence-labelling tasks such as aspect extraction. While BiLSTM or BiGRU can capture long-term context information, they cannot account for dependencies between tags when generating their outputs. A CRF layer addresses this issue and is therefore used when the output labels are highly interdependent. With a CRF layer, labelling decisions are modelled jointly rather than independently, with the goal of producing the best possible global sequence of labels for an input sequence.

Conceptually, conditional random fields are an undirected graphical model for sequence labelling. CRFs can also model a much richer set of label distributions because they can define a much larger set of features with arbitrary weights. Mathematically, CRF can be stated as follows. Following [1,23], we denote by $x = (x_1, \ldots, x_n)$ a given input sequence, and by $y = (y_1, \ldots, y_n)$ the corresponding tag sequence.

The following is the formula for the conditional probability of a label sequence $y$ given a sequence $x$ [24]:

$$p(y \mid x) = \frac{1}{Z(x)} \exp\left( \sum_{i=1}^{n} E_{i, y_i} + \sum_{i=2}^{n} T_{y_{i-1}, y_i} \right)$$

where each $x_i$ is the feature vector of the $i$th token, $E$ is a matrix of size $n \times k$ (with $k$ the number of tags), $T$ is a matrix of size $k \times k$, $E_i$ is the $i$th row in the matrix $E$, and $Z(x) = \sum_{y'} \exp\left( \sum_{i=1}^{n} E_{i, y'_i} + \sum_{i=2}^{n} T_{y'_{i-1}, y'_i} \right)$ is a normalization constant known as a partition function [25]. In the equation, $T_{y_{i-1}, y_i}$ is a transition score that represents the score of a transition from the tag $y_{i-1}$ to the tag $y_i$. The term $E_{i, y_i}$ is an emission score that refers to the score of the tag $y_i$ of the word $x_i$. To estimate the parameters $\theta$ of the model, we use maximum likelihood estimation (MLE). The Viterbi [26] algorithm is used by the model during testing to predict the best-scoring tag sequence.
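To make the roles of the emission scores, the transition scores, the partition function, and Viterbi decoding concrete, the following is a minimal pure-Python sketch of a linear-chain CRF. It is an illustration under stated assumptions rather than the implementation used in this work: the function names, the three-tag toy example, and the omission of start and end transitions are all choices made here for brevity.

```python
import math


def _logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def sequence_score(emissions, transitions, tags):
    """Unnormalised log-score of one tag sequence.

    emissions[i][t]   : score of tag t for token i (n x k)
    transitions[p][t] : score of moving from tag p to tag t (k x k)
    Start/end transitions are omitted (an assumption of this sketch).
    """
    score = emissions[0][tags[0]]
    for i in range(1, len(tags)):
        score += transitions[tags[i - 1]][tags[i]] + emissions[i][tags[i]]
    return score


def log_partition(emissions, transitions):
    """log Z(x), computed with the forward algorithm in O(n * k^2)."""
    k = len(transitions)
    alpha = list(emissions[0])
    for i in range(1, len(emissions)):
        alpha = [
            _logsumexp([alpha[p] + transitions[p][t] for p in range(k)])
            + emissions[i][t]
            for t in range(k)
        ]
    return _logsumexp(alpha)


def log_likelihood(emissions, transitions, tags):
    """log p(y | x); MLE training would maximise this over the data."""
    return sequence_score(emissions, transitions, tags) - log_partition(
        emissions, transitions
    )


def viterbi(emissions, transitions):
    """Return the best-scoring tag sequence and its unnormalised score."""
    k = len(transitions)
    best = list(emissions[0])
    backpointers = []
    for i in range(1, len(emissions)):
        step, pointers = [], []
        for t in range(k):
            prev = max(range(k), key=lambda p: best[p] + transitions[p][t])
            pointers.append(prev)
            step.append(best[prev] + transitions[prev][t] + emissions[i][t])
        best = step
        backpointers.append(pointers)
    last = max(range(k), key=lambda t: best[t])
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path, best[last]


if __name__ == "__main__":
    tag_names = ["O", "B", "I"]  # toy tag set, not the paper's label set
    emissions = [[0.1, 2.0, -1.0], [0.5, -0.5, 1.5], [1.0, 0.0, 0.2]]
    # The large negative O -> I score discourages an invalid transition.
    transitions = [[0.2, 0.1, -5.0], [0.0, -1.0, 0.8], [0.3, -0.2, 0.4]]
    path, score = viterbi(emissions, transitions)
    print([tag_names[t] for t in path], score)
    print(math.exp(log_likelihood(emissions, transitions, path)))
```

In a BERT-CRF model the emission matrix would come from the fully connected layer on top of the encoder, while the transition matrix is a learned parameter of the CRF layer.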

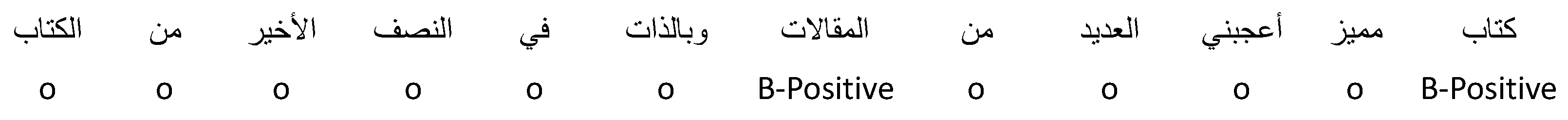

3.3. Proposed Fine-Grained Annotation of Arabic Dataset (HAAD)

The BIO scheme is widely employed for labelling words in an ATE task, where B stands for the beginning of an aspect, I stands for inside an aspect, and O stands for outside an aspect, i.e., just a regular word. In this study, we used a more fine-grained annotation which jointly takes into consideration the aspect term extraction and its polarity classification (B-Positive, B-Negative, B-Conflict, B-Neutral, I-Positive, I-Negative, I-Conflict, I-Neutral, O). The biggest benefit of CRF is that it automatically learns, during training, constraints on the output labels that adhere to the BIO labelling scheme, which helps to ensure that predicted label sequences are valid. The following are some examples of these constraints in the context of our ATE task [1]: The first predicted label may be ‘B-Positive’ or ‘O’, but not ‘I-Positive’. The ‘O I-Positive’ pattern is not valid because ‘I-Positive’ must be preceded by ‘B-Positive’ or another ‘I-Positive’. The ‘B-Negative I-Positive’ pattern is invalid because the polarities do not match: ‘I-Positive’ must be preceded by ‘B-Positive’ or another ‘I-Positive’.
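The constraints above can be written down explicitly. The sketch below enumerates the nine joint labels and checks whether a tag sequence respects the BIO rules; the helper names are hypothetical, and the explicit check is only an illustration, since in the proposed model the CRF layer learns these constraints from data rather than having them hard-coded.

```python
POLARITIES = ("Positive", "Negative", "Conflict", "Neutral")

# The nine joint labels: 'O' plus B-/I- variants of each polarity.
LABELS = ["O"] + [f"{prefix}-{pol}" for prefix in ("B", "I") for pol in POLARITIES]


def is_valid_transition(prev, curr):
    """Check one step of a joint BIO-polarity sequence.

    prev is None at the start of the sentence.
    """
    if not curr.startswith("I-"):
        # 'O' and any 'B-*' label may follow anything.
        return True
    if prev is None or prev == "O":
        # An aspect cannot start with an 'I-*' label.
        return False
    # 'I-X' must continue an aspect with the same polarity X.
    return prev.split("-", 1)[1] == curr.split("-", 1)[1]


def is_valid_sequence(tags):
    """Return True if every transition in the sequence is valid."""
    prev = None
    for tag in tags:
        if not is_valid_transition(prev, tag):
            return False
        prev = tag
    return True


if __name__ == "__main__":
    for example in (
        ["B-Positive", "I-Positive", "O"],
        ["I-Positive", "O"],
        ["O", "I-Positive"],
        ["B-Negative", "I-Positive"],
    ):
        print(example, is_valid_sequence(example))
```

A check of this kind can be used to count invalid sequences in model predictions, for instance when comparing a model with a CRF layer against one that labels each token independently.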

3.4. Proposed Joint Model

In this paper, we jointly solve the ATE and APD tasks. Figure 1 shows the proposed architecture. First, the input sentence is tokenized with the associated BERT tokenizer to ensure the text is split the same way as the pre-training corpus and to minimize out-of-vocabulary terms. BERT requires input sentences of the same length, with a maximum of 512 tokens. Thus, short sentences are padded with special [PAD] tokens to make all sentences equal in length. BERT outputs the hidden state, or encoding vector, corresponding to each token, including the special tokens. These encodings are then fed to a fully connected layer followed by a linear-chain CRF layer that jointly outputs the aspect and the polarity. The goal of the CRF is to model the dependency between successive labels and to ensure the validity of the label sequence.
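The input preparation and the reading of the joint output can be sketched as follows. This is a simplified illustration with hypothetical helper names: it works on whole words rather than the subword pieces a real BERT tokenizer would produce, it truncates over-long inputs (an assumption, since truncation is not discussed above), and it uses English example tokens for readability.

```python
def prepare_input(tokens, max_len):
    """Add [CLS]/[SEP], pad with [PAD], and build the attention mask."""
    if len(tokens) + 2 > max_len:
        tokens = tokens[: max_len - 2]  # truncation is an assumption here
    sequence = ["[CLS]"] + list(tokens) + ["[SEP]"]
    mask = [1] * len(sequence)          # 1 = real token, 0 = padding
    padding = max_len - len(sequence)
    return sequence + ["[PAD]"] * padding, mask + [0] * padding


def extract_aspects(tokens, tags):
    """Group joint BIO-polarity tags into (aspect term, polarity) pairs."""
    aspects, current, polarity = [], [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            if current:
                aspects.append((" ".join(current), polarity))
            current, polarity = [token], tag[2:]
        elif tag.startswith("I-") and current and tag[2:] == polarity:
            current.append(token)
        else:
            # 'O' or an 'I-*' tag that does not continue the open aspect.
            if current:
                aspects.append((" ".join(current), polarity))
            current, polarity = [], None
    if current:
        aspects.append((" ".join(current), polarity))
    return aspects


if __name__ == "__main__":
    words = ["The", "battery", "life", "is", "poor"]
    padded, mask = prepare_input(words, max_len=10)
    print(padded)
    print(mask)
    predicted = ["O", "B-Negative", "I-Negative", "O", "O"]
    print(extract_aspects(words, predicted))
```

Because each tag carries both the span boundary and the polarity, a single decoding pass yields the aspect terms together with their sentiment, which is what distinguishes the joint formulation from a two-stage pipeline.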

5. Conclusions

In this paper, we have proposed an Arabic-BERT-CRF model that combines contextual pre-trained Arabic-BERT as embedding layers and CRF as a classifier layer. The objective is to jointly solve the aspect term extraction (ATE) and aspect polarity detection (APD) tasks. The experiments were carried out using the HAAD dataset. We also enriched the dataset by manually adding polarity to each aspect. The experimental results show that the joint model Arabic-BERT-CRF outperforms the sequential model where the aspects are extracted first, then polarities are assigned.

The proposed approach was evaluated using the accuracy metric and F1 score to determine its effectiveness with different versions of BERT. The results, displayed in Table 3, demonstrate that adding a CRF layer on top of any BERT-based model can improve its performance. Additionally, the combination of Arabic base BERT and CRF (the proposed approach) achieved the highest accuracy score of 95.23% and F1 score of 47.63%, outperforming the current state-of-the-art models.
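Accuracy and F1 can differ widely on sequence-labelling data in which most tokens carry the ‘O’ label. The sketch below computes token-level accuracy and a macro-averaged F1 on a made-up example; the averaging scheme and the toy data are assumptions made here for illustration, not the evaluation protocol or the results of this paper.

```python
def accuracy(gold, pred):
    """Fraction of tokens whose predicted label matches the gold label."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold, pred):
    """Unweighted mean of per-label F1 scores (macro-averaging)."""
    labels = sorted(set(gold) | set(pred))
    scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denominator = 2 * tp + fp + fn
        scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy data: 18 'O' tokens and two aspect tokens, all predicted as 'O'.
    gold = ["O"] * 18 + ["B-Positive", "B-Negative"]
    pred = ["O"] * 20
    print(f"accuracy = {accuracy(gold, pred):.3f}")
    print(f"macro F1 = {macro_f1(gold, pred):.3f}")
```

In this toy case a tagger that never predicts an aspect still labels 90% of the tokens correctly, while its macro F1 is far lower because the two aspect labels each score zero.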

As a potential future step in this line of research, it would be interesting to explore the possibility of jointly addressing the other subtasks of Arabic aspect-based sentiment analysis. This could involve developing a model that is able to simultaneously perform multiple subtasks, such as identifying the aspect and determining the sentiment expressed towards it, rather than addressing each subtask separately. Such a model could potentially be more efficient and effective at performing the overall task of aspect-based sentiment analysis. Additionally, studying the feasibility of jointly solving the subtasks could provide insights into the relationships and dependencies between the subtasks, and potentially lead to the development of new approaches for addressing the overall task.