FPGA/AI-Powered Architecture for Anomaly Network Intrusion Detection Systems

Abstract

1. Introduction

- We propose an FPGA-based architecture for deploying neural network models in anomaly-based NIDSs. The goal of the architecture is to achieve both high performance and high detection accuracy.

- We implement two neural network models on the proposed architecture to verify its ability to detect different types of attacks: the Anomaly Detection Autoencoder (ADA) and the Artificial Neural Classification (ANC) model. The ADA model is built and evaluated with the NSL-KDD dataset, while the ANC model is built on the UNSW-NB15 and CIC-IDS2017 datasets. These three datasets are widely used in NIDS research in the literature.

- We evaluate the proposed architecture and the implemented models experimentally and compare them with related work in the literature. Our results can serve as a reference for other studies and help designers choose a suitable model for a given dataset or attack type.

2. Background and Related Work

2.1. Network Intrusion Detection Systems

2.2. Datasets

2.3. Related Work

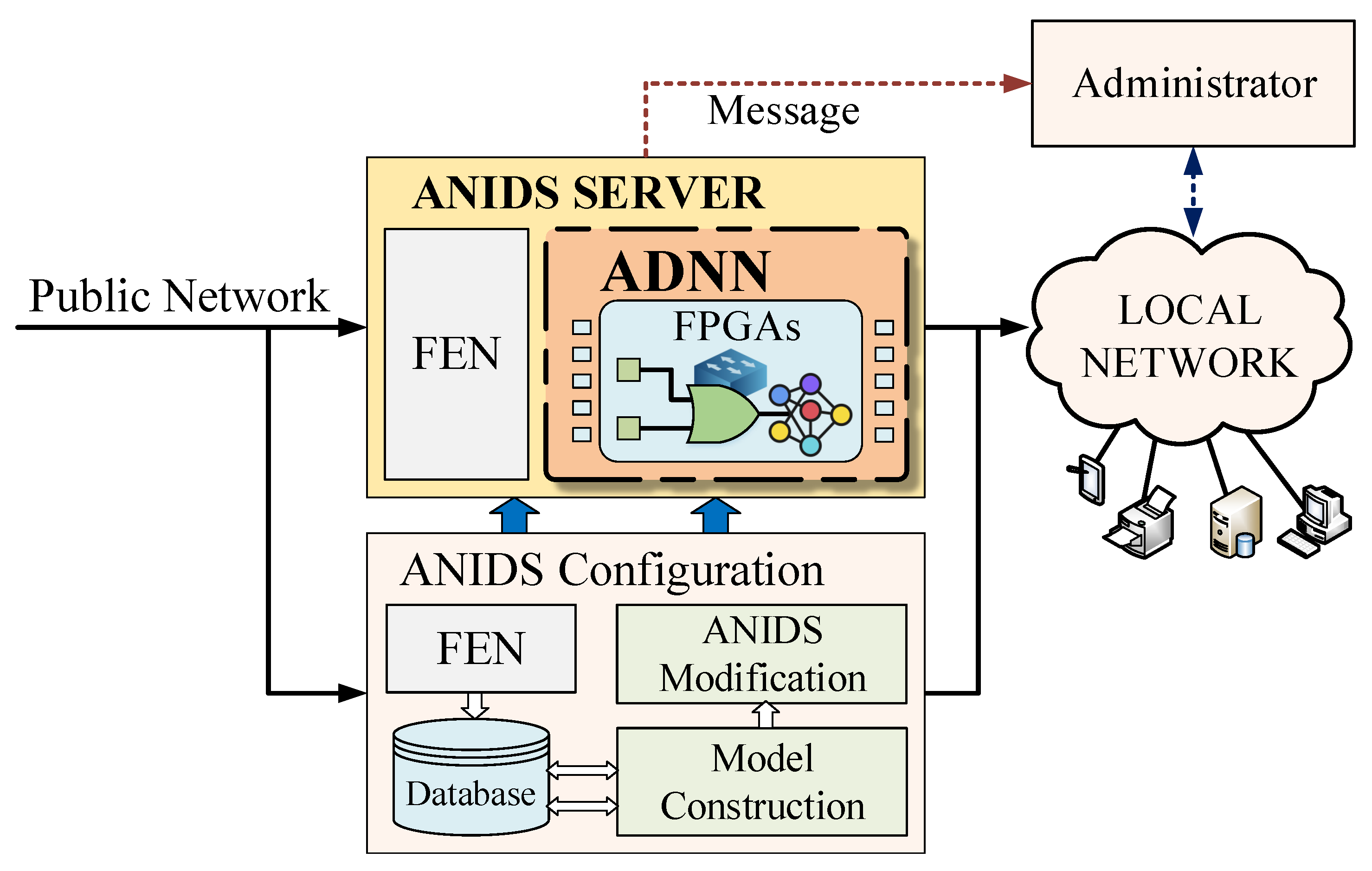

3. Proposed Architecture

3.1. The ANIDS Server

3.2. Anomaly Detection Neural Networks

4. System Implementation

4.1. The NetFPGA-SUME Platform

- Third-generation (Gen3) 8-lane PCI Express interface with a throughput of 8 Gbps per lane; the board's serial transceivers support line rates of up to 13.1 Gbps. Configuration data can therefore be transferred between the host processor (ANIDS Configuration) and the hardware computing cores in the ANIDS Server over a high-bandwidth connection.

- Four SFP+ ports, each supporting a 10 Gigabit Ethernet connection, for communicating with the public network and the secure local network.

- Three parallel banks of 72 Mbit QDRII+ SRAM running at up to 500 MHz, and two DDR3 SoDIMM modules running at 933 MHz (1866 MT/s).

- Expansion interfaces: a fully VITA-57-compliant FMC HPC connector with ten high-speed serial links, and a SAMTEC QTH-DP connector with eight high-speed serial links.
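The following sketch is a rough consistency check on these interface figures, written in Python. It assumes the standard 128b/130b line encoding of PCIe Gen3 and ignores packet-level protocol overhead such as TLP headers and flow control. The results are therefore upper bounds, not measured throughput. The constant and function names are illustrative only.

```python
# Back-of-the-envelope link budget for the NetFPGA-SUME interfaces listed above.
# Assumption: PCIe Gen3 uses 128b/130b line encoding; protocol overheads
# (TLP headers, flow control) are ignored, so real throughput is lower.

PCIE_LANES = 8
PCIE_GBPS_PER_LANE = 8.0            # Gen3 raw rate per lane
PCIE_ENCODING_EFFICIENCY = 128 / 130

SFP_PORTS = 4
SFP_GBPS_PER_PORT = 10.0

QDR_BANKS = 3
QDR_MBIT_PER_BANK = 72


def pcie_payload_gbps() -> float:
    """PCIe Gen3 x8 bandwidth after 128b/130b encoding, one direction."""
    return PCIE_LANES * PCIE_GBPS_PER_LANE * PCIE_ENCODING_EFFICIENCY


def aggregate_network_gbps() -> float:
    """Combined line rate of the four 10G SFP+ ports, one direction."""
    return SFP_PORTS * SFP_GBPS_PER_PORT


def qdr_total_mbytes() -> float:
    """Total QDRII+ SRAM capacity in megabytes (8 bits per byte)."""
    return QDR_BANKS * QDR_MBIT_PER_BANK / 8


if __name__ == "__main__":
    print(f"PCIe Gen3 x8 : {pcie_payload_gbps():.2f} Gbps")
    print(f"4 x 10G SFP+ : {aggregate_network_gbps():.0f} Gbps")
    print(f"QDRII+ SRAM  : {qdr_total_mbytes():.0f} MB")
```

Under these assumptions, the PCIe link provides about 63 Gbps per direction, which exceeds the 40 Gbps combined line rate of the four SFP+ ports. The three QDRII+ banks together hold 216 Mbit (27 MB).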

4.2. Datasets

- The NSL-KDD dataset consists of 41 features. We apply one-hot encoding to the categorical features, which increases the data dimensionality to 122. Since all features are essential for the training and inference processes, we use all of them when building and executing the related neural networks.

- The UNSW-NB15 dataset consists of 47 features; after encoding, the number of features increases to 196. However, some features are removed because they are duplicated or unrelated to intrusion detection. Hence, like other systems in the literature, we use only 40 features to build our neural network models.

- The CIC-IDS2017 dataset consists of flow records extracted from raw network packets by the CICFlowMeter tool [38], with 84 features. After the numerical data are cleaned, 68 features remain. Because these features are computed by CICFlowMeter, they are already in numerical form, so no further preprocessing is required.
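The sketch below shows how one-hot encoding can expand the 41 NSL-KDD features to 122 dimensions. It is an assumption, not a statement from the paper, that the expansion comes from the three symbolic features protocol_type, service and flag. The category counts of 3, 70 and 11 are the values commonly reported for the NSL-KDD training set. The paper itself states only the 41 and 122 totals.

```python
from typing import Dict, List

NSL_KDD_TOTAL_FEATURES = 41

# Assumed category counts of the symbolic NSL-KDD features (training set).
SYMBOLIC_CATEGORY_COUNTS: Dict[str, int] = {
    "protocol_type": 3,   # e.g. tcp, udp, icmp
    "service": 70,
    "flag": 11,
}


def encoded_dimension(total_features: int, symbolic: Dict[str, int]) -> int:
    """Numeric features keep one column each; every symbolic feature is
    replaced by one binary column per category."""
    numeric = total_features - len(symbolic)
    return numeric + sum(symbolic.values())


def one_hot(value: str, categories: List[str]) -> List[int]:
    """Encode one categorical value as a 0/1 indicator vector."""
    return [1 if value == c else 0 for c in categories]


if __name__ == "__main__":
    print(encoded_dimension(NSL_KDD_TOTAL_FEATURES, SYMBOLIC_CATEGORY_COUNTS))  # 122
    print(one_hot("udp", ["tcp", "udp", "icmp"]))                                # [0, 1, 0]
```

The 38 numeric features plus 3 + 70 + 11 indicator columns give 38 + 84 = 122 inputs. This matches the input width of the ADA model.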

4.3. ADNN Implementation on NetFPGA-SUME

- Input Arbiter: receives network packets from the public network through the four input ports (10G Rx).

- Output Queue: forwards legitimate packets to the corresponding output port and removes from the system any packets that the ADNN classifies as illegitimate.

- Host Monitor: communicates with the host processor to receive updated neural network models and to send statistics to administrators via the host processor.

- Input Parser: acts as the input layer of the deployed neural network models. This engine receives network packets and extracts the required features from them so that the processing elements (PEs) can execute the neural network models, as explained in the previous section. After extraction and normalization, the features are stored in the Memory engine (a FIFO) for processing by the PEs in the Neural Layer Core engine.

- Neural Layer Core: implements the architecture shown in Figure 3 and is the heart of the system. In this implementation, two neural network models, ADA and ANC, are deployed and processed by the PEs, as explained in the previous section. The next two subsections describe the two models in detail.

- Memory: a FIFO that stores the input packets received from the Input Parser while the neural networks process them. These packets are then forwarded to the Output Queue, which delivers them to the corresponding output ports or removes them from the system according to the decision of the Classification Result engine.

- Classification Result: receives the outputs of the neural network models and determines whether a network packet and its data stream are normal or abnormal.
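The packet flow through these engines can be modeled in software as a minimal sketch. The model is sequential, whereas the hardware engines operate concurrently as a pipeline. The text says only that features are "normalized", so the min-max scaling here is an assumption. The names `Packet`, `min_max` and `run_pipeline` are hypothetical, and the classifier is passed in as a plain function standing in for the Neural Layer Core.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Packet:
    features: List[float]  # hypothetical: raw feature values taken from the headers
    out_port: int          # output port the packet would be delivered to


def min_max(values: Sequence[float], lo: Sequence[float], hi: Sequence[float]) -> List[float]:
    """Assumed normalization: scale each feature into [0, 1]; constant features map to 0."""
    return [(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(values, lo, hi)]


def run_pipeline(
    packets: Sequence[Packet],
    is_anomaly: Callable[[List[float]], bool],
    lo: Sequence[float],
    hi: Sequence[float],
) -> Tuple[List[Packet], List[Packet]]:
    fifo: deque = deque()
    # Input Parser: extract and normalize features, then store them with the packet (Memory FIFO).
    for pkt in packets:
        fifo.append((pkt, min_max(pkt.features, lo, hi)))

    forwarded: List[Packet] = []
    dropped: List[Packet] = []
    # Neural Layer Core + Classification Result decide; Output Queue forwards or drops.
    while fifo:
        pkt, feats = fifo.popleft()
        if is_anomaly(feats):
            dropped.append(pkt)
        else:
            forwarded.append(pkt)
    return forwarded, dropped


if __name__ == "__main__":
    pkts = [Packet([1.0], out_port=0), Packet([9.0], out_port=1)]
    ok, bad = run_pipeline(pkts, lambda f: f[0] > 0.5, lo=[0.0], hi=[10.0])
    print(len(ok), len(bad))  # 1 1
```

Keeping the packet and its features together in the FIFO mirrors the description above: the decision for a packet is applied to that same packet when it reaches the Output Queue.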

4.4. Anomaly Detection Autoencoder (ADA)

4.5. Artificial Neural Classification (ANC)

5. Experiments

5.1. Experimental Setup

- First, the proposed ANIDS is installed on the NetFPGA-SUME board, hosted by a computer with an Intel Core i5 processor and 8 GB of RAM running Ubuntu 16.04. The system is connected to a high-speed switch and assigned the IP address 172.28.25.173.

- Second, a computer with an Intel Core i5 processor and 8 GB of RAM running Ubuntu 12.04 hosts a NetFPGA-10G board running OSNT, which is used to measure the bandwidth of the proposed ADNN system. This computer is assigned the IP address 172.28.25.184.

- Third, a computer with the same configuration hosts an Intel 10G network card and runs a testing agent (the tcpreplay tool [40]); its IP address is 172.28.25.172. This computer is used to measure the accuracy of the proposed system.

5.2. Synthesis Results

5.3. Throughput and Bandwidth Analysis

5.4. Accuracy Analysis

5.4.1. Evaluation Metrics

5.4.2. ADA Approach with the NSL-KDD Dataset

5.4.3. ANC Approach with the UNSW-NB15 Dataset

5.4.4. ANC Approach with the CIC-IDS2017 Dataset

5.5. State-of-the-Art Comparison

Comparison of the proposed ANC with related work on the UNSW-NB15 dataset.

| System | Accuracy (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| Our proposed ANC | 87.49 | 75.09 | 96.95 |
| DNN by Vinayakumar et al. [29] | 76.10 | 95.10 | 68.40 |
| 2-Stage Ensemble by Tama et al. [18] | 91.27 | 91.60 | 91.30 |
| MSCNN-LSTM-AE [49] | 89.00 | 88.00 | 89.00 |
| SVM by Jing et al. [50] | 75.77 | 50.90 | 84.74 |
| LogAE-XGBoost by Xu et al. [48] | 95.11 | 95.49 | 97.43 |

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Vailshery, L. Number of Internet of Things (IoT) Connected Devices Worldwide from 2019 to 2030. Available online: https://www.statista.com/statistics/1183457/iot-connected-devices-worldwide/ (accessed on 27 November 2022).

- Ahmed, M.; Naser Mahmood, A.; Hu, J. A survey of network anomaly detection techniques. J. Netw. Comput. Appl. 2016, 60, 19–31.

- García-Teodoro, P.; Díaz-Verdejo, J.; Maciá-Fernández, G.; Vázquez, E. Anomaly-based network intrusion detection: Techniques, systems and challenges. Comput. Secur. 2009, 28, 18–28.

- Guo, K.; Zeng, S.; Yu, J.; Wang, Y.; Yang, H. [DL] A Survey of FPGA-Based Neural Network Inference Accelerators. ACM Trans. Reconfigurable Technol. Syst. 2019, 12, 1–26.

- Mittal, S. A survey of FPGA-based accelerators for convolutional neural networks. Neural Comput. Appl. 2020, 32, 1109–1139.

- Axelsson, S. Intrusion Detection Systems: A Survey and Taxonomy. 2000. Available online: http://www.cse.msu.edu/~cse960/Papers/security/axelsson00intrusion.pdf (accessed on 27 November 2022).

- Ahmad, Z.; Shahid Khan, A.; Wai Shiang, C.; Abdullah, J.; Ahmad, F. Network intrusion detection system: A systematic study of machine learning and deep learning approaches. Trans. Emerg. Telecommun. Technol. (ETT) 2021, 32, e4150.

- Rai, K.; Devi, M.S.; Guleria, A. Decision tree based algorithm for intrusion detection. Int. J. Adv. Netw. Appl. 2016, 7, 2828.

- Farnaaz, N.; Jabbar, M. Random forest modeling for network intrusion detection system. Procedia Comput. Sci. 2016, 89, 213–217.

- Dhaliwal, S.S.; Nahid, A.A.; Abbas, R. Effective intrusion detection system using XGBoost. Information 2018, 9, 149.

- Karatas, G.; Demir, O.; Sahingoz, O.K. Increasing the performance of machine learning-based IDSs on an imbalanced and up-to-date dataset. IEEE Access 2020, 8, 32150–32162.

- Yan, B.; Han, G. Effective feature extraction via stacked sparse autoencoder to improve intrusion detection system. IEEE Access 2018, 6, 41238–41248.

- Ghanem, K.; Aparicio-Navarro, F.J.; Kyriakopoulos, K.G.; Lambotharan, S.; Chambers, J.A. Support vector machine for network intrusion and cyber-attack detection. In Proceedings of the 2017 Sensor Signal Processing for Defence Conference (SSPD), London, UK, 6–7 December 2017; pp. 1–5.

- Li, G.; Niu, P.; Duan, X.; Zhang, X. Fast learning network: A novel artificial neural network with a fast learning speed. Neural Comput. Appl. 2014, 24, 1683–1695.

- Ali, M.H.; Al Mohammed, B.A.D.; Ismail, A.; Zolkipli, M.F. A new intrusion detection system based on fast learning network and particle swarm optimization. IEEE Access 2018, 6, 20255–20261.

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Shen, Y.; Zheng, K.; Wu, C.; Zhang, M.; Niu, X.; Yang, Y. An ensemble method based on selection using bat algorithm for intrusion detection. Comput. J. 2018, 61, 526–538.

- Gao, X.; Shan, C.; Hu, C.; Niu, Z.; Liu, Z. An adaptive ensemble machine learning model for intrusion detection. IEEE Access 2019, 7, 82512–82521.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.

- Mittal, M.; Iwendi, C.; Khan, S.; Rehman Javed, A. Analysis of security and energy efficiency for shortest route discovery in low-energy adaptive clustering hierarchy protocol using Levenberg-Marquardt neural network and gated recurrent unit for intrusion detection system. Trans. Emerg. Telecommun. Technol. (ETT) 2021, 32, e3997.

- Yin, C.; Zhu, Y.; Fei, J.; He, X. A deep learning approach for intrusion detection using recurrent neural networks. IEEE Access 2017, 5, 21954–21961.

- Xu, C.; Shen, J.; Du, X.; Zhang, F. An intrusion detection system using a deep neural network with gated recurrent units. IEEE Access 2018, 6, 48697–48707.

- Naseer, S.; Saleem, Y.; Khalid, S.; Bashir, M.K.; Han, J.; Iqbal, M.M.; Han, K. Enhanced network anomaly detection based on deep neural networks. IEEE Access 2018, 6, 48231–48246.

- Malaiya, R.K.; Kwon, D.; Kim, J.; Suh, S.C.; Kim, H.; Kim, I. An empirical evaluation of deep learning for network anomaly detection. In Proceedings of the 2018 International Conference on Computing, Networking and Communications (ICNC), Maui, HI, USA, 5–8 March 2018; pp. 893–898.

- Yang, Y.; Zheng, K.; Wu, B.; Yang, Y.; Wang, X. Network intrusion detection based on supervised adversarial variational auto-encoder with regularization. IEEE Access 2020, 8, 42169–42184.

- Zhang, X.; Chen, J.; Zhou, Y.; Han, L.; Lin, J. A multiple-layer representation learning model for network-based attack detection. IEEE Access 2019, 7, 91992–92008.

- Yu, Y.; Bian, N. An Intrusion Detection Method Using Few-Shot Learning. IEEE Access 2020, 8, 49730–49740.

- Vinayakumar, R.; Alazab, M.; Soman, K.; Poornachandran, P.; Al-Nemrat, A.; Venkatraman, S. Deep learning approach for intelligent intrusion detection system. IEEE Access 2019, 7, 41525–41550.

- Hoque, N.; Kashyap, H.; Bhattacharyya, D. Real-time DDoS attack detection using FPGA. Comput. Commun. 2017, 110, 48–58.

- P N, S.; KT, M. Neural Network based ECG Anomaly Detection on FPGA. Asian J. Converg. Technol. (AJCT) 2019, 5, 1–4. Available online: https://asianssr.org/index.php/ajct/article/view/883 (accessed on 27 November 2022).

- Tran, C.; Vo, T.N.; Thinh, T.N. HA-IDS: A heterogeneous anomaly-based intrusion detection system. In Proceedings of the 2017 4th NAFOSTED Conference on Information and Computer Science, Hanoi, Vietnam, 24–25 November 2017; pp. 156–161.

- Ngo, D.M.; Tran-Thanh, B.; Dang, T.; Tran, T.; Thinh, T.N.; Pham-Quoc, C. High-Throughput Machine Learning Approaches for Network Attacks Detection on FPGA. In Proceedings of the Context-Aware Systems and Applications, and Nature of Computation and Communication, My Tho City, Vietnam, 28–29 November 2019; Springer International Publishing: Cham, Switzerland, 2019; pp. 47–60.

- Ngo, D.M.; Pham-Quoc, C.; Thinh, T.N. Heterogeneous Hardware-based Network Intrusion Detection System with Multiple Approaches for SDN. Mob. Netw. Appl. 2020, 25, 1178–1192.

- Zilberman, N.; Audzevich, Y.; Covington, G.; Moore, A. NetFPGA SUME: Toward 100 Gbps as research commodity. IEEE Micro 2014, 34, 32–41.

- NetFPGA. NetFPGA SUME. Available online: https://netfpga.org/NetFPGA-SUME.html (accessed on 30 May 2022).

- Digilent. NetFPGA-SUME Virtex-7 FPGA Development Board. Available online: https://digilent.com/shop/netfpga-sume-virtex-7-fpga-development-board/ (accessed on 30 May 2022).

- Lashkari, A.H.; Seo, A.; Gil, G.D.; Ghorbani, A. CIC-AB: An Online Ad Blocker for Browsers. In Proceedings of the 2017 International Carnahan Conference on Security Technology (ICCST), Madrid, Spain, 23–26 October 2017; pp. 1–7.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 27 November 2022).

- Home, T. Tcpreplay-Pcap Editing and Replaying Utilities. Available online: https://tcpreplay.appneta.com/ (accessed on 30 May 2022).

- Xilinx, A. Get Started with Vivado. Available online: https://www.xilinx.com/developer/products/vivado.html (accessed on 30 May 2022).

- Xilinx, A. Vivado Overview. Available online: https://www.xilinx.com/products/design-tools/vivado.html (accessed on 30 May 2022).

- netfpga-sume github. NetFPGA-SUME Vivado Reference Operating System Setup Guide. Available online: https://github.com/NetFPGA/NetFPGA-SUME-public/wiki/Reference-Operating-System-Setup-Guide (accessed on 30 May 2022).

- Hossin, M.; Sulaiman, M.N. A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process 2015, 5, 1.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation Forest. In Proceedings of the 2008 Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

- Zhang, G.; Wang, X.; Li, R.; Song, Y.; He, J.; Lai, J. Network intrusion detection based on conditional Wasserstein generative adversarial network and cost-sensitive stacked autoencoder. IEEE Access 2020, 8, 190431–190447.

- Al-Qatf, M.; Lasheng, Y.; Al-Habib, M.; Al-Sabahi, K. Deep learning approach combining sparse autoencoder with SVM for network intrusion detection. IEEE Access 2018, 6, 52843–52856.

- Xu, W.; Fan, Y. Intrusion Detection Systems Based on Logarithmic Autoencoder and XGBoost. Secur. Commun. Netw. 2022, 2022, 1–8.

- Singh, A.; Jang-Jaccard, J. Autoencoder-based Unsupervised Intrusion Detection using Multi-Scale Convolutional Recurrent Networks. arXiv 2022, arXiv:2204.03779.

- Jing, D.; Chen, H.B. SVM Based Network Intrusion Detection for the UNSW-NB15 Dataset. In Proceedings of the 2019 IEEE 13th International Conference on ASIC (ASICON), Chongqing, China, 29 October–1 November 2019; pp. 1–4.

- Andresini, G.; Appice, A.; Mauro, N.D.; Loglisci, C.; Malerba, D. Multi-Channel Deep Feature Learning for Intrusion Detection. IEEE Access 2020, 8, 53346–53359.

- Azizan, A.H.; Mostafa, S.A.; Mustapha, A.; Foozy, C.F.M.; Wahab, M.H.A.; Mohammed, M.A.; Khalaf, B.A. A machine learning approach for improving the performance of network intrusion detection systems. Ann. Emerg. Technol. Comput. (AETiC) 2021, 5, 201–208.

- Zhang, H.; Li, J.L.; Liu, X.M.; Dong, C. Multi-dimensional feature fusion and stacking ensemble mechanism for network intrusion detection. Future Gener. Comput. Syst. 2021, 122, 130–143.

| Feature type | Meaning |
|---|---|
| src_ip, dst_ip | Source and destination IP addresses |
| src_port, dst_port | Source and destination port numbers |
| pkt_count | Number of packets in an interval |
| pkt_length | Length of the packets |
| flag | Flag value in the header field of a packet |
| protocol | Network protocol, for example TCP, ICMP, or UDP |
| appname | Name of the application the packets come from |

NSL-KDD dataset: number of records per label in the training and testing sets.

| Label | Training | Testing |
|---|---|---|
| Normal | 67,343 | 9711 |
| DoS | 45,927 | 7458 |
| Probe | 11,656 | 2421 |
| U2R | 52 | 200 |
| R2L | 995 | 2754 |
| Total | 125,973 | 22,544 |

UNSW-NB15 dataset: number of records per label in the training and testing sets.

| Label | Training | Testing |
|---|---|---|
| Normal | 56,000 | 37,000 |
| Analysis | 2000 | 677 |
| Backdoor | 1746 | 583 |
| DoS | 12,264 | 4089 |
| Exploits | 33,393 | 11,032 |
| Fuzzers | 18,184 | 5680 |
| Generic | 40,000 | 18,071 |
| Reconnaissance | 10,491 | 3096 |
| Shellcode | 1133 | 378 |
| Worms | 130 | 44 |
| Total | 175,341 | 80,650 |

CIC-IDS2017 dataset: number of records per label in the training and testing sets.

| Label | Training | Testing |
|---|---|---|
| Benign | 1,657,165 | 414,493 |
| Bot | 1549 | 399 |
| Brute Force | 7305 | 1845 |
| DoS | 257,341 | 64,292 |
| Infiltration | 27 | 9 |
| PortScan | 72,719 | 17,975 |
| Web Attack | 1703 | 440 |
| Total | 1,997,809 | 499,453 |

ADA autoencoder configurations evaluated on the NSL-KDD dataset.

| Model (layer sizes) | Max accuracy | Threshold |
|---|---|---|
| 122 × 64 × 32 × 16 × 32 × 64 × 122 | 86.26% | 0.0005 |
| 122 × 64 × 122 | 90.87% | 0.0010 |
| 122 × 32 × 122 | 87.48% | 0.0005 |
| 122 × 8 × 122 | 86.98% | 0.0005 |

Hyperparameters of the selected ADA model.

| Parameter | Value |
|---|---|
| Number of input nodes | 122 |
| Number of hidden nodes | 64 |
| Number of output nodes | 122 |
| Batch size | 5 |
| Epochs | 20 |
| Hidden-layer activation function | ReLU |
| Output-layer activation function | Sigmoid |
| Loss function | Mean squared error |
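The following sketch shows inference in an ADA-style autoencoder with the hyperparameters above: 122 inputs, 64 ReLU hidden units and 122 sigmoid outputs. The threshold of 0.0010 is the value listed for the 122 × 64 × 122 configuration. It is an assumption that this threshold applies to the per-record mean squared reconstruction error, which is the same quantity as the MSE training loss. The weights are random placeholders rather than a trained model, and training (batch size 5, 20 epochs) is not shown.

```python
import numpy as np

INPUT_DIM = 122     # one-hot-encoded NSL-KDD features
HIDDEN_DIM = 64     # hidden layer of the 122 x 64 x 122 model
THRESHOLD = 0.0010  # threshold listed for the 122 x 64 x 122 model


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


class TinyAutoencoder:
    """122 -> 64 -> 122 autoencoder with a ReLU hidden layer and sigmoid output."""

    def __init__(self, rng: np.random.Generator) -> None:
        # Placeholder random weights; a deployed model would load trained parameters.
        self.w1 = rng.normal(0.0, 0.1, (INPUT_DIM, HIDDEN_DIM))
        self.b1 = np.zeros(HIDDEN_DIM)
        self.w2 = rng.normal(0.0, 0.1, (HIDDEN_DIM, INPUT_DIM))
        self.b2 = np.zeros(INPUT_DIM)

    def reconstruct(self, x: np.ndarray) -> np.ndarray:
        hidden = relu(x @ self.w1 + self.b1)
        return sigmoid(hidden @ self.w2 + self.b2)

    def reconstruction_error(self, x: np.ndarray) -> np.ndarray:
        # Per-record mean squared error between input and reconstruction.
        return np.mean((x - self.reconstruct(x)) ** 2, axis=-1)

    def is_anomaly(self, x: np.ndarray, threshold: float = THRESHOLD) -> np.ndarray:
        # Records that the model reconstructs poorly are flagged as anomalous.
        return self.reconstruction_error(x) > threshold


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    model = TinyAutoencoder(rng)
    batch = rng.random((5, INPUT_DIM))  # 5 records with values in [0, 1), like normalized inputs
    print(model.reconstruction_error(batch).shape)  # (5,)
    print(model.is_anomaly(batch).dtype)            # bool
```

Because the autoencoder is trained on normal traffic, a trained model reconstructs normal records with small error. Any record whose error exceeds the threshold is reported as an anomaly.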

ANC network configurations evaluated on the UNSW-NB15 dataset.

| Model (layer sizes) | Max accuracy | Loss |
|---|---|---|
| 40 × 8 × 48 × 8 × 32 × 8 × 16 × 8 × 1 | 0.851 | 0.1025 |
| 40 × 8 × 40 × 8 × 40 × 8 × 1 | 0.8749 | 0.0907 |
| 40 × 8 × 32 × 8 × 1 | 0.845 | 0.0960 |

Hyperparameters of the selected ANC model.

| Parameter | Value |
|---|---|
| Number of hidden layers | 2 |
| Nodes in the first hidden layer | 40 |
| Nodes in the second hidden layer | 40 |
| Nodes in the output layer | 1 |
| Batch size | 5 |
| Epochs | 20 |
| Hidden-layer activation function | ReLU |
| Output-layer activation function | Sigmoid |
| Loss function | Mean squared error |
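The following sketch shows the forward pass of an ANC-style classifier that follows the hyperparameter table: two ReLU hidden layers of 40 nodes and one sigmoid output node. The 40 inputs are assumed to be the 40 selected UNSW-NB15 features described in Section 4.2. The 0.5 decision cutoff is a hypothetical choice that the text does not state. The weights are random placeholders.

```python
import numpy as np

LAYER_SIZES = [40, 40, 40, 1]  # 40 inputs, two hidden layers of 40, one output node


def init_params(rng: np.random.Generator, sizes=LAYER_SIZES):
    """Placeholder random weights and zero biases for each dense layer."""
    return [
        (rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]


def forward(params, x: np.ndarray) -> np.ndarray:
    """ReLU on the hidden layers, sigmoid on the single output node."""
    last = len(params) - 1
    for i, (w, b) in enumerate(params):
        z = x @ w + b
        x = 1.0 / (1.0 + np.exp(-z)) if i == last else np.maximum(z, 0.0)
    return x[:, 0]  # one score in (0, 1) per record


def classify(params, x: np.ndarray, cutoff: float = 0.5) -> np.ndarray:
    """True = anomaly. The 0.5 cutoff is an assumption, not a reported value."""
    return forward(params, x) >= cutoff


def count_params(params) -> int:
    """Number of weights and biases the PEs would have to store."""
    return sum(w.size + b.size for w, b in params)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    params = init_params(rng)
    records = rng.random((8, LAYER_SIZES[0]))
    print(classify(params, records).shape)  # (8,)
    print(count_params(params))             # 3321
```

The parameter count (40·40 + 40 + 40·40 + 40 + 40·1 + 1 = 3321) gives a rough idea of how much weight storage this configuration needs on chip.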

Synthesis results of the ADA and ANC models on the NetFPGA-SUME board.

| Resource | ADA amount | ADA utilization (%) | ANC amount | ANC utilization (%) | Available |
|---|---|---|---|---|---|
| LUT | 90,859 | 20.97 | 92,661 | 21.39 | 433,200 |
| LUTRAM | 5185 | 2.98 | 8106 | 4.65 | 174,200 |
| FF | 131,341 | 15.16 | 131,592 | 15.19 | 866,400 |
| BRAM | 285.5 | 19.42 | 214.5 | 14.59 | 1470 |
| DSP | 245 | 6.81 | 132 | 3.67 | 3600 |
| Power (W) | 11.27 | | 10.32 | | |
| Max frequency (MHz) | 206.7 | | 204.2 | | |
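The utilization percentages in the synthesis table are simply the used amount divided by the available amount. The short sketch below recomputes them from the table's own numbers, rounded to two decimals. The dictionary names are illustrative.

```python
from typing import Dict

AVAILABLE: Dict[str, float] = {
    "LUT": 433_200, "LUTRAM": 174_200, "FF": 866_400, "BRAM": 1470, "DSP": 3600,
}
USED: Dict[str, Dict[str, float]] = {
    "ADA": {"LUT": 90_859, "LUTRAM": 5185, "FF": 131_341, "BRAM": 285.5, "DSP": 245},
    "ANC": {"LUT": 92_661, "LUTRAM": 8106, "FF": 131_592, "BRAM": 214.5, "DSP": 132},
}


def utilization(used: Dict[str, float], available: Dict[str, float]) -> Dict[str, float]:
    """Percentage of each FPGA resource consumed, rounded to two decimals."""
    return {name: round(100.0 * used[name] / available[name], 2) for name in used}


if __name__ == "__main__":
    for model, used in USED.items():
        print(model, utilization(used, AVAILABLE))
```

Both models leave most of the device unused; the largest share is LUTs, at about 21% for either model.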

Confusion matrix of ADA on the NSL-KDD test set.

| Actual label | Total packets | Predicted normal | Predicted anomaly |
|---|---|---|---|
| Normal | 9711 | 8277 (TN) | 1434 (FP) |
| Anomaly | 12,833 | 624 (FN) | 12,209 (TP) |

Confusion matrix of ANC on the UNSW-NB15 test set.

| Actual label | Total packets | Predicted normal | Predicted anomaly |
|---|---|---|---|
| Normal | 37,000 | 27,785 (TN) | 9215 (FP) |
| Anomaly | 43,650 | 875 (FN) | 42,775 (TP) |

Confusion matrix of ANC on the CIC-IDS2017 test set.

| Actual label | Total packets | Predicted normal | Predicted anomaly |
|---|---|---|---|
| Normal | 414,493 | 410,866 (TN) | 3627 (FP) |
| Anomaly | 84,960 | 5270 (FN) | 79,690 (TP) |
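Accuracy, precision and recall follow directly from the confusion-matrix counts. The sketch below computes them from the three matrices above. By default it treats Anomaly as the positive class. It also allows Normal to be the positive class, because precision and recall change with that choice while accuracy does not. The function name and the `positive` parameter are illustrative.

```python
from typing import Dict

# Counts (TN, FP, FN, TP) copied from the confusion matrices above,
# with Anomaly as the positive class.
CONFUSION = {
    "ADA / NSL-KDD": (8277, 1434, 624, 12209),
    "ANC / UNSW-NB15": (27785, 9215, 875, 42775),
    "ANC / CIC-IDS2017": (410866, 3627, 5270, 79690),
}


def metrics(tn: int, fp: int, fn: int, tp: int, positive: str = "anomaly") -> Dict[str, float]:
    """Accuracy, precision and recall from binary confusion-matrix counts.

    Counts are given with Anomaly as positive; positive="normal" recomputes
    precision and recall with Normal as the positive class instead.
    """
    if positive == "normal":
        # Normal as positive: TP' = TN, FP' = FN, FN' = FP, TN' = TP.
        tn, fp, fn, tp = tp, fn, fp, tn
    total = tn + fp + fn + tp
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }


if __name__ == "__main__":
    for name, counts in CONFUSION.items():
        for cls in ("anomaly", "normal"):
            m = metrics(*counts, positive=cls)
            print(f"{name:18s} positive={cls:7s} "
                  + " ".join(f"{k}={v:.4f}" for k, v in m.items()))
```

The accuracies (0.9087, 0.8749 and 0.9822) do not depend on the class convention. Precision and recall do. With Normal as the positive class, the NSL-KDD counts give 85.23% recall and 92.99% precision, the two figures reported for ADA. With Anomaly as the positive class, they give about 89.49% precision and 95.14% recall. Precision and recall comparisons are therefore only meaningful when every system uses the same positive class.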

Comparison of the proposed ADA with related work on the NSL-KDD dataset.

| System | Accuracy (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| Our proposed ADA | 90.87 | 85.23 | 92.99 |
| CWGAN-CSSAE by Zhang et al. [46] | 90.34 | 96.74 | 85.93 |
| DNN by Vinayakumar et al. [29] | 80.10 | 69.2 | 96.9 |
| Ensemble Voting MultiTree [18] | 85.2 | 86.4 | 84.23 |
| SAE-SVM by Al-Qatf et al. [47] | 84.96 | 96.24 | 76.57 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pham-Quoc, C.; Bao, T.H.Q.; Thinh, T.N. FPGA/AI-Powered Architecture for Anomaly Network Intrusion Detection Systems. Electronics 2023, 12, 668. https://doi.org/10.3390/electronics12030668


Pham-Quoc, Cuong, Tran Hoang Quoc Bao, and Tran Ngoc Thinh. 2023. "FPGA/AI-Powered Architecture for Anomaly Network Intrusion Detection Systems" Electronics 12, no. 3: 668. https://doi.org/10.3390/electronics12030668