1. Introduction

A face recognition algorithm uses machine learning techniques to detect and identify human faces by analyzing patterns in visual data [1]. One of the key advantages of facial recognition systems is that they allow users to be passively authenticated [2]; that is, users can prove their authenticity simply by being in the room, without having to interact with the system at all. Video surveillance, access control, forensics, and social media are some of the many applications in which facial recognition systems are used.

As explained by [3], facial recognition systems have six stages. Preprocessing is the first stage: faces are detected in the visual input and the area of interest is aligned. In the second stage, face features are extracted from the preprocessed input. In the final stage, the extracted face features are compared with the features in the database to produce matching results. The outcome is either an identification, based on the facial features stored in memory, or a verification, based on the matching results.

Like all biometric systems, the face recognition authentication system has a number of advantages and limitations. Biometric authentication is more secure than conventional password authentication [4]: biometric traits cannot be forged and registration is required, which prevents false authentication [5], and each person’s data is unique [6]. An important drawback of biometric authentication systems is that they are susceptible to spoofing attacks, as well as to attacks on deep learning and machine learning systems. In spoofing attacks, attackers present false biometric information in order to gain credibility [7]. Synthesis is considered a spoofing attack by [8]. According to [9,10], replay attacks can also be described as spoofing attacks. Machine learning and deep learning models can be attacked in a variety of ways, including adversarial attacks [4] and poisoning attacks [11].

This paper proposes a method for preventing spoofing attacks on face recognition systems by integrating an image encryption model into the recognition process. The image encryption model encrypts the preprocessed face images that are used to train and test a face recognition model based on the Linear Discriminant Analysis (LDA) algorithm. The extracted features enrolled in the feature database are encrypted, meaning that a face image must be encrypted with the correct image encryption key for the classifier to correctly identify or verify the submitted input. This minimizes the effectiveness of spoofing attacks: besides illegitimately obtaining a copy of an authenticated individual’s face image and submitting it to the system, an attacker must also encrypt the face image with the correct key.

For an image encryption model to provide this extra layer of security, it must offer high encryption performance and be resistant to brute-force attacks. The image encryption model used in the recognition process is based on the XOR operation and a special type of Cellular Automata (CA) called Outer Totalistic Cellular Automata (OTCA). XOR is applied to pixel bits for pixel substitution, while CA is used for image scrambling. Pixel substitution changes the original pixel values using mathematical operations; applying the reverse operation recovers the original values [12]. Image scrambling shuffles the original pixel locations in an image, breaking the high correlation between pixels that were originally adjacent [13]. CA can generate complex structures from simple ones [14], making it an excellent choice for image scrambling applications [15].

Most studies on facial recognition systems focus on increasing the recognition accuracy of a model on various datasets; however, not enough work has addressed the weaknesses of such systems, including spoofing. This paper proposes a solution to the spoofing issue in facial recognition by adding an image encryption step to the recognition process. The main goal is to build a facial recognition system that correctly classifies encrypted facial images for each subject in a selected face dataset. The face recognition model is based on LDA and is trained using a training set of the subjects’ encrypted face images. The model is tested using two testing sets: the first consists of the remaining encrypted face images, whereas the second consists of the same remaining images in their original (unencrypted) form. Face images are encrypted with a new method based on XOR pixel substitution and CA-based scrambling. The contributions of this paper are as follows:

The encryption of images consists of two main stages. Pixel substitution is the first stage, during which each pixel value is replaced by a new value generated by performing an XOR operation on each pixel bit. In the second stage, pixel scrambling, pixel positions are shuffled into new positions using CA.

Linear Discriminant Analysis (LDA) is used to extract features from encrypted images, and a Random Forest classifier is used to classify them. LDA transforms the feature space, reducing its dimensionality while maximizing the separation between classes. Consequently, more discriminative features are generated, improving the performance of the classifier. Random Forest is a classification method that combines multiple decision trees; it has a high degree of accuracy and is robust to overfitting. Combining LDA with a Random Forest classifier makes for a powerful face recognition algorithm.

Training the model on encrypted face images causes it to recognize with high accuracy only those test images that were encrypted with the same encryption key. This drastically reduces the effectiveness of spoofing attacks, as an attacker would need to encrypt images with the same key in addition to obtaining an artificial copy of an authenticated individual’s face image.
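The two-stage structure described in the contributions (XOR substitution followed by position scrambling) can be illustrated with a minimal sketch. This is not the paper's implementation: the key bytes and the permutation below are hypothetical stand-ins, and in particular the permutation comes from a seeded shuffle rather than from an evolved CA lattice. The sketch only shows that both stages are reversible when the same key material is available.

```python
import random

def xor_substitute(pixels, key_bytes):
    """XOR every 8-bit pixel with a key byte (the key repeats cyclically)."""
    return [p ^ key_bytes[i % len(key_bytes)] for i, p in enumerate(pixels)]

def scramble(pixels, perm):
    """Output position i receives the pixel found at position perm[i]."""
    return [pixels[j] for j in perm]

def unscramble(pixels, perm):
    """Inverse of scramble for the same permutation."""
    out = [0] * len(pixels)
    for i, j in enumerate(perm):
        out[j] = pixels[i]
    return out

def encrypt(pixels, key_bytes, perm):
    # Stage 1: substitution, stage 2: scrambling.
    return scramble(xor_substitute(pixels, key_bytes), perm)

def decrypt(cipher, key_bytes, perm):
    # Undo the stages in reverse order; XOR is its own inverse.
    return xor_substitute(unscramble(cipher, perm), key_bytes)

# Hypothetical key material for a flattened 4x4 grayscale image.
rng = random.Random(42)
image = [rng.randrange(256) for _ in range(16)]
key_bytes = [rng.randrange(256) for _ in range(16)]
perm = list(range(16))
rng.shuffle(perm)  # stand-in for a CA-derived scrambling order

cipher = encrypt(image, key_bytes, perm)
restored = decrypt(cipher, key_bytes, perm)
```

Decrypting with the same `key_bytes` and `perm` returns the original pixel list; any other key material generally does not.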

The rest of this paper is organized as follows. The second section presents related work on image scrambling and face recognition with LDA, the third section explains the implemented methodology, the fourth section presents the results, and the last section concludes the study.

2. Related Work

Using universal rules, CA cells can change their state every discrete time step in response to their neighbors. According to [

16], CA-based image encryption works directly on pixels to encrypt images. Aside from its ease of use, CA image encryption provides high security, parallel computing capabilities, and high performance [

16]. In addition to image encryption, CA is capable of encrypting other types of information as well. Using a reversible CA based block cipher algorithm [

17], proposed an algorithm that could handle CPUs with different core counts and supported scalability beyond 128 bits. The security framework offered by [

18] is made up of three stages. The first is entity authentication with a zero-knowledge protocol, while the second and third stages are encryption and decryption with CAs. Several CA-based image scrambling techniques have been developed for use in image encryption [

15,

19,

20,

21,

22,

23,

24]. Those studies found CA scrambling to be effective against a variety of attacks, breaking high rates of correspondence between adjacent pixels. It has been found that a large number of CA-based image scrambling techniques have been developed [

14,

18,

19,

20,

21,

22,

23]. In those studies, CA scrambling performed well against different types of attacks, breaking high correlation between adjacent pixels. The two-dimensional CA was used by [

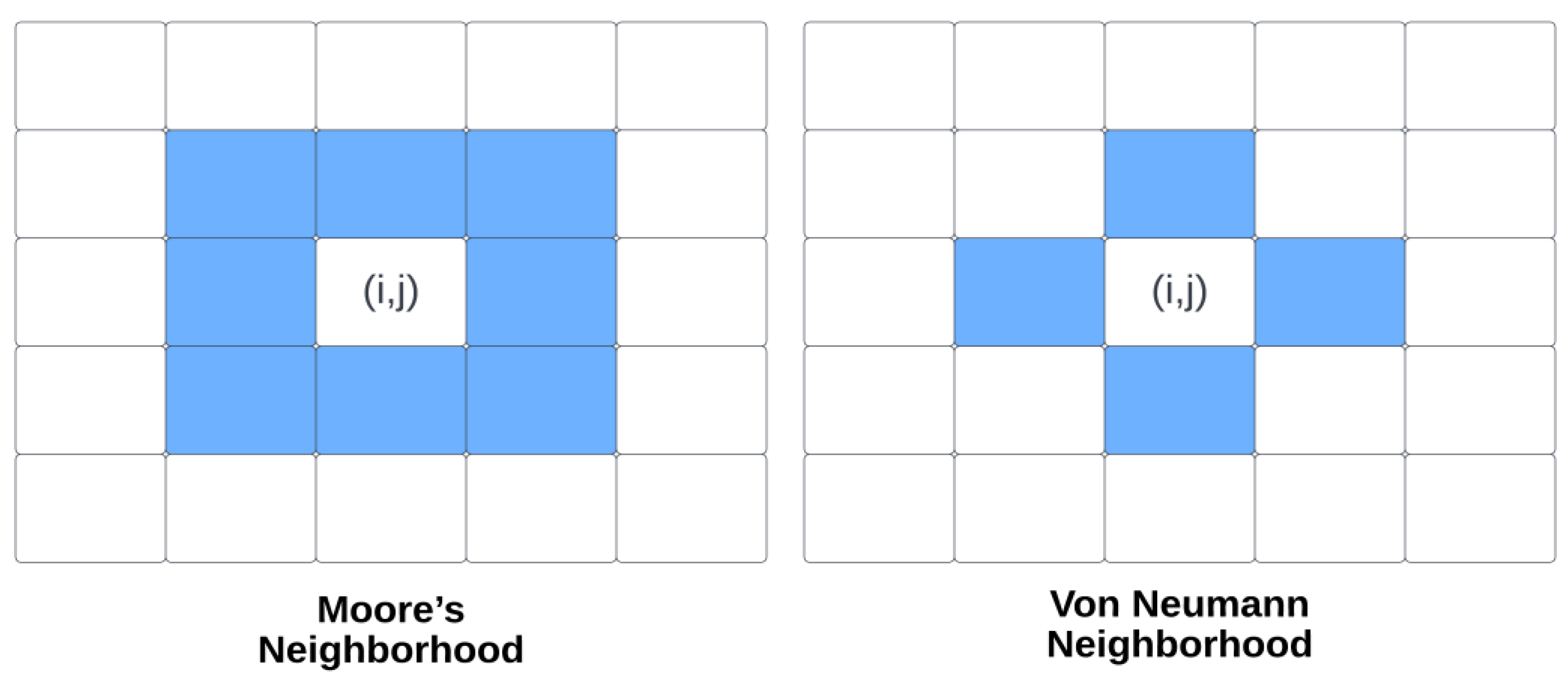

15] for image scrambling. A number of different configurations, such as evolved generations, neighborhood configurations, boundary conditions, and rules with lambda values near critical values, were examined for their effect on

GDD metric scrambling performance. By using all lattices evolved from the initial lattice, the method scrambles the image. An empty lattice is created, then on that lattice pixel locations that correspond to live cells on first scrambling matrix take the pixel’s values of original subject image starting from top-leftmost cell then proceeding in row major order. For the remaining scrambling matrices, pixels at locations that have already been filled are skipped during the process. Pixels are copied from the original image to dead cells in row major order on the scrambling matrix. Based on the obtained results, higher generation scrambling results had better Gray Difference Degree (

GDD). Additionally, there was a higher

GDD achieved when Moore’s configuration with periodic boundary conditions was used. Among the rules tested, lambda values ranged from 0.20703 to 0.41406. The highest

GDD value on tested images was achieved by Rule 224—Game of Life.
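As an illustration of the configuration reported as best above (Game of Life, Moore neighborhood, periodic boundary conditions), a single lattice evolution step could be written as follows. This is a minimal sketch using the standard Game of Life birth and survival rule; the function name and the 5x5 example lattice are illustrative assumptions, not taken from [15].

```python
def life_step(grid):
    """One Game of Life generation on a lattice with periodic (wrap-around) boundaries."""
    rows, cols = len(grid), len(grid[0])
    nxt = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Moore neighborhood: the 8 surrounding cells, indices wrapped modulo the size.
            live_neighbors = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            # Birth with exactly 3 live neighbors, survival with 2 or 3.
            if live_neighbors == 3 or (grid[r][c] == 1 and live_neighbors == 2):
                nxt[r][c] = 1
    return nxt

# A horizontal "blinker" turns vertical after one generation and back after two.
blinker = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
after_one = life_step(blinker)
after_two = life_step(after_one)
```

Applying `life_step` repeatedly to a random initial lattice yields the sequence of scrambling matrices that such methods use.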

Whether 2D-OTCA rules other than Game of Life could be applied to scramble images was investigated by [20]. Instead of the Moore neighborhood, the authors use the von Neumann neighborhood configuration. Different OTCA rules are tested, and GDD is used to evaluate scrambling performance. Boundary conditions and generation selection are also taken into consideration. A randomly generated initial lattice is evolved k times, and the image is scrambled using the final evolved lattice. On an empty lattice, the locations that correspond to live cells in the scrambling matrix take the pixel values of the original image, starting from the top-leftmost cell and proceeding in row-major order. The remaining pixels of the original image are then copied to the locations that correspond to dead cells, again in row-major order. With rule 171, this technique showed the highest GDD results of all the proposed techniques. Compared with other techniques, it also took significantly less time to compute.

The gray image encryption algorithm developed by [23] uses 2D CA to scramble the image at the bit level. The image is converted into 8 binary images, each representing one bit of the pixel values. An initial configuration of 8 binary lattices is created and evolved using the B3/S1234 CA rule. The 8 binary images of the original image are then scrambled independently, in the same way, using the 8 evolved binary lattices, thereby changing pixel positions and values simultaneously.
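The single-lattice placement rule described for the final evolved lattice (live-cell locations are filled first, dead-cell locations second, both in row-major order) can be sketched as below. This is one reading of the prose description, not code from the cited work; the function names and the tiny 2x2 lattice are illustrative assumptions.

```python
def placement_order(lattice):
    """Destination indices: live cells first, then dead cells, each in row-major order."""
    flat = [cell for row in lattice for cell in row]
    live = [i for i, cell in enumerate(flat) if cell == 1]
    dead = [i for i, cell in enumerate(flat) if cell == 0]
    return live + dead

def scramble_with_lattice(pixels, lattice):
    """Copy pixels (row-major, flattened) into the destinations given by the lattice."""
    out = [None] * len(pixels)
    for src, dst in enumerate(placement_order(lattice)):
        out[dst] = pixels[src]
    return out

def unscramble_with_lattice(scrambled, lattice):
    """Read the pixels back in the same destination order to recover the original."""
    return [scrambled[dst] for dst in placement_order(lattice)]

lattice = [[0, 1],
           [1, 0]]            # hypothetical final evolved lattice
pixels = [10, 20, 30, 40]     # flattened 2x2 image
scrambled = scramble_with_lattice(pixels, lattice)
recovered = unscramble_with_lattice(scrambled, lattice)
```

Because the destination order is determined entirely by the lattice, anyone who can regenerate the same lattice can invert the scrambling.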

A modification of the 2D CA image scrambling technique proposed by [15] was implemented by [21] and resulted in better GDD scores. As before, all evolved lattices are used for scrambling. In row-major order, the pixels of the original image that correspond to live cells in the scrambling matrix are copied to an empty lattice; the remaining pixels are then copied to the remaining locations, also in row-major order. The same procedure is repeated for the remaining scrambling matrices. The scrambling lattice is evolved according to Game of Life (rule 224). According to the results, the best GDD is achieved with periodic boundary conditions, the Moore neighborhood, and more generations, up to eight. With periodic boundary conditions and the Moore neighborhood, the highest GDD, obtained with eight generations, was 0.9551.
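The text does not define GDD, so the following sketch assumes a formulation commonly used in the scrambling literature: the gray difference of an interior pixel is the mean squared difference to its four direct neighbors, and GDD compares the average gray difference of the scrambled image with that of the original. Whether the cited works use exactly this variant is an assumption.

```python
def mean_gray_difference(img):
    """Average over interior pixels of the mean squared difference to the 4 neighbors."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            g = img[r][c]
            total += sum(
                (g - img[r + dr][c + dc]) ** 2
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1))
            ) / 4
    return total / ((rows - 2) * (cols - 2))

def gdd(original, scrambled):
    """(E' - E) / (E' + E), where E and E' are the mean gray differences before and after."""
    e_orig = mean_gray_difference(original)
    e_scr = mean_gray_difference(scrambled)
    if e_orig + e_scr == 0:
        return 0.0  # both images are flat; no change in local contrast
    return (e_scr - e_orig) / (e_scr + e_orig)

# A smooth gradient has low local contrast; a checkerboard has the maximum.
gradient = [[c for c in range(4)] for _ in range(4)]
checker = [[255 * ((r + c) % 2) for c in range(4)] for r in range(4)]
score = gdd(gradient, checker)
```

Under this definition the score lies between -1 and 1, and values close to 1 indicate that scrambling strongly increased the differences between neighboring pixels.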

In [19], CA was proposed for scrambling and watermarking images. Chaos can be detected in fractal CA rules by analyzing fractal box dimensions. After an initial lattice is created, it is evolved according to a selected CA rule and used to scramble the image. This scrambling is the initial step in watermarking. Furthermore, watermarked images produced using this scheme are less susceptible to noise attacks, cropping, and JPEG compression.

The author of [22] proposed using OTCA for encrypting images at the bit level. With rule 534 and rule 816, the bit values and bit locations of the original images are changed simultaneously with high computational efficiency. An analysis of histograms and entropies indicates that the encryption method is robust. Furthermore, the key space is large and the scheme is highly sensitive to the key. Each test image had an NPCR of nearly 100%, an entropy of over 7.2, and a correlation almost equal to zero in each direction. According to the histogram analysis, the original images cannot be identified from the histograms of their encrypted versions.

A method for scrambling images that uses elementary CA (ECA) was proposed by [24]. ECA rules from classes 3 and 4 were tested for scrambling performance. The scrambling method converts the original image into a 1D vector. A random 1D lattice is evolved for k generations to produce the scrambling lattices. Pixels are copied from the original image onto an empty 1D lattice at the positions where the scrambling lattice has live cells. As in the 2D methods, positions that have already been filled are skipped for the subsequent scrambling lattices. The pixels still remaining in the original image are then copied to the positions corresponding to dead cells. Finally, the 1D vector is converted back into a 2D matrix to produce the output. In terms of GDD, ECA scrambling performed no worse than 2D CA, and in some cases it performed even better. A high GDD was obtained with Rule 22 when boundary conditions were combined with ten generations. The class 3 rules tested (22, 30, 126, 150, 182) gave higher GDD values than the class 4 rule (rule 110).
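For readers unfamiliar with elementary CA, one generation under a numbered rule can be computed as in the sketch below. It uses the standard Wolfram rule numbering with periodic boundaries; the choice of periodic boundaries and the 7-cell example are assumptions made for illustration, not details taken from [24].

```python
def eca_step(cells, rule):
    """One generation of an elementary CA (Wolfram rule number) with periodic boundaries."""
    n = len(cells)
    nxt = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        pattern = left * 4 + center * 2 + right   # neighborhood as a 3-bit number
        nxt.append((rule >> pattern) & 1)         # look up that bit of the rule number
    return nxt

def eca_evolve(cells, rule, generations):
    """Return the list of lattices after 1..generations steps."""
    history = []
    for _ in range(generations):
        cells = eca_step(cells, rule)
        history.append(cells)
    return history

start = [0, 0, 0, 1, 0, 0, 0]
rule30_history = eca_evolve(start, 30, 2)
```

Each lattice in the returned history can then serve as a 1D scrambling lattice in the live-cells-first placement procedure.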

As real-time processors become more common, research on automatic face recognition has become quite active, aiming to facilitate commercial applications by taking advantage of the human ability to recognize faces as special objects. The analysis of human facial images has been the subject of numerous studies. Facial features can be used in several ways to discriminate between people based on their gender, race, and age. In studies that used subjective psychovisual experiments, these features have been analyzed for their significance. Linear discriminant analysis (LDA) can be used to recognize faces by maximizing between-class scatter while minimizing within-class scatter. With LDA, different features of the face are objectively evaluated for their significance in identifying the human face. Using LDA for recognition also yields only a few features. LDA overcomes the limitations of Principal Component Analysis by using a linear discriminant criterion. This criterion maximizes the ratio of the between-class scatter of the projected samples to their within-class scatter. Classifying images with a linear discriminant thus separates them into different categories.
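The linear discriminant criterion referred to here is usually written in terms of two scatter matrices. The following is the standard textbook (Fisher) form, given as a reminder rather than as the exact notation of this paper; the symbols $C$ (number of classes), $X_c$ (samples of class $c$), $N_c$ (its size), $\mu_c$ (class mean), $\mu$ (overall mean), and $W$ (projection matrix) are assumptions introduced for this illustration.

```latex
S_W = \sum_{c=1}^{C} \sum_{x \in X_c} (x - \mu_c)(x - \mu_c)^{\top},
\qquad
S_B = \sum_{c=1}^{C} N_c \, (\mu_c - \mu)(\mu_c - \mu)^{\top}

W^{*} = \arg\max_{W}
\frac{\left| W^{\top} S_B W \right|}{\left| W^{\top} S_W W \right|}
```

Because $S_B$ has rank at most $C - 1$, the projection yields at most $C - 1$ discriminant features, which is why LDA produces only a few features.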

A variety of methods for analyzing facial features based on local linear discriminants are described in the literature. Using nonparametric discriminant analysis (NDA) and multiclassifier integration, Ref [25] developed a new framework for face recognition. The principal space and null space of the intra-class scatter matrix are used to improve two multi-class NDA algorithms (NSA and NFA). The NFA uses classification boundary information more effectively than the NSA. Ref [26] proposes enhancing order-based coding capabilities to improve the detection of intrinsic structure in facial images, in addition to enhancing local textures. By selecting the most discriminatory subspace, multimodal features can be merged automatically. To produce robust similarity measurements and discriminant analyses, adaptive interaction functions are used to suppress outliers in each dimension. To address the classification issue raised by a compact feature representation, the sparsity-driven regression model is modified. “Exponential LDE” (ELDE) is a new discriminant technique introduced by [27]. ELDE can be viewed as a compromise between the two-dimensional extension of LDE and the local discriminant embedding (LDE). The proposed framework overcomes the small sample size (SSS) problem by eliminating the null space associated with locality-preserving scatter matrices. Similar to kernel-based nonlinear mapping, distance diffusion mapping transforms the original data into a new space, and LDE is then applied in that space. Increased margins between samples of different classes improve classification accuracy. The method of [28] uses the local geometric structure of the data while applying the globally discriminatory structure from linear discriminant analysis, which maximizes between-class scatter while minimizing within-class scatter. In kernel feature spaces, nonlinear features can also be produced through the optimization of an objective function.

A new ensemble approach for discovering discriminative patterns has been developed by [29]: the many-kernel random discriminant analysis (MK-RDA). In the proposed ensemble method, the authors incorporate a salience-aware strategy whereby random features are chosen on the semantic components of the scrambled domain using salience modeling. Ref [30] proposes a new binarization scheme that optimizes binary template discriminability: using a novel binary discriminant analysis, a real-valued template is transformed into a binary template. Because the binary space is not differentiable, direct optimization is challenging. To solve this problem, a binary template discriminability function was developed using the perceptron.

5. Discussion

This paper proposes a solution to the spoofing vulnerability of facial recognition systems: a model trained to recognize encrypted face images with high accuracy. Implementing such a model requires an image encryption algorithm for encrypting the face images used to train the recognition model. The image encryption scheme is based on XOR pixel substitution and CA pixel scrambling.

To evaluate the performance of the image encryption algorithm, the images encrypted with the scheme were analyzed using statistical and differential analysis. In the differential analysis with the NPCR test, the scheme produced a high percentage of differing pixels between the original image and the encrypted image: an NPCR of 99% or higher was achieved for all test images. UACI was used as a second differential test; on the test images, the UACI results fluctuated between 15% and 33%, within the ideal range. The statistical analysis comprised five tests: histogram, correlation, key analysis, information entropy, and GDD. The XOR operation on pixel values changed the histogram of the encrypted images, so histogram matching could not identify encrypted images. A very weak correlation was observed between the original and encrypted images, both for the entire image and in the vertical, horizontal, and diagonal directions, reflecting the very low similarity between them. Key analysis was done by first determining the key space of the encryption algorithm, which depends on the size of the set of unique pairs of key parameter values, and then testing the sensitivity of the algorithm by decrypting an encrypted image with a slightly different key. The method showed excellent key sensitivity, since no visual information could be extracted when decrypting the same image with slightly different keys. For encrypted grayscale images, the information entropy varied: some values were extremely close to 8, while others were lower. Based on GDD values, the proposed image encryption scheme produced exceptional results, exceeding those obtained by other methods described in the related literature.
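For reference, the differential and entropy measures mentioned above are commonly computed as in the following sketch for 8-bit grayscale images given as flat pixel lists. NPCR (Number of Pixels Change Rate) is the percentage of positions whose values differ, UACI (Unified Average Changing Intensity) is the mean absolute difference relative to 255, and information entropy is the Shannon entropy of the pixel histogram, which is at most 8 bits for 8-bit pixels. The function names and toy inputs are illustrative; the paper's own evaluation code is not shown in the text.

```python
import math
from collections import Counter

def npcr(a, b):
    """Percentage of pixel positions whose values differ between images a and b."""
    return 100.0 * sum(x != y for x, y in zip(a, b)) / len(a)

def uaci(a, b):
    """Mean absolute intensity difference, as a percentage of the 8-bit range (255)."""
    return 100.0 * sum(abs(x - y) for x, y in zip(a, b)) / (255 * len(a))

def information_entropy(pixels):
    """Shannon entropy (bits per pixel) of the gray-level distribution."""
    n = len(pixels)
    return -sum((count / n) * math.log2(count / n) for count in Counter(pixels).values())

# Toy inputs: half of the pixels flip from 0 to 255.
plain = [0, 0, 0, 0]
cipher = [255, 0, 255, 0]
npcr_value = npcr(plain, cipher)
uaci_value = uaci(plain, cipher)

# A perfectly uniform histogram over all 256 gray levels reaches the 8-bit maximum.
uniform_entropy = information_entropy(list(range(256)))
```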

The following observations were made about the robustness and limitations of the proposed image encryption scheme:

The image encryption algorithm produces encrypted images that differ greatly from the originals. A very weak correlation was observed in all cases, and NPCR values exceeded 99% in every case.

The key space of the method is very large, and it grows with the size of the image to be encrypted and with the number of evolutions selected when configuring the encryption key.

The GDD values of the proposed scheme exceeded those reported for the same images in some of the related literature on CA-based image encryption methods.

The scheme changed the histogram enough to resist histogram matching attacks, but changing the pixel values with XOR alone is not sufficiently secure, as an XORed image would have a histogram similar to that of an encrypted image; hence, a more robust pixel substitution scheme must be incorporated into the algorithm.

Both the UACI and the information entropy values can be considered acceptable, but either could be improved with a better pixel substitution scheme.

Following the analysis of the image encryption algorithm, the algorithm was integrated into the face recognition pipeline by encrypting the face images used to train the LDA-based model. Several experiments were performed on the model using the ORL dataset. The model’s accuracy and spoof resistance were tested in two main experiments. In the first experiment, the entire ORL dataset was encrypted with the same key and then split into 80% for training and 20% for testing. In classifying encrypted face images, the system achieved 96.25% accuracy using a random forest classifier. The second experiment used the same encrypted training set but tested the model on original images; in this experiment, the accuracy was only 8.75%. Since input face images must be encrypted with the same key as the training images for a high recognition rate to be achieved, the LDA-based recognition system is highly resilient to spoofing attacks.

Such a system can be used to authenticate users in information systems that contain secret or sensitive information. Authentication is granted once the user supplies the required encryption key configuration and the user’s face is captured. If a user’s face identity is revealed through spoofing, the attacker still needs the correct encryption key configuration; otherwise, authenticating to the system is very difficult.

Table 10 shows the sensitivity of the model when the testing set was encrypted with a slightly different key from the one used for the encrypted testing set in Table 9, i.e., the first experiment. Here the accuracy decreased significantly to 66.25%.
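The reported accuracies can be translated into image counts with a short calculation. The sketch assumes the standard ORL dataset size of 400 images (40 subjects with 10 images each), which the text does not state explicitly; under that assumption the 20% testing split holds 80 images.

```python
TOTAL_IMAGES = 400      # assumption: standard ORL size, 40 subjects x 10 images
TEST_FRACTION = 0.20    # 80/20 split stated in the text
NUM_SUBJECTS = 40       # assumption: standard ORL subject count

test_size = round(TOTAL_IMAGES * TEST_FRACTION)

# Accuracies reported in the text, in percent.
reported_accuracy = {
    "same key": 96.25,
    "original (unencrypted)": 8.75,
    "slightly different key": 66.25,
}

# Number of correctly classified test images implied by each accuracy.
correct_counts = {
    name: round(acc / 100 * test_size) for name, acc in reported_accuracy.items()
}

# Accuracy expected from uniform random guessing over all subjects, in percent.
chance_level = 100 / NUM_SUBJECTS
```

Under these assumptions the three accuracies correspond to 77, 7, and 53 correct classifications out of 80, and the accuracy on unencrypted images is only a few points above the 2.5% chance level.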

As with the image encryption scheme, the following points concerning the effectiveness and limitations of the LDA-based encrypted face recognition model were observed:

The proposed LDA-based encrypted face recognition model classified face images encrypted with the same encryption key with high accuracy, reaching 96.25%.

The model is highly sensitive to face images encrypted with a slightly different key. Its accuracy dropped to 66.25% when it was tested with a testing set encrypted with a slightly different key.

The model is able to effectively resist spoofing attacks. When tested with the testing set of original images, the model achieved only 8.75% accuracy.

The security of the model is limited by the robustness of the image encryption scheme used. Weaknesses in the image encryption scheme introduce vulnerabilities into the encrypted face recognition model.

The image encryption scheme needs to be robust enough to provide effective encryption performance; however, it must also retain enough features in the resulting encrypted images for the model to distinguish between different classes.