Depth-Based Dynamic Sampling of Neural Radiation Fields

Abstract

1. Introduction

- Depth-based Dynamic Sampling: We use additional depth supervision to train Depth-DYN NeRF. Unlike traditional NeRF, we dynamically control the spatial extent sampled for each pixel, so that the correct 3D geometry is recovered faster;

- Depth-DYN MLP: We construct a distance constraint function between the sampled points and the depth information; the distance constraint is then positionally encoded and fed to the MLP network to guide and optimize it. As far as we know, SD-NeRF is the first algorithm that incorporates depth prior information in the MLP;

- Depth Supervision Loss: We construct a depth-supervised loss function and a complete loss function based on the correspondence between color and depth. This loss effectively mitigates artifacts, further refines object edges, and performs well in comparisons against multiple standard baselines.
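The three contributions above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the symmetric window `half_width` around the depth prior, the absolute-distance form of the constraint, and the plain mean-squared depth loss are all assumptions chosen for illustration. The positional encoding follows the sin/cos form given in the original NeRF paper.

```python
import math
import random


def sample_near_depth(near, far, depth, half_width, n_samples, rng=random):
    """Stratified ray samples restricted to a window around the depth prior.

    Hypothetical helper: the window [depth - half_width, depth + half_width]
    is clipped to the ray bounds [near, far].
    """
    lo = max(near, depth - half_width)
    hi = min(far, depth + half_width)
    if hi <= lo:
        # Prior lies outside the ray bounds: fall back to the full range.
        lo, hi = near, far
    step = (hi - lo) / n_samples
    # One jittered sample per equal-width bin (stratified sampling).
    return [lo + (i + rng.random()) * step for i in range(n_samples)]


def positional_encoding(x, n_freqs):
    """Return [sin(2^k * pi * x), cos(2^k * pi * x)] for k = 0 .. n_freqs - 1."""
    out = []
    for k in range(n_freqs):
        angle = (2.0 ** k) * math.pi * x
        out.extend((math.sin(angle), math.cos(angle)))
    return out


def distance_features(ts, depth, n_freqs=4):
    """Encode each sample's absolute distance to the depth prior (assumed form)."""
    return [positional_encoding(abs(t - depth), n_freqs) for t in ts]


def depth_mse(rendered, target):
    """Mean squared error between rendered and reference depths (assumed loss)."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target)


if __name__ == "__main__":
    rng = random.Random(0)
    ts = sample_near_depth(near=2.0, far=6.0, depth=4.0,
                           half_width=0.5, n_samples=8, rng=rng)
    feats = distance_features(ts, depth=4.0)
    print(len(ts), len(feats[0]))
```

In this sketch, all samples fall within the window around the prior instead of being spread over the whole `[near, far]` interval, and the encoded distances would be concatenated with the usual encoded position and view direction before entering the MLP.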

2. Related Work

3. Method

3.1. Depth Completion

3.2. Depth Priors

3.3. Depth-Guided Sampling

3.4. Network Training

3.5. Volumetric Rendering

4. Results

4.1. Experimental Setup

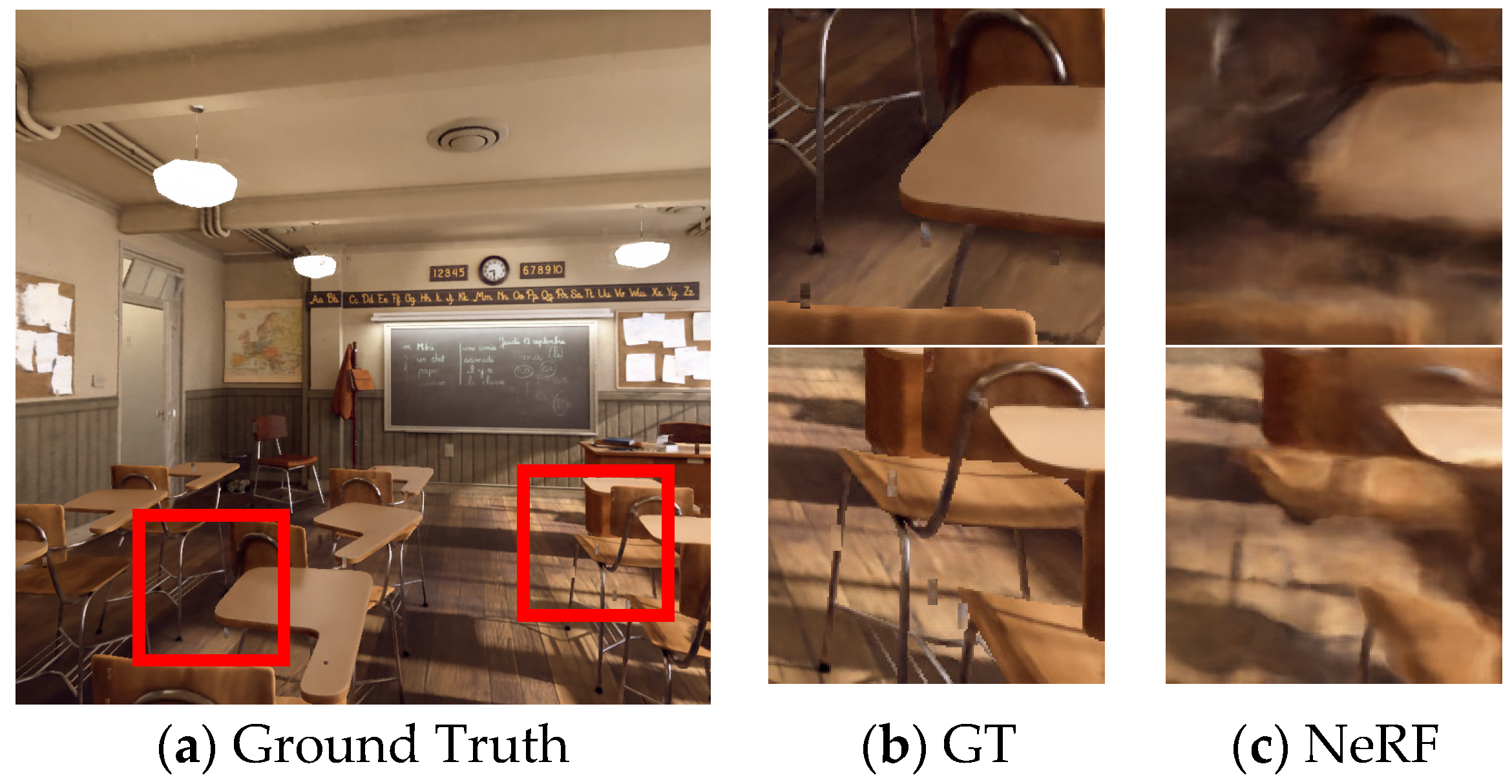

4.2. Baseline Comparison

4.3. Ablation Study

4.4. Depth-DYN NeRF with Synthetic Depth

5. Limitations

Future Work

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A


| Method | Barbershop PSNR↑ | Barbershop SSIM↑ | Barbershop LPIPS↓ | Barbershop Depth-MSE↓ | San Miguel PSNR↑ | San Miguel SSIM↑ | San Miguel LPIPS↓ | San Miguel Depth-MSE↓ | Classroom PSNR↑ | Classroom SSIM↑ | Classroom LPIPS↓ | Classroom Depth-MSE↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NeRF | 22.143 | 0.769 | 0.275 | 0.587 | 21.641 | 0.647 | 0.431 | 7.798 | 23.629 | 0.802 | 0.2479 | 0.575 |
| MVSNeRF | 21.309 | 0.728 | 0.283 | 5.574 | 23.76 | 0.757 | 0.267 | 3.693 | 23.253 | 0.867 | 0.148 | 0.395 |
| DONeRF | 23.117 | 0.799 | 0.222 | 0.003 | 22.135 | 0.69 | 0.301 | 0.69 | 24.216 | 0.822 | 0.196 | 0.004 |
| Ours (synthetic depth) | 24.687 | 0.843 | 0.169 | 0.0017 | 23.701 | 0.758 | 0.21 | 0.0016 | 25.188 | 0.849 | 0.161 | 0.0009 |
| Ours (ground truth depth) | 26.683 | 0.904 | 0.111 | 0.0004 | 26.148 | 0.827 | 0.17 | 0.001 | 29.059 | 0.908 | 0.096 | 0.0003 |

| Variant | Barbershop PSNR↑ | Barbershop SSIM↑ | Barbershop LPIPS↓ | Barbershop Depth-MSE↓ | San Miguel PSNR↑ | San Miguel SSIM↑ | San Miguel LPIPS↓ | San Miguel Depth-MSE↓ | Classroom PSNR↑ | Classroom SSIM↑ | Classroom LPIPS↓ | Classroom Depth-MSE↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Depth-DYN NeRF | 26.683 | 0.904 | 0.111 | 0.0004 | 26.148 | 0.827 | 0.17 | 0.001 | 29.059 | 0.908 | 0.096 | 0.0003 |
| w/o DPLOSS | 26.378 | 0.899 | 0.115 | 0.009 | 25.919 | 0.83 | 0.158 | 0.713 | 28.188 | 0.904 | 0.098 | 0.033 |
| w/o dploss, Depth-DYN MLP | 24.937 | 0.869 | 0.1455 | 0.028 | 25.963 | 0.8198 | 0.182 | 0.003 | 27.48 | 0.88 | 0.1287 | 0.0008 |
| w/o dysamp, Depth-DYN MLP | 20.475 | 0.694 | 0.396 | 0.251 | 21.656 | 0.595 | 0.46 | 2.021 | 23.852 | 0.776 | 0.281 | 0.111 |
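For readers unfamiliar with the PSNR and Depth-MSE columns, the sketch below shows how such values are commonly computed. It assumes pixel intensities normalized to `[0, 1]` and a plain mean squared error over per-pixel depths; the exact normalization and depth units behind the reported numbers are not stated here, so these functions are illustrative only. SSIM and LPIPS need a windowed statistic and a pretrained network respectively, and are not sketched.

```python
import math


def mse(pred, target):
    """Mean squared error between two equal-length sequences of floats."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB; higher is better.

    max_val is the largest possible pixel value (1.0 assumed here).
    """
    err = mse(pred, target)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_val ** 2 / err)


if __name__ == "__main__":
    rendered = [0.5, 0.5, 0.5, 0.5]
    reference = [0.4, 0.6, 0.4, 0.6]
    print(round(psnr(rendered, reference), 3))  # MSE is 0.01, so about 20 dB
    print(mse([1.0, 2.0], [1.0, 4.0]))          # depth MSE of 2.0
```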

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, J.; Xiao, J.; Zhang, X.; Xu, X.; Jin, T.; Jin, Z. Depth-Based Dynamic Sampling of Neural Radiation Fields. Electronics 2023, 12, 1053. https://doi.org/10.3390/electronics12041053
