Accuracy of Using a New Semi-Automated Software Package to Diagnose Osteoporotic Vertebral Fractures in Adults

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethical Approval

2.2. Subjects

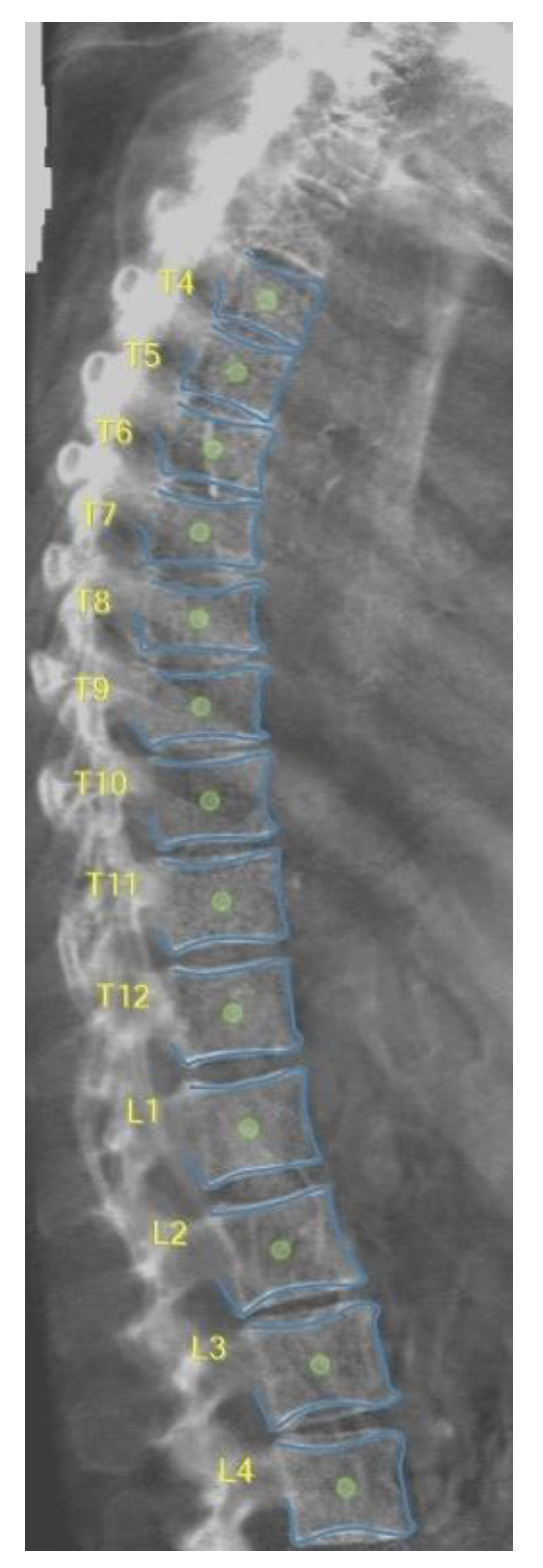

2.3. DXA-VFA Image Annotation

2.4. Statistical Analysis

3. Results

3.1. Vertebral Level Assignment

3.2. Point-Wise Landmark Annotation Accuracy

3.3. Image-Wise Annotation Accuracy

3.4. Diagnostic Accuracy

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Observer | Median Error (mm) |
|---|---|
| E.K. | 1.07 |
| I.H. | 0.81 |
| M.M. | 0.80 |
| F.A. | 1.05 |

| Comparison | U1 | U2 | U | U_mu | U_sd | Z | p |
|---|---|---|---|---|---|---|---|
| F.A. vs. E.K. | 98,909 | 85,132 | 85,132 | 92,020.5 | 3629.6 | 1.90 | 0.029 |
| F.A. vs. I.H. | 43,380 | 140,661 | 43,380 | 92,020.5 | 3629.6 | 13.4 | <0.001 |
| F.A. vs. M.M. | 36,238 | 147,803 | 36,238 | 92,020.5 | 3629.6 | 15.4 | <0.001 |
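The columns of the table above match the normal approximation to the Mann-Whitney U test: U = min(U1, U2), U_mu = n1·n2/2, U_sd = sqrt(n1·n2·(n1 + n2 + 1)/12), Z = (U_mu − U)/U_sd, with a one-tailed p-value. The Python sketch below recomputes these quantities. The per-observer sample size is not stated in the surviving text, so n1 = n2 = 429 is an inference: 429 × 429 = 184,041 = U1 + U2, and this value reproduces U_sd = 3629.6. No tie correction is applied, and the function name is hypothetical.

```python
import math


def mann_whitney_normal_approx(u1: int, u2: int, n1: int, n2: int):
    """Normal approximation to the Mann-Whitney U test (no tie correction).

    u1, u2 are the two U statistics and must satisfy u1 + u2 == n1 * n2.
    Returns (U, mean of U, SD of U, Z, one-tailed p).
    """
    if u1 + u2 != n1 * n2:
        raise ValueError("u1 + u2 must equal n1 * n2")
    u = min(u1, u2)                                   # reported test statistic
    mean = n1 * n2 / 2                                # E[U] under the null hypothesis
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)      # SD of U under the null, ignoring ties
    z = (mean - u) / sd                               # non-negative because u <= mean
    p_one_tailed = 0.5 * math.erfc(z / math.sqrt(2))  # upper-tail standard normal probability
    return u, mean, sd, z, p_one_tailed


# Assumption: 429 landmark errors per observer (inferred from U1 + U2 = 184,041).
N = 429
ROWS = {
    "F.A. vs. E.K.": (98_909, 85_132),
    "F.A. vs. I.H.": (43_380, 140_661),
    "F.A. vs. M.M.": (36_238, 147_803),
}

for name, (u1, u2) in ROWS.items():
    u, mean, sd, z, p = mann_whitney_normal_approx(u1, u2, N, N)
    print(f"{name}: U={u}, U_mu={mean:.1f}, U_sd={sd:.1f}, Z={z:.2f}, p={p:.3g}")
```

With these inputs the sketch reproduces the tabulated Z values (1.90, 13.4 and 15.4) and the one-tailed p = 0.029 for F.A. vs. E.K.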

| J.A. (rows) vs. F.A. (columns) | Normal | Non-Fracture Deformity | Mild VF | Moderate VF | Severe VF |
|---|---|---|---|---|---|
| Normal | 481 | 239 | 36 | 12 | 0 |
| Non-Fracture Deformity | 13 | 22 | 2 | 2 | 0 |
| Mild VF | 3 | 4 | 3 | 7 | 0 |
| Moderate VF | 1 | 3 | 1 | 6 | 2 |
| Severe VF | 0 | 0 | 0 | 8 | 10 |

| Observer | Fracture vs. Non-Fracture | Moderate/Severe Fracture vs. Other Classes |
|---|---|---|
| E.K. | 0.767 | 0.829 |
| I.H. | 0.646 | 0.753 |
| M.M. | 0.716 | 0.709 |
| F.A. | 0.504 | 0.651 |
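The F.A. row of the table above is consistent with unweighted Cohen's kappa computed from the J.A. vs. F.A. confusion matrix after collapsing the five classes into two groups. The Python sketch below shows that calculation. Two points are assumptions, since the surviving text does not state them: that "fracture" means Mild, Moderate or Severe VF (with Normal and Non-Fracture Deformity counted as non-fracture), and that kappa is the reported statistic. Both assumptions reproduce the tabulated values. The helper names are hypothetical.

```python
# Confusion matrix from the table: rows = J.A., columns = F.A.
# Class order (indices 0-4): Normal, Non-Fracture Deformity, Mild VF, Moderate VF, Severe VF
MATRIX = [
    [481, 239, 36, 12, 0],
    [13, 22, 2, 2, 0],
    [3, 4, 3, 7, 0],
    [1, 3, 1, 6, 2],
    [0, 0, 0, 8, 10],
]


def collapse(matrix, positive):
    """Collapse a multi-class confusion matrix to 2x2: index 0 = positive group, 1 = rest."""
    out = [[0, 0], [0, 0]]
    for i, row in enumerate(matrix):
        for j, count in enumerate(row):
            out[0 if i in positive else 1][0 if j in positive else 1] += count
    return out


def cohens_kappa(matrix):
    """Unweighted Cohen's kappa for a square confusion matrix of counts."""
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    p_observed = sum(matrix[i][i] for i in range(k)) / total
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    p_expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    return (p_observed - p_expected) / (1 - p_expected)


# Assumed groupings, as class indices.
ANY_FRACTURE = {2, 3, 4}      # Mild, Moderate, Severe VF
MODERATE_SEVERE = {3, 4}      # Moderate, Severe VF

kappa_any = cohens_kappa(collapse(MATRIX, ANY_FRACTURE))
kappa_mod_sev = cohens_kappa(collapse(MATRIX, MODERATE_SEVERE))
print(f"Fracture vs. non-fracture: {kappa_any:.3f}")
print(f"Moderate/severe vs. other classes: {kappa_mod_sev:.3f}")
```

Run as written, this prints 0.504 and 0.651, matching the F.A. row.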

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alqahtani, F.F.; Bromiley, P.A. Accuracy of Using a New Semi-Automated Software Package to Diagnose Osteoporotic Vertebral Fractures in Adults. Electronics 2023, 12, 847. https://doi.org/10.3390/electronics12040847
