1. Introduction

Individuals contribute their time, expertise, and wealth to help the needy and make the Earth a better place to live [1]. Technologies such as social networking and Web 2.0 are making health and medical care more accessible to businesses, professionals, patients, and laypeople. The innovative tools and applications made publicly available by Web 2.0 have changed how organizations operate and communicate. People use a variety of platforms to expand their social networks, purchase items, complete activities, and learn new things. Information retrieval, blogging, tagging, path-finding, text messaging, collaborative online services, and multi-player gaming are some of the activities carried out through Web 2.0 applications [2]. Advances in technology have made it easier for individuals to interact and collaborate, and this engagement of people is referred to as “crowdsourcing” [3]. Crowdsourcing is a task-solving methodology in which human participation is required to solve difficult tasks [4]. Jeff Howe first used the term “crowdsourcing” in Wired magazine. According to him, crowdsourcing is “the act of taking a job traditionally performed by a designated agent (usually an employee) and outsourcing it to an undefined, generally large group of people in the form of an open call” [5].

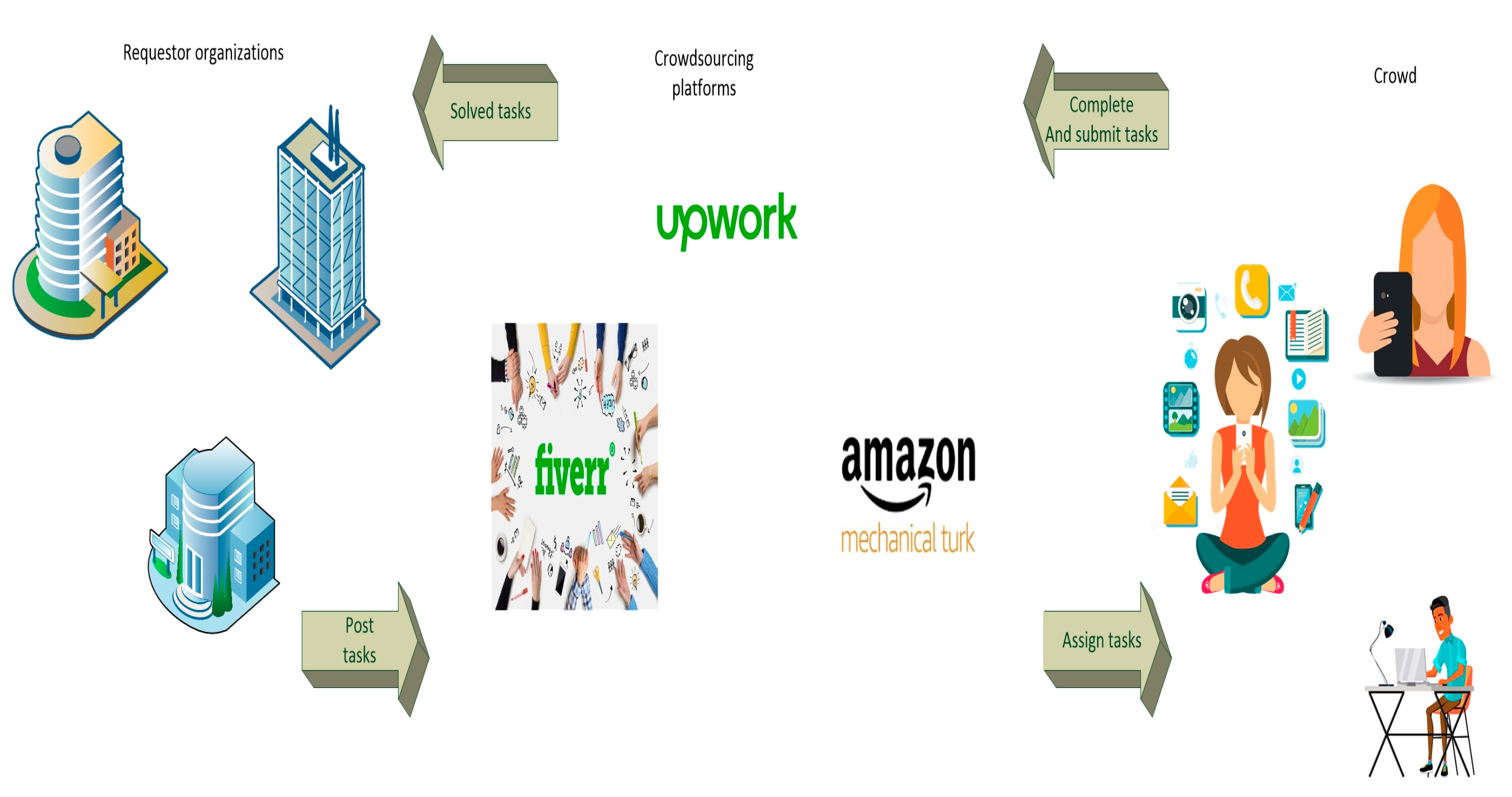

Crowdsourcing is a popular strategy for accomplishing a variety of tasks. Several parties and their interactions are involved in the process: requesters or crowdsourcers [6], who manage, execute, and supervise crowdsourcing initiatives and may post task requests; the crowd (individuals) [7], consisting of virtual workers who participate in outsourced activities or events; and the platform [8], which serves as the channel of interaction between the crowd and the crowdsourcers. The people on these platforms are connected through social media and communication services such as Facebook, WhatsApp, Instagram, Twitter, Y-mail, and Gmail. Improvements in social networking have encouraged corporations to collect information from individuals all over the world in order to identify the best solutions to unique challenges [9,10]. Crowdsourcing allows enterprises to hire globally distributed, low-cost, and talented workers through internet platforms [11,12]. Crowdsourcing is employed for a wide range of tasks, including spelling correction, content creation, coding, pattern recognition, software development, and debugging [13]. A task is advertised on a platform, and the crowd participates in various types of activities [14]. With attention to the user interface and participants’ intrinsic motivation, crowdsourcing platforms use appealing concepts that promote human–computer interaction in the context of open innovation [15]. In some situations, computers manage the crowdsourced tasks, resulting in human-based computation systems. This type of human-based computation is incorporated in many online systems (crowdsourcing platforms) [16]. Crowdsourcing platforms are websites that act as the interface between task requesters and online workers, and both parties are registered on the platform. The platform uses various selection methods, such as skill testing, profiling, participation history, and matching, to select an appropriate participant to accomplish a requester’s task [17].

In crowdsourcing, participants from diverse backgrounds bring skills, knowledge, abilities, and some expertise in the task domain, and they collaborate to tackle various challenges [3,6]. “Crowdsourced Software Engineering is the act of undertaking any external software engineering tasks by an undefined, potentially large group of online workers in an open call format” [18]. Crowdsourcing allows a requester to tap into a global community of users with various types of expertise and backgrounds to complete a task that would be difficult to complete without a large group of individuals [19]. Crowdsourcing has also been used in software engineering to resolve coding, validation, and architectural problems. However, most crowdsourcing approaches for software engineering remain largely theoretical and are often implemented and assessed on comparatively small crowds of at most 20 participants. Considering the nature of the crowdsourcing sector and the cognitive features of participants, there is great demand for an integrated, resource-sharing crowdsourcing environment for real-world solutions [20].

Modern society depends on sophisticated hardware and software systems, many of which are safety-critical, such as the health-monitoring software that medical organizations use to detect, monitor, and aid elderly people and patients. Innovations in mobile computing may change how health interventions are delivered. Mobile health (mHealth) interventions may be more effective than occasional in-clinic consultations at overcoming limitations such as scarce clinician time, poor patient engagement, and the challenge of delivering the appropriate treatment at the right time. Technology that collects, analyzes, and configures patient data across devices is revolutionizing healthcare delivery. Owing to advances in ubiquitous computing, intelligent and interconnected healthcare will deliver benefits that are safe, more user-centered, economical, effective, and impartial.

Crowdsourcing is used increasingly often because it offers the opportunity to mobilize a wide and heterogeneous group through improved communication and collaboration. Crowdsourced R&D has been successful in research. Owing to “crowdsourcing’s elasticity and mobility”, it is well suited to research tasks such as data processing, surveying, monitoring, and evaluation. By including the public as innovation partners, projects may improve in quality, cost, and speed while also generating solutions to important research problems [21].

The key contributions of this article are as follows:

Analysis of the appropriate characteristics of social crowds utilized for effective software crowdsourcing.

Analysis of people’s reasons for participating in software development tasks.

Development of a decision support system for evaluating primary participation reasons by assessing various crowds using Fuzzy AHP and TOPSIS techniques.

The paper is organized into five sections.

Section 2 describes the existing literature on crowdsourcing,

Section 3 describes the overall methodology of our study,

Section 4, “Experimental setup and results”, provides the description and evaluation of the proposed method, and

Section 5, “Conclusions”, concludes the paper and summarizes the objectives achieved in this study.

2. Literature Review

The Internet has made it possible for organizations to attract a significant number of individuals. Cell phones, computers, tablets, and smart gadgets all allow requester organizations to engage people to carry out various crowdsourcing tasks (health monitoring, question answering, problem solving, recommendations, etc.). Crowds are recruited from around the world to undertake various jobs. Because the tasks are completed by groups of individuals, quality outcomes may be obtained in less time and at lower cost [22]. Organizations issue an open call, with guidelines, for workers who meet specific standards; if a competent worker replies and can carry out the assignment within the specified timeline, the organization sends that individual a confirmation [23]. Crowds consist of skilled people who possess some expertise [7,24]. Organizations recruit people who can provide numerous and diverse suggestions for fixing technical problems [25]. Companies may also pre-assess an individual’s capacity to engage in complex activities [26].

Participants are selected based on their backgrounds. Demographic filtering is used to pick people from relevant countries or localities, and a worker who is ready to begin a task must supply demographic information. The crowd is drawn from a variety of sources and backgrounds. Not every crowd is suitable for every activity, and different activities require different levels of expertise, domain knowledge, and so on. Unsuitable or inaccurate workers can reduce job productivity and increase recruitment costs, so choosing the right worker is difficult. Workers are recruited with three goals in mind: maximizing the coverage of the test specification, maximizing the recruited workers’ competence in bug identification, and minimizing costs. Based on their enrollment, workers are classified into five belts (red, green, yellow, blue, and grey) that indicate their skill levels. Worker reliability is evaluated through qualification and completion of the assigned work [27].

Mobile crowd-sensing (MCS) is a technology that enables a group of people to interact and gather data from devices with sensing and processing capabilities in order to measure and visualize phenomena of common interest. Using smartphones, data can be gathered in everyday life and easily compared with those of other users in the crowd, especially when environmental factors or sensor data are taken into account. In the context of chronic diseases, mobile technology can particularly help patients cope properly with their individual health situations. Worker selection in MCS is a difficult problem that affects sensing efficiency and quality, and many task-assignment methodologies, each with its own screening standards, are used to choose appropriate personnel for a sensing task. Participants in the task-scheduling system use sensors to gather or evaluate details about their actual subject [11].

Many software firms are knowledge-intensive; therefore, knowledge management is critical. The design and implementation of software systems require information that is frequently spread across many personnel with diverse areas of experience and capabilities [28]. Software engineering increasingly takes place in companies and communities involving large numbers of individuals rather than in small, isolated groups of developers [29]. The popularity of social media has created new methods of distributing knowledge over websites. We live in an environment dominated by social media and user-generated information. Many social relationships, from leisure to learning and employment, result from people engaging actively with one another. It is hardly surprising that social sites have changed how knowledge spreads [30].

Social media is essential for organizations of all sizes because people must engage with one another to perform tasks. As technology develops, it becomes more user-friendly and incorporates a variety of features, such as operating systems built around social factors, software applications primarily geared toward communication, and engagement through social networks, all of which are becoming increasingly significant. Social media have altered how individuals engage with state policies and share their perspectives on them. Organizations using social media achieve four key objectives: engaging with citizens, promoting citizen involvement, advancing open government, and analyzing and monitoring public sentiment and activity [31].

Human–computer interaction (HCI) research and practice are based on the principle of human-centered design, whose goal is to develop technologies geared toward the requirements and activities of their users. This design philosophy ensures that user needs are considered throughout the whole development process of a technology, from gathering the required information to its final stages. Crowdsourcing uses gamification-based strategies to solve larger problems. Gamification is the practice of adding features that provide a game-like representation. It seeks to improve overall problem solving by eliciting users’ intrinsic drives through systems that resemble game interfaces. The principles and characteristics of games can be used to attract and engage users, lower anticipated barriers to system use, such as low motivation and low acceptance rates, and turn game-based activities into successful outcomes [32].

2.1. Stack Overflow

Social media has become an essential source of information in a diverse range of fields as a means of gaining broader knowledge of information processes and community groups [33]. To aid application development, the Internet provides a plethora of built-in libraries and tools, and developers typically use pre-existing mobile APIs to save time and money. An Application Programming Interface (API) is a set of elements that provides a mechanism for software-to-software interaction. Stack Overflow (SO) is a renowned question-and-answer platform for software developers, engineers, and beginners. Stack Overflow provides a distributed skill set that enables users worldwide to improve and broaden their coding knowledge and communication skills [34]. SO enables a user (i.e., the problem presenter or question submitter) to initiate a conversation (a question), provide answers, take part in discussions, rate questions, and accept responses they find helpful [35].

Choosing the right programming language is usually an important phase in the software development process. This decision is naturally driven by technical factors concerning the language’s strengths and weaknesses in addressing the problem at hand. The recent emergence of social networks concerned with technical difficulties has drawn professionals into conversations about programming languages in which rigorous technical arguments often compete with strongly articulated opinions [36]. Practitioners and scholars in software engineering continually focus on the challenges associated with mobile software development [37]. When coworkers come from various cultures, tackling cultural barriers in software engineering is essential for ensuring appropriate group performance, and the need to manage such difficulties has grown along with these activities in software development [38].

Some well-known platforms that assign workers to requested tasks are described in the following subsections. These platforms and their working procedures are also briefly surveyed in [39].

2.2. Amazon Mechanical Turk

Amazon Mechanical Turk (MTurk) is a crowdsourced recruiting platform that enables requesters to offer online projects, in exchange for completion rewards, to online workers who have the required skills, without the constraints of permanent employment [40,41]. Requesters seeking access to a large pool of individuals face minimal entry requirements for having human intelligence tasks (HITs) executed. The tasks are posted by requesters and completed by individuals, and HITs vary in complexity from recognizing an image to performing domain-specific tasks such as interpreting source code [42]. Requesters may specify qualifying conditions, such as gender, age, and geography requirements [43]. MTurk is thus a platform for jobs that require human intelligence: by browsing the displayed HITs, workers can examine specific tasks and decide which ones to complete [44]. Workers sign up on the platform and, according to their credentials, compete for micro-projects (HITs) advertised by requester organizations that need these tasks accomplished. The MTurk workforce comes from various parts of the globe [45].

2.3. Upwork

Upwork is a platform that connects people offering work with potential workers. Organizations may publish a variety of jobs on Upwork for potential workers to bid on. Both job seekers and job posters create accounts on Upwork in order to browse or post jobs and to use the services and functionality that Upwork provides. Jobs on Upwork often involve client engagements lasting from hours to weeks and require more dynamic interaction than those advertised on micro-tasking websites such as Amazon Mechanical Turk. Jobs advertised on Upwork generally demand a higher degree of implicit understanding and, as a result, a strategy for planning and coordinating activities between providers and users that goes beyond simple automated monitoring [46]. Upwork enables skilled workers to perform knowledge work ranging from web design to strategic decision-making [47].

2.4. Freelancer

Freelancers are self-employed individuals who have a short-term, task-based relationship with employers and hence are not part of the firm’s workforce. Their relationship with the firm lasts only until the assigned task is satisfactorily finished; therefore, they do not have the long-term obligations to the corporation that full-time workers have. In exchange for payment, they are obligated to finish the assignment to high quality standards by the agreed-upon date. While carrying out an assigned activity, a freelancer may take on additional freelance projects with other requesters under different contractual terms; in other words, freelancers may work on many projects concurrently. Unlike full-time employees, freelancers are not bound by restrictive, long-term contracts [48]. Engaging on these websites enables freelancers to pool their collective intellect to execute an assignment creatively and cost-effectively. The lower processing cost makes freelancers a desirable option for completing tasks efficiently and effectively [49]. FaaT (Freelance as a Team) is an approach that lets professionals optimize their internal procedures to match the requirements and capabilities of a single programmer [50].

2.5. TopCoder

Many prominent application developers use online communities to enhance the products or solutions they deliver. TopCoder is a digital platform of over 430,000 creative professionals who compete to build and improve software, websites, and mobile applications for subscribers. TopCoder was a pioneer of technology innovation and allows developers and producers from across the world to choose and solve the problems to which they wish to contribute. TopCoder offers functionality and technology to coordinate and ease the development and implementation of solutions [51]. Every TopCoder application passes through the following phases: application design, architecture, development, assembly, and delivery.

Each phase is advertised as a contest on the TopCoder site. Registered platform users can enter any contest and submit solutions. The winning submission of the preceding phase is used as input for the following phase. Business needs are gathered and specified during the application specification phase. Next, in the architecture phase, each application is divided into a collection of components. Each component then passes through the design and development phases. The components are then integrated during the assembly phase to build the application, which is finally deployed and delivered to the client corporation [52].
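The hand-off between phases can be illustrated with a small Python model in which each phase is a contest and the winning entry becomes the next phase’s input. This is a hypothetical sketch, not TopCoder’s implementation or API: `run_contest_pipeline`, the phase names, the submission callables, and the toy `score` judge are all assumptions made for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical model: a "submission" turns the previous phase's winning
# artifact into a new artifact, and `score` plays the role of the judge.
Submission = Callable[[str], str]


def run_contest_pipeline(
    phases: List[str],
    submissions: Dict[str, List[Submission]],
    score: Callable[[str], float],
    initial_input: str,
) -> str:
    """Run each phase as a contest; its best-scoring entry feeds the next phase."""
    artifact = initial_input
    for phase in phases:
        entries = [submit(artifact) for submit in submissions.get(phase, [])]
        if not entries:
            raise ValueError(f"no submissions for phase {phase!r}")
        artifact = max(entries, key=score)  # winning submission of this phase
    return artifact


if __name__ == "__main__":
    phases = ["architecture", "development"]
    submissions = {
        "architecture": [lambda x: x + "|arch-A", lambda x: x + "|arch-BB"],
        "development": [lambda x: x + "|dev"],
    }
    # Toy judge: longer artifacts score higher.
    print(run_contest_pipeline(phases, submissions, len, "spec"))  # spec|arch-BB|dev
```

A phase with no entries raises an error rather than silently passing the previous artifact forward, mirroring the rule that each phase needs a successful answer before the next can start.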

Overall, more and more people from social networks are registering on crowdsourcing platforms to participate in different tasks.

4. Experimental Setup and Results

To cope with ambiguity and imprecision in the decision-making process, fuzzy set theory, one of the main AI techniques for handling uncertainty, was applied to evaluate the reasons for participation. This section describes the evaluation of our proposed method.

4.1. Fuzzy AHP Approach for Finding Criterion Weightage

The criterion weights were calculated using the fuzzy scale presented in Table 1.

Our suggested strategy uses the fuzzy AHP approach to assess a crowd based on its participation motives. This method reliably assesses the selected qualities and determines their percentage relevance. Seven engagement criteria were considered in the proposed study: competency, knowledge-sharing, socialization, ranking, employment, rewards, and source of inspiration. The steps of the approach, in the order in which they are computed, are as follows.

Step 1. Construct an n × n pairwise decision matrix.

The n × n decision matrix is created by comparing the criteria pairwise and assigning each comparison a value from 1 to 10, as shown in Table 2:

$$A = [a_{ij}]_{n \times n}, \qquad a_{ii} = 1, \quad a_{ji} = \frac{1}{a_{ij}} \tag{1}$$
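A minimal Python sketch of Step 1, assuming the matrix is reciprocal (a_ii = 1 and a_ji = 1/a_ij), so only the judgements above the diagonal need to be entered. The function name `pairwise_matrix` and the example judgements are hypothetical and are not the values in Table 2; `Fraction` keeps the reciprocals exact.

```python
from fractions import Fraction
from typing import Dict, List, Tuple


def pairwise_matrix(n: int, upper: Dict[Tuple[int, int], int]) -> List[List[Fraction]]:
    """Build an n x n reciprocal comparison matrix.

    `upper` holds the judgement a_ij for every pair i < j; the diagonal is 1
    and each lower-triangle entry is the reciprocal a_ji = 1 / a_ij.
    """
    expected = {(i, j) for i in range(n) for j in range(i + 1, n)}
    if set(upper) != expected:
        raise ValueError("provide exactly one judgement for every pair i < j")
    A = [[Fraction(1) for _ in range(n)] for _ in range(n)]
    for (i, j), value in upper.items():
        A[i][j] = Fraction(value)
        A[j][i] = 1 / Fraction(value)  # reciprocal entry below the diagonal
    return A


# Hypothetical judgements for three criteria (not Table 2).
A = pairwise_matrix(3, {(0, 1): 3, (0, 2): 5, (1, 2): 2})
```

Entering only the upper triangle guarantees that the matrix is consistent with the reciprocal rule by construction.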

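Step 2. Replace each crisp value with the corresponding fuzzy number. For a triangular fuzzy number $\tilde{a} = (l, m, u)$, the reciprocal is

$$\tilde{a}^{-1} = (l, m, u)^{-1} = \left(\frac{1}{u}, \frac{1}{m}, \frac{1}{l}\right) \tag{2}$$

where l is the lower number, m is the middle number, and u is the upper number.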

Each crisp value is replaced by its fuzzy number, using Equation (2) for the reciprocal entries, and the resulting fuzzified matrix is shown in Table 3.
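The fuzzification in Step 2 can be sketched as follows. Because the triangular fuzzy numbers of Table 1 are not reproduced here, `to_tfn` uses a placeholder mapping (1 to (1, 1, 1) and k to (k-1, k, k+1)) that is an assumption, not the paper’s scale; substitute the Table 1 values to reproduce Table 3. Entries below 1 are treated as reciprocals and inverted with (l, m, u)^-1 = (1/u, 1/m, 1/l).

```python
from fractions import Fraction
from typing import List, Tuple

TFN = Tuple[Fraction, Fraction, Fraction]  # (l, m, u)


def to_tfn(k: Fraction) -> TFN:
    """PLACEHOLDER scale (not the paper's Table 1): 1 -> (1,1,1), k -> (k-1, k, k+1)."""
    if k == 1:
        return (k, k, k)
    return (k - 1, k, k + 1)


def reciprocal(a: TFN) -> TFN:
    """Equation (2): (l, m, u)^-1 = (1/u, 1/m, 1/l)."""
    l, m, u = a
    return (1 / u, 1 / m, 1 / l)


def fuzzify(crisp: List[List[Fraction]]) -> List[List[TFN]]:
    """Map each crisp entry to a TFN; entries below 1 are treated as reciprocals."""
    fuzzy = []
    for row in crisp:
        fuzzy_row = []
        for value in row:
            value = Fraction(value)
            if value >= 1:
                fuzzy_row.append(to_tfn(value))
            else:
                # e.g. 1/3 -> reciprocal of to_tfn(3) = (1/4, 1/3, 1/2)
                fuzzy_row.append(reciprocal(to_tfn(1 / value)))
        fuzzy.append(fuzzy_row)
    return fuzzy
```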

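Step 3. Compute the fuzzy geometric mean value (FGMV) of each criterion using the following equation:

$$\tilde{r}_i = \left(\prod_{j=1}^{n} \tilde{a}_{ij}\right)^{1/n} = \left(\Big(\prod_{j=1}^{n} l_{ij}\Big)^{1/n}, \Big(\prod_{j=1}^{n} m_{ij}\Big)^{1/n}, \Big(\prod_{j=1}^{n} u_{ij}\Big)^{1/n}\right) \tag{3}$$

where $\tilde{a}_{ij} = (l_{ij}, m_{ij}, u_{ij})$ is the fuzzified entry in row i and column j, and n is the number of criteria.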

The FGMV values are derived using Equation (3), and the results are shown in Table 4.
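Step 3 amounts to a component-wise geometric mean over each row of the fuzzified matrix. The sketch below assumes Buckley’s geometric-mean form, r_i = ((∏ l_ij)^(1/n), (∏ m_ij)^(1/n), (∏ u_ij)^(1/n)); the function names and the two-criterion example are hypothetical, and `math.prod` requires Python 3.8 or later.

```python
import math
from typing import List, Sequence, Tuple

TFN = Tuple[float, float, float]  # (l, m, u)


def fuzzy_geometric_mean(row: Sequence[TFN]) -> TFN:
    """Equation (3): component-wise geometric mean of one row of TFNs."""
    n = len(row)
    l = math.prod(t[0] for t in row) ** (1 / n)
    m = math.prod(t[1] for t in row) ** (1 / n)
    u = math.prod(t[2] for t in row) ** (1 / n)
    return (l, m, u)


def fgmv(fuzzy_matrix: List[List[TFN]]) -> List[TFN]:
    """FGMV for every criterion (one value per row of the fuzzified matrix)."""
    return [fuzzy_geometric_mean(row) for row in fuzzy_matrix]


# Hypothetical 2 x 2 fuzzified matrix (not Table 3).
r = fgmv([[(1, 1, 1), (2, 3, 4)], [(0.25, 1 / 3, 0.5), (1, 1, 1)]])
```

Because the geometric mean is taken separately on the lower, middle, and upper components, the ordering l ≤ m ≤ u of each input carries over to the result.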

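Step 4. The fuzzy weight $\tilde{w}_i$ of each criterion is computed with the following formula, where $\tilde{r}_i = (l_i, m_i, u_i)$ is the FGMV of criterion i:

$$\tilde{w}_i = \tilde{r}_i \otimes \left(\tilde{r}_1 \oplus \tilde{r}_2 \oplus \cdots \oplus \tilde{r}_n\right)^{-1} = \left(\frac{l_i}{\sum_{k=1}^{n} u_k}, \frac{m_i}{\sum_{k=1}^{n} m_k}, \frac{u_i}{\sum_{k=1}^{n} l_k}\right) \tag{4}$$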
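A sketch of Step 4 under the usual triangular-fuzzy-number arithmetic: the inverse of the summed FGMV (Σl, Σm, Σu) is (1/Σu, 1/Σm, 1/Σl), so w_i = (l_i/Σu, m_i/Σm, u_i/Σl). The function name and the example FGMV values are hypothetical, not those of Table 4.

```python
from typing import List, Tuple

TFN = Tuple[float, float, float]  # (l, m, u)


def fuzzy_weights(fgmv: List[TFN]) -> List[TFN]:
    """w_i = r_i (x) (r_1 (+) ... (+) r_n)^-1 for triangular fuzzy numbers."""
    sum_l = sum(r[0] for r in fgmv)
    sum_m = sum(r[1] for r in fgmv)
    sum_u = sum(r[2] for r in fgmv)
    # The inverse of (sum_l, sum_m, sum_u) is (1/sum_u, 1/sum_m, 1/sum_l),
    # so the lower bound is divided by sum_u and the upper bound by sum_l.
    return [(l / sum_u, m / sum_m, u / sum_l) for l, m, u in fgmv]


# Hypothetical FGMVs for two criteria (not Table 4).
w = fuzzy_weights([(1.0, 1.0, 1.0), (1.0, 2.0, 3.0)])
# w[0] == (0.25, 1/3, 0.5); w[1] == (0.25, 2/3, 1.5)
```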

Step 5. Defuzzification: the average weight $M_i$ of each criterion is obtained from its fuzzy weight $\tilde{w}_i = (w_i^{l}, w_i^{m}, w_i^{u})$ using the center of area (COA) method:

$$M_i = \frac{w_i^{l} + w_i^{m} + w_i^{u}}{3} \tag{5}$$

Step 6. If the sum of the average weights does not equal one, convert them to normalized weights using the formula below:

$$N_i = \frac{M_i}{\sum_{k=1}^{n} M_k} \tag{6}$$

Equations (4)–(6) are applied once the FGMV values have been determined: Formula (4) gives the fuzzy weights, Formula (5) the average weights, and Formula (6) the normalized weights of the criteria.
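Steps 5 and 6 can be combined into a single helper. The sketch assumes the center-of-area defuzzification M_i = (l + m + u)/3, a common choice in fuzzy AHP studies; treat this form as an assumption rather than a quotation of the original formula. Function names and inputs are illustrative only, not the values behind Table 5.

```python
from typing import List, Sequence, Tuple

TFN = Tuple[float, float, float]  # (l, m, u)


def defuzzify_coa(w: TFN) -> float:
    """Equation (5), assumed center-of-area form: M = (l + m + u) / 3."""
    l, m, u = w
    return (l + m + u) / 3


def normalize(values: Sequence[float]) -> List[float]:
    """Equation (6): N_i = M_i / sum(M); leaves values unchanged if they already sum to 1."""
    total = sum(values)
    return [v / total for v in values]


def crisp_weights(fuzzy_w: Sequence[TFN]) -> List[float]:
    """Defuzzify each fuzzy weight, then normalize so the weights sum to 1."""
    return normalize([defuzzify_coa(w) for w in fuzzy_w])


# Hypothetical fuzzy weights for two criteria.
N = crisp_weights([(0.25, 1 / 3, 0.5), (0.25, 2 / 3, 1.5)])
```

With these example fuzzy weights, the average weights are 13/36 and 29/36, which normalize to 13/42 and 29/42.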

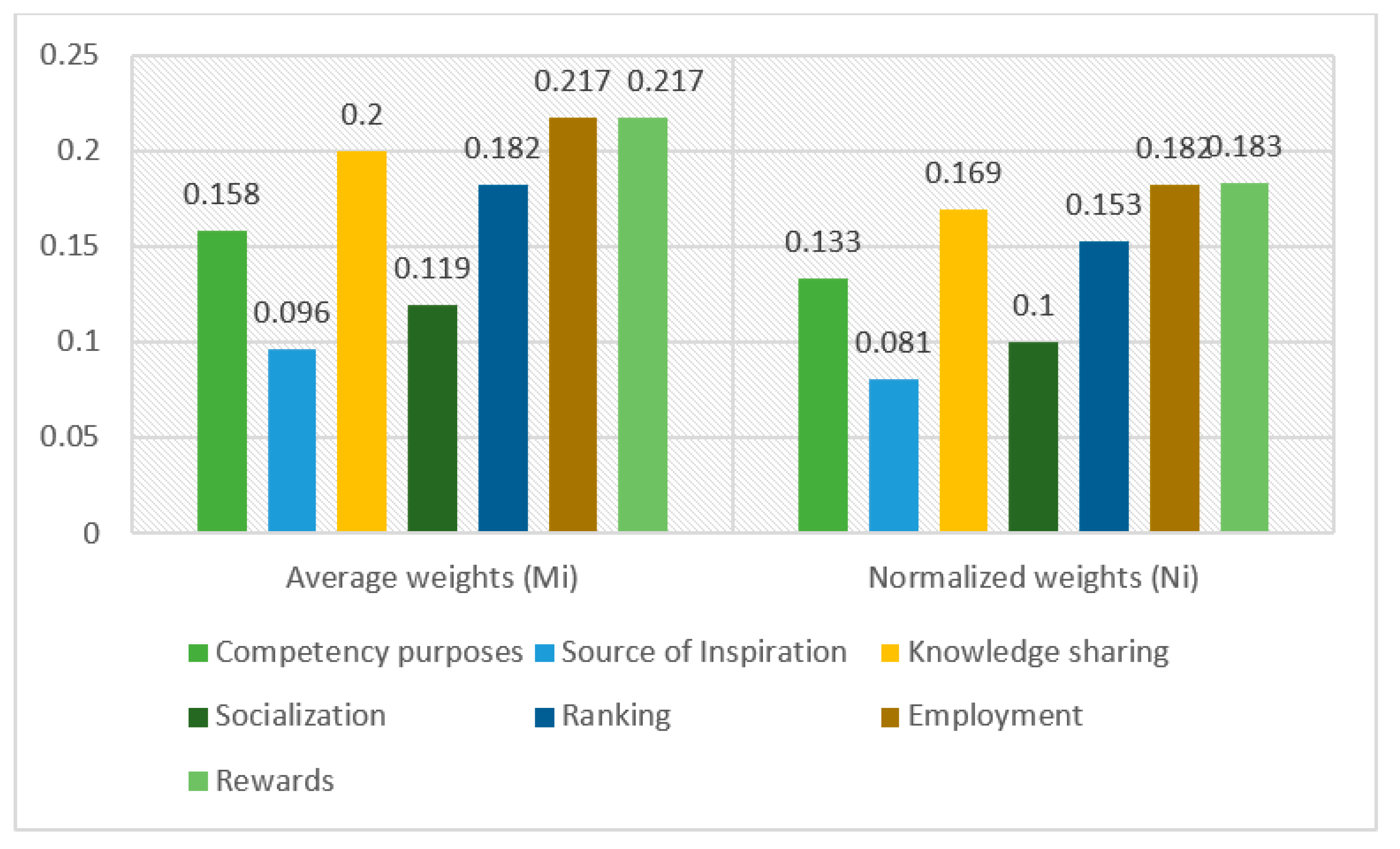

Table 5 displays the general results.

Figure 2 shows the overall weights of the criteria (participation reasons). Rewards and employment are the crowds’ primary motives for task accomplishment, followed by knowledge-sharing, ranking, competency, socialization, and source of inspiration.

4.2. TOPSIS Technique for Evaluating and Ranking Alternatives

To address MCDM problems, Hwang and Yoon developed TOPSIS (Technique for Order of Preference by Similarity to Ideal Solution). According to the primary premise of the technique, the chosen alternative should be the one closest to the positive ideal solution and farthest from the negative ideal solution. In conventional MCDM techniques, the ratings and weights of the criteria are precisely known, and the traditional TOPSIS approach likewise uses real numbers for the criteria weights and the ratings of the alternatives. The TOPSIS technique has been used successfully in many other fields. Here, it is used to evaluate the alternatives and compute their relative performance scores. Five alternatives are considered in this study: crowd-1, crowd-2, crowd-3, crowd-4, and crowd-5. The steps of the approach are as follows:

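Step 1. Construct the decision matrix.

Develop the decision matrix using Equation (7):

$$D = [D_{ij}]_{m \times n} = \begin{bmatrix} D_{11} & D_{12} & \cdots & D_{1n} \\ D_{21} & D_{22} & \cdots & D_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ D_{m1} & D_{m2} & \cdots & D_{mn} \end{bmatrix} \tag{7}$$

Here, i = 1, 2, …, m and j = 1, 2, …, n. In matrix (7), $D_{ij}$ is the value of the ith alternative on the jth criterion.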

Using the crowds and criteria listed in Table 6 as a foundation, the decision matrix is built for the five crowds, with values between 1 and 10.

Step 2. Construct the normalized decision matrix (NDM).

Compute the normalized matrix using Equation (8):

$$R_{ij} = \frac{D_{ij}}{\sqrt{\sum_{k=1}^{m} D_{kj}^{2}}} \tag{8}$$

Equation (8) is used to normalize the decision matrix given in Table 6; the results are shown in Table 7.
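A minimal sketch of TOPSIS Step 2, assuming the vector normalization of classic TOPSIS, R_ij = D_ij / √(Σ_k D_kj²), applied column by column. The function name and the 2 × 2 example are hypothetical and unrelated to Table 6.

```python
import math
from typing import List


def normalize_matrix(D: List[List[float]]) -> List[List[float]]:
    """Vector normalization: R_ij = D_ij / sqrt(sum_k D_kj^2), column by column."""
    m, n = len(D), len(D[0])
    norms = [math.sqrt(sum(D[k][j] ** 2 for k in range(m))) for j in range(n)]
    return [[D[i][j] / norms[j] for j in range(n)] for i in range(m)]


# Hypothetical ratings: rows are alternatives, columns are criteria.
R = normalize_matrix([[3, 4], [4, 3]])  # [[0.6, 0.8], [0.8, 0.6]]
```

After this step every column has unit Euclidean length, so criteria rated on different numeric ranges become comparable before weighting.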

Step 3. Calculate the weighted normalized decision matrix (weighted NDM) using Equation (9):

$$V_{ij} = w_j \times R_{ij} \tag{9}$$

where $w_j$ is the weight of the jth criterion. The normalized matrix in Table 7 was used to create the weighted NDM via Equation (9); the weighted NDM, together with the criteria weights, is shown in Table 8.
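Step 3 scales each column of the normalized matrix by its criterion weight. In this hypothetical sketch, `weights` stands for the normalized fuzzy AHP weights, which is an assumption about how the two stages are linked; the numbers are illustrative only.

```python
from typing import List, Sequence


def weighted_matrix(R: List[List[float]], weights: Sequence[float]) -> List[List[float]]:
    """Equation (9): V_ij = w_j * R_ij, scaling column j by the weight of criterion j."""
    if any(len(row) != len(weights) for row in R):
        raise ValueError("each row needs exactly one weight per criterion")
    return [[w * r for w, r in zip(weights, row)] for row in R]


# Hypothetical normalized matrix and criterion weights.
V = weighted_matrix([[0.6, 0.8], [0.8, 0.6]], [0.25, 0.75])
# V is approximately [[0.15, 0.6], [0.2, 0.45]]
```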

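Step 4. Calculate the positive and negative ideal solutions.

The positive and negative ideal solutions are determined using Formulas (10) and (11):

$$I_j^{+} = \begin{cases} \max_i V_{ij} & \text{if } j \text{ is a beneficial criterion} \\ \min_i V_{ij} & \text{if } j \text{ is a cost criterion} \end{cases} \tag{10}$$

$$I_j^{-} = \begin{cases} \min_i V_{ij} & \text{if } j \text{ is a beneficial criterion} \\ \max_i V_{ij} & \text{if } j \text{ is a cost criterion} \end{cases} \tag{11}$$

The ideal solutions $I_j^{+}$ and $I_j^{-}$ were determined from the weighted normalized matrix using Equations (10) and (11), and the results are shown in Table 9. The positive ideal solution $I_j^{+}$ maximizes the beneficial criteria and minimizes the cost (non-beneficial) criteria, whereas the negative ideal solution $I_j^{-}$ takes the highest values of the cost criteria and the lowest values of the beneficial criteria. Thus, $I_j^{+}$ represents the best value of each crowd-motivation reason, and $I_j^{-}$ the worst.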
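Step 4 can be sketched as a column-wise max/min, with a per-criterion flag marking beneficial versus cost criteria. The function name, flags, and values in the example are hypothetical; treating every motivation criterion as beneficial is an assumption, not something this section states.

```python
from typing import List, Sequence, Tuple


def ideal_solutions(
    V: List[List[float]], beneficial: Sequence[bool]
) -> Tuple[List[float], List[float]]:
    """Equations (10) and (11): column-wise best (I+) and worst (I-) values."""
    columns = list(zip(*V))  # one tuple of values per criterion
    if len(columns) != len(beneficial):
        raise ValueError("one beneficial/cost flag per criterion is required")
    positive = [max(c) if b else min(c) for c, b in zip(columns, beneficial)]
    negative = [min(c) if b else max(c) for c, b in zip(columns, beneficial)]
    return positive, negative


# Hypothetical weighted matrix; both criteria treated as beneficial.
pos, neg = ideal_solutions([[0.15, 0.6], [0.2, 0.45]], [True, True])
# pos == [0.2, 0.6], neg == [0.15, 0.45]
```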

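Step 5. Calculate the ideal and non-ideal separation measures.

The separation from the ideal solution ($S_i^{+}$) and from the non-ideal solution ($S_i^{-}$) is determined using Equations (12) and (13):

$$S_i^{+} = \sqrt{\sum_{j=1}^{n} \left(V_{ij} - I_j^{+}\right)^2} \tag{12}$$

$$S_i^{-} = \sqrt{\sum_{j=1}^{n} \left(V_{ij} - I_j^{-}\right)^2} \tag{13}$$

Equations (12) and (13) were used to compute $S_i^{+}$ and $S_i^{-}$, respectively, for ranking the alternatives, and the overall results are shown in Table 10.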
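The separation measures in Step 5 are Euclidean distances between each alternative’s weighted row and the two ideal vectors. The sketch below uses `math.dist` (Python 3.8+); the function name and inputs are hypothetical and are not the values of Table 10.

```python
import math
from typing import List, Sequence, Tuple


def separations(
    V: List[List[float]], positive: Sequence[float], negative: Sequence[float]
) -> Tuple[List[float], List[float]]:
    """Equations (12) and (13): Euclidean distance of each alternative to I+ and I-."""
    s_plus = [math.dist(row, positive) for row in V]
    s_minus = [math.dist(row, negative) for row in V]
    return s_plus, s_minus


# Hypothetical weighted matrix and ideal solutions.
s_plus, s_minus = separations([[0.15, 0.6], [0.2, 0.45]], [0.2, 0.6], [0.15, 0.45])
# s_plus is approximately [0.05, 0.15]; s_minus is approximately [0.15, 0.05]
```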

Step 6. Calculate the performance score ($P_i$) and rank the alternatives.

$P_i$ is determined using Equation (14):

$$P_i = \frac{S_i^{-}}{S_i^{+} + S_i^{-}} \tag{14}$$

Table 11 shows the ranking of the alternatives after the ideal and non-ideal separation measures were computed and $P_i$ was obtained via Equation (14).
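Finally, Step 6 turns the separations into relative-closeness scores, P_i = S_i⁻ / (S_i⁺ + S_i⁻), and sorts the alternatives by them. The helper names and the two-crowd example are hypothetical; they do not reproduce Table 11 or the reported score of crowd-4.

```python
from typing import List, Sequence


def performance_scores(s_plus: Sequence[float], s_minus: Sequence[float]) -> List[float]:
    """Equation (14): P_i = S_i^- / (S_i^+ + S_i^-); values lie in [0, 1]."""
    # The denominator is zero only when I+ equals I-, i.e. every alternative
    # has identical weighted ratings on every criterion.
    return [sm / (sp + sm) for sp, sm in zip(s_plus, s_minus)]


def rank(names: Sequence[str], scores: Sequence[float]) -> List[str]:
    """Order alternatives from best (highest P_i) to worst."""
    order = sorted(range(len(names)), key=lambda i: scores[i], reverse=True)
    return [names[i] for i in order]


# Hypothetical separations for two crowds.
P = performance_scores([0.05, 0.15], [0.15, 0.05])  # approximately [0.75, 0.25]
ranking = rank(["crowd-1", "crowd-2"], P)  # ["crowd-1", "crowd-2"]
```

A score close to 1 means the alternative lies near the positive ideal and far from the negative ideal, which is why the highest-scoring crowd is ranked first.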

Figure 3 depicts the performance scores and ranking of the evaluated crowds (alternatives).

From these outcomes, we conclude that crowd-4 was the best alternative, with the highest performance score (0.676); it was therefore ranked first among the available crowds.

5. Conclusions

Crowdsourcing is a popular strategy for accomplishing a variety of tasks. Several parties and their interactions are involved in the process: requesters, who manage, execute, and supervise crowdsourcing initiatives and may post task requests; the crowd (individuals), consisting of virtual workers who participate in outsourced activities or events; and the platform, which serves as the channel of interaction between the crowd and the crowdsourcers. The people on these platforms are connected through social media and communication services such as Facebook, WhatsApp, Instagram, Twitter, Y-mail, and Gmail. Through crowdsourcing, a requester can reach a wide audience with a variety of skills and experiences to help with work that would be challenging to do without many people. In software engineering, crowdsourcing has been used to address coding, validation, and architectural problems. A range of incentive schemes is used to encourage public involvement, just as such schemes are used to motivate internal working groups in businesses: workers receive various types of points, cash awards, and other rewards based on their performance. Our study focused on achieving three goals related to the crowdsourcing paradigm:

- (1) Analysis of the appropriate characteristics of social crowds used for effective software crowdsourcing.
- (2) Analysis of people’s reasons for participating in software development tasks.
- (3) Development of a decision support system for evaluating primary participation reasons by assessing various crowds using Fuzzy AHP and TOPSIS techniques.

In this study, a decision support system was developed to analyze the reasons for crowd participation in software development. Rewards and employment were found to be the crowds’ strongest motives for task accomplishment, followed by knowledge-sharing, ranking, competency, socialization, and source of inspiration. Because this study evaluated crowd drives and motivations for task accomplishment, it can help crowdsourcing organizations improve their operations and productivity by providing incentives that match crowd needs and expectations.