Towards Machine Learning-Based FPGA Backend Flow: Challenges and Opportunities

Abstract

1. Introduction

1. The increasing capacity of FPGAs, which can contain 10 million logic cells and 40 billion transistors [3];

2. The increased complexity of the target applications, e.g., autonomous vehicles and medical surgery equipment with higher computation requirements, which adds further implementation delays to the CAD flow.

- We review the FPGA CAD flow steps, with special emphasis on the machine learning algorithms employed to achieve the desired outcome in each backend flow step: HLS, logic synthesis, placement, and routing;

- Based on our review of the limited work on deploying ML algorithms in the CAD workflow steps, we analyze six implementation challenges that, if addressed, have the potential to overcome the bottlenecks associated with such deployment;

- We discuss emerging cloud computing trends in the CAD workflow, an active research area that could assist in the pursuit of the identified research challenges.

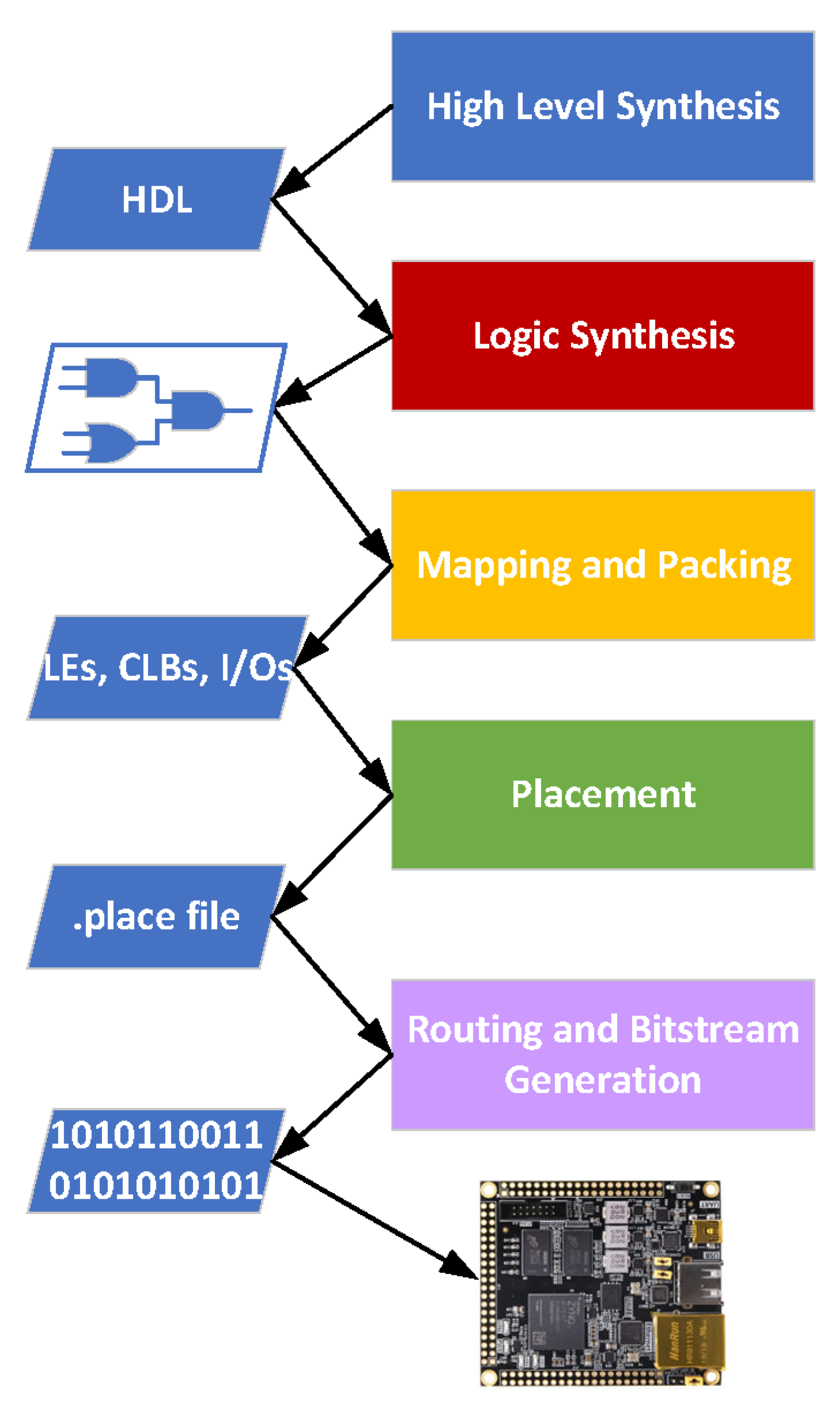

2. Conventional CAD Flow for FPGAs

2.1. High-Level Synthesis

2.2. Logic Synthesis

2.3. Placement

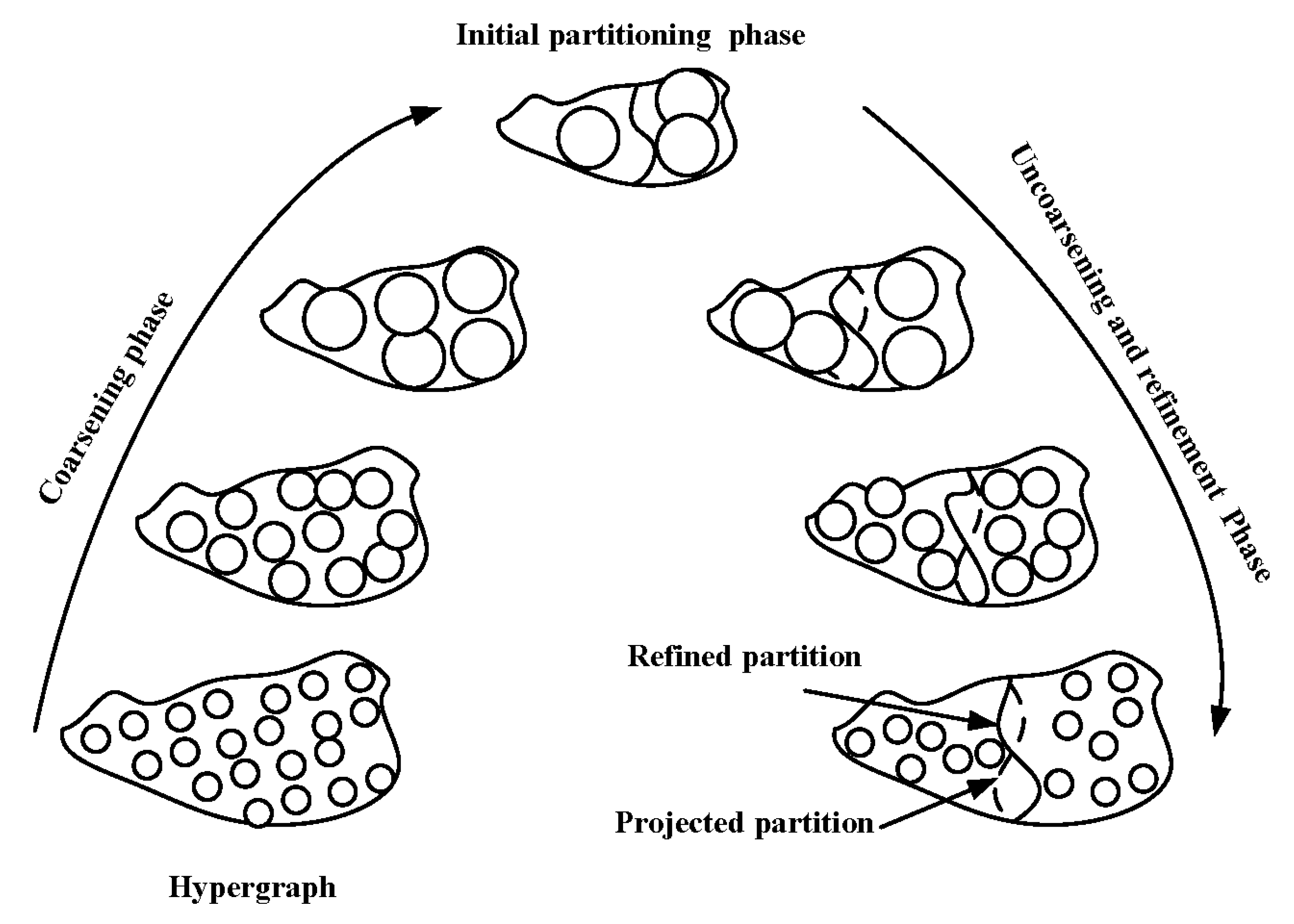

2.3.1. Partitioning-Based Approach

1. Clustering, where a hierarchy of clustered hypergraphs is formed by combining hypergraph vertices based on connectivity;

2. Top-level partitioning, where the smallest hypergraph is partitioned using a fast initial solution generator and improved repeatedly by heuristics such as the FM algorithm [29], and the process is repeated for the next smallest hypergraph;

3. Refinement, where solutions are improved iteratively as they are projected from each smaller level to the next.
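
The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of any placer cited here. The function names (`coarsen`, `fm_pass`, `multilevel_bipartition`), the pairwise shared-net clustering rule, the alternating initial partition, and the balance limit are all hypothetical choices. The refinement is a simplified FM-style pass that recomputes the cut for every candidate move rather than maintaining gain buckets as the FM algorithm [29] does.

```python
from collections import defaultdict
from itertools import combinations


def cut_size(nets, side):
    """Count nets (hyperedges) whose vertices lie on both sides of the cut."""
    return sum(1 for net in nets if len({side[v] for v in net}) > 1)


def coarsen(nets, n):
    """Clustering: greedily pair up the vertices that share the most nets."""
    shared = defaultdict(int)
    for net in nets:
        for pair in combinations(sorted(set(net)), 2):
            shared[pair] += 1
    cluster_of, next_id = {}, 0
    for (u, v), _ in sorted(shared.items(), key=lambda kv: (-kv[1], kv[0])):
        if u not in cluster_of and v not in cluster_of:
            cluster_of[u] = cluster_of[v] = next_id
            next_id += 1
    for v in range(n):  # unmatched vertices become singleton clusters
        if v not in cluster_of:
            cluster_of[v] = next_id
            next_id += 1
    coarse_nets = []
    for net in nets:
        clusters = sorted({cluster_of[v] for v in net})
        if len(clusters) > 1:  # nets internal to one cluster disappear
            coarse_nets.append(clusters)
    return coarse_nets, next_id, cluster_of


def fm_pass(nets, side, size):
    """One simplified FM-style pass: move each vertex at most once and
    return the best partition seen along the way."""
    side = dict(side)
    # Assumed balance rule: half the total weight plus the heaviest vertex.
    max_side = sum(size.values()) // 2 + max(size.values())
    load = [0, 0]
    for v, s in side.items():
        load[s] += size[v]
    best, best_cut = dict(side), cut_size(nets, side)
    locked = set()
    while len(locked) < len(side):
        candidates = []
        for v in side:
            dst = 1 - side[v]
            if v in locked or load[dst] + size[v] > max_side:
                continue
            side[v] = dst  # tentatively move, measure, undo
            candidates.append((cut_size(nets, side), v))
            side[v] = 1 - dst
        if not candidates:
            break
        cut, v = min(candidates)
        load[side[v]] -= size[v]
        side[v] = 1 - side[v]
        load[side[v]] += size[v]
        locked.add(v)
        if cut < best_cut:
            best, best_cut = dict(side), cut
    return best


def multilevel_bipartition(nets, n, min_vertices=4):
    """Coarsen, partition the smallest hypergraph, then project and refine."""
    levels, size = [], {v: 1 for v in range(n)}
    cur_nets, cur_n = nets, n
    while cur_n > min_vertices:
        coarse_nets, coarse_n, cluster_of = coarsen(cur_nets, cur_n)
        if coarse_n == cur_n:  # nothing left to merge
            break
        levels.append((cur_nets, size, cluster_of))
        coarse_size = defaultdict(int)
        for v, c in cluster_of.items():
            coarse_size[c] += size[v]
        cur_nets, cur_n, size = coarse_nets, coarse_n, dict(coarse_size)
    side = {v: v % 2 for v in range(cur_n)}  # fast initial solution
    side = fm_pass(cur_nets, side, size)
    while levels:  # refinement: project to the next finer level
        fine_nets, fine_size, cluster_of = levels.pop()
        side = {v: side[c] for v, c in cluster_of.items()}
        side = fm_pass(fine_nets, side, fine_size)
    return side
```

As a usage example, calling `multilevel_bipartition(nets, 8)` on two densely connected groups of four vertices joined by a single net, such as `nets = [[0,1],[1,2],[2,3],[0,3],[0,2],[4,5],[5,6],[6,7],[4,7],[5,7],[3,4]]`, separates the two groups and cuts only the joining net. Production partitioners differ mainly in how they choose clusters and in how efficiently they update move gains.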

2.3.2. Analytical Placement Approach

2.3.3. Simulated Annealing Placement Approach

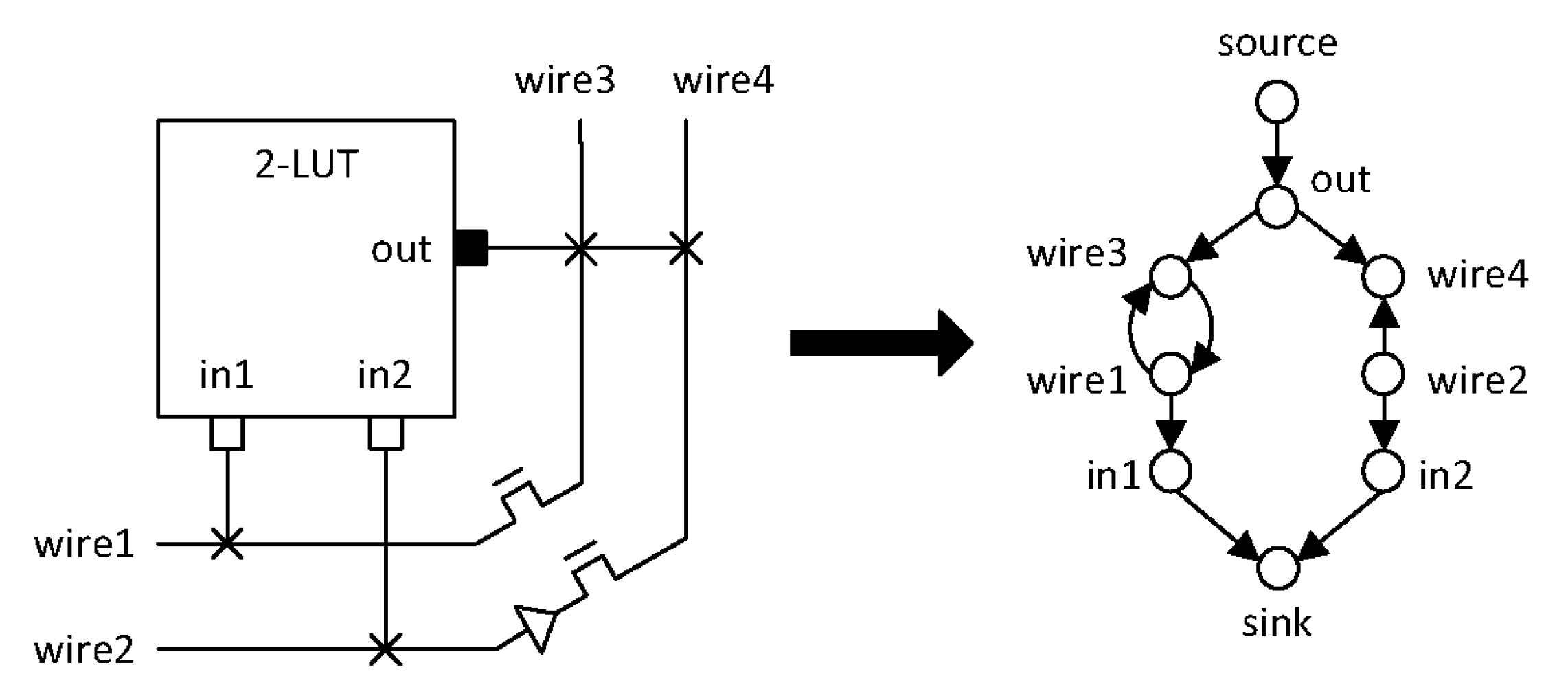

2.4. Routing

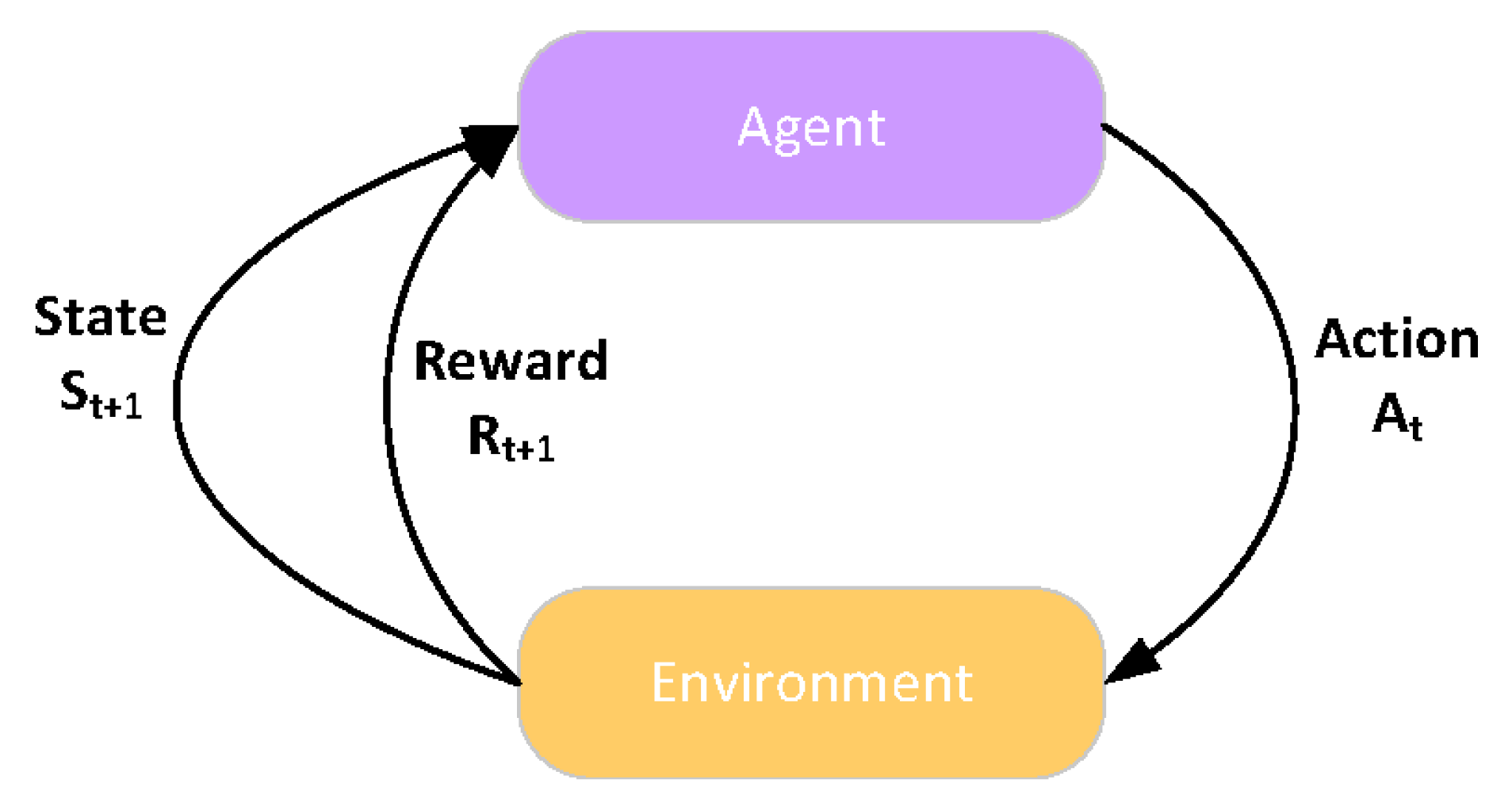

3. CAD Flow for FPGAs: Machine Learning Perspective

3.1. High-Level Synthesis Using Machine Learning

3.2. Logic Synthesis

3.3. Placement

3.4. Routing

4. Research Challenges and Opportunities

- Lack of open-source benchmarks;

- Absence of machine learning-based complete backend flow;

- Multi-FPGA prototyping using machine learning;

- Domain-specific flow;

- Federated learning for FPGAs;

- Lack of explainability in ML-based flow.

4.1. Lack of Open-Source Benchmarks

1. Publicly available benchmarks such as Titan [80] lack machine learning acceleration examples;

2. Mapping the multiplication/addition operations of ML workloads requires significant implementation effort, and such mapping algorithms are not trivial to create, e.g., for AI tensor blocks [81], which perform a large number of multiplications and additions.

1. Not all embedded blocks are represented;

2. Not all aspects of deep neural network-based architecture are covered;

3. The benchmark is highly dependent on assumptions about critical metrics such as timing delays and baseline architectures.

4.2. Machine Learning-Based Complete Backend Flow

4.3. Multi-FPGA Prototyping Using Machine Learning

4.4. Domain-Specific Flow

4.5. Federated Learning for FPGA

4.6. Lack of Explainability in ML-Based Flow

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Taj, I.; Zaman, N. Towards Industrial Revolution 5.0 and Explainable Artificial Intelligence: Challenges and Opportunities. Int. J. Comput. Digit. Syst. 2022, 12, 295–320.

2. Available online: https://www.electronicsweekly.com/blogs/mannerisms/markets/auto-iot-will-drive-ic-market-says-ic-insights-2017-12/ (accessed on 2 February 2023).

3. Stratix. 2022. Available online: https://www.intel.co.uk/content/www/uk/en/products/details/fpga/stratix/10.html (accessed on 7 December 2022).

4. Chen, S.C.; Chang, Y.W. FPGA placement and routing. In Proceedings of the 2017 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Irvine, CA, USA, 13–16 November 2017; pp. 914–921.

5. Zhuo, C.; Yu, B.; Gao, D. Accelerating chip design with machine learning: From pre-silicon to post-silicon. In Proceedings of the 2017 30th IEEE International System-on-Chip Conference (SOCC), Munich, Germany, 5–8 September 2017; pp. 227–232.

6. Wang, Z.; Schafer, B.C. Machine learning to set meta-heuristic specific parameters for high-level synthesis design space exploration. In Proceedings of the 2020 57th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 20–24 July 2020; pp. 1–6.

7. Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22.

8. Fix, E.; Hodges, J.L. Discriminatory analysis. Nonparametric discrimination: Consistency properties. Int. Stat. Rev./Rev. Int. Stat. 1989, 57, 238–247.

9. Ward, S.; Ding, D.; Pan, D.Z. PADE: A high-performance placer with automatic datapath extraction and evaluation through high-dimensional data learning. In Proceedings of the DAC Design Automation Conference 2012, San Francisco, CA, USA, 3–7 June 2012; pp. 756–761.

10. Chan, W.T.J.; Du, Y.; Kahng, A.B.; Nath, S.; Samadi, K. BEOL stack-aware routability prediction from placement using data mining techniques. In Proceedings of the 2016 IEEE 34th International Conference on Computer Design (ICCD), Scottsdale, AZ, USA, 2–5 October 2016; pp. 41–48.

11. Tang, Y.; Zhang, L.; Bao, G.; Ren, F.; Pedrycz, W. Symmetric implicational algorithm derived from intuitionistic fuzzy entropy. Iran. J. Fuzzy Syst. 2022, 19, 27–44.

12. Chhabria, V.A.; Kahng, A.B.; Kim, M.; Mallappa, U.; Sapatnekar, S.S.; Xu, B. Template-based PDN synthesis in floorplan and placement using classifier and CNN techniques. In Proceedings of the 2020 25th Asia and South Pacific Design Automation Conference (ASP-DAC), Beijing, China, 13–16 January 2020; pp. 44–49.

13. Al-Hyari, A.; Areibi, S. Design space exploration of convolutional neural networks based on evolutionary algorithms. J. Comput. Vis. Imaging Syst. 2017, 3.

14. Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd ACM Sigkdd International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

15. Al-Hyari, A.; Abuowaimer, Z.; Martin, T.; Gréwal, G.; Areibi, S.; Vannelli, A. Novel Congestion-estimation and Routability-prediction Methods based on Machine Learning for Modern FPGAs. ACM Trans. Reconfigurable Technol. Syst. (TRETS) 2019, 12, 1–25.

16. Zhou, Q.; Wang, X.; Qi, Z.; Chen, Z.; Zhou, Q.; Cai, Y. An accurate detailed routing routability prediction model in placement. In Proceedings of the 2015 6th Asia Symposium on Quality Electronic Design (ASQED), Kuala Lumpur, Malaysia, 4–5 August 2015; pp. 119–122.

17. Roorda, E.; Rasoulinezhad, S.; Leong, P.H.; Wilton, S.J. FPGA architecture exploration for DNN acceleration. ACM Trans. Reconfigurable Technol. Syst. (TRETS) 2022, 15, 1–37.

18. Platforms, X.V. 2022. Available online: https://www.xilinx.com/products/design-tools/vitis/vitis-platform.html (accessed on 9 December 2022).

19. EC2, A. 2022. Available online: https://aws.amazon.com/ec2/instance-types/f1/ (accessed on 9 December 2022).

20. Wang, Z.; Schafer, B.C. Learning from the Past: Efficient High-level Synthesis Design Space Exploration for FPGAs. ACM Trans. Des. Autom. Electron. Syst. (TODAES) 2022, 27, 1–23.

21. Schafer, B.C.; Wang, Z. High-level synthesis design space exploration: Past, present, and future. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2019, 39, 2628–2639.

22. Brayton, R.K. The decomposition and factorization of Boolean expressions. In Proceedings of the International Symposium on Circuits and Systems (ISCAS 82), Rome, Italy, 10–12 May 1982.

23. Reyes Fernandez de Bulnes, D.; Maldonado, Y.; Trujillo, L. Development of multiobjective high-level synthesis for fpgas. Sci. Program. 2020, 2020.

24. Cong, J.; Ding, Y. FlowMap: An optimal technology mapping algorithm for delay optimization in lookup-table based FPGA designs. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 1994, 13, 1–12.

25. Cong, J.; Ding, Y. On area/depth trade-off in LUT-based FPGA technology mapping. In Proceedings of the 30th International Design Automation Conference, Dallas, TX, USA, 14–18 June 1993; pp. 213–218.

26. Cong, J.; Hwang, Y.Y. Simultaneous depth and area minimization in LUT-based FPGA mapping. In Proceedings of the Third International ACM Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 12–14 February 1995; pp. 68–74.

27. Cong, J.; Hwang, Y.Y. Structural gate decomposition for depth-optimal technology mapping in LUT-based FPGA designs. ACM Trans. Des. Autom. Electron. Syst. (TODAES) 2000, 5, 193–225.

28. Shah, D.; Hung, E.; Wolf, C.; Bazanski, S.; Gisselquist, D.; Milanovic, M. Yosys+ nextpnr: An open source framework from verilog to bitstream for commercial fpgas. In Proceedings of the 2019 IEEE 27th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), San Diego, CA, USA, 28 April–1 May 2019; pp. 1–4.

29. Fiduccia, C.M.; Mattheyses, R.M. A linear-time heuristic for improving network partitions. In Papers on Twenty-Five Years of Electronic Design Automation; ACM: Las Vegas, NV, USA, 1988; pp. 241–247.

30. Bui, T.N.; Chaudhuri, S.; Leighton, F.T.; Sipser, M. Graph bisection algorithms with good average case behavior. Combinatorica 1987, 7, 171–191.

31. Ababei, C.; Feng, Y.; Goplen, B.; Mogal, H.; Zhang, T.; Bazargan, K.; Sapatnekar, S. Placement and routing in 3D integrated circuits. IEEE Des. Test Comput. 2005, 22, 520–531.

32. Das, S.; Fan, A.; Chen, K.N.; Tan, C.S.; Checka, N.; Reif, R. Technology, performance, and computer-aided design of three-dimensional integrated circuits. In Proceedings of the 2004 International Symposium on Physical Design, Phoenix, AZ, USA, 18–21 April 2004; pp. 108–115.

33. Bartzas, K.S.A.; Soudris, D. Architecture level exploration of alternative schemes targeting 3d fpgas: A software supported methodology. Int. J. Reconfigurable Comput. 2008, 2008.

34. Chtourou, S.; Abid, M.; Marrakchi, Z.; Amouri, E.; Mehrez, H. On exploiting partitioning-based placement approach for performances improvement of 3d fpga. In Proceedings of the 2017 International Conference on High Performance Computing & Simulation (HPCS), Genoa, Italy, 17–21 July 2017; pp. 572–579.

35. Kleinhans, J.M.; Sigl, G.; Johannes, F.M.; Antreich, K.J. GORDIAN: VLSI placement by quadratic programming and slicing optimization. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 1991, 10, 356–365.

36. Ludwin, A.; Betz, V.; Padalia, K. High-quality, deterministic parallel placement for FPGAs on commodity hardware. In Proceedings of the 16th International ACM/SIGDA Symposium on Field Programmable Gate Arrays, Monterey, CA, USA, 24–26 February 2008; pp. 14–23.

37. Vorwerk, K.; Kennings, A.; Greene, J.W. Improving simulated annealing-based FPGA placement with directed moves. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2009, 28, 179–192.

38. Marquardt, A.; Betz, V.; Rose, J. Using cluster-based logic blocks and timing-driven packing to improve FPGA speed and density. In Proceedings of the 1999 ACM/SIGDA Seventh International Symposium on Field Programmable Gate Arrays, Monterey, CA, USA, 21–23 February 1999; pp. 37–46.

39. Betz, V.; Rose, J. VPR: A new packing, placement and routing tool for FPGA research. In Proceedings of the International Workshop on Field Programmable Logic and Applications, London, UK, 20 August 1997; pp. 213–222.

40. Marquardt, A.; Betz, V.; Rose, J. Timing-driven placement for FPGAs. In Proceedings of the 2000 ACM/SIGDA Eighth International Symposium on Field Programmable Gate Arrays, Monterey, CA, USA, 10–11 February 2000; pp. 203–213.

41. McMurchie, L.; Ebeling, C. PathFinder: A negotiation-based performance-driven router for FPGAs. In Proceedings of the 1995 ACM Third International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 12–14 February 1995; pp. 111–117.

42. Swartz, J.S.; Betz, V.; Rose, J. A fast routability-driven router for FPGAs. In Proceedings of the 1998 ACM/SIGDA Sixth International Symposium on Field Programmable Gate Arrays, Monterey, CA, USA, 22–25 February 1998; pp. 140–149.

43. Lou, J.; Krishnamoorthy, S.; Sheng, H.S. Estimating routing congestion using probabilistic analysis. In Proceedings of the 2001 International Symposium on Physical Design, Sonoma, CA, USA, 1–4 April 2001; pp. 112–117.

44. Chavez, J.; Torralba, A.; Franquelo, L.G. A fuzzy-logic based tool for topology selection in analog synthesis. In Proceedings of the IEEE International Symposium on Circuits and Systems-ISCAS’94, London, UK, 30 May–2 June 1994; Volume 1, pp. 367–370.

45. Kim, R.G.; Doppa, J.R.; Pande, P.P. Machine learning for design space exploration and optimization of manycore systems. In Proceedings of the 2018 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Diego, CA, USA, 5–8 November 2018; pp. 1–6.

46. Ding, D.; Gao, J.R.; Yuan, K.; Pan, D.Z. AENEID: A generic lithography-friendly detailed router based on post-RET data learning and hotspot detection. In Proceedings of the 48th Design Automation Conference, San Diego, CA, USA, 5–10 June 2011; pp. 795–800.

47. Yang, H.; Luo, L.; Su, J.; Lin, C.; Yu, B. Imbalance aware lithography hotspot detection: A deep learning approach. J. Micro/Nanolithography MEMS MOEMS 2017, 16, 033504.

48. Matsuba, T.; Takai, N.; Fukuda, M.; Kubo, Y. Inference of suitable for required specification analog circuit topology using deep learning. In Proceedings of the 2018 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Ishigaki, Okinawa, Japan, 27–30 November 2018; pp. 131–134.

49. Wang, H.; Yang, J.; Lee, H.S.; Han, S. Learning to design circuits. arXiv 2018, arXiv:1812.02734.

50. Ma, Y.; Ren, H.; Khailany, B.; Sikka, H.; Luo, L.; Natarajan, K.; Yu, B. High performance graph convolutional networks with applications in testability analysis. In Proceedings of the 56th Annual Design Automation Conference 2019, Las Vegas, NV, USA, 2–6 June 2019; pp. 1–6.

51. Available online: https://semiengineering.com/entities/flex-logix-technologies-inc/ (accessed on 9 December 2022).

52. Makrani, H.M.; Farahmand, F.; Sayadi, H.; Bondi, S.; Dinakarrao, S.M.P.; Homayoun, H.; Rafatirad, S. Pyramid: Machine learning framework to estimate the optimal timing and resource usage of a high-level synthesis design. In Proceedings of the 2019 29th International Conference on Field Programmable Logic and Applications (FPL), Barcelona, Spain, 8–12 September 2019; pp. 397–403.

53. Farahmand, F.; Ferozpuri, A.; Diehl, W.; Gaj, K. Minerva: Automated hardware optimization tool. In Proceedings of the 2017 International Conference on ReConFigurable Computing and FPGAs (ReConFig), Cancun, Mexico, 4–6 December 2017; pp. 1–8.

54. Goswami, P.; Schaefer, B.C.; Bhatia, D. Machine learning based fast and accurate High Level Synthesis design space exploration: From graph to synthesis. Integration 2023, 88, 116–124.

55. Mahapatra, A.; Schafer, B.C. Machine-learning based simulated annealer method for high level synthesis design space exploration. In Proceedings of the 2014 Electronic System Level Synthesis Conference (ESLsyn), San Francisco, CA, USA, 10–11 June 2015; pp. 1–6.

56. Available online: https://support.huawei.com/enterprise/en/doc/EDOC1100116632/4988e57/ops-apis-supported-by-a-device (accessed on 9 December 2022).

57. Zhang, Y.; Bai, X.; Fan, R.; Wang, Z. Deviation-sparse fuzzy c-means with neighbor information constraint. IEEE Trans. Fuzzy Syst. 2018, 27, 185–199.

58. Dai, S.; Zhou, Y.; Zhang, H.; Ustun, E.; Young, E.F.; Zhang, Z. Fast and accurate estimation of quality of results in high-level synthesis with machine learning. In Proceedings of the 2018 IEEE 26th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Boulder, CO, USA, 29 April–1 May 2018; pp. 129–132.

59. Liu, D.; Schafer, B.C. Efficient and reliable high-level synthesis design space explorer for FPGAs. In Proceedings of the 2016 26th International Conference on Field Programmable Logic and Applications (FPL), Lausanne, Switzerland, 29 August–2 September 2016; pp. 1–8.

60. Koeplinger, D.; Delimitrou, C.; Prabhakar, R.; Kozyrakis, C.; Zhang, Y.; Olukotun, K. Automatic generation of efficient accelerators for reconfigurable hardware. ACM SIGARCH Comput. Archit. News 2016, 44, 115–127.

61. Yanghua, Q.; Kapre, N.; Ng, H.; Teo, K. Improving classification accuracy of a machine learning approach for fpga timing closure. In Proceedings of the 2016 IEEE 24th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Washington, DC, USA, 1–3 May 2016; pp. 80–83.

62. Yanghua, Q.; Adaikkala Raj, C.; Ng, H.; Teo, K.; Kapre, N. Case for design-specific machine learning in timing closure of FPGA designs. In Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 21–23 February 2016; pp. 169–172.

63. Xu, C.; Liu, G.; Zhao, R.; Yang, S.; Luo, G.; Zhang, Z. A parallel bandit-based approach for autotuning FPGA compilation. In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017; pp. 157–166.

64. Ustun, E.; Deng, C.; Pal, D.; Li, Z.; Zhang, Z. Accurate operation delay prediction for FPGA HLS using graph neural networks. In Proceedings of the 39th International Conference on Computer-Aided Design, Virtual Event, USA, 2–5 November 2020; pp. 1–9.

65. Elgammal, M.A.; Murray, K.E.; Betz, V. Learn to place: FPGA placement using reinforcement learning and directed moves. In Proceedings of the 2020 International Conference on Field-Programmable Technology (ICFPT), Maui, HI, USA, 7–8 December 2020; pp. 85–93.

66. Pui, C.W.; Chen, G.; Chow, W.K.; Lam, K.C.; Kuang, J.; Tu, P.; Zhang, H.; Young, E.F.; Yu, B. RippleFPGA: A routability-driven placement for large-scale heterogeneous FPGAs. In Proceedings of the 2016 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Austin, TX, USA, 7–10 November 2016; pp. 1–8.

67. Pui, C.W.; Chen, G.; Ma, Y.; Young, E.F.; Yu, B. Clock-aware ultrascale FPGA placement with machine learning routability prediction. In Proceedings of the 2017 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Irvine, CA, USA, 13–16 November 2017; pp. 929–936.

68. Yang, S.; Gayasen, A.; Mulpuri, C.; Reddy, S.; Aggarwal, R. Routability-driven FPGA placement contest. In Proceedings of the 2016 on International Symposium on Physical Design, Santa Rosa, CA, USA, 3–6 April 2016; pp. 139–143.

69. Maarouf, D.; Alhyari, A.; Abuowaimer, Z.; Martin, T.; Gunter, A.; Grewal, G.; Areibi, S.; Vannelli, A. Machine-learning based congestion estimation for modern FPGAs. In Proceedings of the 2018 28th International Conference on Field Programmable Logic and Applications (FPL), Dublin, Ireland, 27–31 August 2018; pp. 427–4277.

70. Al-Hyari, A.; Szentimrey, H.; Shamli, A.; Martin, T.; Grewal, G.; Areibi, S. A deep learning framework to predict routability for fpga circuit placement. ACM Trans. Reconfigurable Technol. Syst. (TRETS) 2021, 14, 1–28.

71. Martin, T.; Areibi, S.; Gréwal, G. Effective Machine-Learning Models for Predicting Routability During FPGA Placement. In Proceedings of the 2021 ACM/IEEE 3rd Workshop on Machine Learning for CAD (MLCAD), Raleigh, NC, USA, 30 August–3 September 2021; pp. 1–6.

72. Qi, Z.; Cai, Y.; Zhou, Q. Accurate prediction of detailed routing congestion using supervised data learning. In Proceedings of the 2014 IEEE 32nd International Conference on Computer Design (ICCD), Seoul, Republic of Korea, 19–22 October 2014; pp. 97–103.

73. Jain, S.R.; Okabe, K. Training a fully convolutional neural network to route integrated Circuits. arXiv 2017, arXiv:1706.08948.

74. He, Y.; Li, H.; Jin, T.; Bao, F.S. Circuit Routing Using Monte Carlo Tree Search and Deep Reinforcement Learning. In Proceedings of the 2022 International Symposium on VLSI Design, Automation and Test (VLSI-DAT), Hsinchu, Taiwan, 18–21 April 2022; pp. 1–5.

75. Liao, H.; Zhang, W.; Dong, X.; Poczos, B.; Shimada, K.; Burak Kara, L. A deep reinforcement learning approach for global routing. J. Mech. Des. 2020, 142.

76. Farooq, U.; Hasan, N.U.; Baig, I.; Zghaibeh, M. Efficient FPGA Routing using Reinforcement Learning. In Proceedings of the 2021 12th International Conference on Information and Communication Systems (ICICS), Valencia, Spain, 24–26 May 2021; pp. 106–111.

77. Baig, I.; Farooq, U. Efficient Detailed Routing for FPGA Back-End Flow Using Reinforcement Learning. Electronics 2022, 11, 2240.

78. Utyamishev, D.; Partin-Vaisband, I. Late Breaking Results: A Neural Network that Routes ICs. In Proceedings of the 2020 57th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 20–24 July 2020; pp. 1–2.

79. Goswami, P.; Shahshahani, M.; Bhatia, D. MLSBench: A Benchmark Set for Machine Learning based FPGA HLS Design Flows. In Proceedings of the 2022 IEEE 13th Latin America Symposium on Circuits and System (LASCAS), Puerto Varas, Chile, 1–4 March 2022; pp. 1–4.

80. Murray, K.E.; Whitty, S.; Liu, S.; Luu, J.; Betz, V. Timing-driven titan: Enabling large benchmarks and exploring the gap between academic and commercial CAD. ACM Trans. Reconfigurable Technol. Syst. (TRETS) 2015, 8, 1–18.

81. Boutros, A.; Nurvitadhi, E.; Ma, R.; Gribok, S.; Zhao, Z.; Hoe, J.C.; Betz, V.; Langhammer, M. Beyond peak performance: Comparing the real performance of AI-optimized FPGAs and GPUs. In Proceedings of the 2020 International Conference on Field-Programmable Technology (ICFPT), Maui, HI, USA, 9–11 December 2020; pp. 10–19.

82. Arora, A.; Mehta, S.; Betz, V.; John, L.K. Tensor slices to the rescue: Supercharging ML acceleration on FPGAs. In Proceedings of the 2021 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Virtual Event, USA, 28 February–2 March 2021; pp. 23–33.

83. Khadilkar, S.; Margala, M. Optimizing open-source FPGA CAD tools. In Proceedings of the 2022 IEEE High Performance Extreme Computing Conference (HPEC), Virtually, 19–23 September 2022; pp. 1–4.

84. Murray, K.E.; Petelin, O.; Zhong, S.; Wang, J.M.; Eldafrawy, M.; Legault, J.P.; Sha, E.; Graham, A.G.; Wu, J.; Walker, M.J.; et al. Vtr 8: High-performance cad and customizable fpga architecture modelling. ACM Trans. Reconfigurable Technol. Syst. (TRETS) 2020, 13, 1–55.

85. Farooq, U.; Alzahrani, B.A. Exploring and optimizing partitioning of large designs for multi-FPGA based prototyping platforms. Computing 2020, 102, 2361–2383.

86. Farooq, U.; Chotin-Avot, R.; Azeem, M.; Ravoson, M.; Mehrez, H. Novel architectural space exploration environment for multi-FPGA based prototyping systems. Microprocess. Microsystems 2018, 56, 169–183.

87. Accelerator, N. 2022. Available online: https://atos.net/en/solutions/high-performance-computing-hpc/hpc-as-a-service (accessed on 9 December 2022).

88. Zhang, Z.; Njilla, L.; Kamhoua, C.A.; Yu, Q. Thwarting security threats from malicious FPGA tools with novel FPGA-oriented moving target defense. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2018, 27, 665–678.

89. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the Artificial Intelligence and Statistics, PMLR, Ft. Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

90. Yang, Z.; Hu, S.; Chen, K. FPGA-based hardware accelerator of homomorphic encryption for efficient federated learning. arXiv 2020, arXiv:2007.10560.

91. Microsoft. 2022. Available online: https://www.microsoft.com/en-us/research/blog/microsoft-unveils-projectbrainwave/ (accessed on 11 December 2022).

92. Xu, F.; Uszkoreit, H.; Du, Y.; Fan, W.; Zhao, D.; Zhu, J. Explainable AI: A brief survey on history, research areas, approaches and challenges. In Proceedings of the CCF International Conference on Natural Language Processing and Chinese Computing, Dunhuang, China, 9–14 October 2019; pp. 563–574.

93. Doran, D.; Schulz, S.; Besold, T.R. What does explainable AI really mean? A new conceptualization of perspectives. arXiv 2017, arXiv:1710.00794.

94. Minh, D.; Wang, H.X.; Li, Y.F.; Nguyen, T.N. Explainable artificial intelligence: A comprehensive review. Artif. Intell. Rev. 2022, 55, 3503–3568.

95. Rabozzi, M. CAOS: CAD as an Adaptive Open-platform Service for high performance reconfigurable systems. Spec. Top. Inf. Technol. 2019.

96. Intel. 2022. Available online: https://newsroom.intel.com/news/intel-fpgas-power-acceleration-as-a-service-alibaba-cloud/#gs.m38tsy (accessed on 31 December 2022).

97. Alibaba. 2022. Available online: https://www.alibabacloud.com/help/en/fpga-based-ecs-instance (accessed on 31 December 2022).

98. Available online: https://sdgs.un.org/goals (accessed on 31 December 2022).

| S. No | CAD Step | Objective | ML Algorithm | Limitations | Opportunities |
|---|---|---|---|---|---|
| 1 | HLS | | | | |
| 2 | Logic Synthesis | | | | |
| 3 | Placement | | | | |
| 4 | Routing | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Taj, I.; Farooq, U. Towards Machine Learning-Based FPGA Backend Flow: Challenges and Opportunities. Electronics 2023, 12, 935. https://doi.org/10.3390/electronics12040935
