1. Introduction

Chronic rhinosinusitis is a pressing problem in modern otolaryngology. Chronic odontogenic rhinosinusitis occupies a special place within this group of diseases [1]. According to various statistics, it accounts for up to 40% of all chronic rhinosinusitis cases [2]. Despite this significant prevalence, many issues regarding its diagnosis and treatment remain unresolved.

Establishing the diagnosis of odontogenic rhinosinusitis is sometimes difficult for doctors of various specialties. At the same time, a correct diagnosis is key to selecting the correct treatment tactics.

According to the latest European Position Paper on Rhinosinusitis and Nasal Polyps (EPOS) [3], computed tomography (CT) is “the gold standard” for diagnosing chronic rhinosinusitis in general and odontogenic rhinosinusitis in particular. CT makes it possible to study in detail the structure of the paranasal sinuses, including the maxillary sinus, to determine the relative position of the teeth and the presence or absence of defects in the Schneiderian membrane.

The current study was based on Multi-Spiral Computed Tomography (MSCT). This type of examination is preferred because of several advantages over Cone Beam Computed Tomography (CBCT). The advantages are as follows:

- High-quality images.
- A shorter scan time, which is essential for long-term procedures.
- A densitometric scale for measuring the density of organs and tissues. This scale is significant for the fast and accurate determination of the severity of destructive processes in the paranasal sinus structures.

However, despite the high information content of the MSCT study, questions remain regarding the processing of the resulting medical images.

During the global COVID-19 pandemic, scientists around the world have directed significant efforts toward data-driven medicine. Such studies have included medical data analysis [4], epidemic process modeling [5], virus research [6], computer vision for medical imaging [7], medical data storage security [8], automated diagnostics [9], etc. One of the promising areas of research is the development of decision support systems for use in healthcare practice. Such systems improve the adequacy and accuracy of the result by combining different approaches, and can also be used with limited resources in institutions that provide medical care.

The analysis of dental and sinus imaging investigations by automated decision support systems can help diagnose Chronic Odontogenic Rhinosinusitis by spotting patterns that are suggestive of the disorder. For instance, an information system could be taught to spot nasal changes consistent with inflammation and to spot the presence of dental infections such as abscesses. Additionally, by automatically extracting patient data from electronic health records and delivering it to the physician in a structured format, the decision support system can be utilized to enhance the effectiveness of the clinical process. In order to identify individuals who are at high risk of Chronic Odontogenic Rhinosinusitis and other diseases, a decision support system could be used to assess patient data such as symptoms, medical history, and test findings.

Therefore, the current study aims to develop an intelligent decision support system for the differential diagnosis of chronic odontogenic rhinosinusitis based on computer vision methods.

To achieve the goal, the following tasks were formulated:

1. Analyze data-driven medicine approaches for the study of chronic rhinosinusitis.
2. Prepare and process MSCT data of patients with chronic rhinosinusitis.
3. Develop a questionnaire to interview patients with suspected chronic rhinosinusitis.
4. Develop a computer vision model for MSCT segmentation of patients with chronic rhinosinusitis based on the U-Net architecture.
5. Conduct an experimental study of the developed model on test samples.
6. Evaluate the accuracy of the developed data segmentation model.
7. Develop the architecture of an intelligent decision support system for the differential diagnosis of chronic rhinosinusitis.
8. Analyze the effectiveness of the implementation of an intelligent decision support system for the differential diagnosis of chronic rhinosinusitis.

The contribution of the research is two-fold. Firstly, a U-Net network architecture [10] with 23 convolutional layers has been used for the segmentation of MSCT data with odontogenic maxillary sinusitis. The proposed model is implemented in such a way that each pair of repeated 3 × 3 convolution layers is followed by an Exponential Linear Unit (ELU) instead of a Rectified Linear Unit (ReLU) as an activation function. Secondly, for the first time, an intelligent decision support system for the differential diagnosis of chronic odontogenic rhinosinusitis has been proposed that combines data from a patient’s interview, doctor’s examination, and MSCT data.

The remainder of the paper is structured as follows:

Section 2, Current Research Analysis, provides an overview of data-driven approaches used to study chronic rhinosinusitis.

Section 3, Materials and Methods, provides a description of the dataset, models, model training and evaluation, and the concept of the decision-making system.

Section 4, Results, describes the performance of the proposed deep learning model and the architecture of the decision support system.

Section 5, Discussion, discusses the prospective use of the model and the intelligent decision support system. The conclusion describes the outcomes of the research.

2. Current Research Analysis

Data-driven medicine methods are used to study chronic rhinosinusitis in various aspects.

The authors of [11] propose a conceptual model of chronic rhinosinusitis, which is presented as a teaching aid. The proposed approach facilitates the identification of a spreading factor and subsequent disease-modifying microbial, inflammatory, or mucociliary effects and allows individual tailoring of treatment to eliminate these factors.

Ref. [12] is devoted to developing multivariate models based on artificial neural networks to predict the early outcomes of chronic rhinosinusitis in individual patients. Several models have been developed and tested on the data of 115 patients operated on for chronic rhinosinusitis. The proposed models made it possible to predict the early outcome of the operation in 90% of patients. However, the quality of the models depended on the mathematical representation of the result of the operation.

The authors of [13] evaluated the correlation between the symptoms of chronic rhinosinusitis and the results of CT. For this, 60 patients diagnosed with chronic rhinosinusitis were analyzed. For each patient, the symptoms and severity of the disease were assessed using a visual analog scale. The authors conducted a correlation study between symptom analysis results and CT scores and determined that computed tomography scores may help clinicians predict the severity of symptoms of nasal congestion and discharge in chronic rhinosinusitis.

The authors of [14] use a standardized tool for assessing preoperative symptoms of chronic rhinosinusitis to understand the heterogeneity of symptoms. For the study, 97 surgical patients with chronic rhinosinusitis were examined. The authors generated symptom-based clusters from preoperative assessments using unsupervised analysis and network plots and conclude that a distinct burden of symptoms can be identified that may be associated with symptom-based treatment outcomes in chronic rhinosinusitis.

The authors of [15] developed a mathematical model based on Bernoulli’s equations that allows clinicians to obtain, using specific direct digital manometry, pressure measurements over time to determine which part of the nose is lined. The proposed model can help study each part of the nose and objectively assess the geometry and resistance of the nasal cavities, especially in preoperative planning and follow-up.

The authors of [16] consider the principles of unsupervised learning for the study of chronic rhinosinusitis. The review summarizes different unsupervised learning methods and their practical applications. Even though the authors give possible directions for applying deep learning methods to the study of chronic rhinosinusitis, the paper does not present specific implementations of the models or the results of studies of chronic rhinosinusitis using the considered models.

The review article [17] surveys the latest research on chronic rhinosinusitis, focusing on potential new biomarkers and treatment options, which are integrated into a precision medicine model for chronic rhinosinusitis. However, the authors conclude that more long-term studies are needed to investigate the long-term effects of model implementation.

The authors of [18] assessed the differences in general approaches to assessing CT for chronic rhinosinusitis using the Lund–Mackay system and its modified version. For this, a sinus CT study was performed on 526 subjects selected from a more extensive study of chronic rhinosinusitis. Exploratory factor analysis was then used to assess the similarity between the two systems. The authors conclude that different patterns of opacification may be of clinical relevance, improving the measurement of objective evidence in studies of chronic rhinosinusitis and sinus disease.

Ref. [19] is devoted to classifying acute invasive fungal rhinosinusitis caused by Mucor versus Aspergillus species by evaluating computed tomography radiological findings. Binomial logistic regression was used to analyze the correlation between variables and fungal type. Considering the low predictive value of any single anatomical site assessed, a model based on a “two-tailed mean score,” including the nasal cavity, maxillary sinuses, ethmoid air cells, sphenoid sinus, and frontal sinuses, produced the highest predictive accuracy.

Ref. [20] is devoted to assessing the relevance of chronic rhinosinusitis CT features to the efficacy of mechanical thrombectomy in patients with acute ischemic stroke. The study included 311 patients qualified for mechanical thrombectomy in whom the chronic rhinosinusitis features were assessed based on a CT scan, according to the Lund–Mackay score. The authors conclude that CT features of chronic rhinosinusitis can be used as a prognostic tool for early assessment of prognosis in patients with stroke.

Although there are several studies on the application of information technology to the study of chronic rhinosinusitis, to date, there is no comprehensive solution for differential diagnosis that combines the assessment of patient symptoms and the analysis of MSCT images.

3. Materials and Methods

3.1. Dataset

The dataset used in this study contains 162 MSCT images: 90 healthy and 72 with odontogenic maxillary sinusitis (OMS), which represent the most complicated cases of pathology. MSCT images of patients with chronic odontogenic maxillary sinusitis were included in the study. It is well known that rhinosinusitis characterized by complex and ambiguous MSCT images is the most difficult to diagnose. In such cases, radiologists often need additional time, and sometimes a consultation with colleagues, to make an accurate diagnosis. Therefore, the current study included only medical images that are difficult to diagnose (for example, patients after surgery, after implantation, etc.).

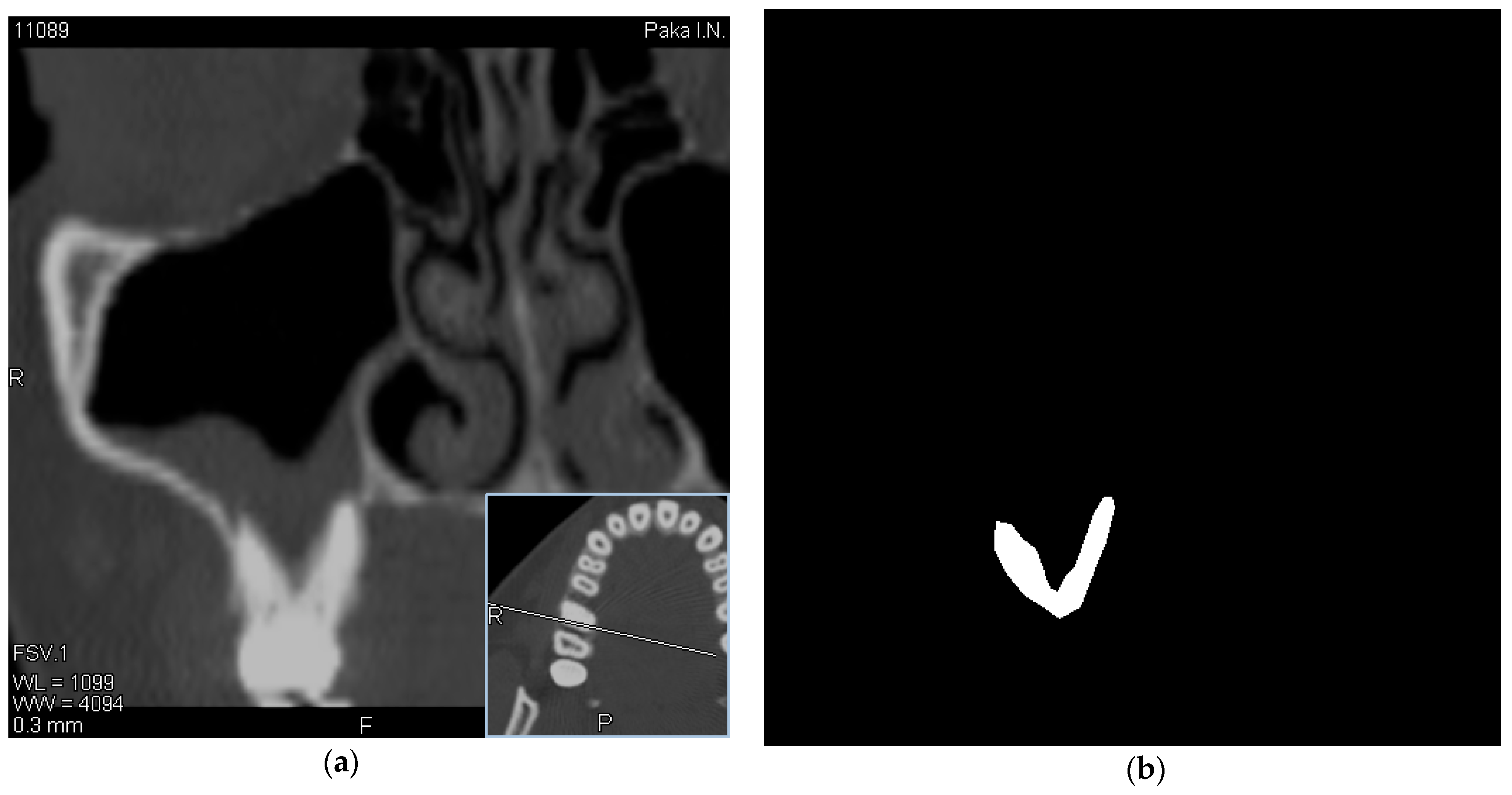

In this regard, along with the evaluation of MSCT images, we also evaluated the history and examination data of these patients obtained using a chatbot, which can significantly increase the information content of diagnostic procedures and become the basis for making the correct diagnosis. The data were visualized using the RadiAnt [21] software. Experts selected tomographic images in which the roots of the teeth are visibly located in the maxillary sinus. Images were obtained using a Toshiba Aquilion 4 computed tomography scanner (Japan) with a scan thickness of 2 mm [22]. An example of OMS data as well as a mask created by an expert is shown in Figure 1.

Data were collected in accordance with the ethical principles of the Helsinki declaration [23] and provided by Kharkiv Medical University [24]. Each scan includes patient identity data, in particular, the patient’s name, age, sex, birth date, occupation, referring physician, study date, and DICOM Unique Identifiers. Following patient privacy regulations, we converted the DICOM files into PNG images, removing the header information with all the patient’s data.

The MSCT scan was performed using a Toshiba Aquilion CT scanner, a multislice scanner that can simultaneously collect data on four slices with a thickness of 0.5 mm. It is characterized by high performance, with a full rotation time of up to 0.4 s.

The high-speed swivel mechanism and fast system reconstruction unit provide faster data acquisition, which increases the throughput of the scanner.

The Aquilion 4 is equipped as standard with real-time multislice tomography (Aspire CI) software with a reconstruction speed of 12 images per second.

The system’s multitasking capabilities allow for patient registration and study protocol creation simultaneously with image reconstruction in the background, thereby increasing system performance.

The resulting images were processed using the RadiAnt DICOM Viewer program. The markup was carried out using the LabelMe program. The study included sinuses with a defect in the Schneiderian membrane in which the roots of the teeth were visualized in the lumen of the sinus.

3.2. Deep Learning Algorithms and Their Training

To continue our research on OMS data identification, the U-Net [10] architecture has been selected. U-Net is a convolutional network architecture for fast and precise segmentation of images. It is well suited to the segmentation of biomedical images and shows promising results on a dataset that consists of simple cases of OMS [25]. Training and testing were performed with a Keras [26] implementation of U-Net (Keras version 2.3.1). A Docker image has been created that makes it easy to replicate a working environment for training and running a deep learning model. The network was trained end-to-end using a binary cross-entropy loss function, an Adam optimizer, a learning rate of 0.01, and the Exponential Linear Unit (ELU) as an activation function. The size of the input image is 128 × 128 × 3 and that of its mask is 128 × 128. Epochs, batch sizes, and validation split were tuned on a remote server with the following configuration: CPU: 2 × AMD EPYC 7413 24-core, 180 W, 2.65 GHz, 128 MB L3 cache, DDR4-3200, Turbo Core max. 3.60 GHz; GPU: 4 × NVIDIA Tesla A100 (NVLink), 80 GB; RAM: DDR4-3200, 512 GB. As a starting point for training, an 80:10:10 split between training, validation, and test sets was used. The study analyzed 48 models with different hyperparameters, but the highest accuracy achieved was only 0.772. Therefore, we decided to apply data augmentation with dataset images rotated clockwise by 90°, 180°, and 270°, as well as vertical and horizontal mirroring. After a series of experiments, horizontal mirroring showed the best result. Nine models with an accuracy of at least 0.80 were selected for further analysis.
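The augmentation variants listed above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' code: the function name `augment_pair` and the dictionary keys are hypothetical, and "horizontal mirroring" is assumed to mean a left-right flip. The same transform is applied to the image and its mask so that the two stay aligned.

```python
import numpy as np


def augment_pair(image, mask):
    """Return the five augmented (image, mask) pairs named in the text.

    `image` is assumed to be an H x W x 3 array and `mask` an H x W array.
    np.rot90 rotates counterclockwise for positive k, so clockwise
    rotations use negative k.
    """
    return {
        "rot90_cw": (np.rot90(image, k=-1), np.rot90(mask, k=-1)),
        "rot180": (np.rot90(image, k=2), np.rot90(mask, k=2)),
        "rot270_cw": (np.rot90(image, k=-3), np.rot90(mask, k=-3)),
        # Vertical mirroring: flip rows (top <-> bottom).
        "flip_vertical": (np.flipud(image), np.flipud(mask)),
        # Horizontal mirroring (assumed left <-> right flip).
        "flip_horizontal": (np.fliplr(image), np.fliplr(mask)),
    }
```

Because the inputs are square (128 × 128), every variant keeps the original shape, so augmented pairs can be appended directly to the training set.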

3.3. Model’s Performance Evaluation

When evaluating a machine learning model, predictions can be classified into four categories: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). To evaluate the segmentation performance of the model, metrics such as accuracy, sensitivity, and specificity have been used [27]. These are based on a comparison of the segmentation results of the model against manual segmentation labels. As an accuracy metric, the Jaccard Index (Intersection over Union, IoU) [28] has been selected. It allows a comparison of the ground truth mask (the mask manually drawn by an expert) with the resulting mask.

Accuracy is calculated with the Intersection over Union metric (the Jaccard index), which quantifies the percent overlap between the target mask and the prediction output:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

Sensitivity refers to the model’s ability to correctly detect regions with the condition:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$

Specificity refers to the model’s ability to correctly reject regions without the condition:

$$\mathrm{Specificity} = \frac{TN}{TN + FP}$$
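As an illustration, the three metrics can be computed pixel-wise from a ground truth mask and a predicted probability map. The sketch below uses the standard definitions in terms of TP, FP, TN, and FN (IoU as TP / (TP + FP + FN), sensitivity as TP / (TP + FN), specificity as TN / (TN + FP)). The function name `segmentation_metrics` and the 0.5 threshold are assumptions made for this example, not details given in the paper.

```python
import numpy as np


def segmentation_metrics(y_true, y_pred, threshold=0.5):
    """Pixel-wise IoU, sensitivity, and specificity for a binary mask.

    `y_true` holds 0/1 labels; `y_pred` holds probabilities (for example
    sigmoid outputs) that are binarized at `threshold` (an assumed value).
    """
    truth = np.asarray(y_true).astype(bool)
    pred = np.asarray(y_pred) >= threshold

    tp = int(np.sum(truth & pred))    # lesion pixels predicted as lesion
    fp = int(np.sum(~truth & pred))   # background predicted as lesion
    fn = int(np.sum(truth & ~pred))   # lesion pixels that were missed
    tn = int(np.sum(~truth & ~pred))  # background predicted as background

    def ratio(numerator, denominator):
        # Undefined ratios (empty denominators) are reported as NaN.
        return numerator / denominator if denominator else float("nan")

    return {
        "iou": ratio(tp, tp + fp + fn),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }
```

For a 2 × 2 example with truth `[[1, 1], [0, 0]]` and predictions `[[0.9, 0.2], [0.7, 0.1]]`, each of TP, FP, FN, and TN equals 1, so the IoU is 1/3 and both sensitivity and specificity are 0.5.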

3.4. Questionnaire

This research is aimed at solving one of the main medical problems in otolaryngology—the diagnostics of chronic odontogenic maxillary sinusitis. As is well-known, to make this diagnosis, it is important not only to have MSCT scans but also to conduct a patient interview, anamnesis, and examination according to generally accepted rules. In the conditions of a lack of time and a heavy workload of medical staff, it was decided to develop an intelligent decision support system in the form of a chatbot. For this purpose, we invited the doctor and the patient to answer several questions. The first block of questions consists of the personal data of patients. Before filling it out, the patient will be asked to give online informed consent to the processing of their data. The next section must be filled in by the patient and is devoted to complaints relating to the ENT organs. The main attention is paid to the detection of the one-sidedness of the lesion, which may be indicated by symptoms of one-sided nasal congestion and nasal discharge on one side. In addition, the patient can already indicate the presence of problems with their teeth during the survey. The question about the presence of frequent sneezing and itching in the nasal cavity is aimed to help to differentiate odontogenic rhinosinusitis from an allergic reaction.

The next block of questions should be filled in by a medical professional (an otolaryngologist), if necessary after consultation with a dentist or maxillofacial surgeon. All questions in this section are also aimed at identifying the one-sidedness of the process, which is more typical for odontogenic maxillary sinusitis.

The final section consists of questions on the results of MSCT, which can help make a final diagnosis.

3.5. Intelligent Decision Support System for Differential Diagnosis

An intelligent decision support system for differential diagnosis of chronic odontogenic rhinosinusitis is implemented as an intelligent chatbot integrated with a computer vision model. The architecture of the intelligent chatbot is presented in the form of a use-case diagram in Figure 2.

Use-case diagrams are behavior diagrams used to describe a set of actions (use cases) that a particular system or systems (the subject) must or can perform in cooperation with one or more external users of the system (actors). Each use case should provide some observable and valuable outcome for the participants or other stakeholders of the system.

Use cases allow the capturing of the requirements of systems design, describing the functionality that those systems provide, and defining the requirements that systems place on their environment.

The intelligent chatbot has two levels of users: patients and doctors. The patient fills in the anamnesis data, choosing from the proposed options. The anamnesis that the patient fills in includes data on nasal breathing, headache frequency, duration, localization, runny nose frequency, nasal discharge, dental problems, sneezing frequency, nasal itching, and lacrimation. The doctor chatbot subsystem allows various doctors to enter data about a particular patient. So, based on the examination, the otolaryngologist enters data on the presence of swelling of the soft tissues of the face. Rhinoscopy data are entered by an otolaryngologist, if necessary, after consultation with a dentist and maxillofacial surgeon. This includes the presence of pain on palpation of the exit points of the I and II branches of the trigeminal nerve, the characteristics of the nasal mucosa, the presence of edema of the nasal mucosa, the nature of nasal breathing, the presence of secretions in the nasal cavity, the presence of polyps in the nasal cavity, and the presence of a pseudochoanal polyp. The dentist enters data on the presence of inflammatory changes in the dental and jaw system. The study of the results of the patient’s spiral computed tomography is made by an otolaryngologist and includes the thickness of the lower wall of the maxillary sinus, the density of the lower wall of the maxillary sinus, the severity of pathological changes in the mucous membrane of the maxillary sinus, the presence of a defect in the lower wall of the maxillary sinus, the presence of mycetoma in the sinus, and the presence of foreign bodies in the sinus (filling material, particles of teeth roots, implants).
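The data entered by the different users can be pictured as one structured record per patient. The sketch below is only an illustration of that idea: all class names, field names, and field types are hypothetical and chosen to mirror the items listed above, not taken from the authors' implementation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PatientAnamnesis:
    """Answers chosen by the patient from the proposed options."""
    nasal_breathing: str
    headache_frequency: str
    headache_duration: str
    headache_localization: str
    runny_nose_frequency: str
    nasal_discharge: str
    dental_problems: bool
    sneezing_frequency: str
    nasal_itching: bool
    lacrimation: bool


@dataclass
class EntExamination:
    """Examination and rhinoscopy data entered by the otolaryngologist."""
    facial_soft_tissue_swelling: bool
    trigeminal_exit_point_pain: bool
    nasal_mucosa_characteristics: str
    nasal_mucosa_edema: bool
    nasal_breathing: str
    nasal_secretions: bool
    nasal_polyps: bool
    pseudochoanal_polyp: bool


@dataclass
class DentalExamination:
    """Data entered by the dentist."""
    inflammatory_changes: bool


@dataclass
class MsctFindings:
    """MSCT assessment; numeric units are not specified here (assumption)."""
    lower_wall_thickness: float
    lower_wall_density: float
    mucosal_change_severity: str
    lower_wall_defect: bool
    mycetoma: bool
    foreign_bodies: List[str]  # e.g. filling material, root particles, implants


@dataclass
class CaseRecord:
    """One patient case; each section is filled in by a different user."""
    anamnesis: Optional[PatientAnamnesis] = None
    ent_examination: Optional[EntExamination] = None
    dental_examination: Optional[DentalExamination] = None
    msct_findings: Optional[MsctFindings] = None

    def missing_sections(self) -> List[str]:
        # Sections still set to None have not been entered yet.
        return [name for name, value in vars(self).items() if value is None]
```

Keeping the sections optional reflects the workflow described above, in which the patient, the otolaryngologist, and the dentist contribute their parts at different times; `missing_sections()` then shows what is still outstanding before a case is complete.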

Security is provided by the built-in tools of the environment in which it is deployed. A chatbot is created through a special token created by the environment and hidden from prying eyes. This token is an identifier, and only through it can changes be made to the bot code. Without rights, the user’s request will not be processed, thereby not allowing the user to influence the bot’s work. Thus, violation of data privacy or leakage is almost impossible. The most vulnerable point is a DDoS attack on the environment server or the server on which the database and the bot code are located.

3.6. Architecture of Computer Vision Module

Morphological processing refers to tools for extracting image components that represent and describe form. Segmentation procedures divide images into component parts or objects. In general, autonomous segmentation is one of the most challenging tasks in digital image processing. A reliable segmentation procedure greatly facilitates the solution of image problems that require individual object identification. Conversely, weak or unstable segmentation algorithms almost always lead to eventual failure. In general, the more accurate the segmentation, the greater the likelihood of recognition success.

The UML communication diagram for the image processing module is presented in Figure 3.

The main feature of the detection system is a classification method based on machine learning. We propose the use of computer vision and machine learning for chronic odontogenic rhinosinusitis segmentation. The scheme of operation of the recognition module is shown in Figure 4.

4. Results

4.1. Model Performance Evaluation

The results of experiments for models with an accuracy of at least 0.80, trained on the dataset augmented with horizontal mirroring, are presented in Table 1.

Each of the 9 models consists of 19 convolutional layers and 4 transposed convolution layers, giving a total of 23 layers. The general structure of the model is described below. Input images of size 128 × 128 × 3 in .png format, with .json masks of size 128 × 128, are passed to two consecutive convolution layers with filters of size 3 × 3. The first convolution layer uses 16 filters of size 3 × 3, the ELU as an activation function, and “he_normal” as a weight initialization method. The output of the first convolution layer is connected to a dropout layer with a dropout rate of 0.1, which helps to avoid overfitting. The result is then convolved again with 16 filters of size 3 × 3 and goes into a max-pooling layer with a size of 2 × 2. This constitutes block 1 of the model. The second block has almost the same structure; the only difference is that the number of filters is doubled to 32. The third block is built according to the same structure (a convolution layer followed by a dropout layer, a second convolution layer, and max-pooling); however, the number of filters is now 64 and the dropout rate is 0.2. The fourth block differs from the third only in the number of filters, which is now 128. The fifth block consists of a convolution layer with 256 filters followed by a dropout layer and a further convolution layer, but it does not use max-pooling. Blocks 6–9 of the model follow. Their structure differs from the previous blocks by the addition of two new layers, Conv2DTranspose and concatenate.
In block 6, both the Conv2DTranspose layer and the convolution layers use 128 filters, and the dropout rate is 0.1: the Conv2DTranspose layer applies 128 filters of size 2 × 2 with stride 2 to the output of block 5, and the convolution layers use 128 filters of size 3 × 3. In order to preserve the contextual information, the output from the convolution layer of block 4 before max-pooling is concatenated with the output of the Conv2DTranspose layer of block 6. Blocks 7 to 9 follow the same structure, i.e., each block has one transposed convolution layer (Conv2DTranspose), one concatenate layer, two convolution layers, and one dropout layer. For the 7th block, the Conv2DTranspose layer uses 64 filters of size 2 × 2, and the convolutional layers use 64 filters of size 3 × 3. Additionally, the output of the block is concatenated with the convolution layer of block 3. For the 8th block, the Conv2DTranspose layer uses 32 filters of size 2 × 2, and the convolutional layers use 32 filters of size 3 × 3. The output is concatenated with block 2 before max-pooling and the dropout is now 0.1. Finally, block 9 uses 16 filters of size 2 × 2 for the Conv2DTranspose layer and 16 filters of size 3 × 3 for the convolutional layers. The output is concatenated with block 1 before max-pooling and the dropout is now 0.1. As U-Net is a fully convolutional deep learning model, the last layer is a convolution layer. As an activation function, the sigmoid function has been chosen (outputs = Conv2D(1, (1, 1), activation='sigmoid')(c9)). In addition, a binary cross-entropy loss function, an Adam optimizer, and a learning rate of 0.01 were used to train the model. It should be noted that models 1–9 differ only in the combination of such model hyperparameters as batch size, number of epochs, and validation split, which makes it possible to determine the optimal combination of their values.
All other parameters of the models, such as the number of convolution layers, the number and size of filters for each layer, the activation function, and others, are the same for all nine models. According to Table 1, the models have the following parameters: model 1: batch size 2, 10 epochs, validation split 0.33; model 2: batch size 2, 50 epochs, validation split 0.10; model 3: batch size 8, 20 epochs, validation split 0.33; model 4: batch size 8, 50 epochs, validation split 0.10; model 5: batch size 16, 20 epochs, validation split 0.10; model 6: batch size 16, 50 epochs, validation split 0.10; model 7: batch size 16, 50 epochs, validation split 0.33; model 8: batch size 32, 20 epochs, validation split 0.10; model 9: batch size 32, 50 epochs, validation split 0.10.
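The block structure described in this section can be checked with a small, dependency-free trace of the weight layers. This is a sketch under stated assumptions rather than the authors' Keras code: the function name `trace_unet_layers` is hypothetical, and "same" padding is assumed so that convolutions keep the spatial size and the output mask matches the 128 × 128 input. Dropout, max-pooling, and concatenate layers carry no convolution weights and are therefore not listed.

```python
def trace_unet_layers(input_size=128, base_filters=16, depth=4):
    """List (block, layer type, filters, output size) for each weight layer."""
    layers = []
    size, filters = input_size, base_filters

    # Contracting path (blocks 1-4): two 3x3 convolutions, then 2x2
    # max-pooling halves the spatial size and the filter count doubles.
    for block in range(1, depth + 1):
        layers += [(block, "conv3x3", filters, size)] * 2
        size //= 2
        filters *= 2

    # Bottleneck (block 5): two 3x3 convolutions, no max-pooling.
    layers += [(depth + 1, "conv3x3", filters, size)] * 2

    # Expanding path (blocks 6-9): a 2x2 transposed convolution with
    # stride 2 doubles the spatial size, followed by two 3x3 convolutions.
    for block in range(depth + 2, 2 * depth + 2):
        filters //= 2
        size *= 2
        layers.append((block, "conv_transpose2x2", filters, size))
        layers += [(block, "conv3x3", filters, size)] * 2

    # Output layer: a 1x1 convolution with one sigmoid-activated filter.
    layers.append(("output", "conv1x1", 1, size))
    return layers


if __name__ == "__main__":
    for entry in trace_unet_layers():
        print(entry)
```

With the default arguments the trace contains 19 convolution layers and 4 transposed convolution layers, 23 in total, with filter counts 16, 32, 64, 128, 256 on the way down and 128, 64, 32, 16 on the way up, which agrees with the counts given in the text.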

As Table 1 also shows, the highest accuracy of 0.909 was achieved by model 7 with batch size 16, number of epochs 50, and validation split 0.33. The qualitative segmentation results for two sample images from the dataset are presented in Figure 5.

4.2. Intelligent Decision Support System for Differential Diagnosis Architecture

A component diagram describes the concept of a chatbot using various components, nested components, and required and provided interfaces. Interfaces show how components communicate with each other. The simulated chatbot identifies the entities and intents in the user’s message and generates a response based on the knowledge represented by the templates. The response can be a text message and sometimes an action.

The hardware aspect of the chatbot and how it works is connected to the software.

Figure 6 illustrates the UML diagram of the application’s components.

The component diagram shows the components, the provided and required interfaces, ports, and the connections between them. This type of diagram is used in component-based development (CBD) to describe systems with a service-oriented architecture (SOA).

Component-based development is based on the assumptions that previously built components can be reused and that components can be replaced with other “equivalent” or “compatible” components if needed.

Artifacts that implement a component are designed to be independently deployed and redeployed, such as updating an existing system.

The client interface runs in a browser or can be standalone. It communicates with the Chatbot server via HTTP.

The Chatbot server hosts various services that run the main Chatbot logic, which contains the following components: Messenger, Chatbot Server, Chatbot, Intent Classifier, Knowledge Base, Classifier Trainer, Entity Classifier, Response Generator, and Action Processor. While they all run as Docker containers on the same physical machine, they can also conceptually run on different machines.

Hosted on one or more machines, AI microservices provide different machine learning models that can be used throughout the conversation to facilitate message processing. Each microservice provides a RESTful HTTP API that can be used to query and retrieve data.

The system can conceptually have the following interfaces: Action API, Message API, Templates, Text Response, Intent, Entities, Action, User Message, and Knowledge.
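To make the interplay of the Intent Classifier, Entity Classifier, and Response Generator more concrete, the following toy sketch processes a single user message. It is purely illustrative: the keyword lists, template texts, and function names are hypothetical, and a simple keyword match stands in for the trained classifiers of the actual system.

```python
import re

# Hypothetical knowledge: intents with trigger keywords and reply templates.
INTENT_KEYWORDS = {
    "report_congestion": ("congestion", "blocked", "stuffy"),
    "report_discharge": ("discharge", "runny"),
}
TEMPLATES = {
    "report_congestion": "Recorded nasal congestion ({side}).",
    "report_discharge": "Recorded nasal discharge ({side}).",
    "unknown": "Sorry, I did not understand. Please choose one of the listed options.",
}


def classify_intent(message):
    """Return the first intent whose keywords occur in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & set(keywords):
            return intent
    return "unknown"


def extract_entities(message):
    """Extract the affected side, which matters for one-sided lesions."""
    match = re.search(r"\b(left|right|both)\b", message.lower())
    return {"side": match.group(1) if match else "side not specified"}


def generate_response(message):
    """Fill the template of the detected intent with the extracted entities."""
    intent = classify_intent(message)
    entities = extract_entities(message)
    return TEMPLATES[intent].format(**entities)
```

For example, `generate_response("My nose is blocked on the left")` returns `"Recorded nasal congestion (left)."`, while a message without any known keyword falls back to the `unknown` template. In the described architecture each of these steps would run as a separate component behind its own interface.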

The digital imaging system consists of six steps: image acquisition, preprocessing, feature extraction, associative storage, knowledge base, and recognition, as shown in Figure 7.

The first step in the process is to acquire or capture a digital image. A digitized image is a function f(x,y) in which both the spatial coordinates and the brightness are digitized. The elements of the digitized array are called image elements or pixels. The image acquisition stage concerns the sensors that capture the image. The sensor can be a camera or a scanner. The nature of the sensor and of the image it creates is determined by the application. The pre-processing stage deals with the perception of brightness, as well as the restoration and reconstruction of the image. Image restoration refers to the estimation of the original image. Unlike enhancement, which is subjective, image restoration is objective in the sense that restoration methods are typically based on mathematical or probabilistic models of image deterioration. Enhancement, by contrast, is based on the individual’s subjective preferences as to what constitutes a good result.

5. Discussion

A system that provides decision-making support to physicians during diagnosis has been created, which enables the integration of patient interviews, physician examinations, and MSCT analysis. It is crucial to implement this information system in healthcare organizations and institutions that have a shortage of specialized professionals. This is because it enhances diagnostic accuracy, reduces examination time and patient questioning, and organizes the patient information received.

Some studies have used a computer vision approach in otolaryngology. The authors of [29] applied an automated machine learning approach to create a computer vision algorithm for otoscopic diagnosis capable of providing greater accuracy than trained physicians. The authors achieved an accuracy of 90.9%, higher than the doctors’ accuracy of 58.9%. The authors used otoscopic images from open-access repositories. The authors of [30] proposed an AI-assisted image classification algorithm for sorting otoscopic images of children from rural and remote areas of Australia and the Torres Strait Islands. The algorithm achieved an accuracy of 99.3% for acute otitis media, 96.3% for chronic otitis media, 77.8% for otitis media with effusion (OME), and 98.2% for wax/canal obstruction classification. At the same time, the accuracy of differentiating between various diagnoses ranged from 74.4% to 92.8%.

Research has also been devoted to the use of machine learning in dentistry. The authors of [31] describe the use of artificial intelligence methods for dental radiography. They identify the following areas of application of data mining in this field: diagnosis of dental caries, periapical pathologies, and periodontal bone loss; classification of cysts and tumors; cephalometric analysis; screening for osteoporosis; tooth recognition and forensic dentistry; recognition of the dental implant system; and image quality improvement.

The authors of [32] evaluate the performance of deep CNN algorithms for detecting and diagnosing dental caries on periapical radiographs, using a pre-trained GoogLeNet Inception v3 network for preprocessing and transfer learning. The diagnostic accuracy for premolars, molars, and both premolars and molars was 89.0% (80.4–93.3), 88.0% (79.2–93.1), and 82.0% (75.5–87.1), respectively. The authors of [33] tested the accuracy of bone loss classification in radiography using machine learning. The proposed model demonstrated a mean accuracy of 0.87 ± 0.01 in classifying mild (<15%) or severe (≥15%) bone loss with five-fold cross-validation.

In addition, some studies are aimed at supporting decision-making by doctors in otolaryngology. For example, the authors of [34] propose an algorithm model for decision support system software to support diagnostics in dentistry. The concept proposed by the authors is technically feasible, can support clinician selection based on scientific evidence and up-to-date references, and can help obtain informed consent based on data that is easily understandable to the patient. The authors of [35] propose a clinical decision support system to help general practitioners evaluate the need for orthodontic treatment in patients with permanent dentition. A Bayesian network was chosen as the primary model for assessing the need for orthodontic treatment. Each variable is modeled as a node, and the causal relationship between two variables is represented as a directed arc. To assess the quality of the proposed system, the authors compare its accuracy with that of experienced doctors. The authors of [36] develop and test an open case-based decision support system for making a diagnosis in oral pathology. The system is based on Bayes’ theorem and linked to a relational database. As a result of the implementation, the system allowed the authors to create and manage a database of pathologies.
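
To make the role of Bayes’ theorem in such decision support systems concrete, the following minimal sketch updates prior probabilities of candidate diagnoses with the likelihoods of observed findings. It is not the implementation used in [35] or [36]. It assumes that findings are conditionally independent given the diagnosis (a naive Bayes simplification), and the diagnosis names, finding names, and all probabilities are hypothetical placeholders with no clinical meaning.

```python
def posterior(priors, likelihoods, findings):
    """Return P(diagnosis | findings) for every diagnosis.

    priors:      {diagnosis: P(diagnosis)}
    likelihoods: {diagnosis: {finding: P(finding | diagnosis)}}
    findings:    iterable of observed findings
    Assumes findings are conditionally independent given the diagnosis.
    """
    unnormalized = {}
    for diagnosis, prior in priors.items():
        probability = prior
        for finding in findings:
            probability *= likelihoods[diagnosis][finding]
        unnormalized[diagnosis] = probability
    evidence = sum(unnormalized.values())    # P(findings), the normalizer
    if evidence == 0:
        raise ValueError("observed findings are impossible under every diagnosis")
    return {d: p / evidence for d, p in unnormalized.items()}


# Hypothetical numbers chosen only to make the arithmetic easy to follow.
priors = {"diagnosis_a": 0.2, "diagnosis_b": 0.8}
likelihoods = {
    "diagnosis_a": {"finding_1": 0.9},
    "diagnosis_b": {"finding_1": 0.1},
}
result = posterior(priors, likelihoods, ["finding_1"])
```

With these placeholder values, the unnormalized scores are 0.2 × 0.9 = 0.18 and 0.8 × 0.1 = 0.08, so observing the finding raises the probability of the first diagnosis from 0.2 to 0.18 / 0.26.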

Thus, to the best of our knowledge, no existing system combines image processing with intelligent decision support for otolaryngologists.

Our study addressed one of the most challenging issues of modern otolaryngology, dentistry, and maxillofacial surgery: the diagnosis of OMS. It should be noted that although many works have been devoted to this problem over the years [37], none of the researchers obtained sufficiently convincing results. The conducted study differs from similar ones primarily in the material under study. The object of our study was the MSCT results that are most difficult to diagnose. When selecting the material, we took into account the fact that simple clinical examples of odontogenic maxillary sinusitis usually do not require additional research, and the diagnosis is easily established by a radiologist. Complex cases (for example, patients after surgical interventions in the area of the dentoalveolar system, or with additional implants) require special care and diagnostic accuracy. In such cases, both the quality and the speed of making a correct X-ray diagnosis suffer in comparison with simpler cases.

The second distinguishing feature of the study is its integrated approach. This is achieved not only by analyzing medical (MSCT) images but also by developing a chatbot system designed to help differentiate odontogenic maxillary sinusitis from other inflammatory diseases of this anatomical region. As is well known, the correct clinical diagnosis cannot be made based only on the results of X-ray studies. Only an analysis of the patient’s complaints, anamnesis morbi, anamnesis vitae, objective examination data, and additional research methods (including MSCT) makes it possible to establish the correct diagnosis and therefore select the correct treatment.

Considering all of the above, the current study is modern, comprehensive, and informative, even for the analysis of diagnostically difficult cases of odontogenic maxillary sinusitis.

The proposed deep learning model achieves good performance on MSCT image segmentation. To achieve this result, we investigated 48 different solutions that combined data augmentation techniques, the ELU activation function, and the optimization of model hyperparameters. Model training showed that performance is highest at 50 epochs and that a further increase in the number of epochs does not produce further improvement. A similar conclusion can be drawn regarding the batch size: models with a batch size of more than 32 did not show the expected performance. After training each of the 48 models, the best combination was identified as batch size 16, 50 epochs, and validation split 0.33.
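
The search over 48 hyperparameter combinations can be sketched as an exhaustive grid search. The exact grid is not listed in this section, so the candidate values below are assumptions chosen only so that their product is 48 and so that they include the reported best combination. The function `placeholder_score` is a hypothetical stand-in for training a model and returning its validation accuracy; it does not reproduce any result of the study.

```python
from itertools import product

# Assumed candidate values (4 x 4 x 3 = 48 combinations); not taken from the study.
BATCH_SIZES = (8, 16, 32, 64)
EPOCHS = (25, 50, 75, 100)
VALIDATION_SPLITS = (0.2, 0.25, 0.33)


def build_grid():
    """Enumerate every combination of the candidate hyperparameter values."""
    return [
        {"batch_size": b, "epochs": e, "validation_split": v}
        for b, e, v in product(BATCH_SIZES, EPOCHS, VALIDATION_SPLITS)
    ]


def select_best(configs, train_and_score):
    """Return the configuration with the highest score.

    train_and_score(config) is expected to train one model with the given
    hyperparameters and return a validation metric (higher is better).
    """
    return max(configs, key=train_and_score)


def placeholder_score(config):
    """Hypothetical stand-in for a real training run. It only rewards closeness
    to the combination reported in the text so that the sketch runs without data."""
    return -(
        abs(config["batch_size"] - 16) / 16
        + abs(config["epochs"] - 50) / 50
        + abs(config["validation_split"] - 0.33)
    )


grid = build_grid()
best = select_best(grid, placeholder_score)
```

In practice, `placeholder_score` would be replaced by a function that fits the segmentation model with the given batch size, number of epochs, and validation split, and returns the resulting validation accuracy.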

Although automated decision support systems may help diagnose chronic rhinosinusitis, their application has several limitations. These limitations include:

Limited diagnostic accuracy: Although a decision support system may recognize patterns in imaging tests that are suggestive of chronic rhinosinusitis, its diagnostic accuracy may not be as high as that of a skilled clinician. Additionally, factors such as the quality of the imaging scans or the presence of other illnesses that mimic the signs of chronic rhinosinusitis may affect the system’s diagnostic accuracy.

Lack of clinical context: A decision support system might not be able to consider additional elements, such as the patient’s symptoms, medical history, or response to treatment, that are crucial for diagnosing chronic rhinosinusitis. False positive or false negative diagnoses could result from this.

Dependence on large datasets: A model’s performance is strongly influenced by the quality and size of the training dataset. If the dataset is small or biased, the system may fail to generalize to new cases and may perform poorly.

Ethical and legal issues: Data privacy, security, and system accountability are among the ethical and legal problems to consider when employing decision support systems in healthcare.

Cost and upkeep: Developing and maintaining information technology can be expensive and may also require specialized skills and knowledge to run well.

While decision support systems can help diagnose chronic rhinosinusitis, they should be used only in conjunction with the knowledge of skilled doctors and should not be relied upon to make final diagnoses.

6. Conclusions

The study is devoted to the diagnosis of odontogenic rhinosinusitis. An intelligent information system has been developed to support physicians’ decision-making when making a diagnosis, which combines patient interviews, examination of the patient by physicians, and MSCT analysis.

To this end, a dataset containing 162 MSCT images was created. Based on the U-Net architecture, a deep learning model for image segmentation was developed. Several experiments were carried out, showing a model accuracy of 90.09% with batch size 16, 50 epochs, and validation split 0.33.
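
This section does not state how the accuracy of the segmentation model is computed. As a hedged illustration, the sketch below assumes pixel-wise accuracy on binary masks and adds the Dice coefficient, a measure commonly reported alongside it for segmentation. The function names and the tiny example masks are hypothetical and are not data from the study.

```python
def _paired_pixels(predicted, target):
    """Flatten two equally sized 2D binary masks into (predicted, target) pairs."""
    if len(predicted) != len(target) or any(
        len(p_row) != len(t_row) for p_row, t_row in zip(predicted, target)
    ):
        raise ValueError("masks must have the same shape")
    return [
        (p, t)
        for p_row, t_row in zip(predicted, target)
        for p, t in zip(p_row, t_row)
    ]


def pixel_accuracy(predicted, target):
    """Fraction of pixels whose predicted label equals the reference label."""
    pairs = _paired_pixels(predicted, target)
    return sum(1 for p, t in pairs if p == t) / len(pairs)


def dice_coefficient(predicted, target):
    """2 * |P ∩ T| / (|P| + |T|) for binary masks; 1.0 if both masks are empty."""
    pairs = _paired_pixels(predicted, target)
    intersection = sum(1 for p, t in pairs if p == 1 and t == 1)
    total = sum(p for p, _ in pairs) + sum(t for _, t in pairs)
    return 1.0 if total == 0 else 2 * intersection / total


# Hypothetical 2 x 3 masks: 1 marks the segmented region, 0 the background.
reference_mask = [[0, 1, 1], [0, 1, 0]]
predicted_mask = [[0, 1, 0], [0, 1, 1]]

accuracy = pixel_accuracy(predicted_mask, reference_mask)
dice = dice_coefficient(predicted_mask, reference_mask)
```

Pixel accuracy can be dominated by the background when the segmented region is small, which is one reason overlap measures such as Dice are often reported together with it.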

The modules for examining and questioning patients are implemented with the help of an intelligent chatbot, which allows the user to combine examination data from various doctors with an automated patient survey.

The model and the chatbot are combined into a single intelligent decision support system for the differential diagnosis of chronic odontogenic rhinosinusitis.

It is essential to use the proposed information system in healthcare systems and institutions with a limited number of specialists because it not only increases diagnostic accuracy and reduces the time needed to examine and question the patient but also systematizes the information received about patients.

The scientific novelty of the study lies in the fact that a model for the segmentation of MSCT images with odontogenic maxillary sinusitis was obtained. It is based on the U-Net architecture and provides good performance on a small data set in less than 2 h. The practical novelty of the study lies in the fact that, for the first time, the system of automated interaction with the patient and the deep learning model for the analysis of MSCT are combined, which makes it possible to increase efficiency in making a diagnosis.