1. Introduction

As robots are increasingly deployed close to humans, higher requirements are placed on them. Human-like motion is one of the important issues, especially for humanoid service robots, advanced industrial robots, and assistive robots [1]. The future advanced industrial robot is a potential application area. Although industrial robots are developing in the direction of intelligence [2,3], their tasks are relatively simple. Unlike the tasks of traditional industrial robots, the tasks of anthropomorphic arms are more complex and varied. In addition, more and more anthropomorphic arms work with humans and can even be companions of humans. They can work in spaces ergonomically designed for human workers and are safe enough to work together with human workers [4]. Generating human-like movements can also help assistive robots, such as wearable exoskeleton robots, to support or enhance natural body movements for disabled people [5]. Some rehabilitation robots are already on the market. Currently, the new trends in robotics research have been denominated service robotics because of their general goal of moving robots closer to human social needs [6]. The humanoid robot is an important branch of service robotics. People hope that these robots are human-like not only in their structures but also in their intelligence and movements [7]. Anthropomorphic arms provide humanoid robots with a powerful manipulation ability. Beyond the anthropoid shape, humans prefer that anthropomorphic arms have the manipulation ability of humans. During Human Robot Interaction (HRI), the human-like motions of anthropomorphic arms can increase not only the comfort and security of the users but also the efficiency of HRI. Thus, research on human-like motion determines whether humanoid robots meet the requirements of the tasks in HRI.

The key issue is to generate anthropoid arm postures [8]. The methods are mostly classified into two categories: index optimization and feature extraction. The former predicts arm postures by optimizing Human Performance Measures (HPMs). The early HPM is mainly based on psychophysical discomfort [9,10]. This HPM indicates that the farther the joint angle is from the center angle, the more uncomfortable humans feel. In addition, some HPMs such as maneuverability [11], minimal effort [12], obstacle avoidance [13] and joint limits [14] are used to evaluate human-likeness. As research continues, other HPMs are extracted from different disciplines. Colim et al. [15] used the rapid upper limb assessment (RULA) from ergonomic research to analyze an industrial implementation of a collaborative robotic workstation. Rosell et al. [16] proposed the concept of “principal motion directions” to reduce the dimension of the search space and obtain results with a compromise between motion optimality and planning complexity. Mainly inspired by established theories of human motor control, Gulletta et al. [17] presented a human-like upper-limb motion algorithm to generate collision-free trajectories with human-like characteristics. The latter predicts arm postures by extracting the features of human arms. Asfour et al. [18] used stereo vision to capture the feature points of human arms, and employed the hidden Markov model to learn the statistical features of the Cartesian trajectories and achieve tasks. Tomić et al. [19] presented a conversion process for the imitation of human arm motion by a humanoid robot. The conversion process consists of an imitation algorithm and an algorithm for generating the human-like motion of the humanoid. Given that natural human movement exhibits several robust features, Maurice et al. [20] examined whether human–robot physical interaction is facilitated when these features are considered in robot control. Results showed that the applied force was significantly lower when the robot moved with a biological velocity pattern. Gielniak et al. [21] proposed a three-stage pipeline to improve the clarity of robot motion by making it more human-like. Yang et al. [22] proposed a fuzzy control scheme to solve the dual-arm robot control with uncertain kinematics and dynamics. To make robots engage in physical collaboration with humans, Noohi et al. [23] proposed a model to compute the interaction force during a dyadic cooperative object manipulation task. Whether they are based on index optimization or feature extraction, these methods ignore the influence of arm models. In fact, human arm movements are complex [24]. During the movement, arm states change constantly. Different numbers and combinations of joints form different arm states [25]. Human arms can perform different and complex tasks through the coordination and cooperation of different joints. Thus, the arm motion models are diversified.

Due to the diversity of arm motion, how to select a proper motion model among different models becomes an important issue. Many basic methods have been employed in the research of robot decision-making [26]. Perula–Martinez et al. [27] proposed a decision-making system for social robots that prefers to engage them in the interaction. Huang et al. [28] combined the traditional drift diffusion model and the null-space-based behavioral control method to build a human decision-making behavior model for HRI. Fu et al. [29] proposed a group decision-making methodology for handling the multiple-criteria robot selection problem. To mitigate the burden on humans in conventional surveillance systems, Witwicki et al. [30] proposed an architecture for an intelligent surveillance system. The integration of components in this system supports fully automated decision-making. Due to its unique strengths both in inference and in visualization, the Bayesian network (BN) is widely used in the field of robotics [31,32]. Artemiadis et al. [33] described the dependencies among the human joint angles using a BN. Magnenat et al. [34] proposed a learning-from-demonstration framework based on the BN. This framework combines the demonstrated commands according to the similarity between the demonstrated sensory trajectories and the current replay trajectory. Taking into account the characteristics of the decision-making of a soccer robot, Liu [35] analyzed the role transformation and experience sharing of multi-agent reinforcement learning, applied it to the local attack strategy of a soccer robot, and used this algorithm to learn the action selection strategy of the main robot in the team.

The most direct method to generate human-like movements is solving the inverse kinematics (IK) of anthropomorphic arms. However, traditional IK methods have some inevitable drawbacks [36]. Additionally, the features of human arm movement lack clear physical interpretations. The analysis of inverse kinematics is complex. In most cases, it yields multiple solutions. Furthermore, an analytic solution exists only for an ideal robot model when the structure of the robot meets certain conditions. How to choose the natural arm postures from the infinite postures at one target point is an important and challenging problem. Recently, most IK solutions have been approached through the Artificial Neural Network (ANN). ANNs can closely approximate a nonlinear function without knowing its specific physical interpretation. When the number of samples is large enough, ANNs are more accurate. Banga et al. [37] highlighted that feed-forward ANNs and Bees Back propagation via ANN were good means to solve IK problems and optimize the whole system. Toquica et al. [38] proposed a deep learning approximation model based on three different networks for the inverse kinematic problem of an industrial parallel robot. The network model is found and compared against a closed analytical form. Jiménez et al. [39] used an ANN composed of three hidden layers to solve the IK problem of a 3DOF open kinematic chain. The parameters of a traditional neural network are usually selected empirically and by trial and error, which may be biased and inefficient. Thus, Zhang et al. [40] proposed a broad neural network to approximate the unknown terms of the robots. This method can reuse the motion controller without relearning its weight parameters.

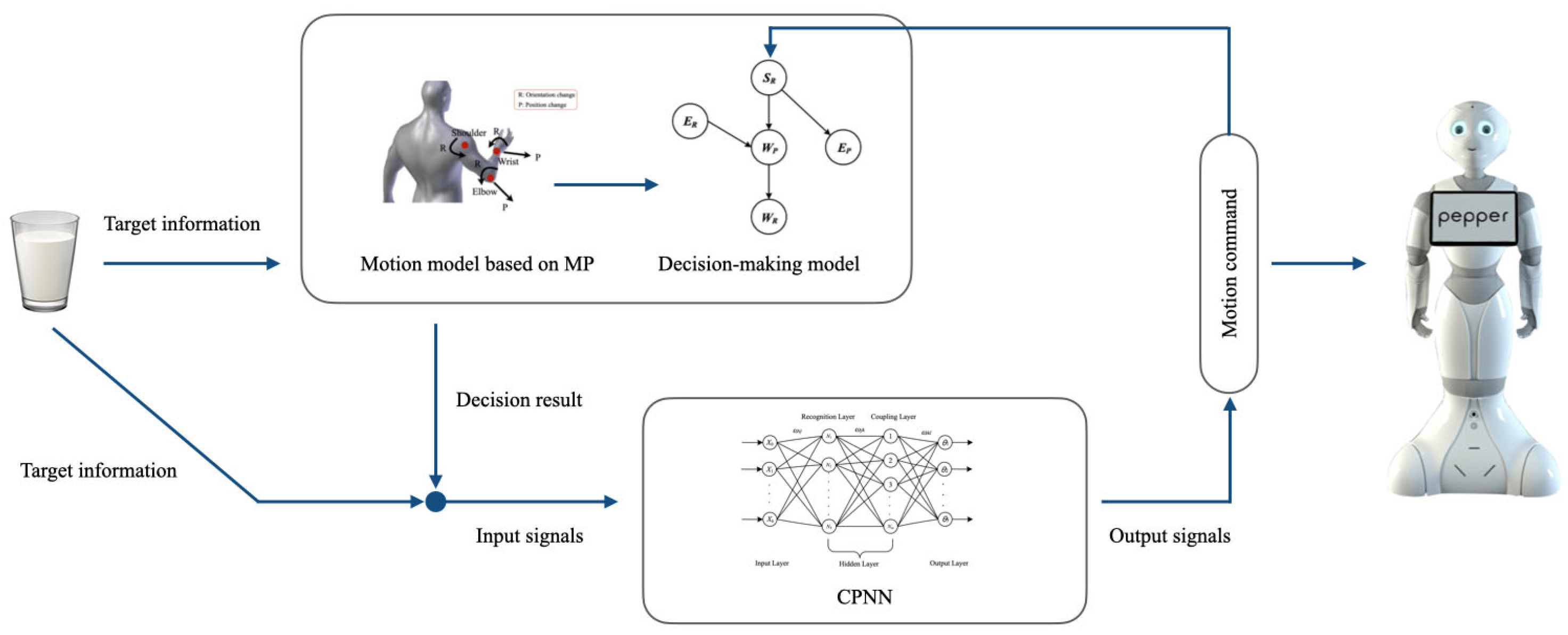

In previous work, we built a simple arm motion model based on movement primitives (MPs). The Bayesian network was used to choose the MP. A mixed IK algorithm, including a method based on geometrical constraints and a method based on an index, was proposed to solve the IK problems. However, we ignored the influence of the motion process. The mixed IK algorithm cannot satisfy the accuracy requirement due to the diversity of arm motion models. Thus, in this paper, further studies have been conducted based on the previous work. The contribution of this paper is an IHMP for anthropomorphic arms that improves the accuracy and efficiency of human-like arm movements. With the IHMP, the robot can generate human-like arm movements accurately and autonomously. To achieve this purpose, a diversified arm motion model is proposed to represent different human arm movements. The robot can mimic various human-like movements using this model. Although the diversified motion models can approximate real human arm movements, how to choose a proper motion model among different models is critical. Thus, the BN is used to help the robot predict and determine the motion. The motion variables are extracted and the conditional dependencies in different models are built. With this decision-making model, the robot can choose the appropriate way of moving automatically. Finally, an IK method, the Coupling Neural Network (CPNN), is proposed to generate the joint trajectories of anthropomorphic arms quickly and accurately. Through the CPNN, the diversified motion models can be integrated into a single network and their features can be reflected by changing the network structure. The structure of the IHMP is shown in Figure 1.

The remainder of this paper is organized as follows: In Section 2, the diversified arm motion model is introduced. The decision-making method that helps the robot choose the suitable model is described in Section 3. The corresponding IK method to solve the IK problems is shown in Section 4. Section 5 shows the results of experiments. Section 6 concludes the paper.

3. Decision-Making Model

Through the HPS-MP motion models, the human arm motion is divided into different MPs, and different MPs thus lead to different forms of motion. In actual movements, the anthropomorphic arm needs to choose the suitable motion models to complete a variety of operational tasks. In this paper, we use the powerful reasoning ability of the Bayesian network to establish a decision-making model.

3.1. Motion Variables

MPs are composed of movement elements, and different combinations of movement elements constitute different MPs. Therefore, the process of selecting MPs is the process of selecting and reorganizing movement elements. Five movement elements (SR, EP, ER, WP, WR) are used as motion variables to establish a BN. According to the arm tree structure, there is a direct causal relationship between the movement elements. From the collected human arm movement data, the prior probability of each motion variable can be obtained, and from it the conditional probability between the variables. Each motion variable follows an exponential distribution whose parameter depends on the attributes of the variable. Among the motion variables, EP and WP are functions of distance. WP is related to the absolute value of the difference between the distance from the shoulder to the target position and the distance from the shoulder to the wrist. EP is related to the absolute difference between the distance from the elbow to the target position and the forearm length. The motion variables SR, ER and WR are functions of the target orientation. Therefore, the Exponential Probability Density Function (EPDF) is used to calculate the occurrence probability of each motion variable. Each motion variable has a corresponding EPDF, whose parameter λ is estimated by maximum likelihood and verified by leave-one-out cross-validation. From the probability distribution of each motion variable, the dependency between the variables can be calculated under different constraints.
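The estimation step described above can be sketched as follows. This is a minimal illustration that assumes the standard exponential density λ·exp(−λx) and a mean held-out log-likelihood as the leave-one-out score; the function names and the sample distances are hypothetical and are not taken from the paper.

```python
import numpy as np

def fit_lambda(samples):
    """Maximum likelihood estimate of the exponential rate: 1 / sample mean."""
    samples = np.asarray(samples, dtype=float)
    return 1.0 / samples.mean()

def epdf(x, lam):
    """Exponential PDF: lam * exp(-lam * x) for x >= 0, and 0 otherwise."""
    x = np.asarray(x, dtype=float)
    return np.where(x >= 0.0, lam * np.exp(-lam * np.clip(x, 0.0, None)), 0.0)

def loo_log_likelihood(samples):
    """Mean log-likelihood of each sample under a model fitted without it."""
    samples = np.asarray(samples, dtype=float)
    scores = []
    for i in range(len(samples)):
        lam = fit_lambda(np.delete(samples, i))  # refit on the other samples
        scores.append(np.log(lam) - lam * samples[i])
    return float(np.mean(scores))

# Hypothetical distance differences (metres) for the WP variable.
wp_distances = [0.02, 0.05, 0.01, 0.08, 0.04]
lam_wp = fit_lambda(wp_distances)
```

A higher leave-one-out score indicates that the fitted exponential generalizes better to unseen samples; how the paper scores the verification step is not stated, so this criterion is only one reasonable choice.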

3.2. Network Structure

The BN is a graphical model used to express the connection probability between different variables. It comprehensively considers prior information and sample data, and combines expert opinions and experience to analyze the problem quantitatively and qualitatively. The network structure is a directed acyclic graph consisting of variable nodes and directed edges connecting these nodes.

According to HPS, when planning the motion of the anthropomorphic arm, it is first necessary to determine the appropriate planning layer according to the constraints, and then select the appropriate MP according to the selected planning layer. Therefore, according to the law of MP construction and the motion characteristics of each planning layer, we established the BN structure under three different planning layers as shown in Figure 5.

3.3. Decision Algorithm

The mutual information index can be expressed as the total amount of information dependence during the movements. The dependency relationship between motion variables at a certain moment is needed. Therefore, the transient mutual information (TMI) index is proposed in this paper:

where $P(x, y)$ is the joint probability distribution function, and $P(x)$ and $P(y)$ are the marginal probability distribution functions. The probability function is the objective prior probability, and the data are collected from the experiments of human arm movement.
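To make the roles of the joint and marginal distributions concrete, the sketch below evaluates a pointwise mutual information term, log P(x, y) / (P(x) P(y)), for a single pair of outcomes. That the TMI takes exactly this form is an assumption made here for illustration; the function name and the probability tables are hypothetical.

```python
import numpy as np

def pointwise_mi(joint, x, y):
    """Pointwise mutual information of outcome (x, y) from a joint table.

    joint[x, y] holds P(x, y); the marginals are the row and column sums.
    """
    joint = np.asarray(joint, dtype=float)
    p_xy = joint[x, y]
    p_x = joint[x, :].sum()
    p_y = joint[:, y].sum()
    return float(np.log(p_xy / (p_x * p_y)))

# Independent variables: the joint table is the product of the marginals.
joint_independent = np.outer([0.5, 0.5], [0.25, 0.75])
# Dependent variables: matching outcomes are more likely than chance.
joint_dependent = np.array([[0.4, 0.1],
                            [0.1, 0.4]])
```

The value is zero when the two outcomes are independent, positive when they co-occur more often than chance, and negative when they co-occur less often.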

Similarly, the Transient Conditional Mutual Information (TCMI) index can be expressed as follows:

However, mutual information reflects the dependence between only two variables, whereas MPs are generally composed of more than two motion variables. Therefore, the Accumulative Mutual Information (AMI) index is proposed as the selection criterion of the MPs. According to the joint nodes represented by the motion elements, the AMI is divided into serial and parallel types. The serial type can be defined as follows:

where $v_i$ represents the motion variables of different nodes. The parallel type can be defined as follows:

where $v_{2i+1}$ and $v_{2i}$ represent different attributes under the same node.

According to Equations (7) and (8), the AMI value of the MPs under each network structure can be determined. For example, the AMI value of the MP SREPWP in first-level planning is the following:

In different planning layers, the corresponding $g_i$ ($i = 1, 2, 3, 4$) of each MP is used to calculate its AMI value. Therefore, an objective function is defined as follows:

where $m$ represents the planning level. After determining the planning level $m$, the corresponding $g_i$ can be calculated. The objective function selects the largest element $g_i$. In this way, the decision-making problem is transformed into maximizing the objective function, and the selected element $g_i$ is the result of this decision.

Figure 6 shows the flow chart of the motion decision algorithm. After determining the path points at the beginning and end states, the robot makes a decision on each path point. First, the appropriate planning layer is selected through HPS. Second, the AMI value of each MP is calculated according to the selected planning layer. Then, the maximal $g$ in the objective function is selected. The corresponding MP is the decision result. The robot will then use this MP as the motion mode. Finally, the robot calculates the IK solutions through the IK algorithm to obtain the joint angles and performs the operation.
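The selection step of this flow can be sketched as a simple maximization over the AMI values of the candidate MPs in the chosen planning layer. The table below is hypothetical: apart from SREPWP, the MP names are placeholders, and none of the numbers come from the paper.

```python
def choose_mp(ami_by_layer, layer):
    """Return the MP with the largest AMI value in the given planning layer."""
    candidates = ami_by_layer[layer]
    return max(candidates, key=candidates.get)

# Hypothetical AMI values g_1..g_4 per planning layer (illustrative only).
ami_by_layer = {
    1: {"SREPWP": 0.82, "MP_B": 0.47, "MP_C": 0.31, "MP_D": 0.12},
    2: {"MP_E": 0.20, "MP_F": 0.65, "MP_G": 0.05, "MP_H": 0.40},
}

selected = choose_mp(ami_by_layer, layer=1)
```

Here the planning layer is passed in directly; in the described algorithm it would first be selected through HPS, and the chosen MP would then be handed to the IK solver.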

4. Inverse Kinematic Solution

The variability of arm models has a great impact on calculation efficiency and increases the amount of calculation required to solve for the joint angles. Moreover, the traditional IK methods have inevitable defects. Because ANNs can closely approximate nonlinear functions, they are widely used in solving the IK problems of manipulators. However, for the anthropomorphic arm, a single neural network model cannot accurately reflect the varying characteristics of the human arm model, and parallel neural networks further complicate the network structure and increase the amount of calculation. Therefore, we used the Coupling Neural Network (CPNN) to solve the IK problem.

4.1. Coupling Neural Network

Figure 7 shows the network structure of the CPNN. The network includes one input layer, two hidden layers, and one output layer. The input signal in the input layer contains the target information, including the target position and orientation. The two hidden layers are the recognition layer and the coupling layer, each with its own function. In the recognition layer, the neurons $N_i$ represent the five motion variables and the three planning layers. The values of $N_i$ are listed in Table 3. The function of the recognition layer is to identify the result of the decision-making algorithm. According to different decision results, the recognition layer changes the structure of the network. The main function of the coupling layer is to calculate the anthropomorphic arm joint angles under different network structures. These two hidden layers together realize the diversity of the CPNN. The neurons in the output layer represent mechanical joints, which are grouped into shoulder joints, elbow joints, and wrist joints according to the physiological joints. Each joint set is composed of several mechanical joints. In the coupling layer, $S_h$, $E_k$, and $W_m$ represent the neurons. According to the different output items, the mapping area is also divided into three sub-areas ($[S_1, S_h]$, $[E_{h+1}, E_k]$ and $[W_{k+1}, W_m]$). Different $N_i$ activate different neurons in the three sub-areas. When $N_i$ is determined by the decision-making algorithm, the corresponding hidden layer neurons in the mapping area are activated and participate in the mapping calculation. The diversity of the CPNN offers several advantages for solving the IK problem of a diversified anthropomorphic arm.

4.2. Coupling Neuron

Figure 7 shows that in the coupling layer, some hidden layer neurons activated by different $N_i$ overlap; for example, $S_4$ is activated by $N_1$ and $N_2$ at the same time. In other words, their corresponding weights are shared, so the outputs obtained by weighted accumulation are also relatively close. Neurons of this type are called coupling neurons. The characteristic of a coupling neuron is that it can be activated by multiple $N_i$ simultaneously, which can improve the convergence speed and generalization ability of the network. The coupling coefficient $C$ describes how many $N_i$ share the same coupling neuron: a coupling neuron with coefficient $C$ is activated by $C+1$ different $N_i$ at the same time. For example, when the coupling coefficient is 1, the coupling neuron is activated by two $N_i$. In this section, the coupling coefficient is 1 by default.
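The idea of overlapping activations can be illustrated with a Boolean activation table. The sketch below assumes a toy layer of six coupling-layer neurons and two recognition neurons, with $S_4$ shared between $N_1$ and $N_2$ as in the example above; the table itself and the helper names are hypothetical.

```python
import numpy as np

# Rows: recognition neurons N_1, N_2. Columns: coupling-layer neurons S_1..S_6.
# True means the column neuron is activated by the row neuron (toy layout).
activation = np.array([
    [1, 1, 1, 1, 0, 0],  # N_1 activates S_1..S_4
    [0, 0, 0, 1, 1, 1],  # N_2 activates S_4..S_6
], dtype=bool)

def coupling_coefficients(activation):
    """Coefficient C per neuron: (number of N_i activating it) - 1, floored at 0."""
    counts = activation.sum(axis=0)
    return np.maximum(counts - 1, 0)

def masked_hidden_output(hidden, activation, i):
    """Keep only the hidden outputs of the neurons activated by N_i."""
    return np.where(activation[i], hidden, 0.0)

coeffs = coupling_coefficients(activation)
```

In this toy layout only $S_4$ has a nonzero coefficient, so it is the single coupling neuron, and `masked_hidden_output` shows how a decision result could switch the effective network structure.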

The number of coupling neurons is closely related to the number of hidden neurons in the coupling layer. The number of hidden layer neurons in the coupling layer can be expressed as follows:

where $M$ represents the number of hidden layer neurons in the coupling layer, and $I$ is the number of MPs that the CPNN can reflect. Each of these models can be represented by a single BP neural network according to its input and output combination. $M_i$ is the number of neurons in the hidden layer of a single BP neural network that satisfies the input and output relationship, which can be obtained by the following:

where $n$ is the number of neurons in the input layer of the BP neural network, and $o$ is the number of neurons in the output layer of the BP neural network.

Therefore, the number of coupling neurons M can be obtained by the following:

According to the coupling neuron equation, the number of coupling neurons can be set in advance based on the number of models input by the network to determine the number of hidden layer neurons in the network. At the same time, the hidden layer structure of the network can be set in advance to calculate the number of coupling neurons. The former can be used to adjust the structure of the network, and the latter can be used to control the overall calculation of the network.

4.3. Network Training

After the network structure is determined, the CPNN is trained. There are 2400 sets of generated data (○-ERWP, △-ERWPR, ○-SRWP, ○-SRWPR, □-ERWPR and □-SRWPR have 100 sets each, and each of the remaining primitives has 300 sets), of which 70% is used for training, 15% for validation and 15% for testing. The data are collected by a motion capture system and used as the network input.
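For reference, a 70/15/15 partition of 2400 samples gives 1680 training, 360 validation and 360 test sets. The sketch below assumes a plain random shuffle; whether the original split was random or stratified by primitive is not stated, and the function name and seed are hypothetical.

```python
import numpy as np

def split_indices(n_samples, train=0.70, val=0.15, seed=0):
    """Shuffle sample indices and cut them into train/validation/test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(train * n_samples))
    n_val = int(round(val * n_samples))
    return (order[:n_train],
            order[n_train:n_train + n_val],
            order[n_train + n_val:])

train_idx, val_idx, test_idx = split_indices(2400)
```

Because six primitives contribute only 100 sets each, a stratified split per primitive may be preferable in practice so that every primitive appears in all three parts.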

The network input $X(t)$ is the following:

where $x$, $y$ and $z$ represent the desired position of the target, and $\alpha$, $\beta$ and $\gamma$ are the Euler angles and represent the desired orientation of the target. Through the motion capture system, these values can be collected as the input values. $X_0$ is the reference output item used to activate the neurons in the recognition layer, and its value is determined by the decision-making algorithm. Table 3 shows the different combinations of $X_0$.

In this paper, the sigmoid function is used as the transfer function between the layers, and the output of the recognition layer can be expressed as follows:

Among them, the input of the recognition layer is the following:

where $\omega_{ij}(t)$ is the weight coefficient between the $i$-th neuron in the input layer and the $j$-th neuron in the recognition layer, and $s_j$ is the slope of the sigmoid function of the $j$-th neuron in the recognition layer.

The output of the coupling layer can be expressed as the following:

where the input of the coupling layer is the following:

where $\omega_{jk}(t)$ is the weight coefficient between the $j$-th neuron in the recognition layer and the $k$-th neuron in the coupling layer, and $s_k$ is the slope of the sigmoid function of the $k$-th neuron in the coupling layer.

The final output of the CPNN, $\theta_l(t)$, is the following:

It should be noted that due to the different input and output combinations caused by the multi-model problem, not all joint angles are obtained from the network output in the same model. Therefore, these remaining joint angles will be obtained from the anthropomorphic arm’s previous motion state.
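The layer-by-layer computation described in this subsection can be sketched as a forward pass with slope-parameterized sigmoids. Several choices below are assumptions made only for illustration: the sigmoid form 1 / (1 + exp(−s·net)), a linear output layer, and all layer sizes (7 inputs for $X_0$ plus six pose values, 8 recognition neurons, 12 coupling neurons, 5 output joints). The weight names beyond $\omega_{ij}$ and $\omega_{jk}$ are hypothetical.

```python
import numpy as np

def sigmoid(net, slope):
    """Sigmoid transfer function with a per-neuron slope."""
    return 1.0 / (1.0 + np.exp(-slope * net))

def forward(x, w_ij, w_jk, w_kl, s_j, s_k):
    """Input -> recognition layer -> coupling layer -> joint angles."""
    rec = sigmoid(w_ij.T @ x, s_j)    # recognition layer output
    cpl = sigmoid(w_jk.T @ rec, s_k)  # coupling layer output
    return w_kl.T @ cpl               # assumed linear output layer

rng = np.random.default_rng(0)
n_in, n_rec, n_cpl, n_out = 7, 8, 12, 5   # illustrative sizes only
w_ij = rng.normal(size=(n_in, n_rec))
w_jk = rng.normal(size=(n_rec, n_cpl))
w_kl = rng.normal(size=(n_cpl, n_out))
s_j = np.ones(n_rec)
s_k = np.ones(n_cpl)

x = np.array([1.0, 0.3, -0.1, 0.2, 0.0, 0.5, -0.4])  # [X_0, x, y, z, alpha, beta, gamma]
theta = forward(x, w_ij, w_jk, w_kl, s_j, s_k)
```

Joint angles that a given model does not produce would, as noted above, be carried over from the arm's previous motion state rather than read from `theta`.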

To train the CPNN, we define an error function as the following:

where $e_i(t)$ represents the difference between the expected orientation of the network and the actual output orientation.

When the actual output of the network is not equal to the expected output, there will be an output error $E(t)$. The error function represents the error sum of the position and orientation in the anthropomorphic arm working space and can be calculated from the joint angles obtained from the network output. The network error is a function of the weight coefficients of each layer; therefore, the weight coefficients are adjusted through error back propagation to reduce the error. The principle of adjusting the weight coefficients is to continuously reduce the error; consequently, the adjustment of each weight coefficient is proportional to the gradient of the error. The gradient of the error function can be expressed as the following:

For different MPs, the specific expression of each item in Equation (22) is also different. According to Table 4, the forward kinematic relations of Pepper’s right arm can be obtained. Finally, the weight coefficients of each layer can be updated; we do not discuss this in detail in this paper.
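As a generic illustration of an update that is proportional to the error gradient, the sketch below applies plain gradient descent to a toy linear model. It assumes a squared-error function E = ½·Σe² and a fixed learning rate `eta`; neither is confirmed as the paper's choice, and the toy model stands in for the CPNN and the forward kinematics.

```python
import numpy as np

def error(e):
    """Assumed squared-error function: E = 0.5 * sum(e^2)."""
    return 0.5 * float(np.sum(np.square(e)))

def gradient_step(w, grad, eta=0.1):
    """Move the weights against the gradient of the error."""
    return w - eta * grad

# Toy problem: find w such that w @ x matches the target.
x = np.array([1.0, 2.0])
target = 3.0
w = np.zeros(2)
history = []
for _ in range(50):
    e = target - w @ x
    history.append(error(np.array([e])))
    grad = -e * x              # dE/dw for E = 0.5 * e^2
    w = gradient_step(w, grad)
```

With these values each step halves the residual, so the recorded error decreases monotonically; in the actual network the gradient would be propagated back through the coupling and recognition layers.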

6. Conclusions and Future Work

In this paper, an Intelligent Motion Planner is proposed to help anthropomorphic arms generate human-like arm movements. Three aspects of human-like motion, namely the motion model, decision-making and the IK problem, are further studied. To improve the accuracy of human-like movements, the arm movements are decoupled into two aspects: arm structure and motion process. The arm model is decoupled into different simple sub-models based on MPs. Due to the influence of environmental constraints, the same MP can show different motion patterns, which can decrease the accuracy of the human-like motion. Thus, the decoupling of the motion process needs to be considered. By combining these two aspects, a motion model based on HPS-MP is proposed. This motion model has a definite physical meaning and directly reflects the inherent laws of human arm movements. With this model, the anthropomorphic arm can generate human-like movements accurately and quickly.

A new decision-making model based on the BN is constructed and can be applied to different planning levels. In this decision-making model, there are three network structures, one for each of the three planning levels, and each network is an independent sub-model. The probability of each HPS-MP can be obtained by calculating the AMI, so the decision problem turns into an optimization problem. With the decision-making model, the anthropomorphic arm can choose an appropriate way to move automatically.

Finally, the IK problems can be solved through the CPNN. It is difficult for a single neural network to solve the IK problems of the diversified arm motion model. Although parallel neural networks can approximate these different models, complex network structures increase the difficulty of calculation. Meanwhile, in parallel neural networks, the identification of the diversified models is also a critical question. As a mutable network model, the CPNN can implement the mapping relation between the robot’s joints and each of the diversified arm motion models. The effectiveness of the IHMP is verified on the humanoid robot Pepper, which performs two groups of experiments. The experimental results show that the human-like arm motion of the anthropomorphic arm can be solved effectively, and the performance is satisfactory.

In future work, we hope to propose a novel online method based on the IHMP. Meanwhile, by using our method, the robot will perform more complex tasks and interact with humans. Additionally, future work will also focus on applications in HRI, such as robot driving and emotional expression.