A User Purchase Behavior Prediction Method Based on XGBoost

Abstract

1. Introduction

2. Related Works

2.1. Online Purchase Prediction

2.2. Analytical Methods for Prediction

3. Overall Framework and Data Preparation

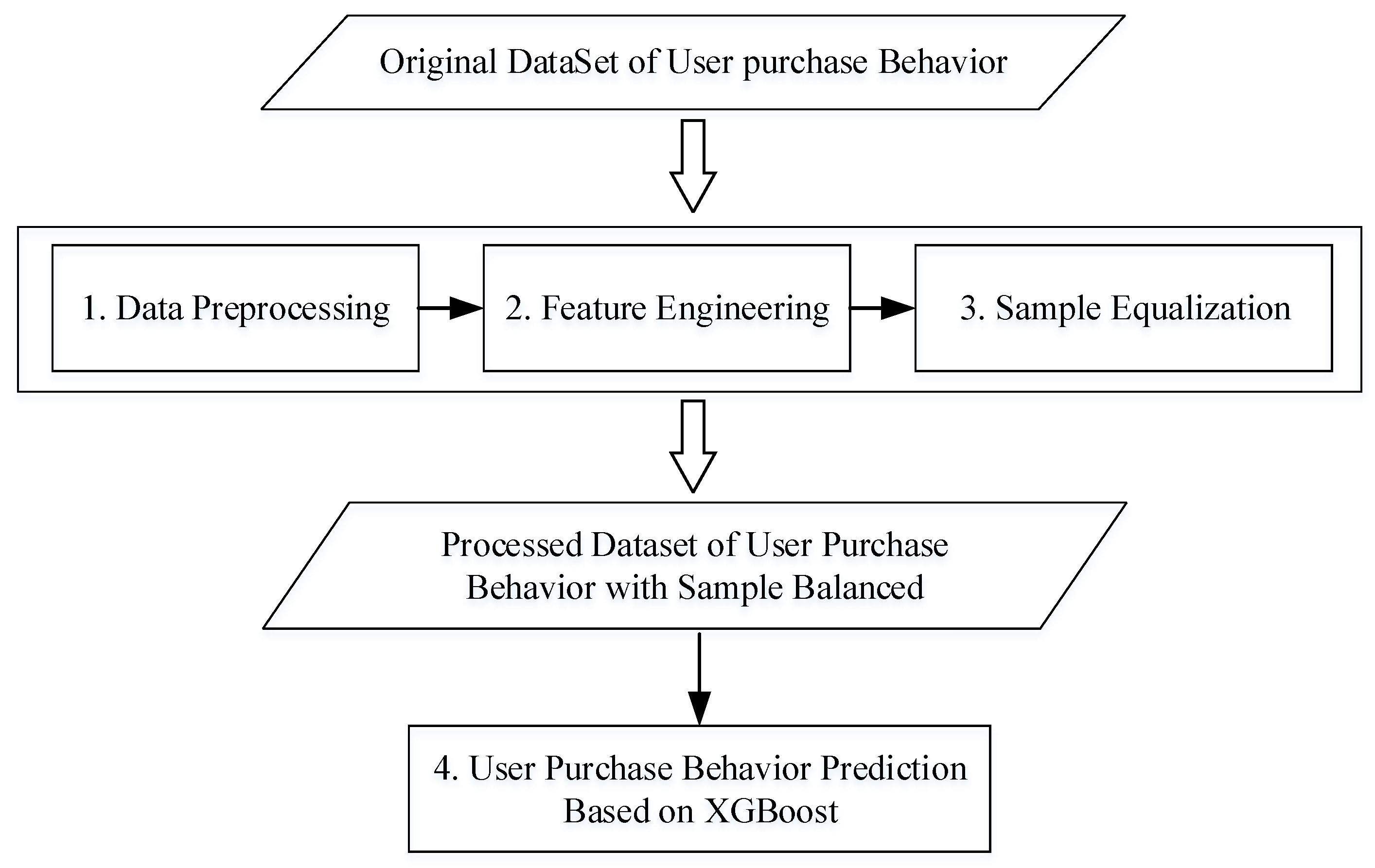

3.1. Overall Framework

3.2. Dataset Description

3.3. Dataset Processing and Feature Engineering

4. Multi-Feature Fusion User Value Based on the XGBoost Model

4.1. Multi-Feature Fusion User Value Model (LDTD)

4.2. XGBoost Feature Importance Selection Algorithm

| Algorithm 1: XGBoost feature importance algorithm |
|---|
| Input: : the original historical data of set; XGBoost parameters: max_depth, n_estimators, learning_rate, nthread, subsample, colsample_bytree, min_child_weight, seed |
| Output: filtered feature history data; |
| 1. import XGBClassifier; |
| 2. ; |
| 3. XGB = XGBClassifier(); |
| 4. params = {'max_depth', 'n_estimators', 'learning_rate', 'nthread', 'subsample', 'colsample_bytree', 'min_child_weight', 'seed'}; |
| 5. = XGB.train(params, Dataset, num_boost_round=1); |
| 6. for importance_type in ('weight', 'gain', 'cover', 'total_gain', 'total_cover'): |
| 7. print(importance_type, .get_score(importance_type=importance_type)); |
| 8. end |
| 9. .fit(X_train, y_train); |
| 10. plot_importance(); |
| 11. select in ; |
| 12. return ; |
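Algorithm 1 mixes two interfaces of the xgboost Python package. In the library, `train` is the module-level function `xgboost.train(params, dtrain, num_boost_round=...)` (with `dtrain` a `DMatrix`), which returns a `Booster`; it is not a method of `XGBClassifier`. The scikit-learn-style `XGBClassifier.fit(X, y)` is the alternative, and `get_booster()` exposes the fitted `Booster`. `Booster.get_score(importance_type=...)` returns a dict from feature name to score for the types `'weight'`, `'gain'`, `'cover'`, `'total_gain'` and `'total_cover'`, and features never used in a split are simply absent from that dict. Step 11 does not specify the selection rule, so the sketch below assumes one plausible rule: normalize the scores, keep features whose share reaches a threshold, and optionally cap the count. `select_features`, `min_share`, `top_k` and the example scores are hypothetical; the function works on a plain dict so it runs without xgboost installed.

```python
from typing import Dict, List, Optional


def select_features(
    scores: Dict[str, float],
    all_features: List[str],
    min_share: float = 0.01,
    top_k: Optional[int] = None,
) -> List[str]:
    """Select features from an importance dict (e.g. Booster.get_score() output).

    Hypothetical rule, not taken from the paper: normalize scores to shares of
    the total, keep features with share >= min_share, optionally keep top_k.
    """
    # Features never used in a split are missing from get_score(); treat as 0.
    full = {name: float(scores.get(name, 0.0)) for name in all_features}
    total = sum(full.values())
    if total <= 0:
        return []
    shares = {name: value / total for name, value in full.items()}
    # Rank by share (descending), breaking ties by the original column order.
    order = {name: i for i, name in enumerate(all_features)}
    ranked = sorted(shares, key=lambda n: (-shares[n], order[n]))
    kept = [n for n in ranked if shares[n] >= min_share]
    return kept[:top_k] if top_k is not None else kept


# Made-up 'gain' scores; app_num never appears in a split, so it is absent.
gain = {"login_time": 12.0, "distance_day": 6.0, "coupon": 2.0}
cols = ["login_time", "distance_day", "coupon", "app_num"]
print(select_features(gain, cols, min_share=0.15))  # ['login_time', 'distance_day']
```

A share-based threshold keeps the rule independent of the importance type, since 'gain' and 'weight' scores live on very different scales.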

4.3. Prediction Principle of the XGBoost Algorithm
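For reference, the prediction principle rests on the regularized objective of XGBoost as introduced by Chen and Guestrin: each tree $f$ is penalized by $\Omega(f) = \gamma T + \tfrac{1}{2}\lambda \lVert w \rVert^2$, where $T$ is the number of leaves and $w$ the leaf weights ($\gamma$ and $\lambda$ correspond to the `gamma` and `reg_lambda` parameters listed in Algorithm 2). With per-sample first- and second-order gradients $g_i, h_i$ of the loss, and $G = \sum g_i$, $H = \sum h_i$ over the samples in a leaf, the optimal leaf weight is $w^* = -G/(H+\lambda)$, and splitting a node into children $L$ and $R$ has gain $\tfrac{1}{2}\left[\frac{G_L^2}{H_L+\lambda} + \frac{G_R^2}{H_R+\lambda} - \frac{(G_L+G_R)^2}{H_L+H_R+\lambda}\right] - \gamma$. The sketch below evaluates these formulas for the logistic loss ($g = p - y$, $h = p(1-p)$), which suits binary purchase prediction. It illustrates the scoring rule only and is not the xgboost library's implementation; the four-user data and the candidate split are invented.

```python
import math
from typing import List, Sequence, Tuple


def grad_hess_logistic(y: Sequence[int], margin: Sequence[float]) -> Tuple[List[float], List[float]]:
    """First and second derivatives of the logistic loss w.r.t. the raw margin."""
    g, h = [], []
    for yi, mi in zip(y, margin):
        p = 1.0 / (1.0 + math.exp(-mi))  # predicted purchase probability
        g.append(p - yi)
        h.append(p * (1.0 - p))
    return g, h


def leaf_weight(G: float, H: float, lam: float) -> float:
    """Optimal leaf weight w* = -G / (H + lambda)."""
    return -G / (H + lam)


def split_gain(GL: float, HL: float, GR: float, HR: float, lam: float, gamma: float) -> float:
    """Improvement in the regularized objective from splitting one node into two."""
    def score(G: float, H: float) -> float:
        return G * G / (H + lam)
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma


# Invented example: 4 users, all starting from margin 0 (p = 0.5).
y = [1, 1, 0, 0]
g, h = grad_hess_logistic(y, [0.0] * 4)
# Candidate split: users 0 and 1 go left, users 2 and 3 go right.
GL, HL = sum(g[:2]), sum(h[:2])
GR, HR = sum(g[2:]), sum(h[2:])
print(split_gain(GL, HL, GR, HR, lam=1.0, gamma=0.0))
print(leaf_weight(GL, HL, lam=1.0), leaf_weight(GR, HR, lam=1.0))
```

A positive gain means the split improves the regularized objective; raising `gamma` subtracts a fixed cost per split, so more candidate splits are rejected.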

| Algorithm 2: XGBoost prediction model algorithm |
|---|
| Input: : the training dataset; : the test dataset; XGBoost prediction parameters: learning_rate, n_estimators, max_depth, subsample, min_child_weight, colsample_bytree, gamma, reg_alpha, reg_lambda; |
| Output: : result; |
| 1. import XGBClassifier; |
| 2. train = ; |
| 3. test = ; |
| 4. train = ClusterCentroids(); |
| 5. load the tuned parameters into params; |
| 6. XGBfeature = XGB.train(params, , num_boost_round=1); |
| 7. XGBfeature.fit(train, test); |
| 8. test_predict = XGBfeature.predict(test); |
| 9. return test_predict; |
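Step 4 of Algorithm 2 rebalances the training set with `ClusterCentroids`. In the imbalanced-learn library this sampler is applied as `ClusterCentroids().fit_resample(X, y)`, and it undersamples the majority class by replacing clusters of majority samples with the centroids of a K-means clustering. The dependency-free sketch below reproduces that idea for binary labels, shrinking the majority class to as many centroids as there are minority samples with a small hand-written K-means. `cluster_centroids_undersample`, `kmeans` and the toy data are illustrative assumptions, not the library's code or the authors' pipeline.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def _sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Point], k: int, iters: int = 50, seed: int = 0) -> List[Point]:
    """Plain Lloyd's K-means; returns k centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k of the samples
    for _ in range(iters):
        clusters: List[List[Point]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: _sq_dist(p, centroids[j]))
            clusters[nearest].append(p)
        new = []
        for j, members in enumerate(clusters):
            if members:  # mean of the assigned points
                new.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:  # keep the old centroid if a cluster becomes empty
                new.append(centroids[j])
        if new == centroids:
            break
        centroids = new
    return centroids


def cluster_centroids_undersample(
    X: List[Point], y: List[int], seed: int = 0
) -> Tuple[List[Point], List[int]]:
    """Replace the majority class by K-means centroids (binary labels only)."""
    labels = sorted(set(y))
    if len(labels) != 2:
        raise ValueError("this sketch handles binary labels only")
    a, b = labels
    counts = {a: y.count(a), b: y.count(b)}
    if counts[a] == counts[b]:
        return list(X), list(y)  # already balanced
    minority, majority = (a, b) if counts[a] < counts[b] else (b, a)
    minority_X = [x for x, t in zip(X, y) if t == minority]
    majority_X = [x for x, t in zip(X, y) if t == majority]
    centroids = kmeans(majority_X, k=len(minority_X), seed=seed)
    X_res = minority_X + centroids
    y_res = [minority] * len(minority_X) + [majority] * len(centroids)
    return X_res, y_res


# Toy data: 8 non-buyers (label 0) in two blobs, 2 buyers (label 1).
X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
     (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0),
     (5.0, 5.0), (6.0, 6.0)]
y = [0] * 8 + [1] * 2
X_res, y_res = cluster_centroids_undersample(X, y)
print(y_res)  # [1, 1, 0, 0]
```

Because the retained majority rows are synthetic centroids rather than real users, this kind of undersampling should only be applied to the training split, never to the test set.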

5. Model Experiment and Comparison

5.1. Experimental Environment and Performance Metrics

5.2. Experimental Results and Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model Category | Advantages | Disadvantages |
|---|---|---|
| Decision Tree (DT) | 1. Easy to understand and interpret. 2. Can handle both discrete and continuous data. | 1. Prone to overfitting, especially when the tree is deep. 2. Greedy split selection does not search for a globally optimal tree. |
| Random Forest (RF) | 1. Reduces overfitting by training multiple trees on random subsets of the data and averaging their predictions. 2. Provides feature-importance estimates that help in understanding the data. | 1. Requires hyperparameter tuning for good performance. 2. The specific interaction effects between features are difficult to interpret. |
| Support Vector Machine (SVM) | 1. Can handle large feature sets and complex data patterns. 2. Effective with small sample sizes and noisy datasets. | 1. Becomes less efficient as the number of features increases. 2. Training and prediction on large datasets can demand substantial time and computation, making it less practical for large-scale problems. |
| Recurrent Neural Network (RNN) | 1. Has a memory component, which makes it effective for modeling sequential data. 2. Can handle variable-length sequences, making it suitable for time-series data. | 1. Computationally expensive to train because of its recurrent structure. 2. Prone to vanishing or exploding gradients during backpropagation, which makes training difficult. |
| Extreme Gradient Boosting (XGBoost) | 1. Highly accurate; often outperforms other algorithms on structured (tabular) datasets. 2. Has built-in regularization to prevent overfitting, and its feature-importance scores help identify unimportant features. | 1. Training on small datasets may lead to overfitting because the boosting process is less effective. 2. Can be sensitive to noisy or irrelevant inputs, leading to suboptimal performance. |

| Table | Columns |
|---|---|
| user_info | User_id, first_order_time, first_order_price, age_month, user_info, city_num, platform_num, model_num, app_num |
| login_day | User_id, login_diff_time, distance_day, login_time, launch_time, chinese_subscribe_num, math_subscribe_num, add_friend, add_group, camp_num, learn_num, add_friend, add_group, study_num, coupon, course_order_num |

| LDT Score | LDT Value (days) | Meaning |
|---|---|---|
| 1 | [30, 135) | Last login at least 30 and fewer than 135 days ago (one to four and a half months). |
| 2 | [10, 30) | Last login at least 10 and fewer than 30 days ago. |
| 3 | [3, 10) | Last login at least 3 and fewer than 10 days ago. |
| 4 | [1, 3) | Last login at least 1 and fewer than 3 days ago. |
| 5 | [0, 1) | Logged into the website within the last day. |

| LT Score | LT Value (days) | Meaning |
|---|---|---|
| 1 | [0, 1) | Login duration of less than 1 day. |
| 2 | [1, 10) | Login duration of at least 1 day and less than 10 days. |
| 3 | [10, 30) | Login duration of at least 10 days and less than 30 days. |
| 4 | [30, 365) | Login duration of at least 30 days and less than 365 days. |
| 5 | [365, ∞) | Login duration of at least 1 year. |

| LD Score | LD Value (days) | Meaning |
|---|---|---|
| 1 | [0, 1) | Fewer than 1 login day. |
| 2 | [1, 3) | At least 1 and fewer than 3 login days. |
| 3 | [3, 5) | At least 3 and fewer than 5 login days. |
| 4 | [5, 10) | At least 5 and fewer than 10 login days. |
| 5 | [10, 120) | At least 10 and fewer than 120 login days. |
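The three score tables above are piecewise-constant lookups on half-open intervals, so each can be expressed as a binary search over the interval lower bounds. A minimal sketch using Python's `bisect` module follows. The function and constant names are hypothetical, and clamping values outside the tabulated ranges (for example, an LDT above 135 days) to the nearest score is an assumption, since the tables do not say how such values are handled.

```python
from bisect import bisect_right
from typing import Sequence

# Lower bounds of the half-open intervals in each table (values in days).
LDT_BOUNDS = [0, 1, 3, 10, 30]   # [0,1) [1,3) [3,10) [10,30) [30,135)
LT_BOUNDS = [0, 1, 10, 30, 365]  # [0,1) [1,10) [10,30) [30,365) [365,inf)
LD_BOUNDS = [0, 1, 3, 5, 10]     # [0,1) [1,3) [3,5) [5,10) [10,120)


def _bin_index(value: float, bounds: Sequence[float]) -> int:
    """0-based index of the interval containing value, clamped to the table."""
    return min(max(bisect_right(bounds, value) - 1, 0), len(bounds) - 1)


def ldt_score(days_since_last_login: float) -> int:
    # A more recent login earns a higher score, so the index is reversed.
    return 5 - _bin_index(days_since_last_login, LDT_BOUNDS)


def lt_score(login_duration_days: float) -> int:
    return 1 + _bin_index(login_duration_days, LT_BOUNDS)


def ld_score(login_days: float) -> int:
    return 1 + _bin_index(login_days, LD_BOUNDS)


print([ldt_score(d) for d in (0.5, 2, 45)])  # [5, 4, 1]
```

Using `bisect_right` makes each lower bound inclusive and each upper bound exclusive, which matches the `[a, b)` notation in the tables.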

| LDT | LT | LD | Traditional Classification | Improved Classification | Description |
|---|---|---|---|---|---|
| 1 | 1 | 1 | Valuable users | Valuable users (VU) | Recent login, long duration, and many login days. |
| 1 | 1 | 0 | Important potential users | Consumption potential users (CPU) | Recent login, long duration, and few login days. |
| 1 | 0 | 1 | Important training users | Deep ploughing users (DPU) | Recent login, short duration, and many login days. |
| 1 | 0 | 0 | New users | Potential users (PU) | Recent login, short duration, and few login days. |
| 0 | 1 | 1 | Important lost users | Loss of important value users (LIVU) | Not logged in recently, long duration, and many login days. |
| 0 | 1 | 0 | General users | General value users (GVU) | Not logged in recently, long duration, and few login days. |
| 0 | 0 | 1 | Loss of important value users | Loss of important value users | Not logged in recently, short duration, and many login days. |
| 0 | 0 | 0 | Lost users | Lost users (LU) | Not logged in recently, short duration, and few login days. |
| 1 | 1 | 1 | Important potential users | Important potential users (LPU) | Recent login, long duration, and few login days. |
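The classification table turns each of the three scores into a binary flag and reads the user group off the resulting (LDT, LT, LD) triple. The tables do not state how a score becomes 1 or 0, so the sketch below assumes the common RFM convention of comparing each score with its mean over all users (1 if the score is at least the mean). It encodes the eight distinct flag combinations with their "Improved Classification" labels; the duplicated (1, 1, 1) row is not encoded. `GROUPS`, `to_flags`, `classify` and the example scores are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

Scores = Tuple[int, int, int]  # (LDT, LT, LD) scores, each in 1..5

# "Improved Classification" labels keyed by the (LDT, LT, LD) flags.
GROUPS: Dict[Tuple[int, int, int], str] = {
    (1, 1, 1): "Valuable users (VU)",
    (1, 1, 0): "Consumption potential users (CPU)",
    (1, 0, 1): "Deep ploughing users (DPU)",
    (1, 0, 0): "Potential users (PU)",
    (0, 1, 1): "Loss of important value users (LIVU)",
    (0, 1, 0): "General value users (GVU)",
    (0, 0, 1): "Loss of important value users",
    (0, 0, 0): "Lost users (LU)",
}


def to_flags(scores: List[Scores]) -> List[Tuple[int, ...]]:
    """Binarize each score against its column mean (assumed rule: 1 if >= mean)."""
    means = [mean(col) for col in zip(*scores)]
    return [tuple(int(s >= m) for s, m in zip(row, means)) for row in scores]


def classify(scores: List[Scores]) -> List[str]:
    return [GROUPS[flags] for flags in to_flags(scores)]


# Hypothetical (LDT, LT, LD) scores for three users.
users = [(5, 4, 5), (1, 2, 1), (4, 1, 2)]
print(classify(users))
# ['Valuable users (VU)', 'Lost users (LU)', 'Potential users (PU)']
```

The mean threshold is data-dependent: the same score can map to a different flag when the user population changes, so a fixed cut-off (for example, score >= 3) is an equally plausible alternative.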

| Configuration | Value |
|---|---|
| CPU | Intel(R) Core(TM) i7-7700HQ |
| Memory (RAM) | 16.0 GB |
| Operating System | Windows 10 |
| Coding Language | Python 3.7.6 |
| Scikit-learn Version | 1.0.2 |
| Development Environment (IDE) | PyCharm Community Edition 2020.1.3 x64 |

| Model Category | Main Parameter | Value |
|---|---|---|
| KNN | Number of neighbors | 5 |
| | Weight function used in prediction | Uniform |
| | Nearest-neighbor search algorithm | Ball tree |
| | Leaf size | 30 |
| | Distance metric | Euclidean distance |
| SVM | Penalty coefficient (C) | 1 |
| | Use shrinking heuristic | True |
| | Multi-class strategy | One-vs-rest (OvR) |
| | Kernel function | RBF |
| Random Forest | Number of decision trees | 100 |
| | Split criterion | Gini |
| | Maximum depth of a decision tree | 13 |
| | Minimum number of samples required to split an internal node | 120 |
| BP Neural Network | Activation function | ReLU |
| | Solver | Adam |
| | Learning rate | 0.0001 |
| | Maximum number of iterations | 400 |
| | Hidden-layer neurons | 128 + 64 |
| XGBoost | Learning rate | 0.3 |
| | Base classifier (booster) | gbtree |
| | Maximum depth of a decision tree | 6 |
| | Number of decision trees | 100 |

| Model Category | F1 | Recall | Precision | ROC AUC |
|---|---|---|---|---|
| KNN | 0.8680 | 0.8681 | 0.8688 | 0.8679 |
| SVM | 0.8745 | 0.8746 | 0.8753 | 0.8745 |
| Random Forest | 0.8978 | 0.8978 | 0.8980 | 0.8978 |
| BP Neural Network | 0.8930 | 0.8930 | 0.8932 | 0.8931 |
| XGBoost | 0.9761 | 0.9761 | 0.9763 | 0.9768 |
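The comparison reports F1, recall, precision and an ROC-based score, but the averaging scheme is not stated. As a reference for how such figures are computed, the sketch below assumes support-weighted averaging over classes (under which weighted recall equals accuracy) and computes the ROC AUC with the equivalent pairwise (Mann-Whitney) formulation. `weighted_prf`, `roc_auc` and the example labels and scores are hypothetical, not the authors' evaluation code.

```python
from typing import Dict, Sequence


def weighted_prf(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Support-weighted precision, recall and F1 over the classes in y_true."""
    n = len(y_true)
    out = {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    for c in sorted(set(y_true)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        w = (tp + fn) / n  # share of samples whose true class is c
        out["precision"] += w * prec
        out["recall"] += w * rec
        out["f1"] += w * f1
    return out


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties = 1/2)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need both classes")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Invented labels, hard predictions and purchase probabilities.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.3, 0.2, 0.4, 0.7]
print(weighted_prf(y_true, y_pred))
print(roc_auc(y_true, scores))
```

The pairwise AUC is quadratic in the number of samples, which is fine for illustration; production code would sort the scores once and use ranks instead.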

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, W.; Xiong, W.; Wang, J.; Tao, L.; Li, S.; Yi, Y.; Zou, X.; Li, C. A User Purchase Behavior Prediction Method Based on XGBoost. Electronics 2023, 12, 2047. https://doi.org/10.3390/electronics12092047
