Face Aging with Feature-Guide Conditional Generative Adversarial Network

Abstract

1. Introduction

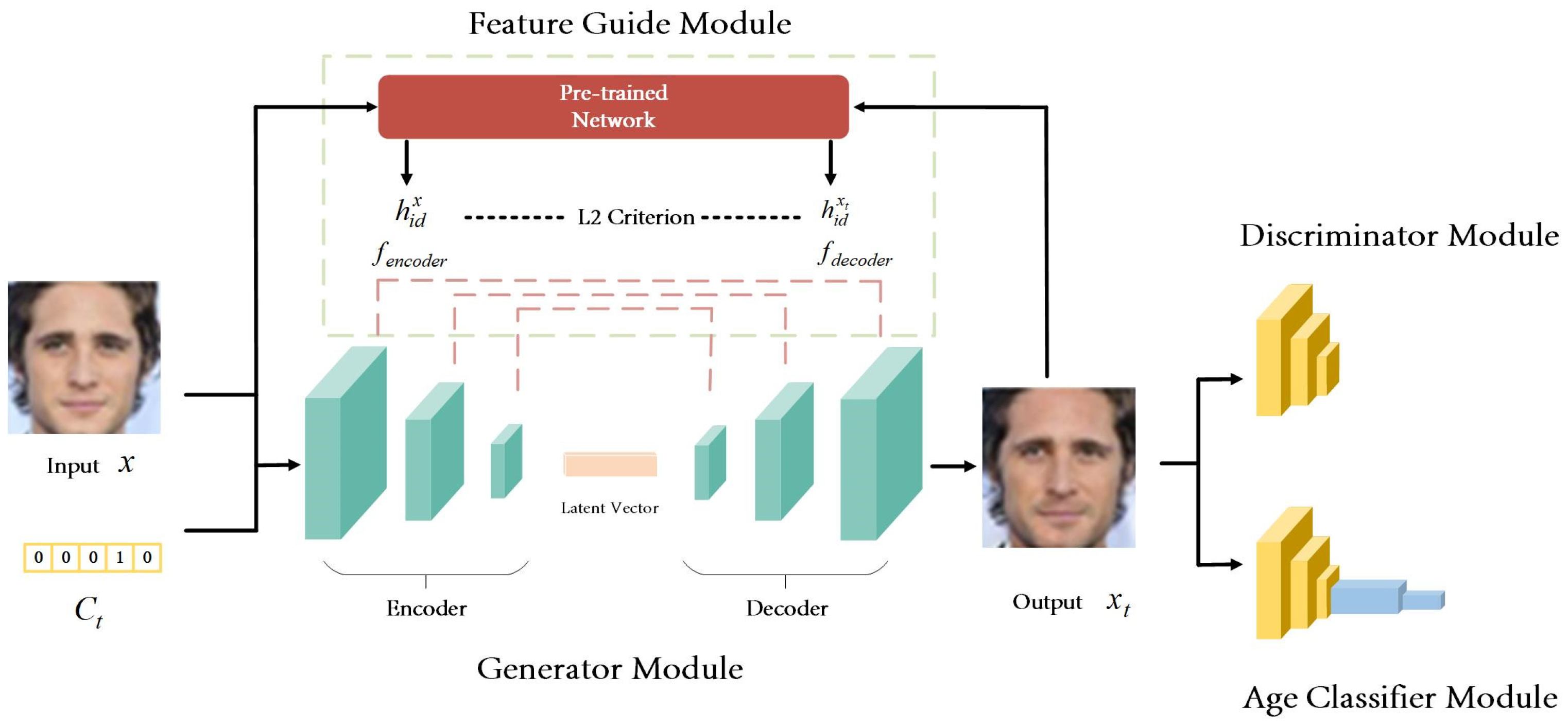

- To address both identity preservation and aging accuracy, a network structure based on a gradient feature guide, named FG-CGAN, is proposed. Two extra sub-modules are attached: a feature guide module in the generator, which targets identity preservation, and an age classifier module combined with the discriminator, which targets aging accuracy.

- In the feature guide module, a perceptual loss is introduced to minimize the distance between the identities of the input and output face images. As part of this, an L2 loss is introduced to constrain the size of the feature map generated in the generator module.

- In the age classifier module, an age-estimated loss is constructed to improve classification accuracy. It incorporates the L-Softmax loss to learn intra-class compactness and inter-class separability between features.
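The two loss ideas named in the contributions above can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the exact FG-CGAN formulation is not given in this section. The sketch assumes that the perceptual term is a mean squared (L2) difference between feature vectors produced by some fixed feature extractor, and that the L-Softmax term uses the standard margin function ψ(θ) = (−1)^k cos(mθ) − 2k on [kπ/m, (k+1)π/m]. The function names `l2_feature_distance`, `psi`, and `l_softmax_loss`, and the margin parameter `m`, are hypothetical.

```python
import math


def l2_feature_distance(feat_in, feat_out):
    """Mean squared difference between two flattened feature vectors.

    Assumption: both vectors come from the same fixed feature extractor.
    """
    if len(feat_in) != len(feat_out):
        raise ValueError("feature vectors must have the same length")
    return sum((a - b) ** 2 for a, b in zip(feat_in, feat_out)) / len(feat_in)


def psi(theta, m):
    """L-Softmax margin function: (-1)^k * cos(m*theta) - 2k,
    where k is the index of the interval [k*pi/m, (k+1)*pi/m] containing theta."""
    k = min(int(theta * m / math.pi), m - 1)
    return (-1) ** k * math.cos(m * theta) - 2 * k


def l_softmax_loss(weights, x, target, m=2):
    """Cross-entropy in which the target logit uses psi(theta) instead of cos(theta).

    weights: one weight vector per class (hypothetical final linear layer, no bias).
    x: feature vector; target: index of the true class; m: integer margin (m=1 is plain softmax).
    """
    x_norm = math.sqrt(sum(v * v for v in x))
    logits = []
    for j, w in enumerate(weights):
        w_norm = math.sqrt(sum(v * v for v in w))
        dot = sum(a * b for a, b in zip(w, x))
        if j == target and w_norm > 0 and x_norm > 0:
            # Clamp to guard against rounding slightly outside [-1, 1].
            cos_t = max(-1.0, min(1.0, dot / (w_norm * x_norm)))
            logits.append(w_norm * x_norm * psi(math.acos(cos_t), m))
        else:
            logits.append(dot)
    # Numerically stable log-sum-exp.
    peak = max(logits)
    log_z = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_z - logits[target]
```

With `m=1` the margin function reduces to cos(θ), so the loss equals ordinary softmax cross-entropy. For larger `m` the target logit can only shrink, which raises the loss unless the feature lies closer to its class direction. This is the mechanism behind the intra-class compactness and inter-class separability mentioned above.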

2. Related Work

2.1. Face Aging Methods

2.2. Age Prediction/Estimation Methods

3. Methodology

3.1. Base Network

3.2. Feature Guide Module

3.3. Age Classifier Module

3.4. Overall Objective Function

4. Experiments and Evaluation

4.1. Dataset

4.2. Experimental Details

4.3. Classification Accuracy Influence for Age Classifier Module

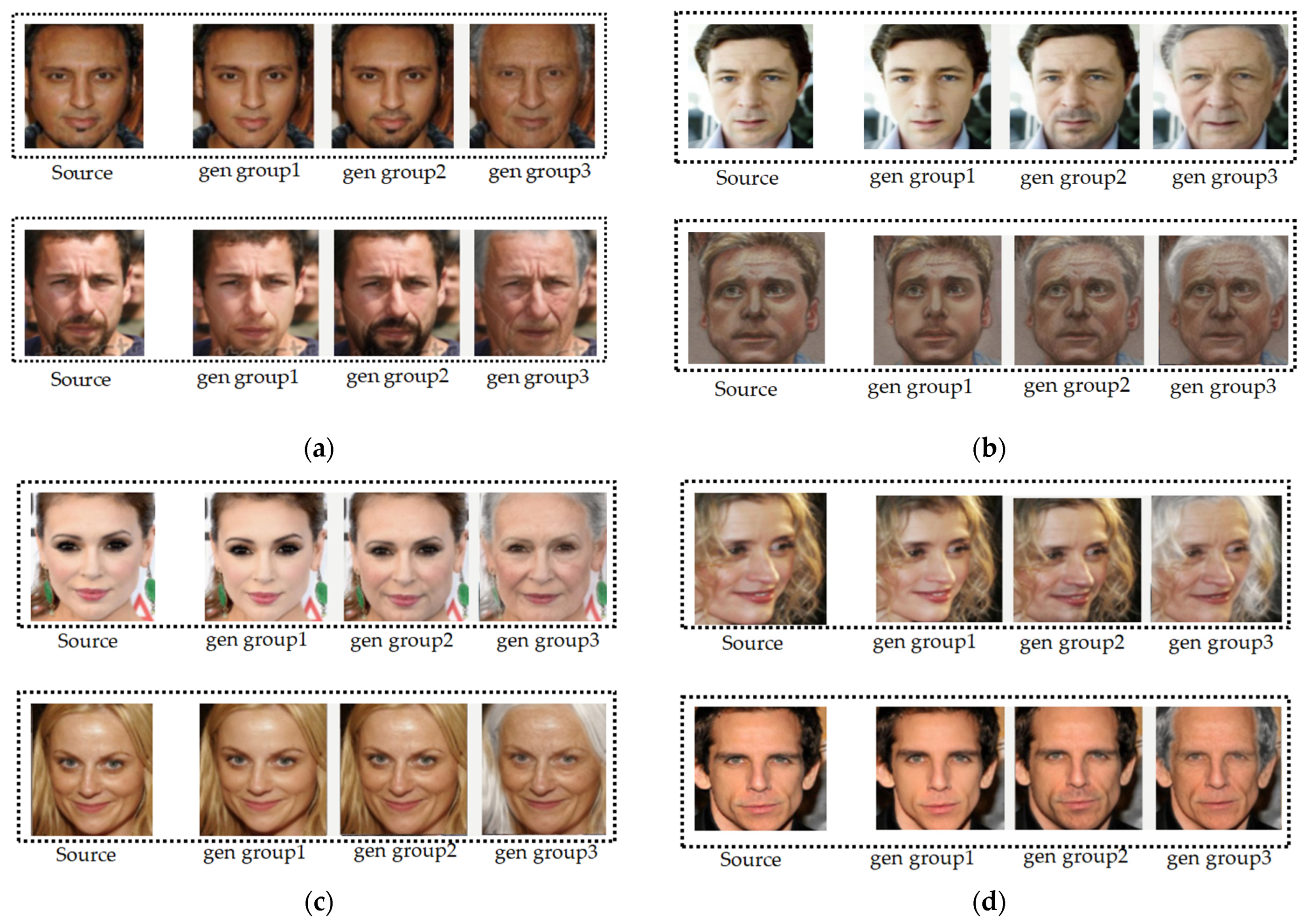

4.4. Intuitive Visual Display

4.5. Quality Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Database | Images | Subjects | Dataset Distribution |
|---|---|---|---|
| CACD-ours | 146,794 | 2000 | 10–20 (8656), 20–30 (36,662), 30–40 (38,736), 40–50 (35,768) |
| Morph | 55,134 | 13,000 | <20 (7469), 20–30 (163,225), 30–40 (15,357), 40–50 (12,050), 50+ (3993) |

| Method | | 20–30 | 30–40 | 40–50 |
|---|---|---|---|---|
| CACE | original | 69.84 | 67.21 | 64.85 |
| | 20–30 | - | 66.91 | 64.18 |
| | 30–40 | - | - | 65.02 |
| acGAN | original | 94.60 | 93.26 | 91.2 |
| | 20–30 | - | 90.02 | 90.72 |
| | 30–40 | - | - | 90.57 |
| IPCGAN | original | 95.57 | 94.65 | 90.69 |
| | 20–30 | - | 94.68 | 90.8 |
| | 30–40 | - | - | 90.36 |
| Ours | original | 95.79 | 95.42 | 90.77 |
| | 20–30 | - | 94.11 | 91.92 |
| | 30–40 | - | - | 90.67 |

| Method | 20–30 | 30–40 | 40–50 |
|---|---|---|---|
| CACE | 76.42 | 73.17 | 72.87 |
| acGAN | 94.29 | 92.21 | 90.09 |
| IPCGAN | 100 | 100 | 100 |
| Ours | 100 | 100 | 100 |

| Metric | acGANs | IPCGANs | Ours |
|---|---|---|---|
| Face verification | 85.83 | 91.6 | 95.52 |
| Age classification | 32.70 | 31.74 | 31.87 |
| Image quality | 39.67 | 71.74 | 75.44 |
| VGG-face score | 21.60–25.24 | 34.48–38.18 | 36.12–39.12 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, C.; Li, Y.; Weng, Z.; Lei, X.; Yang, G. Face Aging with Feature-Guide Conditional Generative Adversarial Network. Electronics 2023, 12, 2095. https://doi.org/10.3390/electronics12092095