A Decade of Intelligent Software Testing Research: A Bibliometric Analysis

Abstract

1. Introduction

- Using AI to automatically generate test cases based on the code and requirements of the software [3].

- Using ML to predict which test cases are most likely to find defects, and prioritizing those test cases for execution [4].

- Using AI to automatically identify patterns in test results and suggest areas of the code that may be prone to defects [5].

- Automation: Intelligent testing uses AI and ML to automate the generation and execution of test cases, reducing the need for manual effort [10];

- Coverage: Intelligent testing techniques can help to ensure that a greater proportion of the code is covered by test cases, increasing the likelihood of finding defects [11];

- Prioritization: Using ML, intelligent testing can prioritize the execution of test cases based on their likelihood of finding defects, allowing testers to focus on the most important tests first [12];

- Defect prediction: AI can be used to analyze test results and identify patterns that may indicate the presence of defects in the code [13].

- Q1. What is the average number of citations per research document?

- Q2. What is the annual growth rate of research?

- Q3. How many year-wise citations were received by the research documents?

- Q4. What is the correlation between countries, authors, and research documents?

- Q5. What are the most successful and highly cited journals?

- Q6. What are the most contributing institutions and authors to the field?

- Q7. Which countries have contributed the most to the field?

- Q8. Who are the most cited authors?

- Q9. What are the most cited papers?

- Q10. What is the relationship between authors and the quantity of articles published?

- Q11. Which words are often used and how are they used together?

- Q12.

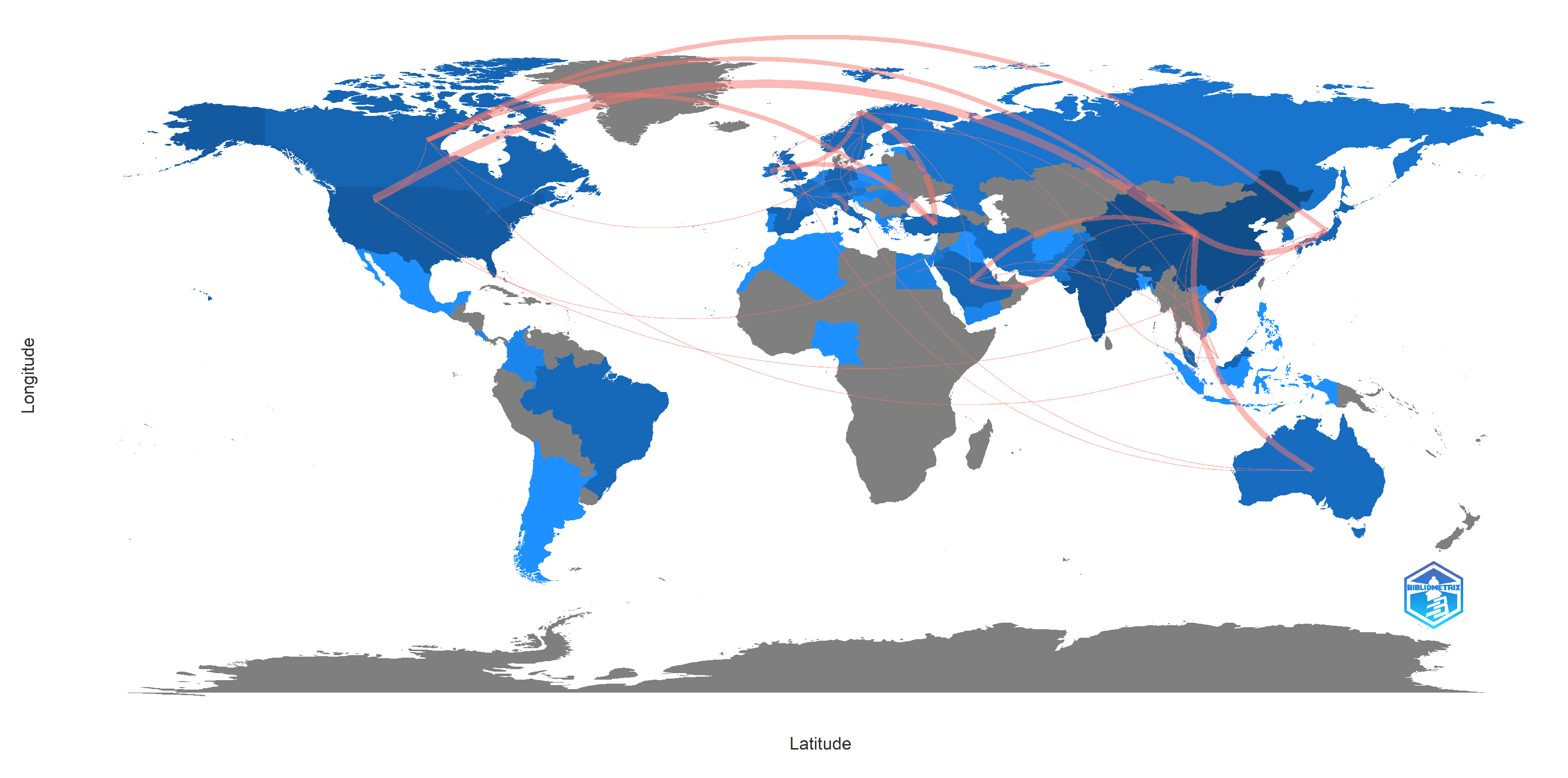

- Which countries usually collaborate together?

- Q13.

- Which organizations/institutions usually collaborate together?

- Q14.

- What are the scholarly communities in terms of authors and journals?

- Q15.

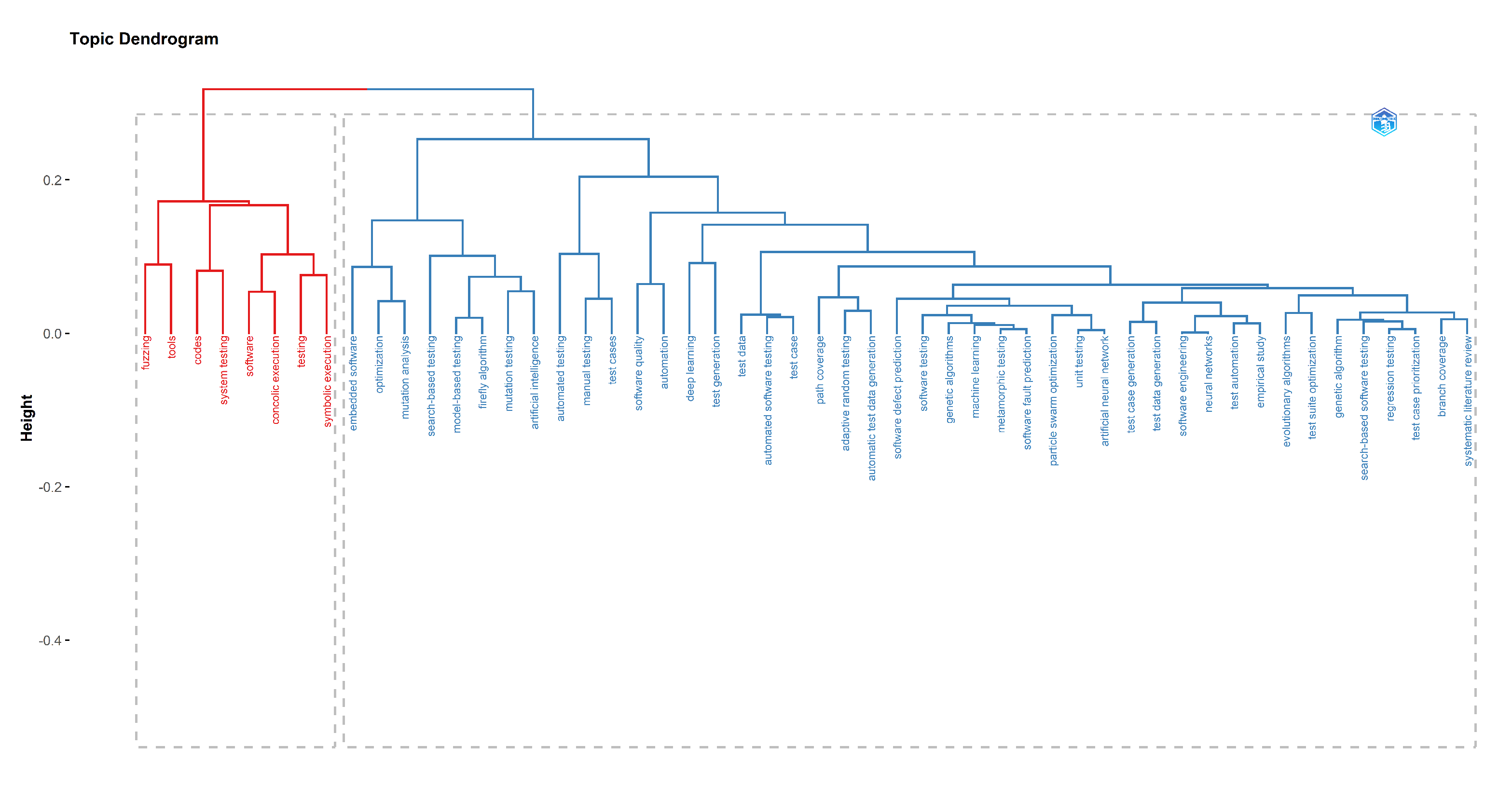

- What are the related research themes/topics?

2. Related Work

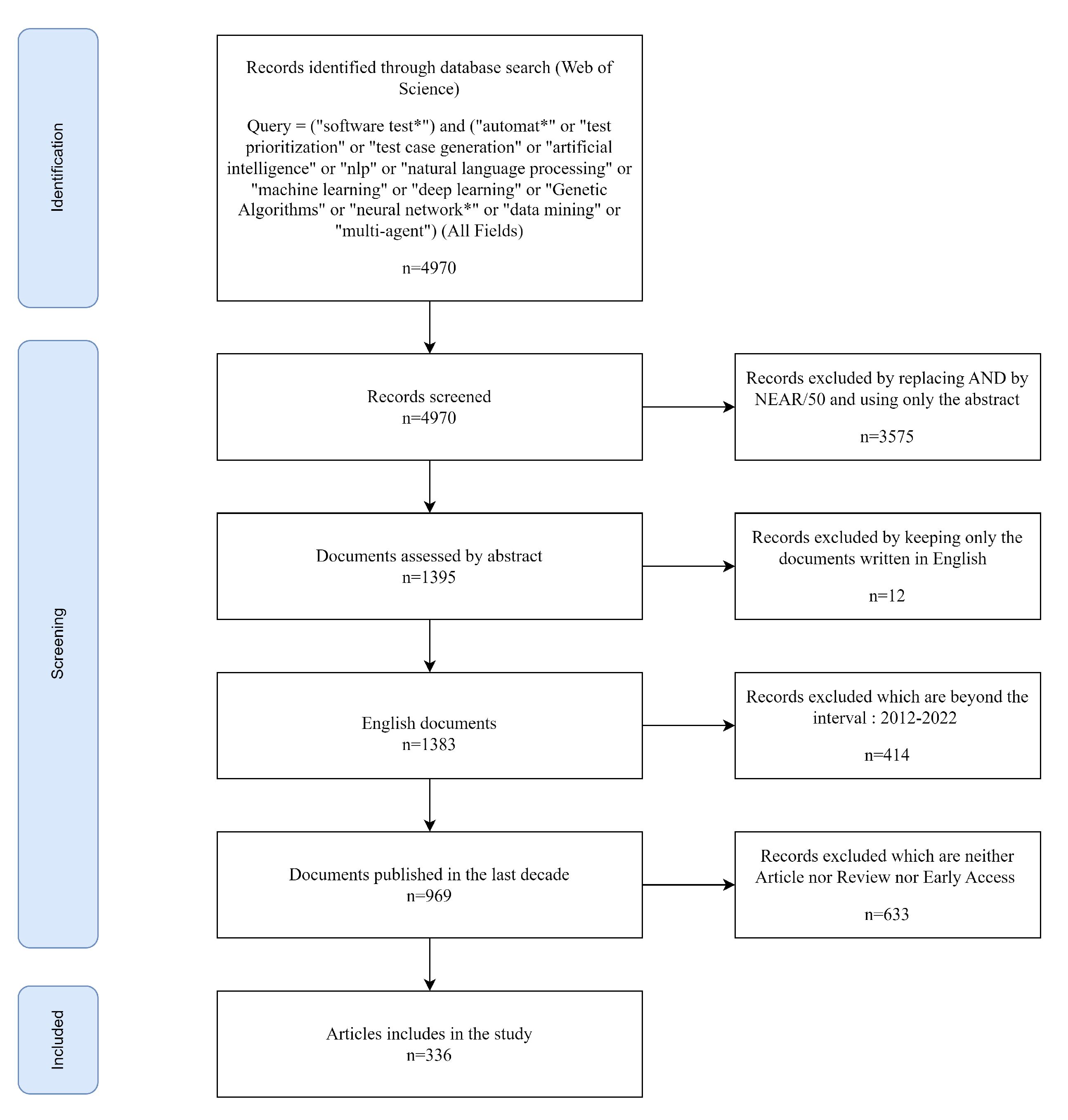

3. Data Collection

4. Research Method

- Main information: A table showing the fundamental traits of our dataset;

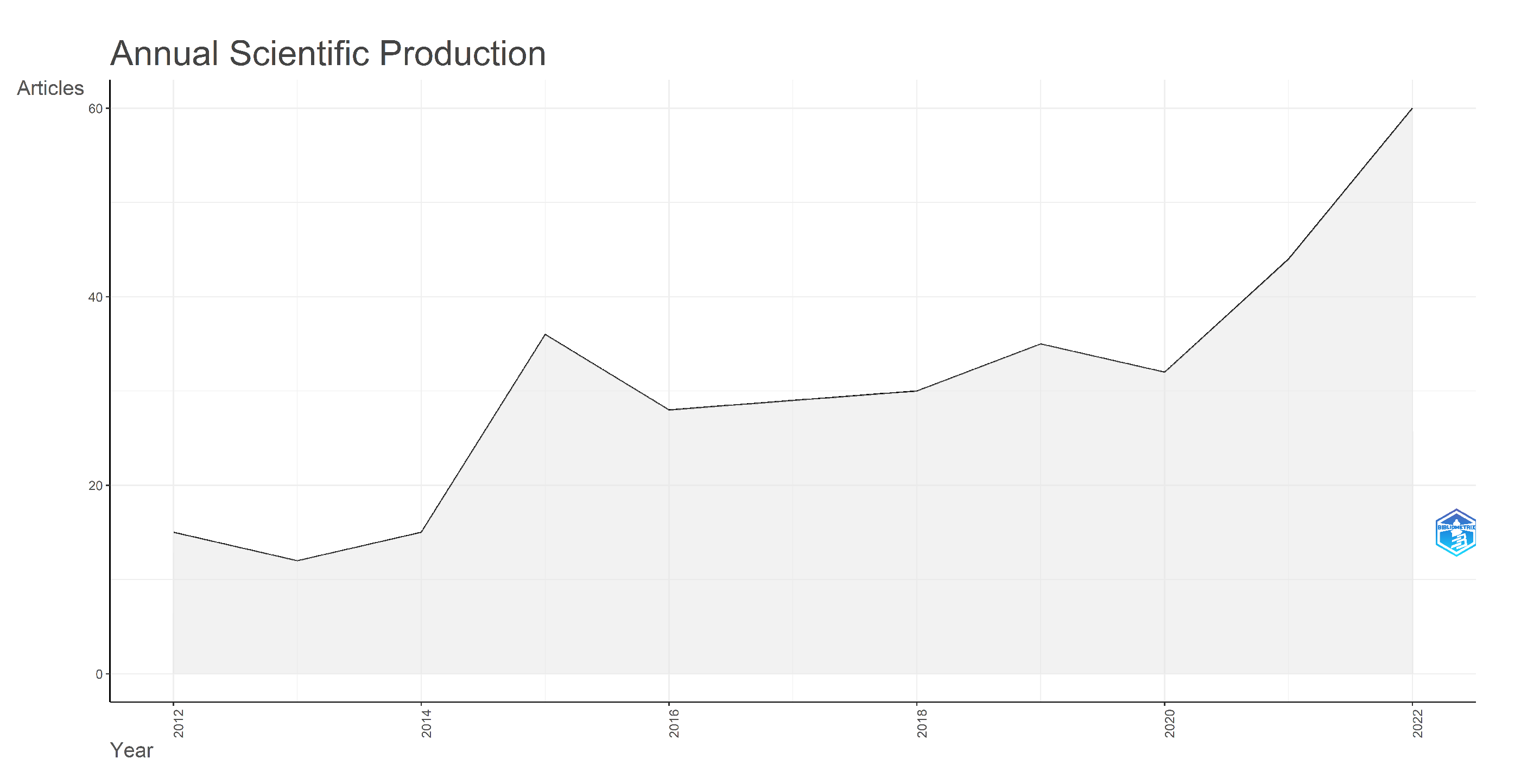

- Scientific production: A graph of the annual scientific production of the studied field;

- Citations per year: A graph showing the growth in citations by year;

- Relationship between countries, authors, and titles: A three-field plot showing the relationship between countries, authors, and article titles.

- The Most Successful and Highly Cited Journals: A graph showing the ten most active journals by number of articles published and the top-cited journals by number of citations received;

- The Most Important Organizations: A graph showing the top 10 most prolific institutions by number of documents produced;

- The Most Relevant Authors: A graph showing the top 10 authors by number of articles published;

- The Most Relevant Countries by Corresponding Authors: A graph showing the top 10 countries by number of articles produced, split into single-country and multiple-country publications;

- The Most Globally cited authors: A graph of the top 10 most cited authors using all citations in the Web of Science database;

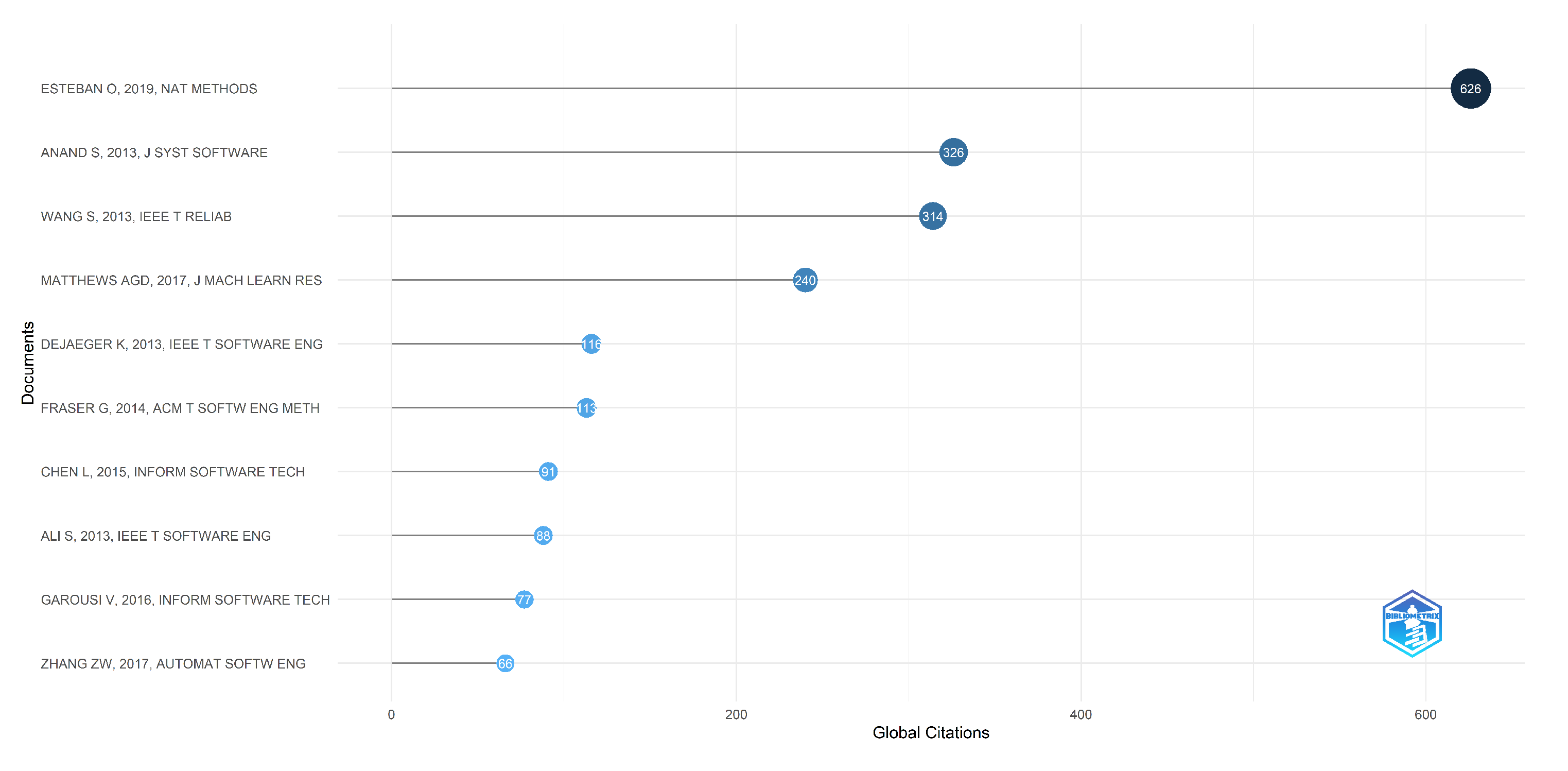

- The Most Globally cited documents: A graph of the top 10 most cited documents using all citations in the Web of Science database;

- Scientific Productivity Frequency Distribution (Lotka’s Law): A graph and table displaying the frequency analysis of research publications according to Lotka’s law;

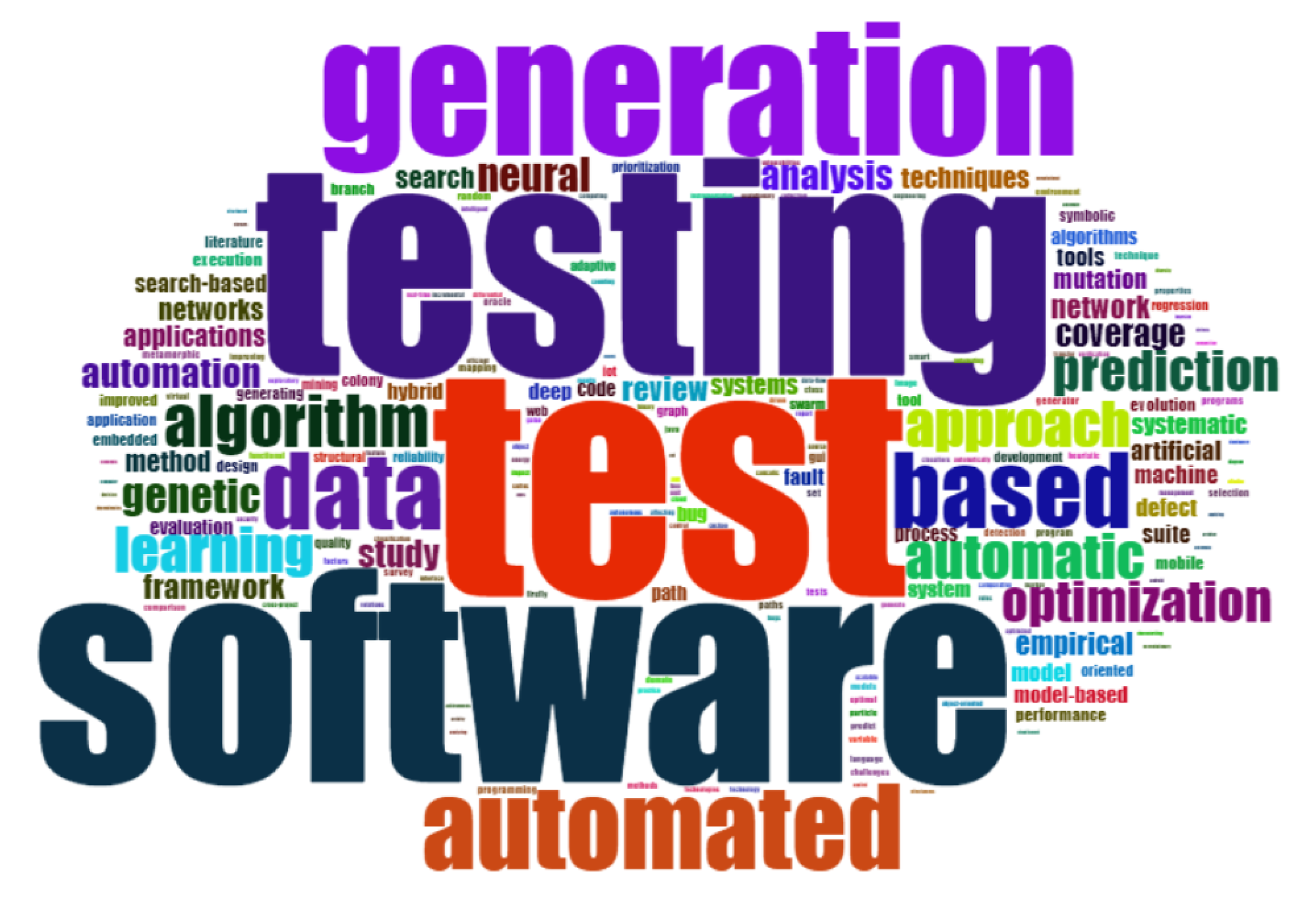

- The Most Frequent Words: A graph and a word cloud of the most used words in the documents’ abstracts, titles, and keywords;

- Co-occurrence network of keywords: A graph that depicts the relationships between keywords and divides them into smaller clusters;

- Collaboration World Map and Network: A graph and a world map showing the relationship between countries in terms of collaboration;

- Organisations Co-authorship: A graph showing a network of collaborations between organizations;

- Authors co-citation network: A graph of the connections between authors based on co-citations;

- Journals co-citations network: A graph of the connections between journals based on co-citations.
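
As a rough illustration of the keyword co-occurrence network listed above, the sketch below counts how often two keywords appear in the same document; these pair counts are the edge weights that a mapping tool would then cluster. The keyword lists are hypothetical and are not taken from the dataset, and the function name `cooccurrence_counts` is an illustrative choice.

```python
from collections import Counter
from itertools import combinations

# Hypothetical author-keyword lists, one per document (not from the studied dataset).
documents = [
    ["software testing", "genetic algorithm", "test data generation"],
    ["software testing", "machine learning", "defect prediction"],
    ["genetic algorithm", "test data generation"],
    ["software testing", "machine learning"],
]


def cooccurrence_counts(keyword_lists):
    """Count how many documents contain each unordered pair of keywords."""
    pairs = Counter()
    for keywords in keyword_lists:
        # Normalize and deduplicate so a pair is counted once per document.
        unique = sorted({k.strip().lower() for k in keywords})
        for a, b in combinations(unique, 2):
            pairs[(a, b)] += 1
    return pairs


edges = cooccurrence_counts(documents)
for (a, b), weight in edges.most_common(3):
    print(f"{a} -- {b}: {weight}")
```

Only the counting step is shown here; clustering the resulting graph is left to the mapping tool.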

- Thematic Map: A network graph built from the keywords and the links between them. Each theme on the map is labeled with the keyword that appears most frequently in it [73];

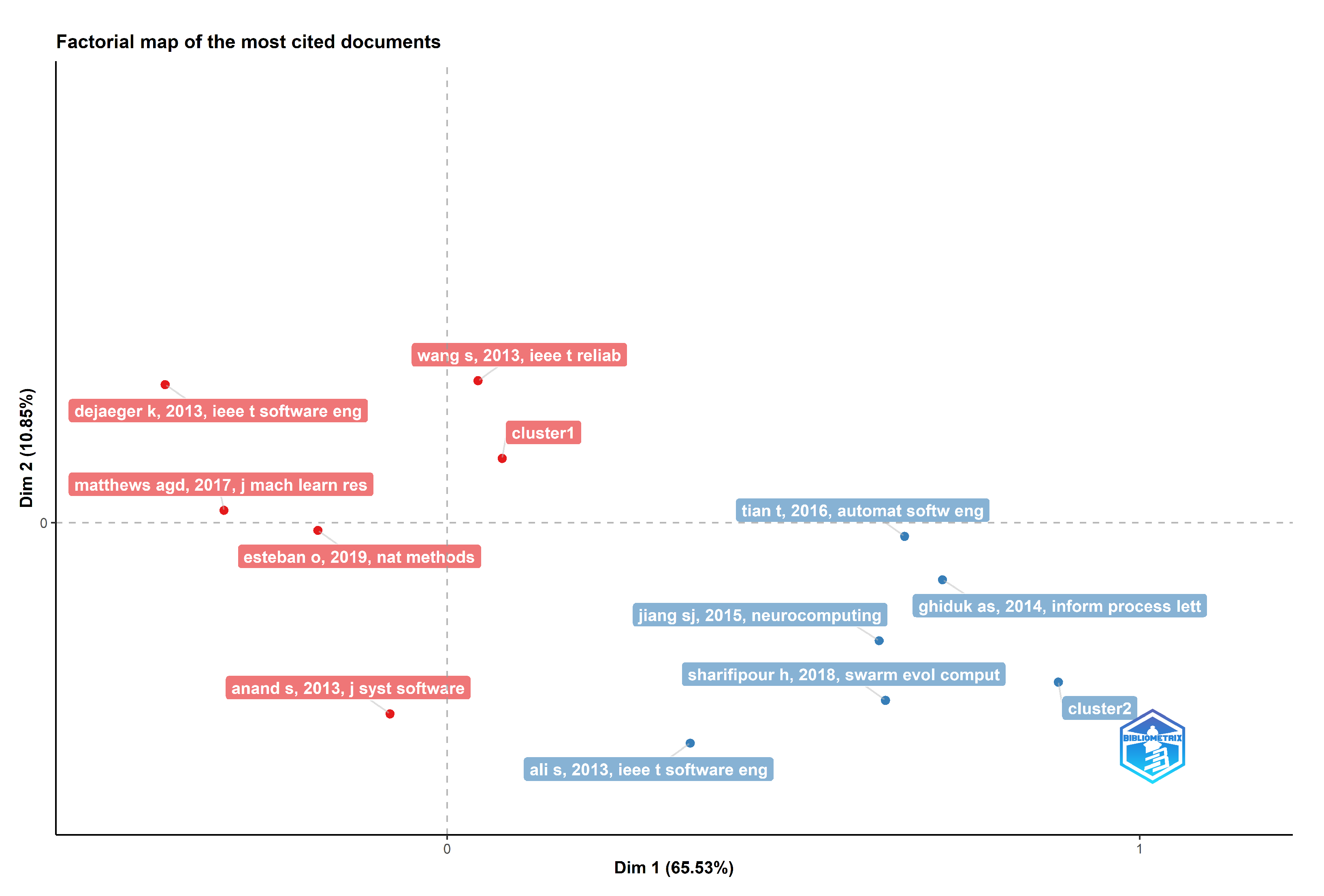

- Multiple Correspondence Analysis (MCA): MCA is a multivariate method for analyzing categorical data both visually and mathematically [74];

- Correspondence Analysis (CA): Correspondence analysis is a visual method for understanding the relationships between items in a frequency table. It is intended to assess associations between qualitative variables and extends principal component analysis to categorical data [75];

- Multidimensional Scaling: Like MCA and CA, multidimensional scaling is a dimensionality reduction approach; it is used to create a map of the network under study from normalized data [69].
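
The text does not say which variant of multidimensional scaling is used. As a reference point only, and assuming the classical (Torgerson) formulation, a $k$-dimensional map is obtained from an $n \times n$ dissimilarity matrix with entries $d_{ij}$ as follows, where $\mathbf{1}$ is the all-ones vector, $\Lambda_k$ holds the $k$ largest eigenvalues of $B$, and $V_k$ holds the corresponding eigenvectors:

$$
\begin{aligned}
D^{(2)} &= \left[d_{ij}^{2}\right], \qquad J = I_n - \tfrac{1}{n}\,\mathbf{1}\mathbf{1}^{\top},\\
B &= -\tfrac{1}{2}\, J\, D^{(2)} J,\\
B &= V \Lambda V^{\top}, \qquad X = V_k \Lambda_k^{1/2}.
\end{aligned}
$$

Each row of $X$ gives the coordinates of one item (for example, a keyword) on the map.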

5. Results

5.1. Overview

5.1.1. Main Information

5.1.2. Annual Scientific Production

5.1.3. Average Citation per Year

5.1.4. Relationship between Countries, Authors, and Titles

5.2. Science Mapping

5.2.1. The Most Productive and Top-Cited Sources

5.2.2. The Most Relevant Institutions

5.2.3. The Most Relevant Authors

5.2.4. The Most Relevant Countries by Corresponding Authors

5.2.5. The Most Globally Cited Authors

5.2.6. The Most Globally Cited Documents

5.2.7. The Most Locally Cited Documents

5.2.8. Frequency Distribution of Scientific Productivity (Lotka’s Law)

5.2.9. The Most Frequent Words

5.2.10. Keywords Co-Occurrence Network

5.2.11. Collaboration World Map and Network

5.2.12. Organisations Co-Authorship

- Cluster 1:
  - The Certus Centre for Software Validation and Verification
  - Korea Advanced Institute of Science & Technology
  - Kyungpook National University
  - Nanjing University of Aeronautics and Astronautics
  - Simula Research Laboratory
  - University of Luxembourg
  - University of Milano-Bicocca
  - University of Ottawa
  - University of Sheffield

- Cluster 2:
  - Blekinge Institute of Technology
  - Ericsson AB
  - Mälardalen University
  - Queen’s University Belfast
  - Technical University of Clausthal
  - University of Innsbruck

- Cluster 3:
  - McGill University
  - Microsoft Research
  - Stanford University
  - University of Cambridge
  - University of Edinburgh
  - University of Oxford

5.2.13. Authors Co-Citation Network

5.2.14. Journals Co-Citations Network

5.3. Network Analysis

5.3.1. Thematic Map

5.3.2. Multiple Correspondence Analysis

5.3.3. Correspondence Analysis

5.3.4. Multidimensional Scaling

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tahvili, S.; Hatvani, L. Uncertainty, Computational Techniques, and Decision Intelligence. In Artificial Intelligence Methods for Optimization of the Software Testing Process with Practical Examples and Exercises; Elsevier Science: Amsterdam, The Netherlands, 2022.

- Khari, M.; Mishra, D.; Acharya, B.; Crespo, R. Optimization of Automated Software Testing Using Meta-Heuristic Techniques; Springer International Publishing: Berlin/Heidelberg, Germany, 2022.

- Meziane, F.; Vadera, S. Artificial Intelligence Applications for Improved Software Engineering Development: New Prospects; IGI Global: Harrisburg, PA, USA, 2009.

- Qiao, L.; Li, X.; Umer, Q.; Guo, P. Deep learning based software defect prediction. Neurocomputing 2020, 385, 100–110.

- Ren, J.; Qin, K.; Ma, Y.; Luo, G. On software defect prediction using machine learning. J. Appl. Math. 2014, 2014, 785435.

- Battina, D.S. Artificial intelligence in software test automation: A systematic literature review. Int. J. Emerg. Technol. Innov. Res. 2019, 6, 2349–5162.

- Karhu, K.; Repo, T.; Taipale, O.; Smolander, K. Empirical observations on software testing automation. In Proceedings of the International Conference on Software Testing Verification and Validation, Denver, CO, USA, 1–4 April 2009; pp. 201–209.

- Sivakumar, N.; Vivekanandan, K. Comparing the Testing Approaches of Traditional, Object-Oriented and Agent-Oriented Software System. Int. J. Comput. Sci. Eng. Technol. (IJCSET) 2012, 3, 498–504.

- Briand, L.C.; Labiche, Y.; Bawar, Z. Using machine learning to refine black-box test specifications and test suites. In Proceedings of the 8th International Conference on Quality Software, Oxford, UK, 12–13 August 2008; pp. 135–144.

- Rafi, D.M.; Moses, K.R.K.; Petersen, K.; Mäntylä, M.V. Benefits and limitations of automated software testing: Systematic literature review and practitioner survey. In Proceedings of the 7th International Workshop on Automation of Software Test (AST), Zurich, Switzerland, 2–3 June 2012; pp. 36–42.

- Okutan, A.; Yıldız, O.T. Software defect prediction using Bayesian networks. Empir. Softw. Eng. 2014, 19, 154–181.

- Perini, A.; Susi, A.; Avesani, P. A machine learning approach to software requirements prioritization. IEEE Trans. Softw. Eng. 2012, 39, 445–461.

- Wahono, R.S. A systematic literature review of software defect prediction. J. Softw. Eng. 2015, 1, 1–16.

- Donthu, N.; Kumar, S.; Mukherjee, D.; Pandey, N.; Lim, W.M. How to conduct a bibliometric analysis: An overview and guidelines. J. Bus. Res. 2021, 133, 285–296.

- Trudova, A.; Dolezel, M.; Buchalcevova, A. Artificial Intelligence in Software Test Automation: A Systematic. Available online: https://pdfs.semanticscholar.org/9fca/3577e28c06ff27f16bfde7855f29b8d8236c.pdf (accessed on 27 March 2023).

- Lima, R.; da Cruz, A.M.R.; Ribeiro, J. Artificial intelligence applied to software testing: A literature review. In Proceedings of the 15th Iberian Conference on Information Systems and Technologies (CISTI), Seville, Spain, 24–27 June 2020; pp. 1–6.

- Serna, M.E.; Acevedo, M.E.; Serna, A.A. Integration of properties of virtual reality, artificial neural networks, and artificial intelligence in the automation of software tests: A review. J. Software Evol. Process. 2019, 31, e2159.

- Garousi, V.; Bauer, S.; Felderer, M. NLP-assisted software testing: A systematic mapping of the literature. Inf. Softw. Technol. 2020, 126, 106321.

- Garousi, V.; Felderer, M.; Kılıçaslan, F.N. A survey on software testability. Inf. Softw. Technol. 2019, 108, 35–64.

- Zardari, S.; Alam, S.; Al Salem, H.A.; Al Reshan, M.S.; Shaikh, A.; Malik, A.F.K.; Masood ur Rehman, M.; Mouratidis, H. A Comprehensive Bibliometric Assessment on Software Testing (2016–2021). Electronics 2022, 11, 1984.

- Ghiduk, A.S.; Girgis, M.R.; Shehata, M.H. Higher order mutation testing: A systematic literature review. Comput. Sci. Rev. 2017, 25, 29–48.

- Jamil, M.A.; Arif, M.; Abubakar, N.S.A.; Ahmad, A. Software testing techniques: A literature review. In Proceedings of the 6th International Conference on Information and Communication Technology for the Muslim World (ICT4M), Jakarta, Indonesia, 22–24 November 2016; pp. 177–182.

- Garousi, V.; Mäntylä, M.V. A systematic literature review of literature reviews in software testing. Inf. Softw. Technol. 2016, 80, 195–216.

- Garousi, V.; Mäntylä, M.V. When and what to automate in software testing? A multi-vocal literature review. Inf. Softw. Technol. 2016, 76, 92–117.

- Wiklund, K.; Eldh, S.; Sundmark, D.; Lundqvist, K. Impediments for software test automation: A systematic literature review. Softw. Testing Verif. Reliab. 2017, 27, e1639.

- Zhang, D. Machine learning in value-based software test data generation. In Proceedings of the 18th International Conference on Tools with Artificial Intelligence (ICTAI’06), Arlington, VA, USA, 13–15 November 2006; pp. 732–736.

- Esnaashari, M.; Damia, A.H. Automation of software test data generation using genetic algorithm and reinforcement learning. Expert Syst. Appl. 2021, 183, 115446.

- Zhu, X.-M.; Yang, X.-F. Software test data generation automatically based on improved adaptive particle swarm optimizer. In Proceedings of the International Conference on Computational and Information Sciences, Chengdu, China, 17–19 December 2010; pp. 1300–1303.

- Koleejan, C.; Xue, B.; Zhang, M. Code coverage optimisation in genetic algorithms and particle swarm optimisation for automatic software test data generation. In Proceedings of the Congress on Evolutionary Computation (CEC), Sendai, Japan, 25–28 May 2015; pp. 1204–1211.

- Huang, M.; Zhang, C.; Liang, X. Software test cases generation based on improved particle swarm optimization. In Proceedings of the 2nd International Conference on Information Technology and Electronic Commerce, Dalian, China, 20–21 December 2014; pp. 52–55.

- Liu, C. Research on Software Test Data Generation based on Particle Swarm Optimization Algorithm. In Proceedings of the 5th International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 3–5 June 2021; pp. 1375–1378.

- Khari, M.; Kumar, P. A novel approach for software test data generation using cuckoo algorithm. In Proceedings of the 2nd International Conference on Information and Communication Technology for Competitive Strategies, Udaipur, India, 4–5 March 2016; pp. 1–6.

- Alshraideh, M.; Bottaci, L. Search-based software test data generation for string data using program-specific search operators. Softw. Testing Verif. Reliab. 2006, 16, 175–203.

- Mairhofer, S.; Feldt, R.; Torkar, R. Search-based software testing and test data generation for a dynamic programming language. In Proceedings of the 13th Annual Conference on Genetic and Evolutionary Computation, Dublin, Ireland, 12–16 June 2011; pp. 1859–1866.

- McMinn, P. Search-based software test data generation: A survey. Softw. Testing Verif. Reliab. 2004, 14, 105–156.

- De Lima, L.F.; Peres, L.M.; Grégio, A.R.A.; Silva, F. A Systematic Literature Mapping of Artificial Intelligence Planning in Software Testing. In Proceedings of the ICSOFT, Paris, France, 7–9 July 2020; pp. 152–159.

- Dahiya, S.S.; Chhabra, J.K.; Kumar, S. Application of artificial bee colony algorithm to software testing. In Proceedings of the 21st Australian Software Engineering Conference, Auckland, New Zealand, 6–9 April 2010; pp. 149–154.

- Mala, D.J.; Mohan, V.; Kamalapriya, M. Automated software test optimisation framework—An artificial bee colony optimisation-based approach. IET Softw. 2010, 4, 334–348.

- Karnavel, K.; Santhoshkumar, J. Automated software testing for application maintenance by using bee colony optimization algorithms (BCO). In Proceedings of the International Conference on Information Communication and Embedded Systems (ICICES), Chennai, India, 21–22 February 2013; pp. 327–330.

- Lakshminarayana, P.; SureshKumar, T. Automatic generation and optimization of test case using hybrid cuckoo search and bee colony algorithm. J. Intell. Syst. 2021, 30, 59–72.

- Lijuan, W.; Yue, Z.; Hongfeng, H. Genetic algorithms and its application in software test data generation. In Proceedings of the International Conference on Computer Science and Electronics Engineering, Hangzhou, China, 23–25 March 2012; Volume 2, pp. 617–620.

- Berndt, D.; Fisher, J.; Johnson, L.; Pinglikar, J.; Watkins, A. Breeding software test cases with genetic algorithms. In Proceedings of the 36th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 6–9 January 2003; p. 10.

- Yang, S.; Man, T.; Xu, J.; Zeng, F.; Li, K. RGA: A lightweight and effective regeneration genetic algorithm for coverage-oriented software test data generation. Inf. Softw. Technol. 2016, 76, 19–30.

- Khan, R.; Amjad, M. Automatic test case generation for unit software testing using genetic algorithm and mutation analysis. In Proceedings of the UP Section Conference on Electrical Computer and Electronics (UPCON), Allahabad, India, 4–6 December 2015; pp. 1–5.

- Dong, Y.; Peng, J. Automatic generation of software test cases based on improved genetic algorithm. In Proceedings of the International Conference on Multimedia Technology, Hangzhou, China, 26–28 July 2011; pp. 227–230.

- Bouchachia, A. An immune genetic algorithm for software test data generation. In Proceedings of the 7th International Conference on Hybrid Intelligent Systems (HIS 2007), Kaiserslautern, Germany, 17–19 September 2007; pp. 84–89.

- Peng, Y.P.; Zeng, B. Software Test Data Generation for Multiple Paths Based on Genetic Algorithms. Appl. Mech. Mater. 2013, 263, 1969–1973.

- Cohen, K.B.; Hunter, L.E.; Palmer, M. Assessment of software testing and quality assurance in natural language processing applications and a linguistically inspired approach to improving it. In Trustworthy Eternal Systems via Evolving Software, Data and Knowledge, Proceedings of the 2nd International Workshop (EternalS 2012), Montpellier, France, 28 August 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 77–90.

- Ahsan, I.; Butt, W.H.; Ahmed, M.A.; Anwar, M.W. A comprehensive investigation of natural language processing techniques and tools to generate automated test cases. In Proceedings of the 2nd International Conference on Internet of things, Data and Cloud Computing, Cambridge, UK, 22–23 March 2017; pp. 1–10.

- Ansari, A.; Shagufta, M.B.; Fatima, A.S.; Tehreem, S. Constructing test cases using natural language processing. In Proceedings of the 3rd International Conference on Advances in Electrical, Electronics, Information, Communication and Bio-Informatics (AEEICB), Chennai, India, 27–28 February 2017; pp. 95–99.

- Wang, H.; Bai, L.; Jiezhang, M.; Zhang, J.; Li, Q. Software testing data analysis based on data mining. In Proceedings of the 4th International Conference on Information Science and Control Engineering (ICISCE), Changsha, China, 21–23 July 2017; pp. 682–687.

- Last, M.; Friedman, M.; Kandel, A. Using data mining for automated software testing. Int. J. Softw. Eng. Knowl. Eng. 2004, 14, 369–393.

- Yu, S.; Ai, J.; Zhang, Y. Software test data generation based on multi-agent. In Advances in Software Engineering, Proceedings of the International Conference on Advanced Software Engineering and Its Applications (ASEA 2009), Jeju Island, Republic of Korea, 10–12 December 2009; pp. 188–195.

- Dhavachelvan, P.; Uma, G. Complexity measures for software systems: Towards multi-agent based software testing. In Proceedings of the International Conference on Intelligent Sensing and Information Processing, Chennai, India, 4–7 January 2005; pp. 359–364.

- Tang, J. Towards automation in software test life cycle based on multi-agent. In Proceedings of the International Conference on Computational Intelligence and Software Engineering, Wuhan, China, 10–12 December 2010; pp. 1–4.

- Alyahya, S. Collaborative Crowdsourced Software Testing. Electronics 2022, 11, 3340.

- Górski, T. The k + 1 Symmetric Test Pattern for Smart Contracts. Symmetry 2022, 14, 1686.

- Bijlsma, A.; Passier, H.J.; Pootjes, H.; Stuurman, S. Template Method test pattern. Inf. Process. Lett. 2018, 139, 8–12.

- López, J.; Kushik, N.; Yevtushenko, N. Source code optimization using equivalent mutants. Inf. Softw. Technol. 2018, 103, 138–141.

- Segura, S.; Troya, J.; Durán, A.; Ruiz-Cortés, A. Performance metamorphic testing: A proof of concept. Inf. Softw. Technol. 2018, 98, 1–4.

- Harzing, A.W.; Alakangas, S. Google Scholar, Scopus and the Web of Science: A longitudinal and cross-disciplinary comparison. Scientometrics 2016, 106, 787–804.

- Norris, M.; Oppenheim, C. Comparing alternatives to the Web of Science for coverage of the social sciences’ literature. J. Inf. 2007, 1, 161–169.

- Chadegani, A.A.; Salehi, H.; Yunus, M.M.; Farhadi, H.; Fooladi, M.; Farhadi, M.; Ebrahim, N.A. A comparison between two main academic literature collections: Web of Science and Scopus databases. arXiv 2013, arXiv:1305.0377.

- Feng, X.W.; Hadizadeh, M.; Cheong, J.P.G. Global Trends in Physical-Activity Research of Autism: Bibliometric Analysis Based on the Web of Science Database (1980–2021). Int. J. Environ. Res. Public Health 2022, 19, 7278.

- Skute, I. Opening the black box of academic entrepreneurship: A bibliometric analysis. Scientometrics 2019, 120, 237–265.

- Zhao, D.; Strotmann, A. Analysis and visualization of citation networks. Synth. Lect. Inf. Concepts Retr. Serv. 2015, 7, 1–207.

- Ma, X.; Zhang, L.; Wang, J.; Luo, Y. Knowledge domain and emerging trends on echinococcosis research: A scientometric analysis. Int. J. Environ. Res. Public Health 2019, 16, 842.

- Lee, Y.C.; Chen, C.; Tsai, X.T. Visualizing the knowledge domain of nanoparticle drug delivery technologies: A scientometric review. Appl. Sci. 2016, 6, 11.

- Aria, M.; Cuccurullo, C. bibliometrix: An R-tool for comprehensive science mapping analysis. J. Inf. 2017, 11, 959–975.

- Van Eck, N.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538.

- Chen, C. Science mapping: A systematic review of the literature. J. Data Inf. Sci. 2017, 2, 1–40.

- Negahban, M.B.; Zarifsanaiey, N. Network analysis and scientific mapping of the e-learning literature from 1995 to 2018. Knowl. Manag. E-Learn. Int. J. 2020, 12, 268–279.

- Cobo, M.J.; López-Herrera, A.G.; Herrera-Viedma, E.; Herrera, F. An approach for detecting, quantifying, and visualizing the evolution of a research field: A practical application to the Fuzzy Sets Theory field. J. Inf. 2011, 5, 146–166.

- Matute, J.; Linsen, L. Evaluating Data-type Heterogeneity in Interactive Visual Analyses with Parallel Axes. In Proceedings of the Computer Graphics Forum, Reims, France, 25–29 April 2022.

- Ejaz, H.; Zeeshan, H.M.; Ahmad, F.; Bukhari, S.N.A.; Anwar, N.; Alanazi, A.; Sadiq, A.; Junaid, K.; Atif, M.; Abosalif, K.O.A.; et al. Bibliometric Analysis of Publications on the Omicron Variant from 2020 to 2022 in the Scopus Database Using R and VOSviewer. Int. J. Environ. Res. Public Health 2022, 19, 12407.

- Jaffe, K.; Ter Horst, E.; Gunn, L.H.; Zambrano, J.D.; Molina, G. A network analysis of research productivity by country, discipline, and wealth. PLoS ONE 2020, 15, e0232458.

| Description | Results |
|---|---|
| Key Information | |
| Timespan | 2012–2022 |
| Sources | 162 |
| Documents | 336 |
| Average number of citations per document | 12.88 |
| References | 11,280 |
| Annual growth rate | 14.87% |
| Contents of Documents | |
| Keywords Used by Authors (DE) | 1075 |
| Keywords Plus (ID) | 280 |
| Authors | |
| Authors of Single-Authored Documents | 20 |
| Authors of Multi-Authored Documents | 1033 |
| Collaboration among Authors | |
| Single-authored documents | 20 |
| Co-authors per document | 3.64 |
| International Co-authorships | 26.79% |
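
The annual growth rate reported above can be read as a compound annual growth rate over the 2012–2022 timespan. The sketch below assumes that definition; the first-year and last-year document counts are hypothetical, since the yearly counts are not listed in this table. Under this definition, any fourfold increase over the ten yearly intervals corresponds to the reported 14.87%.

```python
def annual_growth_rate(first_count: float, last_count: float,
                       first_year: int, last_year: int) -> float:
    """Compound annual growth rate, in percent, between two yearly counts."""
    intervals = last_year - first_year
    if intervals <= 0 or first_count <= 0:
        raise ValueError("need a positive starting count and at least one interval")
    return ((last_count / first_count) ** (1 / intervals) - 1) * 100


# Hypothetical counts: 15 documents in 2012 and 60 in 2022 (a fourfold increase).
rate = annual_growth_rate(15, 60, 2012, 2022)
print(f"{rate:.2f}%")  # prints 14.87%
```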

| Country | Articles | Frequency | Single-Country Publication | Multiple-Country Publication | Multiple-Country Publication Ratio |
|---|---|---|---|---|---|
| China | 67 | 0.199 | 55 | 12 | 0.179 |
| India | 48 | 0.143 | 47 | 1 | 0.021 |
| USA | 27 | 0.080 | 19 | 8 | 0.296 |
| Brazil | 13 | 0.039 | 11 | 2 | 0.154 |
| Malaysia | 13 | 0.039 | 7 | 6 | 0.462 |
| Germany | 10 | 0.030 | 7 | 3 | 0.300 |
| Korea | 9 | 0.027 | 7 | 2 | 0.222 |
| Spain | 9 | 0.027 | 5 | 4 | 0.444 |
| United Kingdom | 9 | 0.027 | 5 | 4 | 0.444 |
| Turkey | 8 | 0.024 | 4 | 4 | 0.500 |
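
The derived columns in this table can be recomputed from the raw counts. The sketch below assumes that Frequency is the country's share of the 336 documents in the dataset and that the Multiple-Country Publication Ratio is the multiple-country count divided by the country's articles; both readings are inferred from the numbers rather than stated in the table.

```python
TOTAL_DOCUMENTS = 336  # "Documents" in the main-information table

# (country, articles, single-country publications, multiple-country publications)
rows = [
    ("China", 67, 55, 12),
    ("India", 48, 47, 1),
    ("USA", 27, 19, 8),
    ("Brazil", 13, 11, 2),
    ("Malaysia", 13, 7, 6),
    ("Germany", 10, 7, 3),
    ("Korea", 9, 7, 2),
    ("Spain", 9, 5, 4),
    ("United Kingdom", 9, 5, 4),
    ("Turkey", 8, 4, 4),
]


def country_metrics(articles, scp, mcp, total=TOTAL_DOCUMENTS):
    """Return (frequency, multiple-country ratio), rounded to three decimals."""
    if scp + mcp != articles:
        raise ValueError("single- and multiple-country counts must sum to articles")
    return round(articles / total, 3), round(mcp / articles, 3)


for country, articles, scp, mcp in rows:
    frequency, mcp_ratio = country_metrics(articles, scp, mcp)
    print(f"{country:15s} frequency={frequency:.3f} mcp_ratio={mcp_ratio:.3f}")
```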

| Author, Year, Journal | Article Title | Total Citations | Total Citations per Year |
|---|---|---|---|
| CHEN TY, 2013, JOURNAL OF SYSTEMS AND SOFTWARE | AN ORCHESTRATED SURVEY OF METHODOLOGIES FOR AUTOMATED SOFTWARE TEST CASE GENERATION | 326 | 29.636 |
| ARCURI A, 2014, ACM TRANSACTIONS ON SOFTWARE ENGINEERING AND METHODOLOGY | A LARGE-SCALE EVALUATION OF AUTOMATED UNIT TEST GENERATION USING EVOSUITE | 113 | 11.3 |
| ARCURI A, 2013, IEEE TRANSACTIONS ON SOFTWARE ENGINEERING | GENERATING TEST DATA FROM OCL CONSTRAINTS WITH SEARCH TECHNIQUES | 88 | 8 |
| ALI S, 2013, IEEE TRANSACTIONS ON SOFTWARE ENGINEERING | GENERATING TEST DATA FROM OCL CONSTRAINTS WITH SEARCH TECHNIQUES | 88 | 8 |
| GAROUSI V, 2016, INFORMATION AND SOFTWARE TECHNOLOGY | WHEN AND WHAT TO AUTOMATE IN SOFTWARE TESTING? A MULTI-VOCAL LITERATURE REVIEW | 77 | 9.625 |
| ARCURI A, 2015, EMPIRICAL SOFTWARE ENGINEERING | ACHIEVING SCALABLE MUTATION-BASED GENERATION OF WHOLE TEST SUITES | 64 | 7.111 |
| KHARI M, 2019, SOFT COMPUTING | AN EXTENSIVE EVALUATION OF SEARCH-BASED SOFTWARE TESTING: A REVIEW | 24 | 4.8 |
| KHARI M, 2013, INTERNATIONAL JOURNAL OF BIO-INSPIRED COMPUTATION | HEURISTIC SEARCH-BASED APPROACH FOR AUTOMATED TEST DATA GENERATION: A SURVEY | 23 | 2.091 |
| KHARI M, 2018, SOFT COMPUTING | OPTIMIZED TEST SUITES FOR AUTOMATED TESTING USING DIFFERENT OPTIMIZATION TECHNIQUES | 22 | 3.667 |
| GONG DW, 2016, AUTOMATED SOFTWARE ENGINEERING | TEST DATA GENERATION FOR PATH COVERAGE OF MESSAGE-PASSING PARALLEL PROGRAMS BASED ON CO-EVOLUTIONARY GENETIC ALGORITHMS | 18 | 2.25 |
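
The last column appears to be total citations divided by the number of years since publication, counted inclusively up to 2023. That formula and the reference year are assumptions inferred from the table, not stated in it; the sketch below checks them against the distinct rows.

```python
REFERENCE_YEAR = 2023  # assumption: the year in which citations were counted


def citations_per_year(total_citations, publication_year, reference_year=REFERENCE_YEAR):
    """Total citations divided by years in print, counting both end years."""
    years_in_print = reference_year - publication_year + 1
    return round(total_citations / years_in_print, 3)


# (total citations, publication year, value reported in the table)
table_rows = [
    (326, 2013, 29.636),
    (113, 2014, 11.3),
    (88, 2013, 8),
    (77, 2016, 9.625),
    (64, 2015, 7.111),
    (24, 2019, 4.8),
    (23, 2013, 2.091),
    (22, 2018, 3.667),
    (18, 2016, 2.25),
]

for total, year, reported in table_rows:
    computed = citations_per_year(total, year)
    print(f"{year}: computed={computed} reported={reported}")
```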

| Documents Written | Number of Authors | Proportion of Authors |
|---|---|---|
| 1 | 927 | 0.880 |
| 2 | 96 | 0.091 |
| 3 | 23 | 0.022 |
| 4 | 3 | 0.003 |
| 5 | 2 | 0.002 |
| 6 | 2 | 0.002 |
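
Lotka's law models the number of authors who wrote n documents as roughly C / n^β. The sketch below recomputes the proportions in this table and estimates β with an ordinary least-squares fit on log-log data. This simple estimator is an illustrative choice and is not necessarily the fitting procedure used to produce the reported results.

```python
import math

# Number of authors by documents written (from the table above).
authors_by_output = {1: 927, 2: 96, 3: 23, 4: 3, 5: 2, 6: 2}
total_authors = sum(authors_by_output.values())

# Observed proportions, rounded to three decimals as in the table.
proportions = {n: round(count / total_authors, 3)
               for n, count in authors_by_output.items()}


def fit_lotka_exponent(counts):
    """Estimate beta and C in f(n) = C / n**beta by least squares on log-log data."""
    xs = [math.log(n) for n in counts]
    ys = [math.log(c) for c in counts.values()]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return -slope, math.exp(intercept)


beta, c = fit_lotka_exponent(authors_by_output)
print(f"total authors: {total_authors}")
print(f"proportions: {proportions}")
print(f"estimated exponent beta: {beta:.2f}")
```

The total of 1053 authors matches the sum of single-authored (20) and multi-authored (1033) document authors in the main-information table.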

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Boukhlif, M.; Hanine, M.; Kharmoum, N. A Decade of Intelligent Software Testing Research: A Bibliometric Analysis. Electronics 2023, 12, 2109. https://doi.org/10.3390/electronics12092109